A replacement for ChatGPT that can be run on a laptop is here, with a full technical report attached

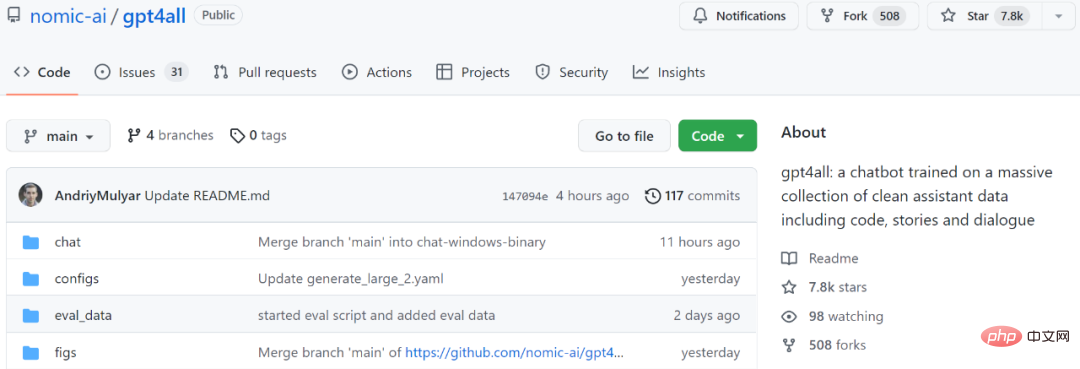

GPT4All is a chatbot trained on a large corpus of clean assistant data, including code, stories, and conversations. The data includes roughly 800k GPT-3.5-Turbo generations. The model is built on LLaMA and runs on M1 Mac, Windows, and other environments. As its name suggests, the time may have come when everyone can have a personal GPT.

Since OpenAI released ChatGPT, chatbots have become increasingly popular in recent months.

ChatGPT is powerful, but OpenAI is very unlikely to open source it. Many groups are therefore working on open alternatives, such as LLaMA, which Meta released some time ago. LLaMA is a family of models ranging from 7 billion to 65 billion parameters. Among them, the 13-billion-parameter LLaMA model can outperform the 175-billion-parameter GPT-3 "on most benchmarks".

The release of LLaMA has benefited many researchers. For example, Stanford applied instruction tuning to LLaMA 7B and trained a new 7-billion-parameter model called Alpaca. The results suggest that Alpaca, a lightweight model with only 7B parameters, performs comparably to very large language models such as GPT-3.5.

Another example is the model introduced here: GPT4All, a new 7B language model that is also based on LLaMA. Two days after the project launched, it had already passed 7.8k GitHub stars.

Project address: https://github.com/nomic-ai/gpt4all

Put simply, GPT4All is trained on roughly 800k GPT-3.5-Turbo generations, covering word problems, story descriptions, multi-turn dialogue, and code.

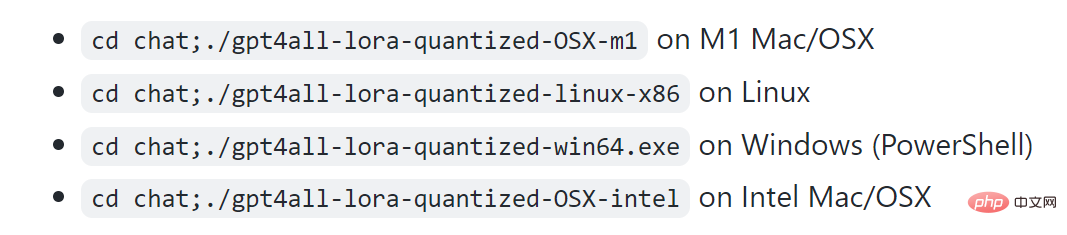

According to the project page, the model runs on M1 Mac, Windows, and other environments.

Let’s look at the results first. As shown in the figure below, users can chat with GPT4All without any barriers, for example by asking the model: "Can I run a large language model on a laptop?" GPT4All answers: "Yes, you can use a laptop to train and test neural networks or other machine learning models for natural languages (such as English or Chinese). Importantly, you need enough available memory (RAM) to accommodate the size of these models..."

Next, if you don't know how much memory is needed, you can go on to ask GPT4All, and it will give an answer. Judging from the results, GPT4All handles multi-turn dialogue quite well.

Real-time sampling on M1 Mac

Some people have called this work a "game changer": with GPT4All, you can now run a GPT locally on a MacBook.

Like GPT-4, GPT4All also comes with a "technical report".

Technical report address: https://s3.amazonaws.com/static.nomic.ai/gpt4all/2023_GPT4All_Technical_Report.pdf

This preliminary technical report briefly describes how GPT4All was built. The researchers released the collected data, the data wrangling procedures, the training code, and the final model weights to promote open research and reproducibility. They also released a 4-bit quantized version of the model, which means almost anyone can run it on a CPU.
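To see why a 4-bit version matters for laptops, the sketch below works through the weight-memory arithmetic and shows a toy symmetric 4-bit quantizer. It is only an illustration under stated assumptions: "7B" is taken as exactly 7e9 parameters, and the round-to-nearest, one-scale-per-block scheme is a generic one, not the quantization format actually used for GPT4All, which this article does not describe.

```python
def weight_memory_gb(n_params: int, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantize_block(block: list[float]) -> tuple[float, list[int]]:
    """Toy symmetric 4-bit quantization: integers in [-7, 7] plus one shared scale."""
    max_abs = max(abs(x) for x in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    return scale, [round(x / scale) for x in block]


def dequantize_block(scale: float, quantized: list[int]) -> list[float]:
    """Recover approximate weights from the integers and the shared scale."""
    return [scale * v for v in quantized]


N_PARAMS = 7_000_000_000  # assumption: "7B" taken as exactly 7e9 parameters

print(weight_memory_gb(N_PARAMS, 16))  # 14.0
print(weight_memory_gb(N_PARAMS, 4))   # 3.5

scale, q = quantize_block([0.7, -0.35, 0.0, 0.1])
print(q, dequantize_block(scale, q))
```

Under these assumptions the weights shrink from about 14 GB at 16 bits to about 3.5 GB at 4 bits, which fits in the RAM of many ordinary laptops. The price is a rounding error of at most half a scale step per weight.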

Next, let’s take a look at what’s written in this report.

GPT4All Technical Report

Data collection and curation

Between March 20 and March 26, 2023, the researchers used the GPT-3.5-Turbo OpenAI API to collect approximately 1 million prompt-response pairs.

First, the researchers collected different question/prompt samples by utilizing three publicly available data sets:

- The unified chip2 subset of LAION OIG

- A random subsample of Stack Overflow coding questions

- A subsample of Bigscience/P3 for instruction tuning
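The collection step can be pictured as a simple loop over prompts drawn from each source. The sketch below is hypothetical throughout: `ask_model` stands in for whatever function sends a prompt to GPT-3.5-Turbo, the source labels and record fields are made up for illustration, and `fake_model` exists only so the example runs offline.

```python
from typing import Callable, Iterable


def collect_pairs(
    prompts_by_source: dict[str, Iterable[str]],
    ask_model: Callable[[str], str],
) -> list[dict[str, str]]:
    """Send every prompt to the model and record which source it came from."""
    pairs = []
    for source, prompts in prompts_by_source.items():
        for prompt in prompts:
            pairs.append(
                {"source": source, "prompt": prompt, "response": ask_model(prompt)}
            )
    return pairs


def fake_model(prompt: str) -> str:
    """Offline stand-in for a real API call (hypothetical)."""
    return f"(response to: {prompt})"


sample_prompts = {
    "chip2": ["Explain what a neural network is."],
    "stackoverflow": ["How do I reverse a list in Python?"],
    "p3": ["Is this review positive or negative? 'Great product.'"],
}

for pair in collect_pairs(sample_prompts, fake_model):
    print(pair["source"], "|", pair["prompt"], "|", pair["response"])
```

Keeping the source label on every record makes it possible to drop an entire subset later without re-running the collection.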

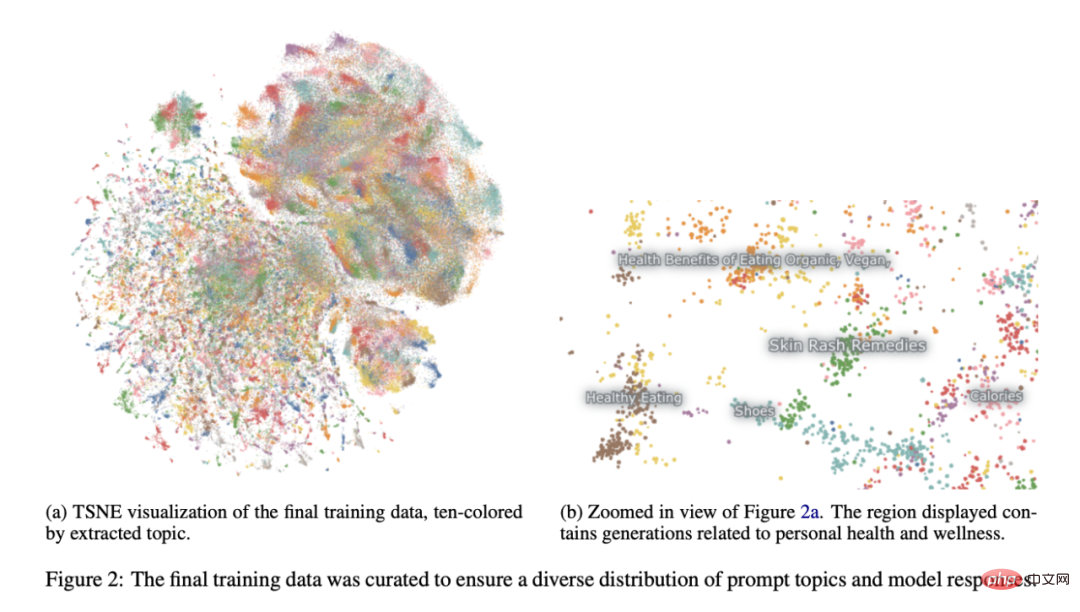

Following the Stanford Alpaca project (Taori et al., 2023), the researchers paid close attention to data preparation and curation. After collecting the initial dataset of prompt-generation pairs, they loaded the data into Atlas to organize and clean it, removing any samples in which GPT-3.5-Turbo failed to respond to the prompt or produced malformed output. This reduced the total to 806,199 high-quality prompt-generation pairs. Next, they removed the entire Bigscience/P3 subset from the final training dataset because its output diversity was very low: P3 contains many homogeneous prompts that elicit short, homogeneous responses from GPT-3.5-Turbo.

This filtering left a final subset of 437,605 prompt-generation pairs, as shown in Figure 2.
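The two filtering steps described above can be sketched as follows. This is an illustration, not the authors' pipeline: they did the work in Atlas, and the `Pair` fields, the source labels, and the `is_well_formed` check (which only rejects empty responses) are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Pair:
    source: str    # hypothetical label: "chip2", "stackoverflow", or "p3"
    prompt: str
    response: str


def is_well_formed(pair: Pair) -> bool:
    """Placeholder check: treat empty or whitespace-only responses as failures."""
    return bool(pair.response.strip())


def clean(pairs: list[Pair]) -> list[Pair]:
    # Step 1: drop pairs where the model gave no usable response.
    kept = [p for p in pairs if is_well_formed(p)]
    # Step 2: drop the whole P3 subset because of its low output diversity.
    return [p for p in kept if p.source != "p3"]


# Counts reported in the article.
after_step_1 = 806_199
after_step_2 = 437_605
print(after_step_1 - after_step_2)  # 368594 pairs removed in the second step
```

Going by the article's counts, dropping P3 removed 368,594 pairs, a little under half of what survived the first step.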

Model training

The researchers fine-tuned several models from an instance of LLaMA 7B (Touvron et al., 2023). The model associated with their initial public release was trained with LoRA (Hu et al., 2021) on the 437,605 post-processed examples for 4 epochs. Detailed model hyperparameters and training code can be found in the associated repository and model training logs.
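LoRA keeps each pretrained weight matrix W frozen and trains only a low-rank update, so the effective weight becomes W + BA, with B and A much smaller than W. The sketch below counts trainable parameters for a single 4096 × 4096 projection and shows the forward pass on plain Python lists. The hidden size 4096 comes from the LLaMA paper rather than this article, and the rank of 8 and the `scaling` factor are assumptions, since the article does not give the hyperparameters that were used.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole matrix is fine-tuned."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scaling=1.0):
    """Compute W x + scaling * B (A x); only A and B would receive gradients."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scaling * u for b, u in zip(base, update)]


D = 4096   # LLaMA 7B hidden size (from the LLaMA paper)
RANK = 8   # assumption: illustrative rank only

print(full_params(D, D))        # 16777216
print(lora_params(D, D, RANK))  # 65536
```

With these numbers the adapter trains 1/256 of the parameters of the full matrix. Because B is conventionally initialized to zero, the adapted model starts out identical to the base model.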

Reproducibility

The researchers released all data (including unused P3 generations), training code and model weights for the community to reproduce. Interested researchers can find the latest data, training details, and checkpoints in the Git repository.

Cost

It took the researchers about four days to produce these models. GPU costs came to $800 (rented from Lambda Labs and Paperspace, and including several failed training runs), on top of $500 in OpenAI API fees.

The final released model gpt4all-lora can be trained in approximately 8 hours on Lambda Labs’ DGX A100 8x 80GB, at a total cost of $100.
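The figures above add up as follows. The hourly rate is only implied by the article's numbers ($100 over roughly 8 hours); it is not a quoted price.

```python
gpu_cost_usd = 800        # rented GPUs, including failed runs
api_cost_usd = 500        # OpenAI API fees
final_run_cost_usd = 100  # training gpt4all-lora once
final_run_hours = 8       # approximate, per the article

total_project_cost = gpu_cost_usd + api_cost_usd
implied_hourly_rate = final_run_cost_usd / final_run_hours

print(total_project_cost)   # 1300
print(implied_hourly_rate)  # 12.5
```

The whole project therefore cost about $1,300, of which a single successful training run accounts for roughly $100.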

This model can be run on an ordinary laptop. As a netizen said, "There is no cost except the electricity bill."

Evaluation

The researchers conducted a preliminary evaluation of the model using the human evaluation data from the Self-Instruct paper (Wang et al., 2022). The report also compares this model's ground-truth perplexity with that of the best-known public alpaca-lora model (contributed by Hugging Face user chainyo). They found that all models had very large perplexities on a small number of tasks, so they reported perplexities clipped to a maximum of 100. Models fine-tuned on the collected dataset showed lower perplexity on the Self-Instruct evaluation than Alpaca. The researchers note that this evaluation is not exhaustive and leaves room for further work. They encourage readers to run the model on a local CPU (documentation is available on GitHub) and get a qualitative sense of its capabilities.
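Perplexity is the exponential of the average negative log-probability a model assigns to each token of a reference answer, so lower is better. The sketch below is a generic illustration: the log-probabilities are made up, and the `cap` argument simply mirrors the ceiling of 100 mentioned above rather than reproducing the report's evaluation code.

```python
import math


def perplexity(token_logprobs, cap=100.0):
    """exp(mean negative natural-log probability per token), limited to `cap`."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return min(math.exp(mean_nll), cap)


# A model that gives every reference token probability 0.25 has perplexity near 4.
confident = [math.log(0.25)] * 3
# A model that gives every reference token probability 1e-6 hits the cap.
lost = [math.log(1e-6)] * 3

print(perplexity(confident))
print(perplexity(lost))
```

Capping keeps a handful of tasks with extreme perplexities from dominating an average across tasks.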

Finally, note that the authors published the data and training details in the hope of accelerating open LLM research, especially in alignment and interpretability. The GPT4All model weights and data are intended for research purposes only, and the license prohibits any commercial use. GPT4All is based on LLaMA, which has a non-commercial license. The assistant data was collected from OpenAI's GPT-3.5-Turbo, whose terms of use prohibit developing models that compete commercially with OpenAI.

The above is the detailed content of A replacement for ChatGPT that can be run on a laptop is here, with a full technical report attached. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

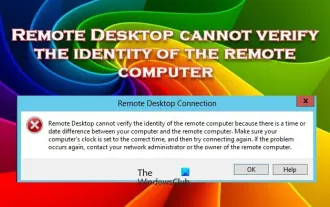

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Windows Remote Desktop Service allows users to access computers remotely, which is very convenient for people who need to work remotely. However, problems can be encountered when users cannot connect to the remote computer or when Remote Desktop cannot authenticate the computer's identity. This may be caused by network connection issues or certificate verification failure. In this case, the user may need to check the network connection, ensure that the remote computer is online, and try to reconnect. Also, ensuring that the remote computer's authentication options are configured correctly is key to resolving the issue. Such problems with Windows Remote Desktop Services can usually be resolved by carefully checking and adjusting settings. Remote Desktop cannot verify the identity of the remote computer due to a time or date difference. Please make sure your calculations

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

The 2024CSRankings National Computer Science Major Rankings have just been released! This year, in the ranking of the best CS universities in the United States, Carnegie Mellon University (CMU) ranks among the best in the country and in the field of CS, while the University of Illinois at Urbana-Champaign (UIUC) has been ranked second for six consecutive years. Georgia Tech ranked third. Then, Stanford University, University of California at San Diego, University of Michigan, and University of Washington tied for fourth place in the world. It is worth noting that MIT's ranking fell and fell out of the top five. CSRankings is a global university ranking project in the field of computer science initiated by Professor Emery Berger of the School of Computer and Information Sciences at the University of Massachusetts Amherst. The ranking is based on objective

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Current deep edge detection networks usually adopt an encoder-decoder architecture, which contains up and down sampling modules to better extract multi-level features. However, this structure limits the network to output accurate and detailed edge detection results. In response to this problem, a paper on AAAI2024 provides a new solution. Thesis title: DiffusionEdge:DiffusionProbabilisticModelforCrispEdgeDetection Authors: Ye Yunfan (National University of Defense Technology), Xu Kai (National University of Defense Technology), Huang Yuxing (National University of Defense Technology), Yi Renjiao (National University of Defense Technology), Cai Zhiping (National University of Defense Technology) Paper link: https ://ar

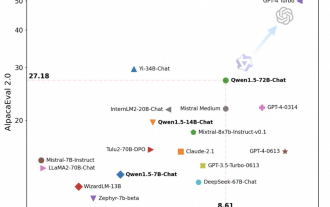

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

In time for the Spring Festival, version 1.5 of Tongyi Qianwen Model (Qwen) is online. This morning, the news of the new version attracted the attention of the AI community. The new version of the large model includes six model sizes: 0.5B, 1.8B, 4B, 7B, 14B and 72B. Among them, the performance of the strongest version surpasses GPT3.5 and Mistral-Medium. This version includes Base model and Chat model, and provides multi-language support. Alibaba’s Tongyi Qianwen team stated that the relevant technology has also been launched on the Tongyi Qianwen official website and Tongyi Qianwen App. In addition, today's release of Qwen 1.5 also has the following highlights: supports 32K context length; opens the checkpoint of the Base+Chat model;

Large models can also be sliced, and Microsoft SliceGPT greatly increases the computational efficiency of LLAMA-2

Jan 31, 2024 am 11:39 AM

Large models can also be sliced, and Microsoft SliceGPT greatly increases the computational efficiency of LLAMA-2

Jan 31, 2024 am 11:39 AM

Large language models (LLMs) typically have billions of parameters and are trained on trillions of tokens. However, such models are very expensive to train and deploy. In order to reduce computational requirements, various model compression techniques are often used. These model compression techniques can generally be divided into four categories: distillation, tensor decomposition (including low-rank factorization), pruning, and quantization. Pruning methods have been around for some time, but many require recovery fine-tuning (RFT) after pruning to maintain performance, making the entire process costly and difficult to scale. Researchers from ETH Zurich and Microsoft have proposed a solution to this problem called SliceGPT. The core idea of this method is to reduce the embedding of the network by deleting rows and columns in the weight matrix.

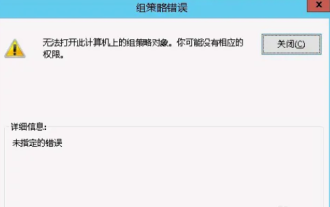

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Occasionally, the operating system may malfunction when using a computer. The problem I encountered today was that when accessing gpedit.msc, the system prompted that the Group Policy object could not be opened because the correct permissions may be lacking. The Group Policy object on this computer could not be opened. Solution: 1. When accessing gpedit.msc, the system prompts that the Group Policy object on this computer cannot be opened because of lack of permissions. Details: The system cannot locate the path specified. 2. After the user clicks the close button, the following error window pops up. 3. Check the log records immediately and combine the recorded information to find that the problem lies in the C:\Windows\System32\GroupPolicy\Machine\registry.pol file

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping