GPT-4's coding ability improves by 21%! A new method from MIT lets LLMs learn to reflect; netizens: it's the same way humans think

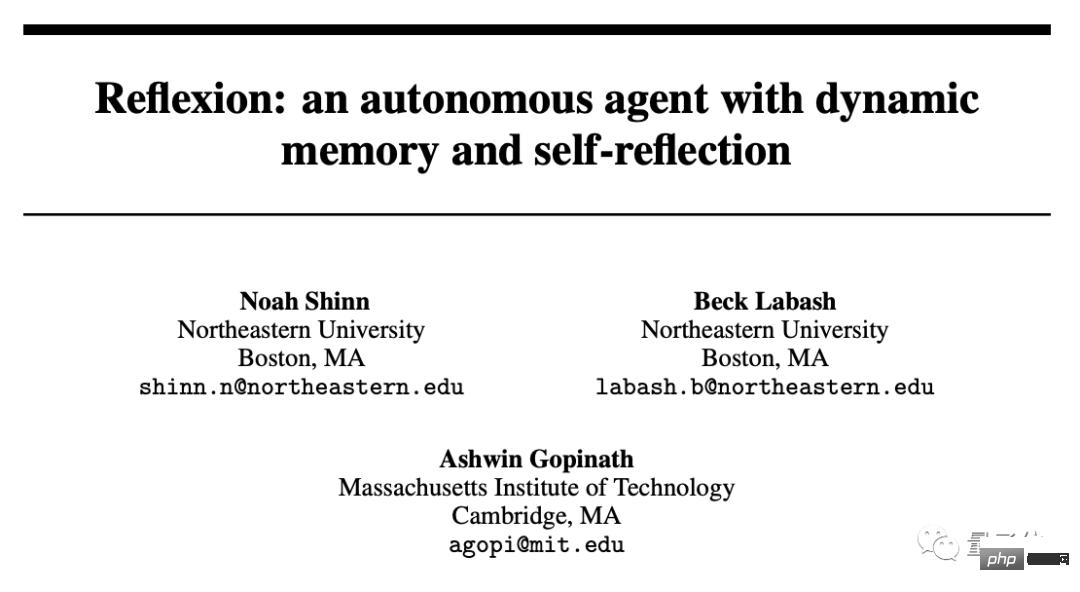

This article is reprinted with the authorization of AI New Media Qubit (public account ID: QbitAI). Please contact the source for reprinting.

GPT-4 evolves again!

With one simple method, large language models such as GPT-4 can learn to reflect on their own output, and their performance can improve by 30%.

Previously, when a large language model gave a wrong answer, it would often apologize straight away and then, after an "emmmmmmm", carry on guessing at random.

Not anymore. With the new method, GPT-4 not only reflects on where it went wrong but also proposes a strategy for improving.

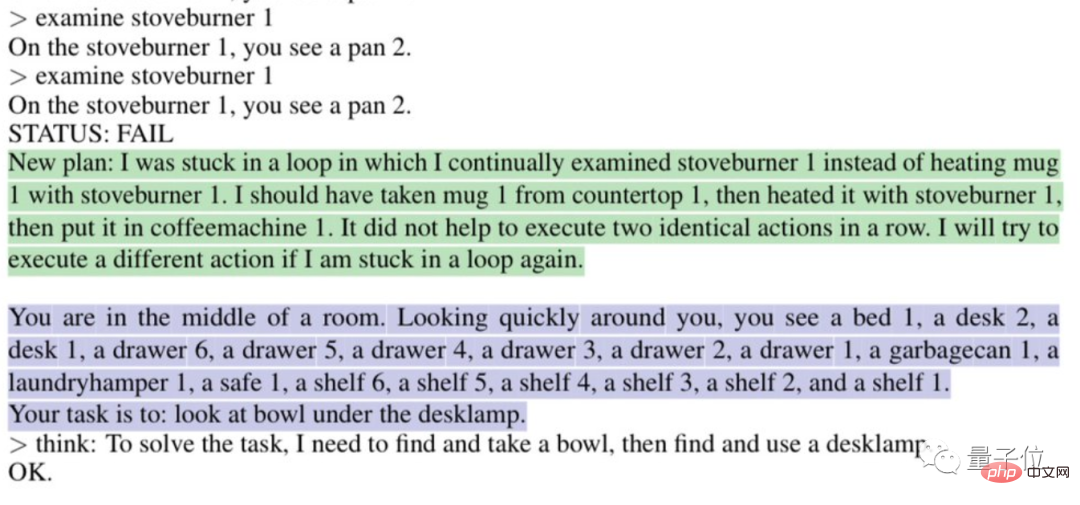

For example, it will automatically analyze why it is "stuck in a loop":

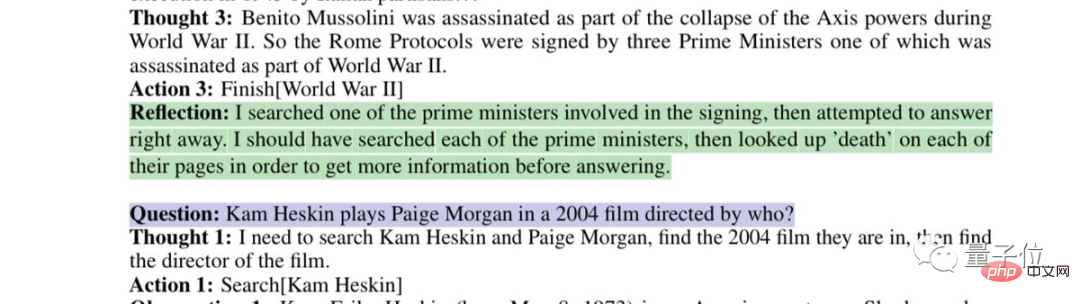

Or reflect on its own flawed search strategy:

This is the method in the latest paper published by Northeastern University and MIT: Reflexion.

It applies not only to GPT-4 but also to other large language models, letting them acquire the distinctly human ability to reflect.

The paper has been posted on the preprint platform arXiv.

This prompted netizens to say, "The speed of AI evolution has exceeded our ability to adapt, and we will be destroyed."

Some netizens even sent a "job warning" to developers:

Writing code this way costs less per hour than an ordinary developer.

Use the binary reward mechanism to achieve reflection

As netizens noted, the reflective ability that Reflexion gives GPT-4 resembles the human thinking process, and it can be summed up in one word: feedback.

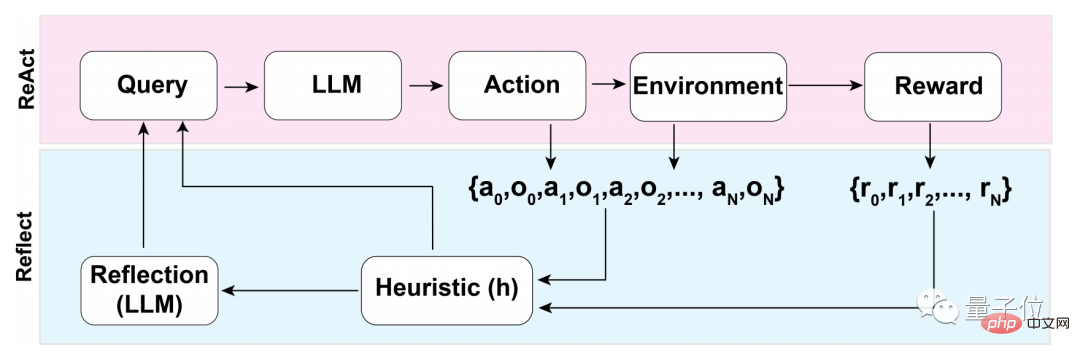

This feedback process can be divided into three major steps:

1. Evaluation: test the accuracy of the currently generated answer
2. Self-reflection generation: identify the error, then implement a correction
3. Iterative feedback loop: repeat the process

In the first step, evaluation, the LLM (large language model) must begin by assessing itself.

That is, the LLM must first reflect on its own answer in the absence of external feedback.

How to conduct self-reflection?

The research team used a binary reward mechanism to score the actions the LLM takes in its current state:

1 means the generated result is OK; 0 means the generated result is not good enough.

Binary rewards are used, rather than more descriptive schemes such as multi-valued or continuous outputs, because of the lack of external input.

To conduct self-reflection without external feedback, the answer must be restricted to binary states. Only in this way can the LLM be forced to make meaningful inferences.

After self-evaluation, if the binary reward is 1, self-reflection is not triggered. If it is 0, the LLM switches into reflection mode.
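The evaluate, reflect, and retry cycle described above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: `generate`, `evaluate`, and `reflect` are hypothetical stand-ins for LLM calls, `max_trials` is an assumed parameter, and the toy functions exist only so the sketch runs without a model.

```python
from typing import Callable, List, Tuple


def reflexion_loop(
    task: str,
    generate: Callable[[str, List[str]], str],
    evaluate: Callable[[str, str], int],
    reflect: Callable[[str, str], str],
    max_trials: int = 3,
) -> Tuple[str, int, List[str]]:
    """Retry a task, feeding earlier reflections back into generation.

    All three callables are hypothetical stand-ins for LLM calls.
    """
    reflections: List[str] = []
    answer = ""
    reward = 0
    for _ in range(max_trials):
        # Attempt the task, conditioned on any reflections so far.
        answer = generate(task, reflections)
        # Step 1: binary reward, 1 = OK, 0 = not good enough.
        reward = evaluate(task, answer)
        if reward == 1:
            break  # success: reflection is not triggered
        # Step 2: reflect on the failed attempt and remember the result.
        reflections.append(reflect(task, answer))
    # Step 3 is the loop itself.
    return answer, reward, reflections


# Toy stand-ins (assumptions for illustration only).
def toy_generate(task: str, reflections: List[str]) -> str:
    return "4" if reflections else "5"


def toy_evaluate(task: str, answer: str) -> int:
    return 1 if answer == "4" else 0


def toy_reflect(task: str, answer: str) -> str:
    return f"'{answer}' was wrong for '{task}'; recheck the arithmetic."


answer, reward, notes = reflexion_loop("2 + 2", toy_generate, toy_evaluate, toy_reflect)
print(answer, reward, len(notes))
```

In the toy run, the first attempt fails, one reflection is stored, and the second attempt succeeds, so the loop stops early.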

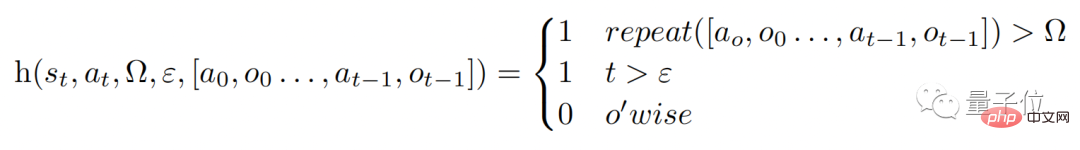

During the reflection process, the model triggers a heuristic function h. By analogy with human thinking, h plays the role of a supervisor.

However, as with human thinking, an LLM's reflection has limits, which are captured by the Ω and ε in the function.

Ω is the number of times an action may be repeated consecutively. It is generally set to 3, meaning that if a step is repeated three times during the reflection process, the model jumps directly to the next step.

ε is the maximum number of actions allowed during the reflection process.
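One possible reading of these two limits is a simple check over the list of actions taken so far. The sketch below is an interpretation, not the paper's formula: the function name `needs_reflection` is hypothetical, Ω = 3 follows the article, and the default of 30 for ε is a placeholder chosen here because the article gives no value.

```python
from typing import List


def needs_reflection(actions: List[str], omega: int = 3, epsilon: int = 30) -> bool:
    """Return True when the trajectory looks stuck or too long.

    omega:   consecutive repeats of one action that count as being stuck
             (3, following the article).
    epsilon: maximum number of actions allowed (30 is a placeholder value,
             not taken from the article).
    """
    # Too many actions overall.
    if len(actions) > epsilon:
        return True
    # Look for a run of `omega` identical consecutive actions.
    run = 1
    for prev, cur in zip(actions, actions[1:]):
        run = run + 1 if cur == prev else 1
        if run >= omega:
            return True
    return False


print(needs_reflection(["look", "look", "look"]))
print(needs_reflection(["look", "go north", "look"]))
```

The first call has three identical actions in a row and trips the Ω check; the second does not repeat any action consecutively.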

Where there is supervision, there must also be correction, and this is the job of a self-reflection model.

The self-reflection model learns from "domain-specific failed trajectories paired with ideal reflections" and is not given access to domain-specific solutions to the problems in the dataset.

This lets the LLM come up with more "innovative" ideas while reflecting.
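One way such pairs could be supplied, assumed here rather than taken from the article, is as in-context examples in a prompt. The sketch below uses a hypothetical `ReflectionExample` type and a made-up prompt template. The point it illustrates is that only failed attempts and their reflections are included, never a reference solution.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReflectionExample:
    failed_trajectory: str  # what the agent did in a failed attempt
    ideal_reflection: str   # the reflection we would like the model to produce


def build_reflection_prompt(examples: List[ReflectionExample], new_failure: str) -> str:
    """Assemble a prompt from (failure, reflection) pairs plus a new failure.

    The template is hypothetical; note that no reference solution appears.
    """
    parts = []
    for ex in examples:
        parts.append(
            f"Failed attempt:\n{ex.failed_trajectory}\n"
            f"Reflection:\n{ex.ideal_reflection}\n"
        )
    # Leave the final reflection open for the model to complete.
    parts.append(f"Failed attempt:\n{new_failure}\nReflection:")
    return "\n".join(parts)


examples = [
    ReflectionExample(
        failed_trajectory="Searched the same page three times without new results.",
        ideal_reflection="I was stuck in a loop; I should try a different query.",
    )
]
prompt = build_reflection_prompt(examples, "Opened every drawer but never checked the desk.")
print(prompt)
```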

Performance rises by nearly 30% after reflection

Now that LLMs such as GPT-4 can reflect on themselves, how well does it actually work?

The research team evaluated this approach on the ALFWorld and HotpotQA benchmarks.

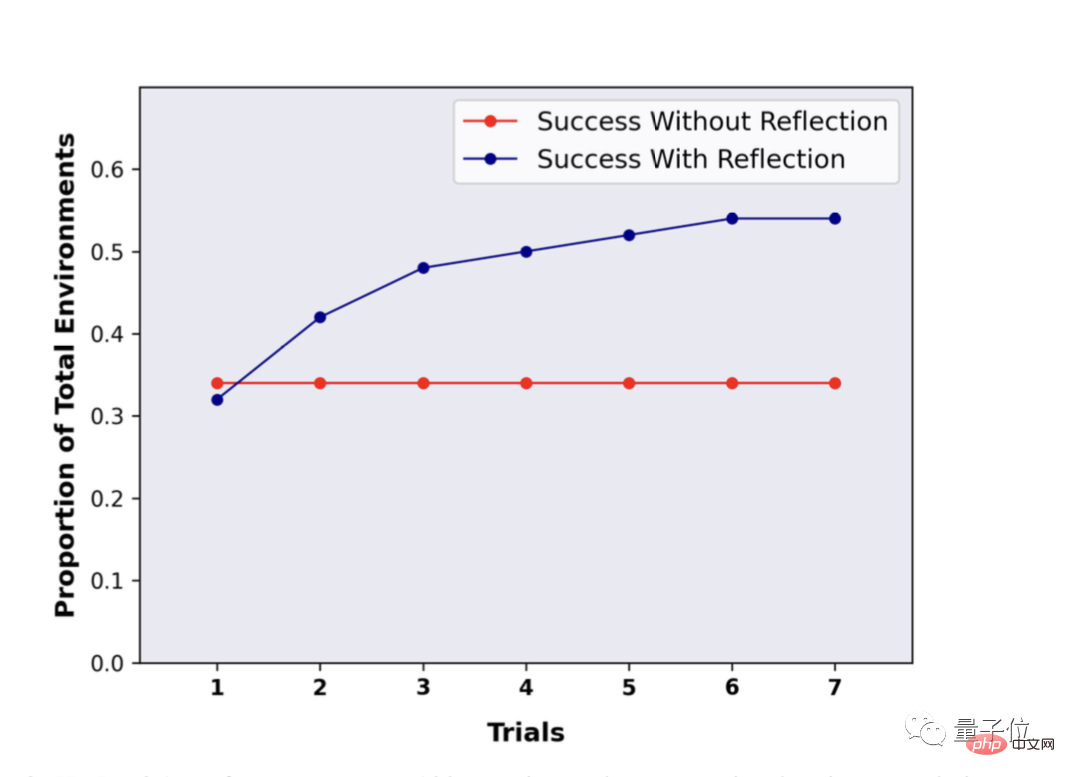

On a HotpotQA test of 100 question-answer pairs, LLMs using Reflexion showed a clear advantage: after multiple rounds of reflection and re-asking, performance improved by nearly 30%.

Without Reflexion, performance did not change across repeated rounds of Q&A.

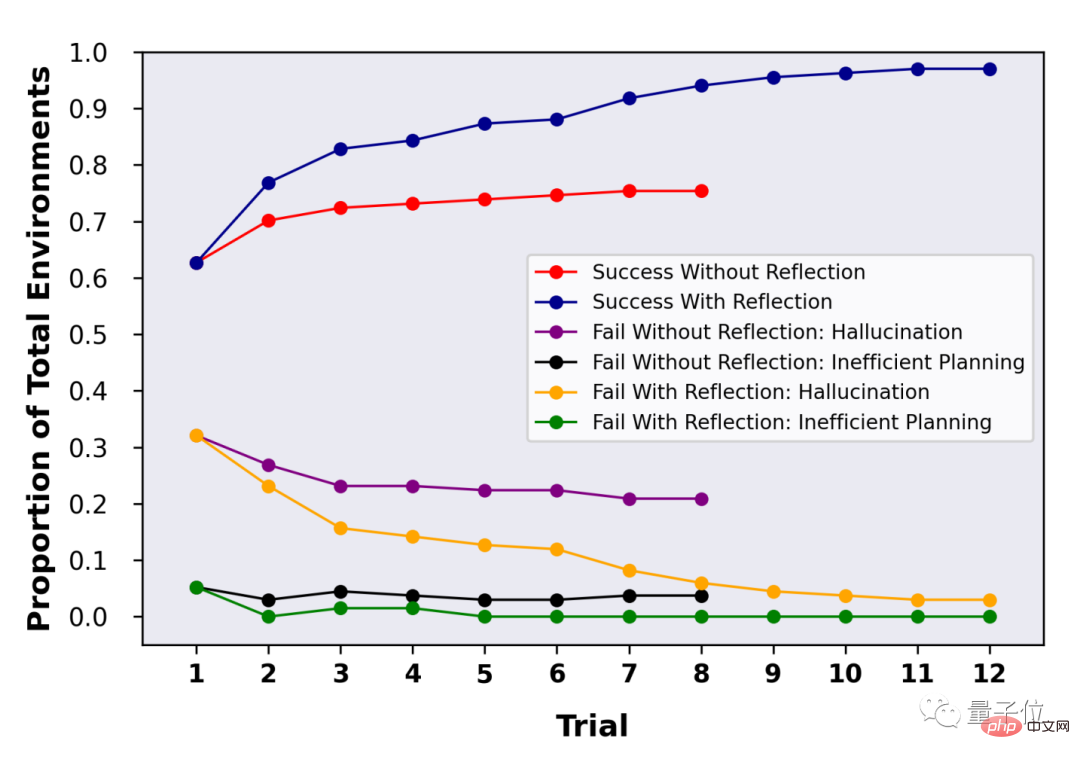

On ALFWorld's 134 tasks, with the support of Reflexion, the LLM's success rate reached 97% after multiple rounds of reflection.

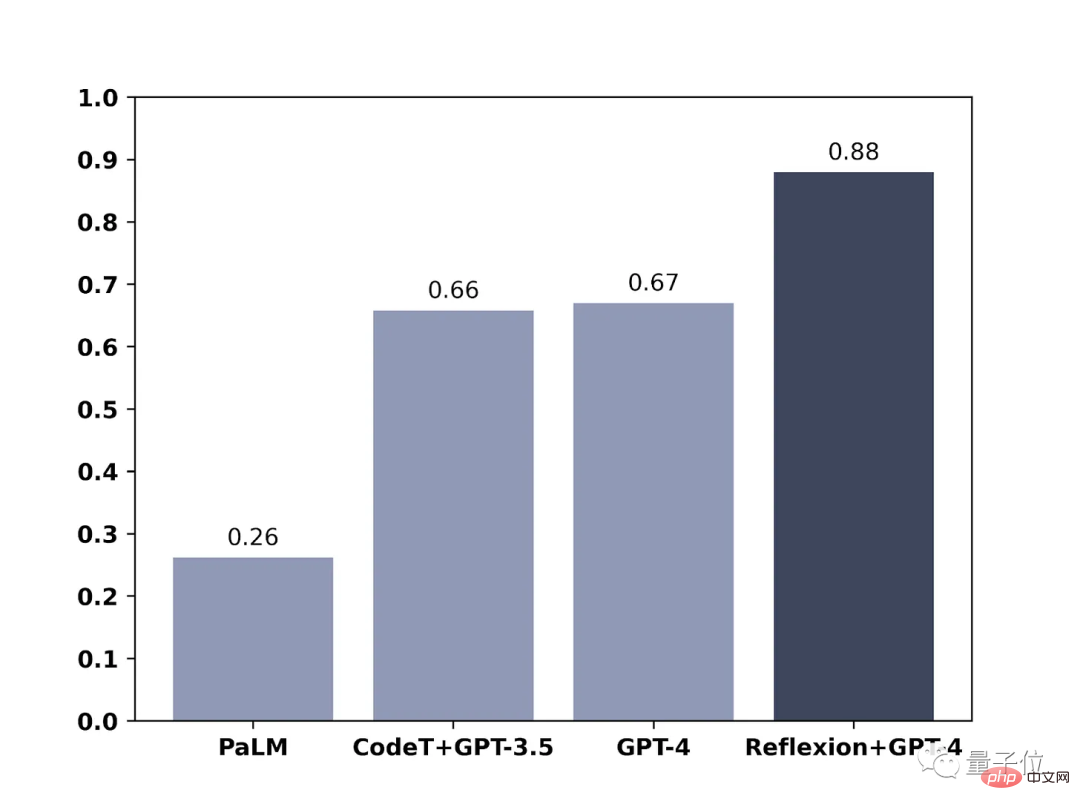

In a separate blog post, team members also showed the effect of their method on GPT-4, this time on writing code.

The results are just as clear: with Reflexion, GPT-4's programming ability improved by 21%.

GPT-4 already knows how to "think". What do you make of that (are you panicking yet)?

Paper address: https://arxiv.org/abs/2303.11366

The above is the detailed content of GPT-4 coding ability improved by 21%! MIT's new method allows LLM to learn to reflect, netizen: It's the same way as humans think. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

ICCV'23 paper award 'Fighting of Gods'! Meta Divide Everything and ControlNet were jointly selected, and there was another article that surprised the judges

Oct 04, 2023 pm 08:37 PM

ICCV'23 paper award 'Fighting of Gods'! Meta Divide Everything and ControlNet were jointly selected, and there was another article that surprised the judges

Oct 04, 2023 pm 08:37 PM

ICCV2023, the top computer vision conference held in Paris, France, has just ended! This year's best paper award is simply a "fight between gods". For example, the two papers that won the Best Paper Award included ControlNet, a work that subverted the field of Vincentian graph AI. Since being open sourced, ControlNet has received 24k stars on GitHub. Whether it is for diffusion models or the entire field of computer vision, this paper's award is well-deserved. The honorable mention for the best paper award was awarded to another equally famous paper, Meta's "Separate Everything" ”Model SAM. Since its launch, "Segment Everything" has become the "benchmark" for various image segmentation AI models, including those that came from behind.

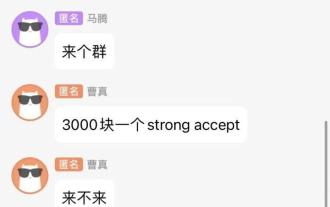

Chat screenshots reveal the hidden rules of AI review! AAAI 3000 yuan is strong accept?

Apr 12, 2023 am 08:34 AM

Chat screenshots reveal the hidden rules of AI review! AAAI 3000 yuan is strong accept?

Apr 12, 2023 am 08:34 AM

Just as the AAAI 2023 paper submission deadline was approaching, a screenshot of an anonymous chat in the AI submission group suddenly appeared on Zhihu. One of them claimed that he could provide "3,000 yuan a strong accept" service. As soon as the news came out, it immediately aroused public outrage among netizens. However, don’t rush yet. Zhihu boss "Fine Tuning" said that this is most likely just a "verbal pleasure". According to "Fine Tuning", greetings and gang crimes are unavoidable problems in any field. With the rise of openreview, the various shortcomings of cmt have become more and more clear. The space left for small circles to operate will become smaller in the future, but there will always be room. Because this is a personal problem, not a problem with the submission system and mechanism. Introducing open r

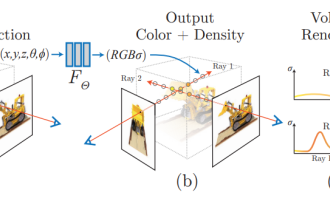

NeRF and the past and present of autonomous driving, a summary of nearly 10 papers!

Nov 14, 2023 pm 03:09 PM

NeRF and the past and present of autonomous driving, a summary of nearly 10 papers!

Nov 14, 2023 pm 03:09 PM

Since Neural Radiance Fields was proposed in 2020, the number of related papers has increased exponentially. It has not only become an important branch of three-dimensional reconstruction, but has also gradually become active at the research frontier as an important tool for autonomous driving. NeRF has suddenly emerged in the past two years, mainly because it skips the feature point extraction and matching, epipolar geometry and triangulation, PnP plus Bundle Adjustment and other steps of the traditional CV reconstruction pipeline, and even skips mesh reconstruction, mapping and light tracing, directly from 2D The input image is used to learn a radiation field, and then a rendered image that approximates a real photo is output from the radiation field. In other words, let an implicit three-dimensional model based on a neural network fit the specified perspective

Paper illustrations can also be automatically generated, using the diffusion model, and are also accepted by ICLR.

Jun 27, 2023 pm 05:46 PM

Paper illustrations can also be automatically generated, using the diffusion model, and are also accepted by ICLR.

Jun 27, 2023 pm 05:46 PM

Generative AI has taken the artificial intelligence community by storm. Both individuals and enterprises have begun to be keen on creating related modal conversion applications, such as Vincent pictures, Vincent videos, Vincent music, etc. Recently, several researchers from scientific research institutions such as ServiceNow Research and LIVIA have tried to generate charts in papers based on text descriptions. To this end, they proposed a new method of FigGen, and the related paper was also included in ICLR2023 as TinyPaper. Picture paper address: https://arxiv.org/pdf/2306.00800.pdf Some people may ask, what is so difficult about generating the charts in the paper? How does this help scientific research?

The Chinese team won the best paper and best system paper awards, and the CoRL research results were announced.

Nov 10, 2023 pm 02:21 PM

The Chinese team won the best paper and best system paper awards, and the CoRL research results were announced.

Nov 10, 2023 pm 02:21 PM

Since it was first held in 2017, CoRL has become one of the world's top academic conferences in the intersection of robotics and machine learning. CoRL is a single-theme conference for robot learning research, covering multiple topics such as robotics, machine learning and control, including theory and application. The 2023 CoRL Conference will be held in Atlanta, USA, from November 6th to 9th. According to official data, 199 papers from 25 countries were selected for CoRL this year. Popular topics include operations, reinforcement learning, and more. Although CoRL is smaller in scale than large AI academic conferences such as AAAI and CVPR, as the popularity of concepts such as large models, embodied intelligence, and humanoid robots increases this year, relevant research worthy of attention will also

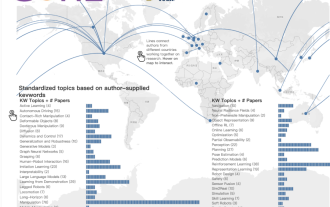

CVPR 2023 rankings released, the acceptance rate is 25.78%! 2,360 papers were accepted, and the number of submissions surged to 9,155

Apr 13, 2023 am 09:37 AM

CVPR 2023 rankings released, the acceptance rate is 25.78%! 2,360 papers were accepted, and the number of submissions surged to 9,155

Apr 13, 2023 am 09:37 AM

Just now, CVPR 2023 issued an article saying: This year, we received a record 9155 papers (12% more than CVPR2022), and accepted 2360 papers, with an acceptance rate of 25.78%. According to statistics, the number of submissions to CVPR only increased from 1,724 to 2,145 in the 7 years from 2010 to 2016. After 2017, it soared rapidly and entered a period of rapid growth. In 2019, it exceeded 5,000 for the first time, and by 2022, the number of submissions had reached 8,161. As you can see, a total of 9,155 papers were submitted this year, indeed setting a record. After the epidemic is relaxed, this year’s CVPR summit will be held in Canada. This year it will be a single-track conference and the traditional Oral selection will be cancelled. google research

Microsoft's new hot paper: Transformer expands to 1 billion tokens

Jul 22, 2023 pm 03:34 PM

Microsoft's new hot paper: Transformer expands to 1 billion tokens

Jul 22, 2023 pm 03:34 PM

As everyone continues to upgrade and iterate their own large models, the ability of LLM (large language model) to process context windows has also become an important evaluation indicator. For example, the star model GPT-4 supports 32k tokens, which is equivalent to 50 pages of text; Anthropic, founded by a former member of OpenAI, has increased Claude's token processing capabilities to 100k, which is about 75,000 words, which is roughly equivalent to summarizing "Harry Potter" with one click "First. In Microsoft's latest research, they directly expanded Transformer to 1 billion tokens this time. This opens up new possibilities for modeling very long sequences, such as treating an entire corpus or even the entire Internet as one sequence. For comparison, common