Microsoft proposes OTO, an automated neural network training and pruning framework that produces high-performance lightweight models in one stop


OTO is the industry’s first automated, one-stop, user-friendly and versatile neural network training and structure compression framework.

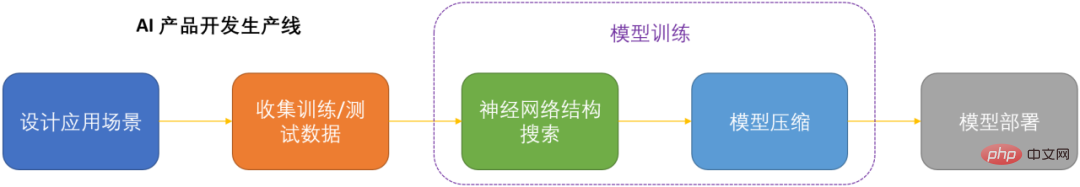

In the era of artificial intelligence, deploying and maintaining neural networks is a key issue for productization. To save computing cost while keeping the loss in model performance as small as possible, neural network compression has become one of the keys to productizing deep neural networks (DNNs).

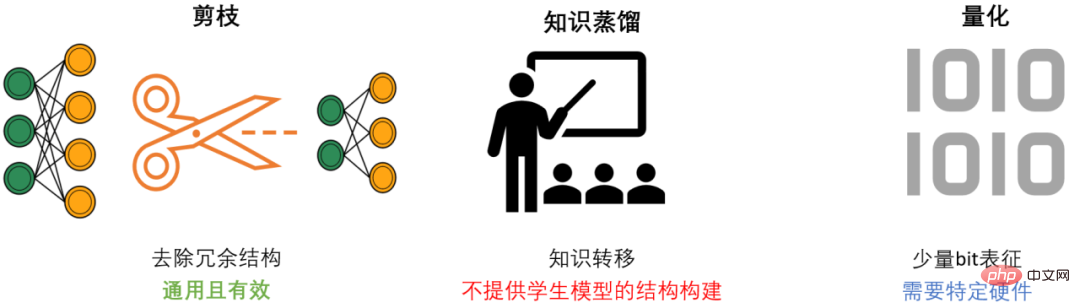

DNN compression generally relies on three methods: pruning, knowledge distillation, and quantization. Pruning aims to identify and remove redundant structures, slimming down the DNN while preserving model performance as much as possible. It is the most versatile and effective of the compression methods. Generally speaking, the three methods complement each other and can be combined to achieve the best compression effect.

However, most existing pruning methods target only specific models and specific tasks and require strong domain expertise, so AI developers usually have to spend a great deal of effort to apply them to their own scenarios, which consumes considerable manpower and resources.

OTO Overview

To solve the problems of existing pruning methods and make life easier for AI developers, the Microsoft team proposed the Only-Train-Once (OTO) framework. OTO is the industry's first automated, one-stop, user-friendly and general-purpose neural network training and structure compression framework. The series of work has been published at ICLR 2023 and NeurIPS 2021.

By using OTO, AI engineers can easily train target neural networks and obtain high-performance and lightweight models in one stop. OTO minimizes the developer's investment in engineering time and effort, and does not require the time-consuming pre-training and additional model fine-tuning that existing methods usually require.

- Paper link:

- OTOv2 ICLR 2023: https://openreview.net/pdf?id=7ynoX1ojPMt

- OTOv1 NeurIPS 2021: https://proceedings.neurips.cc/paper_files/paper/2021/file/a376033f78e144f494bfc743c0be3330-Paper.pdf

- Code link:

https://github.com/tianyic/only_train_once

Framework Core Algorithm

An ideal structured pruning algorithm should be able to train a general neural network from scratch, automatically and in one stop, and produce a model that is both high-performing and lightweight, without any follow-up fine-tuning. Because neural networks are so complex, achieving this goal is extremely challenging. To reach it, the following three core questions need to be addressed systematically:

- How to find out which network structures can be removed?

- How to remove those structures while losing as little model performance as possible?

- How can we accomplish the above two points automatically?

The Microsoft team designed and implemented three sets of core algorithms, systematically and comprehensively solving these three core problems for the first time.

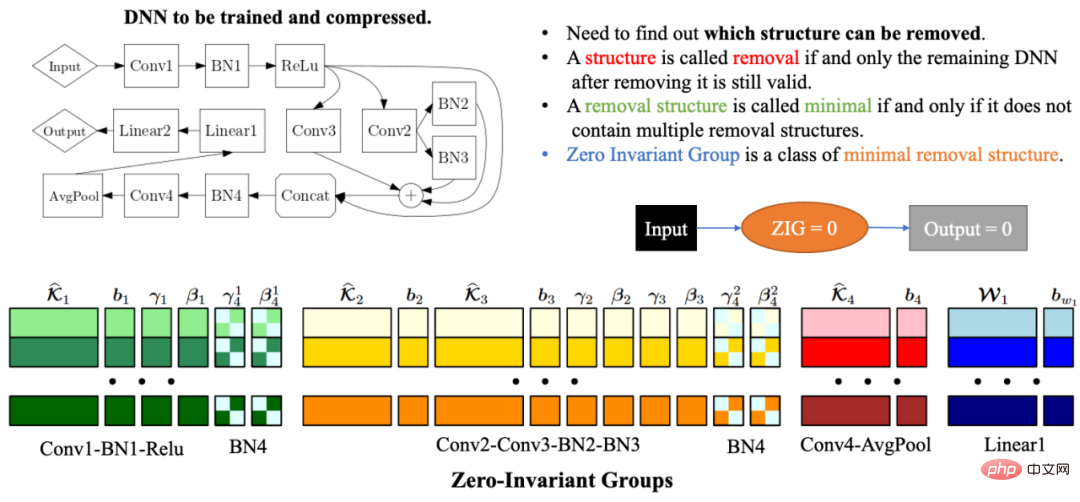

Automated Zero-Invariant Group (ZIG) partitioning

Because the structures in a network are complex and interdependent, deleting an arbitrary structure may leave the remaining network invalid. One of the biggest problems in automated structure compression is therefore how to find the model parameters that must be pruned together so that the remaining network is still valid. To solve this problem, the Microsoft team proposed Zero-Invariant Groups (ZIGs) in OTOv1. A zero-invariant group can be understood as a minimal removable unit: after the network structure corresponding to the group is removed, the remaining network is still valid. Another useful property of a zero-invariant group is that if all of its parameters are equal to zero, the corresponding output is always zero, no matter what the input is. In OTOv2, the researchers further proposed and implemented an automated algorithm that partitions a general network into zero-invariant groups. The automated grouping algorithm is a carefully designed combination of several graph algorithms; it is efficient, with linear time and space complexity.
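The zero-output property can be illustrated with a toy example. The sketch below is not taken from the OTO code base: it assumes a small fully connected layer followed by ReLU, and it assumes that one group consists of a weight row plus its bias. All names (`linear`, `unit_output`, and so on) are hypothetical.

```python
# Illustrative only: a tiny fully connected layer written with plain lists.
def relu(v):
    return [max(0.0, x) for x in v]

def linear(weight, bias, x):
    """Compute weight @ x + bias for a weight given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

# Layer with 3 output units and 2 inputs. In this sketch each candidate
# group is one weight row together with its bias (an assumption).
weight = [[0.5, -1.0],
          [0.0,  0.0],   # group 1 has been driven to zero
          [2.0,  0.3]]
bias = [0.1, 0.0, -0.2]

def unit_output(x, unit):
    """Output of a single unit after the ReLU activation."""
    return relu(linear(weight, bias, x))[unit]

# Whatever the input is, the unit that belongs to the zero group outputs zero.
inputs = [[1.0, 2.0], [-3.5, 4.0], [100.0, -7.0]]
zero_group_outputs = [unit_output(x, 1) for x in inputs]
print(zero_group_outputs)
```

Because the unit contributes nothing for any input, it can later be removed without changing what the rest of the network computes.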

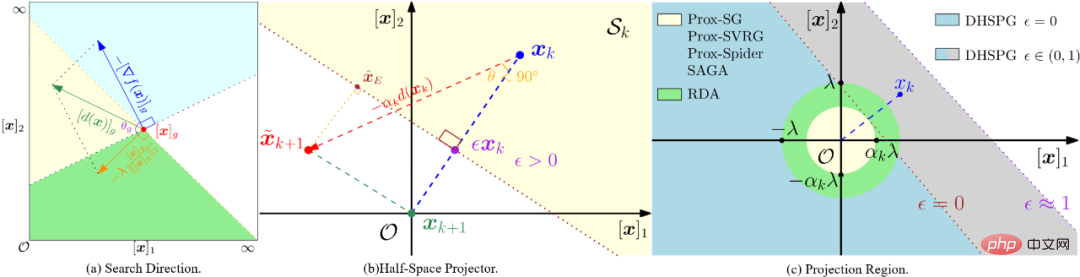

Dual Half-Space Projected Gradient (DHSPG) optimization algorithm

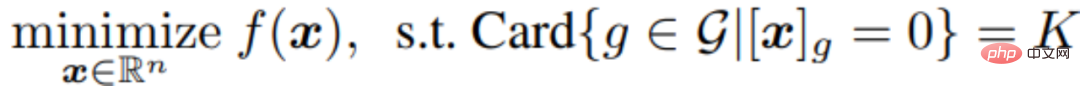

Once the target network has been partitioned into zero-invariant groups, the subsequent training and pruning must determine which groups are redundant and which are important. The network structures corresponding to redundant zero-invariant groups are deleted, while the important groups are retained to preserve the performance of the compressed model. The researchers formulated this as a structured sparsification problem and proposed a new Dual Half-Space Projected Gradient (DHSPG) optimization algorithm to solve it.

DHSPG effectively identifies redundant zero-invariant groups and projects them to zero, while continuing to train the important groups to reach performance comparable to the original model.

Compared with traditional sparse optimization algorithms, DHSPG explores sparse structures more strongly and more stably, and it enlarges the training search space, so it usually achieves better results in practice.
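The idea of projecting a whole group to zero can be sketched as follows. This is a simplified half-space projection step, not the authors' DHSPG: the split into important and redundant groups and any regularization term are omitted, and the projection rule, function names, learning rate and `eps` are assumptions made for illustration.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def half_space_step(groups, grads, lr=0.1, eps=0.0):
    """One simplified update over a dict {group_name: list of floats}.

    Each group takes a plain gradient step. If the trial point leaves the
    half-space {z : z . x >= eps * ||x||^2} defined by the current value x,
    the whole group is projected to zero.
    """
    updated = {}
    for name, x in groups.items():
        trial = [xi - lr * gi for xi, gi in zip(x, grads[name])]
        if dot(trial, x) < eps * dot(x, x):
            updated[name] = [0.0] * len(x)   # treated as redundant: zero the group
        else:
            updated[name] = trial            # treated as important: keep training
    return updated

# Hypothetical parameter groups and gradients.
groups = {"g_keep": [1.0, -2.0], "g_drop": [0.05, 0.02]}
grads = {"g_keep": [0.1, 0.1], "g_drop": [1.0, 0.5]}
new_groups = half_space_step(groups, grads)
print(new_groups)
```

In this toy run the gradient step pushes `g_drop` across the origin, so the entire group is set to zero, while `g_keep` simply receives an ordinary gradient update.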

Automatically building a lightweight compressed model

Training the model with DHSPG yields a solution with high structural sparsity over the zero-invariant groups, that is, a solution in which many zero-invariant groups have been projected to zero, and which also has high model performance. Next, the researchers delete all structures corresponding to the redundant zero-invariant groups to build a compressed network automatically. Because a zero-invariant group that equals zero always produces a zero output regardless of the input, deleting the redundant groups has no effect on the network. The compressed network obtained through OTO therefore produces the same output as the full network, without the further fine-tuning that traditional methods require.
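The claim that the compressed network matches the full network can be checked on a toy two-layer model. The sketch below is a minimal illustration and not OTO's construction procedure: it assumes a group is one hidden unit (its weight row and bias), and `prune_zero_units` is a hypothetical helper that drops zero units together with the matching input column of the next layer.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(weight, bias, x):
    """Compute weight @ x + bias for a weight given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

def forward(w1, b1, w2, b2, x):
    """Two-layer network: linear -> ReLU -> linear."""
    return linear(w2, b2, relu(linear(w1, b1, x)))

def prune_zero_units(w1, b1, w2):
    """Drop hidden units whose row in w1 and bias in b1 are all zero,
    together with the matching input column of the next layer w2."""
    keep = [i for i, (row, b) in enumerate(zip(w1, b1))
            if any(v != 0.0 for v in row) or b != 0.0]
    w1_small = [w1[i] for i in keep]
    b1_small = [b1[i] for i in keep]
    w2_small = [[row[i] for i in keep] for row in w2]
    return w1_small, b1_small, w2_small

# Full model: hidden unit 1 is a zero group.
w1 = [[0.5, -1.0], [0.0, 0.0], [2.0, 0.3]]
b1 = [0.1, 0.0, -0.2]
w2 = [[1.0, 4.0, -0.5], [0.2, -3.0, 0.7]]
b2 = [0.0, 1.0]

w1_s, b1_s, w2_s = prune_zero_units(w1, b1, w2)
x = [1.5, -2.0]
full_out = forward(w1, b1, w2, b2, x)
small_out = forward(w1_s, b1_s, w2_s, b2, x)
print(full_out, small_out)
```

The pruned model has two hidden units instead of three, yet both forward passes return the same values, because the removed unit only ever contributed zeros.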

Numerical experiments

Classification task

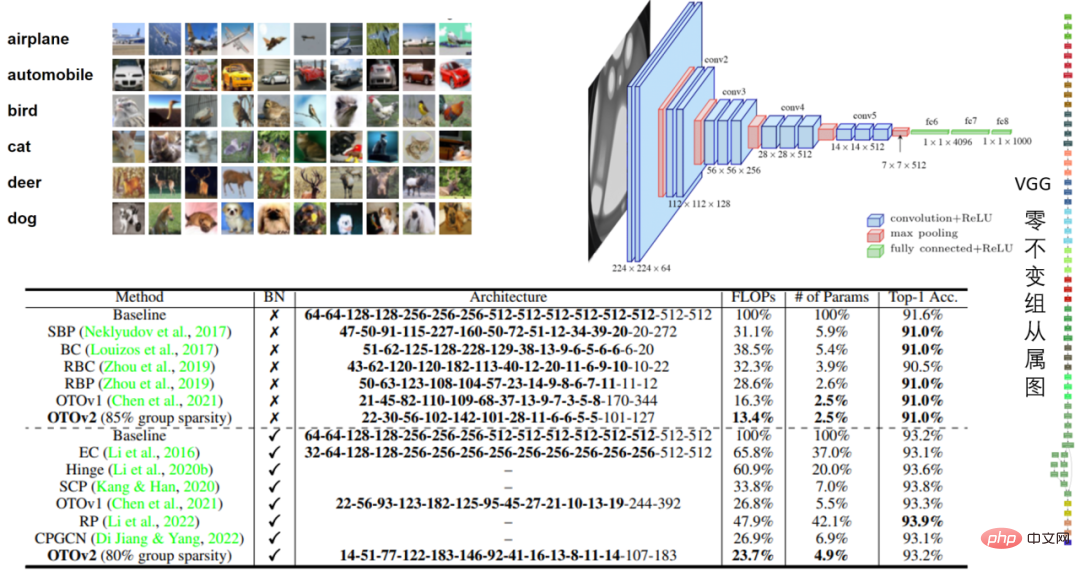

Table 1: Performance of the VGG16 and VGG16-BN models on CIFAR10

In the VGG16 experiment on CIFAR10, OTO reduced floating-point operations (FLOPs) by 86.6% and the number of parameters by 97.5%, an impressive degree of compression.

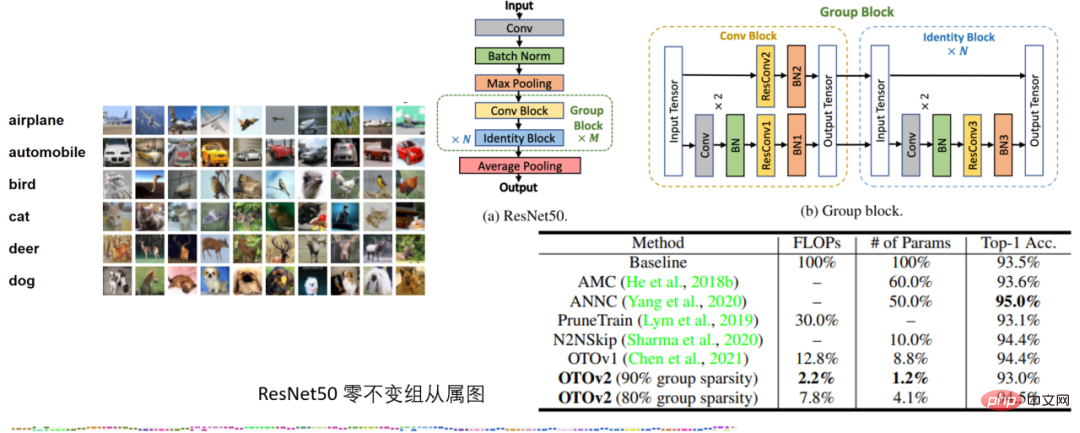

Table 2: ResNet50 experiment on CIFAR10

In the ResNet50 experiment on CIFAR10, OTO, without using quantization, outperforms the SOTA neural network compression frameworks AMC and ANNC while using only 7.8% of the FLOPs and 4.1% of the parameters.

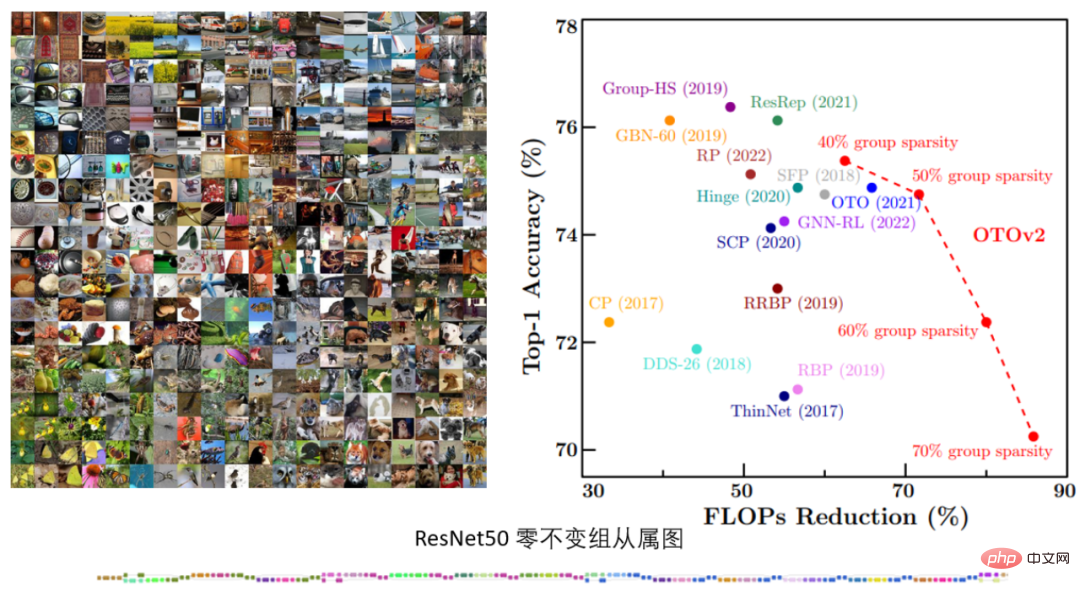

Table 3: ResNet50 experiment on ImageNet

In the ResNet50 experiment on ImageNet, OTOv2 shows performance comparable to, or even better than, existing SOTA methods under different structural sparsity targets.

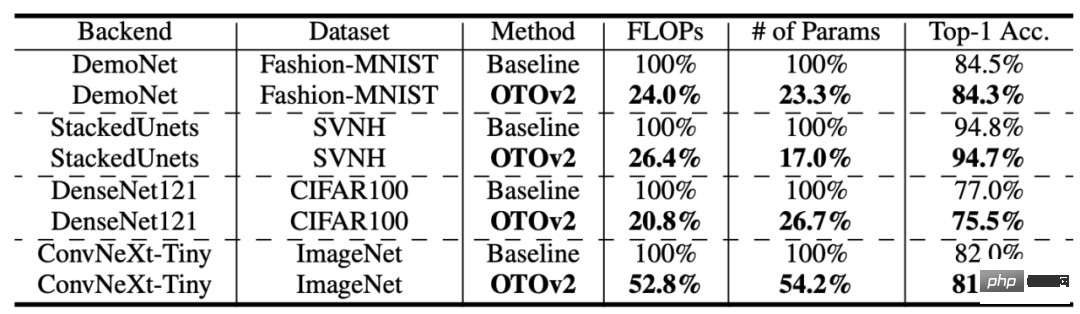

Table 4: More structures and data sets

OTO also performs well on additional datasets and model structures.

Low-Level Vision Task

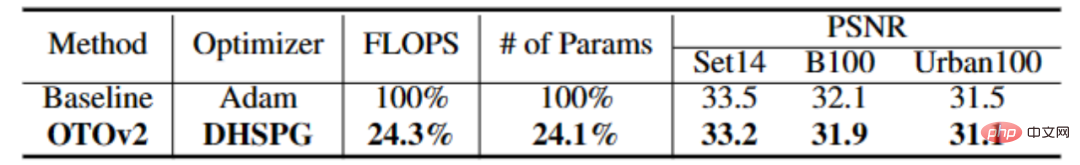

Table 4: Experiment of CARNx2

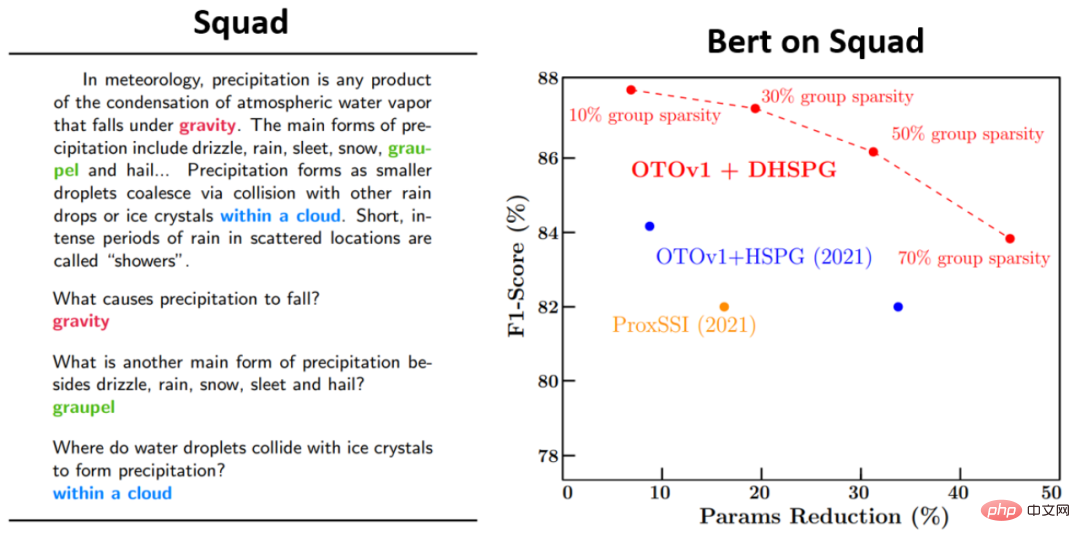

In the super-resolution task, OTO trained and compressed the CARNx2 network in one stop, achieving performance competitive with the original model while reducing both the amount of computation and the model size by more than 75%.

Language model task

The above is the detailed content of Microsoft proposes OTO, an automated neural network training pruning framework, to obtain high-performance lightweight models in one stop. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

Microsoft releases Win11 August cumulative update: improving security, optimizing lock screen, etc.

Aug 14, 2024 am 10:39 AM

Microsoft releases Win11 August cumulative update: improving security, optimizing lock screen, etc.

Aug 14, 2024 am 10:39 AM

According to news from this site on August 14, during today’s August Patch Tuesday event day, Microsoft released cumulative updates for Windows 11 systems, including the KB5041585 update for 22H2 and 23H2, and the KB5041592 update for 21H2. After the above-mentioned equipment is installed with the August cumulative update, the version number changes attached to this site are as follows: After the installation of the 21H2 equipment, the version number increased to Build22000.314722H2. After the installation of the equipment, the version number increased to Build22621.403723H2. After the installation of the equipment, the version number increased to Build22631.4037. The main contents of the KB5041585 update for Windows 1121H2 are as follows: Improvement: Improved

Microsoft Edge upgrade: Automatic password saving function banned? ! Users were shocked!

Apr 19, 2024 am 08:13 AM

Microsoft Edge upgrade: Automatic password saving function banned? ! Users were shocked!

Apr 19, 2024 am 08:13 AM

News on April 18th: Recently, some users of the Microsoft Edge browser using the Canary channel reported that after upgrading to the latest version, they found that the option to automatically save passwords was disabled. After investigation, it was found that this was a minor adjustment after the browser upgrade, rather than a cancellation of functionality. Before using the Edge browser to access a website, users reported that the browser would pop up a window asking if they wanted to save the login password for the website. After choosing to save, Edge will automatically fill in the saved account number and password the next time you log in, providing users with great convenience. But the latest update resembles a tweak, changing the default settings. Users need to choose to save the password and then manually turn on automatic filling of the saved account and password in the settings.

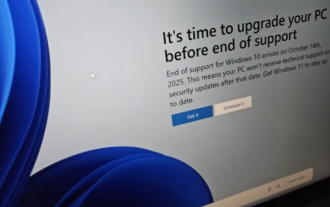

Microsoft's full-screen pop-up urges Windows 10 users to hurry up and upgrade to Windows 11

Jun 06, 2024 am 11:35 AM

Microsoft's full-screen pop-up urges Windows 10 users to hurry up and upgrade to Windows 11

Jun 06, 2024 am 11:35 AM

According to news on June 3, Microsoft is actively sending full-screen notifications to all Windows 10 users to encourage them to upgrade to the Windows 11 operating system. This move involves devices whose hardware configurations do not support the new system. Since 2015, Windows 10 has occupied nearly 70% of the market share, firmly establishing its dominance as the Windows operating system. However, the market share far exceeds the 82% market share, and the market share far exceeds that of Windows 11, which will be released in 2021. Although Windows 11 has been launched for nearly three years, its market penetration is still slow. Microsoft has announced that it will terminate technical support for Windows 10 after October 14, 2025 in order to focus more on

Microsoft Win11's function of compressing 7z and TAR files has been downgraded from 24H2 to 23H2/22H2 versions

Apr 28, 2024 am 09:19 AM

Microsoft Win11's function of compressing 7z and TAR files has been downgraded from 24H2 to 23H2/22H2 versions

Apr 28, 2024 am 09:19 AM

According to news from this site on April 27, Microsoft released the Windows 11 Build 26100 preview version update to the Canary and Dev channels earlier this month, which is expected to become a candidate RTM version of the Windows 1124H2 update. The main changes in the new version are the file explorer, Copilot integration, editing PNG file metadata, creating TAR and 7z compressed files, etc. @PhantomOfEarth discovered that Microsoft has devolved some functions of the 24H2 version (Germanium) to the 23H2/22H2 (Nickel) version, such as creating TAR and 7z compressed files. As shown in the diagram, Windows 11 will support native creation of TAR

Microsoft Edge browser update: Added "zoom in image" function to improve user experience

Mar 21, 2024 pm 01:40 PM

Microsoft Edge browser update: Added "zoom in image" function to improve user experience

Mar 21, 2024 pm 01:40 PM

According to news on March 21, Microsoft recently updated its Microsoft Edge browser and added a practical "enlarge image" function. Now, when using the Edge browser, users can easily find this new feature in the pop-up menu by simply right-clicking on the image. What’s more convenient is that users can also hover the cursor over the image and then double-click the Ctrl key to quickly invoke the function of zooming in on the image. According to the editor's understanding, the newly released Microsoft Edge browser has been tested for new features in the Canary channel. The stable version of the browser has also officially launched the practical "enlarge image" function, providing users with a more convenient image browsing experience. Foreign science and technology media also paid attention to this

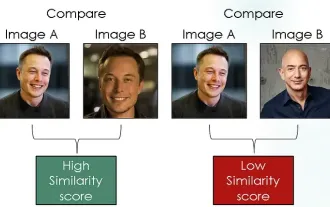

Exploring Siamese networks using contrastive loss for image similarity comparison

Apr 02, 2024 am 11:37 AM

Exploring Siamese networks using contrastive loss for image similarity comparison

Apr 02, 2024 am 11:37 AM

Introduction In the field of computer vision, accurately measuring image similarity is a critical task with a wide range of practical applications. From image search engines to facial recognition systems and content-based recommendation systems, the ability to effectively compare and find similar images is important. The Siamese network combined with contrastive loss provides a powerful framework for learning image similarity in a data-driven manner. In this blog post, we will dive into the details of Siamese networks, explore the concept of contrastive loss, and explore how these two components work together to create an effective image similarity model. First, the Siamese network consists of two identical subnetworks that share the same weights and parameters. Each sub-network encodes the input image into a feature vector, which

Microsoft plans to phase out NTLM in Windows 11 in the second half of 2024 and fully shift to Kerberos authentication

Jun 09, 2024 pm 04:17 PM

Microsoft plans to phase out NTLM in Windows 11 in the second half of 2024 and fully shift to Kerberos authentication

Jun 09, 2024 pm 04:17 PM

In the second half of 2024, the official Microsoft Security Blog published a message in response to the call from the security community. The company plans to eliminate the NTLAN Manager (NTLM) authentication protocol in Windows 11, released in the second half of 2024, to improve security. According to previous explanations, Microsoft has already made similar moves before. On October 12 last year, Microsoft proposed a transition plan in an official press release aimed at phasing out NTLM authentication methods and pushing more enterprises and users to switch to Kerberos. To help enterprises that may be experiencing issues with hardwired applications and services after turning off NTLM authentication, Microsoft provides IAKerb and

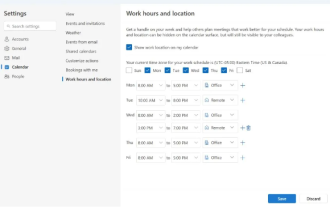

Microsoft launches new version of Outlook for Windows: comprehensive upgrade of calendar functions

Apr 27, 2024 pm 03:44 PM

Microsoft launches new version of Outlook for Windows: comprehensive upgrade of calendar functions

Apr 27, 2024 pm 03:44 PM

In news on April 27, Microsoft announced that it will soon release a test of a new version of Outlook for Windows client. This update mainly focuses on optimizing the calendar function, aiming to improve users’ work efficiency and further simplify daily workflow. The improvement of the new version of Outlook for Windows client lies in its more powerful calendar management function. Now, users can more easily share personal working time and location information, making meeting planning more efficient. In addition, Outlook has also added user-friendly settings, allowing users to set meetings to automatically end early or start later, providing users with more flexibility, whether they want to change meeting rooms, take a break or enjoy a cup of coffee. arrange. according to