Remove video flicker with one click: this study proposes a general framework

This paper proposes what the authors present as the first universal de-flickering method: it needs no additional guidance or prior knowledge of the flicker, yet it can eliminate a wide variety of flicker artifacts.

High-quality videos are usually temporally consistent, but many videos exhibit flicker for various reasons. For example, the brightness of old movies can be very unstable because some old camera hardware was of poor quality and could not keep the exposure time the same from frame to frame. In addition, high-speed cameras with very short exposure times can capture high-frequency (e.g., 60 Hz) changes in indoor lighting.
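The high-speed-camera case can be illustrated with a small simulation. This is a minimal sketch, assuming a sinusoidally modulated light source `I(t) = 1 + depth * sin(2π · light_hz · t)`; the function name `frame_brightness` and all parameter values are hypothetical, not taken from the paper. Each frame records the light averaged over its exposure window, so a very short exposure samples a different phase of the light cycle in every frame, while an exposure spanning one whole light period averages the modulation away.

```python
import math

def frame_brightness(frame_idx, fps, exposure_s, light_hz, depth=0.5, steps=200):
    # Hypothetical lighting model: I(t) = 1 + depth * sin(2*pi*light_hz*t).
    # The frame value is the mean illumination over [t0, t0 + exposure_s],
    # approximated with the midpoint rule.
    t0 = frame_idx / fps
    dt = exposure_s / steps
    total = 0.0
    for k in range(steps):
        t = t0 + (k + 0.5) * dt
        total += 1.0 + depth * math.sin(2 * math.pi * light_hz * t)
    return total / steps

# 240 fps capture under light modulated at 60 Hz.
short_exposure = [frame_brightness(n, fps=240, exposure_s=1 / 2000, light_hz=60) for n in range(8)]
full_period = [frame_brightness(n, fps=240, exposure_s=1 / 60, light_hz=60) for n in range(8)]
print([round(b, 2) for b in short_exposure])  # swings from frame to frame: flicker
print([round(b, 2) for b in full_period])     # constant: modulation averaged out
```

With `fps=240`, `exposure_s=1/2000` and `light_hz=60`, consecutive frames land a quarter of a light cycle apart, so their brightness swings between roughly 0.5 and 1.5; with `exposure_s=1/60`, every frame integrates one full cycle and comes out at 1.0.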

Flicker can also appear when image algorithms, such as image enhancement, image colorization, and style transfer, are applied frame by frame to temporally consistent videos.
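Why per-frame processing introduces flicker can be seen in a toy 1-D example. As an assumption for illustration, the hypothetical `normalize_frame` stands in for any image algorithm that adapts to global frame statistics (here it rescales each frame so that its mean is 0.5). The background is constant in the input, but a bright object entering the frame changes each frame's mean, so the processed background brightness changes from frame to frame.

```python
def make_video(num_frames=4, width=10):
    # Toy 1-D video: a static grey background (0.4) plus a bright object (1.0)
    # entering from the left edge, covering t pixels in frame t.
    return [[1.0] * t + [0.4] * (width - t) for t in range(num_frames)]

def normalize_frame(frame, target_mean=0.5):
    # Hypothetical per-frame "enhancement": rescale so the frame mean equals
    # target_mean, clipping at 1.0. Each frame is processed independently.
    mean = sum(frame) / len(frame)
    return [min(1.0, p * target_mean / mean) for p in frame]

video = make_video()
processed = [normalize_frame(f) for f in video]
background_in = [f[-1] for f in video]       # identical in every input frame
background_out = [f[-1] for f in processed]  # differs between output frames
print(background_in)
print([round(v, 3) for v in background_out])
```

The algorithm is perfectly reasonable for a single image; the flicker comes only from the lack of any coupling between neighbouring frames.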

Videos produced by video generation methods may also contain flicker artifacts.

Video de-flickering is an active topic in video processing and computational photography, since temporally consistent videos are generally more visually appealing.

This CVPR 2023 paper studies a universal flicker removal method that (1) generalizes well across flicker patterns and levels (e.g., old movies, slow-motion videos captured by high-speed cameras), and (2) requires only the flickering video itself, with no auxiliary information (e.g., the flicker type or an additional temporally consistent video). Because the method makes few assumptions, it applies to a wide range of scenarios.

Code link: https://github.com/ChenyangLEI/All-in-one-Deflicker

Project link: https://chenyanglei.github.io/deflicker

Paper link: https://arxiv.org/pdf/2303.08120.pdf

Method

General-purpose flicker removal is challenging because it is difficult to enforce temporal consistency across the whole video without any additional guidance.

Existing techniques usually design a specific strategy for each flicker type and rely on type-specific knowledge. For example, for slow-motion videos captured by high-speed cameras, previous work can analyze the lighting frequency. For videos processed by image processing algorithms, blind video temporal consistency methods can use the temporally consistent unprocessed video as a reference to achieve long-term consistency. However, the flicker type or the unprocessed video is not always available, and in such cases these flicker-specific algorithms cannot be applied.

An intuitive solution is to track correspondences with optical flow. However, optical flow estimated from flickering videos is not accurate enough, and because flow has to be chained from frame to frame, its error accumulates as the number of frames grows.
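The accumulation of optical flow error can be illustrated with a toy 1-D tracker. This sketch assumes, purely for illustration, that every pairwise flow estimate is off by independent Gaussian noise; real flow errors on flickering videos are correlated and content-dependent. The function `chained_tracking_error` is hypothetical. Chaining n such estimates to follow a point from the first frame gives an end-point error whose spread grows roughly like the square root of n.

```python
import random

def chained_tracking_error(num_frames, noise_std=0.5, true_step=2.0, trials=2000, seed=0):
    # Track one point from frame 0 to frame num_frames by summing pairwise flow
    # estimates. Assumption: each estimate = true displacement + independent
    # Gaussian noise. Returns the mean absolute end-point error over many trials.
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        true_pos = est_pos = 0.0
        for _ in range(num_frames):
            true_pos += true_step
            est_pos += true_step + rng.gauss(0.0, noise_std)
        total += abs(est_pos - true_pos)
    return total / trials

for n in (1, 4, 16, 64):
    print(n, round(chained_tracking_error(n), 2))
```

Under these assumptions, the error after 64 chained steps comes out at roughly eight times the single-step error, consistent with square-root growth; correlated errors, as with flicker-induced flow failures, would typically grow even faster.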

Building on two key observations and corresponding designs, the authors propose a general de-flickering method that can eliminate various flicker artifacts without additional guidance.

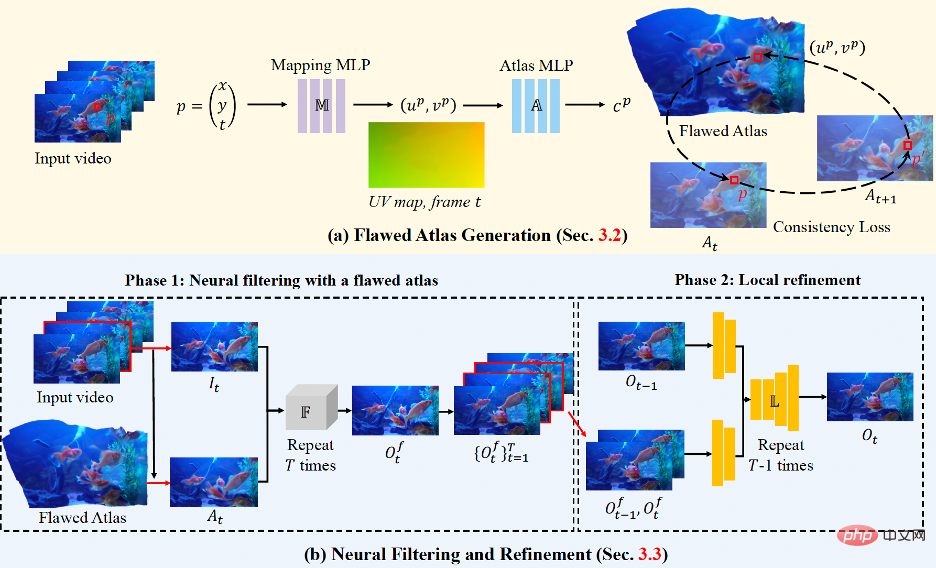

A good blind de-flickering model should be able to track corresponding points across all video frames. Most network architectures for video processing take only a small number of frames as input, which gives them a small receptive field and cannot guarantee long-term consistency. The researchers observed that neural atlases are well suited to flicker removal and therefore introduce them to this task. A neural atlas is a unified and compact representation of all the pixels in a video. As shown in Figure (a), each pixel p is fed into a mapping network M, which predicts 2D coordinates (u^p, v^p) indicating where the pixel lies in the atlas. Ideally, corresponding points in different frames map to the same atlas location even if their input colors differ, and this shared location is what ensures temporal consistency.
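The consistency argument can be made concrete with a toy version of the atlas idea. This is a minimal sketch, not the paper's implementation: the mapping network M is replaced by a hand-written function `mapping` for a camera that pans by a known number of pixels per frame, and the atlas is fitted in closed form (the mean of all pixels mapping to each atlas coordinate) instead of by training a network. All names are hypothetical. Because every output pixel is read from the single shared atlas, pixels that map to the same atlas coordinate receive identical values, even though the input frames carry different flicker gains.

```python
from collections import defaultdict

def mapping(x, t, shift):
    # Hypothetical stand-in for the mapping network M: the camera pans by
    # `shift` pixels per frame, so pixel (x, t) lands at atlas coordinate x + shift * t.
    return x + shift * t

def fit_atlas(frames, shift):
    # With the mapping fixed, the least-squares atlas value at u is the mean of
    # every input pixel that maps to u, so per-frame gains are merged into one value.
    sums, counts = defaultdict(float), defaultdict(int)
    for t, frame in enumerate(frames):
        for x, value in enumerate(frame):
            u = mapping(x, t, shift)
            sums[u] += value
            counts[u] += 1
    return {u: sums[u] / counts[u] for u in sums}

def reconstruct(atlas, num_frames, width, shift):
    # Every output frame is rendered by sampling the single shared atlas.
    return [[atlas[mapping(x, t, shift)] for x in range(width)]
            for t in range(num_frames)]

# Toy 1-D video: a static scene seen by a panning camera, with per-frame gain flicker.
scene = [float(i % 7) for i in range(20)]
gains = [1.0, 0.6, 1.3, 0.8]
width, shift = 8, 2
frames = [[scene[x + shift * t] * g for x in range(width)] for t, g in enumerate(gains)]
atlas = fit_atlas(frames, shift)
output = reconstruct(atlas, len(frames), width, shift)
```

In the actual method, both M and the atlas are learned networks, so the mapping is only approximate; the toy keeps it exact to isolate the consistency property.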

Second, although the frames reconstructed from the shared atlas are consistent, their structure is flawed: the neural atlas cannot easily model dynamic objects with large motion, and optical flow is not perfect either. The authors therefore propose a neural filtering strategy that picks the good parts out of the defective atlas. They train a neural network to be invariant to two types of distortion, one simulating artifacts in the atlas and one simulating flicker in videos. At test time, the network works well as a filter that preserves the consistency of the atlas while blocking its artifacts.
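The text does not spell out the two distortions, so the following is only a sketch of how training inputs for such a filter might be synthesized. It assumes, hypothetically, a random global gain and gamma change as the flicker-like distortion, small random per-row shifts as the atlas-artifact-like distortion, and the clean frame as the target; the network itself is omitted, and all function names are illustrative. A model trained to recover `target` from the two distorted inputs has to take structure from the first input and appearance from the second, which is the behavior the filter needs at test time.

```python
import random

def color_distortion(img, rng):
    # Hypothetical flicker simulation: random global gain and gamma, clipped to [0, 1].
    gain = rng.uniform(0.6, 1.4)
    gamma = rng.uniform(0.8, 1.25)
    return [[min(1.0, gain * (p ** gamma)) for p in row] for row in img]

def spatial_distortion(img, rng, max_shift=1):
    # Hypothetical atlas-artifact simulation: shift each row horizontally by a
    # small random offset, clamping at the image border. Colors are unchanged.
    w = len(img[0])
    out = []
    for row in img:
        s = rng.randint(-max_shift, max_shift)
        out.append([row[min(w - 1, max(0, x + s))] for x in range(w)])
    return out

def make_training_triplet(clean, rng):
    # Returns (flicker-like input, artifact-like atlas frame, target).
    return color_distortion(clean, rng), spatial_distortion(clean, rng), clean

rng = random.Random(0)
clean = [[(r * 5 + c) / 20 for c in range(5)] for r in range(4)]
flicker_input, atlas_like, target = make_training_triplet(clean, rng)
```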

Experiment

The researchers constructed a dataset of diverse real-world flicker videos. Extensive experiments show that the method achieves satisfactory de-flickering on multiple types of flicker videos, and on public benchmarks it even outperforms baseline methods that use additional guidance.

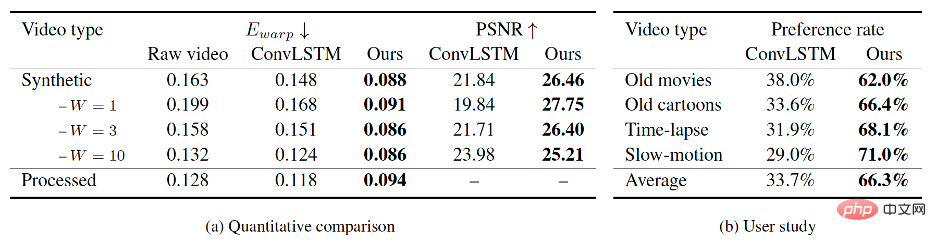

Panel (a) shows a quantitative comparison on processed flicker videos and synthetic flicker videos. The warping error of the researchers' method is much smaller than that of the baseline, and on synthetic data its results are also closer to the ground truth in terms of PSNR. For other real-world videos, panel (b) reports a double-blind user study, in which most users preferred the researchers' results.
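For reference, the two metrics mentioned above can be sketched as follows. PSNR is the standard 10 · log10(MAX² / MSE). Warping error has several variants in the literature; this sketch assumes one simple form, the mean squared difference between a frame and its predecessor warped by a known global integer flow, with out-of-frame pixels masked. The paper's exact protocol (flow estimator, occlusion handling, color space) is not described here, so the helper names and simplifications are assumptions.

```python
import math

def psnr(pred, ref, max_val=1.0):
    # Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE).
    n = len(ref) * len(ref[0])
    mse = sum((p - r) ** 2 for pr, rr in zip(pred, ref) for p, r in zip(pr, rr)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)

def warping_error(prev, curr, dx, dy):
    # Mean squared difference between `curr` and `prev` warped by a global
    # integer flow (dx, dy). Pixels whose source lies outside `prev` are
    # skipped, a crude stand-in for an occlusion mask.
    h, w = len(curr), len(curr[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy  # where this pixel was in the previous frame
            if 0 <= sx < w and 0 <= sy < h:
                total += (curr[y][x] - prev[sy][sx]) ** 2
                count += 1
    return total / count if count else 0.0

prev = [[(x + y) / 10 for x in range(6)] for y in range(4)]
curr = [[prev[y][max(x - 1, 0)] for x in range(6)] for y in range(4)]  # pan right by 1 px
flickered = [[0.7 * v for v in row] for row in curr]                    # same motion, dimmer frame
print(warping_error(prev, curr, 1, 0), warping_error(prev, flickered, 1, 0))
```

A temporally consistent pair scores zero warping error under the correct flow, while the same motion with a brightness change does not, which is why lower warping error is read as less flicker.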

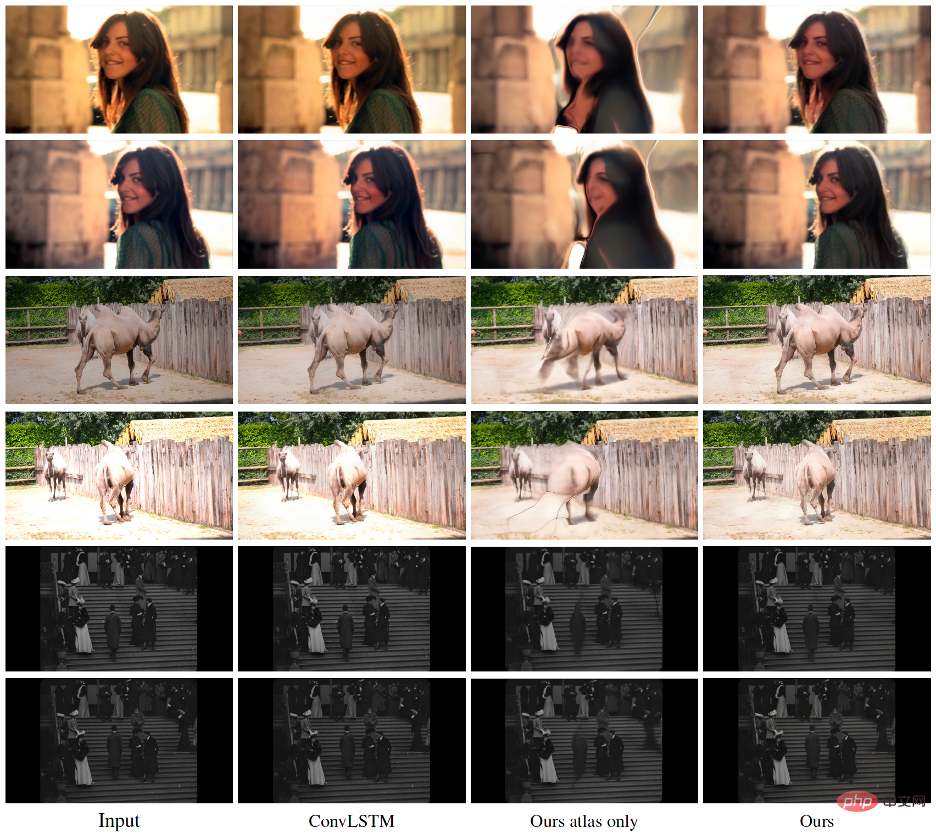

As shown in the figure above, the researchers' algorithm removes flicker from the input videos very well. Note that the third column shows the frames reconstructed from the neural atlas: obvious defects are visible there, yet the algorithm makes good use of the atlas's consistency without introducing these defects.

This framework can remove different categories of flicker contained in old movies and AI-generated videos.

##

##

The above is the detailed content of Remove video flicker with one click, this study proposes a general framework. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

Where are video files stored in browser cache?

Feb 19, 2024 pm 05:09 PM

Where are video files stored in browser cache?

Feb 19, 2024 pm 05:09 PM

Which folder does the browser cache the video in? When we use the Internet browser every day, we often watch various online videos, such as watching music videos on YouTube or watching movies on Netflix. These videos will be cached by the browser during the loading process so that they can be loaded quickly when played again in the future. So the question is, in which folder are these cached videos actually stored? Different browsers store cached video folders in different locations. Below we will introduce several common browsers and their

Is it infringing to post other people's videos on Douyin? How does it edit videos without infringement?

Mar 21, 2024 pm 05:57 PM

Is it infringing to post other people's videos on Douyin? How does it edit videos without infringement?

Mar 21, 2024 pm 05:57 PM

With the rise of short video platforms, Douyin has become an indispensable part of everyone's daily life. On TikTok, we can see interesting videos from all over the world. Some people like to post other people’s videos, which raises a question: Is Douyin infringing upon posting other people’s videos? This article will discuss this issue and tell you how to edit videos without infringement and how to avoid infringement issues. 1. Is it infringing upon Douyin’s posting of other people’s videos? According to the provisions of my country's Copyright Law, unauthorized use of the copyright owner's works without the permission of the copyright owner is an infringement. Therefore, posting other people’s videos on Douyin without the permission of the original author or copyright owner is an infringement. 2. How to edit a video without infringement? 1. Use of public domain or licensed content: Public

How to remove video watermark in Wink

Feb 23, 2024 pm 07:22 PM

How to remove video watermark in Wink

Feb 23, 2024 pm 07:22 PM

How to remove watermarks from videos in Wink? There is a tool to remove watermarks from videos in winkAPP, but most friends don’t know how to remove watermarks from videos in wink. Next is the picture of how to remove watermarks from videos in Wink brought by the editor. Text tutorial, interested users come and take a look! How to remove video watermarks in Wink 1. First open wink APP and select the [Remove Watermark] function in the homepage area; 2. Then select the video you want to remove the watermark in the album; 3. Then select the video and click the upper right corner after editing the video. [√]; 4. Finally, click [One-click Print] as shown in the figure below and then click [Process].

How to make money from posting videos on Douyin? How can a newbie make money on Douyin?

Mar 21, 2024 pm 08:17 PM

How to make money from posting videos on Douyin? How can a newbie make money on Douyin?

Mar 21, 2024 pm 08:17 PM

Douyin, the national short video platform, not only allows us to enjoy a variety of interesting and novel short videos in our free time, but also gives us a stage to show ourselves and realize our values. So, how to make money by posting videos on Douyin? This article will answer this question in detail and help you make more money on TikTok. 1. How to make money from posting videos on Douyin? After posting a video and gaining a certain amount of views on Douyin, you will have the opportunity to participate in the advertising sharing plan. This income method is one of the most familiar to Douyin users and is also the main source of income for many creators. Douyin decides whether to provide advertising sharing opportunities based on various factors such as account weight, video content, and audience feedback. The TikTok platform allows viewers to support their favorite creators by sending gifts,

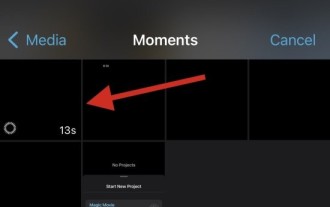

2 Ways to Remove Slow Motion from Videos on iPhone

Mar 04, 2024 am 10:46 AM

2 Ways to Remove Slow Motion from Videos on iPhone

Mar 04, 2024 am 10:46 AM

On iOS devices, the Camera app allows you to shoot slow-motion video, or even 240 frames per second if you have the latest iPhone. This capability allows you to capture high-speed action in rich detail. But sometimes, you may want to play slow-motion videos at normal speed so you can better appreciate the details and action in the video. In this article, we will explain all the methods to remove slow motion from existing videos on iPhone. How to Remove Slow Motion from Videos on iPhone [2 Methods] You can use Photos App or iMovie App to remove slow motion from videos on your device. Method 1: Open on iPhone using Photos app

How to publish Xiaohongshu video works? What should I pay attention to when posting videos?

Mar 23, 2024 pm 08:50 PM

How to publish Xiaohongshu video works? What should I pay attention to when posting videos?

Mar 23, 2024 pm 08:50 PM

With the rise of short video platforms, Xiaohongshu has become a platform for many people to share their lives, express themselves, and gain traffic. On this platform, publishing video works is a very popular way of interaction. So, how to publish Xiaohongshu video works? 1. How to publish Xiaohongshu video works? First, make sure you have a video content ready to share. You can use your mobile phone or other camera equipment to shoot, but you need to pay attention to the image quality and sound clarity. 2. Edit the video: In order to make the work more attractive, you can edit the video. You can use professional video editing software, such as Douyin, Kuaishou, etc., to add filters, music, subtitles and other elements. 3. Choose a cover: The cover is the key to attracting users to click. Choose a clear and interesting picture as the cover to attract users to click on it.

How to post videos on Weibo without compressing the image quality_How to post videos on Weibo without compressing the image quality

Mar 30, 2024 pm 12:26 PM

How to post videos on Weibo without compressing the image quality_How to post videos on Weibo without compressing the image quality

Mar 30, 2024 pm 12:26 PM

1. First open Weibo on your mobile phone and click [Me] in the lower right corner (as shown in the picture). 2. Then click [Gear] in the upper right corner to open settings (as shown in the picture). 3. Then find and open [General Settings] (as shown in the picture). 4. Then enter the [Video Follow] option (as shown in the picture). 5. Then open the [Video Upload Resolution] setting (as shown in the picture). 6. Finally, select [Original Image Quality] to avoid compression (as shown in the picture).

How to convert videos downloaded by uc browser into local videos

Feb 29, 2024 pm 10:19 PM

How to convert videos downloaded by uc browser into local videos

Feb 29, 2024 pm 10:19 PM

How to turn videos downloaded by UC browser into local videos? Many mobile phone users like to use UC Browser. They can not only browse the web, but also watch various videos and TV programs online, and download their favorite videos to their mobile phones. Actually, we can convert downloaded videos to local videos, but many people don't know how to do it. Therefore, the editor specially brings you a method to convert the videos cached by UC browser into local videos. I hope it can help you. Method to convert uc browser cached videos to local videos 1. Open uc browser and click the "Menu" option. 2. Click "Download/Video". 3. Click "Cached Video". 4. Long press any video, when the options pop up, click "Open Directory". 5. Check the ones you want to download