Google's super AI supercomputer crushes NVIDIA A100! TPU v4 performance increased by 10 times, details disclosed for the first time

Google deployed TPU v4, the most powerful AI chip of its time, in its own data centers as early as 2020.

But it was not until April 4, 2023 that Google disclosed the technical details of this AI supercomputer for the first time.

Paper address: https://arxiv.org/abs/2304.01433

Per chip, TPU v4 delivers 2.1 times the performance of TPU v3, and with 4096 chips integrated, the supercomputer as a whole is 10 times faster.

Google also claims that its own chip is faster and more energy-efficient than NVIDIA's A100.

In the paper, Google states that, for systems of comparable size, TPU v4 delivers up to 1.7 times the performance of the NVIDIA A100 while improving energy efficiency by up to 1.9 times.

In addition, Google's supercomputer is about 4.3 to 4.5 times faster than the Graphcore IPU Bow.

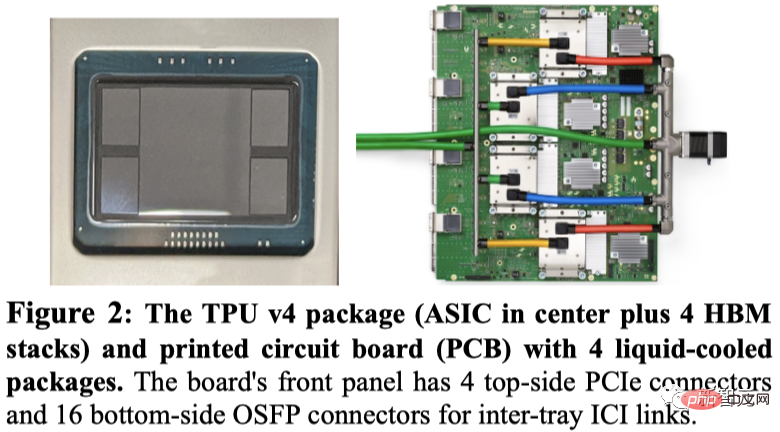

Google showed the TPU v4 package, along with four packages mounted on a circuit board.

Like TPU v3, each TPU v4 contains two TensorCores (TCs). Each TC contains four 128x128 matrix multiplication units (MXUs), a vector processing unit (VPU) with 128 lanes (16 ALUs per lane), and 16 MiB of vector memory (VMEM).

The two TCs share 128 MiB of common memory (CMEM).
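The MXU counts above imply a rough peak throughput figure, sketched below. The 1050 MHz clock rate and the convention of counting one multiply-accumulate as two floating-point operations are assumptions that this article does not state, and the names are purely illustrative.

```python
# Back-of-the-envelope peak throughput for one TPU v4 chip.
# Architecture counts come from the text; the clock rate is an ASSUMPTION.

MXU_DIM = 128        # each MXU is a 128x128 array of multiply-accumulate cells
MXUS_PER_TC = 4      # four MXUs per TensorCore
TCS_PER_CHIP = 2     # two TensorCores per chip
FLOPS_PER_MAC = 2    # assumption: one multiply plus one add per cell per cycle
CLOCK_HZ = 1.05e9    # assumption: 1050 MHz clock, not given in this article


def peak_flops_per_chip(clock_hz: float = CLOCK_HZ) -> float:
    """Return the theoretical peak FLOPS of one chip at the given clock."""
    macs_per_cycle = MXU_DIM * MXU_DIM * MXUS_PER_TC * TCS_PER_CHIP
    return macs_per_cycle * FLOPS_PER_MAC * clock_hz


if __name__ == "__main__":
    print(f"{peak_flops_per_chip() / 1e12:.1f} TFLOPS per chip (peak, estimated)")
```

This only bounds what the matrix units could do in the ideal case; as the benchmark discussion in this article shows, delivered performance depends on much more than peak arithmetic.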

It is worth noting that the A100 and Google's fourth-generation TPU launched at around the same time, so how do they actually compare?

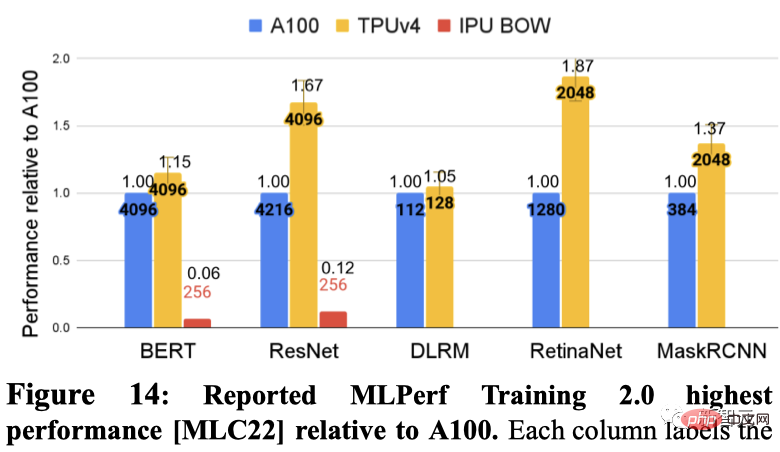

Google reports the fastest result for each DSA (domain-specific architecture) on five MLPerf benchmarks: BERT, ResNet, DLRM, RetinaNet, and MaskRCNN.

Of these, the Graphcore IPU has submitted results for BERT and ResNet.

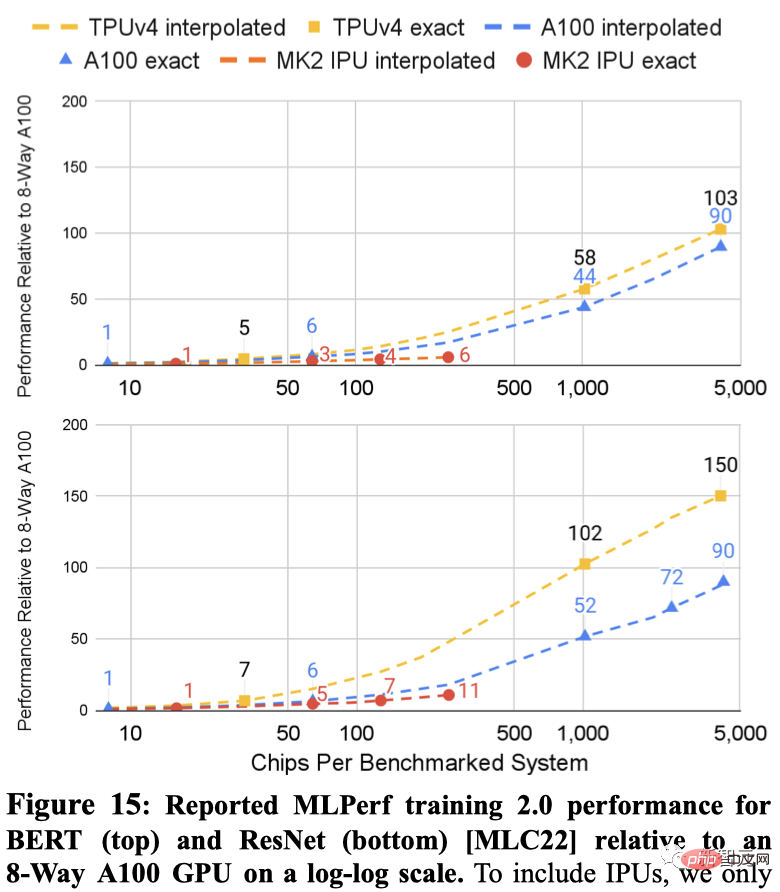

The results of the two systems on ResNet and BERT are shown below. The dotted lines between the points are interpolations based on the number of chips.

MLPerf results for both TPU v4 and A100 scale to larger systems than the IPU (4096 chips vs. 256 chips).

For similarly sized systems, TPU v4 is 1.15 times faster than the A100 on BERT and approximately 4.3 times faster than the IPU. On ResNet, TPU v4 is 1.67 times and about 4.5 times faster, respectively.

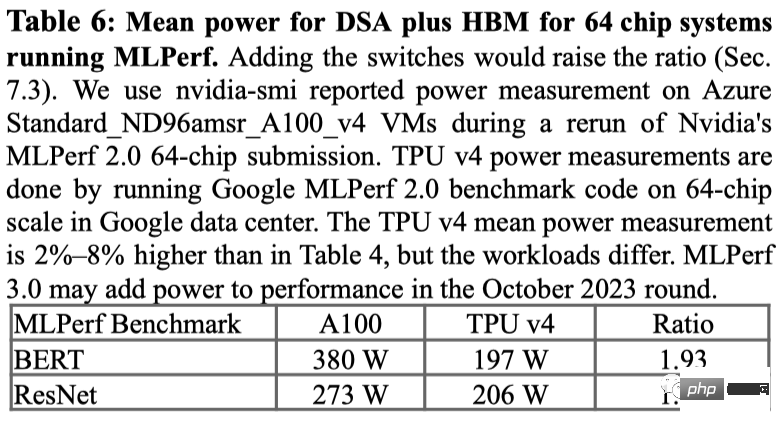

For power usage on the MLPerf benchmark, the A100 used 1.3x to 1.9x more power on average.

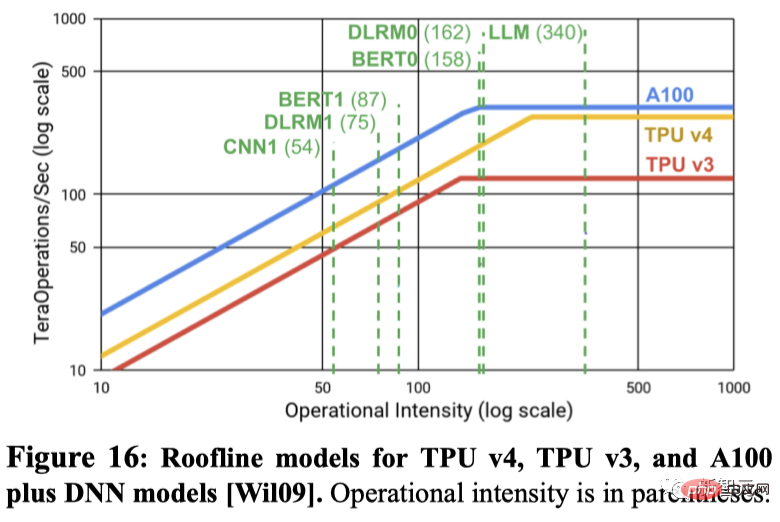

Does peak floating point operations per second predict actual performance? Many people in the machine learning field believe that peak floating point operations per second is a good proxy for performance, but in fact it is not.

For example, TPU v4 is 4.3x to 4.5x faster on two MLPerf benchmarks than IPU Bow on the same size system, despite only having a 1.10x advantage in peak floating point operations per second.

Another example is that the A100's peak floating point operations per second is 1.13 times that of TPU v4, but for the same number of chips, TPU v4 is 1.15 to 1.67 times faster.
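To make the gap concrete, the sketch below divides each measured speedup quoted above by the corresponding peak-FLOPS ratio. The function name and the pairing into a dictionary are illustrative choices; the numbers are the ones given in this article.

```python
# Compare measured TPU v4 speedups (from the text) with what the
# peak-FLOPS ratio alone would predict.


def speedup_per_peak(measured_speedup: float, peak_ratio: float) -> float:
    """Measured speedup divided by the peak-FLOPS ratio (TPU v4 / rival)."""
    return measured_speedup / peak_ratio


# (measured TPU v4 speedup, TPU v4 peak FLOPS / rival peak FLOPS)
cases = {
    "IPU Bow, BERT": (4.3, 1.10),
    "IPU Bow, ResNet": (4.5, 1.10),
    "A100, BERT": (1.15, 1 / 1.13),    # the A100 has 1.13x the peak of TPU v4
    "A100, ResNet": (1.67, 1 / 1.13),
}

if __name__ == "__main__":
    for name, (measured, peak_ratio) in cases.items():
        factor = speedup_per_peak(measured, peak_ratio)
        print(f"{name}: {factor:.2f}x more than the peak ratio predicts")
```

If peak FLOPS were a good proxy, every factor would be close to 1; here all of them are well above it.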

The paper uses the Roofline model to show the relationship between peak FLOPS and memory bandwidth.
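For readers unfamiliar with it, the Roofline model can be sketched in a few lines: attainable throughput is the lesser of the compute peak and memory bandwidth multiplied by operational intensity (FLOPs per byte moved). The hardware numbers below are placeholders chosen for round arithmetic, not figures for any real chip.

```python
# Minimal Roofline model sketch. All hardware numbers are PLACEHOLDERS.


def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Attainable FLOPS for a kernel with the given operational intensity.

    peak_flops:     compute ceiling, in FLOPs per second
    mem_bandwidth:  memory bandwidth, in bytes per second
    intensity:      operational intensity, in FLOPs per byte
    """
    return min(peak_flops, mem_bandwidth * intensity)


def ridge_point(peak_flops: float, mem_bandwidth: float) -> float:
    """Intensity at which a kernel stops being memory-bound."""
    return peak_flops / mem_bandwidth


if __name__ == "__main__":
    peak = 100e12       # placeholder: 100 TFLOPS
    bandwidth = 1e12    # placeholder: 1 TB/s
    for intensity in (10, 100, 500):
        flops = attainable_flops(peak, bandwidth, intensity)
        print(f"intensity {intensity:>3} FLOPs/byte: {flops / 1e12:.0f} TFLOPS attainable")
```

Kernels to the left of the ridge point are limited by memory bandwidth, which is one reason two chips with similar peak FLOPS can perform very differently.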

So, the question is, why doesn’t Google compare with Nvidia’s latest H100?

Google said that because the H100 was built using newer technology after the launch of Google's chips, it did not compare its fourth-generation product to Nvidia's current flagship H100 chip.

However, Google hinted that it is developing a new TPU to compete with the NVIDIA H100, but gave no details. Google researcher Norm Jouppi told Reuters that Google has "a healthy pipeline of future chips."

TPU vs GPU

While ChatGPT and Bard fight it out, two behemoths are working hard behind the scenes to keep them running: NVIDIA's CUDA-enabled GPUs (graphics processing units) and Google's custom TPUs (tensor processing units).

In other words, this is no longer about ChatGPT vs. Bard, but TPU vs. GPU, and how efficiently they can do matrix multiplication.

Thanks to their hardware architecture, NVIDIA's GPUs are well suited to matrix multiplication: they achieve parallel processing by spreading the work efficiently across many CUDA cores.

As a result, training models on GPUs has been the consensus in deep learning since 2012, and that has not changed to this day.

With the launch of NVIDIA DGX, NVIDIA can offer one-stop hardware and software solutions for almost all AI tasks, something competitors cannot match because they lack the intellectual property.

In contrast, Google launched its first-generation tensor processing unit (TPU) in 2016. It is built around a custom ASIC (application-specific integrated circuit) and is also optimized for Google's own TensorFlow framework. This gives the TPU an advantage in AI computing tasks beyond matrix multiplication, and it can even accelerate fine-tuning and inference.

In addition, researchers at Google DeepMind have found a way to discover better matrix multiplication algorithms: AlphaTensor.

However, even though Google has achieved good results through in-house technology and emerging AI compute optimizations, the long-standing, deep cooperation between Microsoft and NVIDIA draws on each company's accumulated expertise and products, and has expanded the competitive advantages of both.

Fourth generation TPU

Back at the Google I/O conference in 2021, Pichai announced TPU v4, Google's latest-generation AI chip, for the first time.

"This is the fastest system we have deployed on Google and is a historic milestone for us."

This improvement has become a key point of competition among companies building AI supercomputers, because large language models such as Google's Bard and OpenAI's ChatGPT have grown explosively in parameter count.

This means they are far larger than a single chip can store, and their demand for computing power is a huge "black hole".

So these large models have to be distributed across thousands of chips, and then those chips have to work together for weeks, or even longer, to train the models.

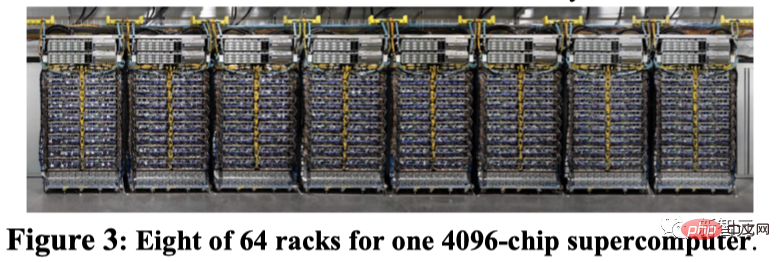

PaLM, the largest language model Google has publicly disclosed so far, has 540 billion parameters and was trained over 50 days by splitting it across two of the 4,000-chip supercomputers.
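A quick calculation shows why a model of this size cannot live on one chip. The sketch below assumes 2 bytes per parameter (a 16-bit format) and 32 GiB of memory per chip; neither figure is stated in this article, and the function name is illustrative.

```python
import math

# Rough lower bound on the number of chips needed just to hold model weights.
# The bytes-per-parameter and per-chip memory values are ASSUMPTIONS.


def min_chips_for_weights(num_params: int, bytes_per_param: int, chip_memory_bytes: int) -> int:
    """Smallest chip count whose combined memory fits the raw weights."""
    return math.ceil(num_params * bytes_per_param / chip_memory_bytes)


PALM_PARAMS = 540_000_000_000    # 540 billion parameters, from the text
BYTES_PER_PARAM = 2              # assumption: 16-bit weights
CHIP_MEMORY_BYTES = 32 * 2**30   # assumption: 32 GiB of memory per chip

if __name__ == "__main__":
    weights_tb = PALM_PARAMS * BYTES_PER_PARAM / 1e12
    chips = min_chips_for_weights(PALM_PARAMS, BYTES_PER_PARAM, CHIP_MEMORY_BYTES)
    print(f"weights alone: {weights_tb:.2f} TB, at least {chips} chips")
```

This counts the weights only. Training also has to hold gradients, optimizer state, and activations, and needs far more compute than this minimum provides, which is why real training runs use thousands of chips.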

Google said its supercomputers can easily reconfigure the connections between chips to avoid problems and perform performance tuning.

Google researcher Norm Jouppi and Google distinguished engineer David Patterson wrote in a blog post about the system:

"Circuit switching makes it easy to route around failed components. This flexibility even allows us to change the topology of the supercomputer interconnect to accelerate the performance of machine learning models."

Although Google is only now releasing details of its supercomputer, it has been online since 2020 in a data center in Mayes County, Oklahoma.

Google said that Midjourney used the system to train its model, whose latest version, V5, has impressed users with its image generation.

Recently, Pichai said in an interview with the New York Times that Bard will be moved from LaMDA to PaLM.

Backed by the TPU v4 supercomputer, Bard will only get stronger.
