Since Meta released and open-sourced the LLaMA series of models, researchers from Stanford University, UC Berkeley, and other institutions have built derivative works on top of LLaMA, successively launching Alpaca, Vicuna, and other "alpaca family" large models.

The alpaca family has become the new leader in the open source community. With so many derivative works, the English names for members of the biological alpaca genus are almost used up, but large models can also be named after other animals.

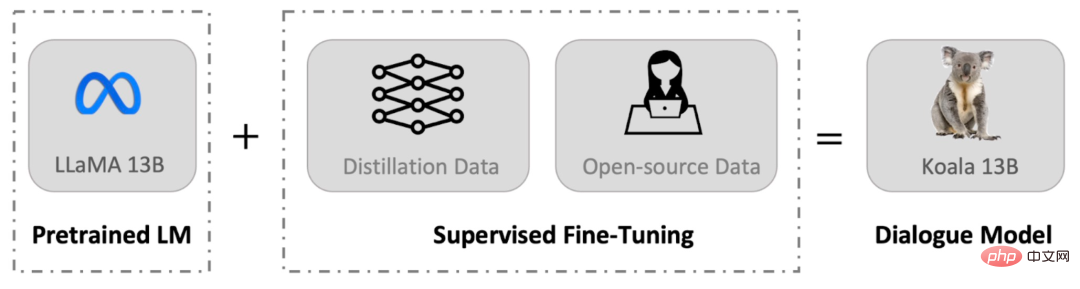

Recently, UC Berkeley's Berkeley Artificial Intelligence Research lab (BAIR) released Koala, a dialogue model that can run on consumer-grade GPUs. Koala fine-tunes the LLaMA model using conversation data collected from the web.

Project address: https://bair.berkeley.edu/blog/2023/04/03/koala/

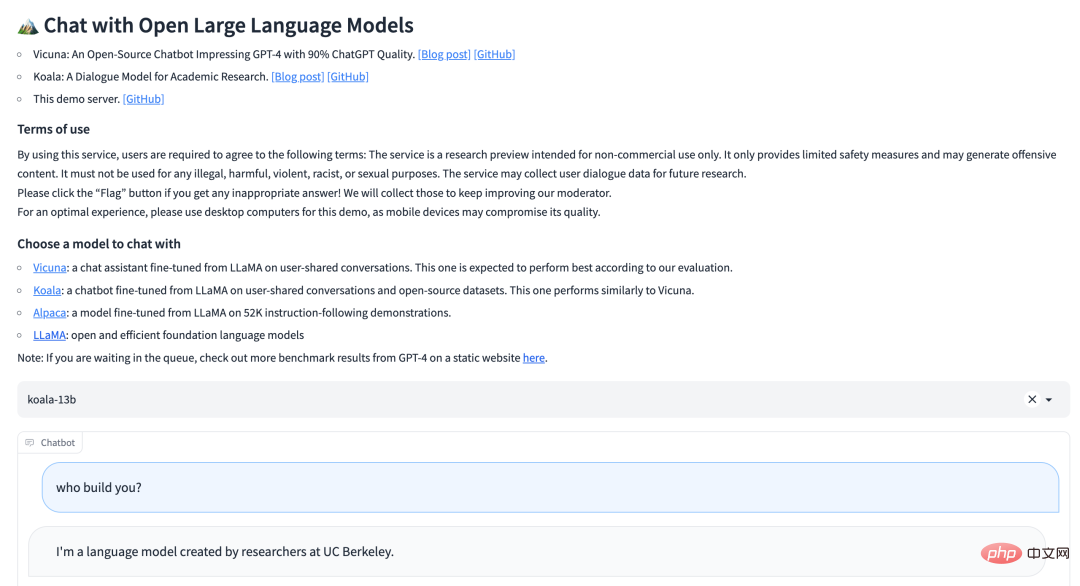

Koala has also launched an online test demo.

Like Vicuna, Koala uses conversation data collected from the web to fine-tune the LLaMA model, with a focus on publicly available dialogues with closed-source large models such as ChatGPT.

The research team stated that Koala is implemented with JAX/Flax in EasyLM and was trained on a single Nvidia DGX server equipped with 8 A100 GPUs. Completing 2 epochs of training takes 6 hours. On public cloud computing platforms, such a training run typically costs less than $100.
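The training figures above imply a rough price ceiling per GPU-hour. The following is a minimal sketch of that arithmetic, using only the numbers quoted in this article (8 GPUs, 6 hours, under $100). The function names are illustrative, and the result is a break-even bound rather than a price quoted by the research team or by any cloud provider.

```python
# Back-of-the-envelope check of the training figures quoted in the article.
NUM_GPUS = 8            # A100 GPUs in the DGX server (from the article)
TRAINING_HOURS = 6      # wall-clock time for 2 epochs (from the article)
COST_CEILING_USD = 100  # "less than $100" (from the article)


def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total GPU-hours consumed by a run."""
    return num_gpus * hours


def break_even_rate(budget_usd: float, total_gpu_hours: float) -> float:
    """Highest per-GPU hourly price that keeps the run within budget."""
    return budget_usd / total_gpu_hours


total = gpu_hours(NUM_GPUS, TRAINING_HOURS)
rate = break_even_rate(COST_CEILING_USD, total)
print(f"{total:.0f} GPU-hours; break-even price about ${rate:.2f} per GPU-hour")
```

In other words, the run uses 48 GPU-hours, so the "less than $100" claim holds whenever an A100 can be rented for roughly $2 per hour or less. Actual prices vary by provider and instance type and are not stated in the source.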

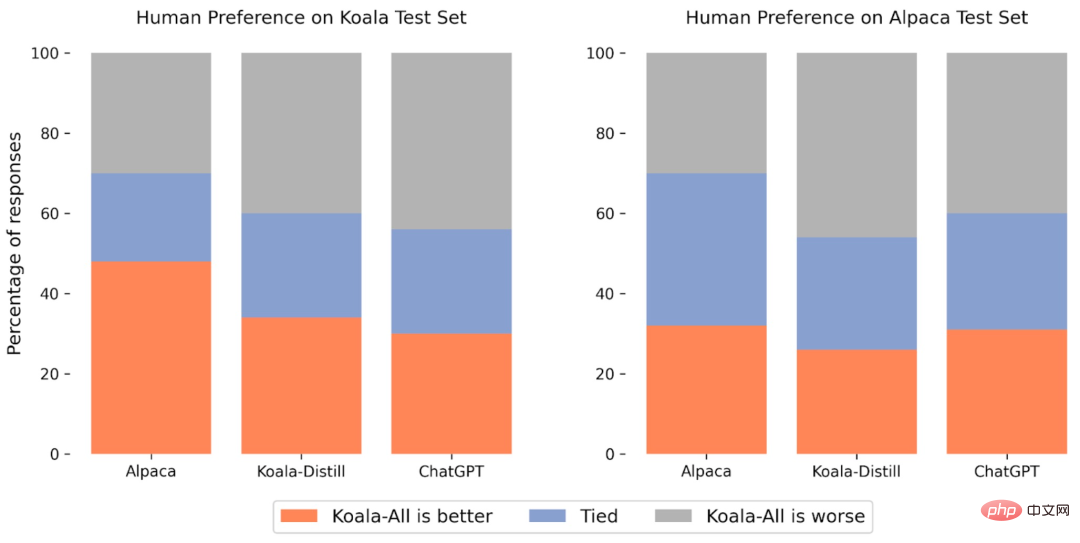

The research team experimentally compared Koala with ChatGPT and Stanford University's Alpaca. The results showed that Koala-13B, with 13 billion parameters, responds effectively to a variety of user queries. Its responses are generally better than Alpaca's and are comparable to ChatGPT's in more than half of the cases.

Koala's main significance is that it shows that, when trained on a higher-quality dataset, a model small enough to run locally can achieve performance close to that of a much larger model. This suggests that the open source community should work harder to curate high-quality datasets, as this may lead to safer, more factual, and more capable models than simply increasing the size of existing systems. From this perspective, Koala is a small but refined alternative to ChatGPT.

However, Koala is only a research prototype. It still has significant flaws in content, safety, and reliability, and should not be used for any purpose other than research.

Datasets and Training

The main hurdle in building a dialogue model is curating the training data. Large dialogue models such as ChatGPT, Bard, Bing Chat, and Claude all use proprietary datasets with extensive human annotation. To build Koala's training dataset, the research team collected and curated conversation data from the web and from public datasets, including dialogues with large language models such as ChatGPT that users have shared publicly.

Rather than crawling as much web data as possible to maximize the size of the dataset, Koala focuses on collecting a small, high-quality dataset, including the question-and-answer portions of public datasets, human feedback (both positive and negative), and dialogues with existing language models. Specifically, Koala's training dataset includes the following parts:

ChatGPT distillation data:

Open source data:

The study conducted a human evaluation comparing the outputs of Koala-All with those of Koala-Distill, Alpaca, and ChatGPT. The results are shown in the figure below. Two different test sets were used: Stanford's Alpaca Test Set, which includes 180 test queries, and the Koala Test Set.

Overall, the Koala model is large enough to demonstrate many capabilities of LLMs, while being small enough to facilitate fine-tuning or use in situations where computing resources are limited. The research team hopes that the Koala model will become a useful platform for future academic research on large language models. Potential research directions may include:
