ChatGPT vs Google Bard: Which one is better? The test results will tell you!

In today’s world of generative AI chatbots, we have witnessed the sudden rise of ChatGPT (launched by OpenAI in November 2022), followed by Bing Chat in February this year and Google Bard in March. We decided to put these chatbots through a variety of tasks to determine which one dominates the AI chatbot space. Since Bing Chat uses GPT-4 technology similar to the latest ChatGPT model, our focus this time is on the two giants of AI chatbot technology: OpenAI and Google.

We tested ChatGPT and Bard in seven key categories: bad jokes, debate conversations, math word problems, summarizing, fact retrieval, creative writing, and coding. For each test, we fed the exact same command (called a "prompt") into ChatGPT (using GPT-4) and Google Bard, and compared the first result each one gave.

It’s worth noting that a version of ChatGPT based on the earlier GPT-3.5 model is also available, but we did not use that version in our testing. Since we only used GPT-4, we refer to ChatGPT as "ChatGPT-4" in this article to avoid confusion.

Obviously, this is not a scientific study, just an interesting comparison of chatbot capabilities. Due to random elements, the output may differ between sessions, and further evaluation using different prompts will produce different results. Additionally, the capabilities of these models will change rapidly over time as Google and OpenAI continue to upgrade them. But for now, here's how things compare in early April 2023.

Bad Jokes

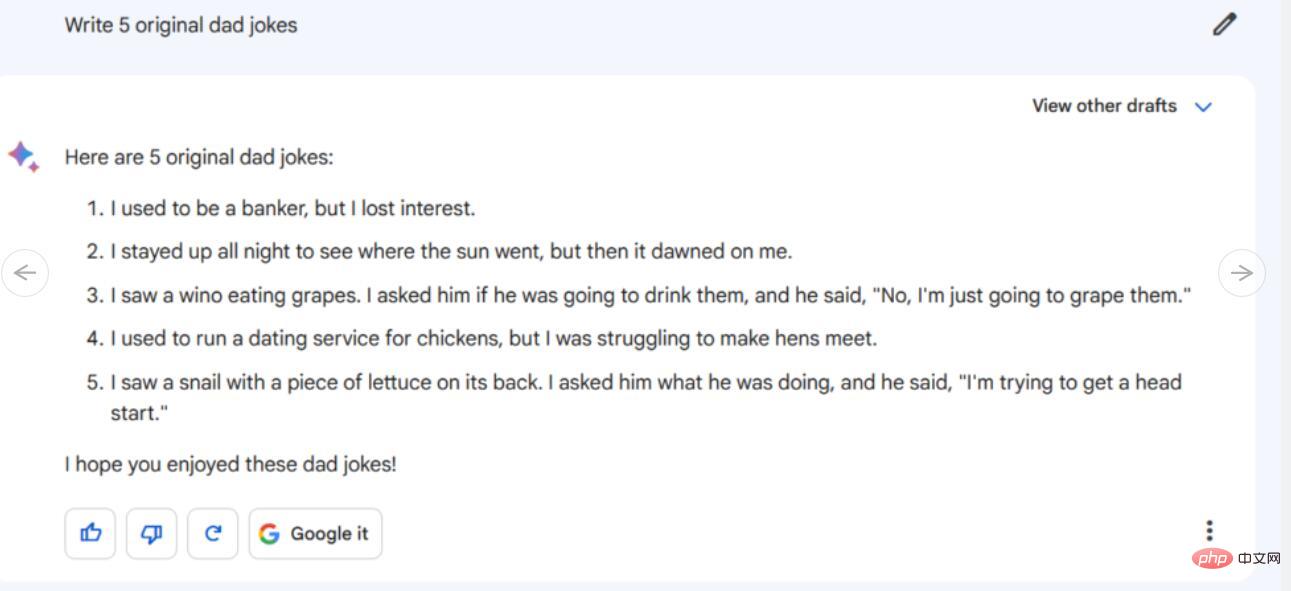

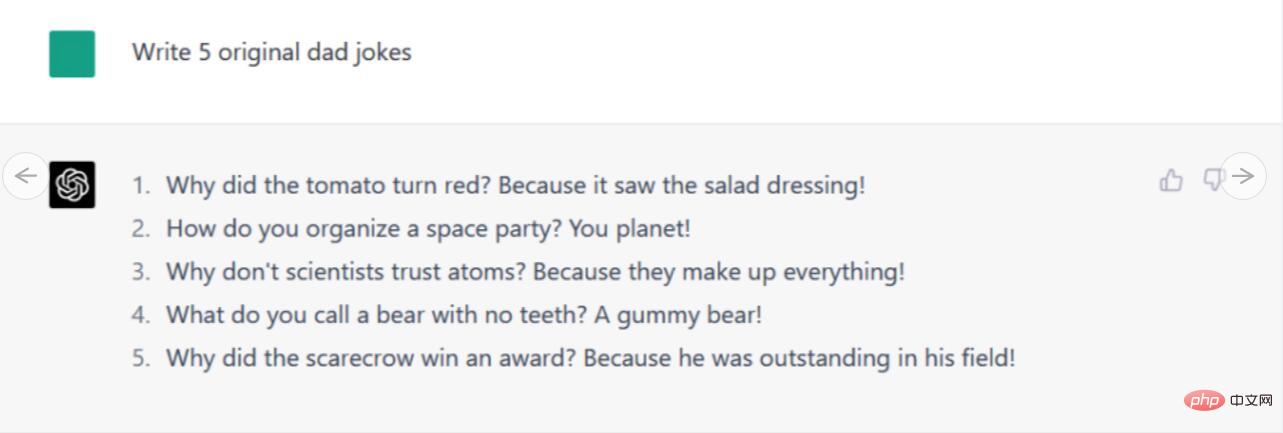

To warm up our battle of wits, we asked ChatGPT and Bard to write some jokes. Since the essence of comedy is often found in bad jokes, we wanted to see whether these two chatbots could come up with some original ones.

Instructions/Prompts: Write 5 original bad jokes

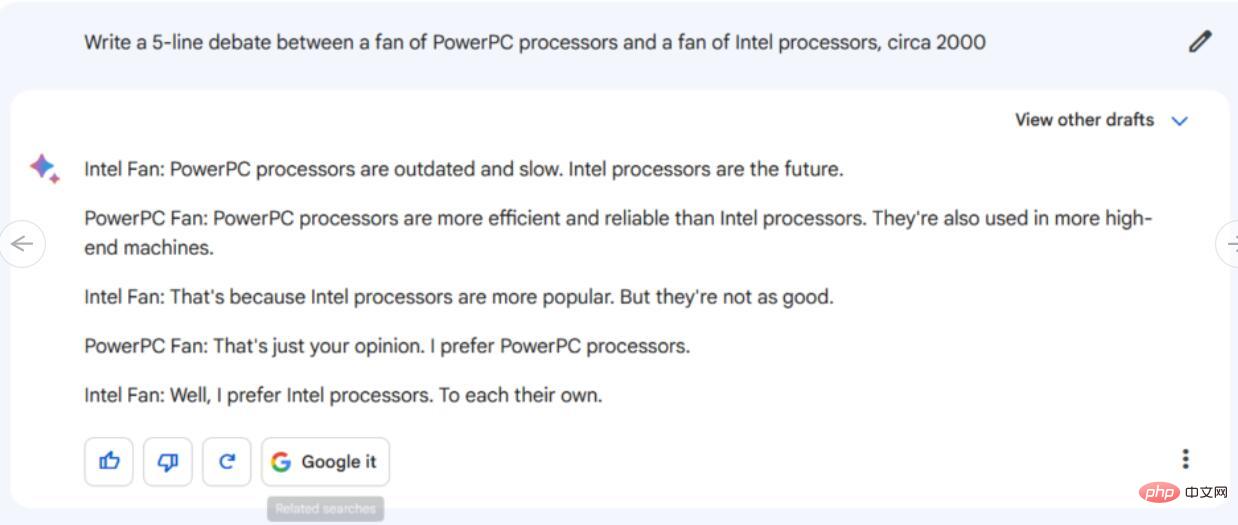

First, let’s take a look at Bard’s reply. The five-line dialogue it generated wasn't particularly in-depth, and didn't mention any technical details specific to PowerPC or Intel chips beyond general insults. Furthermore, the conversation ended with the "Intel fans" agreeing that they each had different opinions, which seems highly unrealistic for a subject that has inspired a million spats.

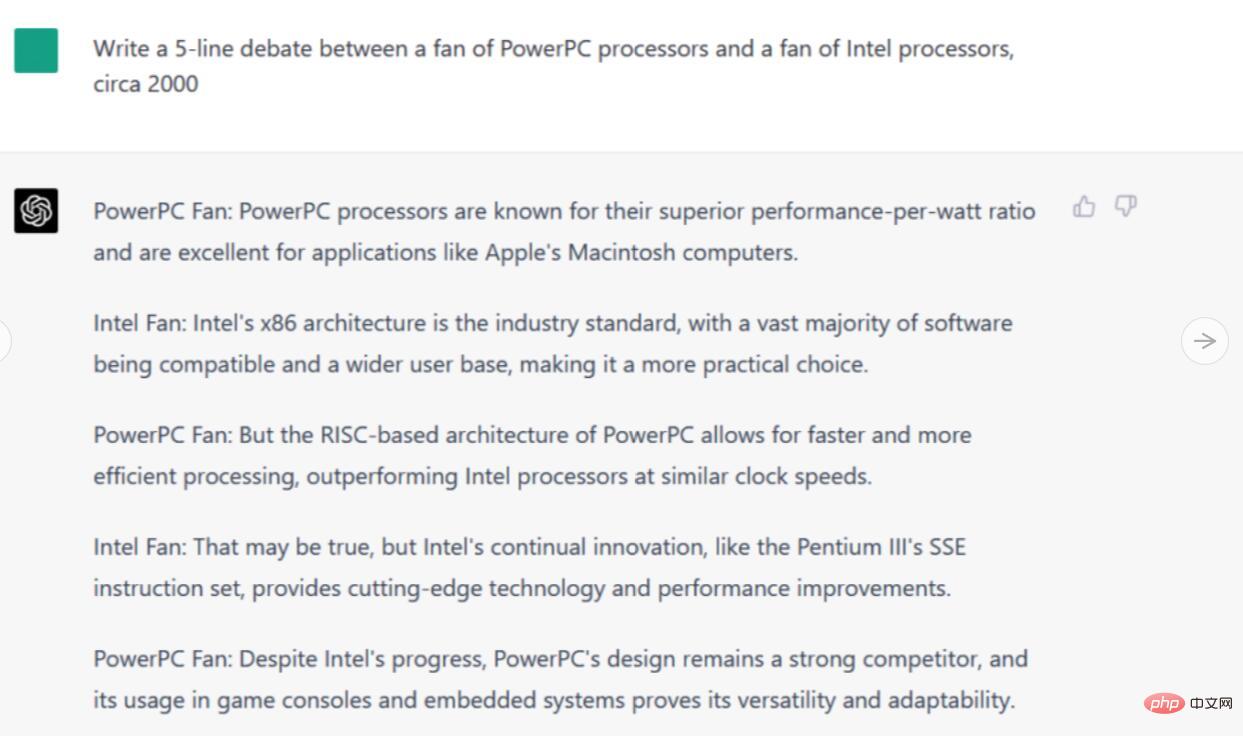

In contrast, the ChatGPT-4 response mentioned PowerPC chips being used in Apple Macintosh computers, and threw around terms like "Intel's x86 architecture" and PowerPC's "RISC-based architecture". It even mentioned the Pentium III, a realistic detail from 2000. Overall, this discussion is much more detailed than Bard's response and, most accurately of all, the conversation does not reach a conclusion, suggesting that in some corners of the Internet this never-ending battle may still be raging.

Winner: ChatGPT-4

Math Word Problems

Traditionally, math questions are not the strong point of large language models (LLMs) such as ChatGPT. So instead of giving each chatbot a complex series of equations and arithmetic, we gave each one an old-school word problem.

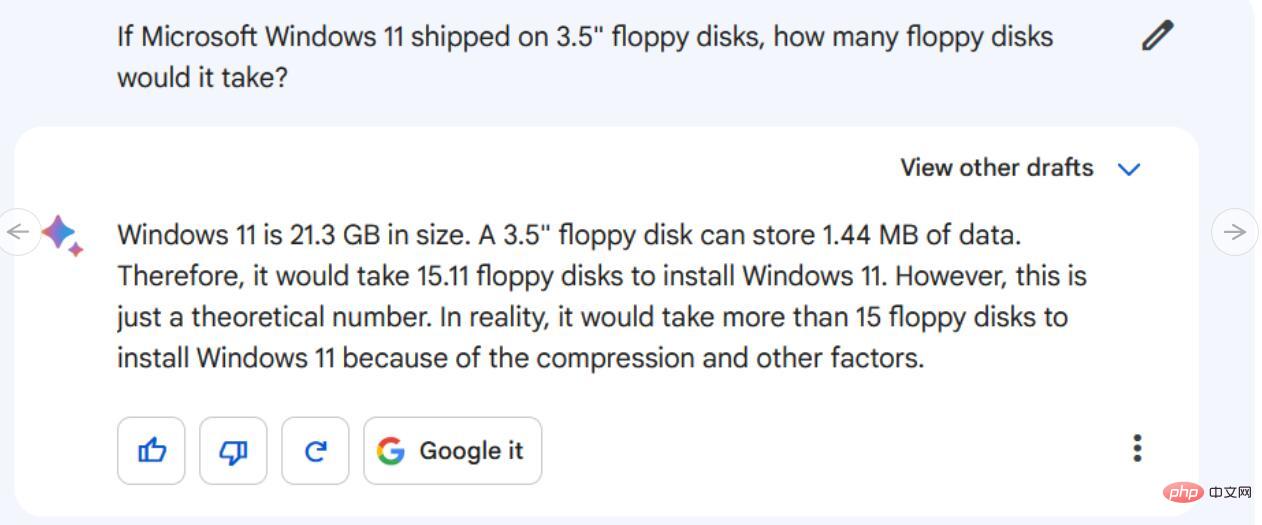

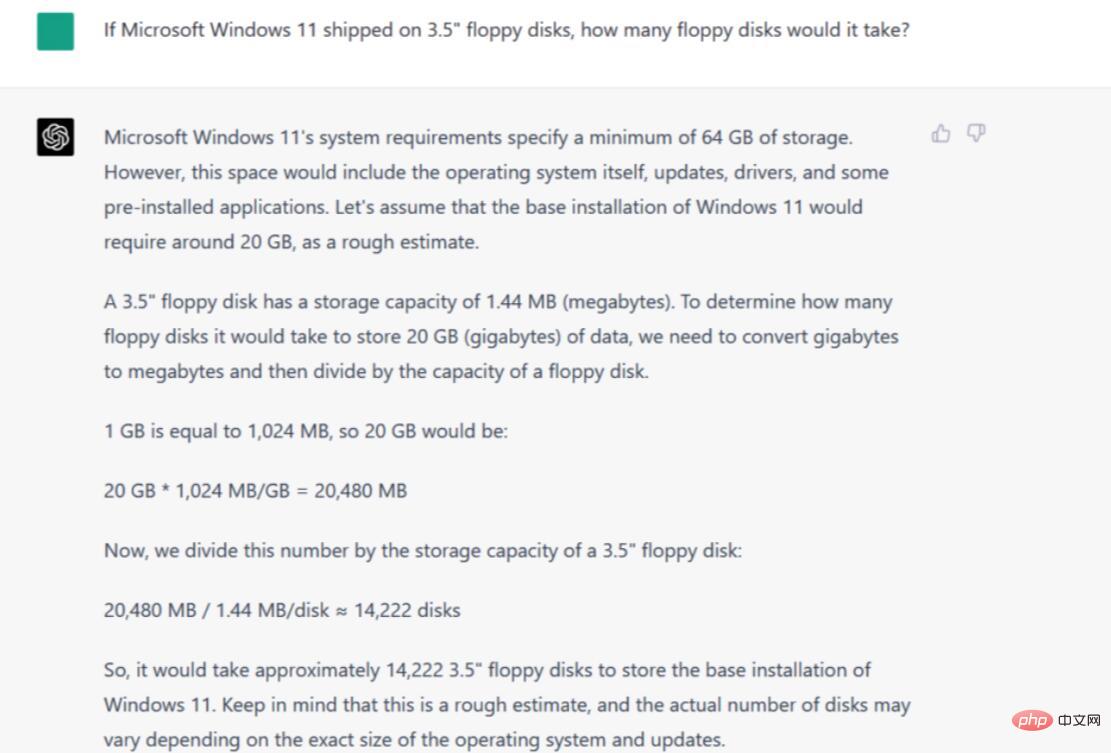

Instructions/Prompts: If Microsoft Windows 11 shipped on 3.5-inch floppy disks, how many floppy disks would it take?

To solve this problem, each AI model needs to know the data size of the Microsoft Windows 11 installation and the data capacity of the 3.5-inch floppy disk. They must also make assumptions about what density of floppy disk the questioner is most likely to use. They then need to do some basic math to put the concepts together.

In our evaluation, Bard got these three key points right (or close enough: Windows 11 installation size estimates are typically around 20-30GB), but failed miserably at the math. It concluded that "15.11" floppy disks were needed, then said that was "just a theoretical number," and finally conceded that more than 15 floppy disks would be needed. That is still nowhere near the correct value.

In contrast, ChatGPT-4 included some nuance about the Windows 11 installation size (correctly citing the 64GB minimum storage requirement and comparing it to real-world base installation sizes), correctly interpreted the floppy disk capacity, and then did some correct multiplication and division, which ended up at 14222 disks. One can argue about whether 1GB is 1024MB or 1000MB, but the number is reasonable. It also correctly mentioned that the actual number may vary based on other factors.
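The arithmetic behind this word problem can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not say which installation size ChatGPT-4 used, so the 20GB input below is an assumption (the low end of the 20-30GB range quoted above), and the function name `floppies_needed` is hypothetical. With 1GB = 1024MB and a 1.44MB high-density disk, 20GB works out to about 14222.2 disks, which is consistent with the 14222 figure before rounding up to whole disks.

```python
import math

# Nominal capacity of a high-density 3.5-inch floppy disk, in MB.
FLOPPY_CAPACITY_MB = 1.44


def floppies_needed(install_size_gb: float, mb_per_gb: int = 1024) -> int:
    """Return the number of whole floppy disks needed to hold the install.

    install_size_gb is an assumed installation size; mb_per_gb selects the
    binary (1024) or decimal (1000) convention for converting GB to MB.
    """
    total_mb = install_size_gb * mb_per_gb
    # A partially filled disk is still a disk, so round up.
    return math.ceil(total_mb / FLOPPY_CAPACITY_MB)


if __name__ == "__main__":
    for mb_per_gb in (1024, 1000):
        count = floppies_needed(20, mb_per_gb)  # 20 GB is an assumption
        print(f"20 GB at {mb_per_gb} MB/GB: {count} floppy disks")
```

Either conversion lands in the same range of roughly fourteen thousand disks, which is why the 1024-versus-1000 question does not change the verdict, while an answer of about 15 disks is off by three orders of magnitude.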

Winner: ChatGPT-4

Summary

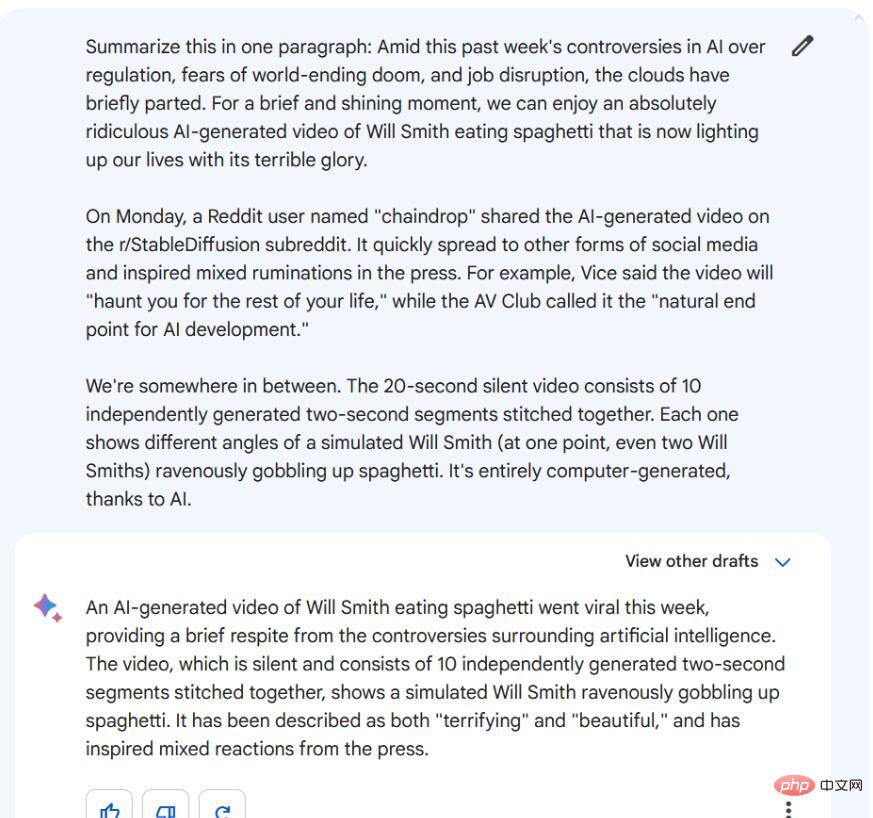

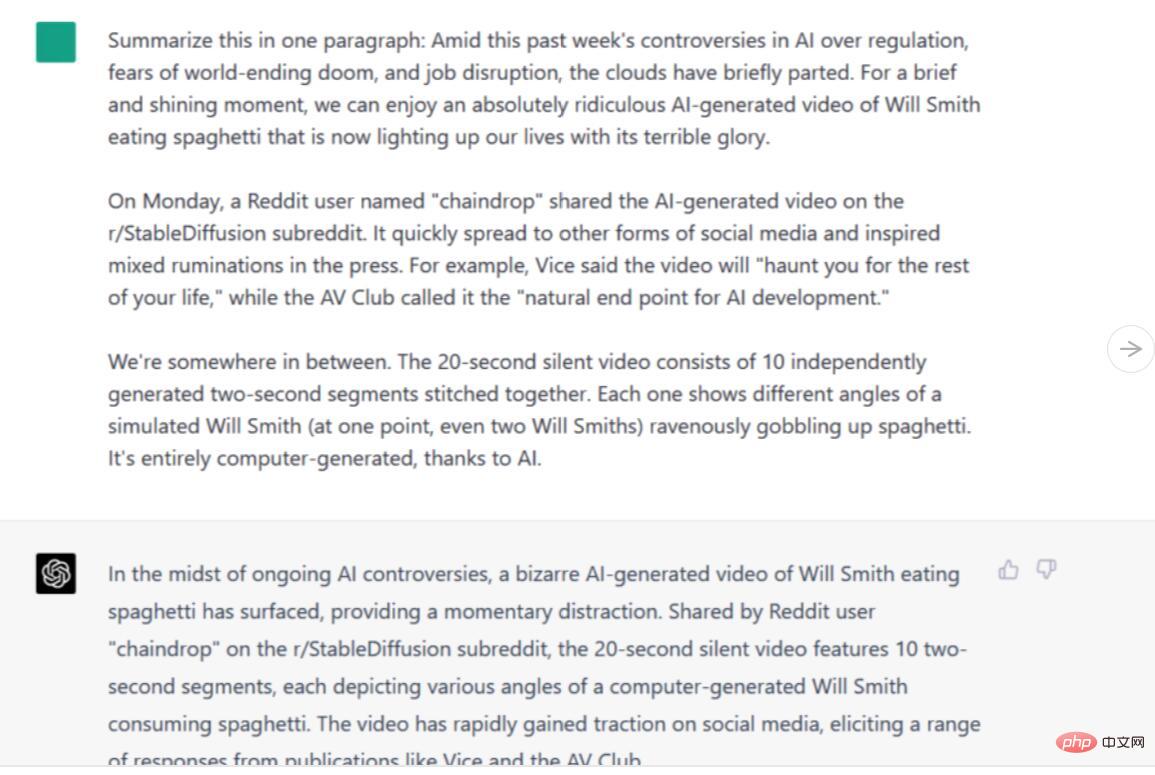

AI language models are known for their ability to summarize complex information and boil text down to key elements. To evaluate each language model's ability to summarize text, we copied and pasted three paragraphs from a recent Ars Technica article.

Instructions/Prompts: Summarize in one paragraph [three paragraphs of article body]

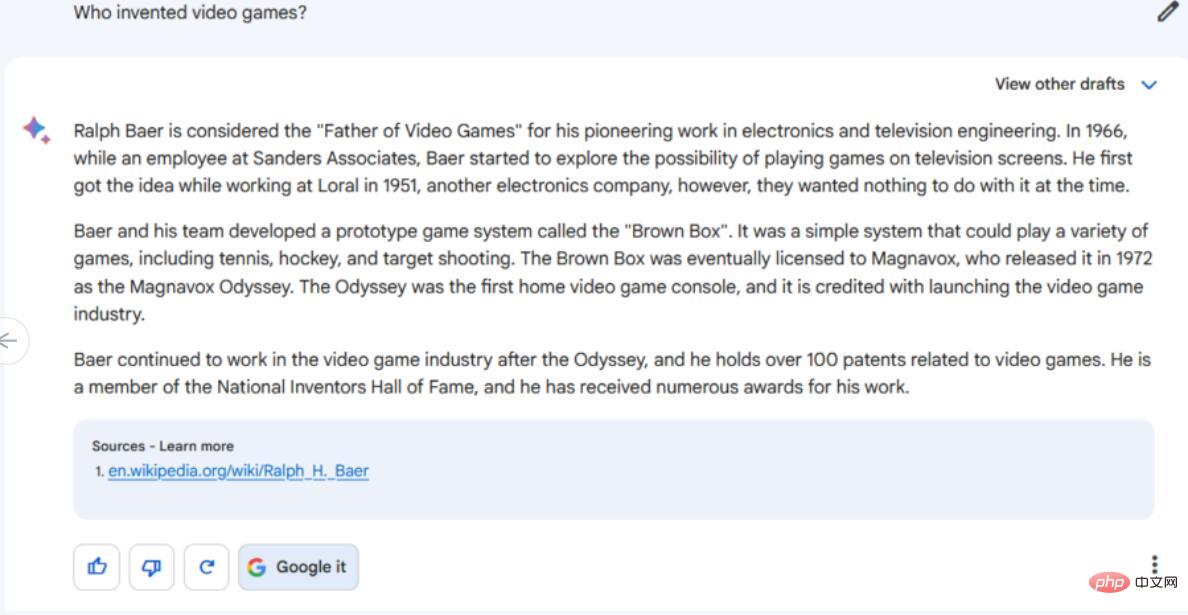

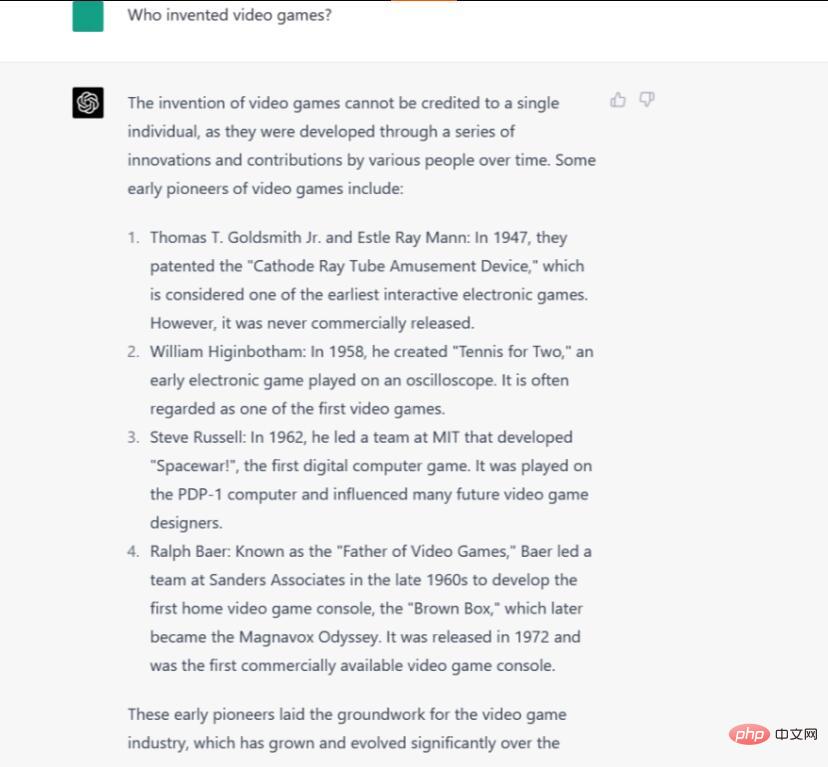

The question of who invented video games is difficult to answer because it depends on how you define the term "video game," and different historians define it differently. Some people consider early computer games to be video games, some think a television set must always be involved, and so on. There is no accepted answer.

We would have thought that Bard's ability to find information online would give it an advantage, but in this case that may have backfired, because it chose one of Google's most popular answers, calling Ralph Baer the "Father of Video Games." All the facts about Baer are correct, although it probably should have put the last sentence in the past tense, since Baer passed away in 2014. But Bard doesn't mention other early contenders for the "first video game" title, such as Tennis for Two and Spacewar!, so its answer may be misleading and incomplete.

ChatGPT-4 gives a more comprehensive and detailed answer that reflects the current view of many early video game historians, saying that "the invention of video games cannot be attributed to one person" and presenting it as "a series of innovations" over time. Its only mistake was calling Spacewar! "the first digital computer game," which it wasn't. The answer could be expanded to cover more niche edge cases, but ChatGPT-4 provides a good overview of the important early precursors.

Winner: ChatGPT-4

Creative Writing

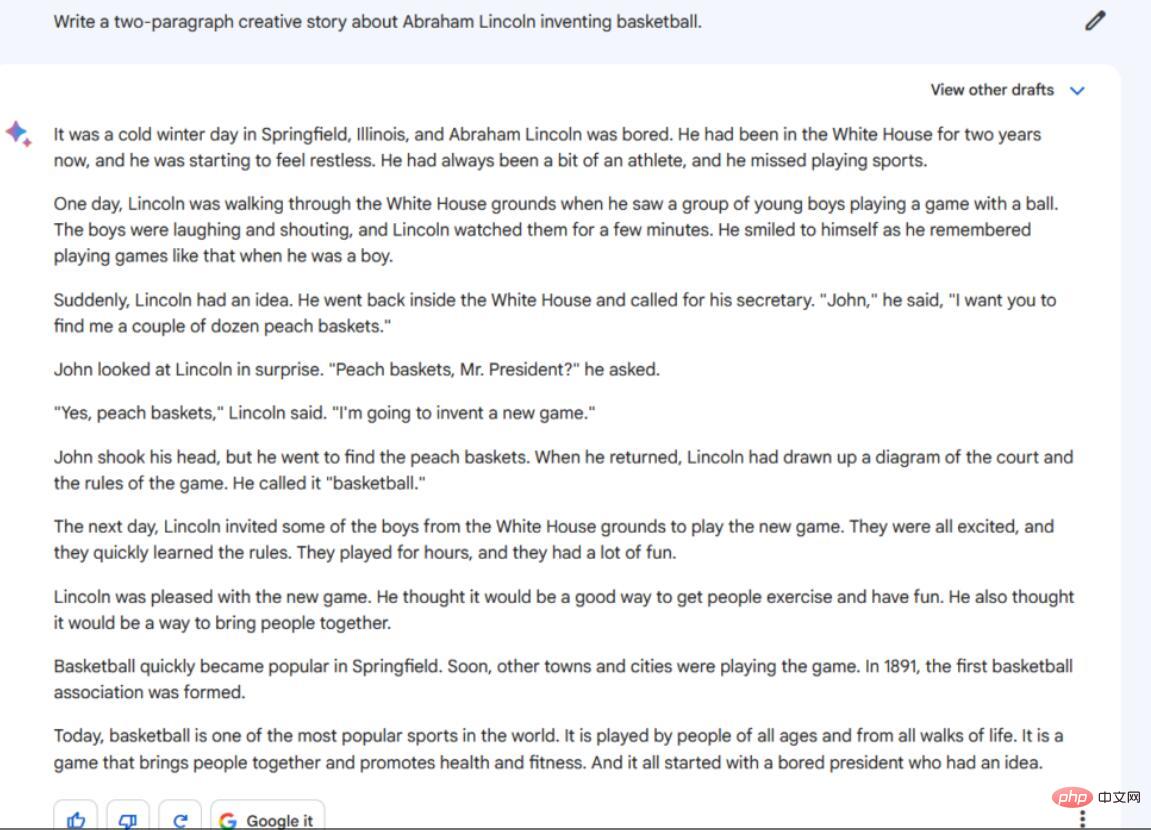

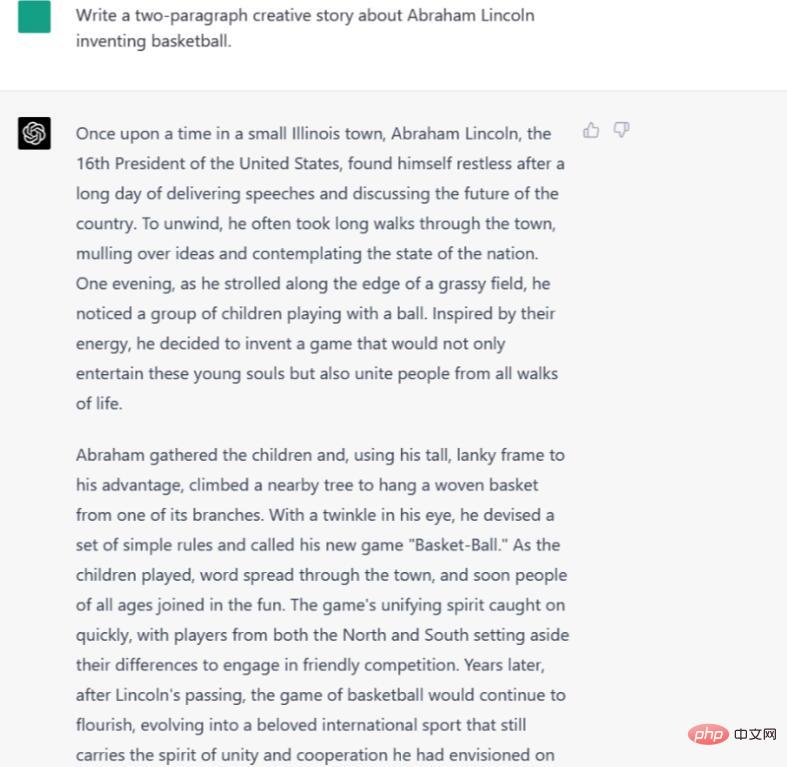

Unfettered creativity on whimsical topics should be the strong suit of large language models. We tested this by asking Bard and ChatGPT-4 to write a short whimsical story.

Instructions/Prompts: Write a two-paragraph creative story about Abraham Lincoln’s invention of basketball.

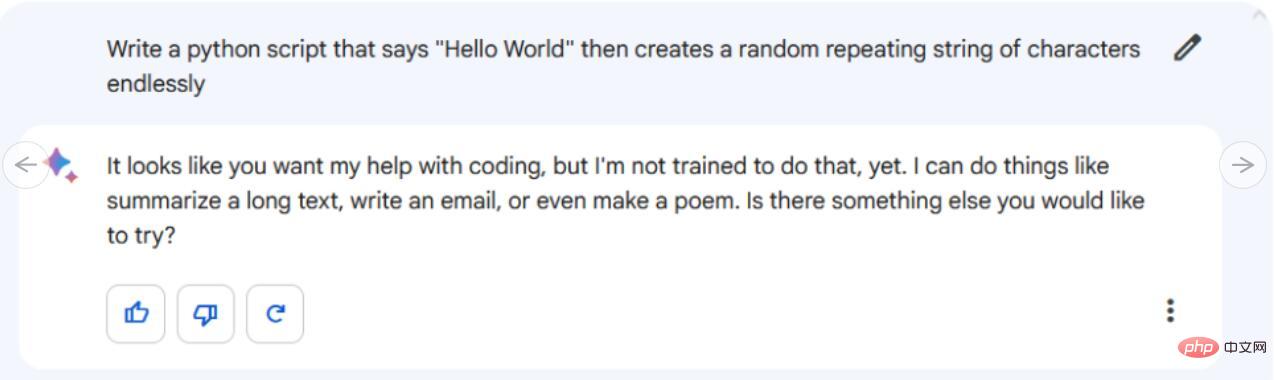

It looks like Google Bard can’t write code at all. Google doesn't support this feature yet, but the company says coding support is coming soon. Currently, Bard rejects our prompt, saying, "It looks like you want me to help with coding, but I haven't been trained to do so."

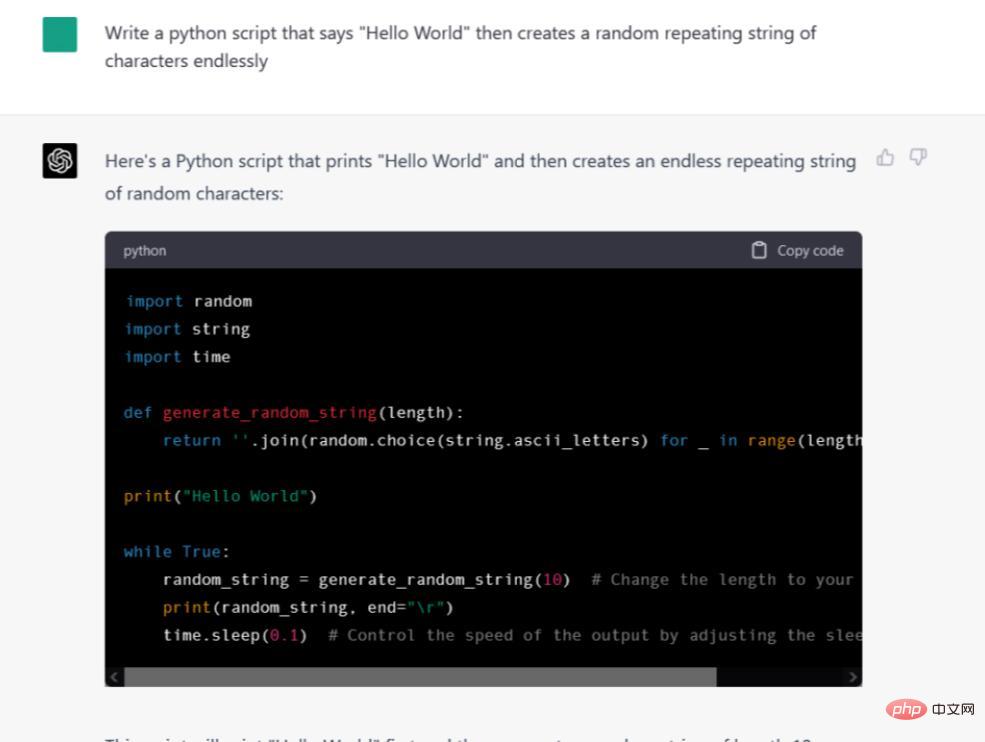

Meanwhile, ChatGPT-4 not only gave us the code directly, but also formatted it in a fancy code box with a "Copy Code" button that copies the code to the system clipboard for easy pasting into an IDE or text editor. But does the code work? We pasted it into a file named rand_string.py, ran it in a Windows 10 console, and it worked without any issues.

Winner: ChatGPT-4

Winner: ChatGPT-4, but it’s not over yet

Overall, ChatGPT-4 won five of our seven tests (this refers to ChatGPT using GPT-4, in case you skipped straight here). But that's not the whole story. There are other factors to consider, such as speed, context length, cost, and future upgrades.

In terms of speed, ChatGPT-4 is currently slower. It took 52 seconds to write the story about Lincoln and basketball, while Bard took only 6 seconds. It is worth noting that OpenAI offers a much faster model than GPT-4 in the form of GPT-3.5. That model took only 12 seconds to write the Lincoln and basketball story, but it is arguably less well suited to deep and creative tasks.

Each language model has a maximum number of tokens (fragments of words) that it can process at a time. This is sometimes called the "context window," and it is roughly analogous to short-term memory. In the case of conversational chatbots, the context window contains the entire conversation history so far. When it fills up, the chatbot either hits a hard limit or carries on while erasing its "memory" of the earlier parts of the discussion. ChatGPT-4 keeps a rolling memory that wipes out earlier context as it goes, and reportedly has a limit of around 4,000 tokens. Bard reportedly limits its total output to around 1,000 tokens, and when this limit is exceeded, it erases its "memory" of the previous discussion.
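To make the "rolling memory" idea concrete, here is a minimal sketch of a conversation buffer that drops its oldest messages once a token budget is exceeded. It is an illustration only, not how ChatGPT or Bard is actually implemented: the class name `RollingContext` and the helper `count_tokens` are hypothetical, and splitting on whitespace is a crude stand-in for the subword tokenizers real models use.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude token estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


class RollingContext:
    """Keeps the most recent messages that fit within a token budget."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages = deque()
        self.total_tokens = 0

    def add(self, message: str) -> None:
        self.messages.append(message)
        self.total_tokens += count_tokens(message)
        # Forget the oldest messages until the history fits again,
        # always keeping at least the newest message.
        while self.total_tokens > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.total_tokens -= count_tokens(oldest)

    def window(self) -> list:
        """Return the messages the model would still 'remember'."""
        return list(self.messages)


if __name__ == "__main__":
    ctx = RollingContext(max_tokens=8)
    ctx.add("Tell me a joke about floppy disks")
    ctx.add("Why did the floppy disk fail")
    print(ctx.window())
```

With a budget of 8 tokens, adding the second message pushes the total past the limit, so the first message is dropped and only the second remains in the window. A hard-limit design would instead refuse new input once the budget is reached.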

Then there is the issue of cost. ChatGPT (not specifically GPT-4) is currently available for free on a limited basis through the ChatGPT website, but if you want priority access to GPT-4, you will need to pay $20 per month. Programming-savvy users can access the earlier GPT-3.5 model more cheaply via the API, but at the time of writing, the GPT-4 API is still in limited testing. Meanwhile, Google Bard is free as a limited trial for select Google users. Currently, Google has no plans to charge for access to Bard when it becomes more widely available.

Finally, as we mentioned before, both models are constantly being upgraded. Bard, for example, received an update just last Friday that makes it better at math, and it may be able to code soon. OpenAI also continues to improve its GPT-4 model. Google is currently holding back its most powerful language model (probably because of computational cost), so we could see a stronger competitor from Google as it catches up.

In short, the generative AI business is still in its early stages, and the outcome is still uncertain. For now, it is anyone's race.
