LeCun highly recommends! Harvard doctor shares how to use GPT-4 for scientific research, down to every workflow

The arrival of GPT-4 has left many people worried about their research, and some even joke that NLP no longer exists.

Rather than worrying, it is better to put the tool to work in research, or simply to "change the method" of doing it.

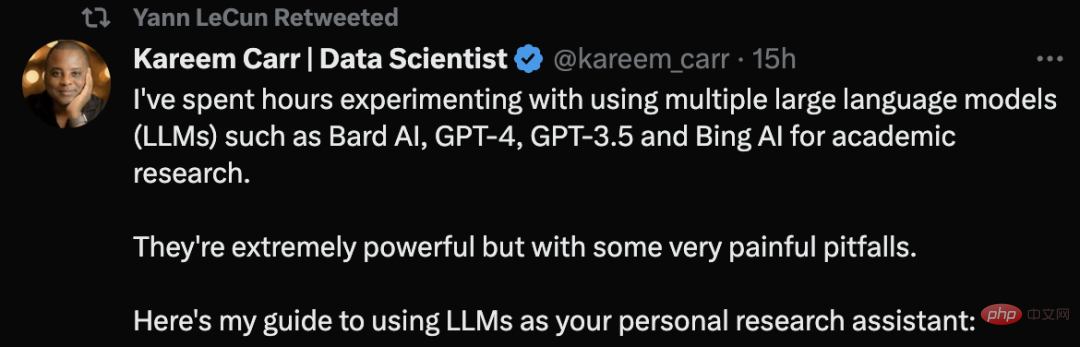

Kareem Carr, a PhD in biostatistics at Harvard University, said that he has used large language model (LLM) tools such as GPT-4 to conduct academic research.

He said that these tools are very powerful, but that they also have some very painful pitfalls.

His tweets with advice on using LLMs even earned a recommendation from LeCun.

Let's take a look at how Kareem Carr uses AI tools in his research.

First principle: don't ask an LLM for content you cannot verify

Carr opens with the first and most important principle:

Never ask a large language model (LLM) for information that you cannot verify yourself, or ask it to perform a task whose correct completion you cannot verify.

The only exception is if it is not a critical task, such as asking the LLM for apartment decorating ideas.

"Using best practices in literature review, summarize the breast cancer research of the past 10 years." This is a poor request, because you cannot directly verify that the LLM has summarized the literature correctly.

Instead ask, "Give me a list of the top review articles on breast cancer research from the past 10 years."

With a prompt like this, you can check that the sources exist and judge their reliability yourself.

Tips for writing "prompts"

It is very easy to ask an LLM to write code for you or to find relevant information, but the quality of the output can vary widely. Here are some things you can do to improve it:

Set context:

• Explicitly tell the LLM what information it should use

• Use terminology and notation that orient the LLM toward the right context

If you have an idea of how to handle the request, tell the LLM the specific method to use. For example, "solve this inequality" should become "use the Cauchy-Schwarz inequality to solve this inequality, then complete the square."

Be aware that these language models are far more linguistically sophisticated than you might think, and even very vague hints will help.

Be more specific:

This is not a Google search, so don't worry about whether some site discusses your exact problem.

"How do I solve simultaneous equations involving quadratic terms?" is not a clear prompt. You should ask: "Solve x = (1/2)(a + b) and y = (1/3)(a^2 + ab + b^2) for a and b."

Define the output format:

Take advantage of the LLM's flexibility to get the output in whatever format suits you best, such as:

• Code

• Mathematical formulas

• Articles

• Tutorials

• Brief guides

You can even ask for code that generates things such as tables, plots, and charts.
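The three tips above (set context, name the method, define the output format) can be combined in a small prompt template. The following is only a sketch: `build_prompt` and its parameter names are hypothetical, and the context and method strings are illustrative assumptions, not wording from Carr.

```python
def build_prompt(task, context=None, method=None, output_format=None):
    """Assemble a prompt from optional context, method, and format hints.

    All names here are hypothetical; this is not tied to any LLM API.
    """
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if method:
        parts.append(f"Method: {method}")
    if output_format:
        parts.append(f"Output format: {output_format}")
    return "\n".join(parts)


# Illustrative values only; the context and method lines are assumptions.
prompt = build_prompt(
    task="Solve x = (1/2)(a + b) and y = (1/3)(a^2 + ab + b^2) for a and b.",
    context="a and b are real numbers with a <= b.",
    method="Eliminate ab using (a + b)^2, then solve the resulting quadratic.",
    output_format="LaTeX, one step per line",
)
print(prompt)
```

Keeping the pieces separate makes it easy to rerun the same task with a different method hint or output format and compare the results.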

Getting the LLM's output is only the beginning, because you then need to verify it. This includes:

• Finding inconsistencies

• Googling terminology from the tool's output to find sources that support it

• Writing code to test the output yourself, when possible

The reason for verifying the output yourself is that LLMs often make strange mistakes that are at odds with their seeming expertise. For example, an LLM may mention a very advanced mathematical concept yet be confused by a simple algebra problem.
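As one way to "write code to test yourself", the sketch below plugs a candidate answer back into the example system from the prompt tips, assuming that system reads x = (a + b)/2 and y = (a^2 + ab + b^2)/3. The candidate formula stands in for whatever the LLM returned; it was derived by hand for this illustration, and the function names are hypothetical.

```python
import math


def candidate_solution(x, y):
    """Candidate answer to check: a, b = x -/+ sqrt(3 * (y - x^2))."""
    root = math.sqrt(3.0 * (y - x * x))
    return x - root, x + root


def satisfies_system(a, b, x, y, tol=1e-9):
    """Plug (a, b) back into both equations and compare with (x, y)."""
    ok_x = math.isclose((a + b) / 2.0, x, rel_tol=tol, abs_tol=tol)
    ok_y = math.isclose((a * a + a * b + b * b) / 3.0, y,
                        rel_tol=tol, abs_tol=tol)
    return ok_x and ok_y


# Try several (x, y) pairs with y >= x^2 so the square root is real.
for x, y in [(0.0, 1.0), (2.0, 5.0), (-1.5, 4.0), (3.0, 9.0)]:
    a, b = candidate_solution(x, y)
    assert satisfies_system(a, b, x, y), (x, y, a, b)
```

A wrong candidate is caught the same way: a = 0, b = 4 satisfies the first equation for x = 2, y = 5 but not the second, so `satisfies_system(0, 4, 2, 5)` returns `False`.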

Ask one more time:

The content a large language model generates is random. Sometimes opening a new window and asking your question again will give you a better answer.
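Asking again can be made systematic by collecting several answers and seeing how often they agree. This is a minimal sketch: `ask` is a hypothetical callable wrapping whichever LLM tool you use, and `fake_ask` is a canned stand-in so the example runs without any API.

```python
from collections import Counter


def ask_repeatedly(ask, prompt, n=5):
    """Call `ask(prompt)` n times and tally the distinct answers.

    `ask` is a hypothetical callable wrapping whatever LLM tool you use.
    """
    answers = [ask(prompt).strip() for _ in range(n)]
    return Counter(answers)


def agreement(tally):
    """Return the most common answer and the fraction of runs that gave it."""
    answer, count = tally.most_common(1)[0]
    return answer, count / sum(tally.values())


# Stand-in for a real model: returns canned replies in order.
_replies = iter(["42", "42", "41", "42 ", "42"])


def fake_ask(prompt):
    return next(_replies)


tally = ask_repeatedly(fake_ask, "What is 6 * 7?", n=5)
best, share = agreement(tally)
print(best, share)  # prints: 42 0.8
```

Agreement across runs is only a hint. The most common answer can still be wrong, so it does not replace verifying the result yourself.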

In addition, use multiple LLM tools. Kareem Carr currently uses Bing AI, GPT-4, GPT-3.5 and Bard AI in his research, depending on what he needs. Each has its own strengths and weaknesses.

Citations and productivity

In Carr's experience, it is best to put the same mathematical question to both GPT-4 and Bard AI to get different perspectives. Bing AI is suited to web searches. GPT-4 is much smarter than GPT-3.5, but OpenAI currently limits it to 25 messages every 3 hours, which makes it harder to access.

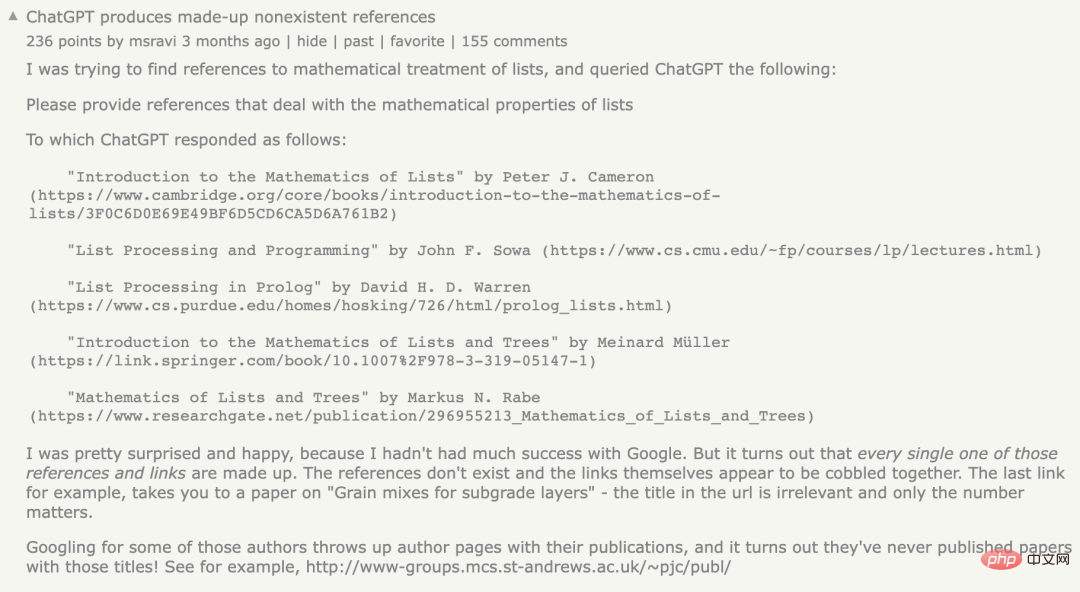

As for citations, citing references is a particularly weak point of LLMs. Sometimes the references an LLM gives you exist, and sometimes they don't.

A netizen previously ran into the same problem. He said he asked ChatGPT for reference materials on the mathematical properties of lists, but ChatGPT generated references that do not exist. This is what everyone calls the "hallucination" problem.

However, Kareem Carr points out that fabricated citations are not completely useless.

In his experience, the words in fabricated references often correspond to real terminology and to real researchers in related fields. So Googling these terms often gets you closer to the information you are looking for.

Bing is also a good choice when searching for sources.

Productivity

There are many unrealistic claims about LLMs improving productivity, such as "LLMs can increase your productivity by 10 times or even 100 times."

In Carr's experience, that kind of speed-up only makes sense if none of the work is double-checked, which would be irresponsible for an academic.

However, LLMs have greatly improved Kareem Carr's academic workflow, including:

• Prototyping ideas

• Identifying dead-end ideas

• Speeding up tedious data reformatting tasks

• Learning new programming languages, packages and concepts

• Google searches

With current LLMs, Carr said he spends less time figuring out what to do next. An LLM can help him turn vague or incomplete ideas into complete solutions.

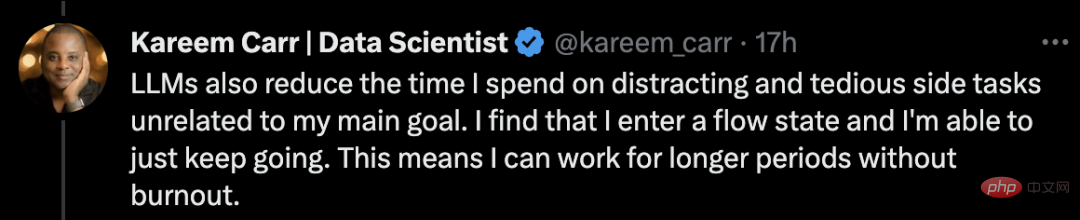

Additionally, LLMs reduced the amount of time Carr spent on side projects unrelated to his primary goals.

In Carr's words: "I found that I got into a flow state and I was able to keep going. This means I can work longer hours without burning out."

A final word of advice: be careful not to get sucked into side projects. The sudden increase in productivity from these tools can be intoxicating and can make you lose focus.

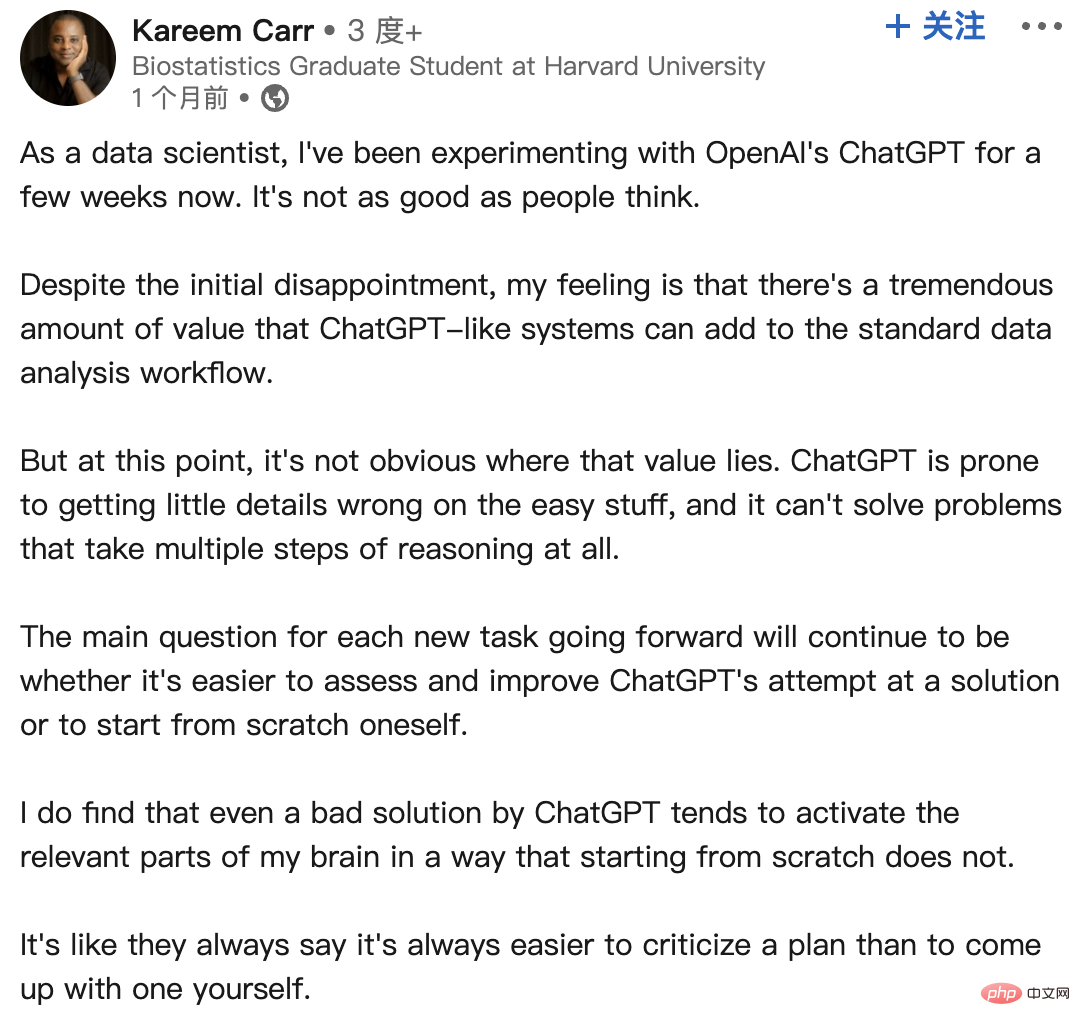

Carr also once shared his impressions of ChatGPT in a LinkedIn post:

As a data scientist, I have been experimenting with OpenAI's ChatGPT for a few weeks. It's not as good as people think.

Despite the initial disappointment, my feeling is that a system like ChatGPT can add tremendous value to the standard data analysis workflow.

At this point it's not obvious where this value lies. ChatGPT can easily get some details wrong on simple things, and it simply can't solve problems that require multiple inference steps.

The main question for each new task remains whether it is easier to evaluate and improve ChatGPT's attempted solution or to start from scratch.

I did find that even a poor solution from ChatGPT tended to activate relevant parts of my brain that starting from scratch did not.

As they say, it is always easier to criticize a plan than to come up with one yourself.

On the need to verify AI output, netizens said that in most cases the AI's accuracy is about 90%, but the remaining 10% of mistakes can be fatal.

Carr joked that if it were 100%, he would not have a job.

So, why does ChatGPT generate false references?

It is worth noting that ChatGPT uses a statistical model to guess the next word, sentence and paragraph based on probability to match the context provided by the user.

Because the source data of the language model is very large, it needs to be "compressed", which causes the final statistical model to lose accuracy.

This means that even if the original data contains true statements, the "distortion" in the model creates a "fuzziness" that leads the model to produce the most "plausible" statement.

In short, the model has no ability to evaluate whether the output it produces amounts to a true statement.
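A toy sketch can make the "guess the next word by probability" idea concrete. The probabilities below are invented for illustration and do not come from any real model. Note that nothing in the sampling step checks whether a continuation is true, only how likely it is.

```python
import random

# Hypothetical next-word probabilities after "The paper was published in".
# The numbers are made up; a real model scores a far larger vocabulary.
next_word_probs = {"Nature": 0.40, "Science": 0.30, "2019": 0.20, "Narnia": 0.10}


def sample_next_word(probs, rng):
    """Draw one word in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so the run is repeatable
samples = [sample_next_word(next_word_probs, rng) for _ in range(10)]
print(samples)
```

Because each word is drawn at random, two runs with different seeds can give different continuations, which is also why asking the same question twice can produce different answers.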

In addition, the model is built on public web data crawled by the nonprofit organization Common Crawl and on similar sources, with data up to 2021.

Since data on the public Internet is largely unfiltered, it may contain a large amount of erroneous information.

Recently, an analysis by NewsGuard found that GPT-4 is actually more likely than GPT-3.5 to generate misinformation, and that its replies are more detailed and convincing.

In January, NewsGuard first tested GPT-3.5 and found that it generated 80 out of 100 false news narratives. A follow-up test in March found that GPT-4 gave false and misleading responses for all 100 false narratives.

Clearly, source verification and testing are required when using LLM tools.
