Since the Turing Test was proposed in the 1950s, researchers have explored how machines can master linguistic intelligence. Language is an intricate system of human expression governed by grammatical rules, so developing AI algorithms that can understand and use it is a major challenge. Over the past two decades, language modeling has been widely used for language understanding and generation, including statistical language models and neural language models.

In recent years, researchers have produced pre-trained language models (PLMs) by pre-training Transformer models on large-scale corpora, and these models have proved highly capable across a variety of NLP tasks. Researchers also found that scaling up a model can improve performance, so they studied the effect of scaling by increasing model size further. Interestingly, once the parameter count exceeds a certain level, these larger language models not only improve significantly but also show capabilities that are absent in small models, such as in-context learning. To distinguish them from PLMs, such models are called large language models (LLMs).

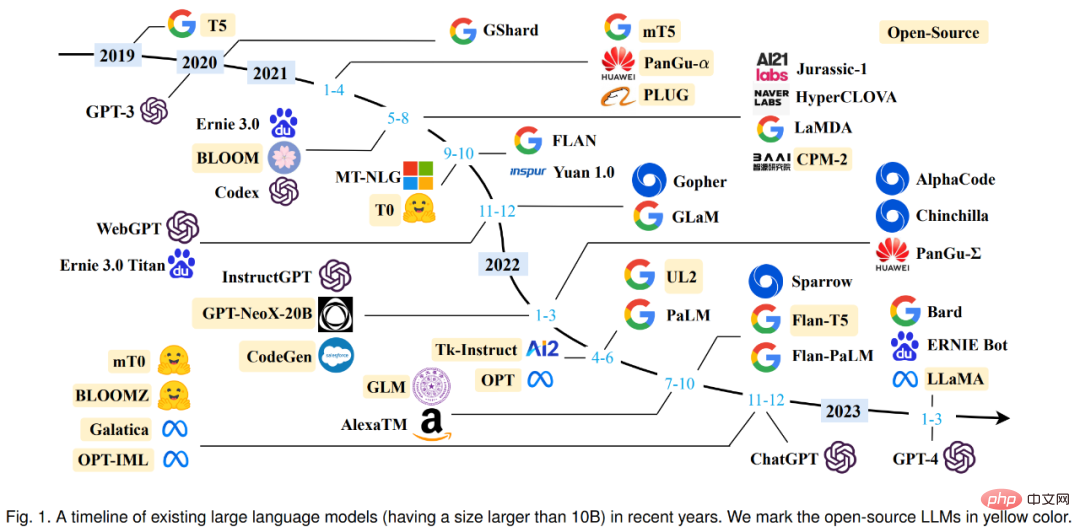

From Google T5 in 2019 to the OpenAI GPT series, large models with rapidly growing parameter counts continue to emerge. Research on LLMs has advanced quickly in both academia and industry. In particular, the launch of the conversational model ChatGPT at the end of November last year attracted widespread attention from all walks of life. Technical advances in LLMs have had an important impact on the entire AI community and will change the way people develop and use AI algorithms.

Given the rapid progress of LLMs, more than two dozen researchers from Renmin University of China reviewed the latest advances from three perspectives: background knowledge, key findings, and mainstream techniques, with a particular focus on pre-training, adaptation tuning, utilization, and capability evaluation of LLMs. They also summarized the resources available for developing LLMs and discussed future directions and other open issues. This review is an extremely useful learning resource for researchers and engineers in the field.

Paper link: https://arxiv.org/abs/2303.18223

Before turning to the paper itself, let’s first look at the timeline of large language models (with more than 10 billion parameters) released since 2019. The models marked in yellow are open source.

In the first section, the researchers introduce the background, capabilities, and key technologies of LLMs in detail.

Background on LLMs

Typically, large language models (LLMs) are language models with hundreds of billions of parameters (or more) that are trained on massive text data, such as GPT-3, PaLM, Galactica, and LLaMA. Specifically, LLMs are built on the Transformer architecture, in which multi-head attention layers are stacked into a very deep neural network. Existing LLMs largely use the same model architecture (the Transformer) and pre-training objective (language modeling) as small language models. The main difference is that LLMs greatly scale up the model size, the pre-training data, and the total compute. As a result, they understand natural language better and generate high-quality text from a given context (such as a prompt). This improvement can be partly described by scaling laws, under which performance improves roughly in step with substantial increases in model size. However, some abilities (e.g., in-context learning) cannot be predicted from the scaling law and are observed only once the model size exceeds a certain level.
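To make the idea of a scaling law concrete, the following is a minimal sketch of a power-law curve relating parameter count to loss. The functional form and the two constants are assumptions borrowed from the commonly cited fit by Kaplan et al. (2020), not values given in this article; the function name `predicted_loss` is hypothetical.

```python
# Illustrative power-law scaling curve: loss falls smoothly as parameters grow.
# N_C and ALPHA_N are assumed constants (approximate values from Kaplan et al.,
# 2020) and are used here only for illustration.

N_C = 8.8e13     # assumed critical parameter count
ALPHA_N = 0.076  # assumed power-law exponent


def predicted_loss(num_params: float) -> float:
    """Return the predicted loss L(N) = (N_C / N) ** ALPHA_N."""
    if num_params <= 0:
        raise ValueError("num_params must be positive")
    return (N_C / num_params) ** ALPHA_N


if __name__ == "__main__":
    # Loss decreases slowly but steadily with each 10x increase in size.
    for n in (1e9, 1e10, 1e11, 5.4e11):
        print(f"{n:.1e} parameters -> predicted loss {predicted_loss(n):.3f}")
```

A smooth curve like this captures the predictable part of scaling. It says nothing about emergent abilities, which is exactly why they are described as unpredictable from the scaling law.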

Emergent Abilities of LLMs

The emergent abilities of LLMs are formally defined as "abilities that are not present in small models but arise in large models", and they are one of the most prominent features distinguishing LLMs from earlier PLMs. Emergent abilities show a notable pattern: once scale reaches a certain level, performance rises significantly above random. By analogy, this pattern resembles the phenomenon of phase transition in physics. In principle, emergent abilities can be tied to particular complex tasks, but the greater interest is in general abilities that can be applied to many tasks. Here is a brief introduction to three representative emergent abilities of LLMs:

In-context learning. In-context learning was formally introduced with GPT-3: given a natural language instruction and/or several task demonstrations, the language model can generate the expected output for a test instance by completing the word sequence of the input text, without additional training or gradient updates.

Instruction following. After fine-tuning on a mixture of multi-task datasets formatted with natural language descriptions (i.e., instructions), LLMs perform well on unseen tasks that are also described in the form of instructions. With instruction tuning, LLMs can carry out new tasks by understanding the task instructions, without explicit examples, which greatly improves generalization.

Step-by-step reasoning. Small language models usually struggle with complex tasks that involve multiple reasoning steps, such as mathematical word problems. With the chain-of-thought reasoning strategy, by contrast, LLMs can solve such tasks by using a prompting mechanism that includes intermediate reasoning steps to reach the final answer. This ability is speculated to be acquired through training on code.
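The first of these abilities can be illustrated by how a prompt is assembled. The sketch below builds a few-shot prompt from an instruction and a handful of demonstrations; the model is then expected to continue the text after the final `Output:`. The `Input:`/`Output:` layout and the function name `build_icl_prompt` are assumptions chosen for illustration, not a format prescribed by the paper.

```python
from typing import List, Tuple


def build_icl_prompt(instruction: str,
                     demonstrations: List[Tuple[str, str]],
                     query: str) -> str:
    """Assemble an instruction, demonstrations, and a test input into one prompt."""
    parts = [instruction.strip(), ""]
    for source, target in demonstrations:
        # Each demonstration is shown as a solved input-output pair.
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # The test instance is left unsolved for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    demos = [("I loved this film.", "positive"),
             ("The plot was a mess.", "negative")]
    print(build_icl_prompt("Classify the sentiment of each review.",
                           demos, "A wonderful surprise."))
```

No parameters are updated at any point: the task is conveyed entirely through the text of the prompt.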

Key Technologies

Let’s look at the key technologies of LLMs, including scaling, training, ability eliciting, alignment tuning, and tool utilization.

Scaling. Scaling is a key factor in increasing the model capacity of LLMs. GPT-3 first increased the number of model parameters to 175 billion, and PaLM then raised it further to 540 billion. Large-scale parameters are critical to emergent abilities. Scaling concerns not only model size but also data size and total compute.

Training. Because of their scale, successfully training a capable LLM is very challenging. Distributed training algorithms are needed to learn the network parameters of LLMs, often combining several parallel strategies. To support distributed training, optimization frameworks such as DeepSpeed and Megatron-LM are used to ease the implementation and deployment of parallel algorithms. In addition, optimization techniques matter for training stability and model performance, such as restarting training to overcome loss spikes and mixed precision training. More recently, GPT-4 developed special infrastructure and optimization methods that use much smaller models to predict the performance of large models.

Ability eliciting. After pre-training on a large-scale corpus, LLMs are endowed with latent abilities to solve general tasks. However, these abilities may not be explicitly exhibited when LLMs perform a specific task. It is therefore useful to design suitable task instructions or specific in-context strategies to elicit them. For example, chain-of-thought prompting helps solve complex reasoning tasks by including intermediate reasoning steps. In addition, LLMs can be further instruction-tuned with natural language task descriptions to improve generalization to unseen tasks.

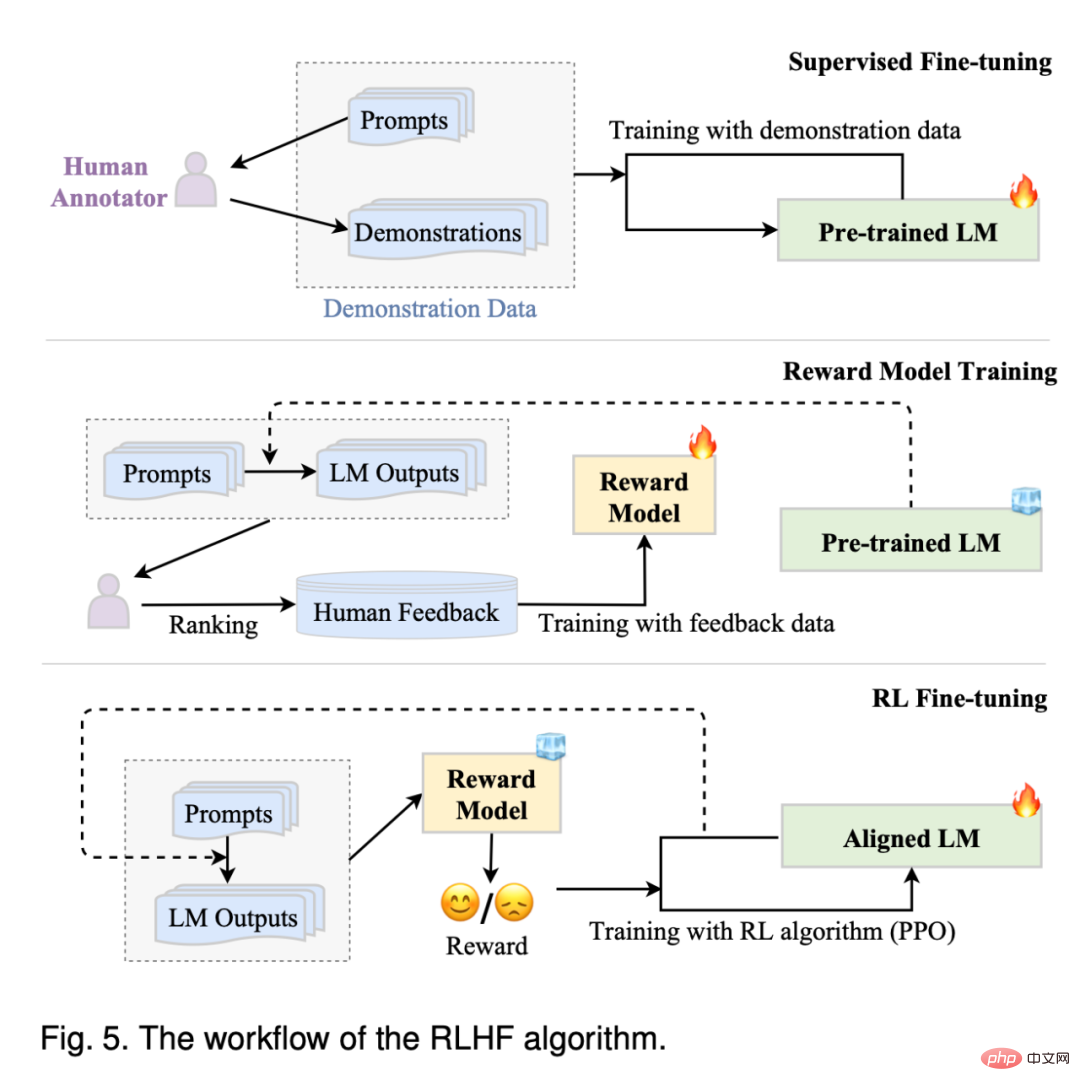

Alignment tuning. Since LLMs are trained to capture data characteristics of pre-training corpora (including high- and low-quality data), they are likely to generate text content that is toxic, biased, and harmful. To align LLMs with human values, InstructGPT designed an efficient tuning method that leverages reinforcement learning and human feedback so that LLMs can follow expected instructions. ChatGPT is developed on technology similar to InstructGPT and exhibits strong alignment capabilities in producing high-quality, harmless responses.

Tool utilization. LLMs are essentially text generators trained on large-scale plain text corpora, so they perform less well on tasks that are not best expressed as text, such as numerical computation. In addition, their capabilities are limited by the pre-training data, so they cannot capture the latest information. To address these problems, researchers have proposed using external tools to compensate for the shortcomings of LLMs, such as using calculators for precise computation and search engines to retrieve unknown information. ChatGPT also uses external plug-ins to learn new knowledge online. This mechanism can broadly expand the capabilities of LLMs.
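The calculator case can be sketched as a small post-processing step: the model emits a marker around an arithmetic expression, and the surrounding program evaluates it and substitutes the exact result. The `[[calc: ...]]` marker syntax and the function names are hypothetical conventions invented for this example; real systems define their own tool-call formats.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

# Hypothetical marker a model might emit to request a calculation.
_CALL = re.compile(r"\[\[calc:(.*?)\]\]")


def safe_eval(expr: str):
    """Evaluate a plain arithmetic expression without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expr.strip(), mode="eval").body)


def fill_tool_calls(model_output: str) -> str:
    """Replace every [[calc: ...]] marker with the computed value."""
    return _CALL.sub(lambda m: str(safe_eval(m.group(1))), model_output)


if __name__ == "__main__":
    print(fill_tool_calls("The total is [[calc: 1234 * 5678]]."))
```

The language model only has to decide when a calculation is needed and what to compute; the arithmetic itself is delegated to code that cannot make digit-level mistakes.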

Given the challenging technical problems and huge computational resource requirements, developing or replicating LLMs is by no means an easy task. One possible approach is to learn from existing LLMs and reuse publicly available resources for incremental development or experimental research.

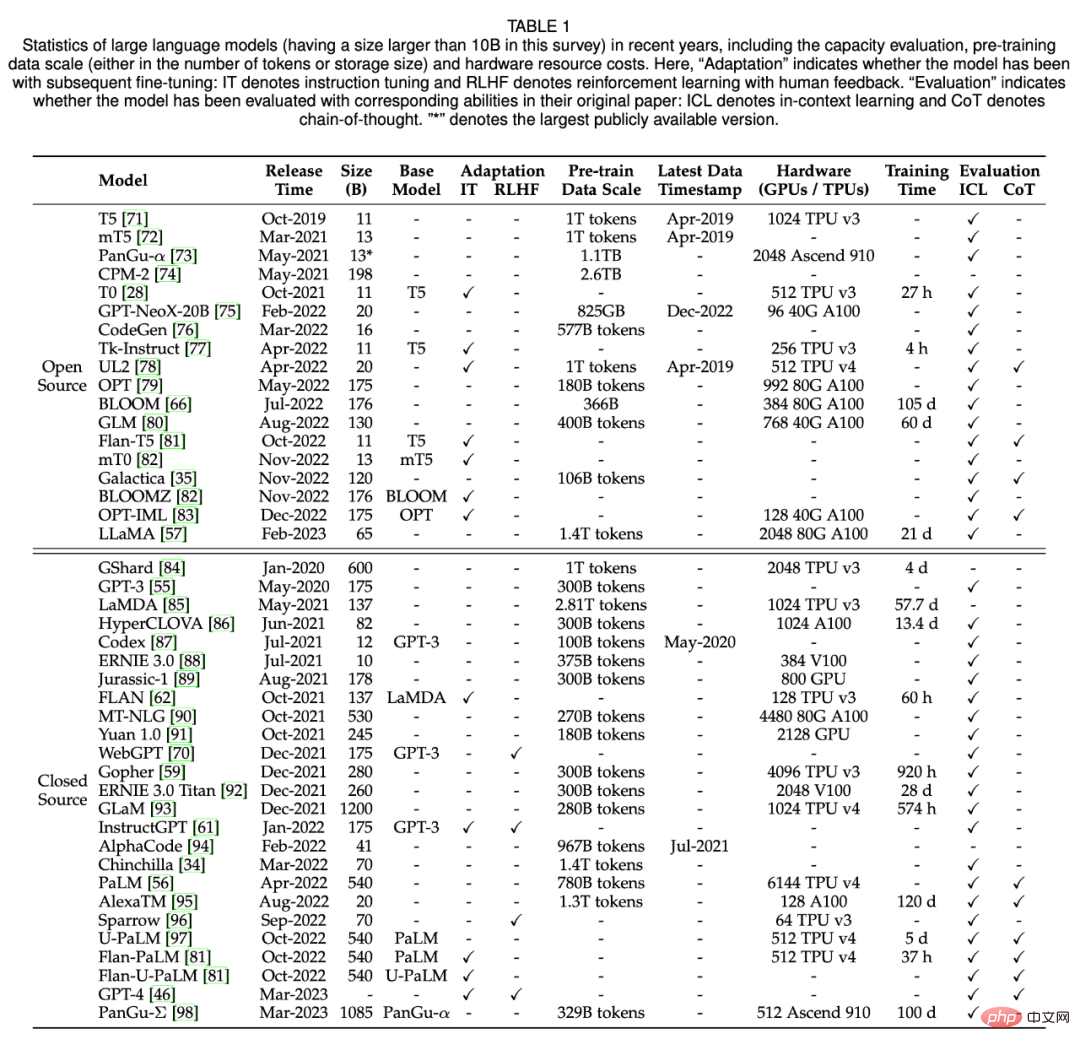

In Section 3, the researchers mainly summarize open source model checkpoints and APIs, available corpora, and libraries useful for LLMs. Table 1 below shows statistics for large models with more than 10 billion parameters released in recent years.

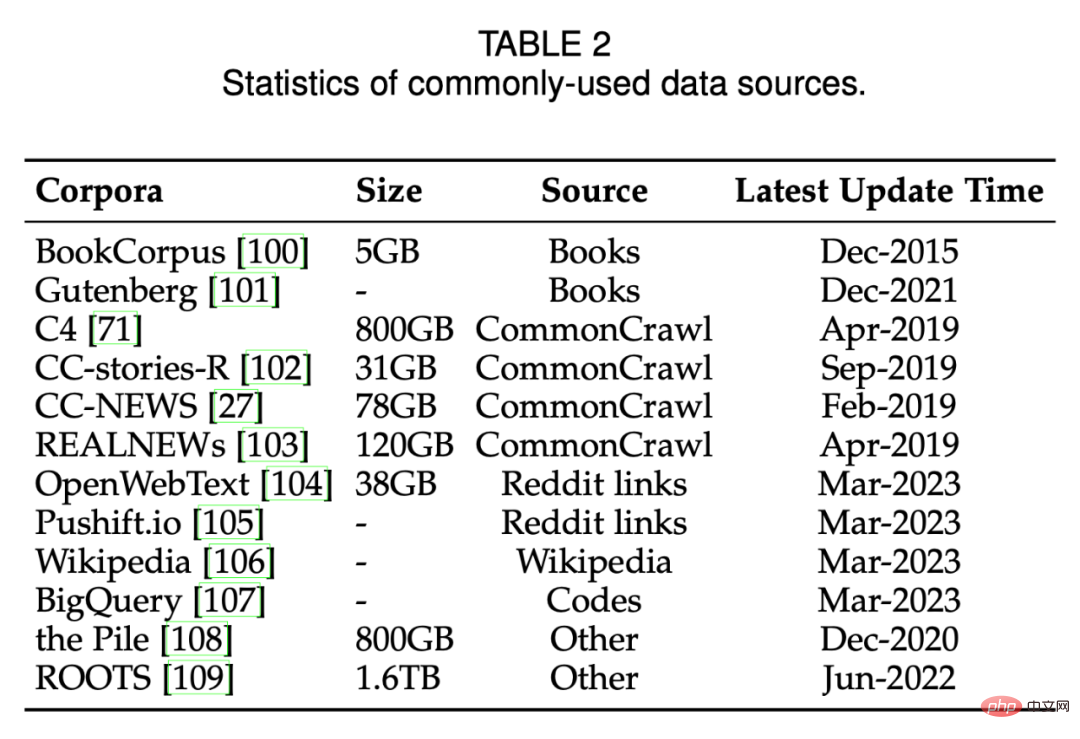

Table 2 below lists commonly used data sources.

Pre-training establishes the basis of LLMs' abilities. By pre-training on large-scale corpora, LLMs acquire basic language understanding and generation skills. In this process, the scale and quality of the pre-training corpus are key to achieving strong capabilities. Furthermore, to pre-train LLMs effectively, model architectures, acceleration methods, and optimization techniques all need to be carefully designed. In Section 4, the researchers first discuss data collection and processing (Section 4.1), then introduce commonly used model architectures (Section 4.2), and finally present training techniques for stable and efficient optimization of LLMs (Section 4.3).

Data Collection

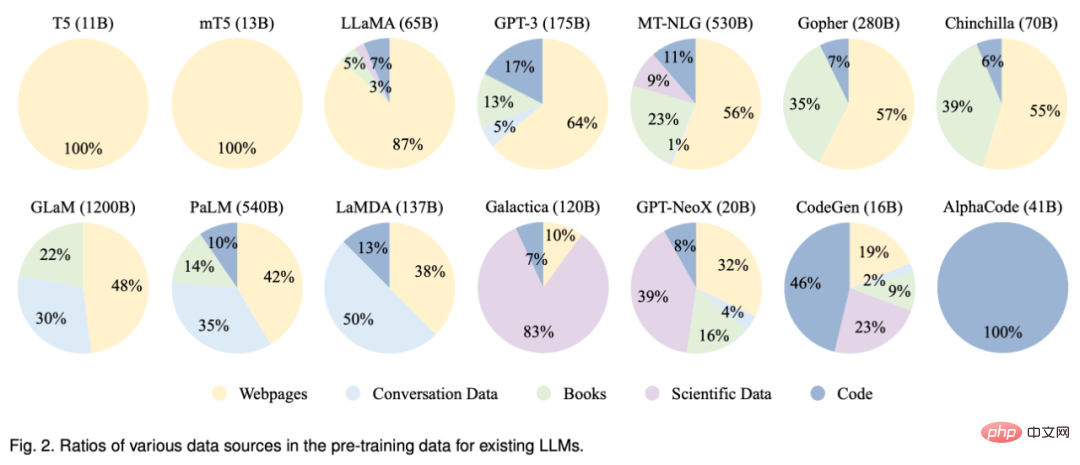

To develop a powerful LLM, it is crucial to collect a large natural language corpus from a variety of data sources. Existing LLMs mainly use a mixture of public text datasets as their pre-training corpora. Figure 2 below shows the distribution of pre-training data sources for existing LLMs.

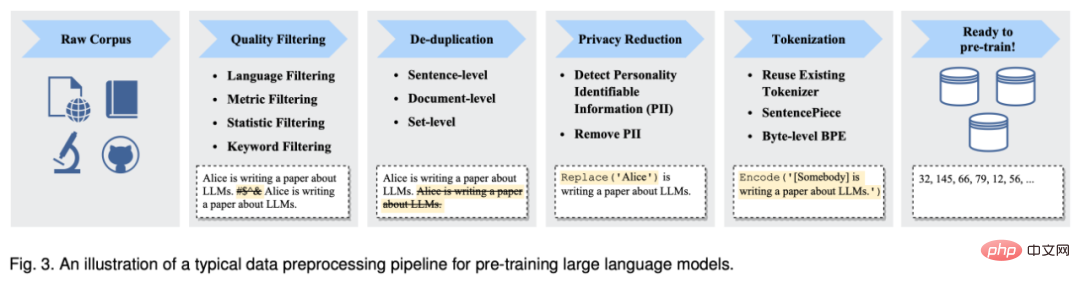

After a large amount of text data has been collected, it must be preprocessed to build the pre-training corpus, which includes removing noisy, redundant, irrelevant, and potentially toxic data. Figure 3 below shows the preprocessing pipeline for LLM pre-training data.
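The preprocessing steps above can be sketched as a small filtering pipeline. This is a toy version under stated assumptions: the length threshold, the one-word blocklist, and exact-match de-duplication by hash are placeholders chosen for illustration, whereas production pipelines rely on trained quality classifiers and fuzzy de-duplication.

```python
import hashlib
import re
from typing import Iterable, Iterator

MIN_WORDS = 5            # assumed threshold for "too short to be useful"
BLOCKLIST = {"badword"}  # hypothetical stand-in for a toxicity word list


def normalize(text: str) -> str:
    """Collapse runs of whitespace left over from crawling."""
    return re.sub(r"\s+", " ", text).strip()


def is_low_quality(text: str) -> bool:
    """Heuristic quality filter: drop very short or blocklisted documents."""
    words = text.split()
    if len(words) < MIN_WORDS:
        return True
    return any(w.lower().strip(".,!?") in BLOCKLIST for w in words)


def preprocess(docs: Iterable[str]) -> Iterator[str]:
    """Yield cleaned documents, skipping low-quality text and exact duplicates."""
    seen = set()
    for raw in docs:
        text = normalize(raw)
        if is_low_quality(text):
            continue
        # Case-insensitive exact-match de-duplication via a content hash.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield text
```

Each stage only ever removes or cleans documents, so the stages can be reordered or extended (for example with a privacy filter) without changing the overall shape of the pipeline.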

Architecture

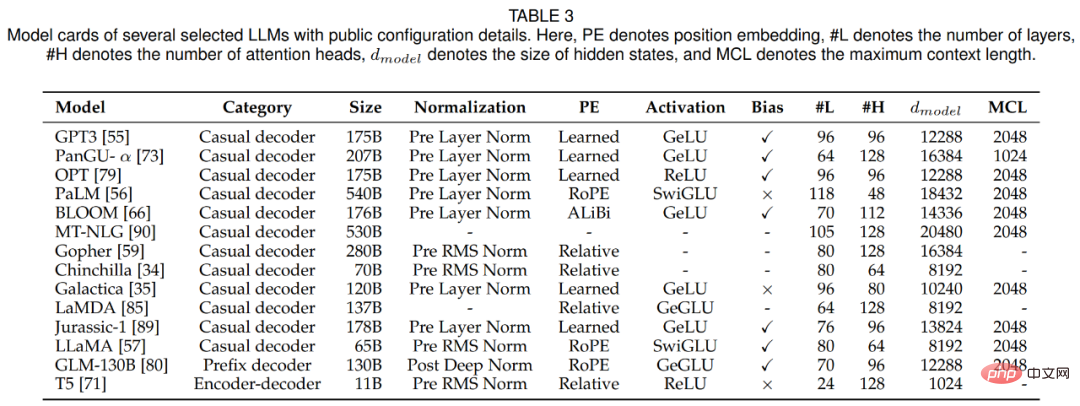

In this section, the researchers review the architectural design of LLMs, namely the mainstream architectures, pre-training objectives, and detailed configurations. Table 3 below lists model cards for several representative LLMs with publicly available details.

Thanks to its excellent parallelizability and capacity, the Transformer architecture has become the backbone for developing LLMs, making it possible to scale language models to hundreds of billions of parameters. Generally speaking, the mainstream architectures of existing LLMs fall into three major categories: encoder-decoder, causal decoder, and prefix decoder.
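The two decoder-only variants differ mainly in their attention masks. The sketch below builds both masks as nested lists, where `mask[i][j] == 1` means position `i` may attend to position `j`. The function names are hypothetical, and plain lists are used only to keep the example dependency-free.

```python
from typing import List


def causal_mask(seq_len: int) -> List[List[int]]:
    """Unidirectional mask: each token sees itself and earlier tokens only."""
    return [[1 if j <= i else 0 for j in range(seq_len)]
            for i in range(seq_len)]


def prefix_mask(seq_len: int, prefix_len: int) -> List[List[int]]:
    """Prefix-decoder mask: bidirectional inside the prefix, causal after it."""
    if not 0 <= prefix_len <= seq_len:
        raise ValueError("prefix_len must be between 0 and seq_len")
    return [[1 if (j <= i or j < prefix_len) else 0 for j in range(seq_len)]
            for i in range(seq_len)]


if __name__ == "__main__":
    for row in prefix_mask(4, 2):
        print(row)
```

With `prefix_len` set to 0 the prefix mask reduces to the causal mask, which shows that the two designs share the same decoder and differ only in which positions are visible.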

Since the Transformer was introduced, various improvements have been proposed to enhance its training stability, performance, and computational efficiency. In this part, the researchers discuss the corresponding configurations of four main parts of the Transformer: normalization, position encoding, activation functions, and attention and bias.

Pre-training plays a very critical role, encoding general knowledge from a large-scale corpus into large-scale model parameters. For training LLMs, there are two commonly used pre-training tasks: language modeling and denoising autoencoding.
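In their standard form, the two objectives can be written as follows. This is a notational sketch: $\mathbf{x} = \{x_1, \dots, x_n\}$ is a token sequence, $\tilde{\mathbf{x}}$ denotes the spans that were corrupted or masked out, and $\mathbf{x}_{\backslash \tilde{\mathbf{x}}}$ is the remaining corrupted text.

$$
\mathcal{L}_{\mathrm{LM}}(\mathbf{x}) = \sum_{i=1}^{n} \log P(x_i \mid x_{<i})
$$

$$
\mathcal{L}_{\mathrm{DAE}}(\mathbf{x}) = \log P(\tilde{\mathbf{x}} \mid \mathbf{x}_{\backslash \tilde{\mathbf{x}}})
$$

Language modeling predicts each token from the tokens before it, while denoising autoencoding recovers the replaced spans from the corrupted text.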

Model training

In this section, the researchers review the important settings, techniques, and tips for training LLMs.

For parameter optimization of LLMs, the researchers present the commonly used settings for batch size, learning rate, optimizer, and training stability.
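As one example of a learning rate setting, a widely used pattern is linear warm-up followed by cosine decay to a fraction of the peak value. The sketch below assumes that pattern; the function name and the default floor of 10% of the peak are illustrative choices, not settings taken from this article.

```python
import math


def learning_rate(step: int, max_lr: float, warmup_steps: int,
                  total_steps: int, min_ratio: float = 0.1) -> float:
    """Linear warm-up to max_lr, then cosine decay to max_lr * min_ratio."""
    if step < warmup_steps:
        # Ramp up linearly so early updates stay small and stable.
        return max_lr * (step + 1) / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    min_lr = max_lr * min_ratio
    return min_lr + (max_lr - min_lr) * cosine


if __name__ == "__main__":
    for s in (0, 500, 1000, 5500, 10000):
        print(s, learning_rate(s, max_lr=1e-4, warmup_steps=1000,
                               total_steps=10000))
```

The warm-up phase protects the randomly initialized model from large early updates, and the slow decay lets training settle as it approaches the end of the token budget.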

As model and data sizes increase, it has become difficult to train LLMs efficiently with limited computing resources. In particular, two major technical issues need to be addressed: increasing training throughput and loading larger models into GPU memory. This section reviews several widely used methods in existing work that address these two challenges, namely 3D parallelism, ZeRO, and mixed precision training, and gives suggestions on how to use them for training.
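The GPU memory problem can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes the accounting commonly used for mixed precision training with Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer states (master weights, momentum, variance). It then shows how the three ZeRO stages partition these states across data-parallel GPUs. Activations and buffers are ignored, and the function name is hypothetical.

```python
def model_state_bytes(num_params: float, num_gpus: int,
                      zero_stage: int = 0) -> float:
    """Estimate per-GPU memory for model states under ZeRO stage 0 to 3."""
    weights = 2.0 * num_params   # fp16 parameters
    grads = 2.0 * num_params     # fp16 gradients
    optim = 12.0 * num_params    # fp32 master weights + Adam momentum + variance
    if zero_stage >= 1:
        optim /= num_gpus        # stage 1 partitions optimizer states
    if zero_stage >= 2:
        grads /= num_gpus        # stage 2 also partitions gradients
    if zero_stage >= 3:
        weights /= num_gpus      # stage 3 also partitions parameters
    return weights + grads + optim


if __name__ == "__main__":
    for stage in range(4):
        gb = model_state_bytes(7.5e9, num_gpus=64, zero_stage=stage) / 1e9
        print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

Under these assumptions a 7.5-billion-parameter model needs about 120 GB per GPU without partitioning, far more than a single accelerator typically offers, which is why partitioning and parallelism are treated as necessities rather than optimizations.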

After pre-training, LLMs can acquire the general ability to solve various tasks. Yet a growing body of research suggests that the capabilities of LLMs can be further tailored to specific goals. In Section 5, the researchers introduce in detail two main methods for tuning pre-trained LLMs, namely instruction tuning and alignment tuning. The former approach is mainly to improve or unlock the capabilities of LLMs, while the latter approach is to make the behavior of LLMs consistent with human values or preferences.

Instruction tuning

Essentially, instruction tuning is a method of fine-tuning pre-trained LLMs on a set of formatted instances in natural language form, and it is closely related to supervised fine-tuning and multi-task prompted training. To perform instruction tuning, we first need to collect or construct instruction-formatted instances. We then typically use these formatted instances to fine-tune LLMs in a supervised manner (e.g., training with a sequence-to-sequence loss). After instruction tuning, LLMs can demonstrate superior abilities to generalize to unseen tasks, even in multilingual settings.

A recent survey provides a systematic overview of instruction tuning research. In contrast, this paper focuses on the impact of instruction tuning on LLMs and provides detailed guidelines or strategies for instance collection and tuning. Additionally, this paper discusses the use of instruction tuning to meet users' actual needs, which has been widely used in existing LLMs such as InstructGPT and GPT-4.

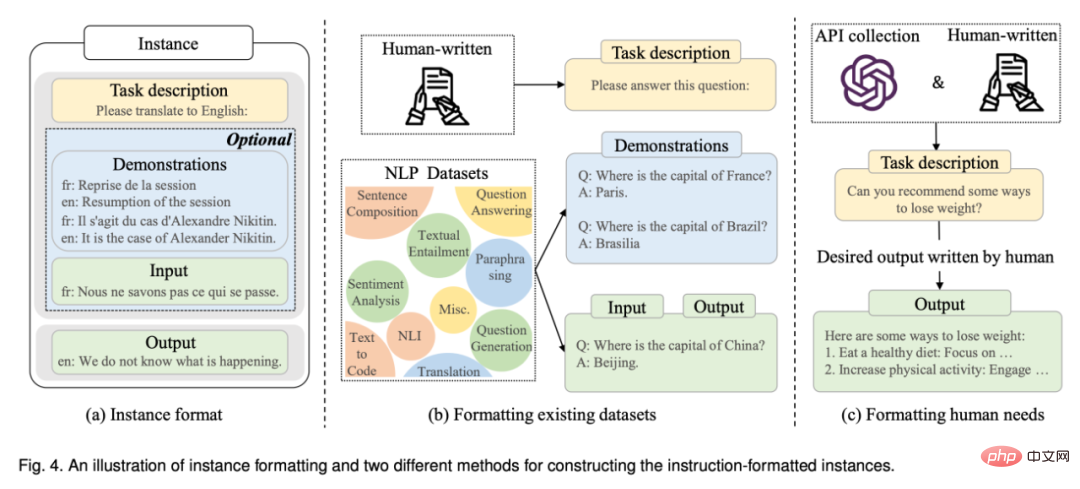

Formatted instance construction: Typically, an instance in the instruction format consists of a task description (called an instruction), an input-output pair, and a small number of demonstrations (optional). Existing research has published a large amount of labeled data formatted in natural language as an important public resource (see Table 5 for a list of available resources). Next, this article introduces the two main methods of constructing formatted instances (see the illustration in Figure 4), and then discusses several key factors in instance construction.
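The structure of a formatted instance can be sketched as a small data class plus a function that turns it into a (source, target) pair for supervised fine-tuning. The field names, the `Instruction:`/`Input:`/`Output:` template, and the function name are assumptions made for illustration; public instruction datasets each use their own templates.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class InstructionInstance:
    instruction: str   # the task description
    input_text: str    # the input of the input-output pair
    output_text: str   # the expected output
    demonstrations: List[Tuple[str, str]] = field(default_factory=list)  # optional


def to_training_pair(inst: InstructionInstance) -> Tuple[str, str]:
    """Render an instance as (source, target) text for sequence-to-sequence loss."""
    lines = [f"Instruction: {inst.instruction}"]
    for demo_in, demo_out in inst.demonstrations:
        lines.append(f"Input: {demo_in}")
        lines.append(f"Output: {demo_out}")
    lines.append(f"Input: {inst.input_text}")
    lines.append("Output:")
    # The loss is computed on the target text only, conditioned on the source.
    return "\n".join(lines), " " + inst.output_text


if __name__ == "__main__":
    example = InstructionInstance(
        instruction="Translate the sentence into French.",
        input_text="Good morning.",
        output_text="Bonjour.",
    )
    source, target = to_training_pair(example)
    print(source + target)
```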

Instruction Tuning Strategy: Unlike pre-training, instruction tuning is generally more efficient because only a moderate number of instances are used for training. While instruction tuning can be considered a supervised training process, its optimization differs from pre-training in several aspects, such as the training objective (i.e., sequence-to-sequence loss) and the optimization configuration (e.g., smaller batch size and learning rate), which require special attention in practice. Beyond these optimization configurations, the paper discusses two further aspects that instruction tuning needs to consider.

Alignment tuning

This part first introduces the background of alignment along with its definition and criteria, then focuses on the collection of human feedback data for aligning LLMs, and finally discusses the key techniques of reinforcement learning from human feedback (RLHF) for alignment tuning.
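One building block commonly used in learning from human feedback is a reward model trained on preference comparisons: labelers pick the better of two responses, and the reward model is trained to score the chosen one higher. The sketch below shows the pairwise ranking loss usually associated with this step. It is an assumption about a typical setup, not a description of a specific system, and the function name is hypothetical.

```python
import math


def pairwise_ranking_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)), computed stably."""
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(d)) equals softplus(-d) = max(-d, 0) + log(1 + exp(-|d|)).
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))


if __name__ == "__main__":
    print(pairwise_ranking_loss(2.0, 0.0))  # small loss: ranking is correct
    print(pairwise_ranking_loss(0.0, 2.0))  # large loss: ranking is inverted
```

The loss depends only on the difference between the two scores, so the reward model learns a relative ordering of responses rather than an absolute quality scale. The trained reward model then supplies the signal that the reinforcement learning stage optimizes.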

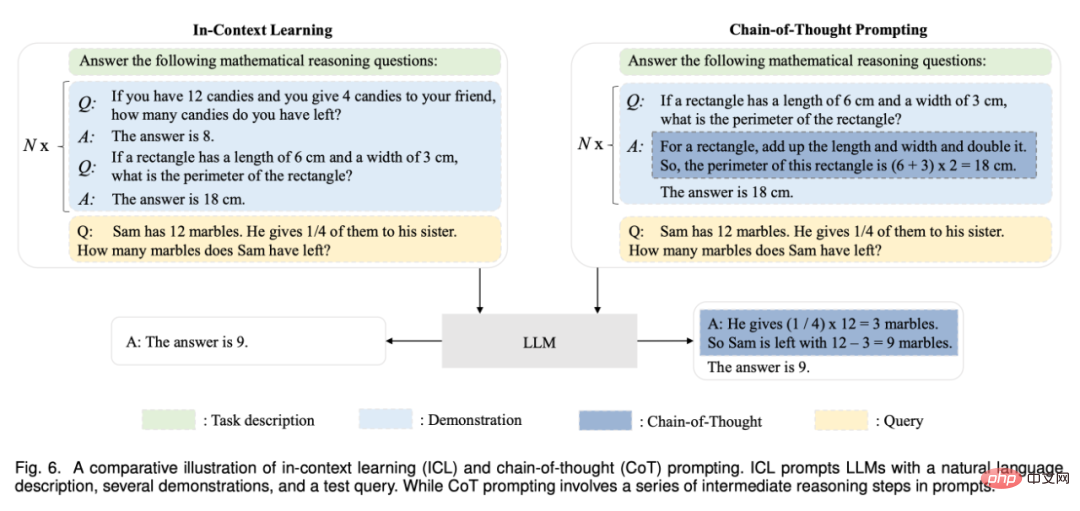

After pre-training or adaptation, one of the main ways to use LLMs is to design suitable prompting strategies for solving a variety of tasks. A typical prompting approach is in-context learning, which formulates the task description or demonstrations as natural language text. In addition, chain-of-thought prompting can enhance in-context learning by incorporating a series of intermediate reasoning steps into the prompt. In Section 6, the researchers describe these two techniques in detail.
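The difference that chain-of-thought prompting makes can be sketched in code: each demonstration carries a rationale before its answer, and the final answer is parsed back out of the model's completion. The `Q:`/`A:` layout, the "The answer is" marker, and the names below are assumptions chosen for illustration.

```python
from typing import List, NamedTuple, Optional


class CoTDemo(NamedTuple):
    question: str
    rationale: str  # intermediate reasoning steps shown to the model
    answer: str


ANSWER_MARKER = "The answer is"  # assumed convention for locating the answer


def build_cot_prompt(demos: List[CoTDemo], question: str) -> str:
    """Build a prompt whose demonstrations include reasoning, not just answers."""
    blocks = [
        f"Q: {d.question}\nA: {d.rationale} {ANSWER_MARKER} {d.answer}."
        for d in demos
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final answer out of a completion that follows the demo format."""
    idx = completion.rfind(ANSWER_MARKER)
    if idx == -1:
        return None
    return completion[idx + len(ANSWER_MARKER):].strip().rstrip(".").strip()


if __name__ == "__main__":
    demo = CoTDemo("Tom has 5 balls and buys 6 more. How many balls does he have?",
                   "He starts with 5 balls and adds 6, so 5 + 6 = 11.", "11")
    print(build_cot_prompt([demo], "A shelf holds 3 rows of 4 books. How many books?"))
```

Dropping the `rationale` field from each demonstration turns this back into an ordinary few-shot prompt, which makes the relationship between the two techniques explicit.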

In-context learning

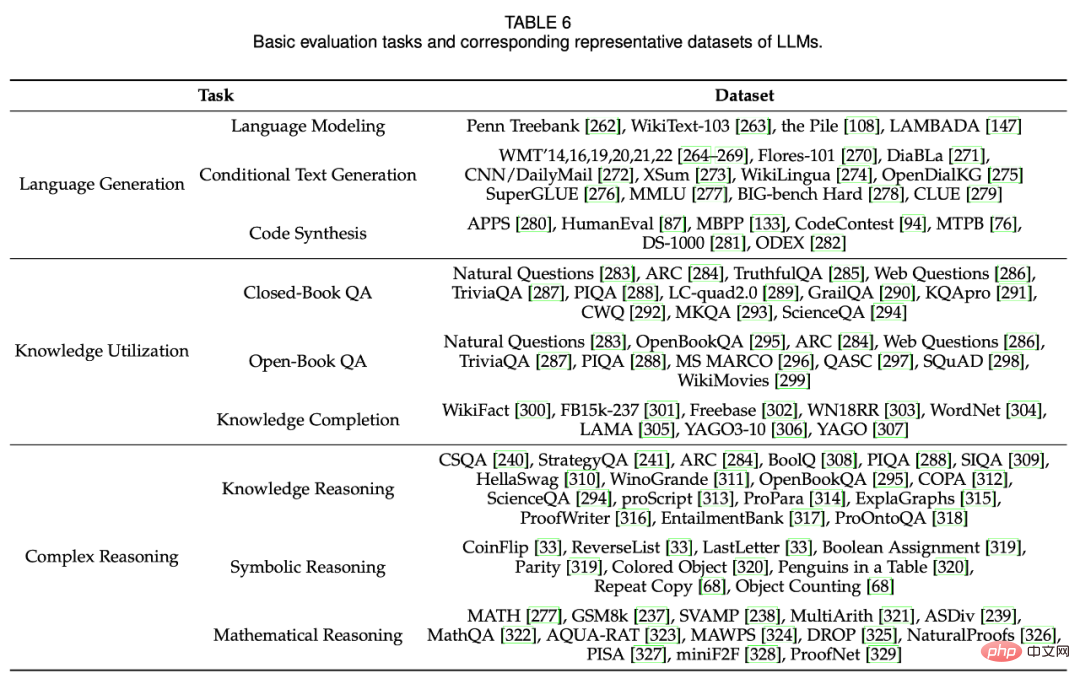

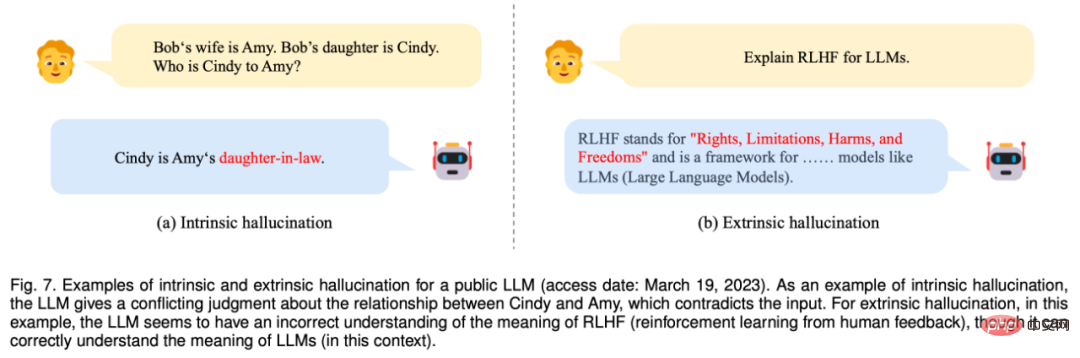

Chain-of-thought prompting

Chain-of-thought (CoT) is an improved prompting strategy that boosts the performance of LLMs on complex reasoning tasks, such as arithmetic reasoning, commonsense reasoning, and symbolic reasoning. Rather than simply building the prompt from input-output pairs as in in-context learning (ICL), CoT incorporates into the prompt the intermediate reasoning steps that lead to the final output. Section 6.2 of the paper details how CoT is used with ICL and discusses when and why CoT is effective.

Capability Assessment

To study the effectiveness and superiority of LLMs, researchers have used a large number of tasks and benchmarks for empirical evaluation and analysis. Section 7 first introduces three types of basic evaluation tasks for LLMs covering language generation and understanding, then introduces several advanced tasks with more complex settings or goals, and finally discusses existing benchmarks and empirical analyses.

Basic assessment tasks

Figure 7: Examples of intrinsic and extrinsic hallucination for a public LLM (accessed on March 19, 2023). As an example of intrinsic hallucination, the LLM gives a judgment about the relationship between Cindy and Amy that contradicts the input. For extrinsic hallucination, the LLM in this example seems to have an incorrect understanding of what RLHF (reinforcement learning from human feedback) means, even though it correctly understands what LLM means.

Advanced task assessment

In addition to the basic assessment tasks above, LLMs also exhibit some advanced abilities that require special assessment. In Section 7.2, the researchers discuss several representative advanced abilities and the corresponding evaluation methods, including human alignment, interaction with the external environment, and tool manipulation.

Summary and future directions

In the last section, the researchers summarize the discussion of this survey and present the challenges and future directions of LLMs from the following aspects.

Theory and principles: One of the biggest mysteries in understanding the basic working mechanism of LLMs is how information is distributed, organized, and utilized across very large deep neural networks. It is important to reveal the underlying principles or elements that form the basis of LLMs' abilities. In particular, scaling appears to play an important role in improving these abilities. Existing research has shown that when the parameter size of a language model increases to a critical point (such as 10B), some emergent abilities appear in an unexpected way (a sudden leap in performance), typically including in-context learning, instruction following, and step-by-step reasoning. These "emergent" abilities are fascinating but also puzzling: when and how do LLMs acquire them? Some recent studies have either conducted broad experiments on the effects of emergent abilities and the factors that contribute to them, or used existing theoretical frameworks to explain specific abilities. An insightful technical post on the GPT family of models also addresses this topic specifically. However, more formal theories and principles for understanding, describing, and explaining the abilities or behavior of LLMs are still missing. Since emergent abilities closely resemble phase transitions in nature, interdisciplinary theories or principles (such as whether LLMs can be regarded as a kind of complex system) may help explain and understand the behavior of LLMs. These fundamental questions deserve exploration by the research community and are important for developing the next generation of LLMs.

Model architecture: The Transformer, consisting of stacked multi-head self-attention layers, has become the common architecture for building LLMs because of its scalability and effectiveness. Various strategies have been proposed to improve the performance of this architecture, such as neural network configuration and scalable parallel training (see the discussion in Section 4.2.2 of the paper). To further improve model capacity (such as multi-turn dialogue ability), existing LLMs usually maintain a long context length. For example, GPT-4-32k has an extremely large context length of 32768 tokens. A practical consideration is therefore to reduce the time complexity (originally quadratic) incurred by standard self-attention. It is also important to study the impact of more efficient Transformer variants on building LLMs; sparse attention, for example, has been used in GPT-3. Catastrophic forgetting has long been a challenge for neural networks, and it affects LLMs as well. When LLMs are tuned on new data, previously learned knowledge is likely to be damaged. For example, fine-tuning LLMs for specific tasks affects their general abilities. A similar situation occurs when LLMs are aligned with human values, which is called the alignment tax. It is therefore necessary to consider extending the existing architecture with more flexible mechanisms or modules that effectively support data updates and task specialization.

Model training: In practice, pre-training capable LLMs is very difficult because of the huge compute consumption and the sensitivity to data quality and training techniques. Taking into account factors such as model effectiveness, efficiency optimization, and training stability, it becomes particularly important to develop more systematic and economical pre-training methods for optimizing LLMs. More model checking or performance diagnosis methods (such as predictable scaling in GPT-4) should be developed to catch anomalies early in training. More flexible hardware support or resource scheduling mechanisms are also needed to better organize and utilize the resources in computing clusters. Since pre-training LLMs from scratch is expensive, suitable mechanisms must be designed to continually pre-train or fine-tune LLMs based on publicly available model checkpoints (e.g., LLaMA and Flan-T5). To do this, several technical issues must be addressed, including data inconsistency, catastrophic forgetting, and task specialization. To date, there is still a lack of open source model checkpoints for LLMs that are reproducible, with complete preprocessing and training logs (e.g., scripts to prepare pre-training data). Providing more open source models for LLM research would be extremely valuable. In addition, it is important to develop better tuning strategies and to study mechanisms that effectively elicit model abilities.

Model usage: Since fine-tuning is expensive in real-world applications, prompting has become the prominent way to use LLMs. By combining task descriptions and demonstration examples into prompts, in-context learning (a special form of prompting) gives LLMs good performance on new tasks, even outperforming full-data fine-tuned models in some cases. In addition, to improve complex reasoning, advanced prompting techniques have been proposed, such as the chain-of-thought (CoT) strategy, which incorporates intermediate reasoning steps into prompts. However, existing prompting approaches still have the following shortcomings. First, designing prompts requires considerable human effort, so automatically generating effective prompts for various tasks would be very useful. Second, some complex tasks (such as formal proofs and numerical computation) require specific knowledge or logical rules that may not be well described in natural language or demonstrated by examples, so it is important to develop prompting methods that are more informative and support more flexible task formatting. Third, existing prompting strategies mainly focus on single-turn performance, so it is very useful to develop interactive prompting mechanisms for solving complex tasks (such as through natural language conversations), as ChatGPT has demonstrated.

Safety and alignment: Despite their considerable capabilities, LLMs suffer from safety issues similar to those of small language models. For example, LLMs show a tendency to generate hallucinated text, that is, text that appears plausible but may not correspond to fact. Worse, LLMs can be prompted by intentional instructions to produce harmful, biased, or toxic text for malicious systems, leading to potential risks of misuse. For a detailed discussion of other safety issues with LLMs (such as privacy, over-reliance, disinformation, and influence operations), readers are referred to the GPT-3/4 technical reports. As the main method for avoiding these problems, reinforcement learning from human feedback (RLHF) has been widely used; it incorporates humans into the training loop to develop well-aligned LLMs. To improve model safety, it is also important to include safety-related prompts during the RLHF process, as shown in GPT-4. However, RLHF relies heavily on high-quality human feedback data from professional labelers, making it difficult to implement properly in practice. It is therefore necessary to improve the RLHF framework to reduce the work of human labelers and to seek more efficient annotation methods that guarantee data quality. For example, LLMs can be employed to assist with annotation. Recently, red teaming has been adopted to improve the safety of LLMs; it uses collected adversarial prompts to refine LLMs (i.e., to make them resistant to red teaming attacks). It is also meaningful to establish a learning mechanism in which LLMs learn through communication with humans, so that feedback given through chat can be used directly by LLMs for self-improvement.

Applications and ecosystem: Since LLMs show strong capabilities in solving a variety of tasks, they can be used in a wide range of real-world applications (e.g., following specific natural language instructions). As a significant advance, ChatGPT has the potential to change the way humans access information, which has led to the release of the new Bing. In the near future, LLMs can be expected to have a significant impact on information-seeking technology, including search engines and recommender systems. In addition, the development and use of intelligent information assistants will be greatly promoted as LLM technology improves. On a broader scale, this wave of technological innovation tends to build an ecosystem of applications powered by LLMs (e.g., ChatGPT’s support for plug-ins) that will be closely tied to human life. Finally, the rise of LLMs provides inspiration for the exploration of artificial general intelligence (AGI). It holds the promise of developing more intelligent systems (potentially with multimodal signals) than ever before. At the same time, AI safety should be one of the primary concerns in this development process, so that artificial intelligence brings benefit rather than harm to humanity.

