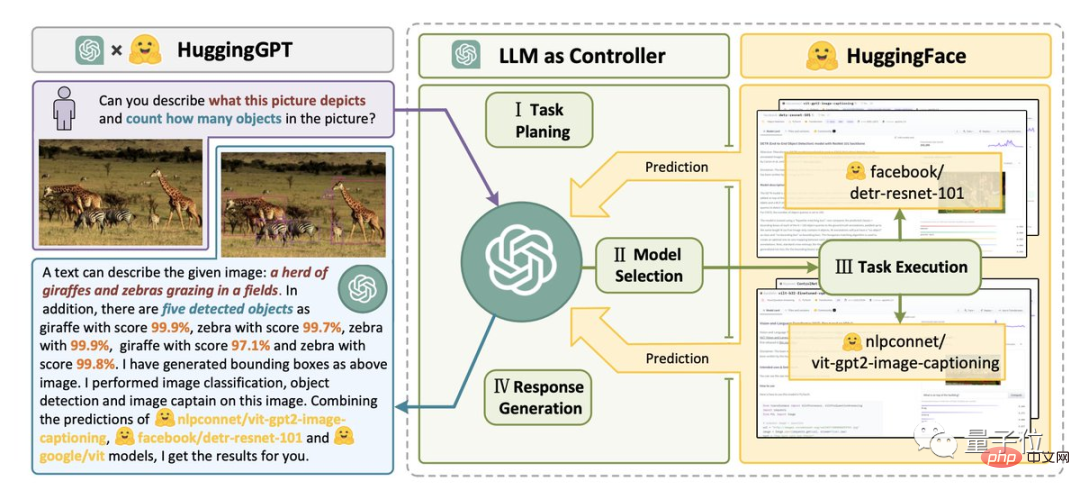

The strongest combination, HuggingFace plus ChatGPT, is here: HuggingGPT!

Just give it an AI task, such as "What animals are in the picture below, and how many of each type are there?"

It automatically works out which AI models are needed, then calls the corresponding models on HuggingFace to carry out the task for you.

Throughout the process, all you have to do is state your requirements in natural language.

This joint work by Zhejiang University and Microsoft Research Asia became popular as soon as it was released.

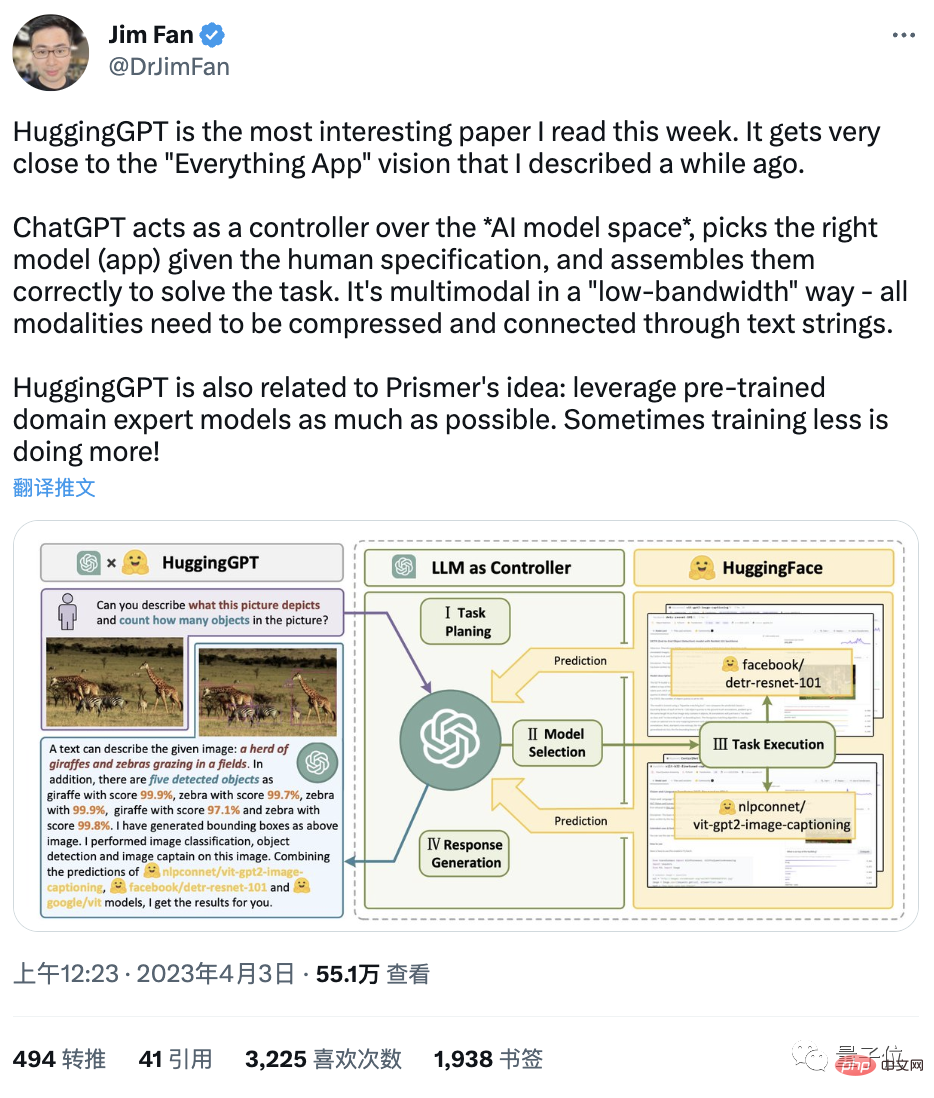

NVIDIA AI research scientist Jim Fan said directly:

This is the most interesting paper I have read this week. Its idea is very close to the "Everything App" (everything is an app, and AI reads the information directly).

One netizen slapped his thigh and exclaimed:

Isn't this just ChatGPT as a "package caller", someone who only calls existing libraries?

AI is evolving at breakneck speed; please leave some work for the rest of us...

So, what exactly is going on?

In fact, seeing this combination as merely a "package caller" sells it short.

Its real ambition is AGI.

As the authors put it, a key step towards AGI is the ability to solve complex AI tasks that span different domains and modalities.

Current results are still far from this: most models can only perform one specific task well.

However, the performance of large language models (LLMs) in language understanding, generation, interaction and reasoning led the authors to this idea:

LLMs can act as intermediate controllers that manage all existing AI models, solving complex AI tasks by mobilizing and combining everyone's strengths.

In this system, language is the universal interface.

So, HuggingGPT was born.

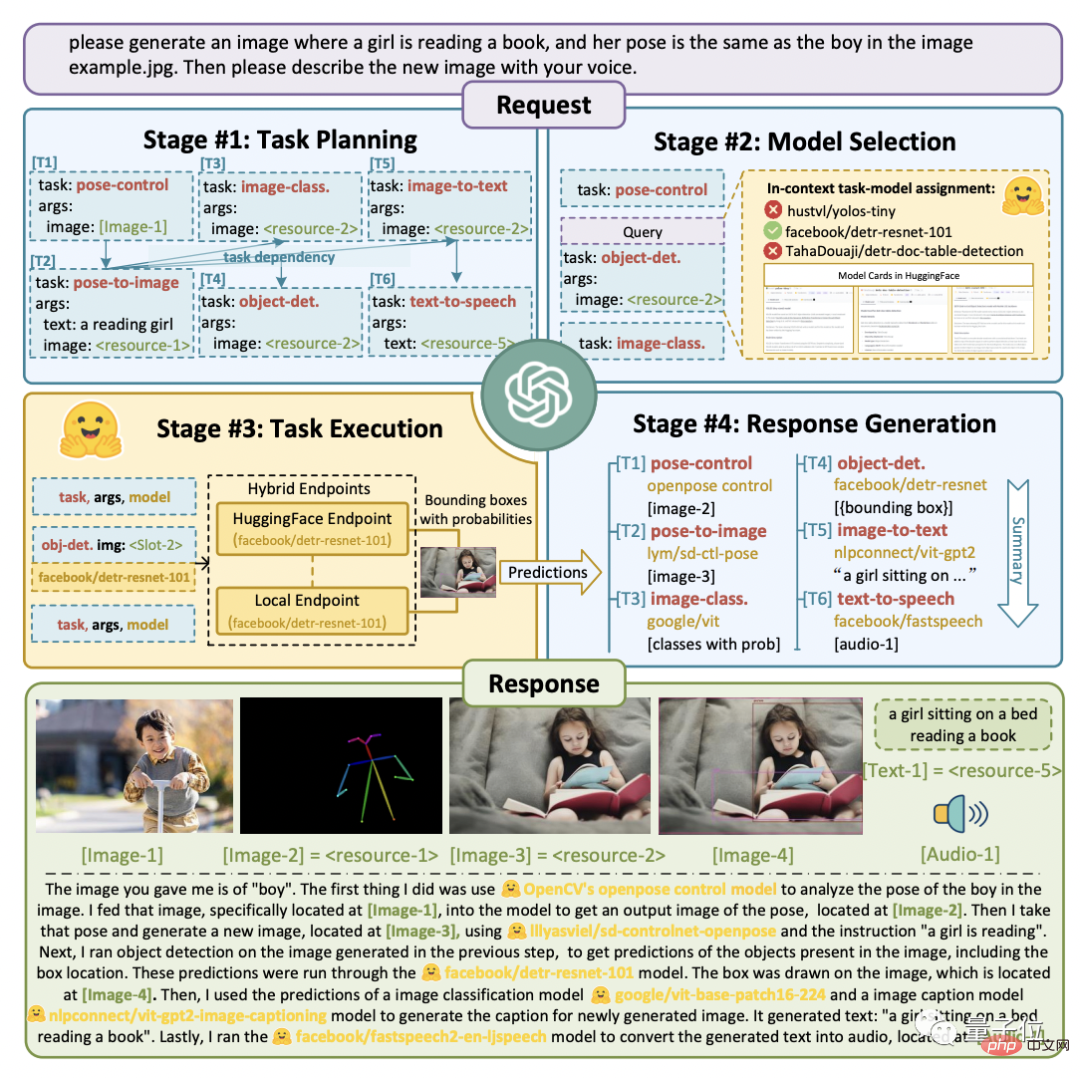

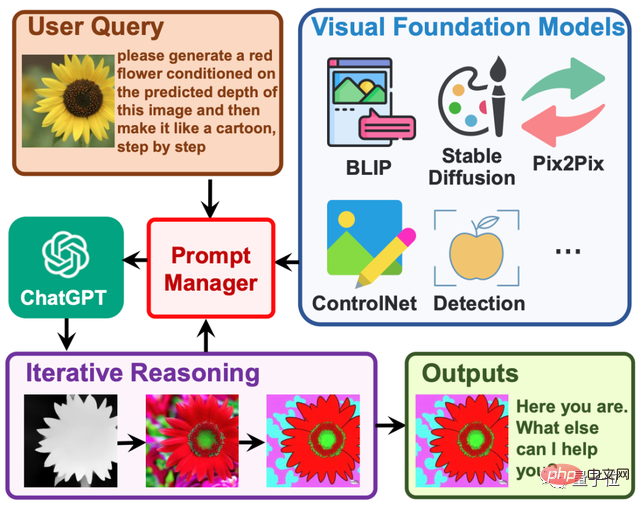

Its workflow is divided into four steps:

First, task planning. ChatGPT parses the user's request into a task list and determines the execution order and resource dependencies between tasks.

Second, model selection. ChatGPT assigns a suitable model to each task based on the descriptions of the expert models hosted on HuggingFace.

Third, task execution. The selected expert models run on hybrid endpoints (local inference and HuggingFace-hosted inference), execute their assigned tasks according to the task order and dependencies, and return the execution information and results to ChatGPT.

Finally, result output. ChatGPT summarizes the execution logs and inference results of each model and gives the final output.
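The four steps above can be sketched roughly as the following pipeline. This is only an illustrative sketch, not the project's actual code: every function name, the `Task` structure, the `MODEL_CATALOG` entries and the stubbed outputs are assumptions made for the example, and the places where a real system would call the LLM or a model endpoint are replaced with simple stand-ins.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    id: int
    kind: str                                  # e.g. "object-detection"
    args: dict
    deps: list = field(default_factory=list)   # ids of tasks this one waits on


# Hypothetical catalog: model name -> description (stand-in for model cards).
MODEL_CATALOG = {
    "demo/object-detector": "object-detection model that finds animals in images",
    "demo/captioner": "image-to-text model that writes a caption for an image",
}


def plan_tasks(request: str) -> list[Task]:
    """Step 1 (stub): a real system would ask the LLM to parse the request."""
    return [Task(id=0, kind="object-detection", args={"image": "zoo.jpg"})]


def select_model(task: Task) -> str:
    """Step 2 (stub): pick the first model whose description mentions the task kind."""
    for name, description in MODEL_CATALOG.items():
        if task.kind in description:
            return name
    raise LookupError(f"no model found for task kind {task.kind!r}")


def execute(task: Task, model: str) -> dict:
    """Step 3 (stub): a real system would call a local or hosted endpoint here."""
    made_up_output = {"zebra": 2, "giraffe": 1}  # placeholder, not real inference
    return {"task": task.id, "model": model, "output": made_up_output}


def summarize(request: str, results: list[dict]) -> str:
    """Step 4 (stub): a real system would hand logs and results back to the LLM."""
    parts = [f"{r['model']} returned {r['output']}" for r in results]
    return f"Request: {request!r}. " + "; ".join(parts)


def run(request: str) -> str:
    tasks = plan_tasks(request)
    results = [execute(task, select_model(task)) for task in tasks]
    return summarize(request, results)


if __name__ == "__main__":
    print(run("What animals are in the picture, and how many of each?"))
```

The point of the sketch is the division of labor: the language model only plans, picks, and summarizes, while the actual perception or generation work is delegated to specialist models.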

For example, suppose we give it this request:

Please generate a picture of a girl reading a book, with the same posture as the boy in example.jpg. Then describe the new image with your voice.

HuggingGPT decomposes it into 6 subtasks and selects a model to execute each one, producing the final result.
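To make the idea of resource dependencies concrete, here is a minimal sketch of running subtasks in dependency order, with each task receiving the outputs of the tasks it depends on. The four task names are a simplified, hypothetical chain chosen for illustration; they are not the six subtasks HuggingGPT actually produces, and the "execution" is a string-building stub rather than a model call.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical subtasks: task name -> names of tasks whose outputs it needs.
TASKS = {
    "pose-detection": [],
    "pose-to-image": ["pose-detection"],
    "image-to-text": ["pose-to-image"],
    "text-to-speech": ["image-to-text"],
}


def run_in_dependency_order(tasks: dict) -> dict:
    """Run each task only after all of its dependencies have produced output."""
    outputs = {}
    for name in TopologicalSorter(tasks).static_order():
        inputs = [outputs[dep] for dep in tasks[name]]
        # Stub execution: record which inputs the task consumed.
        outputs[name] = f"{name}({', '.join(inputs) or 'user input'})"
    return outputs


if __name__ == "__main__":
    for task_name, result in run_in_dependency_order(TASKS).items():
        print(task_name, "->", result)
```

Because a task's inputs are looked up from earlier outputs, a dependency that has not run yet would raise a `KeyError`; the topological order is what guarantees this never happens.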

How well does it actually work?

The authors ran experiments using gpt-3.5-turbo and text-davinci-003, two variants that are publicly accessible through the OpenAI API.

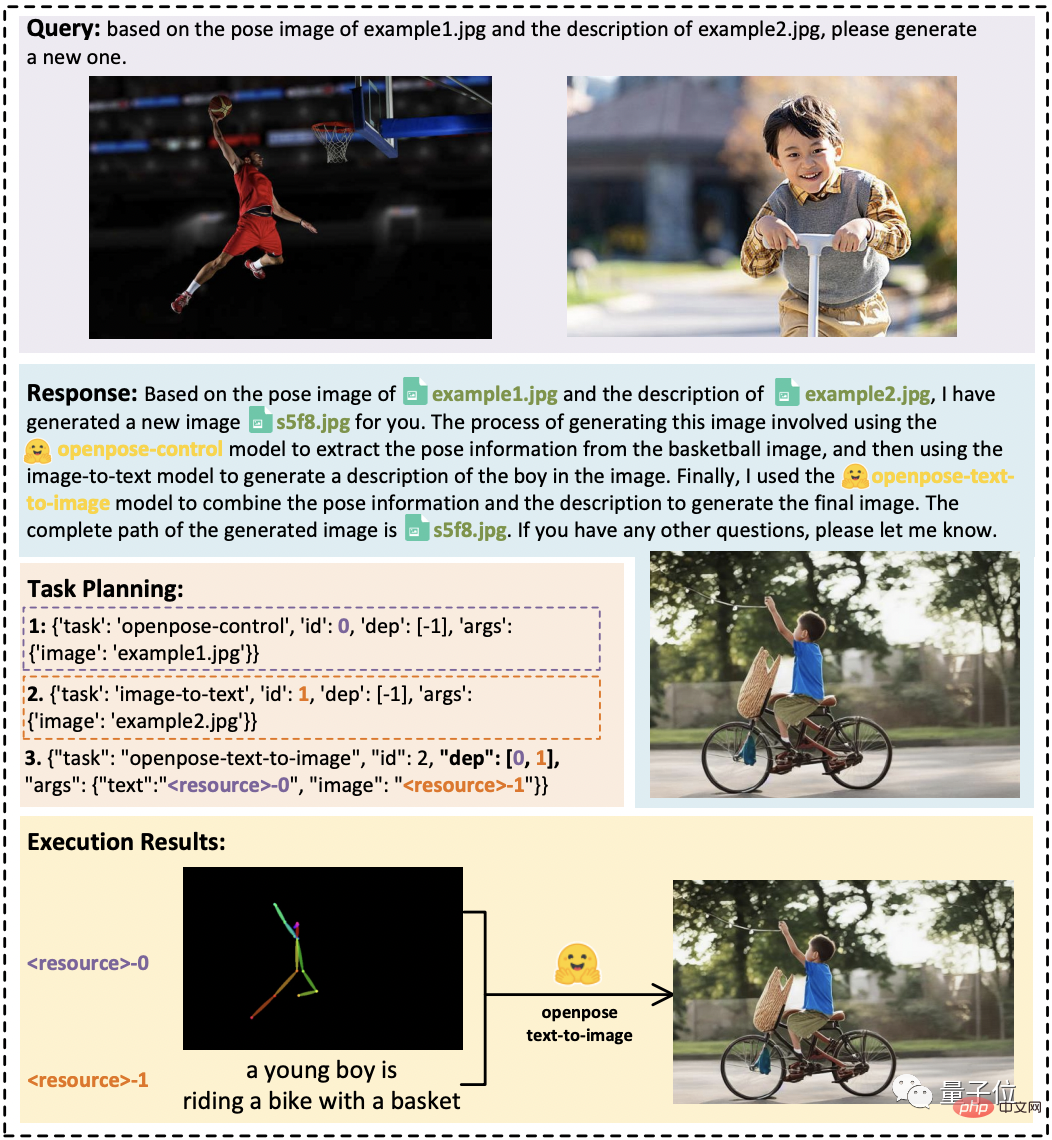

As shown in the figure below:

When there are resource dependencies between tasks, HuggingGPT correctly parses the specific tasks from the user's abstract request and completes the image conversion.

In audio and video tasks, it also demonstrates the ability to organize cooperation between models: by executing two models in parallel and in series, respectively, it completed a video with voice-over of "Astronauts Walking in Space".
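One way to picture the mix of parallel and serial execution is to group tasks into batches: everything in a batch can run at the same time, and batches run one after another. The sketch below assumes a hypothetical three-task graph (the task names, including the final combining step, are invented for illustration) and only computes the batches; it does not run any models.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def parallel_batches(deps: dict) -> list:
    """Group tasks into batches; tasks within one batch have no pending dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output stable
        batches.append(ready)
        sorter.done(*ready)                 # mark the whole batch as finished
    return batches


# Hypothetical graph: task name -> names of tasks it must wait for.
DEPS = {
    "text-to-video": set(),
    "text-to-speech": set(),
    "combine-video-and-audio": {"text-to-video", "text-to-speech"},
}

if __name__ == "__main__":
    print(parallel_batches(DEPS))
```

Here the two generation tasks land in the first batch because neither depends on the other, while the combining step has to wait for both.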

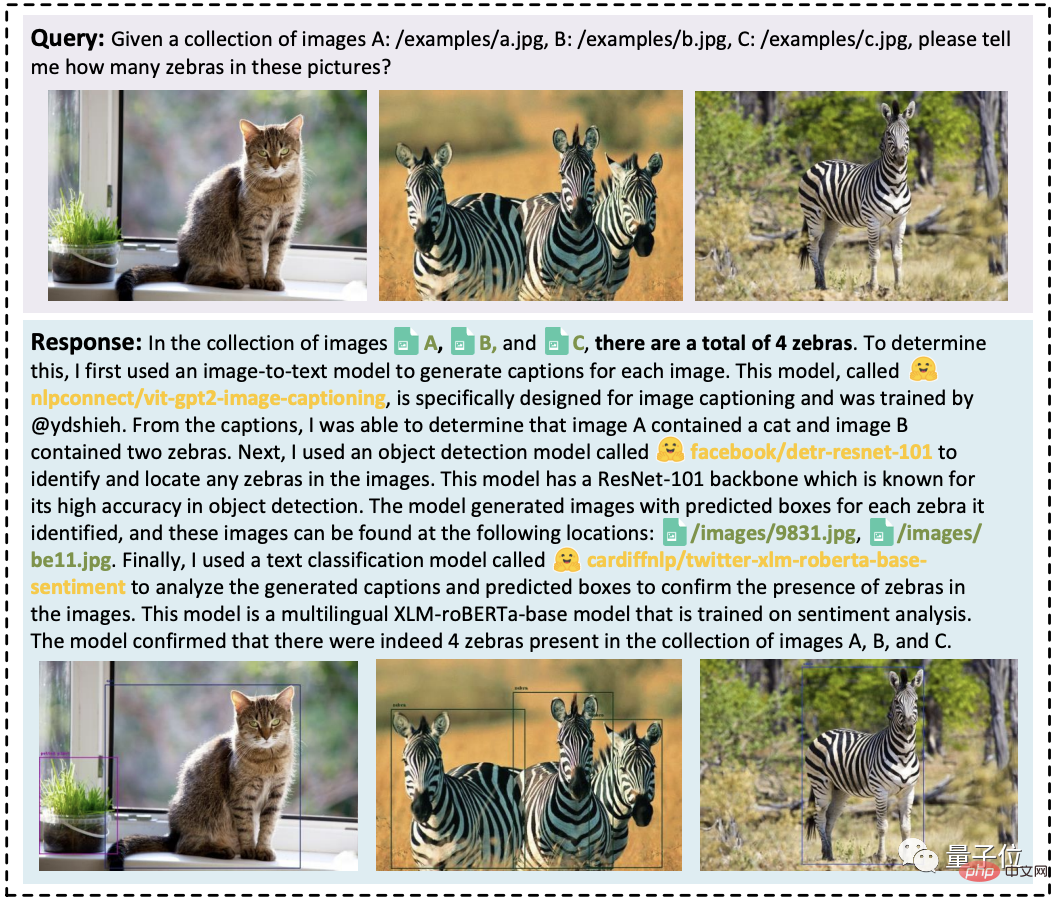

In addition, it can integrate multiple user-supplied input resources to perform simple reasoning, such as counting how many zebras appear across three pictures.

In one sentence: HuggingGPT performs well on many forms of complex tasks.

The HuggingGPT paper has been released and the project is still under construction. Only part of the code has been open-sourced so far, and it has already received 1.4k stars.

Interestingly, the project is not named HuggingGPT but JARVIS, after the AI butler in Iron Man.

Some have pointed out that its idea closely resembles Visual ChatGPT, released in March: HuggingGPT mainly expands the range of callable models, in both number and type.

Indeed, the two share a common origin: Microsoft Research Asia (MSRA).

Specifically, the first author of Visual ChatGPT is MSRA senior researcher Wu Chenfei, and the corresponding author is MSRA chief researcher Duan Nan.

HuggingGPT has two co-first authors:

Shen Yongliang, from Zhejiang University, who completed this work during his internship at MSRA;

Song Kaitao, a researcher at MSRA.

The corresponding author is Zhuang Yueting, a professor in the Department of Computer Science at Zhejiang University.

Finally, netizens were very excited about the arrival of this powerful new tool. Some said:

ChatGPT has become the commander-in-chief of all the AI that humans have created.

Others believe:

AGI may not be a single LLM, but multiple interrelated models connected by an "intermediary" LLM.

So, have we entered the era of "semi-AGI"?

Paper address: https://www.php.cn/link/1ecdec353419f6d7e30857d00d0312d1

Project link: https://www.php.cn/link/859555c74e9afd45ab771c615c1e49a6

Reference link: https://www.php.cn/link/62d2b7ba91f34c0ac08aa11c359a8d2c
