Re-examining AI: discovering and proving the emergence of symbolic concepts in neural networks

This article uses two recent works to discuss the emergence of symbolic concepts in neural networks, that is, the question of whether the representation of a deep neural network is symbolic. If we set aside the perspective of "improving applied technology" and re-examine AI from the perspective of "scientific development", demonstrating that symbols emerge in AI models is of great significance.

1. First, most current interpretability research tries to explain a neural network as a "clear", "semantic", or "logical" model. However, if the emergence of symbols in neural networks cannot be proven, and if the intrinsic representation of a neural network really does contain many chaotic components, then most interpretability research loses its factual basis.

2. Second, if the emergence of symbols in neural networks cannot be proven, the development of deep learning will most likely remain confined to peripheral factors such as structure, loss function, and data, and will be unable to realize interactive learning directly at the knowledge level, starting from high-level cognition. Progress in this direction requires cleaner and clearer theoretical support.

This article therefore approaches the topic from the following three aspects.

1. How to define the symbolic concepts modeled by a neural network, so that the emergence of symbols in neural networks can be discovered reliably.

2. Why the quantified symbolic concepts can be considered credible concepts (sparsity, universal matching of the network's representation, transferability, classifiability, and the ability to explain earlier interpretability indicators).

3. How to prove the emergence of symbolic concepts, that is, to prove theoretically that under certain (not harsh) conditions the representation logic of an AI model can be decomposed into the classification utilities of a very small number of transferable symbolic concepts (this part will be discussed publicly at the end of April).

Paper address: https://arxiv.org/pdf/2111.06206.pdf

Paper address: https://arxiv.org/pdf/2302.13080.pdf

The authors of the study include Li Mingjie, a second-year master's student at Shanghai Jiao Tong University, and Ren Jie, a third-year doctoral student at Shanghai Jiao Tong University. Both study under Zhang Quanshi, whose laboratory has worked on the interpretability of neural networks for years. Interpretability can be approached from different angles, such as explaining representations or explaining performance, and the existing approaches range from relatively reliable and reasonable to unreasonable. Looking deeper, however, there are two fundamental visions for explaining neural networks: "Can the concepts modeled by a neural network be expressed clearly and rigorously?" and "Can the factors that determine the performance of a neural network be explained accurately?"

In the direction of "explaining the concepts modeled by neural networks", every researcher must face a core issue: whether the representation is symbolic and conceptual. If the answer to this question is unclear, subsequent research is difficult to carry out. If the representation of the neural network is itself chaotic, and the researcher then forces a set of "symbolic concepts" or "causal logic" onto it as an explanation, is that not heading in the wrong direction? The assumption that neural networks have a symbolic representation is the basis for in-depth research in this field, yet it is often hard to know where to begin in demonstrating it.

Most researchers' first instinct about neural networks is "It can't be symbolic, right?" Neural networks are not graphical models, after all. The paper "Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead" [3] by Cynthia Rudin takes the view, mistaken in our opinion, that post-hoc explanation of neural networks is inherently unreliable.

So is the internal representation of a neural network really chaotic, rather than clear, sparse, and symbolic? Focusing on this issue, we defined game interactions [4, 5], proved the bottleneck of neural network representation [6], studied the characteristics of how neural networks represent visual concepts [7, 8], established the relationship between interaction concepts and the transferability and robustness of neural networks [9, 10, 11, 12], and improved the Shapley value [13]. However, this early work in the laboratory only explored the periphery of the core question of "symbolic representation" and was never able to examine directly whether the neural network representation is symbolic.

Let us state the conclusion first: in most cases, the representation of a neural network is clear, sparse, and symbolic. A considerable amount of theoretical proof and experimental demonstration stands behind this conclusion. On the theoretical side, our current research has proven some properties that support "symbolization", but the current proofs are not yet enough to give a rigorous and definitive answer on "symbolic representation". In the next few months, we will provide more rigorous and comprehensive proofs.

How to define the concept modeled by the neural network

Before analyzing a neural network, we need to clarify how to define the concepts modeled by the network. There have been relevant studies on this issue before [14, 15], with fairly good experimental results. However, we believe that the definition of a "concept" should come with a theoretically rigorous mathematical guarantee.

Therefore, in paper [1] we defined the index I(S) to quantify the utility of a concept S for the network output, where S is the set of all input variables that make up the concept. For example, given a neural network and an input sentence x = "I think he is a green hand.", each word can be regarded as one input variable of the network, and the three words "a", "green", and "hand" can constitute a potential concept S = {a, green, hand}. Each concept S represents an "AND" relationship between the input variables in S: the concept is triggered if and only if all input variables in S are present, in which case it contributes the utility I(S) to the network output. When any variable in S is occluded, the utility I(S) is removed from the original network output. For example, for the concept S = {a, green, hand}, if the word "hand" in the input sentence is occluded, the concept is not triggered, and the network output no longer contains its utility I(S).
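The article does not reproduce the formula for I(S). As a hedged sketch only, one definition that matches the "AND" behaviour described above is the Harsanyi dividend from cooperative game theory. The notation v(x_T) is an assumption introduced here: it stands for the network output when only the variables in T are kept and all others are occluded.

```latex
I(S) \;=\; \sum_{T \subseteq S} (-1)^{|S| - |T|}\, v(x_T)

% Worked instance for S = \{a, green, hand\}, abbreviated \{a, g, h\}:
I(\{a,g,h\}) = v(x_{\{a,g,h\}})
             - v(x_{\{a,g\}}) - v(x_{\{a,h\}}) - v(x_{\{g,h\}})
             + v(x_{\{a\}}) + v(x_{\{g\}}) + v(x_{\{h\}})
             - v(x_{\emptyset})
```

Under this assumed form, I(S) measures what the three words contribute together beyond everything already explained by their smaller sub-combinations.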

We prove that the neural network output can always be split into the sum of the utilities of all triggered concepts. That is, in theory, for a sample containing n input units there are at most $2^n$ different occlusion patterns, and we can always use the utilities of a small number of concepts to accurately fit the network's outputs on all $2^n$ occluded samples, which demonstrates the rigor of I(S). The figure below gives a simple example.

Further, we proved in paper [1] that I(S) satisfies 7 properties in game theory, which further illustrates the reliability of this indicator.
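The decomposition claim above can be checked numerically on a toy example. The following is a minimal sketch, not the authors' implementation: it assumes I(S) is computed as the Harsanyi dividend, and `toy_model` is a hypothetical stand-in for a network evaluated on occluded inputs. It verifies that the output on every one of the 2^n occlusion patterns equals the sum of the utilities of the triggered concepts.

```python
from itertools import combinations

def subsets(items):
    """Yield every subset of `items` as a tuple, from empty to full."""
    items = tuple(items)
    for r in range(len(items) + 1):
        yield from combinations(items, r)

def toy_model(kept):
    """Hypothetical network output when only the words in `kept` are visible."""
    kept = set(kept)
    out = 0.5                                  # output when everything is occluded
    if "green" in kept:
        out += 1.0
    if {"green", "hand"} <= kept:
        out += 2.0
    if {"a", "green", "hand"} <= kept:
        out -= 0.75
    return out

def interactions(model, variables):
    """Assumed definition: I(S) = sum over T in S of (-1)^(|S|-|T|) * model(T)."""
    return {
        frozenset(S): sum((-1) ** (len(S) - len(T)) * model(T) for T in subsets(S))
        for S in subsets(variables)
    }

def reconstruct(inter, kept):
    """Sum the utilities of all concepts triggered by the visible words."""
    kept = frozenset(kept)
    return sum(u for S, u in inter.items() if S <= kept)

words = ("a", "green", "hand")
I = interactions(toy_model, words)

# Check the decomposition on all 2^n occlusion patterns.
for T in subsets(words):
    assert abs(reconstruct(I, T) - toy_model(T)) < 1e-9
print("decomposition holds on", 2 ** len(words), "occluded samples")
```

In this sketch the empty set carries the baseline output, and only four of the eight candidate concepts receive a non-zero utility, which mirrors the "small number of concepts" described in the text.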

In addition, we proved that the game interaction concept I(S) can explain the basic mechanism of many classic indicators in game theory, such as the Shapley value [16], the Shapley interaction index [17], and the Shapley-Taylor interaction index [18]. Specifically, each of these three indicators can be written as a different linear combination of interaction concepts.
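For the Shapley value, the linear-sum relationship can be illustrated with a known identity from cooperative game theory: each player's Shapley value equals the sum of I(S)/|S| over all sets S containing that player, when I(S) is the Harsanyi dividend. The sketch below assumes that definition of I(S); `toy_v` and the player names are hypothetical. It compares the identity against a brute-force Shapley computation over all orderings.

```python
from itertools import combinations, permutations

def subsets(items):
    """Yield every subset of `items` as a tuple."""
    items = tuple(items)
    for r in range(len(items) + 1):
        yield from combinations(items, r)

def harsanyi(v, players):
    """Assumed definition: I(S) = sum over T in S of (-1)^(|S|-|T|) * v(T)."""
    return {
        frozenset(S): sum(
            (-1) ** (len(S) - len(T)) * v(frozenset(T)) for T in subsets(S)
        )
        for S in subsets(players)
    }

def shapley_from_interactions(inter, players):
    """Shapley value as a linear sum: each I(S) is shared equally among S."""
    return {i: sum(u / len(S) for S, u in inter.items() if i in S) for i in players}

def shapley_by_permutations(v, players):
    """Reference computation: average marginal contribution over all orderings."""
    totals = {i: 0.0 for i in players}
    orders = list(permutations(players))
    for order in orders:
        seen = set()
        for i in order:
            before = v(frozenset(seen))
            seen.add(i)
            totals[i] += v(frozenset(seen)) - before
    return {i: t / len(orders) for i, t in totals.items()}

def toy_v(kept):
    """Hypothetical model output on the visible variables `kept`."""
    single = {"a": 1.0, "b": 2.0, "c": -1.0}
    out = sum(single[i] for i in kept)
    if {"a", "b"} <= kept:
        out += 3.0
    if {"a", "b", "c"} <= kept:
        out -= 1.5
    return out

players = ("a", "b", "c")
via_interactions = shapley_from_interactions(harsanyi(toy_v, players), players)
via_permutations = shapley_by_permutations(toy_v, players)
for i in players:
    assert abs(via_interactions[i] - via_permutations[i]) < 1e-9
print(via_interactions)
```

The same pattern, with different weights on I(S), would apply to the other two indices mentioned above; those weights are not given in this article and are not reproduced here.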

In fact, the research team's earlier work has already used game interaction concept indicators to define the optimal baseline value of the Shapley value [13] and to explore the "prototype visual concepts" modeled by visual neural networks and their "aesthetics" [8].

Whether the neural network models a clear, symbolic representation of concepts

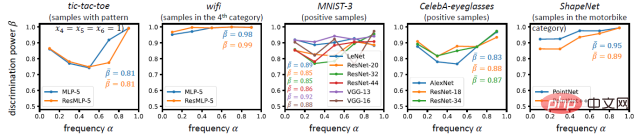

With this indicator, we further explore the core question raised above: can a neural network really distill clear, symbolic, conceptual representations from its training task? Does the interaction concept defined here really represent meaningful "knowledge", or is it just a contrived metric that is constructed purely mathematically and has no clear meaning? We answer this question from four aspects: a symbolic, conceptual representation should satisfy sparsity, transferability between samples, transferability between networks, and classifiability.

Requirement 1 (Concept sparsity): The concepts modeled by the neural network should be sparse

Unlike connectionism, a characteristic of symbolism is the hope of representing the knowledge learned by the network with a small number of sparse concepts, rather than with a large number of dense ones. In experiments, we found that among a large number of potential concepts, only a very small number are salient. That is, the interaction utility I(S) of most interaction concepts approaches 0 and can be ignored, and only a very small number of interaction concepts have a significant interaction utility I(S). The output of the neural network therefore depends on the interaction utilities of only a small number of concepts. In other words, the network's inference on each sample can be explained succinctly as the utility of a small number of salient concepts.
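The sparsity check described above can be sketched as a simple thresholding step. This is an illustration only: the threshold rule (a fraction `tau` of the largest absolute utility) and the interaction values are assumptions made for this example, not figures from the papers.

```python
def salient_concepts(interactions, tau=0.05):
    """Keep concepts whose |I(S)| is at least `tau` times the largest |I(S)|."""
    strongest = max(abs(u) for u in interactions.values())
    return {S: u for S, u in interactions.items() if abs(u) >= tau * strongest}

# Made-up interaction utilities for the sentence example (hypothetical values).
interactions = {
    frozenset({"green"}): 1.0,
    frozenset({"green", "hand"}): 2.0,
    frozenset({"a", "green", "hand"}): -0.75,
    frozenset({"a"}): 0.01,
    frozenset({"hand"}): -0.02,
    frozenset({"a", "green"}): 0.005,
    frozenset({"a", "hand"}): 0.0,
}

salient = salient_concepts(interactions)
full_output = sum(interactions.values())      # sum over all concepts
sparse_output = sum(salient.values())         # sum over salient concepts only

print(len(salient), "of", len(interactions), "concepts are salient")
print("approximation error:", abs(full_output - sparse_output))
```

If a representation is sparse in the sense described, the salient subset stays small while the approximation error remains close to zero.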

Requirement 2 (Transferability between samples): The concepts modeled by the neural network should be transferable between different samples

Satisfying sparsity on a single sample is far from enough. More importantly, these sparse concepts should transfer between different samples. If the same interaction concept appears in different samples, and if similar interaction concepts are always extracted from different samples, then the interaction concept is more likely to represent meaningful and general knowledge. Conversely, if most interaction concepts appear only in one or two specific samples, then interactions defined in this way are more likely to be a contrived metric with a mathematical definition but no physical meaning. In experiments, we found that for samples of the same category there is often a relatively small concept dictionary that explains most of the concepts the neural network models for those samples.

We also visualized some concepts and found that the same concept usually produces similar effects on different samples, which further verifies the transferability of concepts between samples.

Requirement 3 (Transferability between networks): There should be transferability between concepts modeled by different neural networks

Similarly, these concepts should be learned stably by different neural networks, whether the networks differ in initialization or in architecture. Neural networks can be designed with completely different architectures and can model features of different dimensions. Even so, if different networks facing the same task reach the same destination by different paths, that is, if they stably learn a similar set of interaction concepts, then we can regard this set of interaction concepts as the fundamental representation for the task. For example, if different face detection networks all model the interaction between the eyes, nose, and mouth, then we can regard such an interaction as more "essential" and "reliable". In experiments, we found that more salient concepts are more likely to be learned by different networks at the same time, and that a relatively high proportion of the salient interactions is modeled jointly by different neural networks.

Requirement 4 (Concept classifiability): The concepts modeled by the neural network should be classifiable

Finally, for classification tasks, if a concept has high classification ability, it should have a consistently positive effect (or a consistently negative effect) on the classification of most samples. Higher classifiability verifies that the concept can carry the classification task on its own, making it more likely to be a reliable concept rather than an immature intermediate feature. We designed experiments to verify this property as well and found that the concepts modeled by neural networks tend to have high classifiability.

To sum up, the four aspects above show that in most cases the representation of a neural network is clear, sparse, and symbolic. Of course, neural networks are not always able to model such clear, symbolic concepts. In a few extreme cases, neural networks cannot learn sparse and transferable concepts. For details, please see our paper [2].

Additionally, we use these interactions to explain large models [22].

The significance of the symbolic representation of neural networks in the interpretability of neural networks

1. From the perspective of the development of the interpretability field, the most direct significance is that it provides a basis for "explaining neural networks at the conceptual level". If the representation of a neural network is not itself symbolic, then explaining the network at the level of symbolic concepts can only scratch the surface. The resulting explanations will be specious and cannot substantively guide the further development of deep learning.

2. Since 2021, we have been gradually building a theoretical system based on game interactions. We found that game interactions allow us to explain two core issues in a unified way: "how to quantify the knowledge modeled by neural networks" and "how to explain the representation ability of neural networks". In the direction of "how to quantify the knowledge modeled by neural networks", in addition to the two works covered in this article, the research team's earlier work used game interaction concept indicators to define the optimal baseline value of the Shapley value [13] and to explore the "prototype visual concepts" modeled by visual neural networks and their "aesthetics" [7, 8].

3. In the direction of "how to explain the representation ability of neural networks", the research team proved the bottleneck of neural networks in representing different interactions [6], studied how the interaction concepts modeled by a neural network determine its generalization [12, 19], studied the relationship between the interaction concepts modeled by a neural network and its adversarial robustness and adversarial transferability [9, 10, 11, 20], and proved that Bayesian neural networks have more difficulty modeling complex interaction concepts [21].

For more reading, please refer to:

https://zhuanlan.zhihu.com/p/264871522/
