I created a voice chatbot powered by ChatGPT API, please follow the instructions

Today’s article focuses on building a private voice chatbot web application with the ChatGPT API. The goal is to explore more potential use cases and business opportunities for artificial intelligence. I'll guide you step by step through the development process so that you understand it and can replicate it on your own.

Why it’s needed

- Not everyone is comfortable with typing-based services. Think of kids who are still learning to write, or seniors who can’t read the words on the screen clearly. A voice-based AI chatbot solves this problem; my kid, for example, asks his voice chatbot to read him a bedtime story.

- Given the assistants currently available, such as Apple’s Siri and Amazon’s Alexa, adding voice interaction to the GPT model could open up a wider range of possibilities. The ChatGPT API's strength is its ability to produce coherent, context-aware responses, which, combined with voice-based smart home connectivity, could offer a wealth of business opportunities. The voice assistant we create in this article serves as an entry point.

Enough theory, let’s get started.

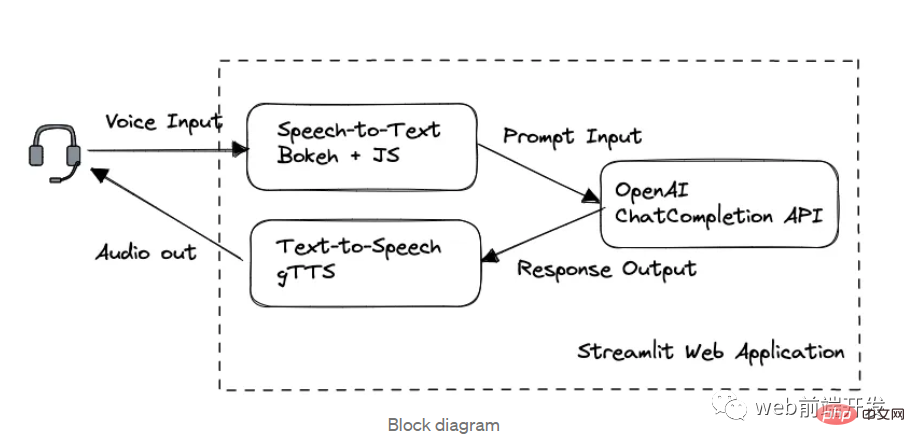

1. Block diagram

The application is divided into three key modules, in processing order:

- Speech to text with Bokeh and the Web Speech API

- Chat via the OpenAI GPT-3.5 API

- Text to speech with gTTS

The web framework is built with Streamlit.
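
To make the processing order concrete, here is a minimal sketch of one conversation turn passing through the three modules. The names `run_turn`, `speech_to_text`, `chat`, and `text_to_speech`, along with the stub implementations, are placeholders invented for illustration and are not part of the demo code.

```python
from typing import Callable


def run_turn(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    chat: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
) -> bytes:
    """Pass one user utterance through the three modules in processing order."""
    prompt = speech_to_text(audio)   # module 1: speech -> text
    reply = chat(prompt)             # module 2: text -> text
    return text_to_speech(reply)     # module 3: text -> speech


# Stub modules (hypothetical) so the sketch runs without a microphone or network.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_chat(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


spoken_reply = run_turn(b"hello", fake_stt, fake_chat, fake_tts)
```

In the real application each stub would be replaced by the corresponding module: the Web Speech API, the ChatCompletion call, and gTTS.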

If you already know how to use the OpenAI API with the GPT-3.5 model and how to build web applications with Streamlit, you can skip sections 2 and 3 to save reading time.

2. OpenAI GPT API

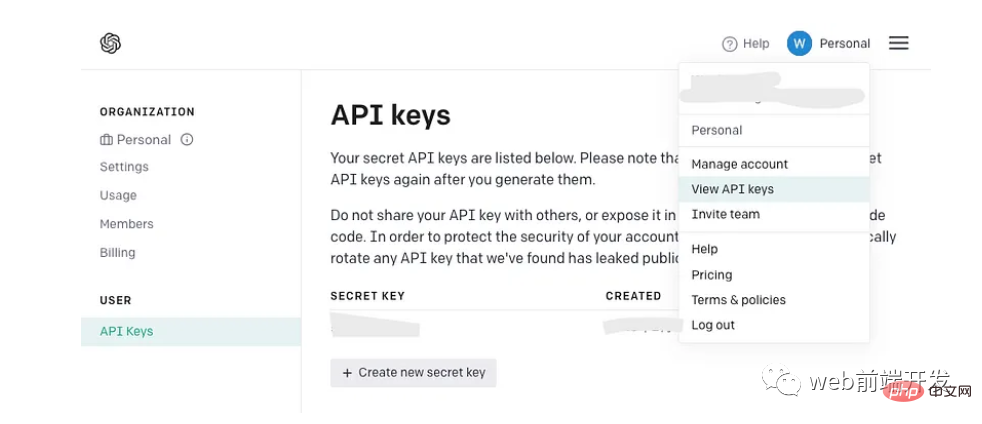

Get your API key

If you already have an OpenAI API key, stick with it instead of creating a new one. However, if you are new to OpenAI, sign up for an account and find the API keys page in your account menu.

After generating the API key, remember that it is shown only once, so copy it to a safe place for future use.
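
One way to keep the key out of your source files is to read it from an environment variable. The sketch below assumes the key is stored in a variable named `OPENAI_API_KEY`; the helper `load_api_key` is a hypothetical name, not something the demo code defines.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, or raise if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before starting the app.")
    return key
```

With this in place, the assignment `openai.api_key = load_api_key()` could replace a hard-coded key string.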

Use of ChatCompletion API

GPT-4 has just been released, but its API is not yet fully available, so I will stick with the GPT-3.5 model, which is enough to complete our AI voice chatbot demo.

Now let’s look at the simplest demo from OpenAI to understand the basic usage of the ChatCompletion API (also known as the gpt-3.5 API or ChatGPT API):

Install the package:

!pip install openai

If you previously installed the package to work with OpenAI's legacy GPT models, you may need to upgrade it via pip:

!pip install --upgrade openai

Create and send prompt:

import openai

complete = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Who won the world series in 2020?"},
        {"role": "assistant", "content": "The Los Angeles Dodgers won the World Series in 2020."},
        {"role": "user", "content": "Where was it played?"}
    ]
)

Receive the text response:

message = complete.choices[0].message.content
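
To see which part of the response that line reads, here is a small sketch that uses a plain dict as a stand-in for the response object. The helper `extract_reply` and the sample payload are made up for illustration; a real response carries more fields than the sample shows.

```python
def extract_reply(response: dict) -> str:
    """Return the assistant text from the first choice of a chat completion response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


# Mocked response, trimmed to the fields this sketch reads.
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "It was played in Arlington, Texas."},
            "finish_reason": "stop",
        }
    ]
}

reply = extract_reply(sample_response)
```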

Because the GPT-3.5 API is a chat-based text completion API, make sure the message body of the ChatCompletion request contains the conversation history as context if you want the model to give a more contextual response to your current request.

To implement this, the list of message objects should be organized in the following order:

- The system message, at the top of the list, sets the chatbot's behavior through the instructions in its content. Note that this feature is not yet fully supported in gpt-3.5-turbo-0301.

- User messages represent user input or queries, while assistant messages hold the corresponding responses from the GPT-3.5 API. These paired exchanges give the model a reference for context.

- The last user message is the prompt being requested at the current moment.
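
The ordering above can be sketched as a small helper that assembles the list. The function name `build_messages` and the representation of history as (user, assistant) pairs are assumptions made for this example.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    history: List[Tuple[str, str]],
    current_prompt: str,
) -> List[Message]:
    """Assemble messages as: system, then user/assistant pairs, then the new user prompt."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": current_prompt})
    return messages


messages = build_messages(
    "You are a helpful assistant.",
    [("Who won the world series in 2020?",
      "The Los Angeles Dodgers won the World Series in 2020.")],
    "Where was it played?",
)
```

The resulting list has the same shape as the `messages` argument in the request shown earlier.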

3. Web Development

We will use the Streamlit library to build the web application.

Streamlit is an open-source framework that lets data scientists and developers quickly build and share interactive web applications for machine learning and data science projects. It also provides a range of widgets, each of which can be created with a single line of Python code, such as st.table(...).

If, like me, you are not an expert in web development and don't intend to build a large commercial application, Streamlit is one of your best choices because it requires almost no knowledge of HTML.

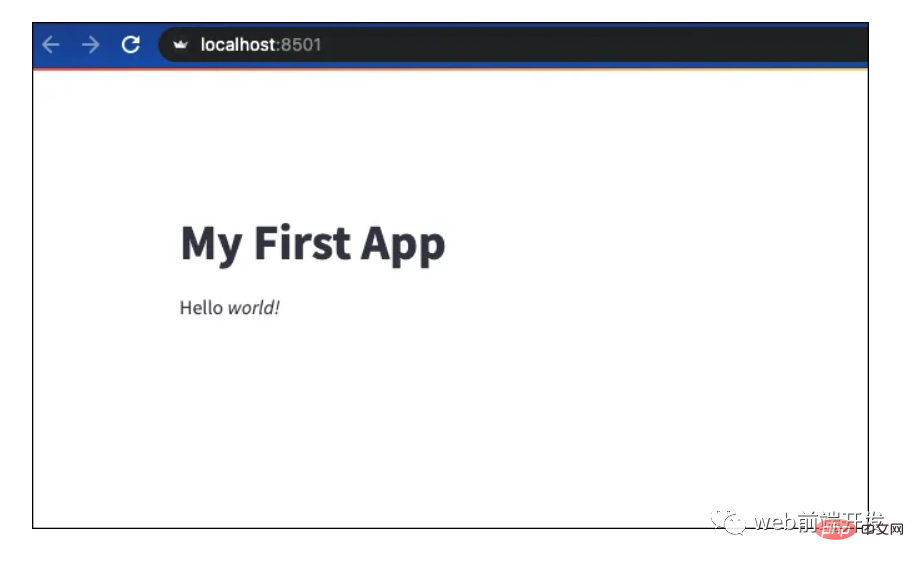

Let’s look at a quick example of building a Streamlit web application:

Install the package:

!pip install streamlit

Create a Python file “demo.py”:

import streamlit as st

st.write("""
# My First App
Hello *world!*
""")

Run it on the local machine or a remote server:

!python -m streamlit run demo.py

Once the following output is printed, you can access your website at the address and port listed:

You can now view your Streamlit app in your browser.
Network URL: http://xxx.xxx.xxx.xxx:8501
External URL: http://xxx.xxx.xxx.xxx:8501

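Usage for all the widgets Streamlit provides can be found on its documentation page: https://docs.streamlit.io/library/api-reference

4. Speech-to-text implementation

One of the main features of this AI voice chatbot is its ability to recognize the user's speech and turn it into text that our ChatCompletion API call can use as input.

The high-quality speech recognition offered by OpenAI's Whisper API is a good option, but it comes at a cost. Alternatively, the free Web Speech API in JavaScript offers reliable multilingual support and impressive performance.

Although developing a Python project may seem incompatible with custom JavaScript, don't worry! In the next section I'll introduce a simple technique for calling JavaScript code from a Python program.

Either way, let's see how to quickly develop a speech-to-text demo with the Web Speech API. Its documentation is available at https://wicg.github.io/speech-api/.

Speech recognition can be implemented easily, as follows.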

var recognition = new webkitSpeechRecognition();
recognition.continuous = false;
recognition.interimResults = true;
recognition.lang = 'en';
recognition.start();

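After initializing the recognition object with the webkitSpeechRecognition() constructor, a few useful properties need to be defined. The continuous property indicates whether you want the SpeechRecognition object to keep working after one pass of speech input has been processed successfully.

I set it to false because I want the voice chatbot to generate each answer from the user's speech input at a steady pace.

Setting interimResults to true produces intermediate results while the user is speaking, so users can watch the text from their speech input update dynamically.

The lang property sets the language of the recognition request. Note that if it is not set in code, the default language comes from the HTML document's root element and its associated hierarchy, so users with different language settings on their systems may have different experiences.

The recognition object has several events. We use the .onresult callback to handle the text produced by both interim and final results: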

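recognition.onresult = function (e) {
    // Initialize both strings so that += never starts from undefined
    var value = "", value2 = "";
    var rand = 0;
    for (var i = e.resultIndex; i < e.results.length; ++i) {
        if (e.results[i].isFinal) {
            // Final result: accumulate it and tag it with a fresh random token
            value += e.results[i][0].transcript;
            rand = Math.random();
        } else {
            // Interim result: text recognized so far while the user is still speaking
            value2 += e.results[i][0].transcript;
        }
    }
}

5. Introducing the Bokeh library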

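For the user interface, we want a button that starts the speech recognition we implemented in JavaScript in the previous section.

Streamlit's standard widgets don't run custom JS code, so we bring in Bokeh. Bokeh is another powerful Python data visualization tool. One of its best features for our demo is the ability to embed custom JavaScript code, which means we can run our speech recognition script under Bokeh's button widget.

To do this, install the Bokeh package. To stay compatible with the streamlit-bokeh-events library mentioned later, the Bokeh version should be 2.4.2: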

!pip install bokeh==2.4.2

Import Button and CustomJS:

from bokeh.models.widgets import Button
from bokeh.models import CustomJS

Create the button widget:

spk_button = Button(label='SPEAK', button_type='success')

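Define the button click event:

spk_button.js_on_event("button_click", CustomJS(code="""
    ...js code...
"""))

The .js_on_event() method registers an event on spk_button.

In this case we register the "button_click" event, which, once the user clicks the button, triggers execution of the JS code block (...js code...) embedded through CustomJS().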

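Streamlit Bokeh Events

With the speak button and its callback implemented, the next step is to connect the Bokeh event output (the recognized text) to the other functional blocks so that the prompt text can be sent to the ChatGPT API.

Fortunately, an open-source project called "Streamlit Bokeh Events" is designed for exactly this purpose. It provides bidirectional communication with Bokeh widgets, and the project is hosted on GitHub.

The library is very simple to use. First install the package:

!pip install streamlit-bokeh-events

Create the result object with the streamlit_bokeh_events function:

from streamlit_bokeh_events import streamlit_bokeh_events

result = streamlit_bokeh_events(
    bokeh_plot=spk_button,
    events="GET_TEXT,GET_ONREC,GET_INTRM",
    key="listen",
    refresh_on_update=False,
    override_height=75,
    debounce_time=0)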

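Use the bokeh_plot argument to register the spk_button we created in the previous section. Use the events argument to list several custom HTML document events:

- GET_TEXT receives the final recognized text

- GET_INTRM receives the interim recognized text

- GET_ONREC receives the speech processing stage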
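
As a rough sketch of how these three events might be consumed on the Python side, the example below treats `result` as a dict keyed by event name, which matches how the snippets in this article read it with `result.get(...)`. The helper `summarize_events` is hypothetical, and the payload shapes (an object with `t` and `s` for GET_TEXT, a plain string for the other two) follow the article's JS code.

```python
EVENTS = "GET_TEXT,GET_ONREC,GET_INTRM"


def summarize_events(result):
    """Return one readable line per known event found in result, in declaration order."""
    lines = []
    if not result:  # nothing has been dispatched yet
        return lines
    for name in EVENTS.split(","):
        if name not in result:
            continue
        detail = result.get(name)
        if name == "GET_TEXT":
            lines.append(f"final text: {detail['t']}")
        elif name == "GET_ONREC":
            lines.append(f"stage: {detail}")
        elif name == "GET_INTRM":
            lines.append(f"interim text: {detail}")
    return lines


sample = {"GET_ONREC": "running", "GET_INTRM": "tell me a"}
summary = summarize_events(sample)
```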

We can generate events with the JS function document.dispatchEvent(new CustomEvent(…)), for example the GET_TEXT and GET_INTRM events:

spk_button.js_on_event("button_click", CustomJS(code="""
    var value = "";
    var rand = 0;
    var recognition = new webkitSpeechRecognition();
    recognition.continuous = false;
    recognition.interimResults = true;
    recognition.lang = 'en';
    // The result loop must run inside the onresult callback, where e is defined
    recognition.onresult = function (e) {
        var value2 = "";
        for (var i = e.resultIndex; i < e.results.length; ++i) {
            if (e.results[i].isFinal) {
                value += e.results[i][0].transcript;
                rand = Math.random();
            } else {
                value2 += e.results[i][0].transcript;
            }
        }
        document.dispatchEvent(new CustomEvent("GET_TEXT", {detail: {t:value, s:rand}}));
        document.dispatchEvent(new CustomEvent("GET_INTRM", {detail: value2}));
    }
    recognition.start();
"""))

Then use the result.get() method to handle the GET_INTRM event, for example:

tr = st.empty()
if result:
    if "GET_INTRM" in result:
        if result.get("GET_INTRM") != '':
            tr.text_area("**Your input**", result.get("GET_INTRM"))

These two snippets show that while the user is speaking, any interim recognized text is displayed in the Streamlit text_area widget.

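6. Text-to-speech implementation

Once the prompt request completes and the GPT-3.5 model has generated a response through the ChatGPT API, we display the response text directly on the web page with Streamlit's st.write() method.

However, we still need to convert the text to speech to complete the two-way functionality of our AI voice chatbot.

A popular Python library called "gTTS" does this job well. It interfaces with Google Translate's text-to-speech API and supports several output formats for the speech data, including mp3 and stdout. The project is hosted on GitHub.

The conversion takes only a few lines of code. First install the package: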

!pip install gTTS

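In this demo we don't want to save the speech data to a file, so we can use BytesIO() to hold it temporarily:

from io import BytesIO
from gtts import gTTS

sound = BytesIO()
tts = gTTS(output, lang='en', tld='com')
tts.write_to_fp(sound)

Here output is the text string to convert. You can choose the language with lang and, through tld, a different Google domain to suit your preference. For example, tld='co.uk' produces a British English accent.

Then create a decent audio player with a Streamlit widget: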

st.audio(sound)
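
The in-memory buffer pattern can be tried without network access. In the sketch below, `FakeTTS` is a hypothetical stand-in that only mimics the `write_to_fp()` method used above, and `synthesize_to_buffer` is a helper name chosen for this example. The rewind step is there for consumers that call `read()` on the buffer; whether a given player needs it is an assumption, not something the article states.

```python
from io import BytesIO


class FakeTTS:
    """Hypothetical stand-in for a gTTS object: writes fixed bytes instead of real audio."""

    def __init__(self, text: str) -> None:
        self._payload = text.encode("utf-8")

    def write_to_fp(self, fp) -> None:
        fp.write(self._payload)


def synthesize_to_buffer(tts) -> BytesIO:
    """Write speech data into an in-memory buffer and rewind it for reading."""
    buffer = BytesIO()
    tts.write_to_fp(buffer)
    buffer.seek(0)  # after writing, the position is at the end; rewind to the first byte
    return buffer


audio_buffer = synthesize_to_buffer(FakeTTS("hello"))
```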

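Full voice chatbot

To integrate all the modules above, we need to complete the full feature set:

- Interaction with the ChatCompletion API, with the conversation history appended as user and assistant message blocks. Streamlit's st.session_state stores the running variables.

- Event generation in the SPEAK button's CustomJS, covering events such as .onspeechstart(), .onsoundend(), and .onerror() as well as the recognition process itself.

- Handling of the "GET_TEXT, GET_ONREC, GET_INTRM" events to show the appropriate information on the web interface and to manage the display and assembly of text while the user is speaking.

- All the necessary Streamlit widgets.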
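
One detail of the event handling is worth isolating: each final transcript arrives with a random token, and the demo code only accepts a transcript whose token differs from the one already stored. My reading is that this keeps the same final text from being processed twice when the page reruns, though the article does not state the reason. A minimal sketch of that check, with `accept_final_text` as a hypothetical helper and a plain dict standing in for `st.session_state['input']`:

```python
def accept_final_text(event: dict, state: dict) -> bool:
    """Store a final transcript once per recognition run; return True if it was new."""
    text, token = event["t"], event["s"]
    if text == "" or token == state["session"]:
        return False  # empty transcript, or one that was already handled
    state["text"] = text
    state["session"] = token
    return True


state = dict(text="", session=0)
first = accept_final_text({"t": "tell me a story", "s": 0.42}, state)
repeat = accept_final_text({"t": "tell me a story", "s": 0.42}, state)
```

Here `first` is accepted and `repeat` is rejected because its token matches the stored one.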

Here is the complete demo code for your reference:

import streamlit as st

from bokeh.models.widgets import Button

from bokeh.models import CustomJS

from streamlit_bokeh_events import streamlit_bokeh_events

from gtts import gTTS

from io import BytesIO

import openai

openai.api_key = '{Your API Key}'

if 'prompts' not in st.session_state:

st.session_state['prompts'] = [{"role": "system", "content": "You are a helpful assistant. Answer as concisely as possible with a little humor expression."}]

def generate_response(prompt):

st.session_state['prompts'].append({"role": "user", "content":prompt})

completinotallow=openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages = st.session_state['prompts']

)

message=completion.choices[0].message.content

return message

sound = BytesIO()

placeholder = st.container()

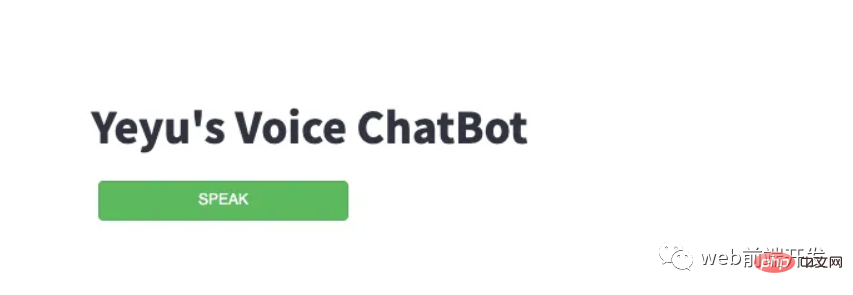

placeholder.title("Yeyu's Voice ChatBot")

stt_button = Button(label='SPEAK', button_type='success', margin = (5, 5, 5, 5), width=200)

stt_button.js_on_event("button_click", CustomJS(code="""

var value = "";

var rand = 0;

var recognition = new webkitSpeechRecognition();

recognition.continuous = false;

recognition.interimResults = true;

recognition.lang = 'en';

document.dispatchEvent(new CustomEvent("GET_ONREC", {detail: 'start'}));

recognition.onspeechstart = function () {

document.dispatchEvent(new CustomEvent("GET_ONREC", {detail: 'running'}));

}

recognition.onsoundend = function () {

document.dispatchEvent(new CustomEvent("GET_ONREC", {detail: 'stop'}));

}

recognition.onresult = function (e) {

var value2 = "";

for (var i = e.resultIndex; i < e.results.length; ++i) {

if (e.results[i].isFinal) {

value += e.results[i][0].transcript;

rand = Math.random();

} else {

value2 += e.results[i][0].transcript;

}

}

document.dispatchEvent(new CustomEvent("GET_TEXT", {detail: {t:value, s:rand}}));

document.dispatchEvent(new CustomEvent("GET_INTRM", {detail: value2}));

}

recognition.onerror = function(e) {

document.dispatchEvent(new CustomEvent("GET_ONREC", {detail: 'stop'}));

}

recognition.start();

"""))

result = streamlit_bokeh_events(

bokeh_plot = stt_button,

events="GET_TEXT,GET_ONREC,GET_INTRM",

key="listen",

refresh_on_update=False,

override_height=75,

debounce_time=0)

tr = st.empty()

if 'input' not in st.session_state:

st.session_state['input'] = dict(text='', sessinotallow=0)

tr.text_area("**Your input**", value=st.session_state['input']['text'])

if result:

if "GET_TEXT" in result:

if result.get("GET_TEXT")["t"] != '' and result.get("GET_TEXT")["s"] != st.session_state['input']['session'] :

st.session_state['input']['text'] = result.get("GET_TEXT")["t"]

tr.text_area("**Your input**", value=st.session_state['input']['text'])

st.session_state['input']['session'] = result.get("GET_TEXT")["s"]

if "GET_INTRM" in result:

if result.get("GET_INTRM") != '':

tr.text_area("**Your input**", value=st.session_state['input']['text']+' '+result.get("GET_INTRM"))

if "GET_ONREC" in result:

if result.get("GET_ONREC") == 'start':

placeholder.image("recon.gif")

st.session_state['input']['text'] = ''

elif result.get("GET_ONREC") == 'running':

placeholder.image("recon.gif")

elif result.get("GET_ONREC") == 'stop':

placeholder.image("recon.jpg")

if st.session_state['input']['text'] != '':

input = st.session_state['input']['text']

output = generate_response(input)

st.write("**ChatBot:**")

st.write(output)

st.session_state['input']['text'] = ''

tts = gTTS(output, lang='en', tld='com')

tts.write_to_fp(sound)

st.audio(sound)

st.session_state['prompts'].append({"role": "user", "content":input})

st.session_state['prompts'].append({"role": "assistant", "content":output})输入后:

!python -m streamlit run demo_voice.py

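You will finally see a simple but smart voice chatbot in your web browser.

Note: don't forget to allow the web page to access your microphone and speakers when the request pops up.

That's it, a simple chatbot is complete.

Finally, I hope you found something useful in this article. Thanks for reading!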
