Google open-sources its first 'dialect' data set: making machine translation more authentic

Although people all over China speak Chinese, local dialects still differ slightly from place to place. For example, the same kind of narrow alley is called a "Hutong" in old Beijing, but goes by a different name in the south.

When these subtle regional differences show up in machine translation, the output may not sound "authentic" enough. However, almost all current machine translation systems ignore the influence of regional language varieties (i.e., dialects).

This phenomenon also exists around the world. For example, the official language of Brazil is Portuguese, which differs in some regional respects from the Portuguese spoken in Europe.

Recently, Google released FRMT, a new dataset and evaluation benchmark for Few-shot Region-aware Machine Translation, which mainly addresses the problem of dialect translation. The paper was published in TACL (Transactions of the Association for Computational Linguistics).

Paper link: https://arxiv.org/pdf/2210.00193.pdf

Open source link: https://github.com/google-research/google-research/tree/master/frmt

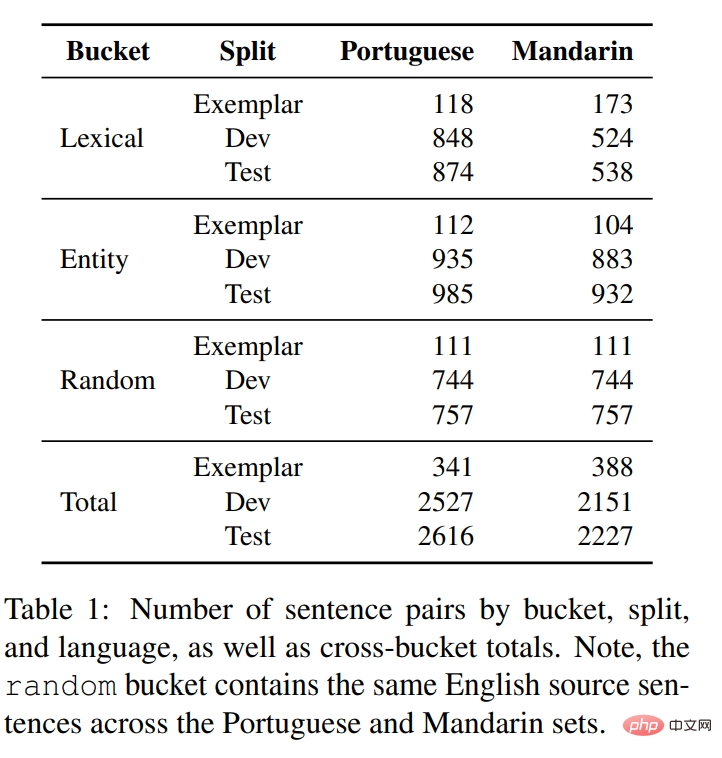

The dataset contains professional translations from English into two regional varieties each of Portuguese and Mandarin Chinese. The source documents were selected to enable detailed analysis of phenomena of interest, including lexically distinct terms and distractor terms.

The researchers explored automatic evaluation metrics for FRMT and validated their correlation with expert human evaluation in both region-matched and region-mismatched rating scenarios.

Finally, the researchers present a number of baseline models for the task and offer guidance on how other researchers can train, evaluate, and compare their own models. The dataset and evaluation code have been open-sourced.

Few-Shot Generalization

Most modern machine translation systems are trained on millions or billions of translation examples, each consisting of an English input sentence and its corresponding Portuguese translation.

However, the vast majority of available training data does not account for regional differences in translation.

Given this data scarcity, the researchers positioned FRMT as a benchmark for few-shot translation: it measures how well a machine translation model can translate into a given regional language variety when given no more than 100 labeled examples of each variety.

Based on the language patterns shown in a small number of labeled samples (i.e., exemplars), the machine translation model must identify similar patterns in its unlabeled training samples. Generalizing in this way lets the model produce "idiomatic" translations for regions that were never explicitly specified to it.

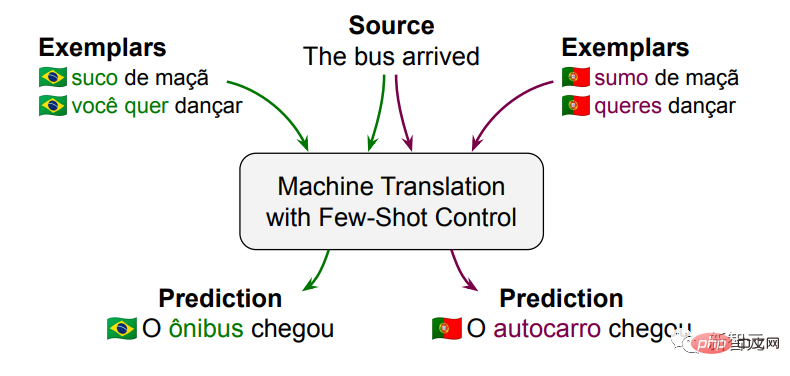

For example, given the input sentence "The bus arrived" and a few Brazilian Portuguese exemplars, the model should translate it as "O ônibus chegou"; if the exemplars are European Portuguese instead, the model should output "O autocarro chegou".

The few-shot approach to machine translation is of great research value, since it offers a very simple way to add support for additional regional varieties to existing systems.

While the current work published by Google covers regional varieties of two languages, the researchers expect that a good approach will readily carry over to other languages and regional varieties.

In principle, these methods should also apply to other kinds of language variation, such as formality and style.

Data collection

The FRMT dataset consists of English Wikipedia articles, drawn from the Wiki40b dataset, that paid professional translators rendered into regional varieties of Portuguese and Chinese.

To highlight key region-aware translation challenges, the researchers designed the dataset around three content buckets:

1. Lexical

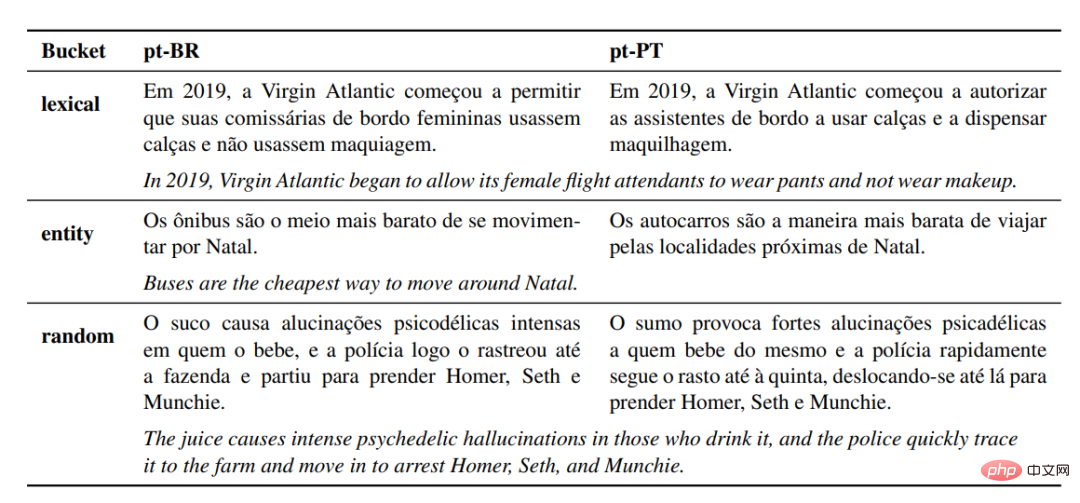

The lexical bucket focuses on regional differences in word choice. For example, when a sentence containing the word "bus" is translated into Brazilian and European Portuguese respectively, the model must distinguish between "ônibus" and "autocarro".
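To make the lexical distinction concrete, here is a minimal sketch that checks which regional term a translation uses. The lexicon, the region codes `pt-BR`/`pt-PT`, and the function `detect_variant` are hypothetical illustrations, not part of the FRMT release; only the "ônibus"/"autocarro" pair comes from the article.

```python
import re
import unicodedata
from typing import Optional

# Hypothetical lexicon for illustration: only the "bus" entry comes from the
# article; a real list would hold all of the collected terms.
REGIONAL_TERMS = {
    "bus": {"pt-BR": "ônibus", "pt-PT": "autocarro"},
}


def detect_variant(translation: str, concept: str) -> Optional[str]:
    """Return the region whose term for `concept` appears in `translation`,
    or None if neither or both regional terms appear.

    Only single-word terms are handled; multi-word terms need phrase matching.
    """
    # NFC normalization so that "ô" typed as o + combining accent still matches.
    text = unicodedata.normalize("NFC", translation.lower())
    # \w is Unicode-aware for str patterns, so accented letters stay in tokens.
    tokens = set(re.findall(r"\w+", text))
    hits = [region for region, term in REGIONAL_TERMS[concept].items()
            if term in tokens]
    return hits[0] if len(hits) == 1 else None


print(detect_variant("O ônibus chegou.", "bus"))     # pt-BR
print(detect_variant("O autocarro chegou.", "bus"))  # pt-PT
```

A check like this only looks at surface word choice; it says nothing about whether the rest of the translation is correct.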

The researchers manually collected 20-30 terms with region-specific translations from blogs and educational websites, then filtered and vetted the translations based on feedback from native-speaking volunteers in each region.

Using the resulting list of English terms, the researchers extracted 100 sentences from relevant English Wikipedia articles (e.g., bus). The same collection process was repeated for Mandarin.
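The term-driven sentence extraction can be sketched roughly as follows. This is an assumption-laden illustration: the naive regex sentence splitter and the function name `extract_sentences` are hypothetical, and the actual FRMT pipeline is not described at this level of detail.

```python
import re
from typing import Iterable, List


def extract_sentences(articles: Iterable[str], terms: List[str],
                      limit: int = 100) -> List[str]:
    """Collect up to `limit` sentences that mention any of `terms`."""
    if not terms:
        return []  # an empty alternation would match every sentence
    # Whole-word, case-insensitive match for any term in the list.
    pattern = re.compile(
        r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)
    selected: List[str] = []
    for text in articles:
        # Naive sentence split: break after ., ! or ? followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
            if pattern.search(sentence):
                selected.append(sentence)
                if len(selected) >= limit:
                    return selected
    return selected


articles = ["The bus arrived. It was late.", "Buses are common. A bus stop is near."]
print(extract_sentences(articles, ["bus"]))  # ['The bus arrived.', 'A bus stop is near.']
```

Note that whole-word matching skips inflected forms such as "Buses"; a real pipeline would likely need lemmatization or a list of surface forms.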

2. Entity

The entity bucket is populated in a similar way, with people, places, or other entities strongly associated with one of the two regions of a given language.

For example, given an illustrative sentence such as "In Lisbon, I often took the bus.", the model must avoid two potential pitfalls in order to translate it correctly into Brazilian Portuguese:

1) The close geographical association between Lisbon and Portugal may sway the model's translation choices, leading it to produce European rather than Brazilian Portuguese, i.e., to select "autocarro" instead of "ônibus".

2) Replacing "Lisbon" with "Brasilia" may be a naive shortcut for the model to localize its output to Brazilian Portuguese; even though the result would still read fluently, its meaning would be inaccurate.

3. Random

The random bucket is used to check whether a model correctly handles other diverse phenomena; it consists of text from 100 articles randomly sampled from Wikipedia's "featured" and "good" article collections.

Data Quality

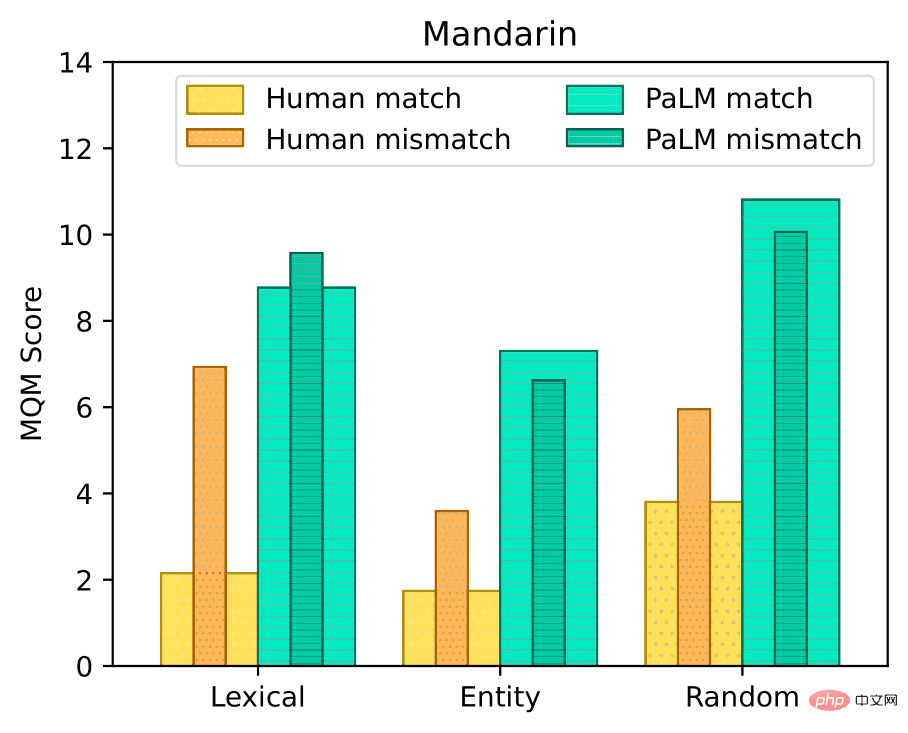

To verify that the translations collected for the FRMT dataset capture region-specific phenomena, the researchers conducted a human evaluation of data quality.

Expert annotators from each region identified and categorized translation errors using the Multidimensional Quality Metrics (MQM) framework, which includes a category-weighting scheme that converts the identified errors into a single score roughly representing the number of major errors per sentence; lower numbers therefore indicate better translations.
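As a rough illustration of how weighted MQM errors collapse into one score, the sketch below uses severity weights from a widely used MQM convention (major 5, minor 1, minor punctuation 0.1, non-translation 25). These weights, the severity labels, and the division by the major-error weight (so the result reads as "major errors per sentence") are assumptions; FRMT's exact scheme may differ.

```python
from typing import Dict, List

# Assumed severity weights (a common MQM convention); the weights used for
# FRMT may differ.
SEVERITY_WEIGHTS: Dict[str, float] = {
    "major": 5.0,
    "minor": 1.0,
    "minor/punctuation": 0.1,
    "non-translation": 25.0,
}


def mqm_score(errors_per_sentence: List[List[str]]) -> float:
    """Average weighted error penalty per sentence, divided by the major-error
    weight so that 1.0 is roughly one major error per sentence.
    Lower is better."""
    if not errors_per_sentence:
        raise ValueError("need at least one rated sentence")
    total = sum(SEVERITY_WEIGHTS[severity]
                for errors in errors_per_sentence for severity in errors)
    return total / SEVERITY_WEIGHTS["major"] / len(errors_per_sentence)


# Three rated sentences: one major error, no errors, two minor errors.
print(mqm_score([["major"], [], ["minor", "minor"]]))  # about 0.47 (7 / 5 / 3)
```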

For each region, the researchers asked MQM raters to score translations from their own region as well as translations from the other region of their language.

For example, Brazilian Portuguese raters scored both the Brazilian and the European Portuguese translations. The difference between the two scores indicates how prevalent the linguistic phenomena are that make one language variety unacceptable in the other region.

The experiments found that, in both Portuguese and Chinese, raters identified on average about two more major errors per sentence in the mismatched translations than in the matched ones, indicating that the FRMT dataset does capture region-specific linguistic phenomena.
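The matched-versus-mismatched comparison can be sketched as a per-rater-region difference of mean MQM scores. The tuple layout `(rater_region, translation_region, score)` and the function `mismatch_gaps` are hypothetical; the example scores are made up and are not FRMT results.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def mismatch_gaps(ratings: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """ratings: (rater_region, translation_region, per-sentence MQM score).

    Returns, per rater region, mean(mismatched) - mean(matched). A positive
    gap means raters found more errors in the other region's translations."""
    matched: Dict[str, List[float]] = defaultdict(list)
    mismatched: Dict[str, List[float]] = defaultdict(list)
    for rater_region, translation_region, score in ratings:
        bucket = matched if rater_region == translation_region else mismatched
        bucket[rater_region].append(score)
    # Only regions with both matched and mismatched ratings get a gap.
    return {region: mean(mismatched[region]) - mean(matched[region])
            for region in matched if region in mismatched}


# Made-up scores for illustration only.
toy = [("pt-BR", "pt-BR", 0.5), ("pt-BR", "pt-PT", 2.5),
       ("pt-PT", "pt-PT", 1.0), ("pt-PT", "pt-BR", 3.0)]
print(mismatch_gaps(toy))  # {'pt-BR': 2.0, 'pt-PT': 2.0}
```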

While manual evaluation is the best way to ensure model quality, it is often slow and expensive.

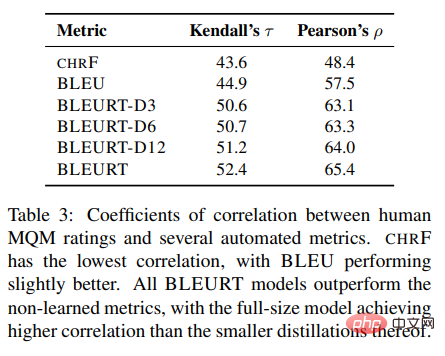

Therefore, the researchers looked for an off-the-shelf automatic metric for evaluating model performance on the benchmark, considering chrF, BLEU, and BLEURT.
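Of the candidate metrics, chrF is simple enough to sketch directly: it is an F-score over character n-grams. The version below uses orders 1 to 6 and β = 2, drops whitespace, and averages precision and recall over orders before combining them. It is a simplified illustration; production implementations such as sacrebleu differ in details, so its scores will not match them exactly.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Counts of character n-grams, with whitespace removed."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6,
         beta: float = 2.0) -> float:
    """Simplified chrF on a 0-100 scale (recall weighted beta times as much)."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # a string is too short for this n-gram order
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return 100 * (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


# Using the European term against a Brazilian reference lowers the score.
print(chrf("O autocarro chegou", "O ônibus chegou") < 100)  # True
```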

Based on MQM raters' scores for the translations of several baseline models, BLEURT correlates best with human judgments, and the strength of that correlation (Pearson correlation coefficient ρ = 0.65) is comparable to inter-annotator agreement (intraclass correlation of 0.70).
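The metric-versus-human comparison boils down to a Pearson correlation. The sketch below computes it from scratch. Because MQM is an error score (lower is better) while metrics such as BLEURT are higher-is-better, it negates the MQM scores so agreement shows up as a positive ρ; whether the paper did exactly this is an assumption, and the per-system scores are made up.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(sxx * syy)


# Hypothetical per-system scores: metric (higher is better) vs. MQM (lower is better).
metric_scores = [0.61, 0.55, 0.70, 0.48]
mqm_scores = [2.1, 2.9, 1.4, 3.3]
rho = pearson(metric_scores, [-m for m in mqm_scores])  # negate MQM first
```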

System Performance

The researchers evaluated several recently released models with few-shot control capabilities.

According to the MQM human evaluation, all of the baseline methods show some ability to localize their Portuguese output, but for Mandarin Chinese most of them fail to use knowledge of the target region to produce good localized translations.

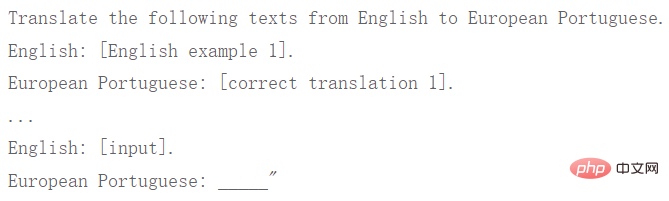

Among the baselines evaluated, Google's PaLM language model performed best. To generate region-specific translations with PaLM, an instructive prompt is first fed into the model, and the model then generates text to fill in the blank.
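The prompt-then-fill-the-blank idea can be sketched as a small prompt builder: region-specific exemplars followed by the new source sentence, with the target line left empty for the model to complete. The template wording and the function `build_prompt` are hypothetical and are not the prompt used with PaLM; only the 100-exemplar cap and the bus example come from the article. Sending the prompt to a model is omitted.

```python
from typing import List, Tuple

MAX_EXEMPLARS = 100  # FRMT allows at most 100 labeled examples per variety


def build_prompt(variety: str, exemplars: List[Tuple[str, str]],
                 source: str) -> str:
    """Build a few-shot translation prompt whose last line is left blank
    for the language model to complete. The template is hypothetical."""
    if len(exemplars) > MAX_EXEMPLARS:
        raise ValueError(f"at most {MAX_EXEMPLARS} exemplars allowed")
    lines = [f"Translate the following texts from English to {variety}."]
    for english, translation in exemplars:
        lines.append(f"English: {english}")
        lines.append(f"{variety}: {translation}")
    lines.append(f"English: {source}")
    lines.append(f"{variety}:")  # the blank the model fills in
    return "\n".join(lines)


prompt = build_prompt(
    "Brazilian Portuguese",
    [("The bus arrived.", "O ônibus chegou.")],
    "In Lisbon, I often took the bus.",
)
print(prompt)
```

Swapping in European Portuguese exemplars (and the variety name) is all it takes to request the other region, which is what makes the few-shot setup attractive.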

PaLM achieved strong results with just a single example, and in Portuguese its quality improved slightly when the number of examples was increased to 10. This is impressive considering that PaLM was trained in an unsupervised way.

The findings also suggest that language models like PaLM may be particularly good at memorizing the region-specific lexical choices required for fluent translation.

However, there is still a significant performance gap between PaLM and humans.

Reference materials:

https://ai.googleblog.com/2023/02/frmt-benchmark-for-few-shot-region.html
