Research on key technical difficulties of autonomous driving

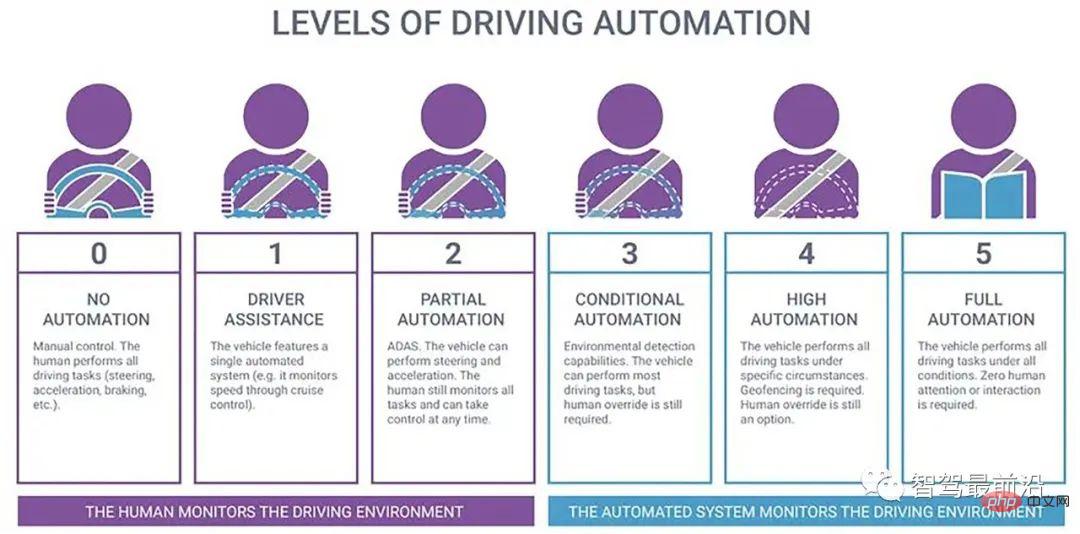

The Society of Automotive Engineers (SAE) divides driving automation into six levels, L0 to L5, according to how much of the driving task the vehicle performs:

- L0 is No Automation (NA): a traditional car, in which the driver performs all operating tasks such as steering, braking, acceleration, deceleration, and parking;

- L1 is Driver Assistance (DA): the system provides driving warnings or assistance, supporting either steering or acceleration/deceleration, while the driver performs the remaining operations;

- L2 is Partial Automation (PA): the vehicle handles both steering and acceleration/deceleration, while the driver is responsible for the other driving tasks;

- L3 is Conditional Automation (CA): the automated driving system performs most of the driving operations, but the driver must stay attentive and be ready to take over in an emergency;

- L4 is High Automation (HA): the vehicle performs all driving operations and the driver does not need to pay attention, but only under limited road and environmental conditions;

- L5 is Full Automation (FA): the automated driving system performs all driving operations under any road and environmental conditions, and the driver does not need to pay attention.

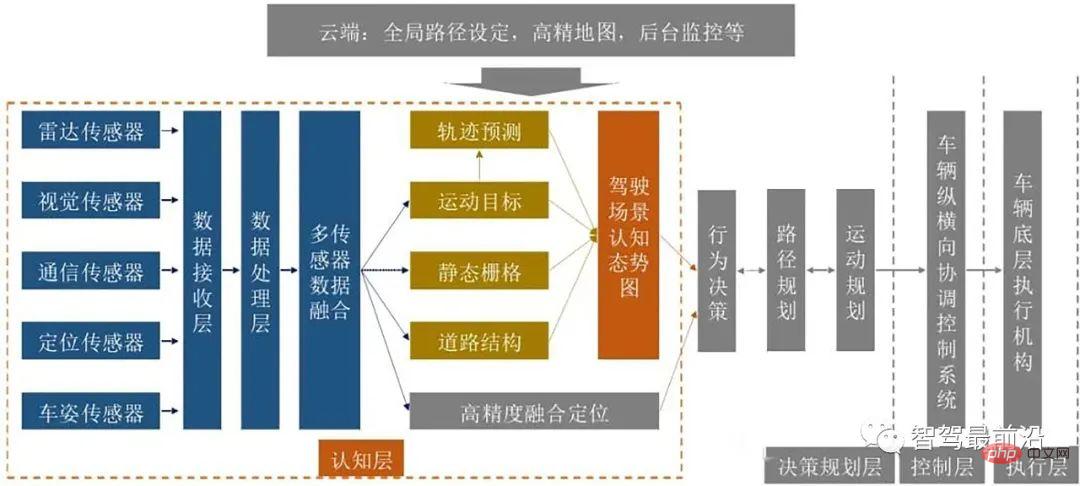

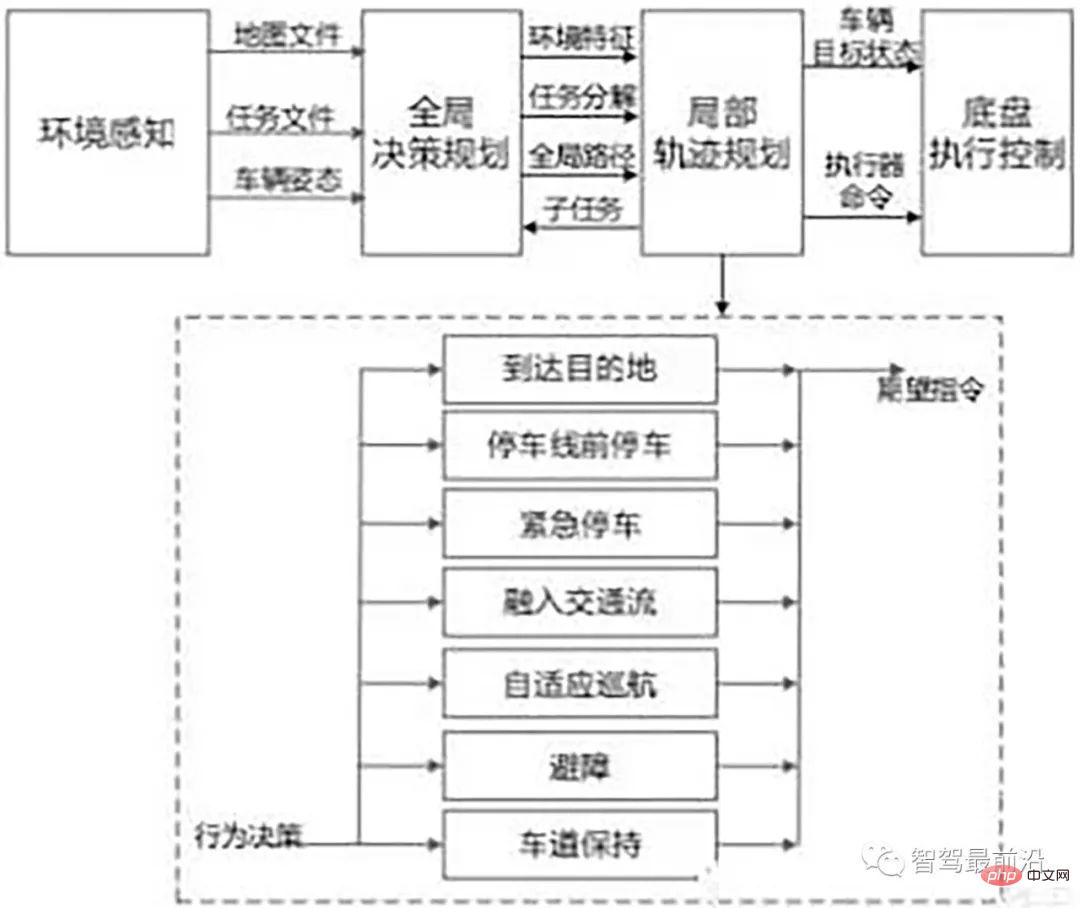

The software and hardware architecture of an autonomous vehicle is shown in Figure 2. It is divided mainly into the environment perception layer, the decision-making and planning layer, the control layer, and the execution layer. The environment perception layer obtains information about the vehicle's surroundings and its own state through sensors such as lidar, millimeter-wave radar, ultrasonic radar, on-board cameras, night vision systems, GPS, and gyroscopes. Its tasks include lane line detection, traffic light recognition, traffic sign recognition, pedestrian detection, vehicle detection, obstacle recognition, and vehicle positioning. The decision-making and planning layer is divided into task planning, behavior planning, and trajectory planning. Based on the preset route, the surrounding environment, and the vehicle's own state, it plans the next driving task (lane keeping, lane changing, car following, overtaking, collision avoidance, etc.), behavior (accelerating, decelerating, turning, braking, etc.), and path (driving trajectory). The control and execution layers then control the vehicle's drive, braking, and steering based on a vehicle dynamics model so that the vehicle follows the planned trajectory.
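To make the layer boundaries concrete, here is a minimal Python sketch of one pass through the perception, decision-making and planning, and control layers in a single-lane following scenario. Everything in it is hypothetical: the class and function names, the 30 m safe gap, the braking and following rules, and the proportional speed gain are illustrative assumptions, not the architecture shown in Figure 2.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Perception:
    """Output of the perception layer (hypothetical, heavily simplified)."""
    ego_speed_mps: float
    obstacle_ranges_m: List[float] = field(default_factory=list)


@dataclass
class Plan:
    """Output of the decision-making and planning layer."""
    behavior: str            # "cruise", "follow" or "brake"
    target_speed_mps: float


@dataclass
class Command:
    """Output of the control layer, consumed by the execution layer."""
    throttle: float  # 0..1
    brake: float     # 0..1


def perceive(raw_ranges_m: List[float], ego_speed_mps: float) -> Perception:
    # A real perception layer would run detection, tracking and positioning here.
    return Perception(ego_speed_mps, list(raw_ranges_m))


def plan(p: Perception, cruise_speed_mps: float, safe_gap_m: float = 30.0) -> Plan:
    # Behavior decision based only on the nearest obstacle ahead (hypothetical rules).
    nearest = min(p.obstacle_ranges_m, default=float("inf"))
    if nearest < safe_gap_m / 2:
        return Plan("brake", 0.0)
    if nearest < safe_gap_m:
        # Slow down in proportion to how much of the safe gap remains.
        return Plan("follow", p.ego_speed_mps * nearest / safe_gap_m)
    return Plan("cruise", cruise_speed_mps)


def control(p: Perception, pl: Plan, gain: float = 0.1) -> Command:
    # Proportional speed control, saturated to the actuator range [-1, 1].
    u = max(-1.0, min(1.0, gain * (pl.target_speed_mps - p.ego_speed_mps)))
    return Command(throttle=max(u, 0.0), brake=max(-u, 0.0))


if __name__ == "__main__":
    p = perceive([12.0], ego_speed_mps=20.0)
    pl = plan(p, cruise_speed_mps=25.0)
    print(pl, control(p, pl))
```

Each layer consumes only the previous layer's output, mirroring the layered split described above; a production stack would replace every function body with far richer models.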

Autonomous driving involves many key technologies. This article focuses on four of them: environment perception, high-precision positioning, decision-making and planning, and control and execution.

Environment Perception Technology

Environment perception refers to the ability to understand the scene around the vehicle, for example the semantic classification of data such as obstacle types, road signs and markings, detected vehicles, and traffic information. Positioning is a post-processing step on the perception results: it helps the vehicle determine its position relative to its surroundings. Environment perception requires sensors to collect a large amount of information about the surroundings so that the vehicle understands its environment correctly and can plan and decide accordingly.

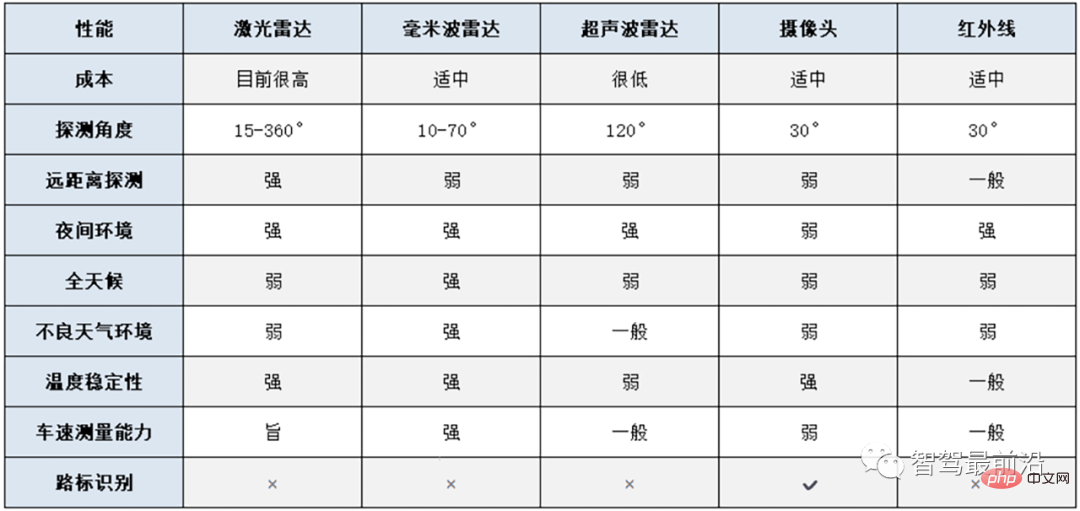

Commonly used environment perception sensors for autonomous vehicles include cameras, lidar, millimeter-wave radar, infrared sensors, and ultrasonic radar. Cameras are the most commonly used and simplest of these sensors, and their imaging principle is the closest to that of the human eye. A camera captures the vehicle's surroundings in real time, and computer vision (CV) techniques analyze the images to detect vehicles and pedestrians and to recognize traffic signs around the vehicle.

The main advantages of cameras are high resolution and low cost. However, their performance drops sharply at night and in bad weather such as rain, snow, and haze. In addition, a camera's viewing distance is limited, so it is poorly suited to long-range observation.

Millimeter-wave radar is another commonly used sensor on autonomous vehicles. It operates in the millimeter-wave band (wavelength 1-10 mm, frequency 30-300 GHz) and detects targets using time-of-flight (ToF) ranging: the radar continuously transmits millimeter-wave signals, receives the signals reflected by targets, and determines the distance between a target and the vehicle from the time difference between transmission and reception. Millimeter-wave radar is therefore mainly used to prevent collisions between the vehicle and surrounding objects, in functions such as blind spot detection, obstacle avoidance assistance, parking assistance, and adaptive cruise control. It has strong anti-interference ability: it penetrates rain, sand, dust, smoke, and plasma far better than laser and infrared sensors, so it can work in all weather. However, it also has shortcomings, including large signal attenuation, susceptibility to blockage by buildings, human bodies, and other objects, short transmission distance, low resolution, and difficulty in imaging.
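The ranging principle and band limits above reduce to two formulas: range d = c·Δt/2 (the signal travels out and back) and wavelength λ = c/f. The sketch below checks both. Note that many automotive millimeter-wave radars actually derive range from the beat frequency of a frequency-modulated continuous wave (FMCW) rather than by timing a single pulse; this sketch follows the plain time-of-flight description in the text, and the function names are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # vacuum value, a close approximation in air


def tof_range_m(round_trip_s: float) -> float:
    """Target range from the round-trip time of flight.

    The signal travels to the target and back, so the one-way distance
    is half of (speed of light x elapsed time).
    """
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def wavelength_mm(frequency_hz: float) -> float:
    """Wavelength lambda = c / f, converted to millimeters."""
    return SPEED_OF_LIGHT_MPS / frequency_hz * 1000.0


if __name__ == "__main__":
    print(tof_range_m(1e-6))  # a 1 microsecond echo comes from about 150 m away
    print(wavelength_mm(30e9), wavelength_mm(300e9))  # ends of the 1-10 mm band
```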

Lidar also uses ToF ranging to determine target position and distance, but it detects targets by emitting laser beams. Its detection accuracy and sensitivity are higher and its detection range is wider. However, lidar is more susceptible to interference from rain, snow, haze, and other particles in the air, and its high cost is a main reason its adoption has been restricted. By the number of laser beams emitted, vehicle-mounted lidar can be divided into single-line, 4-line, 8-line, 16-line, and 64-line lidar. Table 1 compares the advantages and disadvantages of the mainstream sensors.
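Each line of a multi-line lidar fires at a fixed elevation angle while the sensor sweeps in azimuth, so every return (a ToF range plus two beam angles) maps to a 3D point. A minimal sketch of that conversion follows, assuming a sensor frame with x forward, y left, z up, and a hypothetical 4-line sensor with beams at -3°, -1°, +1° and +3°; the function names are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def return_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one lidar return (range plus beam angles) into sensor-frame x, y, z.

    Assumed frame: x forward, y left, z up; azimuth measured from x toward y.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def firing_to_points(ranges_m: List[float], elevations_deg: List[float],
                     azimuth_deg: float) -> List[Point]:
    """One firing of a multi-line lidar: each laser line has its own fixed elevation."""
    return [return_to_xyz(r, azimuth_deg, e) for r, e in zip(ranges_m, elevations_deg)]


if __name__ == "__main__":
    # Hypothetical 4-line sensor with beams at -3, -1, +1 and +3 degrees.
    print(firing_to_points([20.0, 20.5, 21.0, 21.5], [-3.0, -1.0, 1.0, 3.0], azimuth_deg=15.0))
```

More lines means more elevation samples per azimuth step, which is why higher line counts give denser point clouds.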

Autonomous driving environment perception generally follows one of two technical routes: "weak perception, super intelligence" and "strong perception, strong intelligence". The "weak perception, super intelligence" route relies mainly on cameras and deep learning to perceive the environment, without lidar. Its reasoning is that humans can drive with a pair of eyes, so a car should likewise be able to see its surroundings clearly with cameras. If super intelligence is hard to achieve in the near term, however, driverless operation requires stronger perception capabilities; this is the "strong perception, strong intelligence" route.

Compared with the "weak perception, super intelligence" route, the defining feature of the "strong perception, strong intelligence" route is the addition of lidar, which greatly improves perception capability. Tesla follows the "weak perception, super intelligence" route, while AI companies, mobility companies, and traditional automakers such as Google's Waymo, Baidu Apollo, Uber, and Ford follow the "strong perception, strong intelligence" route.

High-precision positioning technology

Positioning aims to determine the precise position of an autonomous vehicle relative to its external environment, and it is a fundamental capability of autonomous driving. On complex urban roads, the positioning error must be no more than 10 cm. For example, only by knowing the exact distance between the vehicle and an intersection can the vehicle make accurate predictions and preparations, and only with accurate positioning can it determine which lane it is in. A large positioning error may cause a serious traffic accident.
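Why centimeter-level accuracy matters for lane determination can be shown with a small calculation. The sketch assumes a hypothetical 3.5 m lane width and measures lateral offset from the right road edge; the function names are illustrative. It checks whether an estimate, plus or minus its error bound, could straddle a lane boundary.

```python
LANE_WIDTH_M = 3.5  # assumed typical lane width; real values vary by road


def lane_index(lateral_offset_m: float, lane_width_m: float = LANE_WIDTH_M) -> int:
    """0-based lane index counted from the right road edge (hypothetical convention)."""
    return int(lateral_offset_m // lane_width_m)


def lane_is_unambiguous(lateral_offset_m: float, error_m: float,
                        lane_width_m: float = LANE_WIDTH_M) -> bool:
    """True if every position within +/- error_m of the estimate lies in the same lane."""
    low = lane_index(lateral_offset_m - error_m, lane_width_m)
    high = lane_index(lateral_offset_m + error_m, lane_width_m)
    return low == high


if __name__ == "__main__":
    # Vehicle 1.0 m inside the second lane (lane index 1).
    print(lane_is_unambiguous(4.5, 0.10))  # 10 cm error: the lane is known
    print(lane_is_unambiguous(4.5, 2.0))   # meter-level error: could be lane 0 or 1
```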

GPS is currently the most widely used positioning method, and the higher its accuracy, the more expensive the GPS sensor. However, the accuracy of current commercial GPS is far from sufficient: it is only at the meter level and is easily degraded by factors such as obstruction in tunnels and signal delay. To address this, Qualcomm developed vision-enhanced precise positioning (VEPP) technology, which fuses information from multiple automotive components, including GNSS, cameras, an IMU (inertial navigation), and wheel speed sensors, through mutual calibration and data fusion to achieve global, real-time positioning accurate to the lane level.
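The fusion idea can be illustrated with the simplest possible case: a one-dimensional Kalman filter that predicts position from wheel speed (dead reckoning) and corrects it with a noisy GNSS fix. This is a textbook sketch, not Qualcomm's VEPP algorithm; the variances (0.04 m² process noise, 4 m² GNSS noise, roughly a 2 m standard deviation) and the function names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    position_m: float   # position along the road
    variance_m2: float  # uncertainty of that position


def predict(est: Estimate, wheel_speed_mps: float, dt_s: float,
            process_var_m2: float) -> Estimate:
    """Dead-reckoning step from wheel speed: move forward, uncertainty grows."""
    return Estimate(est.position_m + wheel_speed_mps * dt_s,
                    est.variance_m2 + process_var_m2)


def update(est: Estimate, gnss_position_m: float, gnss_var_m2: float) -> Estimate:
    """GNSS correction: the Kalman gain weights the fix by relative confidence."""
    gain = est.variance_m2 / (est.variance_m2 + gnss_var_m2)
    return Estimate(est.position_m + gain * (gnss_position_m - est.position_m),
                    (1.0 - gain) * est.variance_m2)


if __name__ == "__main__":
    est = Estimate(0.0, 1.0)
    for wheel_speed, gnss_fix in [(10.0, 11.0), (10.0, 19.5), (10.0, 30.8)]:
        est = predict(est, wheel_speed, dt_s=1.0, process_var_m2=0.04)
        est = update(est, gnss_fix, gnss_var_m2=4.0)  # ~2 m standard deviation
        print(est)
```

The fused variance after each update is smaller than either source's variance alone, which is the basic reason multi-sensor fusion outperforms GNSS by itself.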

Decision and planning technology

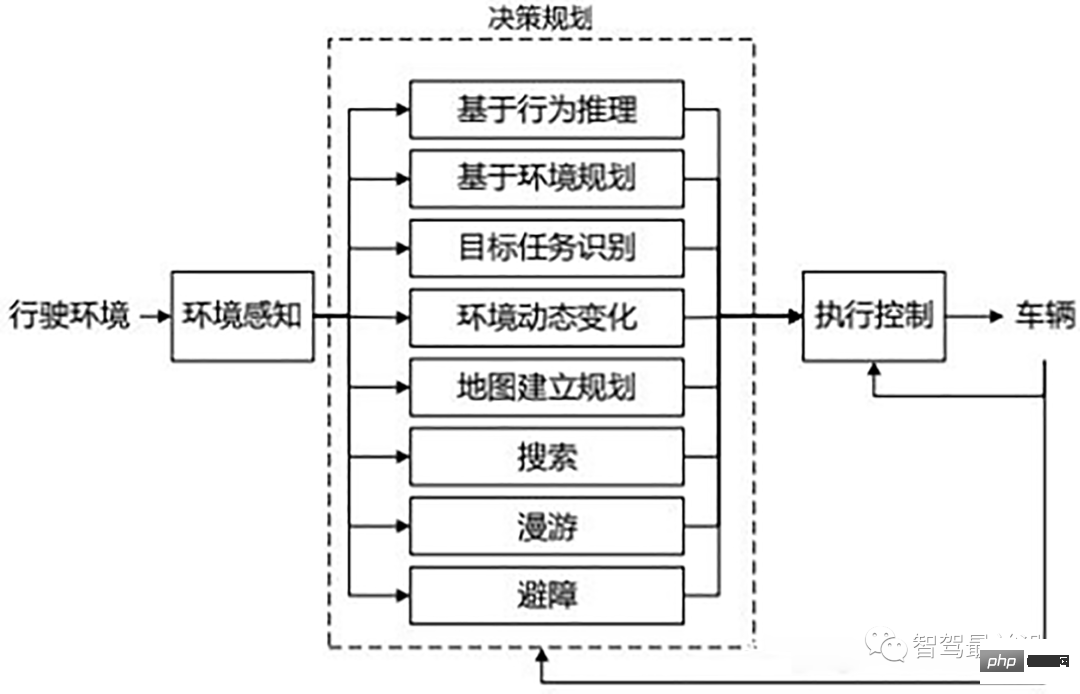

Decision-making and planning is one of the key parts of autonomous driving. It first fuses multi-sensor information and makes task decisions according to driving needs. It then plans multiple safe paths between two points under specific constraints while avoiding known obstacles, and selects the optimal one as the vehicle's driving trajectory; this is planning. By level, planning can be divided into global planning and local planning. Global planning uses the available map information to plan a collision-free optimal path under specific conditions. For example, there are many roads from Shanghai to Beijing, and choosing one of them as the driving route is global planning.
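The grid method represents the map as cells that are either free or occupied, and a search algorithm then finds a path over them. The article does not name a search algorithm, so the sketch below assumes A* with a Manhattan-distance heuristic on a 4-connected grid, a common choice for this kind of global planning; the function name and grid encoding are illustrative.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle)."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance never overestimates the cost of unit-step moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            node: Optional[Cell] = cur
            while node is not None:  # walk parent links back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    grid = [[0, 0, 0, 0],
            [1, 1, 1, 0],
            [0, 0, 0, 0]]
    print(astar(grid, (0, 0), (2, 0)))
```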

Global (static) path planning algorithms include the grid method, visibility graph method, topological method, free space method, and neural network method. Local planning builds on the global plan and uses local environment information to avoid collisions with unknown obstacles and finally reach the target point. For example, there will be other vehicles or obstacles on the globally planned route from Shanghai to Beijing; turning and changing lanes to avoid them is local path planning. Local (dynamic) path planning methods include the artificial potential field method, vector field histogram method, virtual force field method, and genetic algorithms.
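Local planning with the artificial potential field method can be sketched as follows: the goal exerts an attractive force, each nearby obstacle a repulsive one, and the vehicle takes a fixed-length step along the net force. The gains, the 5 m influence radius, the 0.5 m step, and the function names are assumed values; the repulsive term uses the classic form k_rep·(1/d - 1/d0)/d², directed away from the obstacle.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def apf_force(pos: Vec, goal: Vec, obstacles: List[Vec], k_att: float = 1.0,
              k_rep: float = 100.0, influence_m: float = 5.0) -> Vec:
    """Net force: attraction toward the goal plus repulsion from nearby obstacles."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence_m:  # obstacles beyond the influence radius are ignored
            # Negative gradient of 0.5 * k_rep * (1/d - 1/d0)^2, pointing away from the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / influence_m) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy


def apf_step(pos: Vec, goal: Vec, obstacles: List[Vec], step_m: float = 0.5) -> Vec:
    """Move a fixed distance along the net force; snap to the goal when close."""
    if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) <= step_m:
        return goal
    fx, fy = apf_force(pos, goal, obstacles)
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # forces cancel: a local minimum
    return (pos[0] + step_m * fx / norm, pos[1] + step_m * fy / norm)


if __name__ == "__main__":
    pos, goal, obstacles = (0.0, 0.0), (20.0, 0.0), [(10.0, 1.0)]
    for _ in range(60):
        pos = apf_step(pos, goal, obstacles)
    print(pos)
```

A known weakness of this method is that attraction and repulsion can cancel and trap the vehicle in a local minimum, which is one reason it is paired with a global planner.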

The decision-making and planning layer is the most direct embodiment of an autonomous driving system's intelligence and plays a decisive role in the driving safety of the vehicle. Common decision-making and planning architectures are hierarchical, reactive, or a hybrid of the two.

The hierarchical architecture is a serial structure. The modules of the intelligent driving system run in a clear order, and the output of each module is the input of the next, which is why it is also called the sense-plan-act structure. However, its reliability is limited: once a software or hardware failure occurs in any module, the whole information flow is affected, and the entire system may collapse or even be paralyzed.

The reactive architecture uses a parallel structure in which the control layer makes decisions directly from sensor input, so the actions it generates follow directly from sensor data. It emphasizes the perception-action link and is suitable for completely unfamiliar environments. Many behaviors in a reactive architecture handle a single simple task, so planning and control can be tightly integrated and little storage is needed, which gives fast responses and strong real-time performance. At the same time, each layer is responsible for only one behavior of the system, so the system can move conveniently and flexibly from low-level to high-level behaviors. Moreover, if one module fails unexpectedly, the remaining layers can still produce meaningful actions, which greatly improves robustness. The difficulty is that, because the system can act so flexibly, a specific coordination mechanism is needed to resolve conflicts between the control loops competing for the actuators so that they produce meaningful results.
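The coordination mechanism can take many forms; one simple option, assumed here for illustration, is fixed-priority arbitration, in which the highest-priority behavior that issues a command wins the actuators. The behaviors, thresholds, and sign convention below are hypothetical. The sketch also shows the robustness property: a behavior that raises an error is skipped and the remaining behaviors still act. In a real system a failed safety behavior would itself need handling; this only illustrates fault isolation.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]
Behavior = Callable[[Sensors], Optional[str]]


def emergency_brake(s: Sensors) -> Optional[str]:
    # Highest-priority reflex: brake if something is very close ahead.
    return "brake" if s["front_gap_m"] < 10.0 else None


def keep_lane(s: Sensors) -> Optional[str]:
    # Hypothetical sign convention: positive offset = right of lane center.
    offset = s["lane_offset_m"]
    if abs(offset) > 0.3:
        return "steer_left" if offset > 0 else "steer_right"
    return None


def cruise(s: Sensors) -> Optional[str]:
    # Lowest-priority default behavior.
    return "hold_speed"


def arbitrate(behaviors: List[Behavior], sensors: Sensors) -> Optional[str]:
    """Fixed-priority arbitration: the first behavior (in priority order) that
    issues a command gets the actuators. A behavior that fails is skipped, so
    the remaining behaviors still produce an action."""
    for behavior in behaviors:
        try:
            command = behavior(sensors)
        except Exception:
            continue  # isolate the faulty module instead of halting the system
        if command is not None:
            return command
    return None


PRIORITY: List[Behavior] = [emergency_brake, keep_lane, cruise]

if __name__ == "__main__":
    print(arbitrate(PRIORITY, {"front_gap_m": 5.0, "lane_offset_m": 0.5}))
    print(arbitrate(PRIORITY, {"lane_offset_m": 0.0}))  # gap sensor reading missing
```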

The hierarchical and reactive architectures each have their own advantages and disadvantages, and neither alone can meet the complex and changing demands of the driving environment. More and more researchers in the industry are therefore studying hybrid architectures that combine the strengths of both: at the global planning level, a hierarchical structure generates goal-directed behavior, and at the local planning level, a reactive structure generates behavior oriented toward target search.

Control and execution technology

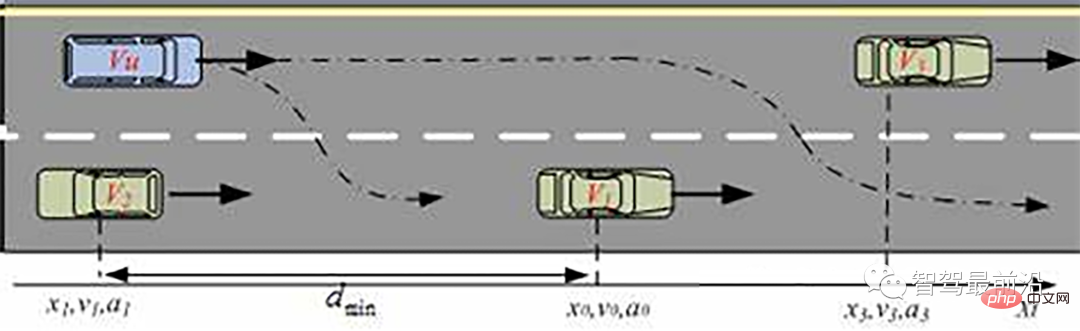

The core technologies of autonomous driving control are the vehicle's longitudinal control and lateral control. Longitudinal control is the control of the vehicle's drive and braking; lateral control is the adjustment of the steering wheel angle and the control of tire forces. With automatic longitudinal and lateral control in place, the vehicle can be operated automatically according to given goals and constraints.

Longitudinal control acts along the direction of travel: it automatically controls the vehicle's speed and its distance to the vehicles or obstacles ahead and behind. Cruise control and emergency braking are typical examples of longitudinal control in autonomous driving. This type of control problem comes down to controlling the electric motor drive, engine, transmission, and braking systems. Combining various motor-engine-transmission models, vehicle operation models, and braking process models with different controller algorithms yields a variety of longitudinal control modes.
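Cruise control, the typical longitudinal case mentioned above, can be sketched as a PI speed controller acting on a point-mass vehicle model. The plant dv/dt = a - 0.05·v, the gains, and the acceleration limits are all assumed values standing in for the motor, engine, transmission, and braking models the text describes; the anti-windup rule (integrating only while the actuator is unsaturated) is one common choice among several.

```python
def simulate_cruise(target_mps: float, v0_mps: float = 0.0,
                    kp: float = 0.5, ki: float = 0.1, drag_per_s: float = 0.05,
                    a_min: float = -3.0, a_max: float = 2.0,
                    dt_s: float = 0.1, duration_s: float = 60.0) -> float:
    """Simulate PI cruise control on a point-mass longitudinal model and
    return the final speed.

    Plant (hypothetical): dv/dt = a_cmd - drag_per_s * v
    Controller: a_cmd = kp * e + ki * integral(e), clamped to [a_min, a_max];
    the clamp stands in for drive and brake limits (negative = braking).
    """
    v, integral = v0_mps, 0.0
    for _ in range(round(duration_s / dt_s)):
        error = target_mps - v
        a_raw = kp * error + ki * integral
        a_cmd = min(max(a_raw, a_min), a_max)
        if a_min < a_raw < a_max:
            # Conditional integration (anti-windup): only accumulate error
            # while the actuator is not saturated.
            integral += error * dt_s
        v += (a_cmd - drag_per_s * v) * dt_s  # forward-Euler step of the plant
    return v


if __name__ == "__main__":
    print(simulate_cruise(25.0))               # accelerate from standstill
    print(simulate_cruise(20.0, v0_mps=30.0))  # slow down to a lower set speed
```

The integral term is what removes the steady-state error: at the set speed, the proportional term is zero and the integral alone must supply the acceleration that cancels drag.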

Lateral control acts perpendicular to the direction of motion. Its goal is to keep the vehicle automatically on the desired path while achieving good ride comfort and stability under different speeds, loads, wind resistance, and road conditions. There are two basic design approaches for lateral control. The first is based on driver simulation: either a relatively simple dynamics model and driver steering rules are used to design the controller, or a controller is trained on data recorded from human drivers' steering to obtain the control algorithm. The second is based on a model of the vehicle's lateral motion dynamics, which requires building an accurate lateral motion model. A typical example is the single-track model, which assumes that the left and right sides of the vehicle have identical characteristics.
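The single-track model can be sketched in its kinematic form, which ignores tire forces and is only a stand-in for the dynamic lateral model the text refers to (a dynamic version would add tire cornering stiffness, mass, and yaw inertia). It is paired here with a hypothetical proportional steering rule on lateral offset and heading, in the spirit of the simple driver-rule approach. The 2.7 m wheelbase, the gains, the time step, and the function names are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x_m: float
    y_m: float        # lateral offset from the reference line (the x axis)
    yaw_rad: float    # heading relative to the x axis
    speed_mps: float


def bicycle_step(s: State, steer_rad: float, wheelbase_m: float = 2.7,
                 dt_s: float = 0.05) -> State:
    """Kinematic single-track model (reference point at the rear axle):
    x' = v cos(yaw), y' = v sin(yaw), yaw' = v tan(steer) / L, integrated with forward Euler."""
    return State(
        s.x_m + s.speed_mps * math.cos(s.yaw_rad) * dt_s,
        s.y_m + s.speed_mps * math.sin(s.yaw_rad) * dt_s,
        s.yaw_rad + s.speed_mps * math.tan(steer_rad) / wheelbase_m * dt_s,
        s.speed_mps,
    )


def follow_x_axis(s: State, k_lat: float = 0.01, k_head: float = 0.3,
                  steps: int = 400) -> State:
    """Hypothetical proportional steering rule that drives lateral offset and yaw to zero."""
    for _ in range(steps):
        steer = -k_lat * s.y_m - k_head * s.yaw_rad  # steer back toward the line
        s = bicycle_step(s, steer)
    return s


if __name__ == "__main__":
    print(follow_x_axis(State(0.0, 1.0, 0.0, 10.0)))  # start 1 m off the line at 10 m/s
```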

Summary

In addition to the environment perception, precise positioning, decision-making and planning, and control and execution technologies introduced above, self-driving cars also involve key technologies such as high-precision maps, V2X, and autonomous vehicle testing. Autonomous driving combines technologies from many fields, including artificial intelligence, high-performance chips, communications, sensors, vehicle control, and big data, which makes it difficult to implement. Moreover, deploying autonomous driving requires building transportation infrastructure that meets its requirements and establishing the corresponding laws and regulations.
