Has the language model learned to use search engines on its own? Meta AI proposes API call self-supervised learning method Toolformer


In natural language processing tasks, large language models have achieved impressive results in zero-shot and few-shot learning. However, all such models have inherent limitations that can often be only partially addressed by further scaling. These limitations include the inability to access up-to-date information, a tendency to hallucinate facts, difficulty understanding low-resource languages, and a lack of the mathematical skills needed for precise calculation.

A simple way to solve these problems is to equip the model with external tools, such as a search engine, calculator, or calendar. However, existing methods often rely on extensive manual annotations or limit the use of tools to specific task settings, making the use of language models combined with external tools difficult to generalize.

In order to break this bottleneck, Meta AI recently proposed a new method called Toolformer, which allows the language model to learn to "use" various external tools.

Paper address: https://arxiv.org/pdf/2302.04761v1.pdf

Toolformer quickly attracted a great deal of attention. Some people felt that the paper solves many problems of current large language models and praised it as "the most important paper in recent weeks".

Others pointed out that Toolformer uses self-supervised learning to teach large language models to use APIs and tools in a very flexible and efficient way.

Some even think that Toolformer brings us one step closer to artificial general intelligence (AGI).

Toolformer gets such a high rating because it meets the following practical needs:

- Large language models should learn the use of tools in a self-supervised manner without the need for extensive human annotation. This is critical because the cost of human annotation is high, but more importantly, what humans think is useful may be different from what the model thinks is useful.

- Language models should be able to use tools more generally, without being tied to a specific task.

This clearly breaks the bottleneck mentioned above. Let’s take a closer look at Toolformer’s methods and experimental results.

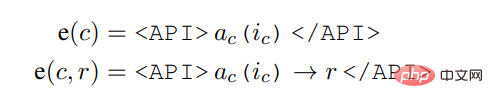

Method

Toolformer builds on the idea of using large language models with in-context learning (ICL) to generate entire datasets from scratch (Schick and Schütze, 2021b; Honovich et al., 2022; Wang et al., 2022). Given just a handful of human-written examples of how an API is used, the LM annotates a large language modeling dataset with potential API calls. A self-supervised loss function then determines which of these API calls actually help the model predict future tokens, and finally the LM is fine-tuned on the API calls that it finds useful.

Since Toolformer is agnostic to the dataset used, it can be applied to the exact same dataset the model was pre-trained on, which ensures that the model does not lose any of its generality or language modeling capabilities.

Specifically, the goal of this research is to equip the language model M with the ability to use various tools through API calls. This requires that the input and output of each API can be characterized as a sequence of text. This allows API calls to be seamlessly inserted into any given text, with special tokens used to mark the beginning and end of each such call.
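As a rough illustration of the idea that a call and its result are just text, the sketch below inserts a call into a sentence. The marker strings `<API>`, `</API>` and `->`, the `ApiCall` class, and the helper names are placeholders assumed for this example; the article does not specify how the tokens are written in practice.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder marker strings (assumptions for this sketch).
API_START = "<API>"
API_END = "</API>"
RESULT_SEP = "->"


@dataclass(frozen=True)
class ApiCall:
    name: str        # name of the API, e.g. "Calculator"
    input_text: str  # input to the API, already serialized as text


def linearize(call: ApiCall, result: Optional[str] = None) -> str:
    """Render an API call (optionally with its result) as a text sequence."""
    body = f"{call.name}({call.input_text})"
    if result is not None:
        body = f"{body} {RESULT_SEP} {result}"
    return f"{API_START} {body} {API_END}"


def insert_call(text: str, position: int, call: ApiCall,
                result: Optional[str] = None) -> str:
    """Insert the linearized call into `text` before character index `position`."""
    return f"{text[:position]}{linearize(call, result)} {text[position:]}"


if __name__ == "__main__":
    sentence = "Out of 1400 participants, 400 (or 29%) passed the test."
    call = ApiCall("Calculator", "400 / 1400")
    print(insert_call(sentence, sentence.index("29%"), call, "0.29"))
```

Because both the call and its result are plain strings, the same helper works for any tool whose input and output can be serialized as text.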

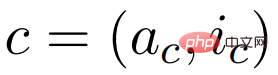

The study represents each API call as a tuple

, where a_c is the name of the API and i_c is the corresponding input. Given an API call c with corresponding result r, this study represents the linearized sequence of API calls excluding and including its result as:

Among them,

Given data set

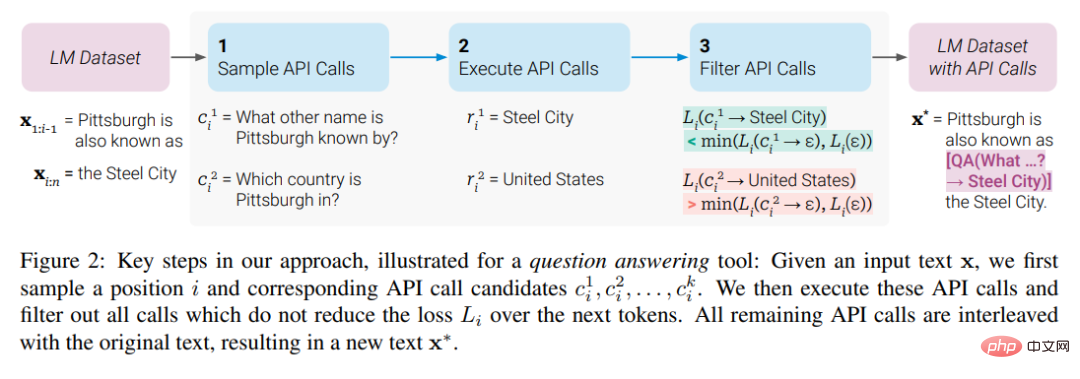

, the study first transformed this data set into a data set C* with added API calls. This is done in three steps, as shown in Figure 2 below: First, the study leverages M's in-context learning capabilities to sample a large number of potential API calls, then executes these API calls, and then checks whether the obtained responses help predictions Future token to be used as filtering criteria. After filtering, the study merges API calls to different tools, ultimately generating dataset C*, and fine-tunes M itself on this dataset.
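The filtering step can be sketched as follows. The article only says that a call is kept when its response helps predict future tokens. The concrete rule used here (a weighted loss over the following tokens, compared against the better of "no call at all" and "call without its result", with a threshold) follows the description in the Toolformer paper. The function names, the weights, the log-probabilities, and the threshold value are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def weighted_loss(token_logprobs: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted negative log-likelihood of the tokens that follow a call position."""
    if len(token_logprobs) != len(weights):
        raise ValueError("need one weight per token")
    return -sum(w * lp for w, lp in zip(weights, token_logprobs))


def keep_api_call(
    loss_with_result: float,
    loss_without_call: float,
    loss_call_without_result: float,
    threshold: float,
) -> bool:
    """Keep the call only if seeing its result lowers the loss by at least `threshold`."""
    best_without_result = min(loss_without_call, loss_call_without_result)
    return best_without_result - loss_with_result >= threshold


if __name__ == "__main__":
    weights = [0.5, 0.3, 0.2]  # hypothetical weights, heavier on nearer tokens
    # Hypothetical log-probabilities the model assigns to the next three tokens.
    with_result = weighted_loss([-0.2, -0.4, -0.5], weights)
    without_call = weighted_loss([-2.0, -1.5, -1.0], weights)
    call_only = weighted_loss([-1.8, -1.6, -1.0], weights)
    print(keep_api_call(with_result, without_call, call_only, threshold=1.0))
```

Comparing against the call without its result guards against keeping calls where merely inserting the call text, rather than the tool's answer, is what reduces the loss.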

Experiments and Results

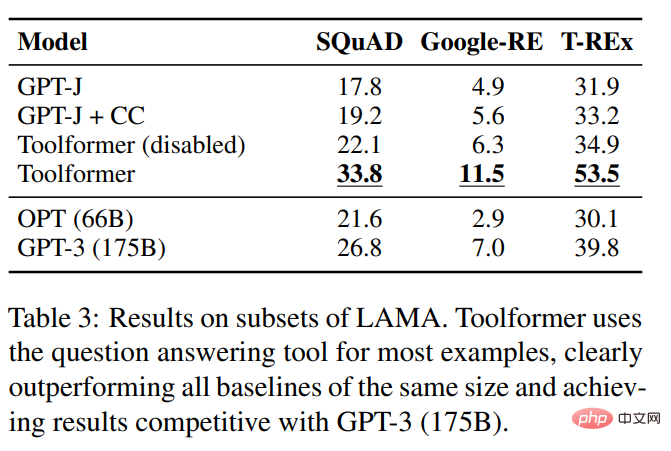

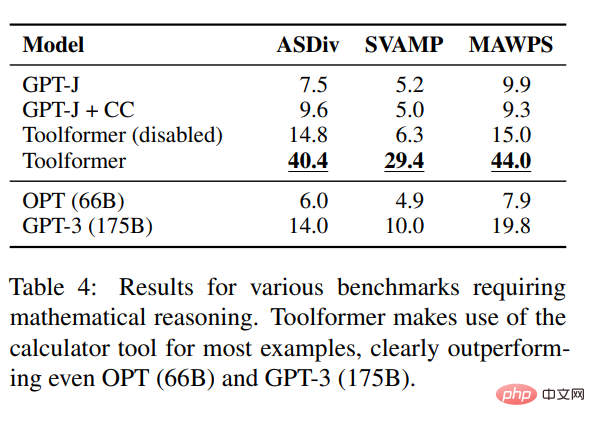

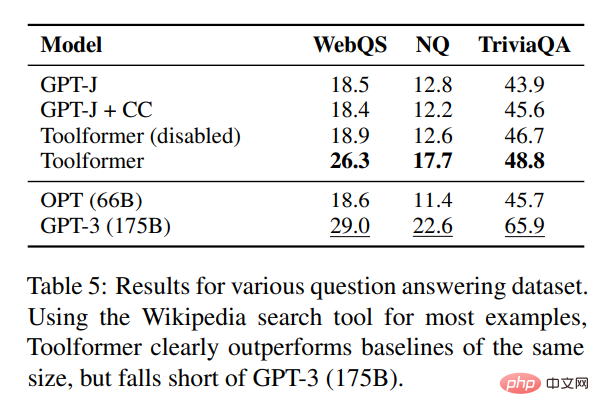

The study ran experiments on a variety of downstream tasks. The results show that Toolformer, which is based on a pre-trained GPT-J model with 6.7B parameters and has learned to use various APIs and tools, significantly outperforms the much larger GPT-3 model and several other baselines on various tasks.

The study evaluated several models on the SQuAD, Google-RE and T-REx subsets of the LAMA benchmark. The experimental results are shown in Table 3.

To test the mathematical reasoning capabilities of Toolformer, the study conducted experiments on the ASDiv, SVAMP, and MAWPS benchmarks. Toolformer calls the calculator tool in most cases and performs significantly better than OPT (66B) and GPT-3 (175B).

For question answering, the study conducted experiments on three datasets: Web Questions, Natural Questions, and TriviaQA. Toolformer significantly outperforms baseline models of the same size, but is inferior to GPT-3 (175B).
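To make the calculator case concrete, here is a minimal sketch of how a calculator call embedded in generated text might be executed and its result written back. The `<API> Calculator(...) </API>` syntax, the regular expression, the rounding to two decimals, and all function names are assumptions for illustration; the article does not describe the actual implementation.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# Matches calls written as "<API> Calculator(expr) </API>" (assumed syntax).
# For simplicity the expression itself may not contain a closing parenthesis.
_CALL_PATTERN = re.compile(r"<API>\s*Calculator\((?P<expr>[^)]*)\)\s*</API>")


def evaluate(expression: str) -> float:
    """Evaluate +, -, *, / arithmetic by walking the syntax tree, without eval()."""

    def _eval(node: ast.AST) -> float:
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return float(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval").body)


def fill_calculator_results(text: str) -> str:
    """Add '-> result' inside every calculator call found in `text`."""

    def _replace(match: re.Match) -> str:
        expr = match.group("expr").strip()
        result = round(evaluate(expr), 2)
        return f"<API> Calculator({expr}) -> {result} </API>"

    return _CALL_PATTERN.sub(_replace, text)


if __name__ == "__main__":
    print(fill_calculator_results("400 (or <API> Calculator(400 / 1400) </API> 29%)"))
```

Walking the parsed syntax tree instead of calling `eval` keeps the tool restricted to plain arithmetic, which matters when the expression is produced by a model.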

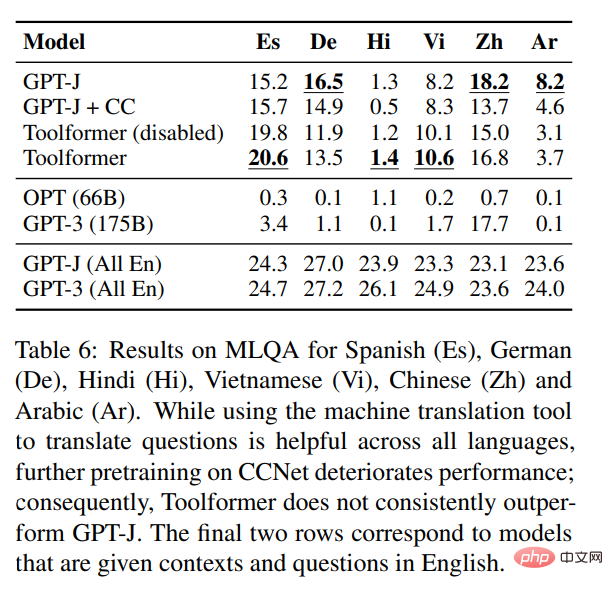

For cross-lingual tasks, the study compared Toolformer with all baseline models on MLQA. The results are shown in Table 6:

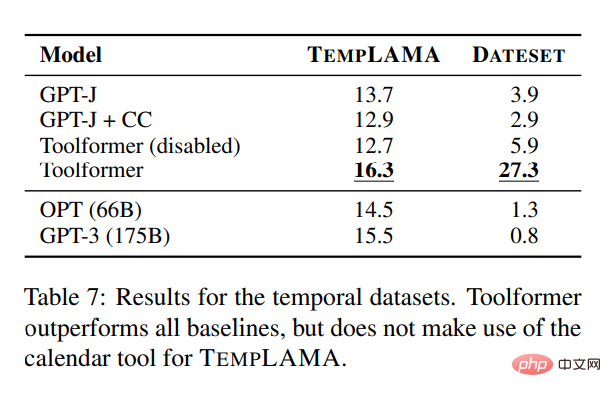

To study the usefulness of the calendar API, the study evaluated several models on TEMPLAMA and on a new dataset called DATESET. Toolformer outperforms all baselines, but it does not rely on the calendar tool for TEMPLAMA.

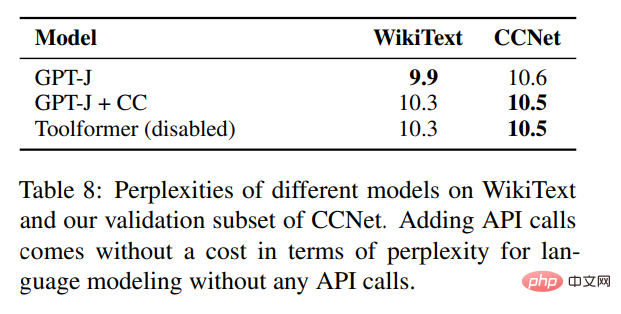

In addition to validating performance improvements on various downstream tasks, the study also wants to ensure that Toolformer's language modeling performance is not degraded by fine-tuning on API calls. To this end, it evaluates the models on two language modeling datasets; the resulting perplexities are shown in Table 8 below.

When API calls are disabled at inference time, training on data augmented with API calls comes at no cost to language modeling performance.
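For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. The sketch below computes it from per-token log-probabilities; the function name is hypothetical and no values from the paper are used.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from the natural-log probability assigned to each token."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)
```

A model that assigns probability 0.25 to every token has a perplexity of 4, which is one way to read the metric: the model is as uncertain as if it were choosing uniformly among four options.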

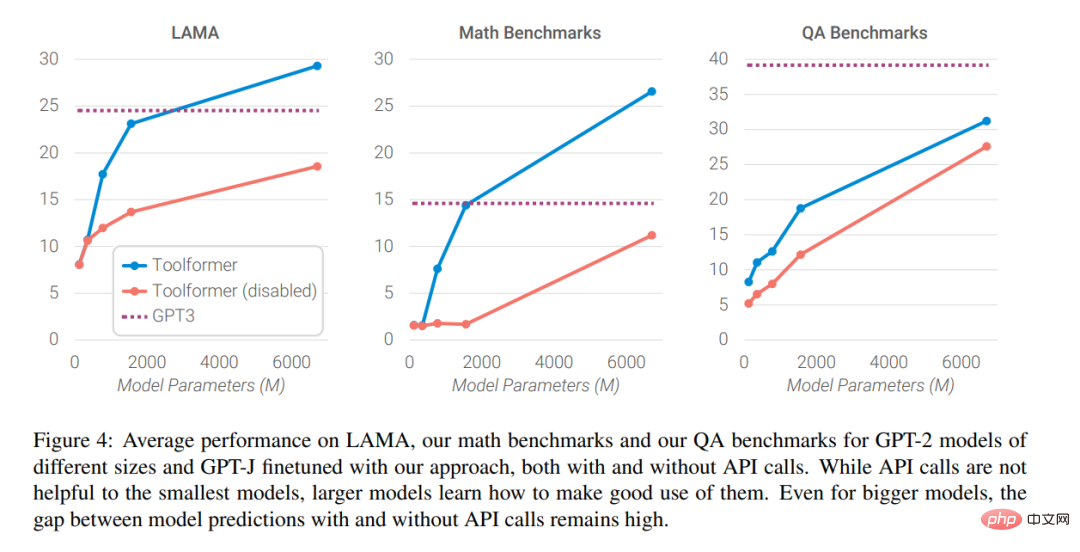

Finally, the researchers analyzed how the ability to ask external tools for help affects model performance as the size of the language model increases. The results of this analysis are shown in Figure 4.

Interested readers can read the original paper for more details of the study.
