This talk covers ChatGPT technology, attempts to localize it, and open source models. It has three parts. The first part gives an overview of ChatGPT-related technology: how the models evolved, the problems with earlier models, the three stages of ChatGPT training, data organization, and evaluation of results. The second part shares our attempts to localize ChatGPT technology, including the problems we encountered in our experiments, our thinking, and the model's results and applications. The third part introduces the Chinese open source large model we have released: how to train a local model on your own data, the problems you may encounter along the way, the gap between it and more advanced models, and how to further improve the model's results.

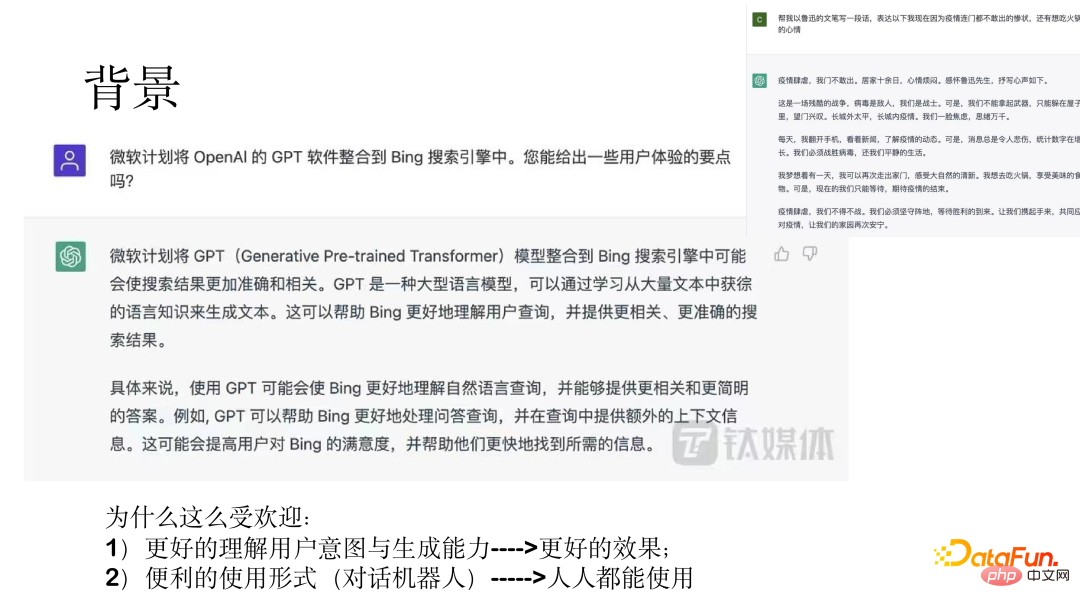

ChatGPT is a general-purpose functional assistant. On December 5, 2022, OpenAI CEO Sam Altman posted on social media that ChatGPT had passed 1 million users five days after its launch. The explosive popularity of this AI chatbot has become a landmark event. Microsoft is in talks to invest a further $10 billion to increase its stake and plans to integrate ChatGPT into the Microsoft cloud soon.

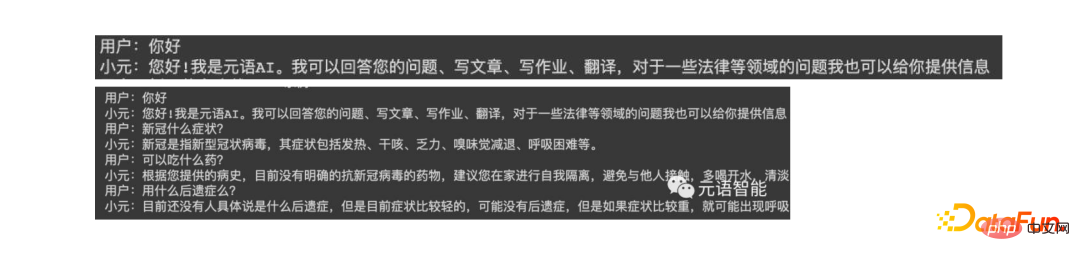

The picture above shows two examples with impressive results.

ChatGPT is popular for two reasons: on the one hand, it understands user intent and generates better results; on the other hand, its chatbot form makes it usable by everyone.

The following sections introduce the evolution of the model, the problems with the initial models, the three stages of ChatGPT model learning, and the data organization and results of training the ChatGPT model.

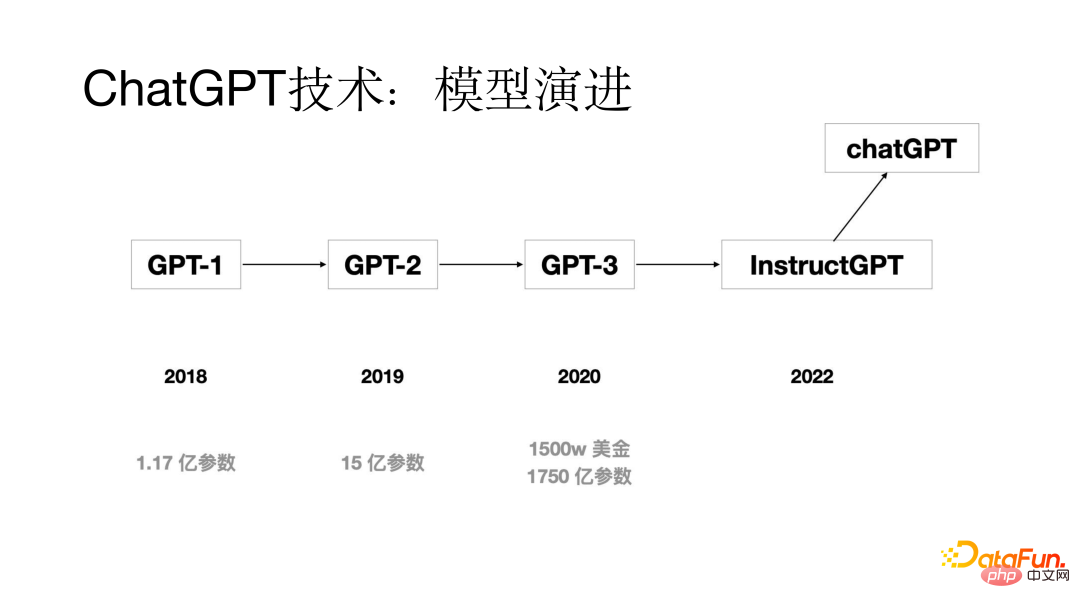

ChatGPT technology evolved through several generations of models. The initial GPT model, proposed in 2018, had only 117 million parameters; GPT-2, in 2019, had 1.5 billion; GPT-3, in 2020, reached 175 billion. After several generations of iteration, the ChatGPT model appeared in 2022.

What were the problems with the models that came before ChatGPT? Analysis shows that one of the most obvious is the alignment problem: although large models generate text well, their answers sometimes do not match the user's intent. The main cause is that a language model is trained to predict the next word, not to respond according to the user's intent. To address the alignment problem, Reinforcement Learning from Human Feedback (RLHF) was added to the training process of the ChatGPT model.
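
To make the mismatch concrete, here is a minimal sketch of the next-word prediction objective, under the usual assumption that the loss is the average negative log-probability the model assigns to each actual next token. The function name and the toy probabilities are illustrative and do not come from the talk.

```python
import math
from typing import Sequence


def next_token_loss(true_token_probs: Sequence[float]) -> float:
    """Average negative log-likelihood of the actual next tokens.

    true_token_probs[t] is the probability the model assigned to the token
    that really came next at position t (toy numbers, not real model output).
    """
    if not true_token_probs:
        raise ValueError("need at least one token probability")
    return -sum(math.log(p) for p in true_token_probs) / len(true_token_probs)


# The loss only measures how well the text is predicted. Nothing in it
# asks whether the continuation is what the user actually wanted.
confident = next_token_loss([0.9, 0.8, 0.95])
uncertain = next_token_loss([0.2, 0.1, 0.3])
print(f"confident: {confident:.3f}, uncertain: {uncertain:.3f}")
```

A model can drive this loss down and still produce a fluent answer that ignores the instruction, which is the gap RLHF is meant to close.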

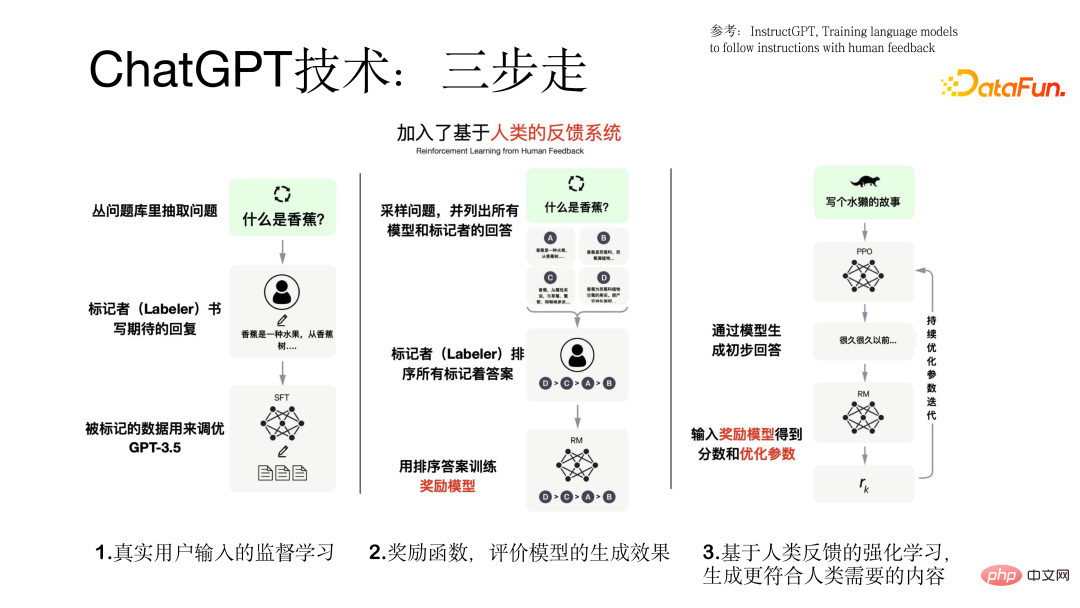

The ChatGPT model is trained in three steps.

The first step is supervised learning on top of the GPT model using real user input. The data in this step comes from real users, so it is of relatively high quality and very valuable.

The second step is to train a reward model. Different models produce different outputs for the same query, so annotators rank the outputs of all the models, and the ranked data is used to train the reward model.
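
One common way to turn such rankings into a training signal is a pairwise loss: for every pair of responses, the reward model is penalized when it scores the lower-ranked response above the higher-ranked one. The sketch below assumes that formulation (the talk does not spell out the loss), and the scores are made-up numbers standing in for reward model outputs.

```python
import math
from itertools import combinations
from typing import Sequence


def pairwise_ranking_loss(scores_best_to_worst: Sequence[float]) -> float:
    """Mean of -log(sigmoid(better - worse)) over all ranked pairs.

    scores_best_to_worst holds the reward model's scores for the responses
    to one prompt, listed in the order the annotator ranked them.
    """
    pairs = list(combinations(scores_best_to_worst, 2))
    if not pairs:
        raise ValueError("need at least two ranked responses")
    # -log(sigmoid(d)) equals log(1 + exp(-d)), with d = better - worse.
    losses = [math.log1p(math.exp(worse - better)) for better, worse in pairs]
    return sum(losses) / len(losses)


# Scores that agree with the annotator's ranking give a small loss,
# scores that contradict it give a large one.
agrees = pairwise_ranking_loss([2.0, 0.5, -1.0])
disagrees = pairwise_ranking_loss([-1.0, 0.5, 2.0])
print(f"agrees: {agrees:.3f}, disagrees: {disagrees:.3f}")
```

Using every pair from one ranking gets several comparisons out of a single annotation, which is one reason ranking data is efficient to collect.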

The third step is to feed the preliminary answer generated by the model into the reward model, which evaluates it. If the generated answer matches the user's intent, the reward model gives positive feedback; otherwise it gives negative feedback, so the model keeps improving. This is the purpose of introducing reinforcement learning: to bring the generated results more in line with human needs. The three-step training process of the ChatGPT model is shown in the figure below.
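
The feedback loop in the third step can be illustrated with a toy example. This is a deliberately simplified sketch, not the algorithm used for ChatGPT: it assumes a "policy" that only chooses among three fixed candidate answers, uses hypothetical reward scores, and applies a plain exact policy gradient update, whereas production systems use more elaborate methods such as PPO.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert logits into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits: List[float], rewards: List[float],
                         lr: float = 0.5) -> List[float]:
    """One gradient ascent step on the expected reward.

    For a softmax policy, d E[r] / d logit_i = p_i * (r_i - E[r]).
    """
    probs = softmax(logits)
    expected = sum(p * r for p, r in zip(probs, rewards))
    return [z + lr * p * (r - expected)
            for z, p, r in zip(logits, probs, rewards)]


# Hypothetical reward model scores for three candidate answers:
# positive when the answer matches the user's intent, negative otherwise.
rewards = [1.0, -1.0, 0.2]
logits = [0.0, 0.0, 0.0]  # the policy starts out indifferent
for _ in range(200):
    logits = policy_gradient_step(logits, rewards)
probs = softmax(logits)
print([round(p, 3) for p in probs])
```

After the updates, almost all of the probability mass sits on the answer the reward model prefers, which is the behavior the positive and negative feedback is meant to produce.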

Before training the model, we need to prepare the data sets. This raises a data cold-start problem, which can be addressed in three ways:

(1) Collect the data sets produced by users of the old system.

(2) Have annotators label some similar prompts and outputs based on questions that real users have entered before.

(3) Have annotators think up some prompts themselves.

The data used to train the ChatGPT model consists of three data sets (77k real examples in total):

(1) Supervised learning data based on real user prompts, made up of user prompts and model responses; the data volume is 13k.

(2) The data set used to train the reward model, which consists of rankings of multiple responses to a single prompt; the data volume is 33k.

(3) The data set used for reinforcement learning against the reward model. It requires only user prompts; the data volume is 31k, and the quality requirements are high.
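
The three data sets differ in what each record contains. The sketch below shows one possible way to represent them; the class and field names are hypothetical, and only the sizes come from the text above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SupervisedExample:
    """Step 1: a user prompt paired with the response to imitate."""
    prompt: str
    response: str


@dataclass
class RankingExample:
    """Step 2: several responses to one prompt, ordered best to worst."""
    prompt: str
    responses_best_to_worst: List[str]


@dataclass
class RLPrompt:
    """Step 3: only the prompt; the reward model scores the generated answer."""
    prompt: str


# Sizes as quoted in the text (13k, 33k, 31k).
DATASET_SIZES = {"supervised": 13_000, "reward_model": 33_000, "reinforcement": 31_000}
total = sum(DATASET_SIZES.values())
print(total)  # 77000, matching the 77k total
```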

After the ChatGPT model was trained, it was evaluated fairly thoroughly, mainly on the following aspects:

(1) Whether the generated results match the user's intent.

(2) Whether the generated results satisfy the constraints the user specified.

(3) Whether the model performs well in the customer service domain.

The detailed experimental results of the comparison with the base GPT model are shown in the figure below.

The following introduces our localization of ChatGPT technology in terms of background and problems, solution approach, and results and practice.

We decided to pursue localization mainly for the following reasons:

(1) ChatGPT technology is advanced and works well on many tasks, but the service is not available in mainland China.

(2) It may not meet the needs of domestic enterprise customers, and it cannot provide localized technical support and services.

(3) It is priced in US dollars for its main markets, Europe and the United States. The price is relatively high, and most domestic users may not be able to afford it. In our tests each piece of data cost about 0.5 yuan, which makes commercial use infeasible for customers with large amounts of data.

For these three reasons, we attempted to localize ChatGPT technology.

In localizing ChatGPT technology, we adopted a step-by-step strategy.

First, we trained a Chinese pre-trained model with tens of billions of parameters. Second, we performed supervised task learning in prompt form on billion-scale task data. Then we made the model conversational, that is, able to interact with people through dialogue. Finally, we introduced RLHF, reinforcement learning with a reward model and user feedback.

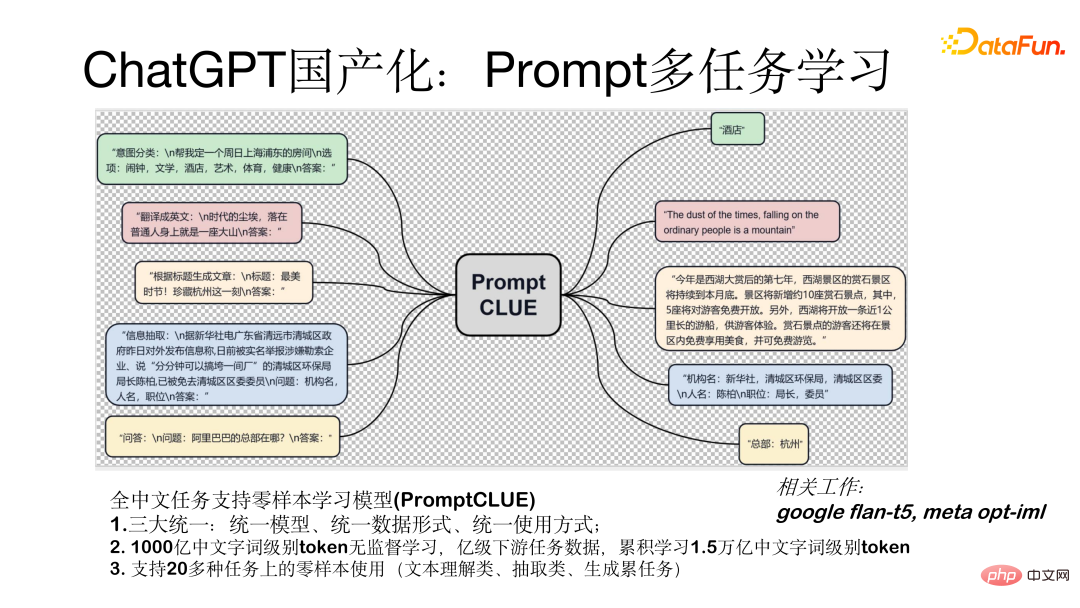

The prompt multi-task learning model (PromptCLUE) supports zero-shot learning across all Chinese tasks. It achieves three unifications: a unified model, a unified data format (every task is converted into prompt form), and a unified way of use (zero-shot). The model was pre-trained without supervision on 100 billion Chinese word-level tokens and then trained on billion-scale downstream task data, for a cumulative total of 1.5 trillion Chinese word-level tokens. It supports zero-shot use on more than 20 tasks, covering text understanding, extraction, and generation.
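
The idea of a unified data format can be sketched as follows: each task instance is rendered as one text prompt with one text target, so a single text-to-text model can be trained on all of them. The templates and function names here are hypothetical and are not the actual PromptCLUE templates.

```python
from typing import List, Optional, Tuple


def to_prompt(task: str, text: str, options: Optional[List[str]] = None) -> str:
    """Render one task instance as a plain-text prompt (hypothetical templates)."""
    if task == "classification":
        if not options:
            raise ValueError("classification needs candidate labels")
        return (f"Classify the text.\nText: {text}\n"
                f"Options: {', '.join(options)}\nAnswer:")
    if task == "extraction":
        return f"Extract the named entities.\nText: {text}\nAnswer:"
    if task == "generation":
        return f"Write a summary.\nText: {text}\nAnswer:"
    raise ValueError(f"unknown task: {task}")


def to_example(task: str, text: str, target: str,
               options: Optional[List[str]] = None) -> Tuple[str, str]:
    """Every task becomes the same (input text, target text) pair."""
    return to_prompt(task, text, options), target


example = to_example("classification", "Great phone, fast delivery.",
                     "positive", ["positive", "negative"])
print(example[0])
print(example[1])
```

Because the task description is part of the input text, a new task can be tried zero-shot simply by writing a new prompt, without changing the model.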

To make the model conversational, that is, to turn it into a model for human-computer interaction, we did the following work:

First, to improve generation quality, we removed the text understanding and extraction tasks and strengthened learning on question answering, dialogue, and generation tasks. Second, once the model becomes a dialogue model, its output can be disturbed by the context. To address this, we added anti-interference data so that the model can ignore irrelevant context when necessary. Finally, we added a learning process based on real user feedback data so that the model better understands user intent. The figure below shows single-round and multi-round tests with the model.
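
The talk does not describe how the anti-interference data was built. One plausible construction, shown here purely as an assumption, is to place an unrelated dialogue in front of a question while keeping the target answer unchanged, so the model learns that the earlier turns should not affect the reply. All names and the sample dialogues are hypothetical.

```python
import random
from typing import List, Tuple

Turn = Tuple[str, str]  # (user text, assistant reply)


def format_turns(turns: List[Turn], query: str) -> str:
    """Join earlier turns and the new question into one input string."""
    lines = [f"User: {user}\nXiaoyuan: {reply}" for user, reply in turns]
    lines.append(f"User: {query}\nXiaoyuan:")
    return "\n".join(lines)


def make_anti_interference_example(query: str, answer: str,
                                   unrelated_dialogues: List[List[Turn]],
                                   rng: random.Random) -> Tuple[str, str]:
    """Prefix an unrelated dialogue; the expected answer stays the same."""
    distractor = rng.choice(unrelated_dialogues)
    return format_turns(distractor, query), answer


unrelated = [
    [("What should I cook tonight?", "How about a simple noodle soup?")],
    [("Recommend a movie.", "You could try a classic comedy.")],
]
model_input, target = make_anti_interference_example(
    "What is the capital of France?", "Paris.", unrelated, random.Random(0))
print(model_input)
print(target)
```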

We tested the model and compared its current results with the ChatGPT model: there is still a gap of one to two years. This gap can be closed gradually, however. We have made some useful attempts and achieved some results, and the model can currently handle dialogue, question answering, writing, and other interactions. The image below shows the test results.

The Yuanyu functional dialogue model (ChatYuan) that we recently released has 770 million parameters; the online version has 10 billion parameters. It is available on multiple platforms, including Huggingface, ModelScope, Github, and paddlepaddle. The model can be downloaded locally and fine-tuned on your own user data set. It was obtained by further training PromptCLUE-large on hundreds of millions of functional and multi-round dialogue examples.

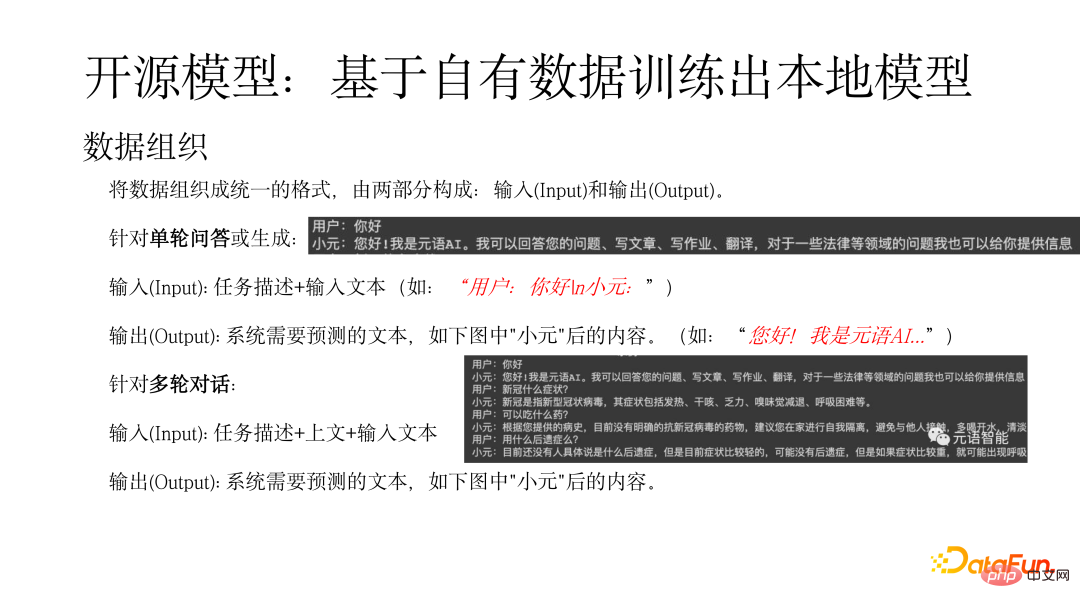

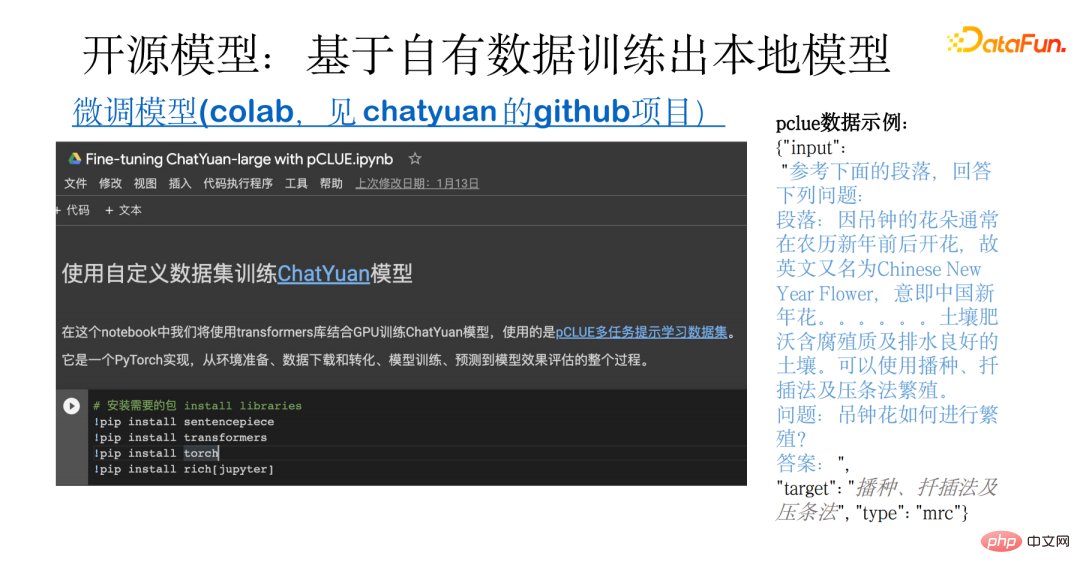

Taking the Huggingface platform as an example, here is how to use the model locally. Search for ChatYuan on the platform, load the model, and wrap it in a simple function. Some parameters matter in use, such as whether to sample: if you need varied outputs, enable sampling.

To train a local model on your own data, first organize the data into a unified form with two parts: input and output. For single-round question answering or generation, the input is the task description plus the input text (for example, "User: Hello\nXiaoyuan:"), and the output is the text the system should predict (for example, "Hello! I am Yuanyu AI..."). For multi-round dialogue, the input is the task description plus the preceding context plus the input text, and the output is the text the system should predict, which is the part after "Xiaoyuan" in the figure below.

The following figure shows an example of training a local model on your own data. It covers the whole process, from data preparation and downloading and converting open source data to model training, prediction, and evaluation. It is based on the pCLUE multi-task data set. You can train on your own data, or use pCLUE for an initial run to test the results.

ChatYuan and ChatGPT are both general-purpose functional dialogue models, capable of question answering, interaction, and generation in casual chat and in professional fields such as law and medicine. Compared with ChatGPT, there is still a certain gap in several respects.

When using the model you may run into problems with generation quality and text length. These depend on whether the data format is correct, whether sampling is enabled during generation, whether max_length is set appropriately to control the output length, and so on.
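
The input format, the sampling switch, and the output length setting mentioned above can be sketched as follows. This is an illustration under several assumptions: the checkpoint name `ClueAI/ChatYuan-large-v1` is assumed and should be replaced with the one you actually use, the model is assumed to be a sequence-to-sequence model loadable through the `transformers` Auto classes, the role labels follow the translated example in the text (the released Chinese model may expect the original-language labels), and the `top_p` and `temperature` values are arbitrary.

```python
from typing import Dict, List, Optional, Tuple

MODEL_ID = "ClueAI/ChatYuan-large-v1"  # assumed checkpoint name, adjust as needed


def build_input(query: str, history: Optional[List[Tuple[str, str]]] = None) -> str:
    """Single round: just the new turn. Multi-round: earlier turns come first."""
    turns = [f"User: {user}\nXiaoyuan: {reply}" for user, reply in (history or [])]
    turns.append(f"User: {query}\nXiaoyuan:")
    return "\n".join(turns)


def generation_kwargs(sample: bool = True, max_length: int = 512) -> Dict[str, object]:
    """Sampling gives varied outputs; max_length caps the output length."""
    kwargs: Dict[str, object] = {"max_length": max_length, "do_sample": sample}
    if sample:
        kwargs.update(top_p=0.9, temperature=0.7)  # illustrative values
    return kwargs


def answer(query: str, history: Optional[List[Tuple[str, str]]] = None,
           sample: bool = True, max_length: int = 512) -> str:
    """Download the model (on first call) and generate one reply."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_ID)
    # Some checkpoints expect newlines escaped as a literal backslash-n;
    # check the model card before relying on this formatting.
    text = build_input(query, history)
    inputs = tokenizer([text], truncation=True, max_length=768, return_tensors="pt")
    output_ids = model.generate(**inputs, **generation_kwargs(sample, max_length))
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


print(build_input("Hello"))
print(build_input("And tomorrow?", [("What is the weather today?", "Sunny.")]))
```

Calling `answer("Hello")` downloads the weights, so in practice the tokenizer and model would be loaded once and reused across calls. The same `build_input` strings, paired with the expected reply, can serve as the input and output fields of a fine-tuning record.
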

To further improve the model's results, you can start from the following aspects:

(1) Further train on industry data, including unsupervised pre-training, and use a large amount of high-quality data for supervised learning.

(2) Learn from real user feedback data, which can compensate for distribution differences.

(3) Introduce reinforcement learning to align with user intent.

(4) Choose a larger model. Generally speaking, the larger the model, the stronger its capabilities.

The new technology and usage scenarios brought by ChatGPT have shown people the huge potential of AI. More applications will be upgraded, and new applications will become possible. Yuanyu Intelligence, as a large-model Model-as-a-Service provider, is also continuing to explore this field. Interested partners are welcome to follow our website and official account. That's all for today's talk. Thank you.

