ChatGPT’s popularity continues to this day, and breaking news and technical interpretations surrounding it continue to emerge. Regarding its number of parameters, there is a common assumption that ChatGPT has the same number of parameters as the 175 billion parameter model introduced in the GPT-3 paper. However, people who work deeply in the field of large language models know that this is not true. By analyzing the memory bandwidth of the A100 GPU, we find that the actual inference speed of the ChatGPT API is much faster than the maximum theoretical inference speed of the 175 billion Dense equivalent model.

This article will use proof by contradiction to prove and support the above argument, using only some theoretical knowledge learned in college. Also note that there is also the opposite problem, which is that some people claim that ChatGPT only has X billion parameters (X is much lower than 1750). However, these claims cannot be verified because the people who make them usually speak from hearsay.

The next step is the detailed argumentation process.

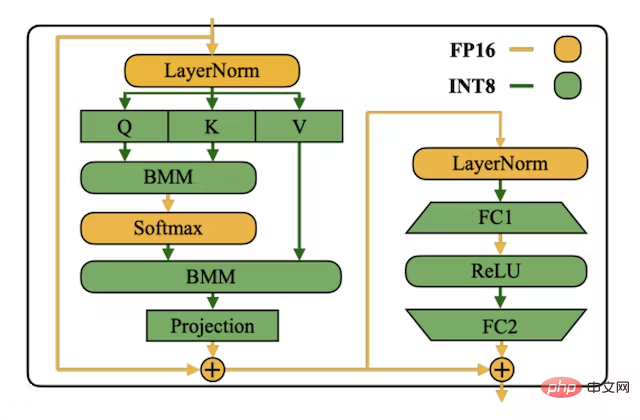

Assume that the ChatGPT model has 175 billion parameters. INT8 format is usually used to store LLM weights for lower latency inference and higher throughput. size and lower memory requirements (twice less memory than storing in float16 format). Each INT8 parameter requires 1 byte for storage. A simple calculation shows that the model requires 175GB of storage space.

The picture is from the INT8 SmoothQuant paper, address: https://arxiv.org/abs/2211.10438

In terms of inference, the GPT-style language model is "autoregressive" on each forward pass, predicting the next most likely token (for something like ChatGPT's RLHF model, which predicts the next token that its human annotators prefer). This means 200 tokens are generated, so 200 forward passes need to be performed. For each forward pass, we need to load all the weights of the model from high-bandwidth (HBM) memory into the matrix computing unit (the tensor computing core of the GPU), which means that we need to load 175GB of weights for each forward pass. .

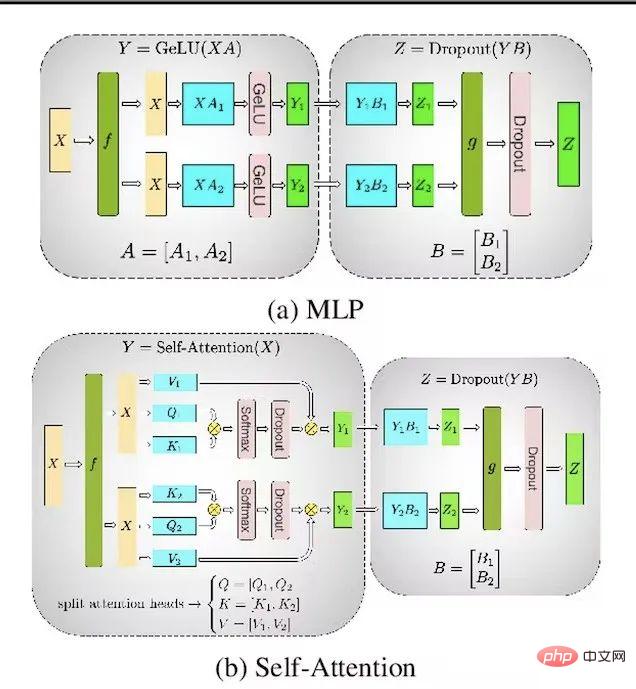

On the Microsoft Azure platform, the maximum number of A100s that can be allocated on a node is 8. This means that the maximum tensor parallelism per model instance is 8. So instead of loading 175GB of weights per forward pass, you only need to load 21.87GB per GPU per forward pass because tensor parallelism can parallelize the weights and computation on all GPUs.

The picture is from the Megatron-LM paper, address: https://arxiv.org/abs/1909.08053

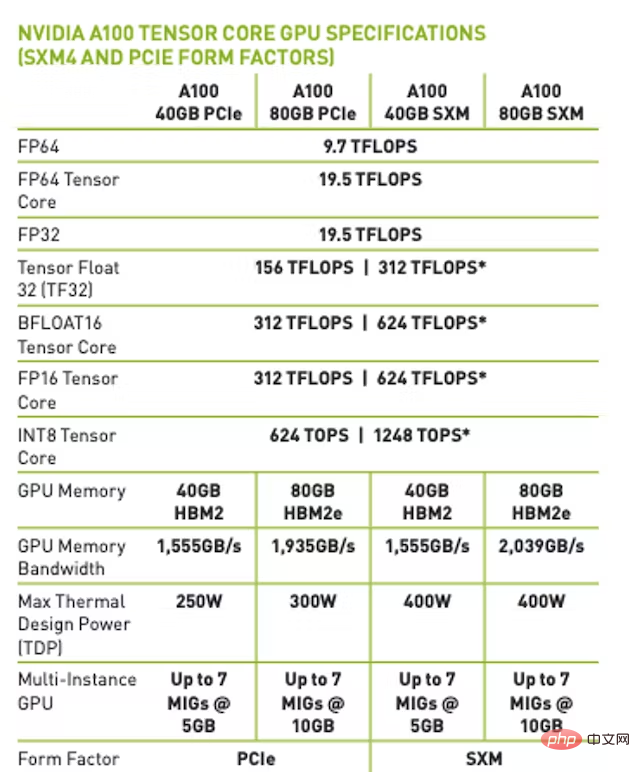

On the A100 80GB SXM version, the maximum memory bandwidth is 2TB/s. This means that with batchsize=1 (limited by memory bandwidth), the maximum theoretical forward pass speed will be 91 times/second. Also, most of the time is spent loading the weights rather than computing matrix multiplications.

Note: For fp16/bfloat16, maximum theoretical forward pass when limited by memory bandwidth The speed reaches 45.5 times/second.

#What is the actual latency of ChatGPT?

Run a script written in Python at night (night running is less expensive) to test the latency of using ChatGPT through the OpenAI API. The maximum empirical speed achieved by the forward pass is 101 times / Second. This paper uses the maximum empirical results of the experiments due to the need to obtain the lowest overhead from OpenAI's backend and dynamic batching system.

Conclusion

According to the previous assumptions and arguments, we can find that there are contradictions, because based on empirical results Much faster than the maximum theoretical result based on the memory bandwidth of the A100 platform. It can therefore be concluded that the ChatGPT model used by OpenAI for inference is definitely not equivalent to a dense model of 175 billion parameters.

#1. Why predict the parameters of the ChatGPT inference model instead of the parameters of the training model?

#Use the memory bandwidth method to estimate the number of model parameters, which only applies to inference models. We don’t know for sure whether OpenAI applies techniques like distillation to make its inference model smaller than its training model.

Many insects have a larval form that is optimized for extracting energy and nutrients from the environment, and a completely different adult form that has very different requirements for travel and reproduction optimization. ——From Geoffrey Hinton, Oriol Vinyals, Jeff Dean, 2015.

2. Are there any other assumptions?

The proof actually includes 3 assumptions:

#3. What does Dense Equivalent mean?

# Over the past few years, researchers have conducted research on sparse hybrid expert LLMs such as Switch Transformer. Dense equivalent indicates how many parameters are used in each forward pass. Using the methods described in this article, there is no way to prove that ChatGPT is not a 175 billion parameter sparse MoE model.

#4. Have you considered KV cache Transformer inference optimization?

Even if KV cache optimization is used, each forward pass still needs to load the entire model. KV cache only saves on FLOPs, but does not reduce memory bandwidth consumption (in fact It increases because the KV cache needs to be loaded on every forward pass).

5. Have you considered Flash Attention?

Although Flash Attention performs better in terms of memory bandwidth efficiency and real-time speed, each forward pass still requires loading the entire model, so the previous argument still holds.

#6. Have you considered pipeline parallelism/more fine-grained parallel strategies?

Utilizing pipeline parallelism results in the same maximum number of forward passes. However, by using micro-batches and larger batch sizes, throughput (total tokens/second) can be increased.

#7. Have you considered increasing tensor parallelism to more than 8?

The A100 platform supports 16 A100s per node, but Azure does not support this feature. Only Google Cloud supports this feature, but almost no one uses it. It's unlikely that Azure will custom-make a node with 16 A100s for OpenAI and not release it as a public GA version to spread the cost of designing or maintaining new nodes. Regarding tensor parallelism between nodes, that's just a possibility, but it's a less cost-effective way to do inference on the A100. Even NVIDIA does not recommend parallel processing of tensors between nodes.

8. Have you considered using INT4 to store weights?

Although using INT4 has been proven effective, OpenAI's GPU Kernel Compiler does not support INT4 loads, stores, or matrix multiplications, and there are no plans to add INT to their technology roadmap. Pictured. Since there is no support for INT4 loads or stores, you can't even store the weights as INT4 and then quantize them back to a high-precision format (like INT8, bfloat16, etc.).

The above is the detailed content of ChatGPT model parameters ≠ 175 billion, someone proved it by contradiction.. For more information, please follow other related articles on the PHP Chinese website!