Interpretation of hot topics: The emergent ability of large models and the paradigm shift triggered by ChatGPT

Recently, there has been great interest in the powerful abilities demonstrated by large language models (such as chain-of-thought [2] and scratchpads [3]), and a large body of work has explored them. We collectively refer to these as the emergent abilities of large models [4]. These abilities may exist only in large models and not in smaller ones [5], hence the name “emergent”. Many of them are very impressive, such as complex reasoning, knowledge reasoning, and out-of-distribution robustness, which we will discuss in detail later.

Notably, these abilities are close to what the NLP community has sought for decades, and thus represent a potential research paradigm shift: from fine-tuning small models to in-context learning with large models. For first movers, the paradigm shift may seem obvious. However, for the sake of scientific rigor, we do need very clear reasons to move to large language models, since these models are expensive [6], difficult to use [7], and may deliver only average performance [8]. In this article, we take a closer look at what these abilities are, what large language models can offer, and what their potential advantages are across a wider range of NLP/ML tasks.

Original link: yaofu.notion.site/A-Closer-Look-at-Large-Language-Models-Emergent-Abilities-493876b55df5479d80686f68a1abd72f

Prerequisites: we assume the reader is familiar with the following:

- Pre-training, fine-tuning, and prompting (knowledge any NLP/deep learning practitioner should have)

- Chain-of-thought prompting and scratchpads (practitioners may be less familiar with these, but this will not affect your reading)

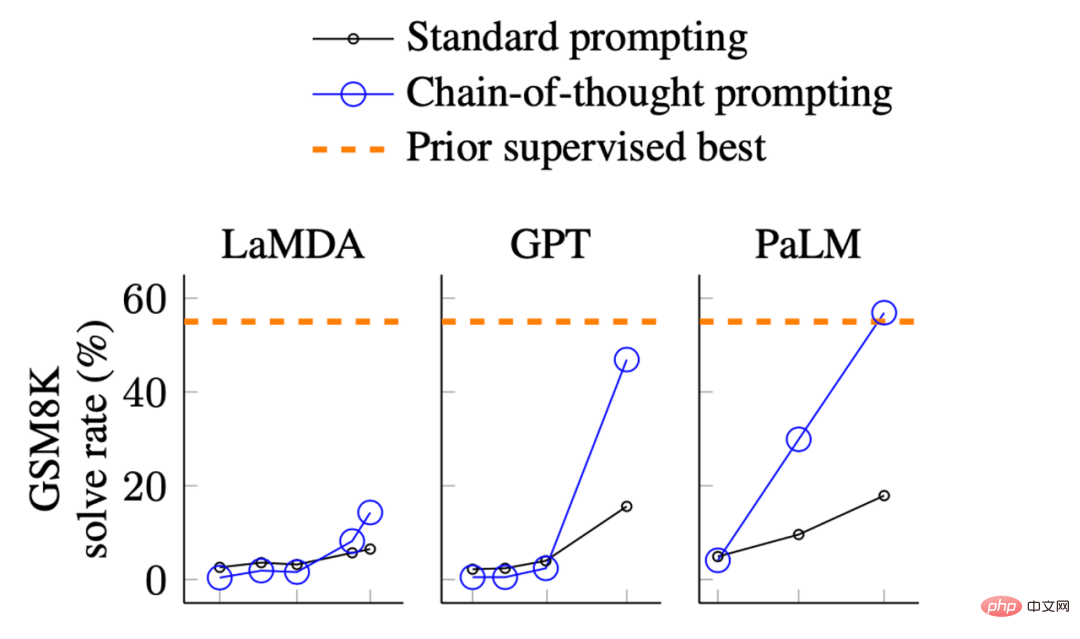

Image from Wei et al. 2022, Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. The x-axis is model size. GSM8K is a collection of grade-school math word problems.

In the figure above, we can observe how model performance changes with scale:

- When the model is relatively small, the improvement is small

- When the model becomes larger, performance improves significantly

This essentially shows that some abilities may not exist in small models but are acquired by large ones.

There are many kinds of emergent abilities, such as those compiled by Wei et al. in 2022 [9]. Some are interesting but will not be discussed in this article, such as concatenating the last letters of a string of words, which we think is a task for Python rather than a language model, or 3-digit addition, which we think is a job for a calculator rather than a language model.

In this article, we are mainly interested in the following capabilities:

1. Abilities that the NLP community has pursued in recent years but that earlier NLP models struggled to achieve

2. Abilities that stem from the deepest essence of human language (the depth of abilities)

3. Abilities that may reach the highest level of human intelligence (the upper limit of abilities)

2. Three typical examples of emergent abilities

Many interesting abilities fall into the categories mentioned above. Among them, we mainly discuss the following three typical abilities:

- Complex reasoning

- Knowledge reasoning

- Out-of-distribution robustness

Let us discuss each in detail next.

Complex Reasoning

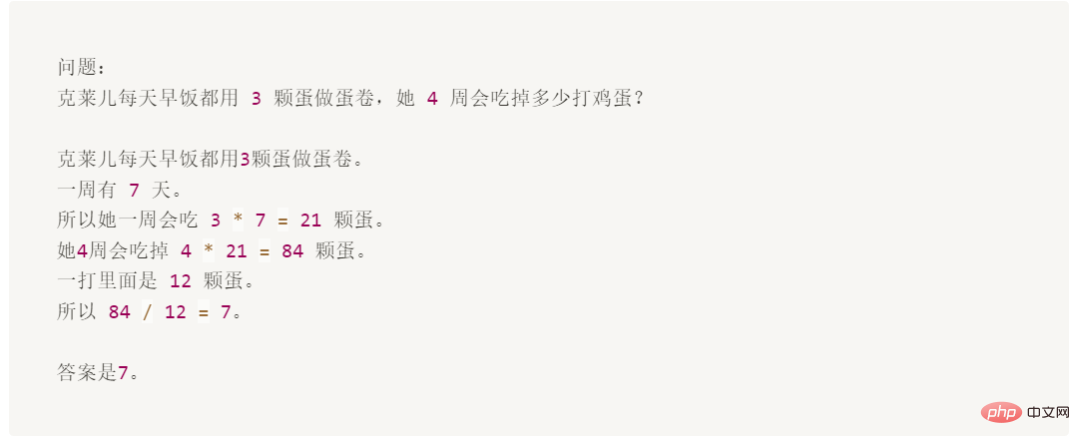

The following is an example from the GSM8K dataset, on which prompting significantly outperforms fine-tuning:

While this question is easy for a 10-year-old, it is difficult for a language model, mainly due to the mix of math and language.

GSM8K was originally proposed by OpenAI in October 2021 [10]. At that time, they fine-tuned the first version of GPT-3 [11] on the entire training set and reached an accuracy of about 35%. This result made the authors quite pessimistic, because it followed the scaling law of language models: as model size increases exponentially, performance increases only linearly (discussed later). Therefore, they speculated in Section 4.1:

"The 175B model appears to require at least an additional two orders of magnitude of training data to achieve an 80% solution rate."
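Under the log-linear relationship described above, each tenfold increase in scale buys a roughly constant gain in accuracy, so the extra scale needed to reach a target accuracy follows from simple arithmetic. The sketch below assumes accuracy = a + b · log10(scale); the gain of 22.5 points per 10x in the usage lines is a made-up number chosen only to keep the arithmetic easy to follow, not a value fitted by the GSM8K authors.

```python
import math


def orders_of_magnitude_needed(current_acc: float, target_acc: float,
                               gain_per_10x: float) -> float:
    """Return how many powers of ten of extra scale are needed.

    Assumes a log-linear law: accuracy = a + b * log10(scale), where
    b = gain_per_10x is a hypothetical accuracy gain per tenfold increase.
    """
    if gain_per_10x <= 0:
        raise ValueError("gain_per_10x must be positive")
    return (target_acc - current_acc) / gain_per_10x


def predicted_accuracy(base_acc: float, scale_factor: float,
                       gain_per_10x: float) -> float:
    """Accuracy after multiplying scale by scale_factor under the same law."""
    return base_acc + gain_per_10x * math.log10(scale_factor)


# Hypothetical numbers: 35% today, 80% target, +22.5 points per 10x.
print(round(orders_of_magnitude_needed(0.35, 0.80, 0.225), 3))  # 2.0
print(round(predicted_accuracy(0.35, 100.0, 0.225), 3))         # 0.8
```

The point of the exercise is the shape of the curve: under a log-linear law, closing a 45-point gap costs a 100x increase in scale, which is why the result looked so pessimistic.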

Three months later, in January 2022, Wei et al. [12] used only 8 chain-of-thought prompt examples with the 540B PaLM model to raise the accuracy to 56.6%, without increasing the training set by two orders of magnitude. Later, in March 2022, Wang et al. [13] raised the accuracy to 74.4% with the same 540B PaLM model through majority voting. The current SOTA comes from my own work at AI2 (Fu et al., Nov 2022 [14]), where we achieved 82.9% accuracy on 175B Codex by using complex chains of thought. As this progress shows, the technology is indeed advancing exponentially.
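The majority-voting method used by Wang et al. (known as self-consistency) can be sketched in a few lines: sample several reasoning chains for the same question, extract each chain's final answer, and return the most frequent one. This is a minimal sketch, not the paper's exact procedure; the `sample_chain` callable stands in for a model call, and the answer-extraction rule (take the last number in the text) is an assumption for illustration.

```python
import re
from collections import Counter
from typing import Callable, Optional


def extract_answer(chain: str) -> Optional[str]:
    """Assumed convention: the final answer is the last number in the chain."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", chain)
    return numbers[-1] if numbers else None


def majority_vote(question: str, sample_chain: Callable[[str], str],
                  num_samples: int = 5) -> Optional[str]:
    """Sample several reasoning chains and return the most common answer."""
    answers = []
    for _ in range(num_samples):
        answer = extract_answer(sample_chain(question))
        if answer is not None:
            answers.append(answer)
    if not answers:
        return None
    # most_common(1) returns [(answer, count)]; ties keep first-seen order.
    return Counter(answers).most_common(1)[0][0]


# Usage with a fake sampler that replays canned chains instead of a model.
canned = iter([
    "5 + 3 = 8, so the answer is 8",
    "5 * 3 = 15, so the answer is 15",
    "She has 5 then gets 3 more: 8",
])
print(majority_vote("toy question", lambda q: next(canned), num_samples=3))  # 8
```

Voting helps because a single sampled chain can go wrong at any step, while independent chains that reach the same answer by different routes are more likely to be correct.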

Chain-of-thought prompting is a typical example of an ability that emerges as models scale:

- In terms of emergence: chain-of-thought prompting outperforms answer-only prompting only when the model is larger than 100B, so this ability exists only in large models.

- In terms of performance: chain-of-thought prompting performs significantly better than the previous fine-tuning methods [15].

- In terms of annotation efficiency: chain-of-thought prompting requires only 8 annotated examples, while fine-tuning requires a complete training set.
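Because chain-of-thought prompting needs only a handful of annotated examples, the "annotation" amounts to writing exemplars with step-by-step rationales and placing them in front of the test question. A minimal sketch follows; the Q/A template and the hypothetical `Exemplar` type are assumptions for illustration rather than the exact format used by Wei et al., and the single exemplar stands in for the 8 used in the paper. The answer-only variant shows the baseline that chain-of-thought is compared against.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    question: str
    rationale: str  # the step-by-step reasoning (the "chain of thought")
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Concatenate annotated exemplars, then the unanswered test question."""
    parts = [
        f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}.\n"
        for ex in exemplars
    ]
    # The model is expected to continue after "A:" with its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n".join(parts)


def build_answer_only_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Same exemplars without rationales (standard few-shot prompting)."""
    parts = [f"Q: {ex.question}\nA: The answer is {ex.answer}.\n" for ex in exemplars]
    parts.append(f"Q: {question}\nA:")
    return "\n".join(parts)


demo = [Exemplar(
    question="Tom has 3 apples and buys 2 more. How many apples does he have?",
    rationale="Tom starts with 3 apples. 3 + 2 = 5.",
    answer="5",
)]
print(build_cot_prompt(demo, "A box holds 4 pens. How many pens are in 3 boxes?"))
```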

Some readers may think that a model that can do grade-school math means little (in a sense, it really is not that cool). But GSM8K is just the beginning: recent work has pushed the frontier to high school [16], university [17], and even International Mathematical Olympiad problems [18]. Is it cooler now?

Knowledge Reasoning

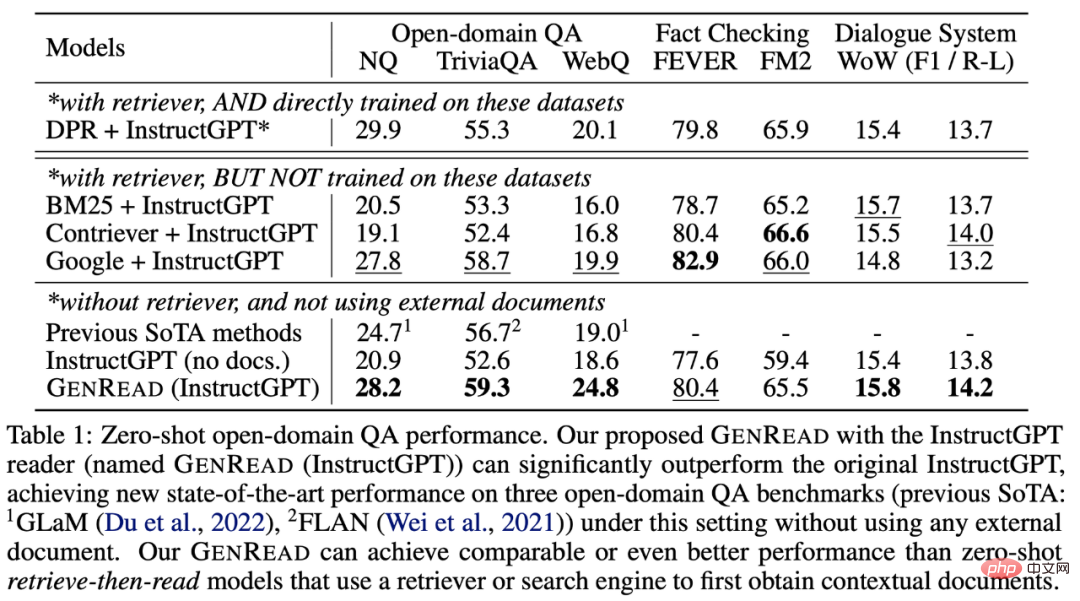

The next example is reasoning that requires knowledge (such as question answering and commonsense reasoning). In this case, prompting a large model does not necessarily outperform fine-tuning a small model (which is better remains to be seen). But annotation efficiency is amplified in this case, because:

- In many datasets, to obtain the required background/commonsense knowledge, the (previously small) models need to retrieve from an external corpus/knowledge graph [19], or need to be trained on augmented data [20] through multi-task learning

- With large language models, you can remove the retriever entirely [21] and rely only on the model's internal knowledge [22], without any fine-tuning

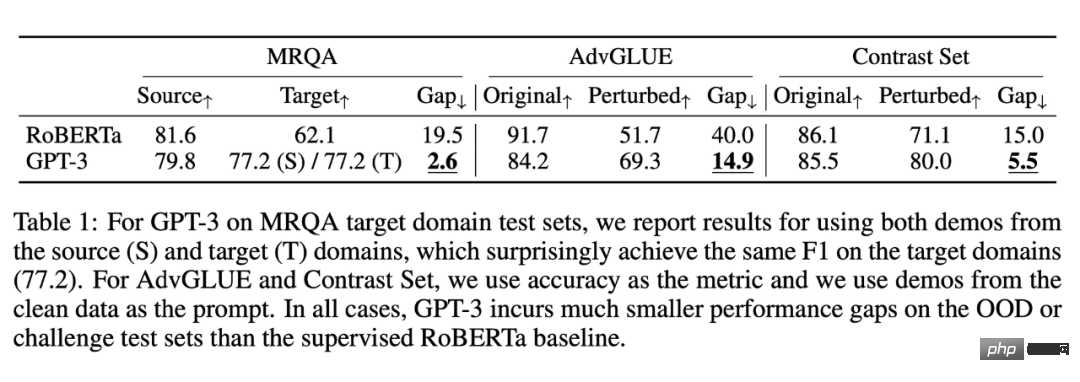

Image from Yu et al. 2022. Previous SOTA models needed to retrieve from external knowledge sources. GPT-3 performs as well as or better than previous models without retrieval.

As shown in the table, unlike the math problem example, GPT-3 does not significantly outperform the previous fine-tuned models. But it does not need to retrieve from external documents, because it contains the knowledge itself [23].

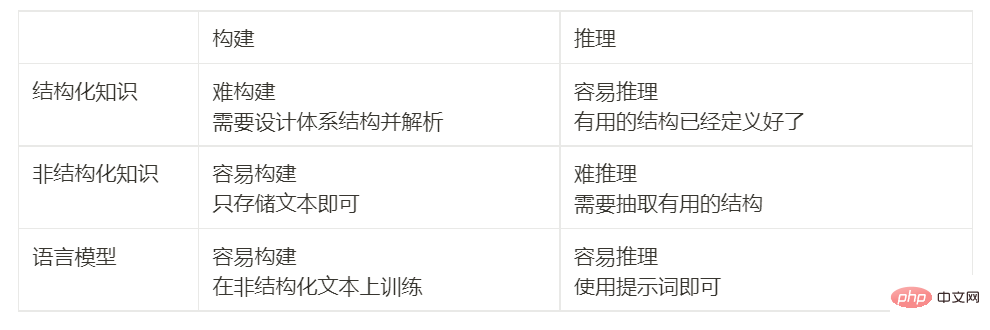

To understand the significance of these results, we can look back at history: from the very beginning, the NLP community has faced the challenge of how to encode knowledge effectively, and people have kept exploring ways to store knowledge outside or inside the model. Since the 1990s, people have tried to write down the rules of language and the world in a giant library, storing knowledge outside the model. But this is very difficult, since we cannot enumerate all the rules. Therefore, researchers began to build domain-specific knowledge bases that store knowledge as unstructured text, semi-structured data (such as Wikipedia), or fully structured data (such as knowledge graphs). In general, structured knowledge is hard to construct (because a schema for the knowledge has to be designed) but easy to reason over (because of that schema), while unstructured knowledge is easy to construct (just store it directly) but hard to reason over (there is no schema). Language models offer a new way: they easily extract knowledge from unstructured text and reason over it efficiently, without a predefined schema. The following table compares the advantages and disadvantages:

Out-of-distribution robustness

The third ability we discuss is out-of-distribution robustness. Between 2018 and 2022, there was a lot of research on distribution shift, adversarial robustness, and compositional generalization in NLP, CV, and general machine learning. It was found that when the test distribution differs from the training distribution, model performance may drop significantly. However, this does not seem to be the case for in-context learning with large language models. The 2022 study by Si et al. [24] shows:

Data from Si et al. 2022. Although GPT-3 is worse than RoBERTa in the in-distribution setting, it is better than RoBERTa in the out-of-distribution settings, and its performance drop is significantly smaller.

Similarly, in this experiment, prompted GPT-3 is worse than fine-tuned RoBERTa in the in-distribution setting. But it outperforms RoBERTa under the three other distributions (domain shift, noise, and adversarial perturbations), which means GPT-3 is more robust. In addition, the generalization benefit of good prompts persists even under distribution shift. For example:
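The comparison above comes down to how much accuracy each model loses when moving from the in-distribution test set to a shifted one. The sketch below computes the absolute and relative drop per shift; the function name is hypothetical and the accuracy values are placeholders, not numbers from Si et al.

```python
from typing import Dict


def robustness_report(in_dist_acc: float,
                      shifted_acc: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """For each shifted test set, report the absolute and relative accuracy drop."""
    report = {}
    for name, acc in shifted_acc.items():
        drop = in_dist_acc - acc
        report[name] = {
            "accuracy": acc,
            "absolute_drop": drop,
            # Relative drop is undefined when in-distribution accuracy is zero.
            "relative_drop": drop / in_dist_acc if in_dist_acc else float("nan"),
        }
    return report


# Placeholder accuracies for two hypothetical models (not real results).
fine_tuned = robustness_report(0.90, {"domain_shift": 0.70, "noise": 0.75})
prompted = robustness_report(0.85, {"domain_shift": 0.80, "noise": 0.82})
for shift in ("domain_shift", "noise"):
    print(shift,
          round(fine_tuned[shift]["absolute_drop"], 2),
          round(prompted[shift]["absolute_drop"], 2))
```

A model can lose on in-distribution accuracy and still win on every shifted set if its drop is small enough, which is the pattern the study reports.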

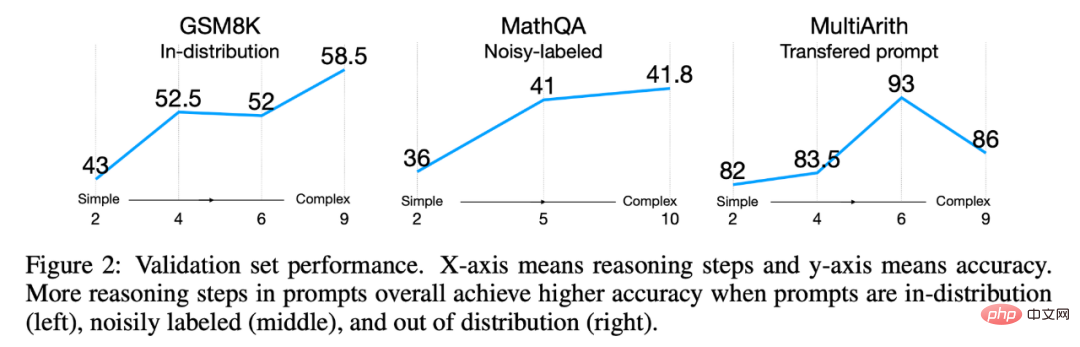

The picture comes from Fu et al. 2022. Even when the test distribution differs from the training distribution, complex prompts always perform better than simple prompts.

Fu et al.'s 2022 study [25] showed that the more complex the input prompts, the better the model performs. This trend also holds under distribution shift: complex prompts consistently outperformed simple prompts, whether the test distribution differed from the original distribution, came from a noisy distribution, or was transferred from another distribution.
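One way to act on the "more complex prompts work better" finding is to choose few-shot exemplars by the length of their reasoning. The sketch below approximates complexity as the number of non-empty lines in a rationale and keeps the k most complex exemplars; counting lines as steps is an assumption of this sketch, and the helper names are hypothetical rather than taken from Fu et al.'s code.

```python
from typing import List, Tuple


def count_steps(rationale: str) -> int:
    """Approximate reasoning complexity as the number of non-empty lines."""
    return sum(1 for line in rationale.splitlines() if line.strip())


def select_complex_exemplars(pool: List[Tuple[str, str]],
                             k: int) -> List[Tuple[str, str]]:
    """Pick the k (question, rationale) pairs with the most reasoning steps."""
    # sorted() is stable even with reverse=True, so equally complex
    # exemplars keep their original pool order.
    return sorted(pool, key=lambda ex: count_steps(ex[1]), reverse=True)[:k]


pool = [
    ("Q1", "3 + 2 = 5."),
    ("Q2", "Each box has 4 pens.\nThere are 3 boxes.\n4 * 3 = 12."),
    ("Q3", "Half of 10 is 5.\n5 + 1 = 6."),
]
print([q for q, _ in select_complex_exemplars(pool, k=2)])  # ['Q2', 'Q3']
```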

Summary so far

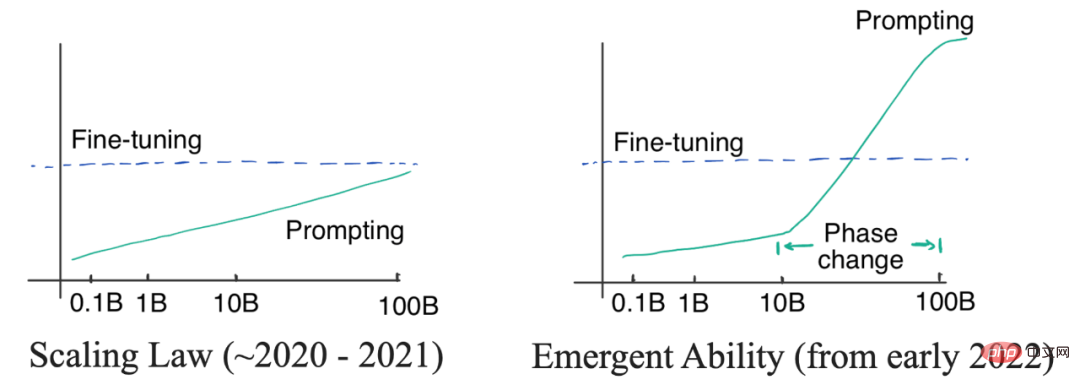

In the above, I discussed three emergent abilities that only large models have. They are:

- Complex reasoning: large models significantly outperform previous fine-tuned small models.

- Knowledge reasoning: large models are competitive with fine-tuned small models without needing external retrieval.

- Out-of-distribution robustness: large models suffer a smaller performance drop under distribution shift than fine-tuned small models.

3. Emergent abilities overturn the scaling law

In view of the advantages listed above, you may start to think that large language models are indeed very good. Before discussing further, let us look back at previous work, where we run into a very strange question: GPT-3 was released in 2020, so why did we not discover these abilities and start thinking about a paradigm shift until now?

The answer lies in two kinds of curves, the log-linear curve and the phase-transition curve:

- Left (scaling law): when model size grows exponentially, model performance grows linearly.

- Right (emergence): when model size reaches a certain scale, emergent abilities appear and performance increases dramatically.

Initially, (OpenAI) researchers believed that the relationship between language model performance and model size could be predicted by a log-linear curve: as model size increases exponentially, performance increases linearly. This phenomenon is known as the scaling law of language models, as discussed by Kaplan et al. in 2020 and in the original GPT-3 paper. Importantly, at that stage, even the largest GPT-3 with prompting could not outperform fine-tuned small models, so there was no reason to use expensive large models at the time (even though prompting was very annotation-efficient). Then, in 2021, Cobbe et al. [28] found that the scaling law also applies to fine-tuning. This is a somewhat pessimistic finding, because it means we may be locked in by model size: although architecture optimization may improve performance to some extent, the results remain locked within a range determined by model size, making a more significant breakthrough difficult.

Under the scaling-law regime (2020 to 2021), GPT-3 could not outperform fine-tuned T5-11B, and fine-tuning T5-11B was already very cumbersome, so the NLP community focused more on smaller models or parameter-efficient adaptation, such as prefix tuning [29] [30] in 2021. The logic at the time was simple: if fine-tuning works better, we should work more on parameter-efficient adaptation; if prompting works better, we should invest more in training large language models.

Then, in January 2022, the chain-of-thought work was released. As its authors show, chain-of-thought prompting exhibits a clear phase transition in the scaling curve. With chain-of-thought prompting, large models significantly outperform fine-tuning on complex reasoning, are competitive on knowledge reasoning, and show some potential for out-of-distribution robustness. Achieving this takes only about 8 examples, which is why the paradigm may shift. (Note: This article was completed a month before ChatGPT went online; after ChatGPT went online, the entire field was shocked and realized that the paradigm had shifted.)

4. What does paradigm shift mean?

The benefits of prompting are obvious: we no longer need tedious data annotation and fine-tuning on the full dataset. We only need to write prompts to obtain satisfactory results, which is much faster than fine-tuning.

Two other questions are worth noting:

- Is in-context learning supervised learning?

- Is in-context learning really better than supervised learning?

Let us review the logic mentioned above: if fine-tuning is better, we should work hard on parameter-efficient adaptation; if prompting is better, we should work on training better large language models. So, although we believe large language models have great potential, there is still no conclusive evidence as to whether fine-tuning or prompting is better, and therefore we cannot determine whether the paradigm really should shift, or to what extent. Carefully comparing the two paradigms would give us a clear picture of the future. We leave more discussion to the next article.

5. How big should the model be?

Two numbers: 62B and 175B.

The number 62B comes from Table 5 of Chung et al. 2022 [31]: for all models smaller than 62B, direct prompting works better than chain-of-thought prompting. The first model that does better with chain-of-thought is Flan-cont-PaLM 62B on BBH. With chain-of-thought, the 540B models do well on more tasks, but they do not beat fine-tuning on every task. In addition, the ideal size can be less than 540B: in the work of Suzgun et al. 2022 [32], the authors showed that chain-of-thought prompting works better than direct prompting on 175B InstructGPT and 175B Codex. Combining the above results, we get two numbers: 62B and 175B. So, to join this game, you first need a model larger than average.

However, other large models perform much worse with chain-of-thought, or cannot learn it at all, such as the first versions of OPT, BLOOM, and GPT-3. They are all about 175B in size. This brings us to our next question.

6. Is scale the only factor?

No. Size is a necessary but not sufficient factor. Some models are large enough (such as OPT and BLOOM, both about 175B) but cannot do chain-of-thought. Only two models [33] can do chain-of-thought.

It is still unclear why emergent abilities arise, but we have identified factors that may produce them:

- Instruction fine-tuning

- Code fine-tuning

- Chain-of-thought fine-tuning

However, all of these factors are speculative at this stage. Revealing how to train a model to produce emergent abilities is very meaningful, and we leave more discussion to the next article.

7. Conclusion

In this article, we carefully studied the emergent abilities of language models. We highlighted the importance of, and opportunities in, complex reasoning, knowledge reasoning, and out-of-distribution robustness. Emergent abilities are very exciting because they can transcend scaling laws and exhibit phase transitions in scaling curves. We discussed in detail whether the research paradigm will actually shift from fine-tuning to in-context learning, but we do not yet have a definite answer, because the effects of fine-tuning and in-context learning in in-distribution and out-of-distribution scenarios still need to be compared. Finally, we discussed three potential factors that produce emergent abilities: instruction fine-tuning, code fine-tuning, and chain-of-thought fine-tuning. Suggestions and discussions are very welcome.

In addition, we mentioned two interesting questions that have not yet been discussed:

- Is fine-tuning or in-context learning better?

- How should a model be trained to produce emergent abilities?

We will discuss these two questions in follow-up articles.

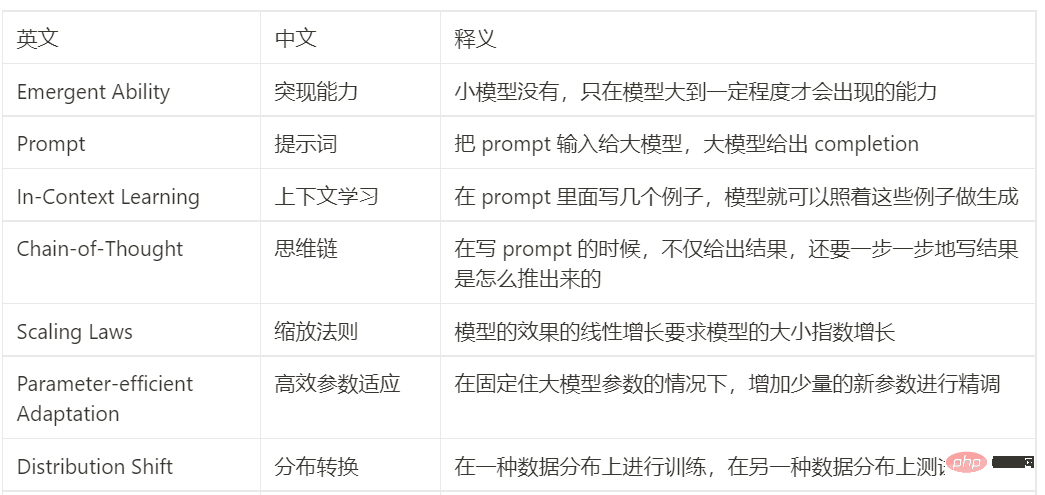

Chinese-English comparison table
