An in-depth exploration of the implementation of unsupervised pre-training technology and 'algorithm optimization + engineering innovation' of Huoshan Voice

Volcano Engine has long provided intelligent video subtitling based on speech recognition for popular video platforms. Put simply, the feature uses AI to automatically transcribe the speech and lyrics in a video into text to assist video creation. However, as the platforms' user base grew rapidly and demand rose for a richer and more diverse set of languages, the supervised learning approach used so far increasingly hit a bottleneck, which left the team in a real bind.

Traditional supervised learning relies heavily on manually annotated data, which is a problem both for the continued optimization of high-resource languages and for the cold start of low-resource ones. Take high-resource languages such as Mandarin Chinese and English: although the video platforms supply ample speech data for the business scenarios, once the supervised data reaches a certain scale the ROI of further annotation becomes very low. Engineers therefore have to consider how to make effective use of hundreds of thousands of hours of unlabeled data to further improve recognition for these languages.

For relatively niche languages and dialects, data labeling is expensive because of limits on resources and manpower. When labeled data is very scarce (on the order of 10 hours), supervised training performs very poorly and may even fail to converge. Purchased data, meanwhile, often does not match the target scenario and cannot meet business needs.

The Volcano Voice team therefore urgently needed to work out how to exploit large amounts of unlabeled data at the lowest possible labeling cost, improve recognition with only a small amount of labeled data, and put the result into production. Unsupervised pre-training thus became the key to extending the video platforms' ASR (Automatic Speech Recognition) capabilities to low-resource languages.

Although academia has made significant progress in unsupervised speech pre-training in recent years, including wav2vec 2.0 [1] and HuBERT [2], there are few production deployments in industry to draw on. Overall, the Volcano Voice team believes three main factors hinder the deployment of unsupervised pre-training:

- Large models and high inference cost. Making use of a large amount of unlabeled data requires pre-training a larger model to obtain high-quality speech representations, but deploying such a model online directly incurs high inference costs.

- Unsupervised pre-training only learns speech representations. To reach the desired accuracy it has to be decoded jointly with a language model trained on large amounts of plain text, which is incompatible with the end-to-end ASR inference engine.

- Unsupervised pre-training is expensive, slow, and unstable. Taking wav2vec 2.0 as an example, pre-training a 300M-parameter model for 600,000 steps on 64 V100 GPUs took as long as half a month. In addition, because of differences in data distribution, training on business data is prone to divergence.

To address these three pain points, the team carried out algorithm improvements and engineering optimization, forming a complete solution that is easy to roll out. This article describes the solution in detail in terms of the implementation process, algorithm optimization, and engineering optimization.

Implementation process

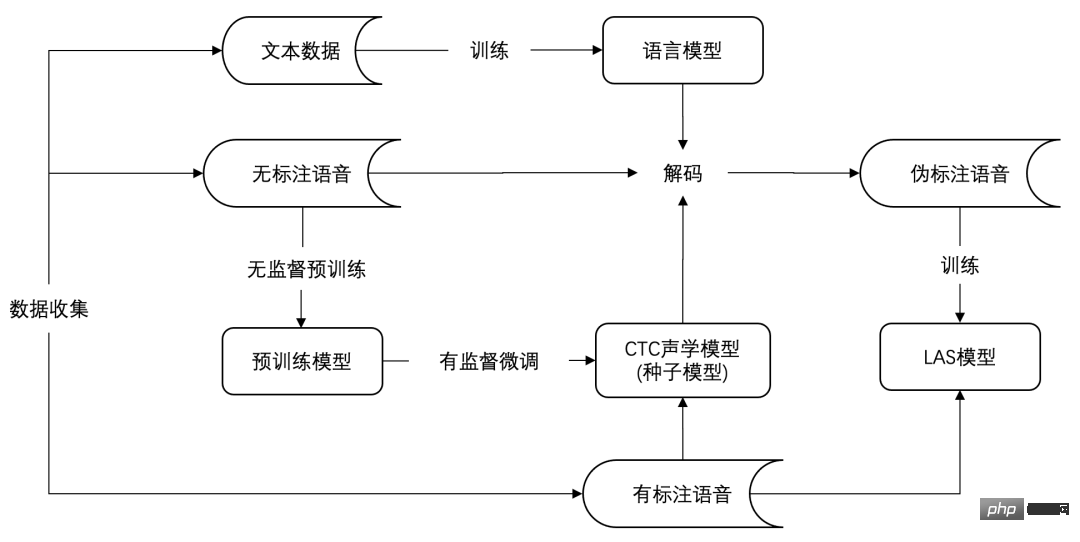

The figure shows the implementation process for low-resource language ASR based on unsupervised pre-training, which can be roughly divided into three stages: data collection, seed model training, and model migration.

ASR implementation process based on unsupervised pre-training

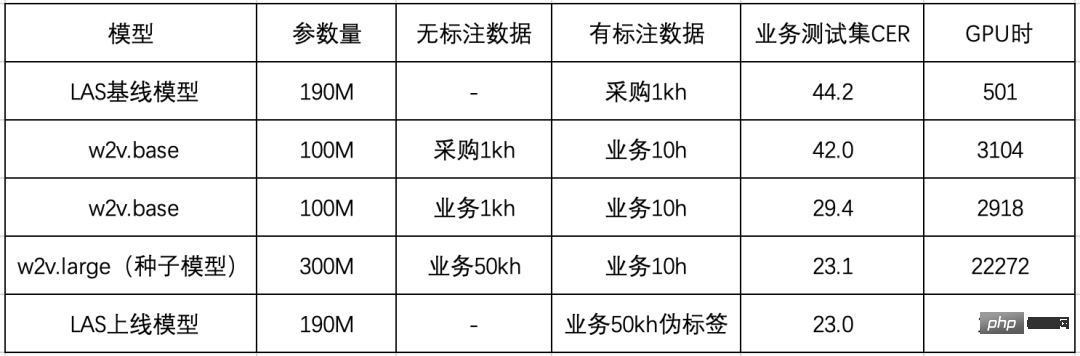

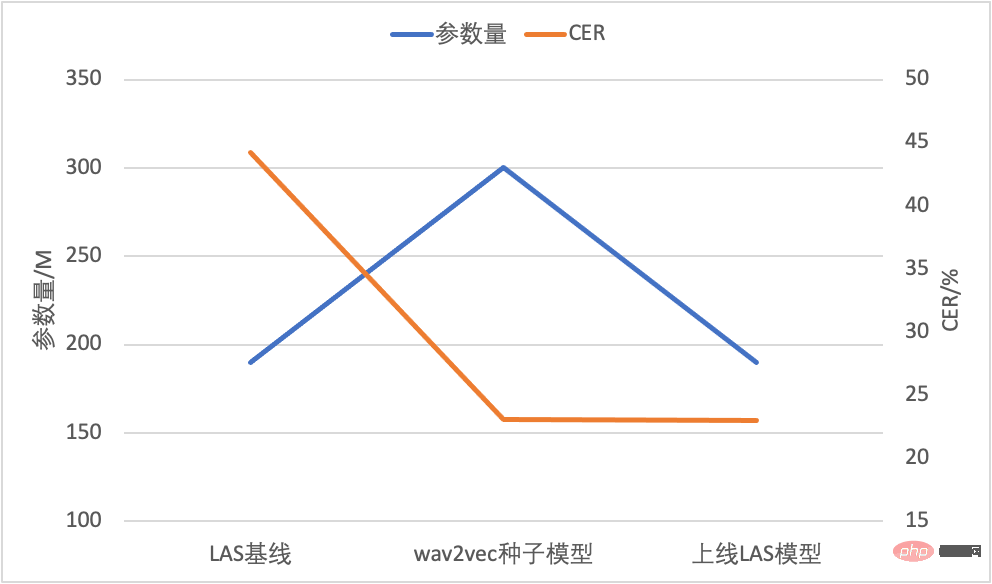

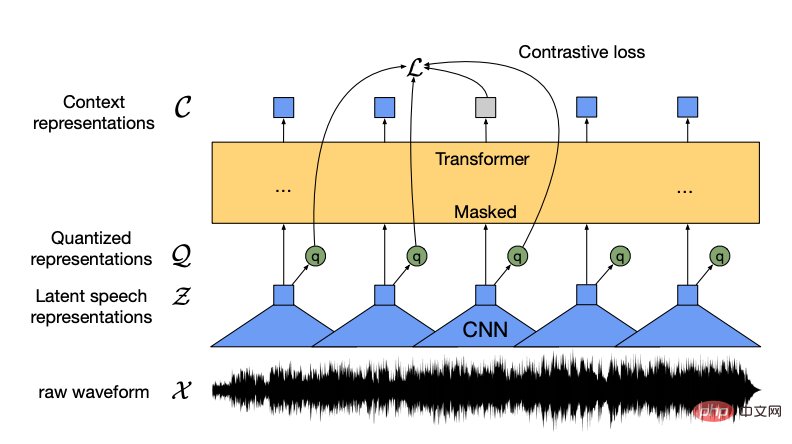

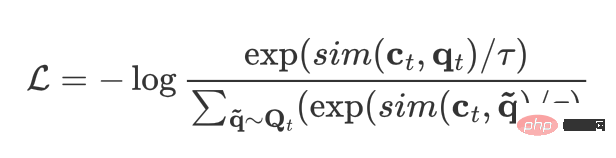

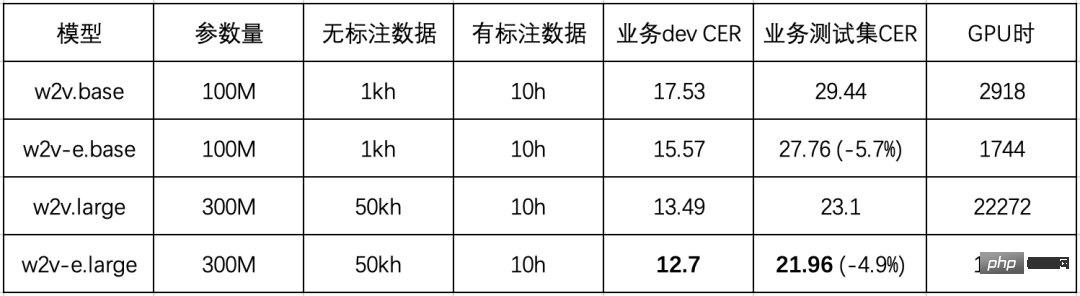

Specifically, the first stage, data collection, gathers unlabeled speech, labeled speech, and plain-text data in the target language through language-based routing, procurement, and other means.

The second stage, seed model training, is the classic "unsupervised pre-training plus supervised fine-tuning" process. It yields an acoustic model, usually fine-tuned with the Connectionist Temporal Classification (CTC [3]) loss. Combined with a language model trained on plain text, the acoustic model forms a complete speech recognition system that achieves good recognition results. It is called a seed model because it is not suited to direct deployment in production: Volcano Engine prefers to deploy end-to-end models such as LAS (Listen, Attend and Spell [4]) or RNN-T (Recurrent Neural Network Transducer [5]) online. The main reason is that LAS/RNN-T have excellent end-to-end modeling capability, have outperformed traditional CTC models in recent years, and are increasingly used in industry. Volcano Engine has done extensive optimization work on inference and deployment for end-to-end speech recognition models and has a relatively mature solution that supports many businesses. If the end-to-end inference engine can be reused without loss of accuracy, the engine's operation and maintenance costs drop significantly.

For this reason the team designed the third stage, model migration. Borrowing the idea of knowledge distillation, the seed model pseudo-labels the unlabeled data, and the result is used to train a LAS model with fewer parameters. This migrates the model structure and compresses inference computation at the same time. The effectiveness of the whole process has been verified on Cantonese ASR.
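The pseudo-labeling step in the third stage can be illustrated with a minimal sketch. It assumes the seed model emits one score per vocabulary entry for every frame and uses plain greedy CTC decoding (take the best symbol per frame, collapse repeats, drop blanks). This is a simplification: the team decodes jointly with a language model, and the names `BLANK`, `ctc_greedy_decode`, and `pseudo_label` are hypothetical, not taken from the team's code.

```python
BLANK = 0  # assumed index of the CTC blank symbol


def ctc_greedy_decode(frame_scores, vocab):
    """Greedy CTC decoding: best symbol per frame, collapse repeats, drop blanks."""
    tokens = []
    previous = BLANK
    for scores in frame_scores:
        best = max(range(len(scores)), key=scores.__getitem__)
        # Emit a symbol only when it is not blank and differs from the previous frame.
        if best != BLANK and best != previous:
            tokens.append(vocab[best])
        previous = best
    return "".join(tokens)


def pseudo_label(utterances, vocab):
    """Map {utterance_id: frame_scores} to {utterance_id: pseudo transcript}."""
    return {
        utt_id: ctc_greedy_decode(scores, vocab)
        for utt_id, scores in utterances.items()
    }
```

The resulting transcripts would then serve as training targets for the smaller LAS model, in place of human annotations.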
The experimental results are as follows. First, the team purchased 1 kh (1,000 hours) of off-the-shelf data for a comparison experiment. Training a LAS model directly on it performed poorly, with a character error rate (CER) as high as 44.2%. After analysis, the team attributed this mainly to a domain mismatch between the purchased data (conversation) and the business test set (video). Preliminary experiments with wav2vec 2.0 showed a similar pattern: compared with pre-training on purchased data, pre-training on data from the target domain lowered CER on the business test set from 42.0% to 29.4%. When the unlabeled business data was scaled up to 50 kh and the model grew from 100M to 300M parameters, CER dropped further to 23.1%.

Finally, the team verified model migration: the seed model, combined with a Cantonese language model, decoded the 50 kh of unlabeled data to obtain pseudo-labels, which were used to train a LAS model. The LAS model trained on pseudo-labels essentially retains the recognition accuracy of the CTC seed model while using one-third fewer parameters, and it can be deployed directly on the mature end-to-end inference engine.

Comparison of model parameters and CER

In the end, with the model structure and parameter count unchanged, the team reached a CER of 23.0% using 50 kh of unlabeled business data and 10 h of labeled business data, a 48% reduction relative to the baseline model. With online compute cost and compatibility resolved, the focus moved to the core of the whole process, unsupervised pre-training. For wav2vec 2.0, Volcano Engine carried out optimization along two dimensions: algorithm and engineering.
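As a quick check on the figures above, the sketch below shows how CER is conventionally computed (character-level edit distance divided by reference length) and how a relative reduction is derived. The function names are illustrative, and the source does not say which scoring tool the team used.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def cer(ref, hyp):
    """Character error rate of a hypothesis against a non-empty reference."""
    return edit_distance(ref, hyp) / len(ref)


def relative_reduction(baseline, improved):
    """Relative reduction of an error rate with respect to a baseline."""
    return (baseline - improved) / baseline


# Baseline LAS on purchased data (44.2%) versus the final model (23.0%).
print(f"{relative_reduction(44.2, 23.0):.0%}")
```

With the reported CERs of 44.2% and 23.0%, the relative reduction works out to (44.2 - 23.0) / 44.2 ≈ 0.48, which matches the 48% quoted in the text.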
Algorithm Optimization

wav2vec 2.0, a self-supervised pre-training model proposed by Meta AI in 2020, opened a new chapter in unsupervised speech representation learning. Its core idea is to discretize the input features with a quantization module and optimize with contrastive learning. Like BERT, the model body randomly masks part of the input features.

wav2vec 2.0 model structure diagram (Source: wav2vec 2.0, Figure 1 [1])

Training wav2vec 2.0 on business data ran into two difficulties. First, training efficiency is low: a 300M model takes more than ten days on 64 GPUs. Second, training is unstable and prone to divergence. To alleviate both problems, Volcano Engine proposed Efficient wav2vec.

For the low training efficiency, the team sped up training by lowering the model's frame rate: the input features were changed from raw waveform to filterbanks, and the frame shift went from the original 20 ms to 40 ms. This greatly reduces the computation of the feature-extraction convolutions and also greatly shortens the sequence length inside the Transformer, improving training efficiency.

For the unstable training, the team analyzed how unsupervised pre-training learns and weighed this against the characteristics of the business data. The contrastive loss is defined per frame: for each frame t, c_t is the encoder output at that frame and q_t is its quantized output. Several other frames are sampled as negative samples, so the current frame together with the negative frames forms a dynamically constructed vocabulary Q_t.
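For reference, the wav2vec 2.0 paper [1] writes the contrastive loss for a masked frame t as

$$\mathcal{L}_m = -\log \frac{\exp(\mathrm{sim}(c_t, q_t)/\kappa)}{\sum_{\tilde{q} \in Q_t} \exp(\mathrm{sim}(c_t, \tilde{q})/\kappa)}$$

where sim is cosine similarity and κ is a temperature; both symbols come from that paper rather than from the text here. The sketch below is a small pure-Python rendering of that loss, with negatives drawn from the other masked frames of the same utterance. The function names, default values, and seed handling are illustrative assumptions, not the team's implementation.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two non-zero vectors given as lists."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def contrastive_loss(c, q, masked, num_negatives=100, temperature=0.1, seed=0):
    """Mean contrastive loss over the masked frames of one utterance.

    c[t] is the encoder output for frame t and q[t] its quantized target.
    """
    if len(masked) < 2:
        raise ValueError("need at least two masked frames to draw a negative")
    rng = random.Random(seed)
    total = 0.0
    for t in masked:
        others = [i for i in masked if i != t]
        # A short utterance caps how many negatives are actually available.
        negatives = rng.sample(others, min(num_negatives, len(others)))
        candidates = [t] + negatives  # the dynamic vocabulary Q_t
        logits = [cosine(c[t], q[i]) / temperature for i in candidates]
        peak = max(logits)  # subtract the max for a stable log-sum-exp
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[0]
    return total / len(masked)
```

The `min(num_negatives, len(others))` line makes the dependence on utterance length explicit: however many negatives are requested, an utterance can supply no more than its other masked frames.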
The optimization goal of contrastive learning is to maximize the similarity between the current frame's encoding and that frame's quantized result while minimizing its similarity to the quantized results of other frames. It follows that the similarity between negative and positive samples, and the number of negative samples, directly determine how well contrastive learning works. In practice, business utterances are short on average, and the 50 negative samples that a single utterance provides are far from enough. In addition, because adjacent speech frames are highly similar, the masked regions need to be contiguous to make representation reconstruction harder.

To address these two problems, Volcano Engine proposed two improvements.

A comparison of wav2vec 2.0 (w2v) and Efficient wav2vec (w2v-e) on business data, with all models trained on 64 V100 GPUs, gave the following results: the improved Efficient wav2vec delivers a stable 5% performance improvement over the original wav2vec 2.0, and training efficiency is nearly doubled.

Although Efficient wav2vec nearly doubles training efficiency at the algorithm level, the 300M model's heavy communication volume still causes fluctuations in training communication and low multi-machine scaling efficiency.
Engineering Optimization

On this point, the Volcano Voice team summarized: "To improve the communication efficiency of model pre-training in the synchronous-gradient scenario, we implemented bucket-based group communication optimization in the communication backend on top of the BytePS distributed training framework, improving data-parallel efficiency by 10%. We also implemented an adaptive parameter reordering (Parameter Reorder) strategy to address the waiting caused by the mismatch between the order in which model parameters are defined and the order in which gradients are updated." Building on these optimizations and combining them with gradient accumulation and other techniques, the single-machine scaling efficiency of the 300M model rose from 55.42% to 81.83%, and multi-machine scaling efficiency rose from 60.54% to 91.13%. A model that originally took 6.5 days to train now trains in only 4 days, about 40% less time.

In addition, to support the large-model, big-data scenarios to be explored in the future, the Volcano Voice team built out a series of atomic capabilities for ultra-large-scale models. First, local OSS was implemented, which solves the inter-machine scaling efficiency problem while removing most of the optimizer's redundant memory footprint. Next, bucket lazy init was supported in synchronous gradient communication, which cuts GPU memory usage by twice the parameter count, greatly lowers peak memory, and suits very-large-model scenarios where GPU memory is tight. Finally, model parallelism and pipeline parallelism are supported on top of data parallelism, with verification and customized support completed on 1B and 10B models. This series of optimizations lays a solid foundation for training with large models and big data.
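Gradient accumulation, mentioned above, helps communication because workers only need to synchronize once per group of micro-batches instead of after every one. The toy sketch below, assuming a linear model with a mean-squared-error loss, shows that averaging the gradients of equally sized micro-batches reproduces the full-batch gradient. It illustrates the general technique only and says nothing about how the team implemented it on BytePS; all names are hypothetical.

```python
import random


def mse_gradient(w, xs, ys):
    """Gradient of 0.5 * mean((w . x - y)^2) with respect to w."""
    grad = [0.0] * len(w)
    for x, y in zip(xs, ys):
        residual = sum(wi * xi for wi, xi in zip(w, x)) - y
        for k, xk in enumerate(x):
            grad[k] += residual * xk / len(xs)
    return grad


def accumulated_gradient(w, xs, ys, micro_batch_size):
    """Average micro-batch gradients; only this average would be synchronized."""
    if len(xs) % micro_batch_size != 0:
        raise ValueError("batch must split into equal micro-batches")
    steps = len(xs) // micro_batch_size
    total = [0.0] * len(w)
    for s in range(steps):
        lo, hi = s * micro_batch_size, (s + 1) * micro_batch_size
        grad = mse_gradient(w, xs[lo:hi], ys[lo:hi])
        total = [t + g / steps for t, g in zip(total, grad)]
    return total


rng = random.Random(0)
xs = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(32)]
ys = [rng.gauss(0, 1) for _ in range(32)]
w = [rng.gauss(0, 1) for _ in range(4)]

full = mse_gradient(w, xs, ys)
accumulated = accumulated_gradient(w, xs, ys, micro_batch_size=8)
print(max(abs(a - b) for a, b in zip(full, accumulated)))  # difference is at rounding level
```

In this example, four micro-batches of 8 samples stand in for one batch of 32, so a data-parallel job would exchange gradients once rather than four times for the same effective batch.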
Summary and Outlook

Currently, by adopting the low-resource ASR implementation process, two low-resource languages have successfully launched video subtitling and content security services. Beyond speech recognition, the wav2vec 2.0-based pre-trained model has also brought significant gains on many other downstream tasks, including audio event detection, language identification, and emotion detection, and will gradually be rolled out to businesses such as video content security, recommendation, analysis, audio routing, and e-commerce customer-service sentiment analysis. Putting unsupervised pre-training into production will significantly cut the cost of labeling all kinds of audio data, shorten labeling cycles, and enable rapid response to business needs.

In practice, Volcano Engine has worked out a wav2vec 2.0-based ASR solution for low-resource languages that solves the problem of high inference overhead and connects seamlessly with the end-to-end engine. To address wav2vec 2.0's core problems of low training efficiency and instability, Efficient wav2vec was proposed: compared with wav2vec 2.0, it improves results on downstream tasks by 5% and halves pre-training time. Combined with the engineering optimizations, the final pre-training time is 70% shorter than the original version. Going forward, Volcano Engine will continue to explore in three directions.

Volcano Voice is the speech technology team that has long served ByteDance's business lines with cutting-edge speech technology. Its capabilities are made available through Volcano Engine, offering industry-leading AI speech technology and full-stack speech product solutions, including audio understanding, audio synthesis, virtual digital humans, conversational interaction, music retrieval, and intelligent hardware. Today, Volcano Engine's speech recognition and speech synthesis cover multiple languages and dialects. Many of the team's technical papers have been accepted at top AI conferences, and it provides leading speech capabilities for Douyin, Jianying, Feishu, Tomato Novels, Pico, and other businesses, serving diverse scenarios such as short video, live streaming, video creation, office work, and wearable devices.

References

[1] Baevski, A., Zhou, Y., Mohamed, A. and Auli, M., 2020. wav2vec 2.0: A framework for self-supervised learning of speech representations. Advances in Neural Information Processing Systems, 33, pp.12449-12460.

[2] Hsu, W.N., Bolte, B., Tsai, Y.H.H., Lakhotia, K., Salakhutdinov, R. and Mohamed, A., 2021. HuBERT: Self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 29, pp.3451-3460.

[3] Graves, A., Fernández, S., Gomez, F. and Schmidhuber, J., 2006, June. Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning (pp. 369-376).

[4] Chan, W., Jaitly, N., Le, Q. and Vinyals, O., 2016, March. Listen, attend and spell: A neural network for large vocabulary conversational speech recognition. In 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 4960-4964). IEEE.

[5] Graves, A., 2012. Sequence transduction with recurrent neural networks. arXiv preprint arXiv:1211.3711.

[6] He, K., Chen, X., Xie, S., Li, Y., Dollár, P. and Girshick, R., 2022. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 16000-16009).

[7] Baevski, A., Hsu, W.N., Xu, Q., Babu, A., Gu, J. and Auli, M., 2022. Data2vec: A general framework for self-supervised learning in speech, vision and language. arXiv preprint arXiv:2202.03555.

[8] Conneau, A., Baevski, A., Collobert, R., Mohamed, A. and Auli, M., 2020. Unsupervised cross-lingual representation learning for speech recognition. arXiv preprint arXiv:2006.13979.

[9] Lu, Y., Huang, M., Qu, X., Wei, P. and Ma, Z., 2022, May. Language adaptive cross-lingual speech representation learning with sparse sharing sub-networks. In ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 6882-6886). IEEE.

[10] Park, D.S., Zhang, Y., Jia, Y., Han, W., Chiu, C.C., Li, B., Wu, Y. and Le, Q.V., 2020. Improved noisy student training for automatic speech recognition. arXiv preprint arXiv:2005.09629.
