Get rid of the 'clickbait', another open source masterpiece from the Tsinghua team!


As someone who struggles with naming things, what troubled me most about writing essays in high school was that I could write a good piece and still have no idea what to call it. Since I started running a public account, I have lost a lot of hair every time I needed to come up with a title...

Recently, on GitHub, I finally found a ray of hope for those of us who are hopeless at naming: "Outsmart Title", a fun large-model application launched by Tsinghua University and the OpenBMB open source community. Enter your text and it generates an eye-catching title with one click!

It works right out of the box. After trying it, all I can say is: it is really good!

Online demo: https://live.openbmb.org/ant

GitHub: https://github.com/OpenBMB/CPM-Live

Before going further with this title-generating tool, we first have to talk about what lies underneath it: the large model CPM-Ant.

CPM-Ant is the first ten-billion-parameter model in China to be trained live. Training took 68 days and was completed on August 5, 2022, and OpenBMB has officially released the model!

- Five outstanding features

- Four innovation breakthroughs

- The training process is low-cost and environment-friendly!

- The most important thing: it is completely open source!

Now, let's take a look at the CPM-Ant release report!

Model Overview

CPM-Ant is an open source Chinese pre-trained language model with 10B parameters. It is also the first milestone in the CPM-Live live training process.

The entire training process is low-cost and environment-friendly, and running the model does not demand high-end hardware or high running costs. With the delta tuning method, CPM-Ant achieves excellent results on the CUGE benchmark. The CPM-Ant code, log files, and model parameters are fully open source under an open license. In addition to the full model, OpenBMB also provides several compressed versions to suit different hardware configurations.

Five outstanding features of CPM-Ant:

(1) Computational efficiency

With the BMTrain[1] toolkit, you can make full use of distributed computing resources to train large models efficiently. Training CPM-Ant took 68 days and cost 430,000 yuan, about 1/20 of the roughly US$1.3 million that training Google's T5-11B model is estimated to have cost. Training CPM-Ant emitted about 4,872 kg CO₂e, while training T5-11B emitted 46.7 t CO₂e[9], so CPM-Ant's emissions are about 1/10 of T5-11B's.
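The two ratios quoted above can be checked with a few lines of arithmetic. The sketch below assumes an exchange rate of about 6.75 yuan per US dollar (roughly the rate in August 2022; the article does not state one), so the cost ratio is only approximate.

```python
# Figures quoted in the article.
CPM_ANT_COST_CNY = 430_000        # training cost of CPM-Ant, in yuan
T5_11B_COST_USD = 1_300_000       # externally estimated training cost of T5-11B
CPM_ANT_EMISSIONS_KG = 4_872      # kg CO2e
T5_11B_EMISSIONS_KG = 46_700      # 46.7 t CO2e

# Assumption (not from the article): approximate exchange rate in August 2022.
CNY_PER_USD = 6.75


def ratio(ours: float, theirs: float) -> float:
    """Return ours / theirs."""
    return ours / theirs


cost_ratio = ratio(CPM_ANT_COST_CNY, T5_11B_COST_USD * CNY_PER_USD)
emission_ratio = ratio(CPM_ANT_EMISSIONS_KG, T5_11B_EMISSIONS_KG)

print(f"cost ratio:     {cost_ratio:.3f} (about 1/{1 / cost_ratio:.0f})")
print(f"emission ratio: {emission_ratio:.3f} (about 1/{1 / emission_ratio:.0f})")
```

Under the assumed exchange rate, both results land close to the 1/20 and 1/10 figures given in the text.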

(2) Excellent performance

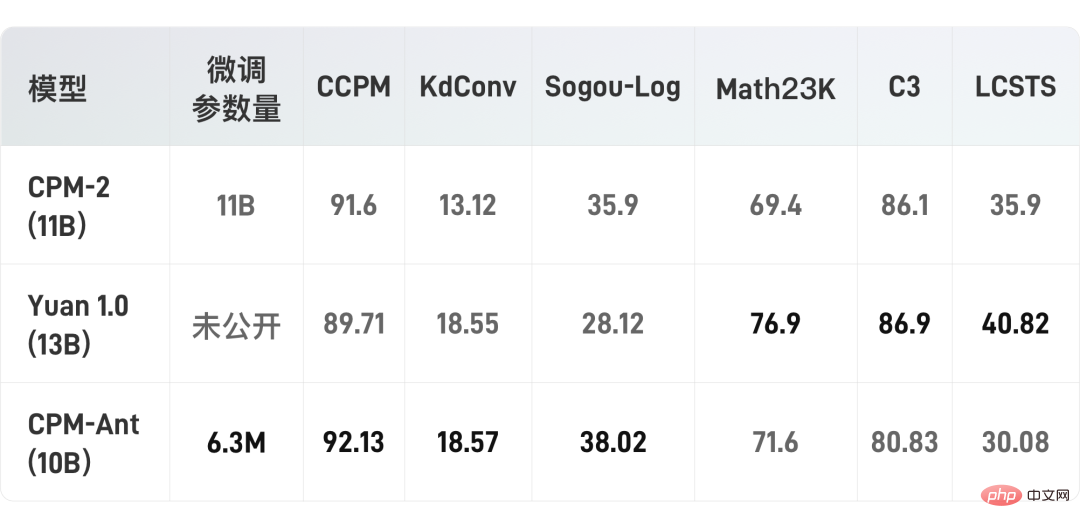

With the OpenDelta[3] tool, it is very convenient to adapt CPM-Ant to downstream tasks through delta tuning (parameter-efficient fine-tuning). Experiments show that CPM-Ant achieves the best results on 3 of 6 CUGE tasks while fine-tuning only 6.3M parameters, surpassing other models that were fine-tuned with all of their parameters. For example, the number of parameters fine-tuned for CPM-Ant is only 0.06% of that for CPM2 (which fine-tuned 11B parameters).

(3) Deployment economy

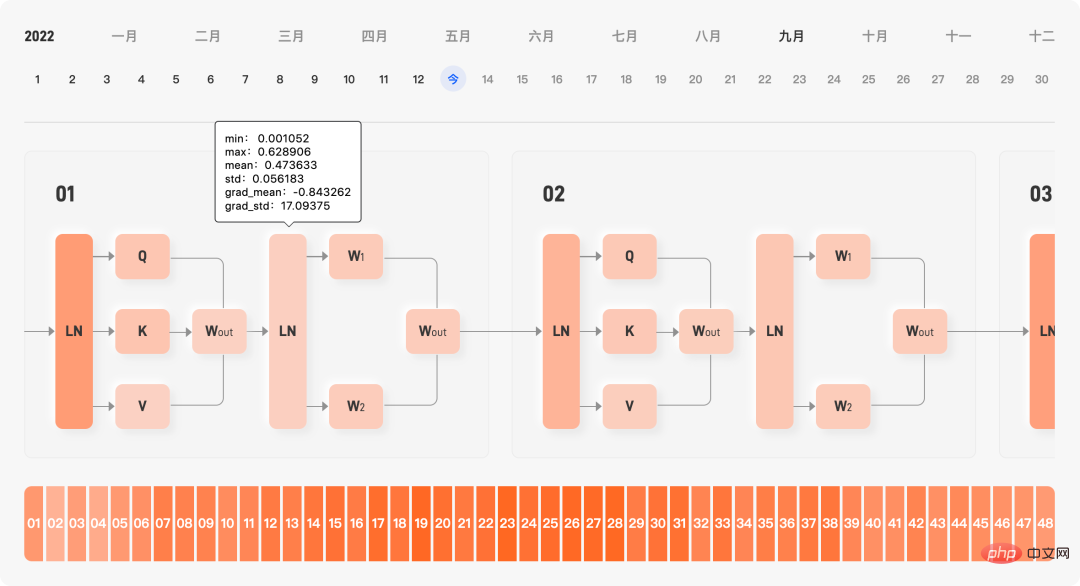

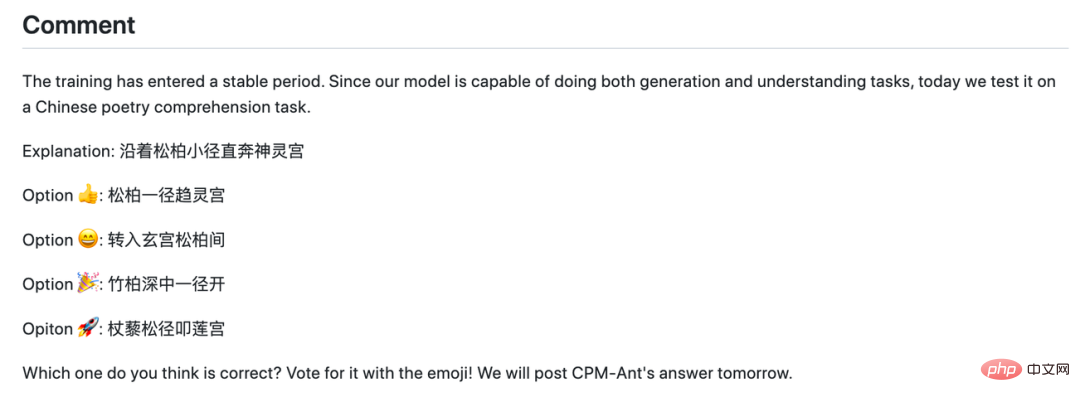

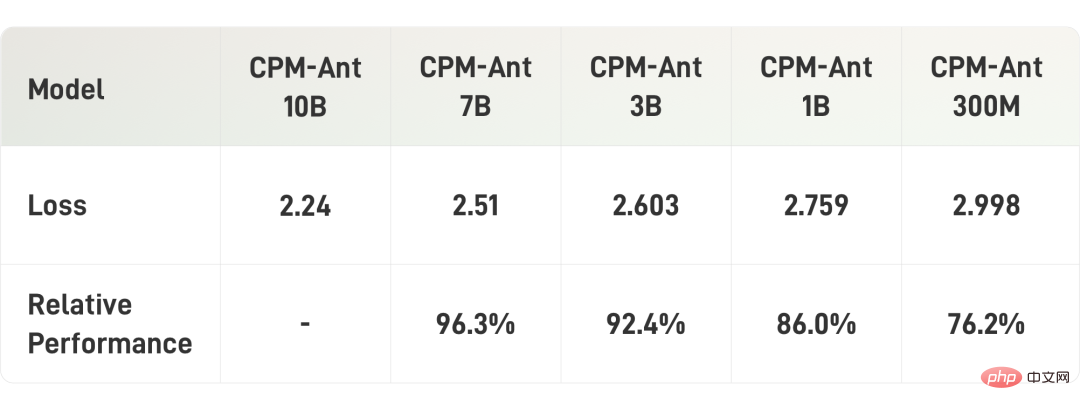

With the BMCook[7] and BMInf[4] toolkits, CPM-Ant can be run with limited computing resources. With BMInf, large-model inference no longer needs a computing cluster and can be done on a single GPU (even a consumer-grade graphics card such as the GTX 1060). To make deploying CPM-Ant more economical, OpenBMB uses BMCook to compress the original 10B model into several smaller versions. The compressed models (7B, 3B, 1B, 300M) suit different low-resource scenarios.

(4) Easy to use

Whether it is the original 10B model or one of the compressed versions, it can be loaded and run with a few lines of code. OpenBMB will also add CPM-Ant to ModelCenter[8], making further development on the model easier.

(5) Open and democratic

The training process of CPM-Ant is completely open. OpenBMB publishes all code, log files, and model checkpoints as open access. CPM-Ant also adopts an open license that allows commercial use.

For companies and research institutions capable of large-model training, the CPM-Ant training process provides a complete, practical record of training a Chinese large model. OpenBMB has released the model design, training scheme, data requirements, and implementation code of the CPM-Live series. Building on the CPM-Live model architecture, teams can quickly design and implement their own large-model training plan and organize the relevant business data to complete preliminary model research and data preparation.

The official website records all training dynamics, including the loss function, learning rate, amount of data learned, throughput, gradient size, and cost curve, and displays the mean and standard deviation of the model's internal parameters in real time. With these training dynamics, users can quickly diagnose whether something has gone wrong during training.
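As a rough illustration of how such statistics support diagnosis, the sketch below computes the mean and standard deviation of each parameter tensor and flags a step whose loss is non-finite or jumps well above the recent average. The function names, the flat-list representation of parameters, and the spike factor of 2.0 are assumptions made for this example; they are not taken from the CPM-Live code.

```python
import math
import statistics


def summarize_parameters(params: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Return (mean, population std) for every named parameter tensor."""
    return {
        name: (statistics.fmean(values), statistics.pstdev(values))
        for name, values in params.items()
    }


def loss_looks_unhealthy(losses: list[float], window: int = 5, spike_factor: float = 2.0) -> bool:
    """Flag the latest loss if it is NaN/inf or far above the recent average."""
    latest = losses[-1]
    if not math.isfinite(latest):
        return True
    recent = losses[-(window + 1):-1]  # up to `window` values before the latest one
    if not recent:
        return False  # not enough history to compare against
    return latest > spike_factor * statistics.fmean(recent)


# Toy values standing in for real training logs.
params = {"attention.q": [0.1, -0.1, 0.3, -0.3], "ffn.w_in": [1.0, 1.0, 1.0, 1.0]}
stats = summarize_parameters(params)
healthy = loss_looks_unhealthy([3.2, 3.0, 2.9, 2.8, 2.7])
spiked = loss_looks_unhealthy([3.2, 3.0, 2.9, 2.8, 7.5])
diverged = loss_looks_unhealthy([3.2, 3.0, float("nan")])
print(stats, healthy, spiked, diverged)
```

A parameter tensor whose standard deviation collapses toward zero or grows without bound, or a loss that trips the check above, is the kind of signal a public training dashboard makes visible early.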
Real-time display of the model's internal parameters during training

In addition, the OpenBMB developers update a summary of the training log every day. The summary includes the loss value, gradient value, and overall progress, and also records problems and bugs encountered during training, so that users can learn in advance about the various pitfalls they may run into when training a model. On days when training is "calm", the developers also share famous quotes, introduce recent papers, and even run guessing games.

A guessing game in the log

OpenBMB also provides a cost-effective training solution. For enterprises that actually need to train large models, training acceleration techniques have brought the training cost down to an acceptable level. Using the BMTrain[1] toolkit, the computational cost of training the ten-billion-parameter CPM-Ant is only 430,000 yuan (calculated from public cloud prices; the actual cost would be lower), which is approximately 1/20 of the externally estimated US$1.3 million cost of the 11B model T5!

How does CPM-Ant help us adapt to downstream tasks?

For large-model researchers, OpenBMB provides a performance evaluation scheme based on parameter-efficient fine-tuning, which makes it easy to adapt the model to downstream tasks quickly and evaluate its performance. Parameter-efficient fine-tuning, that is, delta tuning, was used to evaluate CPM-Ant on six downstream tasks. The experiments used LoRA[2], which inserts two tunable low-rank matrices into each attention layer and freezes all parameters of the original model. With this approach, only 6.3M parameters were fine-tuned per task, accounting for only 0.067% of the total parameters. With the help of OpenDelta[3], OpenBMB ran all experiments without modifying the code of the original model.
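The LoRA idea described above can be sketched in plain Python: the original weight matrix W stays frozen, and only two small matrices A and B are trained, so the layer computes Wx + (alpha / r) * B(Ax). The dimensions, rank, scaling, and class name below are illustrative assumptions, not the configuration OpenBMB used; real experiments would rely on OpenDelta on top of a deep learning framework.

```python
import random


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen linear layer plus a trainable low-rank update B @ A (hypothetical helper)."""

    def __init__(self, d_in: int, d_out: int, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        # Frozen pre-trained weight (random here, for illustration only).
        self.weight = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable low-rank factors: A is small random, B starts at zero,
        # so the layer initially behaves exactly like the frozen one.
        self.lora_a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.lora_b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)
        update = matvec(self.lora_b, matvec(self.lora_a, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_parameters(self) -> int:
        return len(self.lora_a) * len(self.lora_a[0]) + len(self.lora_b) * len(self.lora_b[0])

    def frozen_parameters(self) -> int:
        return len(self.weight) * len(self.weight[0])


layer = LoRALinear(d_in=64, d_out=64, rank=2)
x = [1.0] * 64
trainable = layer.trainable_parameters()   # rank * d_in + d_out * rank
frozen = layer.frozen_parameters()         # d_out * d_in
print(f"trainable share: {trainable / (trainable + frozen):.2%}")
```

Because B starts at zero, the adapted layer reproduces the frozen layer's output before any training. The trainable share shrinks as the frozen matrices grow, which is why the fraction becomes so small for a ten-billion-parameter model.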
Note that no data augmentation methods were used when evaluating CPM-Ant on downstream tasks. The experimental results are shown in the following table:

With only a small number of parameters fine-tuned, the OpenBMB model exceeds CPM-2 and Yuan 1.0 on three datasets. Some tasks (such as LCSTS) may be difficult to learn when very few parameters are fine-tuned. The training of CPM-Live will continue, and performance on each task will be polished further.

Interested readers can visit the GitHub link below to try CPM-Ant and OpenDelta and further explore CPM-Ant's capabilities on other tasks!

https://github.com/OpenBMB/CPM-Live

The performance of large models is impressive, but high hardware requirements and running costs have long troubled many users. For users of large models, OpenBMB provides a series of hardware-friendly ways to run different model versions in different hardware environments. With the BMInf[4] toolkit, CPM-Ant can run in a low-resource environment such as a single GTX 1060 card!

In addition, OpenBMB has compressed CPM-Ant. The compressed models are CPM-Ant-7B/3B/1B/0.3B, and each size corresponds to a classic size of existing open source pre-trained language models.

Considering that users may do further development on the released checkpoints, OpenBMB mainly uses task-agnostic structured pruning to compress CPM-Ant. The pruning is gradual: from 10B to 7B, from 7B to 3B, from 3B to 1B, and finally from 1B to 0.3B. At each pruning step, OpenBMB trains a dynamic, learnable mask matrix and then uses it to prune the corresponding parameters: parameters are pruned according to a threshold on the mask matrix, and the threshold is determined by the target sparsity.
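The gradual pruning schedule can be illustrated with a toy example. In the sketch below, each prunable unit has one mask score, the threshold for a stage is chosen so that the target number of units survives, and every unit whose score falls below the threshold is removed. The scores are random stand-ins for a learned mask, they are reused across stages instead of being retrained, and the function names are made up for this example; this is not BMCook's implementation.

```python
import random


def prune_to_count(scores: dict[int, float], n_keep: int) -> dict[int, float]:
    """Keep the units whose mask score reaches the threshold implied by n_keep."""
    # The threshold is the n_keep-th largest score: the target sparsity
    # determines the threshold, and the threshold determines what is pruned.
    threshold = sorted(scores.values(), reverse=True)[n_keep - 1]
    return {unit: s for unit, s in scores.items() if s >= threshold}


def gradual_prune(scores: dict[int, float], keep_fractions: list[float]) -> list[int]:
    """Prune stage by stage; return the number of surviving units after each stage."""
    total = len(scores)
    current = dict(scores)
    sizes = []
    for fraction in keep_fractions:
        current = prune_to_count(current, round(fraction * total))
        sizes.append(len(current))
    return sizes


rng = random.Random(0)
mask_scores = {unit: rng.random() for unit in range(1000)}  # stand-in for a learned mask

# Fractions of the original model kept at each stage: 10B -> 7B -> 3B -> 1B -> 0.3B.
sizes = gradual_prune(mask_scores, [0.7, 0.3, 0.1, 0.03])
print(sizes)
```

Each stage starts from the survivors of the previous one, so the smaller models are nested inside the larger ones, mirroring the 10B to 0.3B sequence described above.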
For more compression details, please refer to the technical blog[5]. The following table shows the results of model compression:

Now that the hardcore content is over, how can large models help us "choose titles"?

Based on CPM-Ant, all large-model developers and enthusiasts can build fun text applications. To further verify the model's effectiveness and provide an example, OpenBMB fine-tuned a hot-title generator based on CPM-Ant to demonstrate the model's capabilities. Just paste the text into the text box, click generate, and you get an eye-catching title provided by the large model!

The title of the first article of the CPM-Ant release report was generated by the generator

This demo will be polished continuously, and more features will be added to improve the user experience. Interested users can also use CPM-Ant to build their own demo applications. If you have application ideas, need technical support, or run into problems while using the demo, you can start a discussion on the CPM-Live forum[6] at any time!

The release of CPM-Ant is the first milestone of CPM-Live, but it is only the first phase of training. OpenBMB will continue with a series of further training phases. A quick spoiler: new features such as multilingual support and structured input and output will be added in the next training phase. Stay tuned!

In summary, this release offers:

- A complete large-model training practice

- An efficient fine-tuning solution that has repeatedly achieved SOTA

- A series of hardware-friendly inference methods

- An unexpectedly fun large-model application

Portal|Project link

Project GitHub address: https://github.com/OpenBMB/CPM-Live

Demo address (PC access only): https://live.openbmb.org/ant
