Just train once to generate new 3D scenes! The evolution of Google's "Light Field Neural Rendering"

View synthesis is a key problem at the intersection of computer vision and computer graphics. It refers to creating a new view of a scene from multiple pictures of the scene.

To accurately synthesize a new view of a scene, a model needs to capture several kinds of information from a small set of reference images, such as detailed 3D structure, materials and lighting.

Since researchers proposed Neural Radiance Fields (NeRF) in 2020, the problem has received increasing attention, and novel view synthesis performance has improved greatly.

One of the major players is Google, which has published many papers on NeRF. This article introduces two papers Google published at CVPR 2022 and ECCV 2022, which together trace the evolution of the light field neural rendering model.

The first paper proposes a two-stage, Transformer-based model that learns to combine reference pixel colors. It first aggregates features along the epipolar lines, then aggregates features across the reference views to produce the color of the target ray, greatly improving the accuracy of view reproduction.

Paper link: https://arxiv.org/pdf/2112.09687.pdf

Classic light field rendering can accurately reproduce view-dependent effects such as reflection, refraction and translucency, but it requires a dense sampling of views of the scene. Methods based on geometric reconstruction need only sparse views, but cannot accurately model non-Lambertian effects, that is, surfaces that do not scatter light in an ideal diffuse way.

The proposed model combines the strengths of both approaches and mitigates their limitations. By operating on a four-dimensional representation of the light field, the model learns to represent view-dependent effects accurately, while scene geometry is learned implicitly from a sparse set of views by enforcing geometric constraints during training and inference.
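
A 4D light field describes each ray with four numbers instead of a 3D point. As an illustration, the sketch below uses the classic two-plane parameterization from light field rendering, recording where a ray crosses the planes z = 0 and z = 1. Whether LFNR uses exactly this parameterization is an assumption here, and `two_plane_coords` is a hypothetical name. The property it demonstrates is that every point on the same ray yields the same four coordinates.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def two_plane_coords(origin: Vec3, direction: Vec3,
                     z_uv: float = 0.0, z_st: float = 1.0) -> Tuple[float, float, float, float]:
    """Hypothetical helper: 4D (u, v, s, t) coordinates of a ray.

    (u, v) is where the ray crosses the plane z = z_uv and (s, t) is where it
    crosses z = z_st. Rays parallel to these planes cannot be represented.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dz) < 1e-12:
        raise ValueError("ray is parallel to the parameterization planes")
    # Points on the ray are origin + a * direction; solve for the a that hits each plane.
    a_uv = (z_uv - oz) / dz
    a_st = (z_st - oz) / dz
    return (ox + a_uv * dx, oy + a_uv * dy, ox + a_st * dx, oy + a_st * dy)


# Two different points on the same ray give the same 4D coordinates.
ray_dir = (0.1, 0.2, 1.0)
print(two_plane_coords((0.0, 0.0, -1.0), ray_dir))
print(two_plane_coords((0.05, 0.1, -0.5), ray_dir))
```

The point-independence is what makes a 4D ray encoding a natural input for predicting view-dependent color: the model sees the ray itself, not a particular point along it.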

The model outperforms state-of-the-art models on several forward-facing and 360° datasets, with a larger margin on scenes with strong view-dependent variation.

The second paper addresses generalization to unseen scenes by using a sequence of Transformers with canonicalized positional encoding. After training on a set of scenes, the model can synthesize views of new scenes.

Paper link: https://arxiv.org/pdf/2207.10662.pdf

This paper proposes a different paradigm that requires neither deep features nor NeRF-style volume rendering. The method predicts the color of a target ray in a new scene directly, simply by sampling a set of patches from the scene.

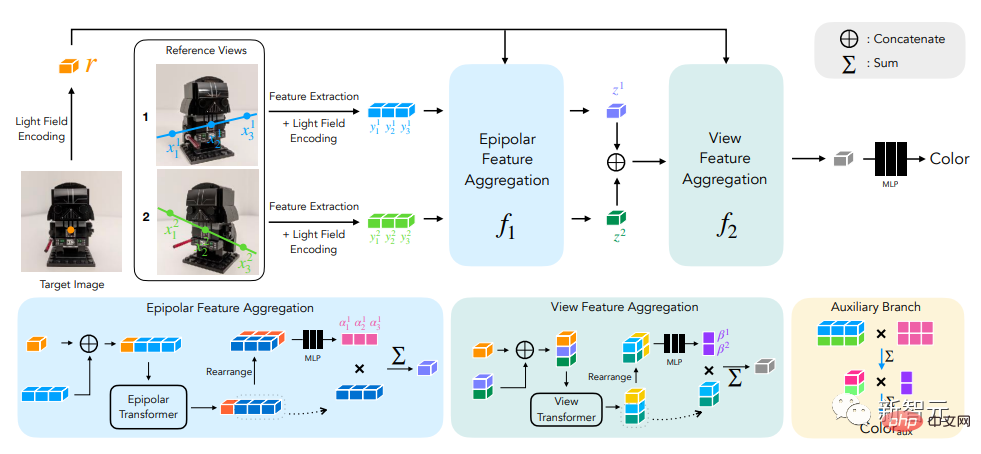

First, epipolar geometry is used to extract patches along the epipolar line in each reference view. Each patch is linearly projected into a one-dimensional feature vector, and the resulting set is processed by a sequence of Transformers.
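
The patch-to-token step can be sketched as below: each patch is flattened and passed through a single linear layer, producing a set of equal-length tokens a Transformer can consume. This is a minimal pure-Python sketch, not the paper's code; the 3×3 RGB patch size, the token width of 8 and the random weights are illustrative assumptions (in the real model the projection is learned).

```python
import random
from typing import List

Patch = List[List[List[float]]]  # indexed [row][col][channel]


def flatten_patch(patch: Patch) -> List[float]:
    """Row-major flattening of an H x W x C patch into one vector."""
    return [value for row in patch for pixel in row for value in pixel]


def linear_project(x: List[float], weight: List[List[float]], bias: List[float]) -> List[float]:
    """Compute W @ x + b, where W has one row per output feature."""
    for row in weight:
        assert len(row) == len(x), "weight rows must match the input length"
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def embed_patches(patches: List[Patch], weight, bias) -> List[List[float]]:
    """Turn a set of patches into a set of tokens, one feature vector per patch."""
    return [linear_project(flatten_patch(p), weight, bias) for p in patches]


# Toy setup (hypothetical sizes): 4 patches of 3x3 RGB pixels, projected to 8-dim tokens.
rng = random.Random(0)
patches = [[[[rng.random() for _ in range(3)] for _ in range(3)] for _ in range(3)]
           for _ in range(4)]
d_in, d_model = 3 * 3 * 3, 8
weight = [[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(d_model)]
bias = [0.0] * d_model
tokens = embed_patches(patches, weight, bias)
print(len(tokens), len(tokens[0]))  # prints: 4 8
```

Because every patch is embedded the same way, the order of the tokens carries no meaning by itself; position has to be supplied separately through the positional encoding.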

For positional encoding, the researchers parameterize rays in a way similar to the light field representation. The difference is that the coordinates are canonicalized relative to the target ray, which makes the method independent of the reference frame and improves generalization.

The model's novelty is that it performs image-based rendering, combining the colors and features of the reference images to render new views, and that it is purely Transformer-based, operating on sets of image patches. It also uses a 4D light field representation for positional encoding, which helps it model view-dependent effects.

Experimental results show that the method outperforms other approaches at novel view synthesis of unseen scenes, even when trained on much less data.

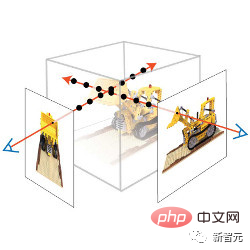

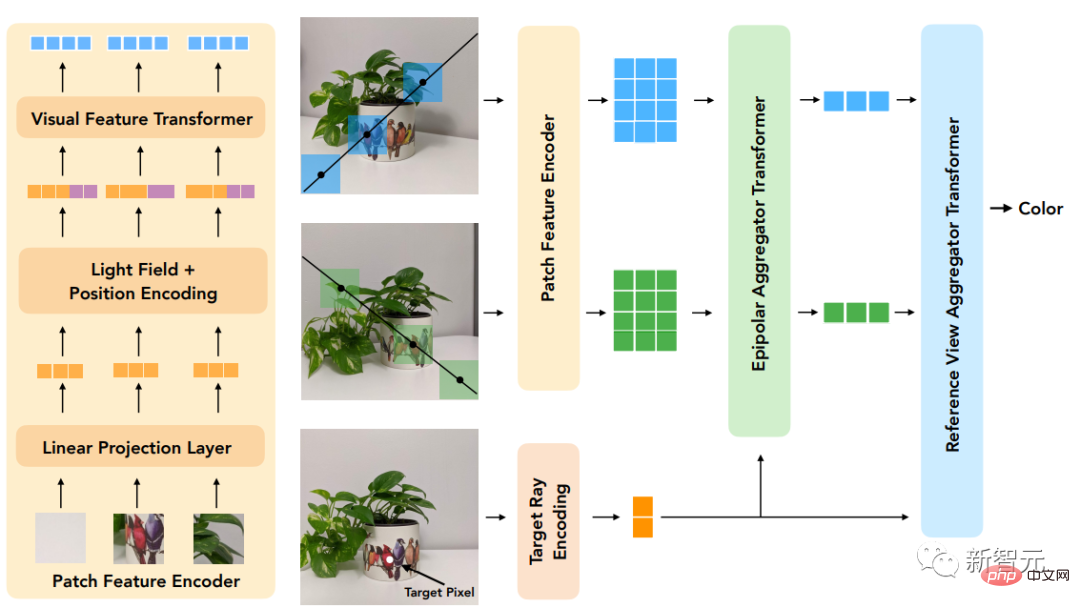

Light Field Neural Rendering

The input to the model consists of a set of reference images, their camera parameters (focal length, position and orientation in space), and the coordinates of the target ray whose color the user wants to determine.

To generate a new image, we start from the camera parameters of the image to be rendered, compute the coordinates of the target rays (one per pixel), and query the model for each of them.
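
Computing one target ray per pixel from camera parameters can be sketched for an ideal pinhole camera as follows. The image-centre principal point, the OpenCV-style axis convention and the name `generate_rays` are assumptions for illustration; the paper's camera model and conventions may differ. Each returned (origin, direction) pair is one query to the model.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def generate_rays(width: int, height: int, focal: float,
                  cam_to_world: Sequence[Sequence[float]],
                  cam_position: Vec3) -> List[Tuple[Vec3, Vec3]]:
    """Return one (origin, unit direction) ray per pixel, in row-major order.

    Assumes an ideal pinhole camera with the principal point at the image centre
    and an OpenCV-style camera frame (+x right, +y down, +z forward).
    """
    cx, cy = width / 2.0, height / 2.0
    rays = []
    for y in range(height):
        for x in range(width):
            # Direction through the pixel centre, in camera coordinates.
            d_cam = ((x + 0.5 - cx) / focal, (y + 0.5 - cy) / focal, 1.0)
            # Rotate into world coordinates with the camera-to-world rotation.
            d_world = [sum(cam_to_world[r][c] * d_cam[c] for c in range(3)) for r in range(3)]
            norm = math.sqrt(sum(v * v for v in d_world))
            rays.append((tuple(cam_position), tuple(v / norm for v in d_world)))
    return rays


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rays = generate_rays(3, 3, focal=2.0, cam_to_world=identity, cam_position=(0.0, 0.0, 0.0))
print(len(rays))   # one ray per pixel
print(rays[4][1])  # the centre pixel looks straight along +z
```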

Rather than processing each reference image in full, the researchers look only at the regions that can influence the target pixel. These regions are determined by epipolar geometry, which maps each target pixel to a line in each reference frame.
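
The epipolar line can be traced without forming a fundamental matrix: sample 3D points at several depths along the target ray and project each into the reference camera. This sketch assumes a pinhole reference camera given by a world-to-camera rotation and translation; the depths, the camera placement and the helper names are hypothetical. Because the reference camera in the example is shifted only along x, all samples share the same v coordinate, i.e. the epipolar line is horizontal.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project(point: Vec3, rot_w2c: Sequence[Sequence[float]], trans_w2c: Vec3,
            focal: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a world point into a reference image (None if behind it)."""
    p = [sum(rot_w2c[r][c] * point[c] for c in range(3)) + trans_w2c[r] for r in range(3)]
    if p[2] <= 1e-9:
        return None
    return (focal * p[0] / p[2] + cx, focal * p[1] / p[2] + cy)


def epipolar_samples(ray_origin: Vec3, ray_dir: Vec3, depths: Sequence[float],
                     rot_w2c, trans_w2c, focal, cx, cy) -> List[Tuple[float, float]]:
    """Project points at several depths along the target ray into one reference view.

    All projected points lie on the epipolar line of the target pixel.
    """
    samples = []
    for t in depths:
        x = tuple(o + t * d for o, d in zip(ray_origin, ray_dir))
        uv = project(x, rot_w2c, trans_w2c, focal, cx, cy)
        if uv is not None:
            samples.append(uv)
    return samples


# Reference camera: same orientation as the world, placed at x = 1 (so t_w2c = (-1, 0, 0)).
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = epipolar_samples((0.0, 0.0, 0.0), (0.1, 0.2, 1.0), [1.0, 2.0, 4.0, 8.0],
                          identity, (-1.0, 0.0, 0.0), focal=100.0, cx=64.0, cy=64.0)
for u, v in points:
    print(f"u={u:.1f}, v={v:.1f}")
```

Some samples may fall outside the reference image (here the nearest one does); a real pipeline would discard or pad them.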

To be robust, a small region is taken around each of several points on the epipolar line, forming the set of patches the model actually processes. A Transformer is then applied to this set to obtain the color of the target pixel.
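
Gathering that patch set could look like the sketch below, which crops a small square around each projected point. The patch size, rounding to the nearest pixel and clamping at the image border are illustrative assumptions, not details confirmed by the paper; the points would come from projecting samples along the target ray into the reference image.

```python
from typing import List, Sequence, Tuple


def crop_patch(image: Sequence[Sequence[float]], u: float, v: float,
               size: int = 3) -> List[List[float]]:
    """Cut a size x size patch centred on the (rounded) point (u, v).

    Out-of-bounds pixels are clamped to the nearest border pixel so every patch
    has the same shape (an assumed boundary rule).
    """
    if size % 2 != 1:
        raise ValueError("size must be odd")
    h, w = len(image), len(image[0])
    half = size // 2
    cu, cv = int(round(u)), int(round(v))
    patch = []
    for dy in range(-half, half + 1):
        y = min(max(cv + dy, 0), h - 1)
        patch.append([image[y][min(max(cu + dx, 0), w - 1)] for dx in range(-half, half + 1)])
    return patch


def patches_along_line(image, points: Sequence[Tuple[float, float]], size: int = 3):
    """One patch per sampled epipolar point: the set the Transformer consumes."""
    return [crop_patch(image, u, v, size) for u, v in points]


# A 5x5 single-channel image whose pixel value encodes its position (10 * row + col).
image = [[10 * r + c for c in range(5)] for r in range(5)]
for patch in patches_along_line(image, [(2.0, 2.0), (0.0, 0.0)]):
    print(patch)
```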

Transformers are especially well suited here: self-attention naturally takes a set of patches as input, and the attention weights themselves can be used to combine reference-view colors and features to predict the output pixel color.
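
How attention weights can directly blend reference colors can be illustrated with a single query attending over a set of patch features. This is a deliberately stripped-down sketch: a real Transformer uses learned query, key and value projections and multiple heads, whereas here the query and keys are hand-picked vectors and the values are the reference colors themselves.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_blend(query: Sequence[float], keys: Sequence[Sequence[float]],
                    colors: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention with a single query.

    The weights come from query/key similarity and are used directly to average
    the reference colors, so the output is a convex combination of them.
    """
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * k for q, k in zip(query, key)) for key in keys])
    color = [sum(w * c[ch] for w, c in zip(weights, colors)) for ch in range(len(colors[0]))]
    return color, weights


# The query matches the first patch's feature far better than the second.
color, weights = attention_blend(
    query=[10.0, 0.0],
    keys=[[10.0, 0.0], [0.0, 10.0]],
    colors=[[0.9, 0.1, 0.1], [0.1, 0.1, 0.9]],
)
print([round(w, 4) for w in weights], [round(c, 3) for c in color])
```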

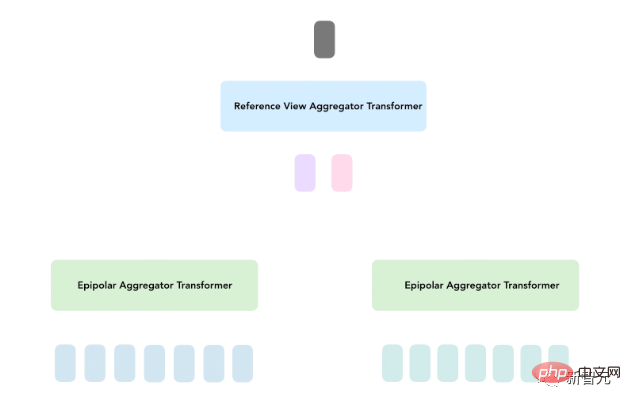

In light field neural rendering (LFNR), the researchers use a sequence of two Transformers to map a set of patches to the target pixel color.

The first Transformer aggregates information along each epipolar line, and the second aggregates information across the reference images.
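
The two-stage structure can be sketched by replacing each Transformer with a single attention-pooling step: the tokens along one epipolar line are pooled per reference view, then the per-view results are pooled across views. This is a structural illustration only, assuming a fixed query and no learned weights, residual connections or feed-forward layers; `attend_pool` and `two_stage_color` are hypothetical names. Stage 1 sees one reference view at a time, which is the per-view independence that later limits generalization across scenes.

```python
import math
from typing import List, Sequence, Tuple

Token = Tuple[List[float], List[float]]  # (feature vector, RGB color)


def _softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_pool(query: Sequence[float], tokens: Sequence[Token]) -> Token:
    """Collapse a set of tokens into one by attention-weighted averaging."""
    d = len(query)
    weights = _softmax([sum(q * f for q, f in zip(query, feat)) / math.sqrt(d)
                        for feat, _ in tokens])
    feat = [sum(w * t[0][k] for w, t in zip(weights, tokens)) for k in range(d)]
    rgb = [sum(w * t[1][k] for w, t in zip(weights, tokens)) for k in range(3)]
    return feat, rgb


def two_stage_color(query: Sequence[float], views: Sequence[Sequence[Token]]) -> List[float]:
    """views[i] holds the epipolar tokens extracted from reference image i."""
    # Stage 1: aggregate along each epipolar line, independently per reference view.
    per_view = [attend_pool(query, tokens) for tokens in views]
    # Stage 2: aggregate the per-view summaries across reference views.
    _, rgb = attend_pool(query, per_view)
    return rgb


views = [
    [([1.0, 0.0], [0.8, 0.2, 0.2]), ([0.0, 1.0], [0.1, 0.1, 0.1])],  # reference view 0
    [([0.9, 0.1], [0.7, 0.3, 0.2]), ([0.2, 0.8], [0.2, 0.2, 0.2])],  # reference view 1
]
print(two_stage_color([3.0, 0.0], views))
```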

The first Transformer can be interpreted as finding potential correspondences of the target pixel in each reference frame, while the second reasons about occlusion and view-dependent effects, which are common difficulties in image-based rendering.

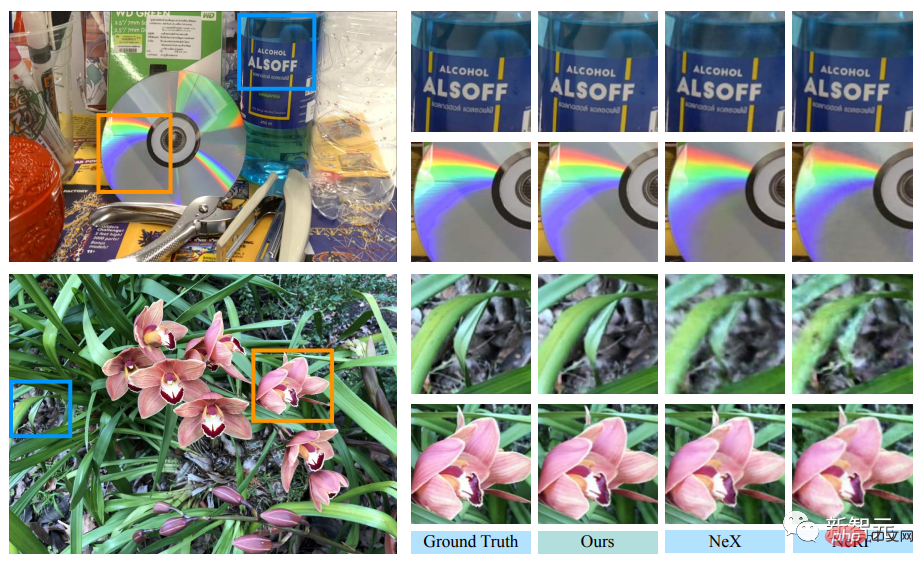

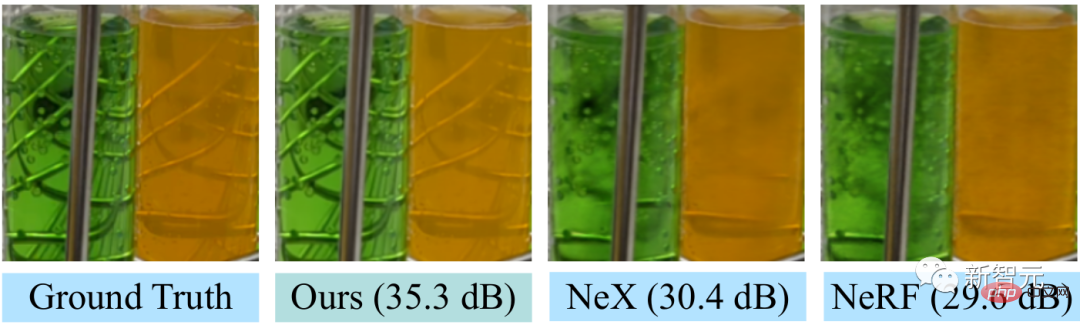

LFNR outperforms state-of-the-art models on the most popular view synthesis benchmarks (NeRF's Blender and Real Forward-Facing scenes, and NeX's Shiny), improving peak signal-to-noise ratio (PSNR) by up to 5 dB, which corresponds to reducing the pixel-level error by a factor of 1.8.
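
The conversion from a PSNR gain to an error ratio follows from the definition PSNR = 10·log10(MAX²/MSE): a gain of Δ dB divides the MSE by 10^(Δ/10) and the root-mean-square error by 10^(Δ/20). Assuming "pixel-level error" means RMSE, a 5 dB gain gives 10^0.25 ≈ 1.78, consistent with the quoted factor of 1.8. The function names below are illustrative.

```python
import math


def psnr(mse: float, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def error_reduction(delta_db: float):
    """Factors by which MSE and RMSE shrink for a PSNR gain of delta_db."""
    return 10 ** (delta_db / 10), 10 ** (delta_db / 20)


mse_factor, rmse_factor = error_reduction(5.0)
print(f"MSE / {mse_factor:.2f}, RMSE / {rmse_factor:.2f}")  # MSE / 3.16, RMSE / 1.78

# Sanity check with concrete numbers: shrinking the RMSE by that factor adds 5 dB.
base_rmse = 0.05
print(psnr((base_rmse / rmse_factor) ** 2) - psnr(base_rmse ** 2))
```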

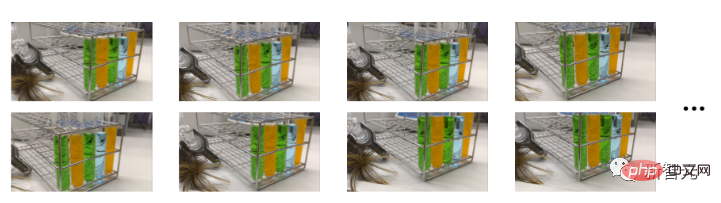

LFNR can reproduce some of the more difficult view-dependent effects in the NeX/Shiny dataset, such as the rainbow and reflections on a CD, and the reflections, refraction and translucency of bottles.

By comparison, earlier methods such as NeX and NeRF fail to reproduce view-dependent effects such as the translucency and refraction of the test tubes in the lab scene of the NeX/Shiny dataset.

But LFNR also has limitations.

The first Transformer collapses the information along each epipolar line independently for each reference image. This means the model can decide what information to keep based only on the output ray coordinates and the patches from that single reference image. That works well when training on a single scene (as with most neural rendering methods), but it does not generalize across scenes.

Generalizable models are important because they can be applied directly to new scenes without retraining.

The researchers proposed a generalizable patch-based neural rendering (GPNR) model to address this shortcoming of LFNR.

GPNR adds a Transformer that runs before the other two and exchanges information between points at the same depth across all reference images.

GPNR consists of a sequence of three Transformers that map a set of patches extracted along epipolar lines to pixel colors. Image patches are mapped to initial features by a linear projection layer, and the model then progressively refines and aggregates these features to produce the final features and color.
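
The three-stage ordering can be sketched as follows: a self-attention step first mixes the tokens that share a depth index across all reference views (the same 3D point on the target ray, seen from each view), then tokens are pooled along each epipolar line, and finally across views. Each Transformer is reduced to a single unlearned attention operation, and the `grid[view][depth]` layout and all function names are assumptions made for illustration; the sketch shows only the order in which information flows, not the paper's architecture in detail.

```python
import math
from typing import List, Sequence, Tuple

Token = Tuple[List[float], List[float]]  # (feature vector, RGB color)


def _softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def _scores(query: Sequence[float], feats: Sequence[Sequence[float]]) -> List[float]:
    d = len(query)
    return [sum(q * f for q, f in zip(query, feat)) / math.sqrt(d) for feat in feats]


def self_attend(feats: List[List[float]]) -> List[List[float]]:
    """Every feature attends to all features in the set; the result is added back (residual)."""
    out = []
    for q in feats:
        w = _softmax(_scores(q, feats))
        mixed = [sum(wi * f[k] for wi, f in zip(w, feats)) for k in range(len(q))]
        out.append([a + b for a, b in zip(q, mixed)])
    return out


def pool(query: Sequence[float], tokens: Sequence[Token]) -> Token:
    """Attention-weighted average of features and colors."""
    w = _softmax(_scores(query, [f for f, _ in tokens]))
    feat = [sum(wi * t[0][k] for wi, t in zip(w, tokens)) for k in range(len(query))]
    rgb = [sum(wi * t[1][k] for wi, t in zip(w, tokens)) for k in range(3)]
    return feat, rgb


def gpnr_color(query: Sequence[float], grid: List[List[Token]]) -> List[float]:
    """grid[view][depth]: token for one depth sample on one view's epipolar line."""
    grid = [list(row) for row in grid]  # work on a copy; leave the caller's grid intact
    n_views, n_depths = len(grid), len(grid[0])
    # Stage 1 (new in GPNR): exchange information among all views at the same depth.
    for j in range(n_depths):
        mixed = self_attend([grid[i][j][0] for i in range(n_views)])
        for i in range(n_views):
            grid[i][j] = (mixed[i], grid[i][j][1])
    # Stage 2: aggregate along each epipolar line (per view).
    per_view = [pool(query, grid[i]) for i in range(n_views)]
    # Stage 3: aggregate across views into the final color.
    return pool(query, per_view)[1]


grid = [
    [([1.0, 0.0], [0.9, 0.2, 0.1]), ([0.0, 1.0], [0.3, 0.3, 0.3]), ([0.5, 0.5], [0.2, 0.6, 0.2])],
    [([0.8, 0.1], [0.8, 0.3, 0.1]), ([0.1, 0.9], [0.4, 0.4, 0.4]), ([0.4, 0.6], [0.1, 0.5, 0.3])],
]
print(gpnr_color([2.0, 0.0], grid))
```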

For example, once the first Transformer has processed the sequence of patches from the "park bench" scene, the model can use cues such as a flower appearing at corresponding depths in two views to identify a potential match.

Another key idea of this work is to canonicalize the positional encoding with respect to the target ray: to generalize across scenes, quantities must be expressed in a relative rather than an absolute frame of reference.

To evaluate the model's generalization, the researchers trained GPNR on one set of scenes and tested it on new scenes.

GPNR improves PSNR by 0.5 to 1.0 dB on average across several benchmarks (following the IBRNet and MVSNeRF protocols). On the IBRNet benchmark in particular, GPNR outperforms the baseline models while using only 11% of the training scenes.

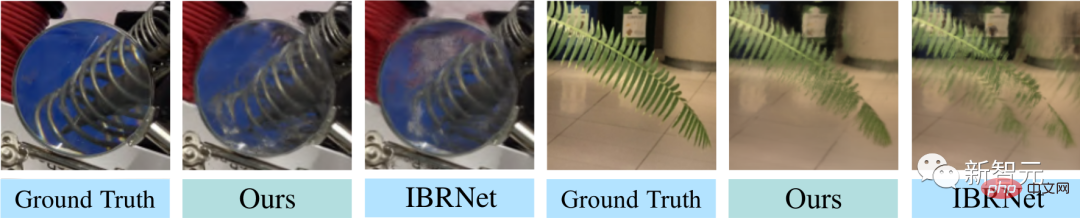

Details of views generated by GPNR on held-out scenes from NeX/Shiny and LLFF, without any fine-tuning. Compared with IBRNet, GPNR more accurately reproduces the details on the leaves and the refraction through the lens.

The above is the detailed content of Just train once to generate new 3D scenes! The evolution history of Google's 'Light Field Neural Rendering”. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Current deep edge detection networks usually adopt an encoder-decoder architecture, which contains up and down sampling modules to better extract multi-level features. However, this structure limits the network to output accurate and detailed edge detection results. In response to this problem, a paper on AAAI2024 provides a new solution. Thesis title: DiffusionEdge:DiffusionProbabilisticModelforCrispEdgeDetection Authors: Ye Yunfan (National University of Defense Technology), Xu Kai (National University of Defense Technology), Huang Yuxing (National University of Defense Technology), Yi Renjiao (National University of Defense Technology), Cai Zhiping (National University of Defense Technology) Paper link: https ://ar

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

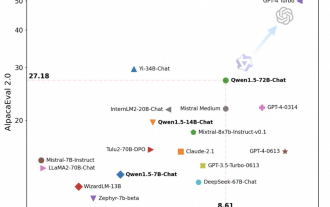

In time for the Spring Festival, version 1.5 of Tongyi Qianwen Model (Qwen) is online. This morning, the news of the new version attracted the attention of the AI community. The new version of the large model includes six model sizes: 0.5B, 1.8B, 4B, 7B, 14B and 72B. Among them, the performance of the strongest version surpasses GPT3.5 and Mistral-Medium. This version includes Base model and Chat model, and provides multi-language support. Alibaba’s Tongyi Qianwen team stated that the relevant technology has also been launched on the Tongyi Qianwen official website and Tongyi Qianwen App. In addition, today's release of Qwen 1.5 also has the following highlights: supports 32K context length; opens the checkpoint of the Base+Chat model;

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

What? Is Zootopia brought into reality by domestic AI? Exposed together with the video is a new large-scale domestic video generation model called "Keling". Sora uses a similar technical route and combines a number of self-developed technological innovations to produce videos that not only have large and reasonable movements, but also simulate the characteristics of the physical world and have strong conceptual combination capabilities and imagination. According to the data, Keling supports the generation of ultra-long videos of up to 2 minutes at 30fps, with resolutions up to 1080p, and supports multiple aspect ratios. Another important point is that Keling is not a demo or video result demonstration released by the laboratory, but a product-level application launched by Kuaishou, a leading player in the short video field. Moreover, the main focus is to be pragmatic, not to write blank checks, and to go online as soon as it is released. The large model of Ke Ling is already available in Kuaiying.

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

Recently, the military circle has been overwhelmed by the news: US military fighter jets can now complete fully automatic air combat using AI. Yes, just recently, the US military’s AI fighter jet was made public for the first time and the mystery was unveiled. The full name of this fighter is the Variable Stability Simulator Test Aircraft (VISTA). It was personally flown by the Secretary of the US Air Force to simulate a one-on-one air battle. On May 2, U.S. Air Force Secretary Frank Kendall took off in an X-62AVISTA at Edwards Air Force Base. Note that during the one-hour flight, all flight actions were completed autonomously by AI! Kendall said - "For the past few decades, we have been thinking about the unlimited potential of autonomous air-to-air combat, but it has always seemed out of reach." However now,