Edge training in under 256KB of memory, at less than one-thousandth of PyTorch's overhead

When it comes to neural network training, most people picture GPU servers in the cloud. Because training has a huge memory footprint, it is usually done in the cloud, and edge platforms handle only inference. This design, however, makes it hard for AI models to adapt to new data: the real world is dynamic and constantly changing, so how could a single round of training cover every scenario?

To let models keep adapting to new data, can we train on the edge (on-device training) so that the device continuously learns on its own? In this work, we implement on-device training with less than 256KB of memory, at less than 1/1000 of PyTorch's memory overhead. At the same time, we match cloud-training accuracy on the Visual Wake Words (VWW) task. The technique lets models adapt to new sensor data, so users can enjoy customized services without uploading their data to the cloud, which protects their privacy.

- Website: https://tinytraining.mit.edu/

- Paper: https://arxiv.org/abs/2206.15472

- Demo: https://www.bilibili.com/video/BV1qv4y1d7MV

- Code: https://github.com/mit-han-lab/tiny-training

Background

On-device training allows pre-trained models to adapt to new environments after deployment. By training and adapting locally on mobile devices, a model can keep improving and be customized for its user. For example, fine-tuning a language model lets it learn from the user's input history, and adapting a vision model lets a smart camera keep recognizing new objects. By moving training closer to the end device rather than the cloud, we can improve model quality while protecting user privacy, especially when handling private information such as medical data and input history.

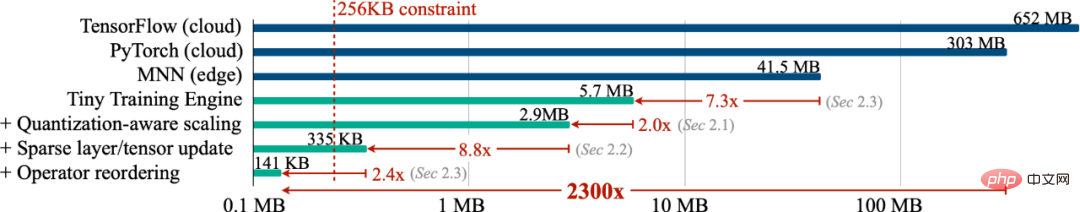

However, training on tiny IoT devices is fundamentally different from cloud training and very challenging. First, AIoT devices (microcontrollers, MCUs) usually have very limited SRAM (e.g., 256KB). With this little memory even inference is difficult, let alone training. Second, existing low-cost, efficient transfer learning methods, such as training only the last-layer classifier (last FC) or learning only the bias terms, often deliver unsatisfactory accuracy and are impractical, let alone suitable for modern applications. Moreover, some deep learning frameworks cannot translate the theoretical savings of these algorithms into measured savings. Finally, modern training frameworks (PyTorch, TensorFlow) are designed for cloud servers, and training even a small model (MobileNetV2-w0.35) requires a large amount of memory when the batch size is set to 1. We therefore need to co-design algorithms and systems to enable training on smart edge devices.

Methods and Results

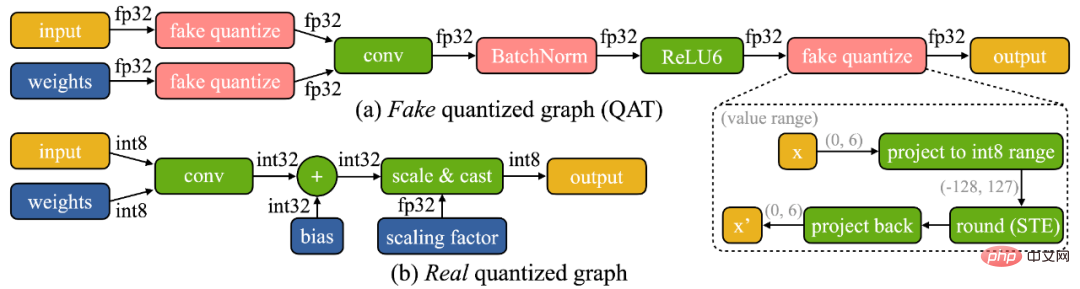

We found that on-device training faces two unique challenges: (1) models on edge devices are quantized. A truly quantized graph (as shown below) is hard to optimize because of its low-precision tensors and the lack of batch normalization layers; (2) the limited memory and compute of tiny hardware do not allow full backpropagation, whose memory usage can easily exceed the microcontroller's SRAM by more than an order of magnitude, yet updating only the last layer inevitably leads to unsatisfactory accuracy.

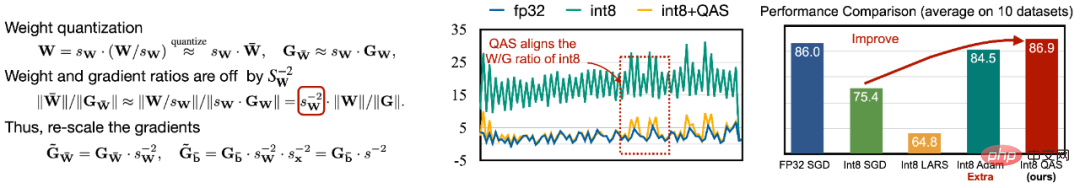

To address the optimization difficulty, we propose Quantization-Aware Scaling (QAS), which automatically scales the gradients of tensors with different bit precisions (left figure below). QAS automatically matches the scales of gradients and parameters and stabilizes training without any additional hyperparameters. On 8 datasets, QAS achieves performance consistent with floating-point training (right figure below).

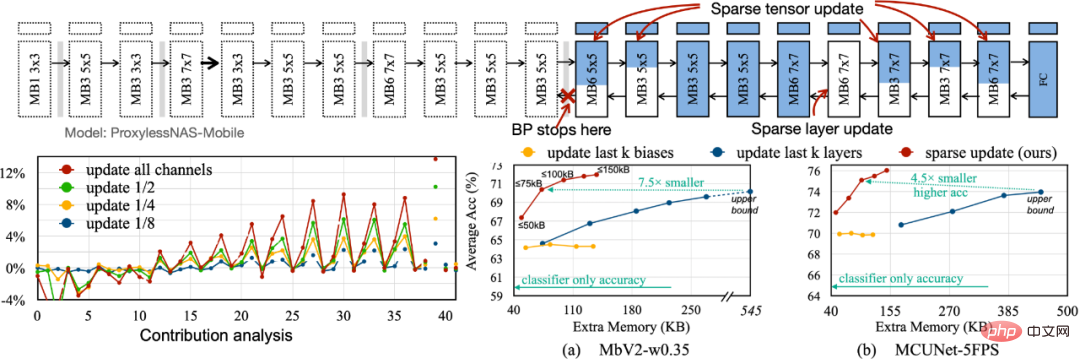
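The scale mismatch that QAS corrects can be checked numerically. This minimal sketch assumes a per-tensor weight scale s_W with real weights W = s_W * W_bar, so by the chain rule the gradient with respect to the integer weights is G_W_bar = s_W * G_W, and the weight-to-gradient norm ratio ends up off by a factor of s_W^-2. It assumes the QAS rule multiplies weight gradients by s_W^-2 (and bias gradients, quantized with scale s_W * s_x, additionally by s_x^-2); please check the paper for the exact per-channel form. The helper names and numbers are hypothetical.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of numbers."""
    return math.sqrt(sum(v * v for v in values))


def quantize(weights, scale):
    """Map real weights to the integer domain: W_bar = round(W / s_W)."""
    return [round(w / scale) for w in weights]


def grad_wrt_quantized(grad_real, scale):
    """Chain rule for W = s_W * W_bar: dL/dW_bar = s_W * dL/dW."""
    return [scale * g for g in grad_real]


def qas_scale(grad_q, scale_w, scale_x=None):
    """Quantization-aware scaling (sketch): multiply the gradient by s_W^-2,
    and additionally by s_x^-2 for a bias quantized with scale s_W * s_x."""
    factor = scale_w ** -2
    if scale_x is not None:
        factor *= scale_x ** -2
    return [g * factor for g in grad_q]


# Hypothetical per-tensor example.
s_w = 0.001
w_real = [0.02, -0.01, 0.03]
g_real = [0.5, -0.25, 0.1]

w_q = quantize(w_real, s_w)            # integer weights [20, -10, 30]
g_q = grad_wrt_quantized(g_real, s_w)  # gradient seen by the quantized graph
g_qas = qas_scale(g_q, s_w)

ratio_fp = l2_norm(w_real) / l2_norm(g_real)
ratio_q = l2_norm(w_q) / l2_norm(g_q)
ratio_qas = l2_norm(w_q) / l2_norm(g_qas)

print(f"float ||W||/||G||     = {ratio_fp:.4g}")
print(f"quantized, no scaling = {ratio_q:.4g}")    # off by s_W^-2
print(f"quantized, with QAS   = {ratio_qas:.4g}")  # matches the float ratio
```

Without scaling, the integer graph's weights look about s_W^-2 times "larger" relative to their gradients than in floating point, which is why plain SGD on the quantized graph behaves so differently; the rescaled gradient restores the floating-point ratio.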

To reduce the memory footprint of backpropagation, we propose Sparse Update, which skips the gradient computation of less important layers and sub-tensors. We develop an automatic method based on contribution analysis to find the optimal update scheme. Compared with the previous bias-only and last-k-layers update schemes, the sparse update scheme we found saves 4.5x to 7.5x memory while achieving even higher average accuracy on 8 downstream datasets.
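Finding an update scheme can be framed as choosing which tensors to update so that the total accuracy contribution is maximized under a memory budget. The sketch below assumes, as a simplification, that per-tensor contributions add up, and it replaces the paper's search procedure with an exhaustive search that is only practical for a handful of candidates. The tensor names, contribution scores, and memory costs are all hypothetical.

```python
from itertools import combinations


def best_update_scheme(candidates, memory_budget_kb):
    """Return (chosen_names, total_contribution, total_memory_kb).

    candidates: dict mapping a tensor name to (accuracy_contribution, memory_kb).
    Contributions are assumed to be additive (a simplifying assumption).
    """
    names = list(candidates)
    best = ((), 0.0, 0)
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            memory = sum(candidates[n][1] for n in subset)
            if memory > memory_budget_kb:
                continue  # this scheme would not fit in SRAM
            gain = sum(candidates[n][0] for n in subset)
            if gain > best[1]:
                best = (subset, gain, memory)
    return best


# Hypothetical contribution-analysis results: (accuracy gain in %, memory in KB).
candidates = {
    "conv1.bias": (0.5, 2),
    "block3.weight": (1.2, 20),
    "block5.weight": (1.5, 30),
    "block7.weight": (0.9, 12),
    "classifier": (2.0, 16),
}

chosen, gain, memory = best_update_scheme(candidates, memory_budget_kb=50)
print(sorted(chosen), round(gain, 2), memory)
```

With these made-up numbers the search drops the single most memory-hungry weight tensor and keeps a mix of biases, intermediate weights, and the classifier, which is the kind of non-contiguous scheme that bias-only or last-k-layers updates cannot express.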

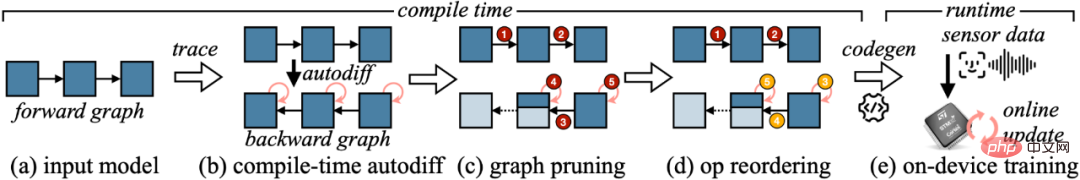

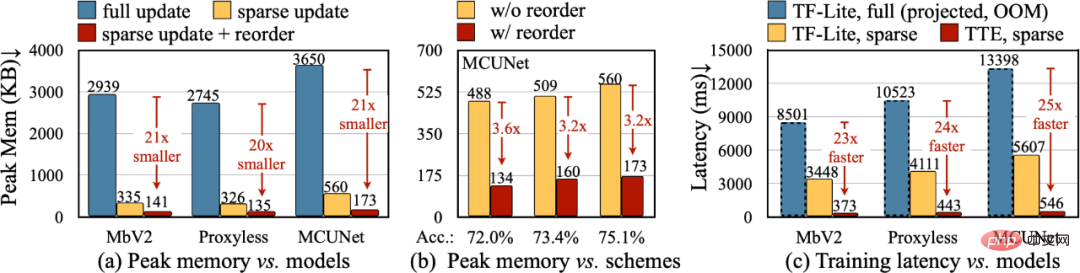

To turn the algorithm's theoretical savings into measured ones, we designed the Tiny Training Engine (TTE): it moves automatic differentiation from runtime to compile time and uses code generation (codegen) to reduce runtime overhead. It also supports graph pruning and operator reordering for real memory savings and speedups. Compared with full update, sparse update reduces peak memory by 7-9x, and reordering raises the total memory saving to 20-21x. Compared with TF-Lite, TTE's optimized kernels and sparse update speed up overall training by 23-25x.
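Graph pruning and operator reordering can be illustrated on a simple chain of layers. This sketch assumes a plain sequential network: backward ops are generated ahead of time only for what the sparse update needs (pruning), and the resulting plan is scheduled either by keeping every weight gradient until a final optimizer step or by applying each update as soon as its gradient is computed (reordering). The op names, layer indices, and gradient sizes are hypothetical, and only weight-gradient buffers are counted, not activations.

```python
def build_backward_plan(num_layers, trainable):
    """Compile-time pruning for a sequential net (layers 0..num_layers-1).

    Weight-gradient ops are emitted only for trainable layers, and the
    input-gradient chain stops at the earliest trainable layer, because
    nothing below it needs a gradient.
    """
    if not trainable:
        return []
    lowest = min(trainable)
    plan = []
    for i in reversed(range(lowest, num_layers)):
        if i in trainable:
            plan.append(("grad_w", i))
        if i > lowest:
            plan.append(("grad_x", i))  # propagate the error to layer i-1
    return plan


def peak_grad_memory(plan, grad_kb, reorder):
    """Peak memory (KB) held by weight-gradient buffers.

    reorder=False: all weight gradients wait for one optimizer step at the end.
    reorder=True: each update is applied right after its gradient is computed,
    so its buffer is freed immediately.
    """
    live, peak = 0, 0
    for op, layer in plan:
        if op != "grad_w":
            continue
        live += grad_kb[layer]
        peak = max(peak, live)
        if reorder:
            live -= grad_kb[layer]  # fused update frees the buffer
    return peak


grad_kb = [8, 16, 24, 32, 40]  # hypothetical weight-gradient sizes per layer
plan = build_backward_plan(5, trainable={2, 3, 4})
print(plan)
print(peak_grad_memory(plan, grad_kb, reorder=False))  # 24 + 32 + 40 = 96
print(peak_grad_memory(plan, grad_kb, reorder=True))   # largest single buffer = 40
```

Under these assumptions, layers 0 and 1 get no backward ops at all, and reordering shrinks the peak gradient memory from the sum of the live buffers to the largest single buffer.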

Conclusion

In this article, we propose the first solution for training on a microcontroller (using only 256KB of RAM and 1MB of Flash). Our system-algorithm co-design greatly reduces the memory required for training (1000x less than PyTorch) and the training time (20x faster than TF-Lite), while achieving higher accuracy on downstream tasks. Tiny Training can enable many interesting applications. For example, mobile phones can customize language models based on users' emails and input history, smart cameras can keep recognizing new faces and objects, and AI applications without Internet access (such as agriculture, marine, and industrial assembly lines) can keep learning. With our work, tiny edge devices can perform not only inference but also training. Throughout this process, personal data is never uploaded to the cloud, so there is no privacy risk. At the same time, the AI model can keep learning on its own to adapt to a dynamically changing world!

The above is the detailed content of Implement edge training with less than 256KB of memory, and the cost is less than one thousandth of PyTorch. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Large memory optimization, what should I do if the computer upgrades to 16g/32g memory speed and there is no change?

Jun 18, 2024 pm 06:51 PM

Large memory optimization, what should I do if the computer upgrades to 16g/32g memory speed and there is no change?

Jun 18, 2024 pm 06:51 PM

For mechanical hard drives or SATA solid-state drives, you will feel the increase in software running speed. If it is an NVME hard drive, you may not feel it. 1. Import the registry into the desktop and create a new text document, copy and paste the following content, save it as 1.reg, then right-click to merge and restart the computer. WindowsRegistryEditorVersion5.00[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SessionManager\MemoryManagement]"DisablePagingExecutive"=d

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

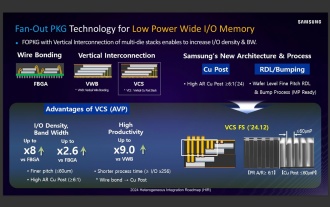

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

According to news from this website on September 3, Korean media etnews reported yesterday (local time) that Samsung Electronics and SK Hynix’s “HBM-like” stacked structure mobile memory products will be commercialized after 2026. Sources said that the two Korean memory giants regard stacked mobile memory as an important source of future revenue and plan to expand "HBM-like memory" to smartphones, tablets and laptops to provide power for end-side AI. According to previous reports on this site, Samsung Electronics’ product is called LPWide I/O memory, and SK Hynix calls this technology VFO. The two companies have used roughly the same technical route, which is to combine fan-out packaging and vertical channels. Samsung Electronics’ LPWide I/O memory has a bit width of 512

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping

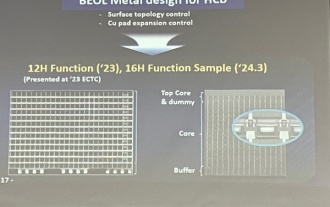

Samsung announced the completion of 16-layer hybrid bonding stacking process technology verification, which is expected to be widely used in HBM4 memory

Apr 07, 2024 pm 09:19 PM

Samsung announced the completion of 16-layer hybrid bonding stacking process technology verification, which is expected to be widely used in HBM4 memory

Apr 07, 2024 pm 09:19 PM

According to the report, Samsung Electronics executive Dae Woo Kim said that at the 2024 Korean Microelectronics and Packaging Society Annual Meeting, Samsung Electronics will complete the verification of the 16-layer hybrid bonding HBM memory technology. It is reported that this technology has passed technical verification. The report also stated that this technical verification will lay the foundation for the development of the memory market in the next few years. DaeWooKim said that Samsung Electronics has successfully manufactured a 16-layer stacked HBM3 memory based on hybrid bonding technology. The memory sample works normally. In the future, the 16-layer stacked hybrid bonding technology will be used for mass production of HBM4 memory. ▲Image source TheElec, same as below. Compared with the existing bonding process, hybrid bonding does not need to add bumps between DRAM memory layers, but directly connects the upper and lower layers copper to copper.

Lexar launches Ares Wings of War DDR5 7600 16GB x2 memory kit: Hynix A-die particles, 1,299 yuan

May 07, 2024 am 08:13 AM

Lexar launches Ares Wings of War DDR5 7600 16GB x2 memory kit: Hynix A-die particles, 1,299 yuan

May 07, 2024 am 08:13 AM

According to news from this website on May 6, Lexar launched the Ares Wings of War series DDR57600CL36 overclocking memory. The 16GBx2 set will be available for pre-sale at 0:00 on May 7 with a deposit of 50 yuan, and the price is 1,299 yuan. Lexar Wings of War memory uses Hynix A-die memory chips, supports Intel XMP3.0, and provides the following two overclocking presets: 7600MT/s: CL36-46-46-961.4V8000MT/s: CL38-48-49 -1001.45V In terms of heat dissipation, this memory set is equipped with a 1.8mm thick all-aluminum heat dissipation vest and is equipped with PMIC's exclusive thermal conductive silicone grease pad. The memory uses 8 high-brightness LED beads and supports 13 RGB lighting modes.

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

What? Is Zootopia brought into reality by domestic AI? Exposed together with the video is a new large-scale domestic video generation model called "Keling". Sora uses a similar technical route and combines a number of self-developed technological innovations to produce videos that not only have large and reasonable movements, but also simulate the characteristics of the physical world and have strong conceptual combination capabilities and imagination. According to the data, Keling supports the generation of ultra-long videos of up to 2 minutes at 30fps, with resolutions up to 1080p, and supports multiple aspect ratios. Another important point is that Keling is not a demo or video result demonstration released by the laboratory, but a product-level application launched by Kuaishou, a leading player in the short video field. Moreover, the main focus is to be pragmatic, not to write blank checks, and to go online as soon as it is released. The large model of Ke Ling is already available in Kuaiying.