Big things are happening in artificial intelligence, and LeCun has changed his position too

Symbol processing is a practice common in logic, mathematics, and computer science that treats thinking as a kind of algebraic operation. For nearly 70 years, the most fundamental debate in artificial intelligence has been whether AI systems should be built on symbol processing or on neural systems loosely modeled on the human brain.

There is also a third possibility, a middle ground: the hybrid model. Hybrid models try to get the best of both worlds by combining the data-driven learning of neural networks with the powerful abstraction capabilities of symbol processing. This is where I have worked for most of my career.

In a recent article in NOEMA magazine, Yann LeCun, Turing Award winner and Meta's chief AI scientist, and Jacob Browning, the "resident philosopher" of LeCun's lab, weighed in on this controversy. The article appears to offer a new alternative, but on closer inspection its ideas are neither new nor convincing.

In that article, LeCun and Browning formally responded for the first time to the idea that "deep learning has hit a wall," writing that "from the beginning, critics have prematurely argued that neural networks have hit an insurmountable wall, and each time it proved to be only a temporary hurdle."

At the beginning of the article, they seem to argue against hybrid models, which are usually defined as systems that combine deep learning with symbol processing. But by the end, LeCun, uncharacteristically, acknowledges in so many words that hybrid systems exist, that they matter, that they are a possible way forward, and that we have always known this. The article contradicts itself.

The only explanation I can think of for this contradiction is that LeCun and Browning somehow believe that a model that learns symbol processing is not a hybrid model. But learning is a developmental question (how does a system come about?), whereas how a developed system functions (with one mechanism or two) is a computational question. By any reasonable measure, a system that uses both symbolic and neural network mechanisms is a hybrid system. (Perhaps what they really mean is that AI is more likely to be a learned hybrid than an innate hybrid. But a learned hybrid is still a hybrid.)

In the 2010s, symbol processing was a dirty word among deep learning proponents; in the 2020s, understanding where it comes from should be our top priority.

My view is that either symbol processing is innate, or something else indirectly contributes to its acquisition. The sooner we figure out what foundation allows systems to learn symbolic abstractions, the sooner we can build systems that properly leverage all the world's knowledge, and the sooner those systems will become safer, more trustworthy, and more explainable.

However, first we need to understand the ins and outs of this important debate in the history of artificial intelligence development.

Early AI pioneers Marvin Minsky and John McCarthy believed that symbol processing was the only reasonable way forward, while neural network pioneer Frank Rosenblatt believed that AI would be better built on structures made of collections of neuron-like "nodes" that process data, letting statistics do the heavy lifting.

These two possibilities are not mutually exclusive. The "neural networks" used in AI are not literal networks of biological neurons. Rather, they are simplified digital models that bear some resemblance to actual biological brains but are far less complex. In principle, these abstract neurons can be connected in many different ways, some of which could directly implement logic and symbol processing. This possibility was explicitly acknowledged as early as 1943, in one of the field's earliest papers, Warren McCulloch and Walter Pitts's "A Logical Calculus of the Ideas Immanent in Nervous Activity."
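The idea that neuron-like units can be wired to compute logic can be made concrete with a minimal sketch. It assumes a common simplified threshold-unit formulation (a unit outputs 1 when the weighted sum of its binary inputs reaches a threshold); the weights and thresholds are illustrative choices, not values taken from the 1943 paper.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Hand-chosen weights and thresholds that turn single units into logic gates.
def AND(a, b):
    return threshold_unit((a, b), (1, 1), 2)

def OR(a, b):
    return threshold_unit((a, b), (1, 1), 1)

def NOT(a):
    # A negative (inhibitory) weight with threshold 0 inverts the input.
    return threshold_unit((a,), (-1,), 0)

# Units can be composed into larger circuits: XOR = (a OR b) AND NOT (a AND b).
def XOR(a, b):
    return AND(OR(a, b), NOT(AND(a, b)))

if __name__ == "__main__":
    for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

Nothing here is learned: the logical behavior comes entirely from how the units are connected, which is the sense in which some wirings "directly implement" symbolic operations.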

Frank Rosenblatt in the 1950s and David Rumelhart and Jay McClelland in the 1980s proposed neural networks as an alternative to symbol processing; Geoffrey Hinton generally supported this position.

The untold history here is that back in the early 2010s, LeCun, Hinton, and Yoshua Bengio were so enthusiastic about these multi-layer neural networks that were finally becoming practical that they wanted to do away with symbol processing entirely. By 2015, deep learning was still in its carefree, enthusiastic era, and LeCun, Bengio, and Hinton wrote a manifesto on deep learning in Nature. The article ends with an attack on symbols, arguing that "new paradigms are needed to replace rule-based operations on symbolic expressions with operations on large vectors."

In fact, Hinton was so convinced that symbol processing was a dead end that the same year he gave a lecture at Stanford called "Aetherial Symbols," likening symbols to one of the biggest mistakes in the history of science.

Hinton's collaborators Rumelhart and McClelland had made a similar point in the 1980s, arguing in a 1986 book that symbols are not "the essence of human computation."

When I wrote an article defending symbol processing in 2018, LeCun dismissed my hybrid-systems view on Twitter as "mostly wrong." Around the same time, Hinton likened my work to wasting time on "gasoline engines" when "electric engines" were the best way forward. As recently as November 2020, Hinton claimed that "deep learning will be able to do everything."

So when LeCun and Browning now write, without irony, that "everyone working in deep learning agrees that symbolic manipulation is a necessary feature for creating human-like AI," they are turning decades of debate on its head. As Stanford AI professor Christopher Manning put it: "LeCun's position has changed somewhat."

Clearly, the position of ten years ago no longer holds.

In the 2010s, many in the machine learning community asserted (without real evidence) that "symbols are biologically implausible." Ten years later, LeCun is considering a new approach that involves symbol processing, whether innate or learned. LeCun and Browning's new view that symbol processing is crucial represents a huge concession for the field of deep learning.

Historians of AI should regard the NOEMA article as a major turning point: in it, LeCun, one of the deep learning trio, directly acknowledges for the first time the inevitability of hybrid AI.

It is worth noting that earlier this year, two other prominent figures in deep learning, computer scientist Andrew Ng and LSTM co-creator Sepp Hochreiter, also expressed support for hybrid AI systems. And Jürgen Schmidhuber's AI company NNAISENSE centers its research on combining symbol processing and deep learning.

The remainder of LeCun and Browning's article can be roughly divided into three parts:

- A mischaracterization of my position;

- An effort to narrow the scope of hybrid models;

- A discussion of why symbol processing is learned rather than innate.

For example, LeCun and Browning write: "Marcus says that if you don't have symbolic manipulation at the start, you'll never have it." In fact, I made it explicit in my 2001 book The Algebraic Mind that we do not know whether symbol processing is innate.

They also claim that I expected "no further progress" in deep learning, when my actual point was not that there would be no further progress on any problem, but that deep learning on its own is the wrong tool for certain kinds of problems (such as compositionality and causal reasoning).

They also say I think "symbolic reasoning is all-or-nothing: since DALL-E doesn't have symbols and logical rules underlying its operations, it isn't actually reasoning with symbols." I said no such thing. DALL·E does not reason with symbols, but that does not mean any system that includes symbolic reasoning must be all-or-nothing. As far back as the 1970s, purely symbolic systems such as the expert system MYCIN could perform all kinds of quantitative reasoning.

Besides assuming that "a model containing learned symbols is not a hybrid model," they also try to equate a hybrid model with "a model containing a non-differentiable symbol processor." They think I equate hybrid models with "a simple combination of two things: inserting a hard-coded symbol-processing module on top of a pattern-completion deep learning module." In reality, everyone who actually works on neurosymbolic AI realizes that the job is not that simple.

Instead, as we all realize, the crux of the matter is finding the right way to build hybrid systems. Researchers have considered many different ways of combining symbols and neural networks, including extracting symbolic rules from neural networks, translating symbolic rules directly into neural networks, building intermediate systems that let information pass between neural networks and symbolic systems, and restructuring neural networks themselves. Many avenues are being explored.
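As a toy illustration of the "intermediate system" avenue, the sketch below passes information from a neural-style component to a symbolic one. The perception function is a hypothetical stand-in for a trained classifier (it just takes an argmax over given scores), and the symbolic part applies an exact rule to whatever symbols it receives; it does not reflect any specific published system.

```python
from typing import List, Sequence

DIGITS = "0123456789"

def neural_perceive(scores: Sequence[float]) -> str:
    """Hypothetical stand-in for a trained network: map per-class scores
    (e.g., from a digit classifier) to a discrete symbol via argmax."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return DIGITS[best]

def symbolic_add(symbols: Sequence[str]) -> int:
    """Exact symbolic rule: read each symbol as a digit and sum them.
    The rule treats every digit the same way, regardless of training data."""
    return sum(DIGITS.index(s) for s in symbols)

def hybrid_sum(score_vectors: Sequence[Sequence[float]]) -> int:
    # Interface between the two halves: perception emits symbols, the rule consumes them.
    symbols = [neural_perceive(v) for v in score_vectors]
    return symbolic_add(symbols)

def peaked_scores(index: int, peak: float = 0.9, size: int = 10) -> List[float]:
    """Made-up score vector that peaks at one class, for demonstration only."""
    scores = [0.01] * size
    scores[index] = peak
    return scores

if __name__ == "__main__":
    print(hybrid_sum([peaked_scores(3), peaked_scores(7)]))  # 10
```

The hard part that real neurosymbolic work addresses, such as training the perceptual half when only the final answer is supervised, is deliberately left out here.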

Finally, the most critical question: can symbol processing be learned, rather than being built in from the start?

My blunt answer: of course it can. As far as I know, no one denies that symbol processing can be learned. I addressed this question in 2001, in Section 6.1 of The Algebraic Mind, and while I thought it unlikely, I did not say it was absolutely impossible. Instead, I concluded: "These experiments and theories certainly do not guarantee that symbol-processing abilities are innate, but they do fit with that idea."

Overall, my argument has two parts:

The first is a "learnability" argument: throughout The Algebraic Mind, I showed that certain kinds of systems (essentially the precursors of today's deep learning systems) fail to learn various aspects of symbol processing, so there is no guarantee that any given system can learn it. As I put it in the book:

Some things must be innate. But there is no real conflict between "nature" and "nurture." Nature provides a set of mechanisms that allow us to interact with our environment, a set of tools to extract knowledge from the world, and a set of tools to exploit this knowledge. Without some innate learning tools, we wouldn't learn at all.

Developmental psychologist Elizabeth Spelke put it this way: "I think a system with some built-in starting points (such as objects, sets, and apparatus for symbol processing) will be a far more efficient way to understand the world than a pure blank slate." In fact, LeCun's own most famous work, on convolutional neural networks, illustrates this point.

The second point is that human infants show evidence of symbol-processing abilities. In an oft-cited set of rule-learning experiments from my lab, infants generalized abstract patterns beyond the specific examples on which they had been trained. Subsequent work on the implicit logical reasoning of human infants further supports this.
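To show what "generalizing beyond the specific examples" means computationally, here is a toy contrast, assuming three-syllable patterns of the ABA versus ABB kind; the syllables are made up. A learner that only memorizes seen sequences has nothing to say about a new one, while a rule stated over variables (identity between positions) applies to any syllables at all. The rule is hand-written and is not a model of how infants learn.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

def classify_pattern(seq: Sequence[str]) -> Optional[str]:
    """Classify a three-item sequence by identity relations between positions.
    The rule mentions positions (variables), not particular syllables."""
    if len(seq) != 3:
        return None
    a, b, c = seq
    if a != b and a == c:
        return "ABA"
    if a != b and b == c:
        return "ABB"
    return None

def make_memorizer(
    examples: List[Tuple[Sequence[str], str]]
) -> Callable[[Sequence[str]], Optional[str]]:
    """A pure lookup table: it can only label sequences it has already seen."""
    table: Dict[Tuple[str, ...], str] = {tuple(seq): label for seq, label in examples}
    return lambda seq: table.get(tuple(seq))

if __name__ == "__main__":
    training = [(["ga", "ti", "ga"], "ABA"), (["li", "na", "na"], "ABB")]
    memorize = make_memorizer(training)
    novel = ["wo", "fe", "wo"]
    print(memorize(novel))          # None: this exact sequence was never seen
    print(classify_pattern(novel))  # ABA: the rule extends to new syllables
```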

Unfortunately, LeCun and Browning sidestep both of my points entirely. Oddly, they instead equate learning symbols with late acquisitions such as "maps, pictorial representations, rituals, and even social roles," apparently unaware that several other cognitive scientists and I drew on a vast cognitive-science literature about infants, young children, and nonhuman animals. If a lamb can clamber down a hillside soon after birth, why can't a newborn neural network start out with a little symbol processing?

Finally, it is puzzling why LeCun and Browning go to such lengths to argue against the innateness of symbol processing. They give no strong in-principle argument against innateness, nor any principled reason to think symbol processing must be learned.

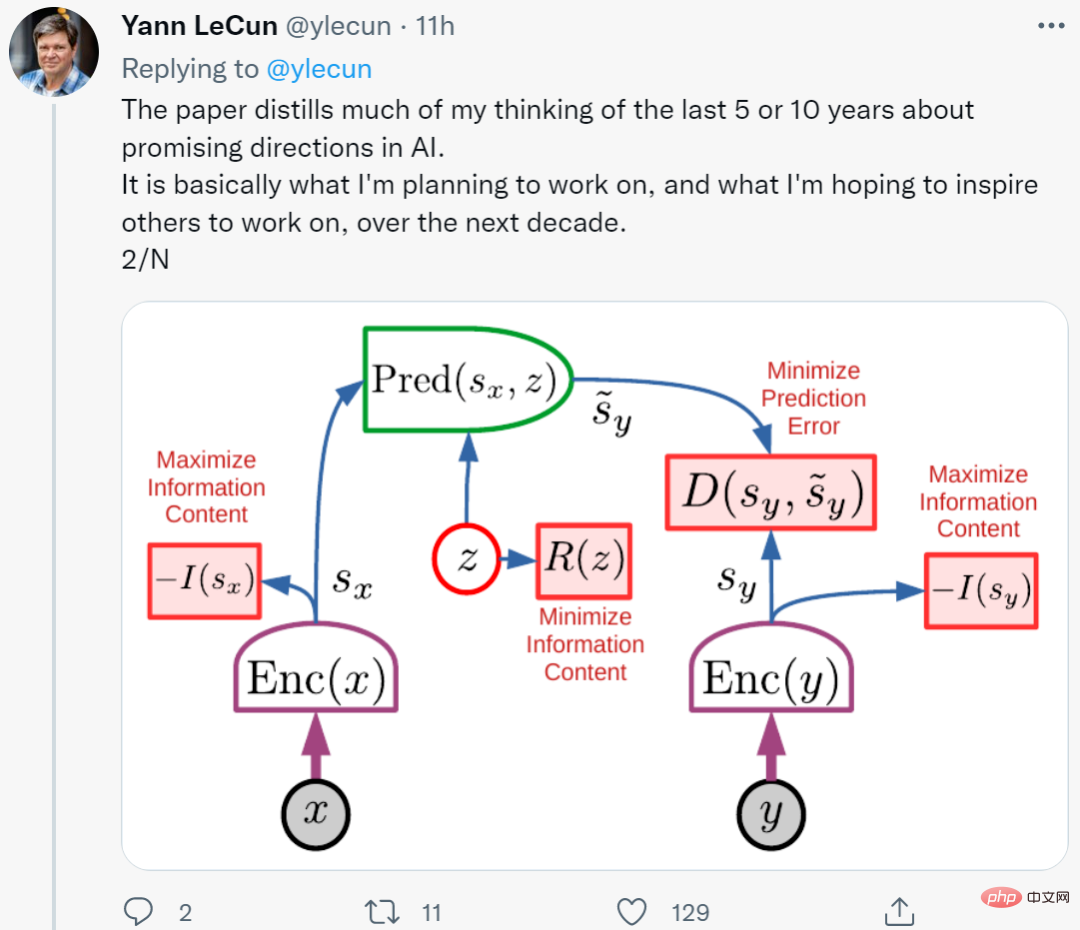

It is worth noting that LeCun's own latest research includes some "innate" symbol processing. His recently unveiled architecture consists of six modules, most of which are tunable but all of which are built in.

Furthermore, LeCun and Browning do not explain how language models with no innate symbol-processing machinery would solve the well-known specific problems in language understanding and reasoning.

Instead, they simply offer an inductive argument for deep learning: "since deep learning has overcome problems 1 through N, we should have faith that it can overcome problem N+1."

This is a weak argument. What we should really be asking is where the limits of deep learning lie.

Second, there are strong, concrete reasons to think deep learning already faces principled challenges, namely in compositionality, systematicity, and language understanding. All of these depend on generalization under distribution shift, and everyone in the field now recognizes distribution shift as the Achilles' heel of current neural networks. This was also a central argument of The Algebraic Mind about the precursors of today's deep learning systems.

In fact, deep learning is only part of the larger challenge of building intelligent machines. Such techniques lack ways of representing causal relationships (such as the relationship between a disease and its symptoms) and are likely to face challenges in acquiring abstract ideas. Deep learning has no obvious way of performing logical inference and is still a long way from integrating abstract knowledge.

Of course, deep learning has made a lot of progress and is good at pattern recognition, but it has not made enough progress on fundamental problems such as reasoning, and such systems remain very unreliable.

Take Minerva, the new model developed by Google, as an example. It was trained on billions of tokens, yet it still struggles to multiply 4-digit numbers. Its roughly 50 percent accuracy on a set of high school math problems was touted as a "major improvement." Building systems that master reasoning and abstraction therefore remains difficult in deep learning. The conclusion now is not merely that deep learning has problems, but that deep learning "has always had problems."
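For contrast, here is what a symbolic procedure for multiplication looks like: ordinary schoolbook long multiplication over decimal digit strings. Because it is defined over digits and carries rather than over memorized examples, the same few lines handle 4-digit, 40-digit, or 400-digit operands. This is only an illustration of what "symbolic" means in this debate, not a claim about how Minerva works internally.

```python
def long_multiply(x: str, y: str) -> str:
    """Schoolbook multiplication of two non-negative decimal strings."""
    # result[k] holds the digit at place value 10**k.
    result = [0] * (len(x) + len(y))
    for i, dx in enumerate(reversed(x)):
        carry = 0
        for j, dy in enumerate(reversed(y)):
            total = result[i + j] + int(dx) * int(dy) + carry
            result[i + j] = total % 10
            carry = total // 10
        # Position i + len(y) is still untouched at this point, so the carry fits.
        result[i + len(y)] += carry
    # Strip leading zeros, keeping at least one digit.
    digits = "".join(str(d) for d in reversed(result)).lstrip("0")
    return digits or "0"

if __name__ == "__main__":
    print(long_multiply("1234", "5678"))  # 7006652
```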

It seems to me that the situation with symbol processing is probably the same as it has always been:

Twenty years after The Algebraic Mind, current systems still cannot reliably extract symbolic operations (such as multiplication), even with huge datasets and extensive training. The example of human infants and young children shows that, before any formal education, humans can generalize complex concepts of natural language and reasoning (presumably symbolic in nature).

A little built-in symbolic structure can greatly improve learning efficiency. LeCun's own success with convolutions (a built-in constraint on how a neural network is wired) illustrates this well. Part of AlphaFold 2's success stems from carefully constructed innate representations of molecular biology, and part from the model itself. A new paper from DeepMind reports some progress in building systems that reason using innate knowledge about objects.

And nothing LeCun and Browning said changes that.
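The convolution point can be illustrated with a small sketch. A convolution reuses one small set of weights at every position, which shrinks the number of parameters to learn and builds in the guarantee that shifting the input shifts the output. The 1-D example below uses an arbitrary kernel and illustrative sizes (a 1,024-unit input and output, a 9-weight kernel); it is a sketch of the principle, not of LeCun's networks.

```python
from typing import List, Sequence, Tuple

def conv1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """'Valid' 1-D cross-correlation: the same kernel weights slide over every position."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def shift_right(xs: Sequence[float], n: int) -> List[float]:
    """Shift right by n positions, padding with zeros and keeping the length fixed."""
    return [0.0] * n + list(xs[:len(xs) - n])

def param_counts(n_in: int, n_out: int, kernel_size: int) -> Tuple[int, int]:
    """Weights for a fully connected layer vs. one shared kernel (biases ignored)."""
    return n_in * n_out, kernel_size

if __name__ == "__main__":
    kernel = [1.0, -1.0]  # arbitrary edge-detector-like kernel
    x = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
    y = conv1d(x, kernel)
    # Equivariance: convolving the shifted input equals shifting the output
    # (exact here because only zeros are shifted off the end).
    print(conv1d(shift_right(x, 2), kernel) == shift_right(y, 2))  # True
    print(param_counts(1024, 1024, 9))  # (1048576, 9)
```

The network does not have to learn that a pattern means the same thing wherever it appears; the wiring constraint supplies that for free, which is the sense in which a little innate structure improves learning efficiency.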

Taking a step back, systems can be roughly divided into three categories:

- Systems with symbol-processing machinery fully installed "at the factory" (such as virtually all known programming languages).

- Systems with innate learning machinery that lack symbol processing at the outset but that, given appropriate data and training environments, are sufficient to acquire it.

- Systems that cannot acquire full symbol-processing machinery even with sufficient training.

Current deep learning systems fall into the third category: they start with no symbol-processing machinery and never reliably acquire it along the way.

LeCun and Browning seem to agree with my recent arguments against scaling: adding more layers and more data helps, but it is not enough. All three of us acknowledge the need for some new ideas.

In addition, at a macro level, LeCun's recent claims are in many ways very close to the ones I made in 2020: we both emphasize the importance of perception, reasoning, and richer models of the world. We agree that symbol processing plays an important role (though perhaps not the same role for each of us). We also agree that currently popular reinforcement learning techniques cannot meet all our needs on their own, and neither can pure scaling.

Our biggest difference concerns how much innate structure is required and how much to leverage existing knowledge. The symbolic approach seeks to exploit as much existing knowledge as possible, while deep learning would have systems start from scratch as much as possible.

Back in the 2010s, symbol processing was an unpopular term among deep learning proponents. In the 2020s, we should make understanding its origins a priority: even the most enthusiastic proponents of neural networks have now recognized the importance of symbol processing for achieving AI. A long-standing question in the neurosymbolic community is how data-driven learning and symbolic representation can work in harmony within a single, more powerful intelligence. Excitingly, LeCun has finally committed to working toward that goal.

Original link: https://www.noemamag.com/deep-learning-alone-isnt-getting-us-to-human-like-ai/
