Latest interview with DeepMind Chief Scientist Oriol Vinyals: The future of general AI is strong interactive meta-learning

Since AlphaGo defeated humans at Go in 2016, DeepMind scientists have been exploring powerful general artificial intelligence algorithms, and Oriol Vinyals is one of them.

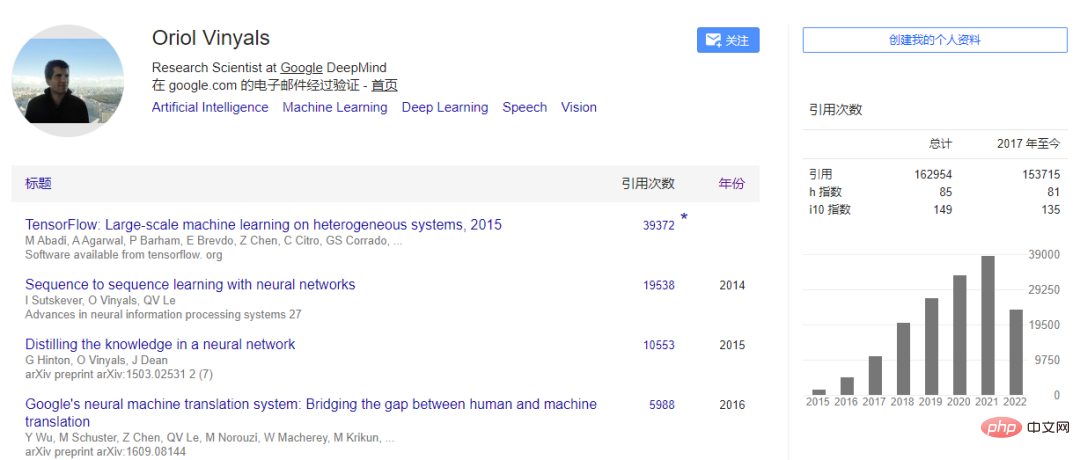

Vinyals joined DeepMind in 2016 and currently serves as chief scientist, leading the deep learning group. Before that, he worked at Google Brain. He received his PhD from the University of California, Berkeley, and in 2016 he received the MIT TR35 Innovator Award. His research on seq2seq, knowledge distillation, and TensorFlow has been applied to products such as Google Translate, text-to-speech, and speech recognition, and his papers have been cited more than 160,000 times.

Recently, Oriol Vinyals was a guest on Lex Fridman’s podcast, where he shared his views on deep learning, the generalist agent Gato, meta-learning, neural networks, AI consciousness, and more. Vinyals believes that:

- Scaling up models can strengthen the synergy between modalities in multimodal agents. Modular models are an effective way to scale: by reusing weights, there is no need to train a model from scratch;

- Future meta-learning will focus more on interactive learning between the agent and its environment;

- The attention-based inductive bias built into the Transformer makes it more powerful than other neural network architectures;

- The keys to the technical success of general, large-scale models and agents are data engineering, deployment engineering, and establishing benchmarks;

- Existing AI models are still far from developing consciousness. The biological brain is far more complex than the computational brain, and the way human thinking works can inspire research at the algorithmic level;

- Future AI systems may reach the same level of intelligence as humans, but it is not certain whether they can surpass human levels.

AI Technology Review has edited and organized the interview below without changing its original meaning:

1. General algorithm

Fridman: Is it possible in our lifetime to build an AI system that could replace us as the interviewer or the interviewee in this conversation?

Vinyals: My question is, do we actually want that? I'm happy to see that we're using very powerful models and that they feel like they're getting closer to us, but without the human side of the conversation, would it still be an interesting artifact? Probably not. In StarCraft, for example, we can create agents that play the game and compete against themselves, but ultimately what people care about is how the agent does when its opponent is a human.

So there is no doubt that AI will make us stronger. For example, you can filter an AI system's outputs and pick out the most interesting ones; in language modeling we sometimes call this "cherry picking". Likewise, if I had such a tool right now and you asked an interesting question, a system would select some words to form the answer, but that doesn't excite me very much.

Fridman: What if arousing people's excitement is itself part of the system's objective function?

Vinyals: In games, when you design an algorithm, you can write the reward function with winning as the goal. But what makes something exciting? If you could measure that, you could optimize for it. This is probably why we play video games, interact online, and watch cat videos. Indeed, it would be very interesting to model rewards beyond the obvious reward functions used in reinforcement learning.

Also, AI has made some key advances in specific areas. For example, we can evaluate whether a conversation or a piece of information is trustworthy based on how it is received on the Internet. If you can learn such a function automatically, it becomes easier to optimize for it, and then to hold conversations that optimize for less obvious qualities such as excitement. It would be interesting to build a system in which at least one aspect is driven entirely by an excitement reward function.

But obviously, such a system would still contain many human elements from its builders, and the labels for excitement would come from us, so excitement is hard to compute. As far as I know, no one has done anything like this yet.

Fridman: Maybe the system also needs a strong sense of identity. It would have memories and be able to tell stories about its past. It could learn from controversial opinions, because there is a lot of data on the Internet about the opinions people hold and the excitement associated with a given opinion. From that, the system could create something that no longer optimizes for grammar and verisimilitude, but for the human coherence of a sentence.

Vinyals: From the perspective of someone who builds neural networks and AI systems, you typically try to map many of the interesting topics you mention onto benchmarks, and then think about how those systems are actually built with current architectures: how they learn, what data they learn from, and what they learn. Ultimately, what we are talking about here are the weights of a mathematical function.

Given the current state of the field, what would we need in order for these systems to have such life experiences, like fear? On the language side, we are seeing very little progress here right now, because what we do is take a large amount of online human interaction, extract sequences from it (strings of words, letters, images, sounds, and other modalities), and then try to learn a function, via a neural network, that maximizes the likelihood of seeing these sequences.
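As a toy illustration of "maximizing the likelihood of seeing these sequences", the sketch below swaps the neural network for a count-based bigram model, whose maximum-likelihood estimate has a closed form (relative frequencies of adjacent pairs). This swap is an assumption made purely for brevity, and the function names are hypothetical. What carries over to neural models is the objective: the log-likelihood of a sequence is the sum of the log-probabilities of each token given what precedes it, and training pushes that number up on the observed data.

```python
import math
from collections import Counter, defaultdict

def fit_bigram(sequences):
    """Maximum-likelihood bigram model: P(next | prev) = count(prev, next) / count(prev, *)."""
    pair_counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            pair_counts[prev][nxt] += 1
    model = {}
    for prev, counter in pair_counts.items():
        total = sum(counter.values())
        model[prev] = {nxt: c / total for nxt, c in counter.items()}
    return model

def log_likelihood(model, seq):
    """Sum of log P(token_t | token_{t-1}); -inf if a transition never appeared in the data."""
    total = 0.0
    for prev, nxt in zip(seq, seq[1:]):
        p = model.get(prev, {}).get(nxt, 0.0)
        if p == 0.0:
            return float("-inf")  # unseen data gets zero probability under pure counting
        total += math.log(p)
    return total

corpus = [["the", "cat", "sat"], ["the", "cat", "ran"], ["the", "dog", "sat"]]
model = fit_bigram(corpus)
print(model["the"])
print(log_likelihood(model, ["the", "cat", "sat"]))
```

Probability mass ends up where the data is ("the cat" is twice as likely as "the dog") and is zero where there is none, which mirrors the landscape picture in the next paragraph.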

Hopefully, some of the ways we currently train these models will lead to the kind of capabilities you're talking about. One issue is the life cycle of the agent or model: the model learns from offline data, so it just passively observes and maximizes likelihood. Picture a mountainous landscape: wherever there is human interaction data, the model pushes the probability up; wherever there is no data, it pushes it down. Models typically do not have experiences of their own; they are just passive observers of data. We then have them generate data when we interact with them, but this greatly limits the experience they could actually gain while optimizing, or further optimizing, their weights. And we haven't even reached that stage yet.

In AlphaGo and AlphaStar, we deploy the model to play against humans, or, in the case of language models, to interact with them. But the deployed models are not trained continuously: they do not keep updating the weights they learned from data, and they do not continuously improve themselves.

But if you think about neural networks, it's understandable that they may not learn from weight changes in the strict sense of the word; that has to do with how neurons are interconnected and how we learn throughout our lives. Still, when you talk to these systems, the context of the conversation does exist in their memory. It's like booting up a computer: there is a lot of information on its hard drive, and you also have access to the Internet, which contains all kinds of information. There is also memory, like a computer's RAM, which is where we see the hope for agents.

Memory is very limited at the moment. We're talking about roughly 2,000 words; beyond that, the model starts forgetting what it has seen. So there is some short-term coherence: if you ask the agent "What is your name?", it can remember that sentence, but it may forget any context beyond 2,000 words.
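A rough sketch of why a model "forgets" beyond a fixed context: if it can only see the most recent N tokens, older tokens simply drop out of view. The 2,000-word figure comes from the interview; the class name, the whitespace "tokenizer", and the tiny window used in the example are simplifying assumptions (real systems count subword tokens, not words).

```python
from collections import deque

class ContextWindow:
    """Keeps only the most recent `max_tokens` tokens, as a fixed-size context would."""

    def __init__(self, max_tokens=2000):
        # A deque with maxlen silently discards the oldest items when full.
        self.tokens = deque(maxlen=max_tokens)

    def add(self, text):
        self.tokens.extend(text.split())  # naive whitespace "tokenization" (assumption)

    def visible(self):
        return " ".join(self.tokens)

ctx = ContextWindow(max_tokens=4)  # tiny window so the effect is easy to see
ctx.add("my name is Oriol")
ctx.add("what is my name")
print(ctx.visible())  # what is my name
```

With a window of four tokens, the question is still visible, but the earlier sentence containing the name has already been dropped.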

So technically speaking, this is a limit on what people can expect from deep learning. But we hope that benchmarks and techniques will allow memory and experience to accumulate continuously. Offline learning is obviously very powerful: we've come a long way, and we've seen again the power of imitation at Internet scale for encoding basic things about the world into weights, but experience is lacking.

In fact, when we talk to these systems, we don't train them at all; only their memory is affected. That is the dynamic part, but they don't learn the way you and I learn from birth. So, to your question, my point is that memory and experience are different from simply observing and learning about the world.

The second problem I see is that we train all these models from scratch. It feels like something is missing when we always start from nothing. There should be some way to train models the way a species evolves, with each iteration every few months building on the previous one, just as many other things in the universe build on what came before. From a pure neural network perspective, it is hard to avoid throwing away the previous weights, since we learn from data by updating those weights. So it feels like something is missing; we might eventually find it, but what it will look like isn't clear yet.

Fridman: Training from scratch seems wasteful. Every time we solve Go, chess, StarCraft, or protein folding, there must be some way to reuse the weights as we build up a huge new database of neural networks. So how do we reuse weights? How do we learn to extract what is generalizable and discard the rest? How can we initialize weights better?

Vinyals: At the core of deep learning is the brilliant idea that a single algorithm can solve all tasks. As more and more benchmarks emerge, this basic principle has increasingly been shown to hold. That is, you start with a neural network initialized as a blank computational brain, and then you feed it more and more data, for example through supervised learning.

Ideally, you specify what the input is and what the output should be. Image classification, for example, might mean selecting one of 1,000 categories; that is ImageNet. Many problems can be mapped this way. There should also be a general approach that you can apply to any given task without many changes or much thought, which I think is the core of deep learning research.

We haven’t figured it out yet, but it would be exciting if people needed fewer tricks, ideally a single general algorithm, to solve important problems. At the algorithmic level, we already have something general: a recipe for training very powerful neural network models on large amounts of data.

In many cases, though, you need to consider the particularities of real-world problems. Protein folding is an important problem, and there are already some basic approaches, such as Transformer models, graph neural networks, insights from NLP (such as BERT), and knowledge distillation. Within this recipe, we also need to find what is unique to protein folding, a problem important enough that we should solve it; what we learn there may then carry over to the next iteration of deep learning research.

Perhaps in the past two or three years, general algorithms have made some progress in meta-learning, most notably GPT-3 in the language domain. The model is trained only once, and it is not limited to translating languages or recognizing the sentiment of a sentence: such tasks can be taught to it through prompts, which essentially show it more examples. We prompt it through language, and language itself is a natural way for us to learn from each other. Maybe it asks me some questions first, and then I tell it to do this new task. You don't need to retrain it from scratch. We've seen some magical moments with few-shot learning, prompting with language in the language-only modality.
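To make "teaching through prompts" concrete: in few-shot prompting, the task is specified only by examples placed in the model's input, and no weights change. The sketch below just assembles such a prompt string; the sentiment task, the `Input:`/`Output:` layout, and the function name are illustrative assumptions rather than a format used by GPT-3 or DeepMind's models.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Concatenate an instruction, solved examples, and an unsolved query into one prompt."""
    lines = [instruction, ""]
    for x, y in examples:
        lines.append(f"Input: {x}")
        lines.append(f"Output: {y}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # a language model would be asked to continue from here
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each sentence as positive or negative.",
    [("I loved this film.", "positive"), ("The plot was a mess.", "negative")],
    "What a wonderful performance!",
)
print(prompt)
```

Teaching the model a different task only means changing the instruction and the examples; the model itself stays untouched.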

Over the past two years we have seen this extend to modalities beyond language, adding vision, actions, and games, with great strides. This might be a way to implement a single model. The problem is that it's difficult to add weights or capacity to such a model, but it is certainly powerful.

Current progress has come in text-based tasks or visual classification-style tasks, but there should be more breakthroughs. We have a good baseline, we want to move that baseline toward general artificial intelligence, and the community is moving in that direction, which is great. What excites me is: what are the next steps in deep learning to make these models more powerful? How do we train them? If they must evolve, how can they be "bred"? Should their weights change when you teach them a task? Many questions still need to be answered.

2. Generalist Agent Gato

Fridman: Can you explain the "Meow" and cat expression in your tweet? And what is Gato? How does it work? What kind of neural network is involved? How to train?

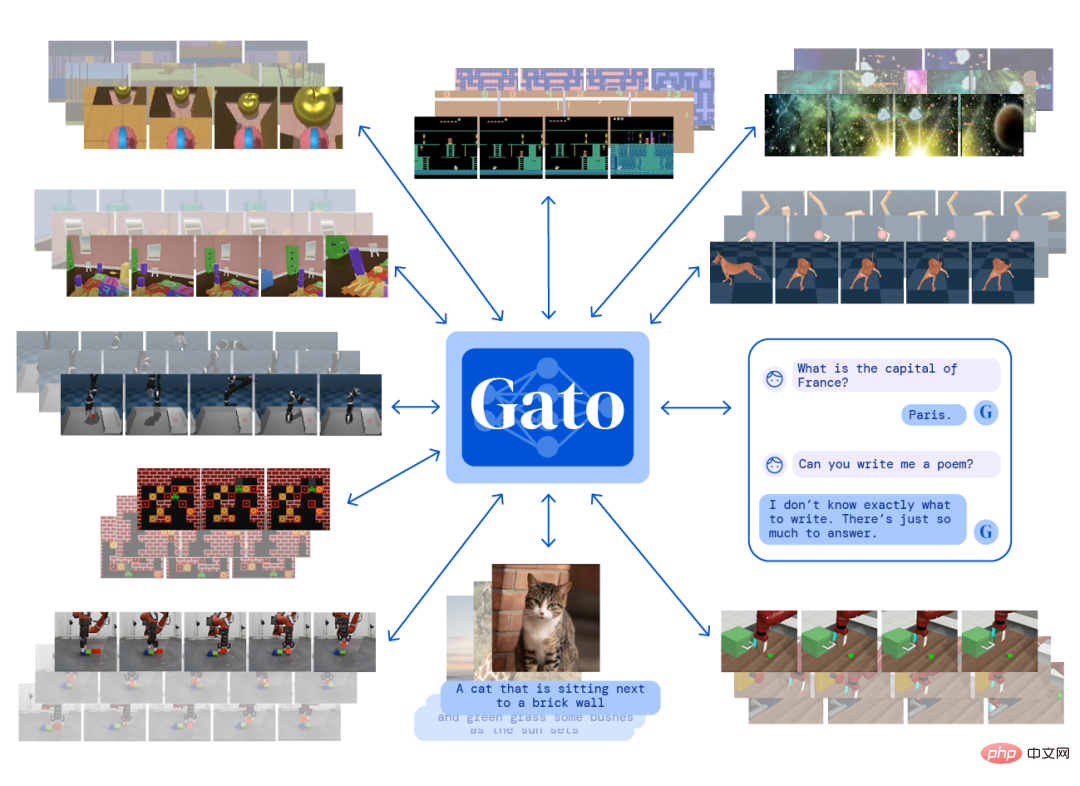

Vinyals: First of all, the name Gato, like those of a series of other models released by DeepMind, comes from an animal. Large sequence models started with language only, but we are expanding them to other modalities. Gopher and Chinchilla are pure language models, and more recently we released Flamingo, which covers vision. Gato adds the visual and action modalities. Discrete actions such as up, down, left, and right map naturally onto words, so they fit naturally into powerful language sequence models.

Before releasing Gato, we discussed which animal to name it after. I think the main consideration was that it is a general agent, which is Gato's distinctive attribute. "Gato" means "cat" in Spanish.

The fundamentals of Gato are no different from those of many other works. It is a Transformer model, an autoregressive neural network that covers multiple modalities, including vision, language, and actions. The training goal is to predict what comes next in a sequence: if the training data is a sequence of actions, the goal is to predict the next action, and the same goes for sequences of characters or images. We treat them all as bytes, and the model's task is to predict the next byte. You can then interpret that byte as an action and use it in a game, or interpret it as a word and write it down during a conversation with the system.
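The "predict the next element, then feed it back in" loop can be sketched as follows. `toy_next_token` is a hypothetical stand-in for a trained model (here a fixed lookup table, so the example runs); in Gato that role is played by the Transformer, which conditions on the whole sequence so far. How a predicted token is interpreted, as a word or as a game action, is up to the caller.

```python
def generate(next_token, prompt_tokens, steps):
    """Autoregressive decoding: repeatedly append the model's prediction of the next token."""
    seq = list(prompt_tokens)
    for _ in range(steps):
        seq.append(next_token(seq))  # the model is given the whole sequence so far
    return seq

# Hypothetical stand-in "model": it happens to look only at the last token.
TABLE = {"<obs>": "LEFT", "LEFT": "<obs>"}

def toy_next_token(seq):
    return TABLE[seq[-1]]

print(generate(toy_next_token, ["<obs>"], 4))  # ['<obs>', 'LEFT', '<obs>', 'LEFT', '<obs>']
```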

Gato's inputs include images, text, video, actions, and some sensor readings from robots, because robotics is also part of the training data. It outputs text and actions, but not images. We are currently designing such an output form, which is why I say Gato is a start: there is more work to be done. Essentially, Gato is a brain: give it any sequence of observations across modalities, and it outputs the next step in the sequence. Then you move on to the next step and keep predicting, and so on.

It is now not just a language model. You can chat with Gato as you would with Chinchilla or Flamingo, but it is also an agent. It is trained on a variety of datasets so that it is general, not just good at StarCraft, Atari games, or Go.

Fridman: In terms of action modality, what kind of model can be called an "intelligent agent"?

Vinyals: In my view, an agent is essentially something that can take actions in an environment. It responds to the environment with an action, the environment returns a new observation, and then the agent generates the next action.
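The observation-action cycle described here is the standard agent-environment loop from reinforcement learning. A minimal sketch, assuming a made-up one-dimensional corridor environment and a fixed policy (both hypothetical, chosen only so the loop runs); recording the interleaved observations and actions yields the kind of experience trajectory that, per the interview, is added to Gato's training data.

```python
class Corridor:
    """Hypothetical toy environment: the agent starts at 0 and must reach position `goal`."""

    def __init__(self, goal=3):
        self.goal = goal
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos  # initial observation

    def step(self, action):
        self.pos += 1 if action == "right" else -1
        done = self.pos == self.goal
        return self.pos, done  # new observation, and whether the episode is over

def policy(observation):
    """Trivial stand-in for a learned policy: always move right."""
    return "right"

def run_episode(env, policy, max_steps=10):
    obs = env.reset()
    trajectory = [obs]
    for _ in range(max_steps):
        action = policy(obs)          # the agent acts on the latest observation
        obs, done = env.step(action)  # the environment responds with a new observation
        trajectory += [action, obs]   # interleaved observation/action record
        if done:
            break
    return trajectory

print(run_episode(Corridor(goal=3), policy))  # [0, 'right', 1, 'right', 2, 'right', 3]
```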

We train Gato by taking datasets of observations and applying what amounts to large-scale imitation learning, much like training a model to predict the next word in a dataset. For language, we have datasets of people writing and chatting on web pages.

DeepMind is interested in reinforcement learning and in learning agents that work in different environments. We built datasets that record agents' experience trajectories. The other agents we train each pursue a single goal, such as controlling a 3D game environment or navigating a maze, and we add the experience those agents gain from interacting with their environments to the dataset.

When training Gato, we mix data such as text with data from agents interacting with environments. This is what makes Gato "general": across different modalities and tasks it has a single "brain", and compared with most neural networks of recent years it is not that big, with only about 1 billion parameters.

Despite its small size, its training data set is very challenging and diverse, containing not only Internet data but also the agent's interaction experience with different environments.

In principle, Gato can control any environment, especially the video games, robotics tasks, and other environments it was trained on. But it won't do better than the teachers it learned from. Scale still matters: Gato is still relatively small, so it is a start. Increasing scale may strengthen the synergy between modalities. I also believe there will be new ways of learning from or preparing data. For example, we need to make it clear to the model that playing an Atari game is not just about considering up and down movements; the agent needs some context from seeing the screen before it starts to play. You can use text to provide that context, telling it "I am showing you a whole sequence, and you are going to start playing this game." So text may be a way to enrich the data.

Fridman: How to tokenize text, images, game actions, and robot tasks?

Vinyals: Good question. Tokenization is the starting point for turning all data into a sequence. It's as if we break everything down into puzzle pieces and then model what the puzzle looks like; when you line the pieces up, they form a sequence. Gato uses today's standard text tokenization techniques: we tokenize text by commonly used substrings. For example, "ing" is a common substring in English, so it can be used as a token.
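The interview says only that text is tokenized by commonly used substrings. One widely used way to find such substrings is byte-pair encoding (BPE), which repeatedly merges the most frequent adjacent pair of symbols into a new symbol. The sketch below runs two merges on a toy word list; it illustrates the idea and is not necessarily the tokenizer Gato uses.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words (each word is a tuple of symbols)."""
    pairs = Counter()
    for word in words:
        for a, b in zip(word, word[1:]):
            pairs[(a, b)] += 1
    return pairs.most_common(1)[0][0] if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of `pair` inside each word with one merged symbol."""
    merged = []
    for word in words:
        out, i = [], 0
        while i < len(word):
            if i + 1 < len(word) and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        merged.append(tuple(out))
    return merged

words = [tuple(w) for w in ["sing", "ring", "bring", "thing"]]  # start from single letters
for _ in range(2):
    words = merge_pair(words, most_frequent_pair(words))
print(words)
```

After two merges, "ing" has become a single symbol in every word, which is the kind of common substring Vinyals describes.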

Fridman: How many tokens are needed for a word?

Vinyals: For English, tokens today are typically 2 to 5 characters long: larger than letters, smaller than words.

Fridman: Have you tried tokenizing emojis?

Vinyals: Emojis are actually just sequences of characters.

Fridman: Are emojis images or text?

Vinyals: You can actually map emojis to character sequences, so you can feed the model input emojis and it will output emojis. In Gato, the way we process images is to compress them into pixels of varying intensities, resulting in a very long sequence of pixels.

Fridman: So there are no semantics involved? You don't need to understand anything about the image?

Vinyals: Yes, only the concept of compression is used here. At the tokenization level, what we do is find common patterns to compress images.

Fridman: Visual information such as color does capture the meaning of an image, not just statistics.

Vinyals: In machine learning, the way images are processed is more data-driven. We just use the statistics of the image and then quantize them. Common substrings become tokens, and images are handled similarly, but there is no connection between the two. If we think of tokens as integers, say text has 10,000 tokens, numbered 1 to 10,000, representing all the languages and words we will see.

Images get another set of integers, from 10,001 to 20,000, and the two ranges are completely independent. What connects them is the data: in the dataset, an image's caption says what the image is about. The model has to predict from text to pixels, and the correlation between the two emerges as the algorithm learns. Besides words and images, we can also assign integers to actions, discretizing them and using a similar idea to compress actions into tokens.

This is how we currently map every modality to integer sequences. Each occupies its own range, and what connects them is the learning algorithm.
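Vinyals's example of disjoint integer ranges can be written down directly. The ranges 1 to 10,000 for text and 10,001 to 20,000 for images follow his illustration; the 256 action bins, the action range of [-1, 1], placing actions right after the image range, and all function names are assumptions for illustration, not Gato's published settings.

```python
TEXT_VOCAB = 10_000    # text tokens use IDs 1..10000 (the interview's example)
IMAGE_VOCAB = 10_000   # image tokens use IDs 10001..20000 (the interview's example)
ACTION_BINS = 256      # assumption: number of bins for one continuous action value

IMAGE_OFFSET = TEXT_VOCAB
ACTION_OFFSET = TEXT_VOCAB + IMAGE_VOCAB

def text_token(local_id):
    """Text tokenizer IDs already live in 1..TEXT_VOCAB, so they pass through unchanged."""
    if not 1 <= local_id <= TEXT_VOCAB:
        raise ValueError("text id out of range")
    return local_id

def image_token(local_id):
    """Shift an image code in 1..IMAGE_VOCAB into the range reserved for images."""
    if not 1 <= local_id <= IMAGE_VOCAB:
        raise ValueError("image id out of range")
    return IMAGE_OFFSET + local_id

def action_token(value, low=-1.0, high=1.0):
    """Discretize a continuous action in [low, high] to the nearest of ACTION_BINS bins."""
    value = min(max(value, low), high)  # clip out-of-range actions
    bin_index = int((value - low) / (high - low) * (ACTION_BINS - 1) + 0.5)
    return ACTION_OFFSET + 1 + bin_index  # IDs 20001..20256

sequence = [text_token(42), image_token(7), action_token(0.0)]
print(sequence)  # [42, 10007, 20129]
```

Because each modality owns its own range, a single integer sequence can interleave text, image, and action tokens, and the model only learns relationships between them from data.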

Fridman: You mentioned earlier that scaling is hard. What does that mean? Some emergent abilities only appear past a certain scale threshold. Why is it so difficult to scale up a network like Gato?

Vinyals: If you retrain Gato from scratch, scaling it up is not difficult. The point is that we now have 1 billion parameters: can we use those same weights and grow them into a bigger brain? That is very difficult. This is where the software engineering concept of modularity comes in, and there has been some research using modularity. Flamingo does not handle actions, but it is powerful at handling images; the tasks in these projects differ, and the models are modular.

We achieved modularity very nicely in the Flamingo model: we took the weights of the pure language model Chinchilla, froze them, and inserted some new neural networks at the right places in the model. You need to figure out how to add new capabilities without breaking existing ones.

We created a small sub-network that is not randomly initialized but learns through self-supervision. We then used a dataset to connect the two modalities, vision and language. We froze the largest part of the network and added some new parameters on top, trained from scratch. The result was Flamingo: its inputs are text and images, and its output is text. You can teach it new vision tasks, and it does things beyond what the dataset itself provides, while leveraging much of the language knowledge it inherited from Chinchilla.
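The freeze-and-extend idea can be shown in miniature. Below, `frozen_w` stands in for pretrained weights that are read but never updated, while `a` and `b` are new parameters trained from scratch by gradient descent on mean squared error. This is a deliberately tiny, hypothetical stand-in: Flamingo inserts new neural network layers into a frozen Chinchilla, which the sketch does not try to reproduce.

```python
def train_new_head(data, frozen_w, steps=1000, lr=0.05):
    """Fit y ~ a * (frozen_w * x) + b by gradient descent, updating only a and b."""
    a, b = 0.0, 0.0  # new parameters, initialized and trained from scratch
    n = len(data)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in data:
            feature = frozen_w * x  # "pretrained" computation: frozen_w is read, never updated
            err = a * feature + b - y
            grad_a += 2 * err * feature / n  # d(mean squared error)/da
            grad_b += 2 * err / n            # d(mean squared error)/db
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

data = [(x, 3 * x + 1) for x in range(4)]  # toy target: y = 3x + 1
FROZEN_W = 1.5                              # hypothetical pretrained weight
a, b = train_new_head(data, FROZEN_W)
print(round(a, 3), round(b, 3))
```

The new parameters converge to values that adapt the frozen feature to the new target (`a` near 2 and `b` near 1 here), while `FROZEN_W` keeps its original value.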

The key idea of this modularity is that we take a frozen brain and add new capabilities to it. To some extent, you can see that even at DeepMind, Flamingo takes this pragmatic approach, which makes more sensible use of scale without having to retrain a system from scratch.

Although Gato uses some of the same datasets, it was trained from scratch. So I guess the big question for the community is: should we train from scratch, or should we embrace modularity? Modularity is a very effective way to scale.

3. Meta-learning will include more interactions

Fridman: Now that Gato exists, can we redefine the term "meta-learning"? What do you think meta-learning is? In 5 or 10 years, will meta-learning look like a scaled-up Gato?

Vinyals: Maybe looking backward instead of forward gives a good perspective. When we talked about meta-learning in 2019, before its meaning was largely changed by the GPT-3 revolution, the benchmarks were about the ability to learn object identities, so they were well suited to vision and object classification. Models were not just learning the 1,000 categories that ImageNet tells them to learn; they were also learning object categories that could be defined while interacting with the model.

The way this evolved is interesting. At first, we had a special language, namely a small dataset, and we prompted the model with a new classification task. With prompts in the form of machine learning datasets, we got a system that can predict or classify objects as we define them. Eventually, the language model itself became a learner. GPT-3 showed that we could move beyond object classification and what meta-learning had meant in the context of learning object classes.

Now, we are no longer bound by the benchmark, we can directly tell the model some logical tasks through natural language. These models are not perfect, but they are doing new tasks and gaining new capabilities through meta-learning. The Flamingo model extends to visual and language multimodality but has the same capabilities. You can teach it. For example, one emergent feature is that you can take a picture of a number and teach it to do arithmetic. You show it a few examples and it learns, so it goes far beyond previous image classification.

This expands on what meta-learning meant in the past; meta-learning is an ever-changing term. Given the current progress, I'm excited to see what happens next; in 5 years it may be a different story. Imagine a system with a single set of weights that we can teach to play StarCraft through interactive prompts: you talk to the system, teach it a new game, and show it examples of the game. The system might even ask you questions, like "I just played this game; did I play well? Can you teach me more?" So five or ten years from now, meta-learning capabilities in specialized domains will be more interactive and richer. AlphaStar, for example, which we developed specifically for StarCraft, is very different: the algorithm is general, but the weights are specific.

Meta-learning will go beyond prompts to include more interaction. The system might ask us for feedback after it makes a mistake or loses a game. In fact, the benchmarks already exist; we have just changed their goals. So in a way, I like to think of general artificial intelligence like this: we already have 101% performance on specific tasks like chess and StarCraft, and in the next iteration we might get to 20% on all tasks. The next generation of models is definitely progressing in this direction. Of course, we might get some things wrong; we might not have the right tools, or the Transformer might not be enough. In the next 5 to 10 years, the model's weights will likely already be trained, and the work will be more about teaching the model or letting it meta-learn.

This is interactive teaching. In machine learning, this approach has not been used for classification tasks for a long time. My idea sounds a bit like the nearest neighbor algorithm, which is almost the simplest algorithm there is and requires no learning or gradient computation. Nearest neighbor measures distances between points in a dataset; to classify a new point, you only need to find the closest point in that large body of data. So you can think of a prompt this way: you are not just uploading simple data points, you are adding knowledge to a pretrained system.

Prompting is a development of a very classic concept in machine learning: learning from the nearest points. One of our studies in 2016 used the nearest neighbor method, which is also very common in computer vision. How to compute the distance between two images is a very active research area; if you can get a good distance metric, you can also get a good classifier.
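A minimal nearest-neighbour classifier shows the property Vinyals points to: no gradients and no training, since classifying a new point only means finding the closest stored example, and "teaching" a new class means adding labelled examples. Euclidean distance and the 2-D toy points are assumptions; as he notes, choosing a good distance for images, text, or actions is the hard part.

```python
import math

def nearest_neighbor(support, query):
    """Return the label of the stored example closest to `query` (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for point, label in support:
        dist = math.dist(point, query)  # Python 3.8+
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label

support = [((0.0, 0.0), "cat"), ((5.0, 5.0), "dog")]
print(nearest_neighbor(support, (1.0, 0.5)))  # cat

# "Teaching" a new class is just adding a labelled example; nothing is retrained.
support.append(((1.0, 1.0), "fox"))
print(nearest_neighbor(support, (1.0, 0.5)))  # fox
```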

These distances and points are not limited to images; they can also be text, action sequences, and other new information taught to the model. We probably won't do any more weight training. Some meta-learning techniques do perform some fine-tuning, training the weights a little when they get a new task.

4. The power of Transformer

Fridman: We have built general, large-scale models and agents such as Flamingo, Chinchilla, and Gopher. What is special about them technically?

Vinyals: I think the key to success is engineering. First comes data engineering, because what we ultimately collect is a dataset. Then comes deployment engineering, where we deploy the model at scale on computing clusters. This success factor applies to everything; the devil is indeed in the details.

The other factor is measuring progress with benchmarks. A team may spend several months on a study without knowing whether it will succeed, but if you don’t take risks and attempt something that seems impossible, you have no chance of success. However, we need a way to measure progress, so establishing benchmarks is crucial.

We developed AlphaFold using extensive benchmarks, and the data and metrics for that project were all readily available. A good team should not aim to find some incremental improvement and publish a paper, but should have a higher goal and work on it for years.

In machine learning, we love architectures such as neural networks, and before the Transformer came along, architecture research was changing very rapidly. “Attention Is All You Need” is indeed a great paper title. This architecture fulfills our dream of modeling any sequence of bytes. I think progress in these architectures comes, to some extent, from the way neural networks work. It is rare for an architecture invented five years ago to remain this stable and change so little, so it is surprising that the Transformer keeps appearing in so many projects.

Fridman: At the philosophical level of technology, where does the magic of attention lie? How does attention work in the human mind?

Vinyals: There are differences between the Transformer and long short-term memory networks (LSTMs). In the Transformer's early days, LSTMs were still very powerful sequence models; AlphaStar, for example, used both. The power of the Transformer is that it has an attention-based inductive bias built in. Suppose we want to solve a complex task over a string of words, such as translating an entire paragraph, or predicting the next paragraph from the previous ten.

Intuitively, the way the Transformer handles these tasks mimics what humans do. In a Transformer, you are looking for something. After reading a paragraph of text, you think about what will happen next, and you might want to look back at the text; this is a hypothesis-driven process. If I'm wondering whether my next word will be "cat" or "dog", the Transformer works with two hypotheses: will it be cat, or dog? If it's cat, I look for some words (not necessarily the word "cat" itself) and go back to check whether "cat" or "dog" makes more sense.

Then it performs some very deep computation over the words, combining them, and it can also issue queries. If you really think about the text, you need to look back at all of it, but what directs your attention? What I just wrote is certainly important, but what you wrote ten pages ago may also be critical, so what matters is not the position but the content. The Transformer can query for specific content and pull it out to make better decisions. This is one way to explain the Transformer, and I think this inductive bias is very powerful. The Transformer's details may change over time, but its inductive bias makes it more powerful than recurrent networks built on a recency bias, which work for some tasks but have very large flaws.
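The content-based lookup described above is what scaled dot-product attention computes: a query is compared with every position's key, the scores become weights through a softmax, and the output is the weighted average of the positions' values. A minimal single-query sketch in pure Python with hand-picked 2-D vectors (an assumption for readability); real Transformers learn the projections that produce queries, keys, and values, and use many attention heads.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Single-query scaled dot-product attention over a list of keys and values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy memory: position 0 is "about cats", position 1 is "about dogs".
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
out, weights = attend([4.0, 0.0], keys, values)  # a query that "asks about cats"
print(weights)
print(out)
```

Nothing in `attend` looks at positions, only at vector contents, so a relevant entry from ten pages back scores just as well as one from the previous sentence.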

The Transformer itself also has flaws. I think one of the main challenges is the prompting we just discussed. A prompt might be 1,000 words long, or I might even need to show the system a video and a Wikipedia article about a game. I would also need to interact with the system as it plays the game and asks me questions. I need to be a good teacher to teach models to do things beyond their current capabilities. So the question is: how do we benchmark these tasks? How should we change the architecture? This is controversial.

Fridman: How important are individual people in all this research progress? To what extent have they changed the field? You're now leading deep learning research at DeepMind, you have a lot of projects, a lot of brilliant researchers, how much change can all these humans bring?

Vinyals: I believe people play a huge role. Some people have an idea and want to make it work, so they stick with it; others are more pragmatic and don't care which idea works as long as they can crack protein folding. We need both of these seemingly opposing approaches, and historically both have produced results sooner or later. The distinction is similar to what reinforcement learning calls the exploration-exploitation tradeoff. When you interact with people on a team or at a meeting, you quickly see whether something is worth exploring or exploiting.

It is wrong to dismiss any research style. I work in industry, so we have large-scale computing power at our disposal, and that supports specific kinds of research. For scientific progress, we need to answer the questions that we should be answering now.

At the same time, I have also seen a lot of progress come from elsewhere. The attention mechanism was originally developed in Montreal, Canada, partly because of limited computing power, while we were working on sequence-to-sequence models with our friends at Google Brain. We used 8 GPUs (which was actually quite a lot at the time), and I think Montreal was still relatively limited in computing scale. But they came up with content-based attention, which eventually led to the Transformer.

Fridman: Many people tend to think that genius lies in grand ideas, but I suspect that in engineering, genius often lies in the details. Sometimes a single engineer or a handful of engineers can change what we do, especially in work done on large-scale computing systems, where one engineering decision can trigger a chain reaction.

Vinyals: If you look back at the history of deep learning and neural networks, you will find an element of chance. GPUs happened to arrive at the right time, even though they were built for video games. So even hardware engineering depends on timing. It was also this hardware revolution that led to data centers being built, Google's data centers for example, and with data centers like that we can train models. Software is another important factor, and more and more people are entering the field. We might also expect a single system to handle all the benchmarks.

5. AI is still far from developing consciousness

Fridman: You co-authored a paper with Jeff Dean, Percy Liang and others titled “Emergent Abilities of Large Language Models”. How can emergence in neural networks be explained intuitively? Is there a magic tipping point? Does it vary from task to task?

Vinyals: Take benchmarks as an example. When you train a system and analyze how performance depends on dataset size, model size, training time and so on, the curves are quite smooth. ImageNet, for instance, has very smooth and predictable training curves in that sense.

On the language side, some benchmarks require more thinking, meaning more processing and more introspection, even when the input is just a sentence describing a math problem. On these, the model's performance can look random. Only once a Transformer, or a language model like it, starts answering the question correctly does performance begin to shift from random to non-random. This is very empirical, and there is no formal theory behind it.

Fridman: A Google engineer recently claimed that the LaMDA language model is conscious. This case touches on the human side, on the technical side of machine learning, and on the philosophical question of the role AI systems play in the human world. What is your perspective, as a machine learning engineer and as a human being?

Vinyals: I think all current models are still far from being conscious. I see myself as a bit of a failed scientist: I have always seen machine learning as a science that could help other sciences. I like astronomy and biology, but I'm not an expert in those fields, so I decided to study machine learning.

But as I learned more about AlphaFold and a bit about proteins, biology and the life sciences, I started looking at what happens at the atomic level. We tend to think of neural networks as brains, and to a non-expert they seem complex and magical, but biological systems are far more complex than computational brains, and existing models have not yet reached the level of biological brains.

I’m not that surprised by what happened with this Google engineer. Maybe it's because I see the progress curve as smoother than it looks: language models have not advanced all that quickly since Shannon's work in the 1950s, and the ideas we had 100 years ago are not that different from the ones we have now. But no one should tell others what to think.

To me, the complexity of humanity since its origins, and of the evolution of the universe as a whole, is an order of magnitude more fascinating. It's good to be passionate about what you do, but I wish a biology expert would tell me it's not that magical. Through interaction within the community, we also gain a level of education that helps us understand what is abnormal, what is unsafe and so on; without it, a technology will not be used correctly.

Fridman: To solve intelligence, does a system need to be conscious? What parts of the human mind are instructive for building AI systems?

Vinyals: I don’t think any system is currently smart enough to be an extremely useful brain that can challenge and guide you; instead, you still have to teach it to do things. Personally, I'm not sure consciousness is necessary, but perhaps consciousness, or other biological or evolutionary perspectives, will influence our next generation of algorithms.

The computations performed by the human brain and by neural networks differ in their details. There are some similarities, of course, but we do not know enough about how the brain works in detail. But if you narrow the scope a little, to things like our thought processes, how memory works, even how we evolved into what we are now, or what exploration and exploitation are, these can inspire research at the algorithmic level.

Fridman: Do you agree with Richard Sutton's view in "The Bitter Lesson" that the biggest lesson from 70 years of AI research is that general methods which leverage computation are ultimately the most effective?

Vinyals: I strongly agree with this view. Scale is necessary to build trustworthy, complex systems, although it may not be sufficient, and we still need some breakthroughs. Sutton mentions search as one method that scales, and in a domain like Go, search is useful because there is a clear reward function. But for some other tasks, we are not quite sure what to do.

6. AI can have at least human-level intelligence

Fridman: Do you think we can build a general artificial intelligence system that reaches or even surpasses human intelligence in your lifetime?

Vinyals: I absolutely believe AI will reach human-level intelligence. The word “beyond” is hard to define, especially when we look at current standards from an imitation-learning perspective, although we can certainly have AI imitate humans in language and even surpass them there. Getting to human level through imitation will also require reinforcement learning and other techniques, which are already paying off in some areas.

When it comes to surpassing human capabilities, AlphaGo is by far my favorite example. But in the general case, I'm not sure we can define a reward function that captures human intelligence. So as for surpassing humans, I'm not sure yet, but AI will definitely reach human level. Obviously we are not going to try to surpass it outright, but if we did, we would have superhuman scientists and discoveries advancing the world. Still, even human-level systems would be very powerful.

Fridman: Do you think there will be a singularity moment, when billions of intelligent agents at or beyond human level are deeply integrated into human society? Would you be scared or excited about such a world?

Vinyals: Maybe we first need to consider whether we can actually achieve this. Having too many people coexist with limited resources creates problems, and there should probably also be limits on the number of digital entities, for reasons of energy availability, since they consume energy too.

The reality is that most of these systems are less energy-efficient than we are. But I think, as a society, we need to work together to find reasonable ways to grow and to coexist. I would be excited if it happened, especially by the kinds of automation that give people access to resources or knowledge they would otherwise never have; that's the application I'm most excited to see.

Fridman: Last question: as humans move beyond the solar system, will the future world have more humans or more robots?

Vinyals: This is just speculation, but humans and AI might coexist as a mix, and there are already companies trying to improve us in this way. I would like the ratio to be at most 1:1. A 1:1 ratio may be feasible, but losing that balance would not be good.

Original video link: https://youtu.be/aGBLRlLe7X8
