Google team launches new Transformer to optimize panoptic segmentation solution

Inspired by Transformer and DETR, the Google AI team recently proposed an end-to-end solution for panoptic segmentation with mask transformers.

A mask transformer is an extension of the Transformer architecture that is used to generate segmentation masks.

The solution uses a pixel path (a convolutional neural network or a vision Transformer) to extract pixel features, a memory path (Transformer decoder modules) to extract memory features, and a dual-path Transformer to let the pixel features and the memory features interact.

However, the dual-path Transformer relies on cross-attention, which was originally designed for language tasks, where the input sequence consists of hundreds of words.

For vision tasks, especially segmentation, the input sequence consists of tens of thousands of pixels. The input is therefore not only much longer, but each pixel is also a lower-level embedding than a word.

Panoptic segmentation is a computer vision problem that is now a core task in many applications.

It combines two tasks: semantic segmentation and instance segmentation.

Semantic segmentation assigns a semantic label, such as "person" or "sky", to every pixel in the image.

Instance segmentation identifies and segments only the countable objects in the image, such as "pedestrians" and "cars". Earlier approaches further divide the problem into several subtasks.

Each subtask is processed individually, and additional modules are applied to merge the results of each subtask stage.

This process is not only complex, but also introduces many hand-designed priors, both when processing the subtasks and when merging their results.

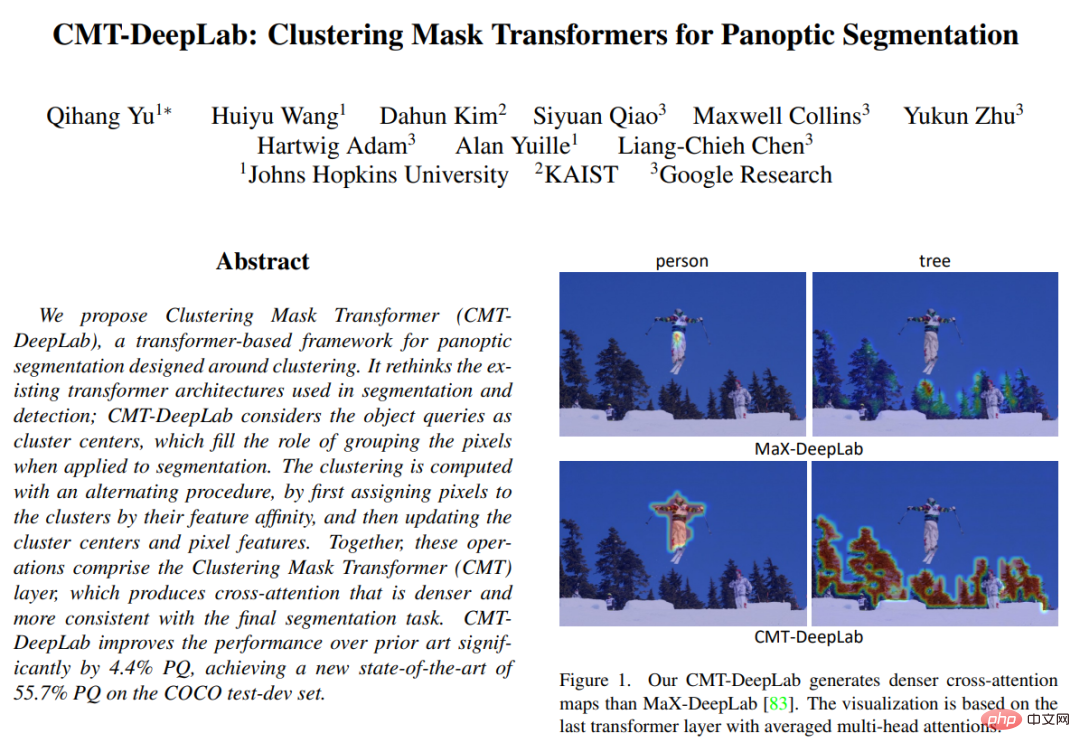

The paper "CMT-DeepLab: Clustering Mask Transformers for Panoptic Segmentation", published at CVPR 2022, proposes to reinterpret and redesign cross-attention from a clustering perspective (that is, grouping pixels with the same semantic label into the same group) so that it is better suited to vision tasks.

CMT-DeepLab builds on the previous state-of-the-art method MaX-DeepLab and performs cross-attention with a pixel-clustering approach, resulting in denser and more plausible attention maps.

kMaX-DeepLab further redesigns cross-attention to behave more like the k-means clustering algorithm, with a simple change to the activation function.

Structural Overview

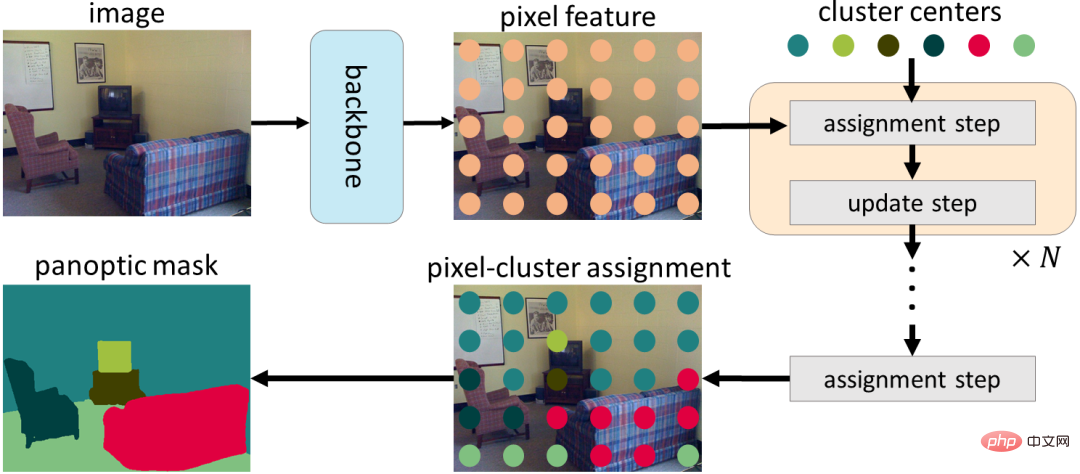

Rather than applying cross-attention to vision tasks without modification, the researchers reinterpret it from a clustering perspective.

Specifically, they note that the mask transformer's object queries can be thought of as cluster centers (which aim to group pixels with the same semantic label).

The cross-attention process is then similar to the k-means clustering algorithm, which iterates two steps: (1) assigning pixels to cluster centers, where multiple pixels can be assigned to a single cluster center and some cluster centers may have no pixels assigned, and (2) updating each cluster center by averaging the pixels assigned to it (a cluster center with no assigned pixels is not updated).
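The two k-means steps described above can be sketched in a few lines of NumPy. This is a minimal illustration of plain k-means on toy feature vectors, not the authors' implementation; the function name `kmeans_step` and the sample data are assumptions made for the example.

```python
import numpy as np

def kmeans_step(pixels, centers):
    """One k-means iteration: hard-assign pixels, then average per cluster.

    pixels:  (N, D) array of pixel features
    centers: (K, D) array of cluster centers
    """
    # (N, K) squared Euclidean distance from every pixel to every center
    dists = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    # Step 1: each pixel is assigned to exactly one (nearest) center
    assignment = dists.argmin(axis=1)

    # Step 2: each center becomes the mean of the pixels assigned to it
    new_centers = centers.copy()
    for k in range(len(centers)):
        members = pixels[assignment == k]
        if len(members) > 0:  # centers with no assigned pixels stay unchanged
            new_centers[k] = members.mean(axis=0)
    return assignment, new_centers

# Toy data (hypothetical): three 2-D "pixels" and three centers,
# the last of which is too far away to receive any pixel.
pixels = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 10.0]])
centers = np.array([[1.0, 1.0], [9.0, 9.0], [100.0, 100.0]])
assignment, new_centers = kmeans_step(pixels, centers)
```

In this toy case the first two pixels share a center, the third pixel gets its own, and the distant third center receives no pixels and keeps its original value.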

In CMT-DeepLab and kMaX-DeepLab, cross-attention is reformulated from a clustering perspective as iterative cluster-assignment and cluster-update steps.

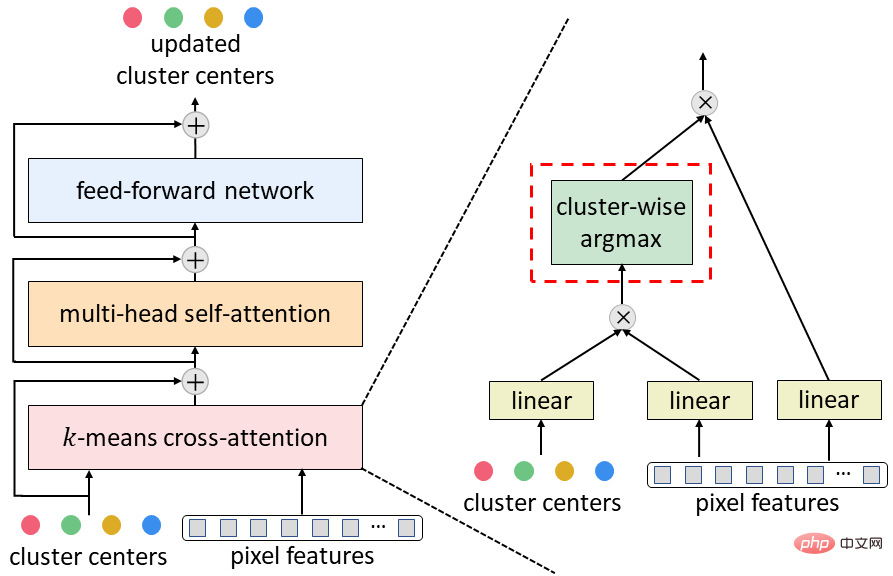

Given the popularity of the k-means clustering algorithm, CMT-DeepLab redesigns cross-attention so that the spatial-wise softmax operation (i.e., the softmax applied along the spatial resolution of the image), which in effect assigns cluster centers to pixels, is instead applied along the cluster centers.

kMaX-DeepLab further simplifies the spatial-wise softmax to a cluster-wise argmax (i.e., the argmax operation is applied along the cluster centers).

They note that the argmax operation is the same as the hard assignment (i.e. one pixel is assigned to only one cluster) used in the k-means clustering algorithm.
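The difference between the three operations comes down to which axis of the center-to-pixel affinity matrix is normalized. The sketch below uses a small, made-up logit matrix to compare them; the variable names and values are illustrative assumptions, not taken from either paper.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # stabilise the exponentials
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

# Hypothetical affinity logits between K=2 cluster centers and N=3 pixels.
logits = np.array([[2.0, 0.5, -1.0],
                   [1.0, 1.5,  3.0]])  # shape (K, N)

# Spatial-wise softmax (standard cross-attention):
# normalise over pixels, so each row (center) sums to 1.
spatial = softmax(logits, axis=1)

# Cluster-wise softmax (the CMT-DeepLab idea):
# normalise over centers, so each column (pixel) sums to 1.
cluster_soft = softmax(logits, axis=0)

# Cluster-wise argmax (the kMaX-DeepLab idea):
# each pixel picks exactly one center, giving a one-hot hard assignment.
winners = logits.argmax(axis=0)                    # (N,)
cluster_hard = np.eye(logits.shape[0])[winners].T  # (K, N)
```

With the cluster-wise variants every pixel is accounted for by some center, whereas the spatial-wise softmax lets a center concentrate on a few pixels and leave others with little weight.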

Reformulating the mask transformer's cross-attention from a clustering perspective significantly improves segmentation performance and simplifies the complex mask transformer pipeline, making it more interpretable.

First, an encoder-decoder structure is used to extract pixel features from the input image. The pixels are then grouped using a set of cluster centers, which are further updated based on the cluster assignments. Finally, the cluster assignment and update steps are performed iteratively, and the last assignment can be used directly as the segmentation prediction.

To convert a typical mask transformer decoder (composed of cross-attention, multi-head self-attention and a feed-forward network) into the k-means cross-attention proposed above, it is enough to replace the spatial-wise softmax with a cluster-wise argmax.
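A rough sketch of such a k-means-style cross-attention update is shown below. It is a simplification under stated assumptions: the learned query, key and value projections, normalization, self-attention and feed-forward layers are all omitted, and the update is written as a residual sum of the assigned pixel features, which is an assumption about its form. The function name `kmeans_cross_attention` and the random inputs are hypothetical.

```python
import numpy as np

def kmeans_cross_attention(centers, pixel_feats):
    """centers: (K, D); pixel_feats: (N, D). Returns (updated centers, labels)."""
    logits = centers @ pixel_feats.T              # (K, N) center-pixel affinity
    labels = logits.argmax(axis=0)                # cluster-wise argmax, shape (N,)
    one_hot = np.eye(centers.shape[0])[labels].T  # (K, N) hard assignment
    # Aggregate the features of the assigned pixels and add them residually;
    # a center with no assigned pixels receives a zero update.
    return centers + one_hot @ pixel_feats, labels

rng = np.random.default_rng(0)
pixel_feats = rng.normal(size=(16, 8))  # 16 pixels (a 4x4 image), 8 channels
centers = rng.normal(size=(4, 8))       # 4 cluster centers

# Alternate assignment and update a few times.
for _ in range(3):
    centers, labels = kmeans_cross_attention(centers, pixel_feats)

# The last assignment is read out directly as a segmentation map.
segmentation = labels.reshape(4, 4)
```

Because the assignment is a per-pixel argmax over centers, the final `labels` array already has the form of a segmentation: one cluster index per pixel.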

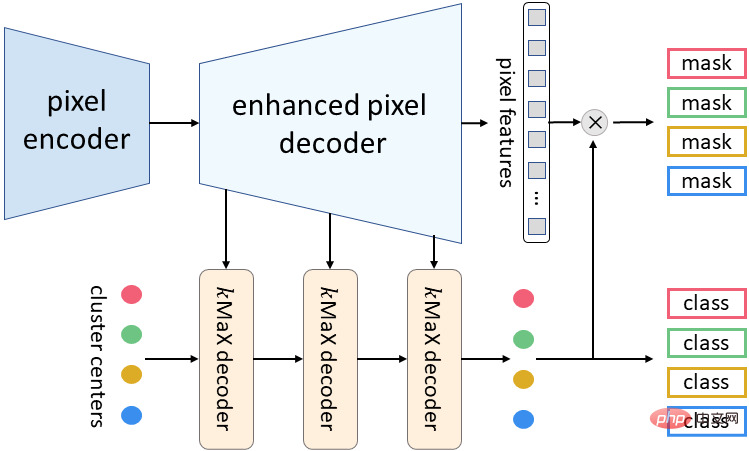

The meta-architecture of kMaX-DeepLab consists of three components: a pixel encoder, an enhanced pixel decoder and a kMaX decoder.

The pixel encoder can be any network backbone and is used to extract image features.

The enhanced pixel decoder includes a Transformer encoder to enhance the pixel features, and an upsampling layer to generate higher-resolution features.

A series of kMaX decoders convert the cluster centers into (1) mask embedding vectors, which are multiplied with the pixel features to generate the predicted masks, and (2) class predictions for each mask.
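The mask prediction step can be illustrated as a dot product between each mask embedding and the feature vector at every pixel. The sketch below uses random arrays in place of real network outputs, and its final per-pixel argmax is a simplified stand-in for the model's actual post-processing; all names and sizes are assumptions for illustration.

```python
import numpy as np

def predict_masks(mask_embeddings, pixel_features):
    """mask_embeddings: (K, D); pixel_features: (H, W, D) -> (K, H, W) logits."""
    return np.einsum("kd,hwd->khw", mask_embeddings, pixel_features)

rng = np.random.default_rng(0)
K, D, H, W, num_classes = 3, 8, 4, 5, 10          # illustrative sizes
mask_embeddings = rng.normal(size=(K, D))         # stand-in for decoder output
pixel_features = rng.normal(size=(H, W, D))       # stand-in for pixel decoder output
class_logits = rng.normal(size=(K, num_classes))  # stand-in for the class head

mask_logits = predict_masks(mask_embeddings, pixel_features)  # (K, H, W)
mask_ids = mask_logits.argmax(axis=0)       # which mask claims each pixel
mask_classes = class_logits.argmax(axis=1)  # predicted class for each mask
semantic_map = mask_classes[mask_ids]       # (H, W) class label per pixel
```

Here `mask_ids` separates individual segments while `semantic_map` carries their class labels, which together give the two pieces of information a panoptic prediction needs.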

kMaX-DeepLab’s meta-architecture

Research results

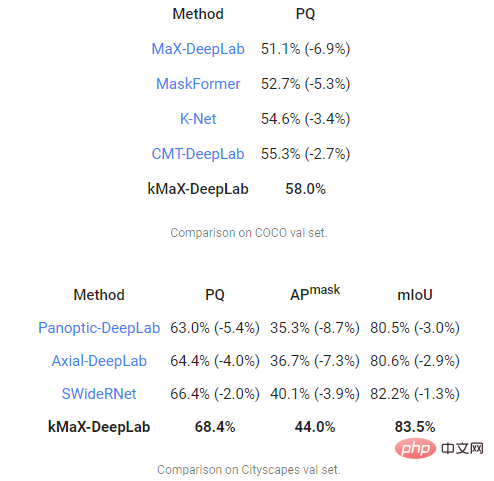

Finally, the research team evaluated CMT-DeepLab and kMaX-DeepLab using the panoptic quality (PQ) metric on two of the most challenging panoptic segmentation datasets, COCO and Cityscapes, and compared them with MaX-DeepLab and other state-of-the-art methods.
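For readers unfamiliar with the metric, PQ for a single class is the sum of the IoUs of matched predicted and ground-truth segments (a match requires IoU above 0.5), divided by the number of matches plus half the false positives and half the false negatives. The helper below is a minimal sketch of that formula; the function name and the sample numbers are illustrative, and the reported PQ is the average of this value over classes.

```python
def panoptic_quality(matched_ious, num_false_positives, num_false_negatives):
    """PQ for one class, given the IoUs of matched segment pairs (each IoU > 0.5)."""
    true_positives = len(matched_ious)
    denom = true_positives + 0.5 * num_false_positives + 0.5 * num_false_negatives
    # With no segments at all the class is undefined; return 0.0 in this sketch.
    return sum(matched_ious) / denom if denom > 0 else 0.0

# Hypothetical class with two matched segments, one spurious prediction
# and one missed ground-truth segment: PQ = (0.9 + 0.7) / (2 + 0.5 + 0.5).
pq = panoptic_quality([0.9, 0.7], num_false_positives=1, num_false_negatives=1)
```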

CMT-DeepLab achieved a significant performance improvement, while kMaX-DeepLab not only simplified the modification but also improved results further: 58.0% PQ on the COCO val set, and 68.4% PQ, 44.0% mask average precision (mask AP) and 83.5% mean intersection over union (mIoU) on the Cityscapes validation set, without test-time augmentation or external datasets.

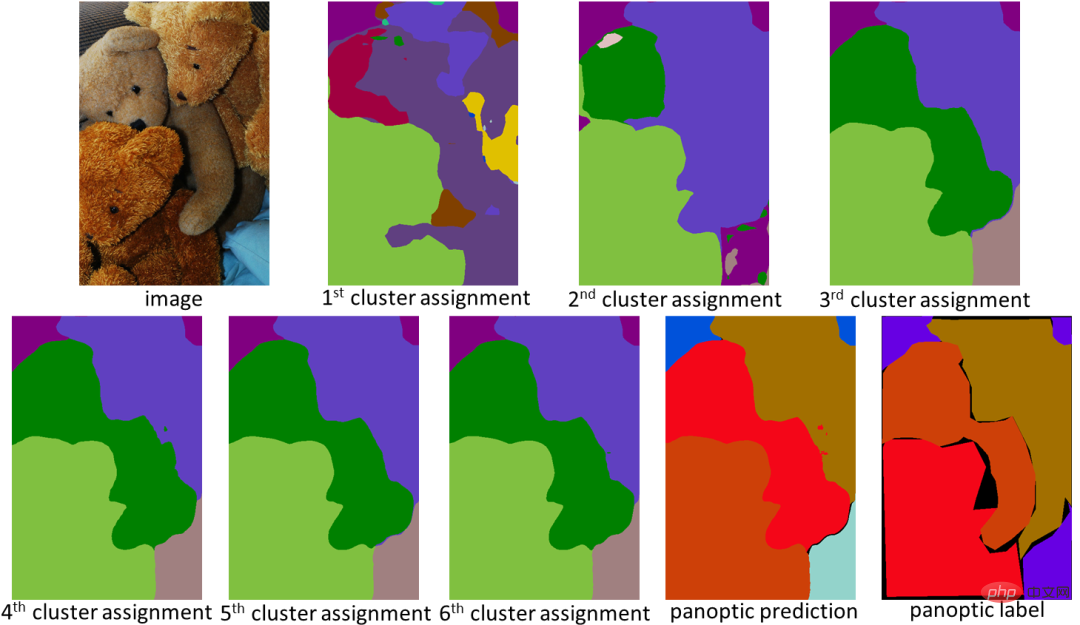

Because it is designed from a clustering perspective, kMaX-DeepLab not only performs better, but its attention maps can also be visualized in a more plausible way, which helps in understanding how it works.

In the example below, kMaX-DeepLab iteratively performs cluster assignment and updates, gradually improving mask quality.

kMaX-DeepLab's attention map can be directly visualized as a panoptic segmentation, which makes the model's working mechanism more plausible.

Conclusion

This research demonstrates a way to better design mask transformers for vision tasks.

With simple modifications, CMT-DeepLab and kMaX-DeepLab reformulate cross-attention to make it more like a clustering algorithm.

As a result, the proposed models achieve state-of-the-art performance on the COCO and Cityscapes datasets.

The research team said they hope that the open-source release of kMaX-DeepLab in the DeepLab2 library will contribute to future research on architectures designed specifically for vision Transformers.
