Tiny machine learning promises to embed deep learning into microprocessors

Translator|Zhu Xianzhong

Reviewer|Liang Ce Sun Shujuan

The initial success of deep learning models was attributed to large servers with large amounts of memory and GPU clusters. The promise of deep learning has spawned an industry that provides cloud computing services for deep neural networks. As a result, large neural networks running on virtually unlimited cloud resources have become extremely popular, especially for tech companies with ample budgets.

But at the same time, an opposite trend has also emerged in recent years, namely the creation of machine learning models for edge devices. Known as Tiny Machine Learning (TinyML), these models work on devices with limited memory and processing power, and non-existent or limited internet connectivity.

A recent research effort by IBM and the Massachusetts Institute of Technology (MIT) addresses the peak memory bottleneck of convolutional neural networks (CNNs), a deep learning architecture that is particularly important for computer vision applications. A paper presented at the NeurIPS 2021 conference details a model called MCUNetV2 that can run CNNs on low-memory, low-power microcontrollers.

1. Why did TinyML emerge?

Although cloud deep learning is very successful, it is not suitable for every situation. Many applications require inference to run directly on the device. In some settings, such as drone rescue missions, Internet connectivity is not guaranteed. In other areas, such as healthcare, privacy requirements and regulatory constraints make it difficult to send data to the cloud for processing. For applications that require real-time machine learning inference, the latency of a round trip to the cloud is even more prohibitive.

All of these requirements have made on-device machine learning both scientifically and commercially attractive. For example, iPhones now run many applications that perform facial recognition and speech recognition on the device, and Android phones can run translation software directly. In addition, Apple Watch can already use machine learning algorithms to detect movement and ECG patterns. (Note: ECG is the abbreviation of electrocardiogram, also known as EKG. It is a test that records the timing and strength of the sequence of electrical signals that trigger the heartbeat. By analyzing ECG images, doctors can better diagnose whether the heart rate is normal and whether there are problems with heart function.)

The ML models on these devices are made possible in part by techniques that make neural networks more compact and more efficient in computation and storage. Advances in hardware have also made it feasible to run such ML models in mobile settings. Our smartphones and wearables now have more computing power than the high-performance servers of 30 years ago, and some even have specialized coprocessors for machine learning inference.

TinyML takes edge AI one step further, making it possible to run deep learning models on microcontrollers (MCUs), which are far more resource-constrained than the small computers we carry in our pockets and on our wrists.

Microcontrollers, on the other hand, are inexpensive, selling for less than $0.50 on average, and they are almost everywhere, embedded in everything from consumer products to industrial equipment. At the same time, they do not have the resources found in general-purpose computing devices, and most do not have operating systems. A microcontroller has a small CPU, only a few hundred kilobytes of low-power memory (SRAM), a few megabytes of storage, and no networking hardware. Most have no mains power supply and must run on button batteries for years. Therefore, fitting deep learning models onto MCUs may open new avenues for many applications.

2. Memory bottleneck in convolutional neural network

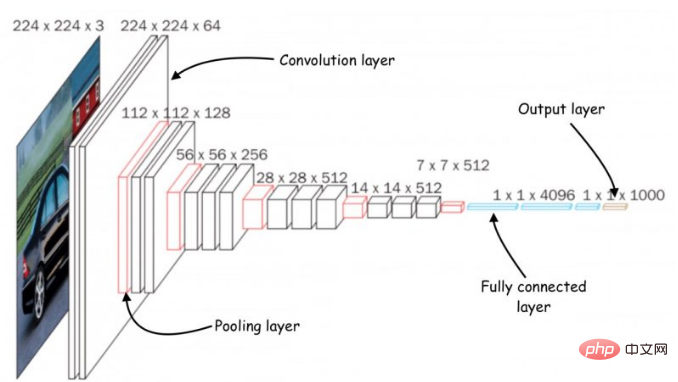

The architecture of convolutional neural network (CNN)

There have been many efforts to shrink deep neural networks to a size that fits small-memory computing devices. However, most of these efforts focus on reducing the number of parameters in deep learning models. For example, "pruning" is a popular class of optimization algorithms that shrinks neural networks by removing parameters that contribute little to the model's output.
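To make the idea of pruning concrete, here is a minimal sketch of magnitude-based pruning, one common variant. It is an illustration only: the function name `magnitude_prune`, the flat weight list, and the 90% sparsity level are assumptions made for this example and are not taken from the research described in this article.

```python
import random

def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0.

    `weights` is a flat list standing in for one layer's parameters (hypothetical).
    """
    k = int(sparsity * len(weights))  # number of weights to remove
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]
pruned = magnitude_prune(weights, sparsity=0.9)
kept = sum(1 for w in pruned if w != 0.0)
print(f"kept {kept} of {len(weights)} weights")
```

Note that pruning changes how many weights are non-zero, not the shape of the layer's output: the activation map the layer produces is just as large as before.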

The problem with pruning methods is that they do not address the memory bottleneck of neural networks. Standard implementations of deep learning libraries require an entire network layer and its activation maps to be loaded into memory. Unfortunately, classical optimization methods do not make any significant changes to the early layers of neural networks, especially in convolutional neural networks.

This causes an imbalance in the size of different layers of the network and leads to the "memory spike" problem: even if the network becomes more lightweight after pruning, the device running it must have as much memory as the largest layer requires. For example, in the popular TinyML model MobileNetV2, the early layers reach a memory peak of about 1.4 megabytes, while later layers have a very small memory footprint. To run the model, a device needs as much memory as the model's peak. Since most MCUs have no more than a few hundred KB of memory, they cannot run off-the-shelf versions of MobileNetV2.
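The memory spike can be illustrated with some simple arithmetic. The sketch below uses made-up activation shapes (they are not the real MobileNetV2 layer sizes) and a simplified cost model in which computing a layer requires holding its input map and its output map at the same time, with one byte per value as in 8-bit quantized inference. All names here are hypothetical.

```python
def activation_bytes(height, width, channels, bytes_per_value=1):
    """Size of one activation map, assuming 8-bit values by default."""
    return height * width * channels * bytes_per_value

# Hypothetical (height, width, channels) of successive activation maps.
shapes = [
    (224, 224, 3),   # input image
    (112, 112, 16),
    (56, 56, 24),
    (28, 28, 32),
    (14, 14, 96),
    (7, 7, 320),
]

def per_layer_memory(shapes):
    """Memory needed while computing each layer: its input map plus its output map."""
    return [
        activation_bytes(*inp) + activation_bytes(*out)
        for inp, out in zip(shapes, shapes[1:])
    ]

memory = per_layer_memory(shapes)
peak = max(memory)
for index, size in enumerate(memory):
    marker = "  <- peak" if size == peak else ""
    print(f"layer {index}: {size / 1024:.0f} KB{marker}")
```

In this toy network the first layer needs roughly ten times as much memory as the last one, and the device must be sized for that first layer even though every later layer would fit comfortably.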

MobileNetV2 is a neural network optimized for edge devices, but its memory peaks at about 1.4 megabytes, making it inaccessible to many microcontrollers.

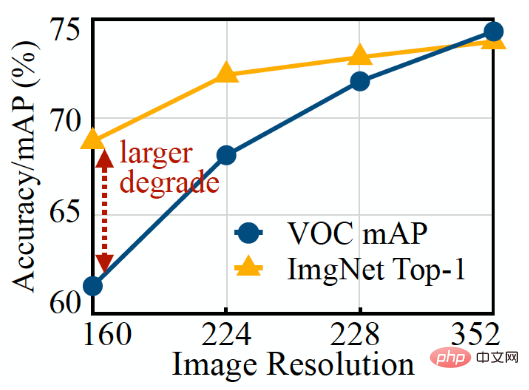

Another way to optimize a neural network is to reduce the input size of the model. Smaller input images require smaller CNNs to perform prediction tasks. However, reducing input size presents its own challenges and is not effective for all computer vision tasks. For example, object detection deep learning models are very sensitive to image size, and their performance degrades rapidly when the input resolution decreases.

As the figure above shows, the image classification model (orange line) tolerates reduced resolution better than the object detection model (blue line).

3. MCUNetV2 patch-based inference

To solve the memory bottleneck of convolutional neural networks, the researchers created a deep learning architecture called MCUNetV2 that can adapt its memory usage to the limits of microcontrollers. MCUNetV2 builds on earlier work by the same research group, which was accepted and presented at the NeurIPS 2020 conference.

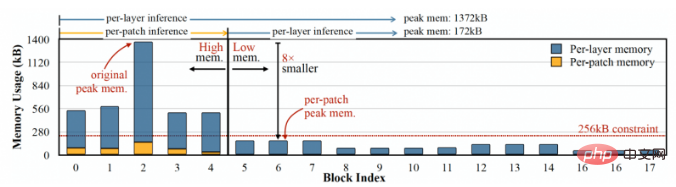

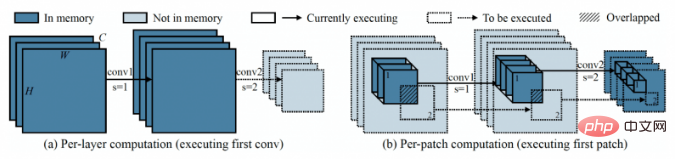

The main idea behind MCUNetV2 is "patch-based inference", a technique that reduces the memory footprint of a CNN without reducing its accuracy. Instead of loading an entire neural network layer into memory, MCUNetV2 loads and computes a smaller region, or "patch", of the layer at any given time. It then iterates through the layer patch by patch and combines the results until it has computed the activations for the entire layer.

The left side of the figure shows a classic deep learning system computing an entire layer at once, while the right side shows MCUNetV2 computing one patch at a time, thereby reducing the memory needed for DL inference.

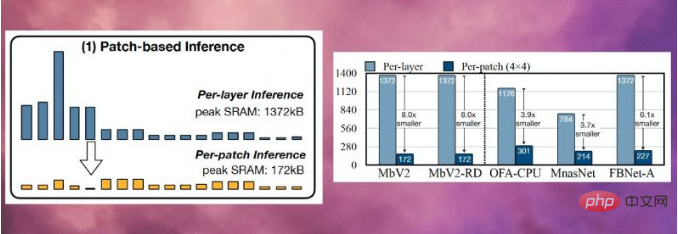

Because MCUNetV2 only needs to store one patch of neurons at a time, it significantly reduces the memory peak without reducing the model's resolution or parameters. The researchers' experiments show that MCUNetV2 can cut peak memory by a factor of eight.
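The following toy sketch shows the principle of patch-based inference on two stacked convolutions. It is not the MCUNetV2 implementation: the pure-Python convolution, the function names, the 32x32 input, and the 8x8 patch size are all assumptions chosen for illustration. The point is that the patch-wise result is identical to the whole-layer result, while the intermediate activation map only ever exists one patch at a time.

```python
import random

def conv2d_valid(image, kernel):
    """Plain 'valid' 2D convolution (cross-correlation) on nested lists, square kernel."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def two_layers_full(image, k1, k2):
    """Classic execution: the whole intermediate map is materialized."""
    return conv2d_valid(conv2d_valid(image, k1), k2)

def two_layers_by_patches(image, k1, k2, patch):
    """Patch-based execution: compute one output tile at a time."""
    halo = (len(k1) - 1) + (len(k2) - 1)   # extra input rows/columns a tile needs
    out_h = len(image) - halo
    out_w = len(image[0]) - halo
    output = [[0] * out_w for _ in range(out_h)]
    peak_intermediate = 0                   # largest intermediate map held at once
    for r0 in range(0, out_h, patch):
        for c0 in range(0, out_w, patch):
            r1, c1 = min(r0 + patch, out_h), min(c0 + patch, out_w)
            # Input region for this tile, including the overlapping halo.
            region = [row[c0:c1 + halo] for row in image[r0:r1 + halo]]
            hidden = conv2d_valid(region, k1)   # intermediate map for this patch only
            peak_intermediate = max(peak_intermediate, len(hidden) * len(hidden[0]))
            tile = conv2d_valid(hidden, k2)
            for dr, row in enumerate(tile):
                output[r0 + dr][c0:c1] = row
    return output, peak_intermediate

random.seed(0)
image = [[random.randint(0, 9) for _ in range(32)] for _ in range(32)]
k1 = [[random.randint(-1, 1) for _ in range(3)] for _ in range(3)]
k2 = [[random.randint(-1, 1) for _ in range(3)] for _ in range(3)]

full = two_layers_full(image, k1, k2)
patched, peak_intermediate = two_layers_by_patches(image, k1, k2, patch=8)
full_intermediate = 30 * 30  # size of the whole intermediate map for a 32x32 input
print(patched == full, peak_intermediate, full_intermediate)
```

Neighboring patches share the halo rows and columns, so those intermediate values are computed more than once. That repeated work is the price paid for the lower memory peak.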

MCUNetV2 can reduce the memory peak of deep learning models to one-eighth

Patch-based inference saves memory, but it comes with a computational cost trade-off. The researchers at the Massachusetts Institute of Technology (MIT) and IBM found that overall network computation can increase by 10-17% across different architectures, which is clearly a poor fit for low-power microcontrollers.

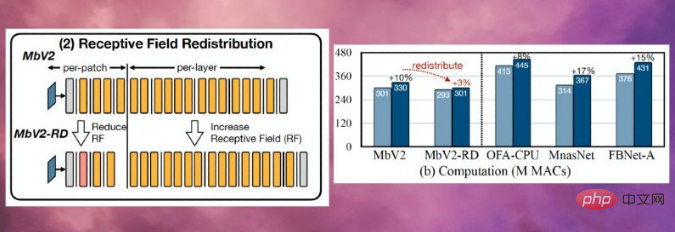

To overcome this limitation, the researchers redistributed the "receptive fields" of different blocks of the neural network. (Note: In a CNN, the receptive field ("RF") of a layer is the region of the input image that corresponds to a single pixel in that layer's feature map.) In other words, the receptive field is the image area that is processed at any one time. Larger receptive fields require larger patches and more overlap between patches, which results in higher computational overhead. By shrinking the receptive field in the initial blocks of the network and widening it in later stages, the researchers were able to reduce the computational overhead by more than two-thirds.
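The link between receptive field and patch size can be worked out with the standard receptive-field recurrence for stacked convolutions. The sketch below is only illustrative: the two layer configurations are invented for this example and do not come from the MCUNetV2 paper, and the function names are hypothetical.

```python
def receptive_field(layers):
    """Receptive field and cumulative stride of a stack of conv layers.

    `layers` is a list of (kernel_size, stride) tuples, first layer first.
    Each layer grows the receptive field by (kernel - 1) times the cumulative
    stride of the layers before it.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf, jump

def patch_input_size(layers, patch_outputs):
    """Input pixels per side needed to produce `patch_outputs` adjacent outputs per side."""
    rf, jump = receptive_field(layers)
    return rf + (patch_outputs - 1) * jump

# Two hypothetical early stages with the same overall stride of 4.
wide_stage = [(3, 2), (3, 1), (3, 2), (3, 1)]     # 3x3 kernels throughout
narrow_stage = [(3, 2), (1, 1), (3, 2), (1, 1)]   # some 3x3 kernels replaced by 1x1

for name, stage in [("wide", wide_stage), ("narrow", narrow_stage)]:
    rf, jump = receptive_field(stage)
    side = patch_input_size(stage, patch_outputs=4)
    print(f"{name}: receptive field {rf}, input patch side {side}, overlap {rf - jump}")
```

With the smaller receptive field, each patch needs a smaller input region and shares fewer pixels with its neighbors, so less work is repeated across patches.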

The redistribution of receptive fields helps reduce the computational overhead of MCUNetV2 by more than two-thirds

Finally, the researchers observed that tuning MCUNetV2 depends heavily on the ML model architecture, the application, and the memory and storage capacity of the target device. To avoid manually tuning deep learning models for each device and application, the researchers used "neural architecture search," a process that uses machine learning to automatically optimize the neural network structure and the inference scheduling.

The researchers tested the deep learning architecture in different applications on several microcontroller models with smaller memory capacities. The results show that MCUNetV2 outperforms other TinyML technologies and is able to achieve higher accuracy in image classification and object detection with smaller memory requirements and lower latency.

As shown in the figure below, the researchers have applied MCUNetV2 to real-time person detection, visual wake words, and face/mask detection.

Translator's Note: What is shown here is only a screenshot from the MCUNetV2 demonstration video on YouTube.

4. TinyML Applications

In a 2018 article titled "Why the Future of Machine Learning is Tiny," software engineer Pete Warden argued that machine learning on MCUs is extremely important. Warden wrote: "I believe that machine learning can run on small, low-power chips, and this combination will solve a large number of problems that we cannot currently solve."

Thanks to advances in sensors and CPUs, our access to data about the world has greatly increased. But our ability to process and use this data through machine learning models is limited by network connectivity and cloud server access. As Warden noted, processors and sensors are far more energy efficient than radio transmitters such as Bluetooth and WiFi.

Warden wrote: "The physical process of moving data seems to require a lot of energy. It seems to be a rule that the energy required for an operation is directly proportional to the distance the bits have to be sent. CPUs and sensors send bits only a few millimeters, which is cheap, whereas radio sends them several meters or more, which is expensive... It is clear that there is a market with huge potential waiting to be opened up with the right technology. We need something that works on a cheap microcontroller, uses very little energy, relies on computation rather than radio, and can turn all our wasted sensor data into useful data. This is the gap that machine learning, and specifically deep learning, is going to fill."

Thanks to MCUNetV2 and other advances in TinyML, Warden's prediction may soon become a reality. In the coming years, we can expect TinyML to find its way into billions of microcontrollers in homes, offices, hospitals, factories, farms, roads, bridges, and more, potentially enabling applications that were simply not possible before.

Original link: https://thenextweb.com/news/tinyml-deep-learning-microcontrollers-syndication

Translator introduction

Zhu Xianzhong is a 51CTO community editor, 51CTO expert blogger, lecturer, computer teacher at a university in Weifang, and a veteran of the freelance programming industry. In his early years he focused on various Microsoft technologies (he compiled three technical books related to ASP.NET AJAX and Cocos2d-x). Over the past ten years he has devoted himself to the open source world (he is familiar with popular full-stack web development technologies) and has learned IoT development technologies such as OneNet/AliOS, Arduino, ESP32, and Raspberry Pi, as well as big data development technologies such as Scala, Hadoop, Spark, and Flink.

The above is the detailed content of Tiny machine learning promises to embed deep learning into microprocessors. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

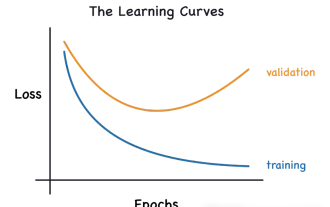

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

MetaFAIR teamed up with Harvard to provide a new research framework for optimizing the data bias generated when large-scale machine learning is performed. It is known that the training of large language models often takes months and uses hundreds or even thousands of GPUs. Taking the LLaMA270B model as an example, its training requires a total of 1,720,320 GPU hours. Training large models presents unique systemic challenges due to the scale and complexity of these workloads. Recently, many institutions have reported instability in the training process when training SOTA generative AI models. They usually appear in the form of loss spikes. For example, Google's PaLM model experienced up to 20 loss spikes during the training process. Numerical bias is the root cause of this training inaccuracy,

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing