Touch has never been so real! Two Chinese Ph.D.s from the University of Southern California innovate the 'tactile perception' algorithm

The development of electronic technology allows us to enjoy an "audio-visual feast" anytime and anywhere, and human hearing and vision have been completely liberated.

In recent years, adding haptics to devices has gradually become a new research hotspot, especially with the boost from the "metaverse" concept. A highly realistic sense of touch will undoubtedly greatly enhance the realism of the virtual world.

Current tactile sensing technology mainly simulates and renders touch through a "data-driven" model. The model first records the user's interaction with the real texture. The signal is then input to the texture generation part, and the touch sensation is "played back" to the user in the form of vibration.

Most recent methods model texture features, such as friction and microscopic surface features, from the user's interactive motion and high-frequency vibration signals.

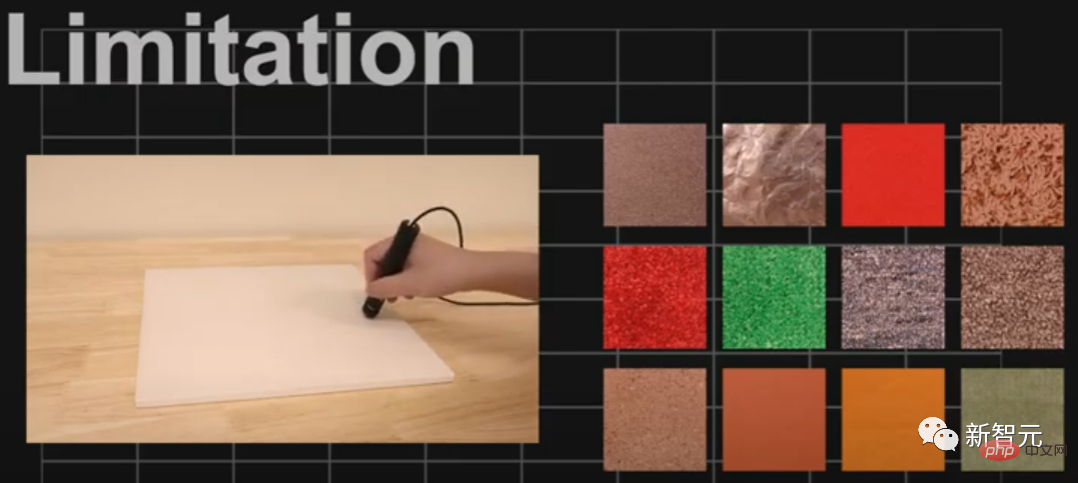

Although the data-driven approach greatly improves the realism of simulation, it still has many limitations.

For example, there are countless types of textures in the world. Recording every one of them would take an unimaginable amount of manpower and material resources, and it still could not meet the needs of some niche users.

Human beings are very sensitive to touch, and different people perceive the same object differently. The data-driven approach cannot fundamentally eliminate the perceptual mismatch between texture recording and texture rendering.

Recently, three doctoral students at the University of Southern California Viterbi School of Engineering proposed a new "preference-driven" model framework. It uses humans' ability to discriminate texture details to adjust the generated virtual textures, and can ultimately achieve quite realistic tactile perception. The paper was published in IEEE Transactions on Haptics.

Paper link: https://ieeexplore.ieee.org/document/9772285

The preference-driven model first gives the user a real texture to touch. The model then uses dozens of variables to randomly generate three virtual textures, from which the user chooses the one that feels most similar to the real object.

With continuous trial and error and feedback, the model will continuously optimize the distribution of variables through search, making the generated texture closer to the user's preference. This method has significant advantages over directly recording and playing back textures, as there is always a gap between what the computer reads and how humans actually feel.

This process is similar to the relationship between "Party A and Party B". As the perceiver (Party A), if we feel that the touch is wrong, we send it back and let the algorithm (Party B) modify and regenerate it until the result is satisfactory.

This is quite reasonable: different people feel differently when touching the same object, but the signal released by the computer is the same, so the touch needs to be customized for each person.

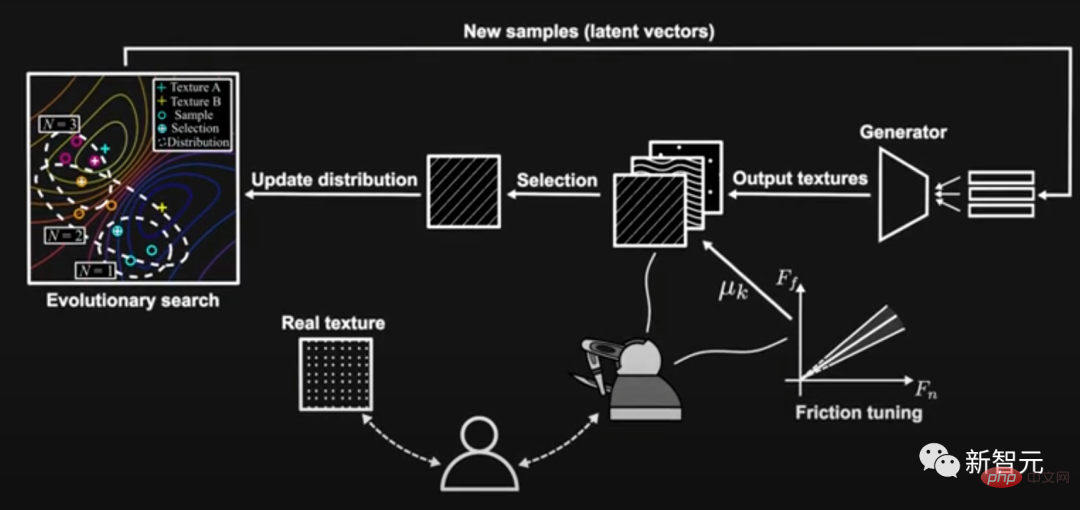

The entire system consists of two modules. The first is a deep convolutional generative adversarial network (DCGAN), which maps vectors in the latent space to texture models. It is trained on the UPenn Haptic Texture Toolkit (HaTT).

The second module is a comparison-based evolutionary algorithm: starting from a set of generated texture models, the covariance matrix adaptation evolution strategy (CMA-ES) evolves a new set of texture models based on the user's preference feedback.

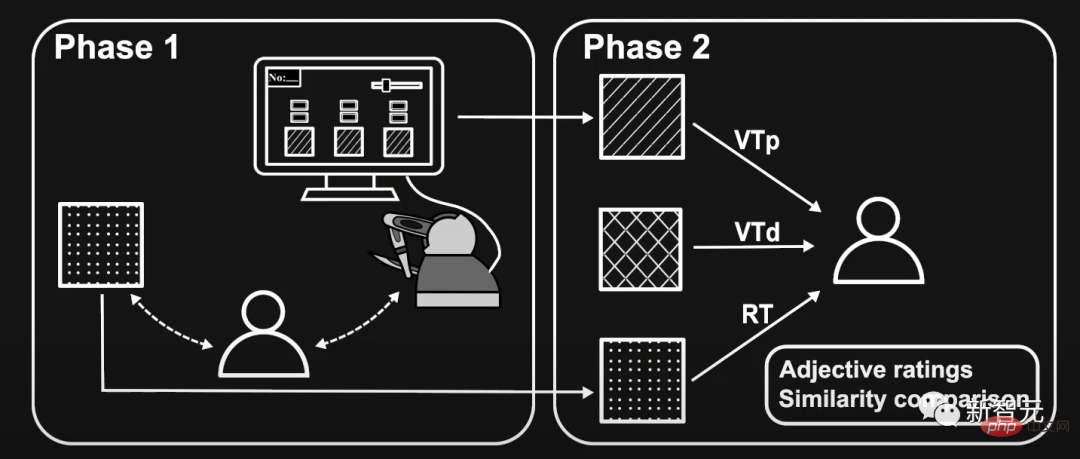

To simulate real textures, the researchers first ask users to touch a real texture using a custom tool, and then to use a haptic device to touch a set of virtual texture candidates. The haptic feedback is transmitted via a Haptuator connected to the device's stylus.

The only thing the user needs to do is select the virtual texture that is closest to the real texture and use a simple slider interface to adjust the amount of friction. Friction is critical to how a texture feels, and it can also vary from person to person.

All virtual textures are then updated by the evolution strategy according to the user's selection, and the user selects and adjusts again.

This process repeats until the user finds a virtual texture that they believe is close to the real texture and saves it, or until no closer virtual texture can be found.
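The select-and-update loop described above can be sketched in a few lines of Python. This is only a minimal sketch under several assumptions that are not from the paper: the human is replaced by a simulated user who secretly prefers a hidden target vector, `LATENT_DIM`, `tolerance`, and the step sizes are hypothetical values, and the update is a simplified evolution strategy with a fixed step-size decay rather than CMA-ES with its covariance adaptation. In the real system each latent vector would be decoded by the trained DCGAN into a texture model and rendered on the haptic device; that step and the friction slider are omitted here.

```python
import random

LATENT_DIM = 8       # hypothetical size; the article only says "dozens of variables"
NUM_CANDIDATES = 3   # three virtual textures are offered per round


def distance(a, b):
    """Euclidean distance between two latent vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def simulated_user_pick(candidates, hidden_target):
    """Stand-in for the human: index of the candidate closest to a hidden target."""
    return min(range(len(candidates)),
               key=lambda i: distance(candidates[i], hidden_target))


def preference_search(hidden_target, max_rounds=200, tolerance=0.1, seed=0):
    """Simplified comparison-based search (an assumption, not the paper's CMA-ES)."""
    rng = random.Random(seed)
    mean = [0.0] * LATENT_DIM   # centre of the search distribution
    sigma = 1.0                 # spread of the sampled candidates
    chosen = mean
    for round_index in range(1, max_rounds + 1):
        # Sample candidate latent vectors around the current mean.
        candidates = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(NUM_CANDIDATES)]
        chosen = candidates[simulated_user_pick(candidates, hidden_target)]
        # The simulated user "saves" the texture once it feels close enough.
        if distance(chosen, hidden_target) < tolerance:
            return chosen, round_index
        # Move the mean halfway toward the preferred candidate, then narrow the search.
        mean = [m + 0.5 * (c - m) for m, c in zip(mean, chosen)]
        sigma *= 0.98
    return chosen, max_rounds
```

Calling `preference_search([1.0] * LATENT_DIM)` returns the saved latent vector and the number of rounds used. The point of the sketch is that the search only ever needs a comparison ("which of these three is closest?"), never a numeric score from the user.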

The researchers divided the evaluation process into two phases, each with a separate group of participants.

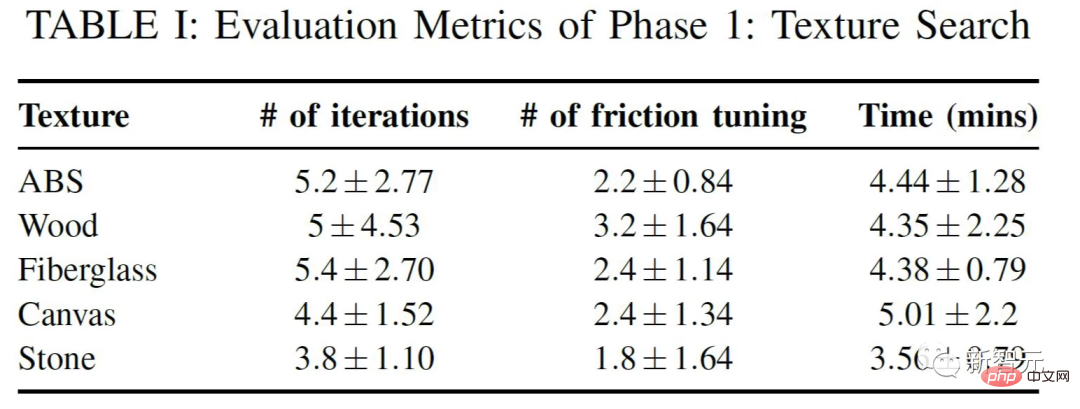

In the first phase, five participants each generated and searched for virtual textures for 5 real textures.

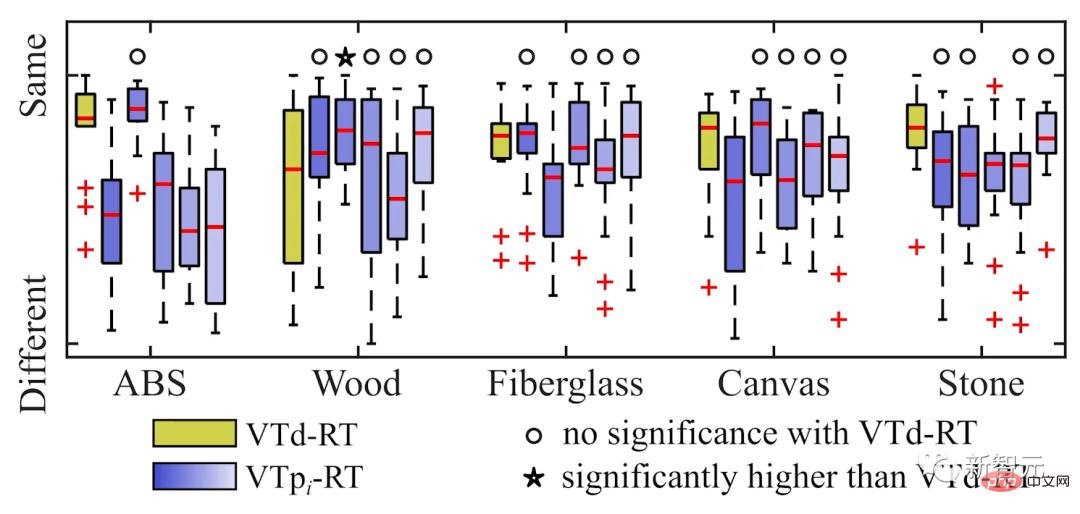

The second phase evaluates the gap between the final saved preference-driven texture (VTp) and its corresponding real texture (RT).

The evaluation mainly uses adjective ratings on perceptual dimensions including roughness, hardness, and smoothness, and compares the similarity between VTp, RT, and the data-driven textures (VTd).
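One simple way to turn adjective ratings into a similarity comparison is to average the absolute rating differences across the perceptual dimensions. The sketch below assumes this metric for illustration; the article does not say which measure the authors used, and the ratings in it are made-up placeholder numbers on a hypothetical 0-100 scale, not results from the paper.

```python
# Placeholder ratings for illustration only; NOT data from the paper.
ratings = {
    "RT":  {"roughness": 70, "hardness": 55, "smoothness": 30},  # real texture
    "VTp": {"roughness": 65, "hardness": 50, "smoothness": 35},  # preference-driven
    "VTd": {"roughness": 50, "hardness": 70, "smoothness": 45},  # data-driven
}


def rating_gap(a, b):
    """Mean absolute rating difference across the shared perceptual dimensions."""
    dimensions = sorted(set(a) & set(b))
    return sum(abs(a[d] - b[d]) for d in dimensions) / len(dimensions)


gap_preference = rating_gap(ratings["RT"], ratings["VTp"])
gap_data = rating_gap(ratings["RT"], ratings["VTd"])
print(f"RT vs VTp gap: {gap_preference:.2f}")
print(f"RT vs VTd gap: {gap_data:.2f}")
```

A smaller gap means the virtual texture was rated more like the real one on these dimensions.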

The experimental results show that by following the evolutionary process, users can effectively find a virtual texture model that is more realistic than the data-driven model.

In addition, more than 80% of participants rated the virtual textures generated by the preference-driven model as better than those generated by the data-driven model.

Haptic devices are becoming more and more popular in video games, fashion design, and surgical simulation. Even at home, we are starting to see haptic devices become as popular as laptops.

For example, adding touch to first-person video games will greatly enhance the player's sense of reality.

The authors of the paper stated that when we interact with the environment through tools, tactile feedback is just one kind of sensory feedback and audio is another, and both are very important.

In addition to games, the results of this work will be particularly useful for virtual textures used in dental or surgical training, which need to be very accurate.

"Surgical training is absolutely a huge field that requires very realistic textures and tactile feedback; decoration design also requires a high degree of precision in texture during development, so that it can be simulated before it is manufactured."

Everything from video games to fashion design is integrating haptic technology, and existing virtual texture databases can be improved with this user-preference approach.

Texture search models also allow users to extract virtual textures from databases, such as the University of Pennsylvania's Haptic Texture Toolkit, and refine them until they obtain the desired result.

Once this technology is combined with the texture search model, users can take virtual textures that others have already recorded and then optimize them with this strategy.

The authors imagine that in the future, models may not even need real textures.

The feel of some common things in our lives is very intuitive, and we are hard-wired to fine-tune our sense of touch just by looking at photos, without having to refer to real textures.

For example, when we see a table, we can imagine how it will feel once we touch it, using our prior knowledge of the surface. Drawing on this prior knowledge, a system could give users visual feedback and let them select the matching texture.

The first author of the article, Shihan Lu, is currently a doctoral candidate at the School of Computer Science at the University of Southern California. He has previously done sound-related work in immersive technology, making virtual textures more immersive by introducing matching sounds when tools interact with them.

The second author of the article, Mianlun Zheng, is a doctoral candidate in the School of Computer Science at the University of Southern California, with bachelor's and master's degrees from Wuhan University.
