Why do tree-based models still outperform deep learning on tabular data?

Deep learning has made huge progress in domains such as images, language, and even audio. On tabular data, however, its performance is mediocre. Tabular data tends to have heterogeneous features, small sample sizes, and extreme values, which makes it hard to find the kind of invariances that deep architectures exploit elsewhere.

Tree-based models are not differentiable, so they cannot be trained jointly with deep learning modules. This makes tabular-specific deep learning architectures a very active research area. Many studies claim to beat or rival tree-based models, but those claims have met with considerable skepticism.

The fact that learning from tabular data lacks established benchmarks gives researchers a lot of freedom when evaluating their methods. Furthermore, most tabular datasets available online are small compared to benchmarks in other machine learning subdomains, making evaluation more difficult.

To address these concerns, researchers from Inria (the French National Institute for Research in Digital Science and Technology), Sorbonne University, and other institutions have proposed a tabular data benchmark for evaluating recent deep learning models, and show that tree-based models remain state of the art (SOTA) on medium-sized tabular datasets.

The paper backs this conclusion with empirical evidence that, on tabular data, good predictions are easier to achieve with tree-based methods than with deep learning, even with modern architectures, and the researchers investigate why.

Paper address: https://hal.archives-ouvertes.fr/hal-03723551/document

It is worth mentioning that one of the paper's authors is Gaël Varoquaux, one of the leaders of the scikit-learn project, which has become one of the most popular machine learning libraries on GitHub. The article "Scikit-learn: Machine learning in Python", which he co-authored, has 58,949 citations.

The contributions of the paper can be summarized as follows:

The study creates a new benchmark of 45 selected open datasets and shares them through OpenML, which makes them easy to use.

The study compares deep learning models and tree-based models on tabular data under various settings, accounting for the cost of hyperparameter selection. It also shares the raw results of its random searches, so researchers can cheaply test new algorithms under a fixed hyperparameter optimization budget.
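One way to reuse logged random-search results under a fixed budget can be sketched as follows. This is a simplified illustration, not the paper's evaluation code: the function name `expected_best_score`, the example scores, and the choice to report the best validation score directly are all assumptions made here.

```python
import random
from statistics import mean

def expected_best_score(scores, budget, n_shuffles=200, seed=0):
    """Average best score found within the first `budget` random-search trials.

    `scores` holds one validation score per logged trial (higher is better).
    Shuffling the trial order simulates rerunning the search many times
    without training any model again.
    """
    if not 1 <= budget <= len(scores):
        raise ValueError("budget must be between 1 and len(scores)")
    rng = random.Random(seed)
    trials = list(scores)
    bests = []
    for _ in range(n_shuffles):
        rng.shuffle(trials)
        bests.append(max(trials[:budget]))
    return mean(bests)

# Hypothetical validation accuracies from eight logged random-search trials.
logged_scores = [0.71, 0.80, 0.78, 0.83, 0.69, 0.81, 0.79, 0.84]
for budget in (1, 4, 8):
    print(budget, round(expected_best_score(logged_scores, budget), 3))
```

Because only the stored scores are reshuffled, comparing methods at several budgets costs almost nothing once the searches have been run.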

On tabular data, tree-based models still outperform deep learning methods

The new benchmark comprises 45 tabular datasets, selected according to the following criteria:

- Heterogeneous columns: columns should correspond to features of different nature, which excludes image and signal datasets.

- Not high-dimensional: the d/n ratio (number of features over number of samples) of each dataset is below 1/10.

- Documented: datasets with too little available information are removed.

- I.I.D. (independent and identically distributed) data: stream-like datasets and time series are removed.

- Real-world data: artificial datasets are removed, but some simulated datasets are kept.

- Not too small: datasets with too few features are removed.

- Not too easy: datasets that are too simple are removed.

- Not deterministic: datasets for games such as poker and chess are removed because their targets are deterministic functions of the inputs.
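The dimensionality criterion is the easiest one to express in code. The sketch below checks only the d/n ratio; the function name `passes_ratio_criterion` and the candidate entries are hypothetical, and the other criteria (documentation, difficulty, determinism) require manual or model-based checks that are not shown.

```python
def passes_ratio_criterion(n_samples, n_features, max_ratio=0.1):
    """Return True when the d/n ratio is below `max_ratio` (1/10 in the text)."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    return n_features / n_samples < max_ratio

# Hypothetical (name, n_samples, n_features) entries, for illustration only.
candidates = [("wide_table", 500, 120), ("tall_table", 20000, 30)]
kept = [name for name, n, d in candidates if passes_ratio_criterion(n, d)]
print(kept)
```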

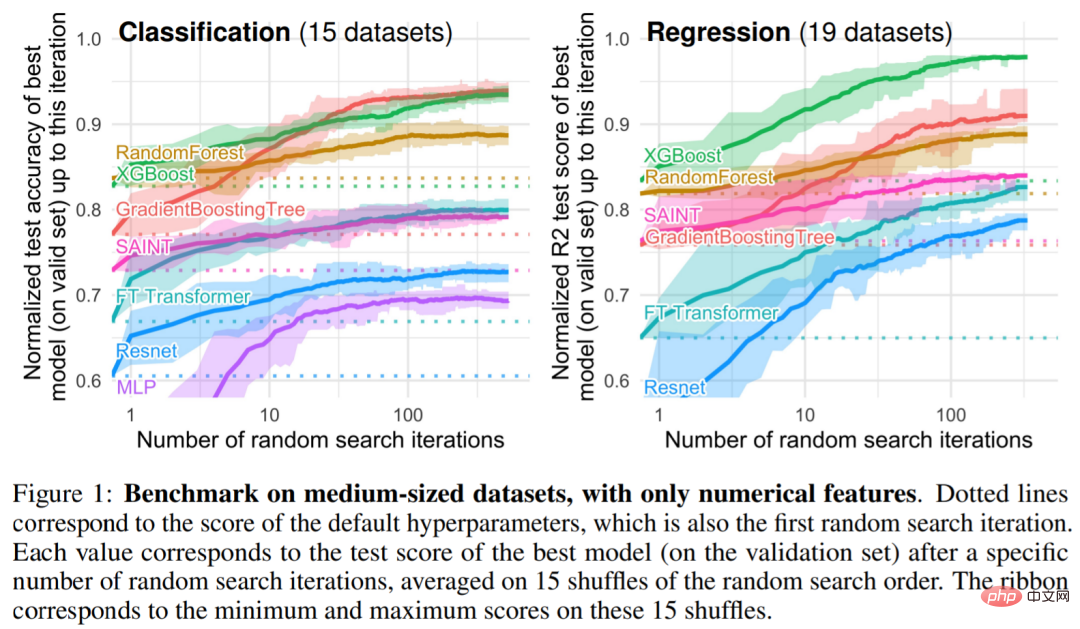

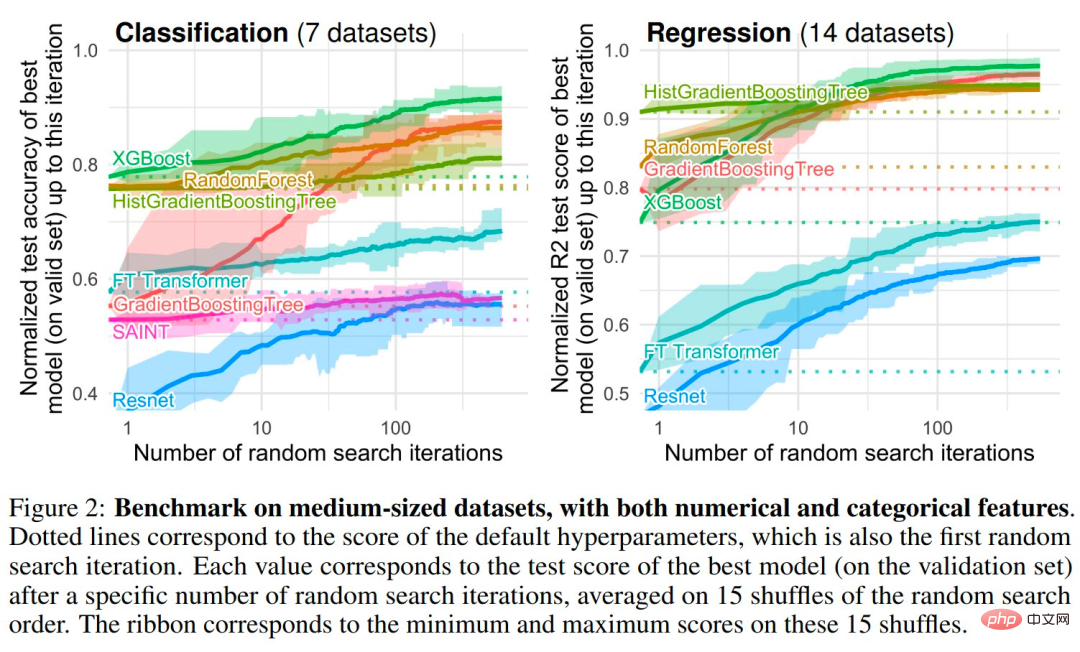

Among tree-based models, the researchers chose three SOTA models: scikit-learn's RandomForest, GradientBoostingTrees (GBTs), and XGBoost. For deep models, the study benchmarks MLP, Resnet, FT Transformer, and SAINT. Figures 1 and 2 show the benchmark results for different types of datasets.
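A comparison of this kind can be sketched with scikit-learn alone. The snippet is a toy illustration under several assumptions: it uses a synthetic dataset from `make_classification` rather than the benchmark's real-world data, default-like hyperparameters instead of a random search, and scikit-learn's `MLPClassifier` as a stand-in for the deep models. XGBoost, Resnet, FT Transformer, and SAINT are left out because they need additional libraries.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data; the benchmark itself uses real-world datasets.
X, y = make_classification(
    n_samples=600, n_features=20, n_informative=5, random_state=0
)

models = {
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "gradient_boosting": GradientBoostingClassifier(random_state=0),
    # Neural networks usually need scaled inputs, hence the pipeline.
    "mlp": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(64, 64), max_iter=500, random_state=0),
    ),
}

# Mean 3-fold cross-validated accuracy for each model.
scores = {
    name: cross_val_score(model, X, y, cv=3, scoring="accuracy").mean()
    for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name}: {score:.3f}")
```

The ranking on a single synthetic dataset says little by itself; the point of the benchmark is to aggregate such comparisons over many real datasets and hyperparameter budgets.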

Empirical investigation: why tree-based models still outperform deep learning on tabular data

Inductive bias. Tree-based models beat neural networks across a wide range of hyperparameter choices. In fact, the best methods for tabular data share two properties: they are ensemble methods, using either bagging (random forests) or boosting (XGBoost, GBTs), and their weak learners are decision trees.

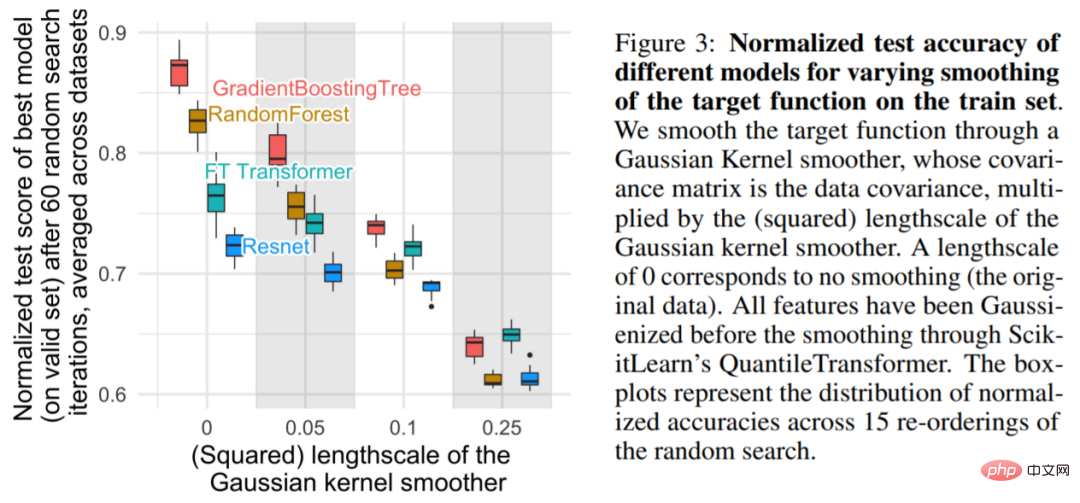

Finding 1: Neural networks (NNs) are biased toward overly smooth solutions

As Figure 3 shows, smoothing the target function on the training set with small length scales significantly reduces the accuracy of tree-based models but has little impact on NNs. These results indicate that the target functions in these datasets are not smooth, and that NNs struggle to fit such irregular functions compared with tree-based models. This is consistent with the findings of Rahaman et al., who showed that NNs are biased toward low-frequency functions. Decision-tree-based models learn piecewise-constant functions and have no such bias.
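Smoothing a target function can be illustrated with a Gaussian kernel smoother, where each training target is replaced by a distance-weighted average of all training targets. The sketch below is an assumption about how such smoothing might be done, not the paper's exact procedure; the function name `gaussian_smooth_targets` and the one-dimensional step function are chosen here for illustration.

```python
import numpy as np

def gaussian_smooth_targets(X, y, length_scale):
    """Replace each target by a Gaussian-kernel weighted average of all targets."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Squared Euclidean distance between every pair of rows.
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    weights = np.exp(-sq_dists / (2.0 * length_scale ** 2))
    return weights @ y / weights.sum(axis=1)

# A step function: an irregular target that a single tree split captures exactly.
X = np.linspace(0.0, 1.0, 101).reshape(-1, 1)
y = (X[:, 0] > 0.5).astype(float)

# Mean absolute change of the targets for increasing length scales.
mean_shift = {}
for length_scale in (0.01, 0.1, 0.25):
    y_smooth = gaussian_smooth_targets(X, y, length_scale)
    mean_shift[length_scale] = float(np.abs(y_smooth - y).mean())
    print(length_scale, round(mean_shift[length_scale], 3))
```

The larger the length scale, the more the sharp jump is blurred. A model that relies on sharp, piecewise-constant fits loses more from this blurring than a model that was already producing smooth outputs.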

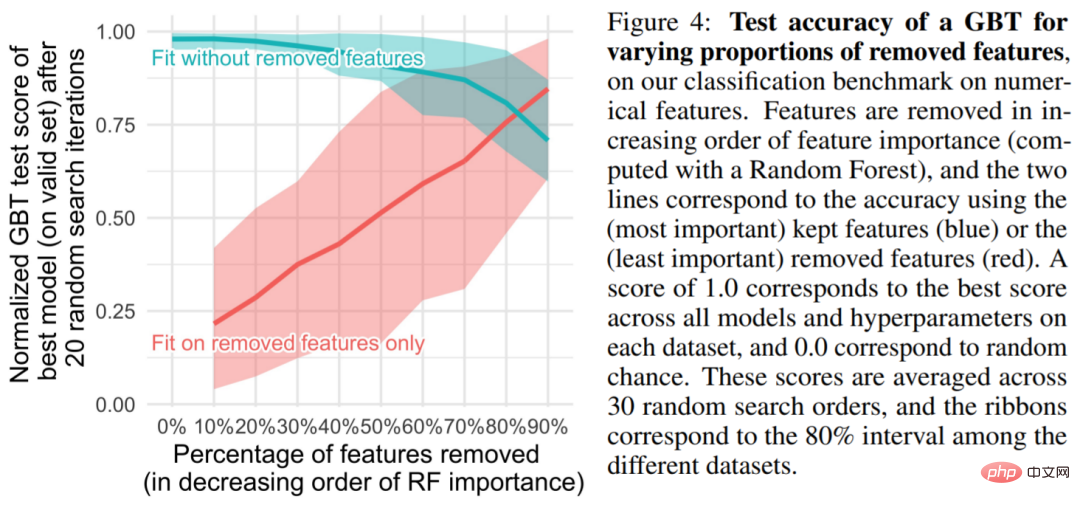

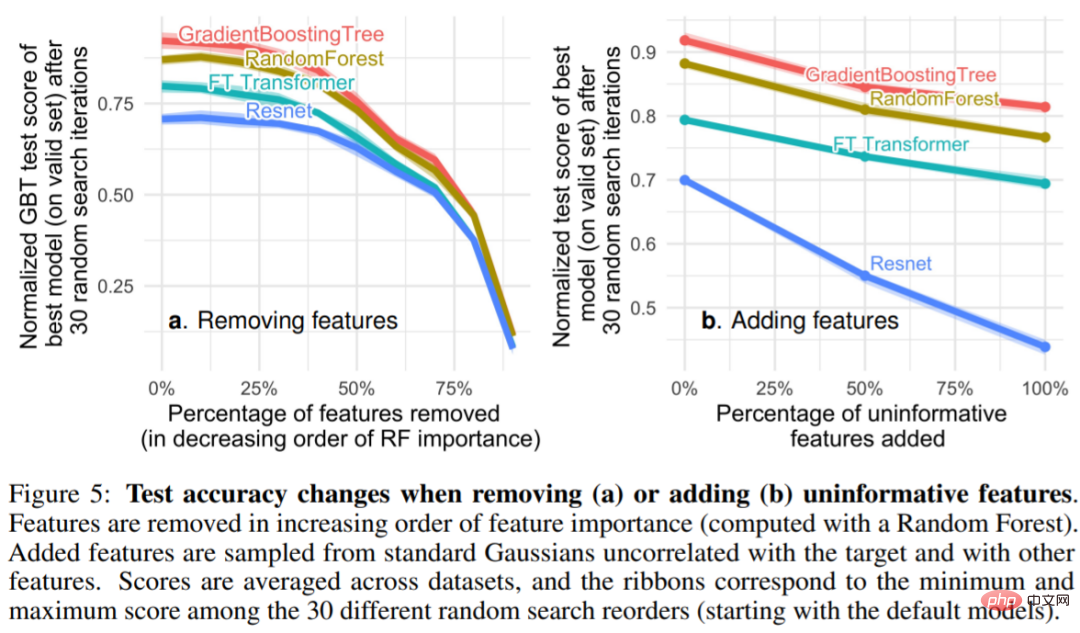

Finding 2: Uninformative features affect MLP-like NNs more

Tabular datasets contain many uninformative features. For each dataset, the study drops a given proportion of features ranked by importance (usually the ranking given by a random forest). As Figure 4 shows, removing more than half of the features has little impact on the classification accuracy of GBTs.

Figure 5 shows that removing uninformative features (5a) narrows the performance gap between MLPs (Resnet) and the other models (FT Transformers and tree-based models), while adding uninformative features widens it, indicating that MLPs are less robust to uninformative features. In Figure 5a, removing a larger proportion of features also removes useful, informative features. Figure 5b shows that the resulting accuracy drop is offset by the removal of uninformative features, which helps MLPs more than the other models (the study also removes redundant features, and this does not affect model performance).
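The two manipulations, dropping the least important features and appending uninformative ones, can be sketched with NumPy. The helper names `keep_most_important` and `add_noise_features` are hypothetical, the importance scores are made up, and drawing the extra columns from a standard normal distribution is an assumption made for this illustration.

```python
import numpy as np

def keep_most_important(X, importances, drop_fraction):
    """Drop the `drop_fraction` least important columns of X."""
    n_features = X.shape[1]
    n_drop = int(round(drop_fraction * n_features))
    # Column indices sorted from least to most important.
    order = np.argsort(importances)
    kept = np.sort(order[n_drop:])
    return X[:, kept], kept

def add_noise_features(X, n_extra, rng):
    """Append `n_extra` standard-normal columns that carry no signal."""
    noise = rng.standard_normal((X.shape[0], n_extra))
    return np.hstack([X, noise])

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 4))
# Hypothetical importance scores, e.g. taken from a fitted random forest.
importances = np.array([0.50, 0.05, 0.30, 0.15])

X_reduced, kept = keep_most_important(X, importances, drop_fraction=0.5)
X_padded = add_noise_features(X, n_extra=3, rng=rng)
print(kept, X_reduced.shape, X_padded.shape)
```

Training each model on `X_reduced` and `X_padded` instead of `X`, and comparing the accuracy gaps, is the kind of experiment the figures summarize.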

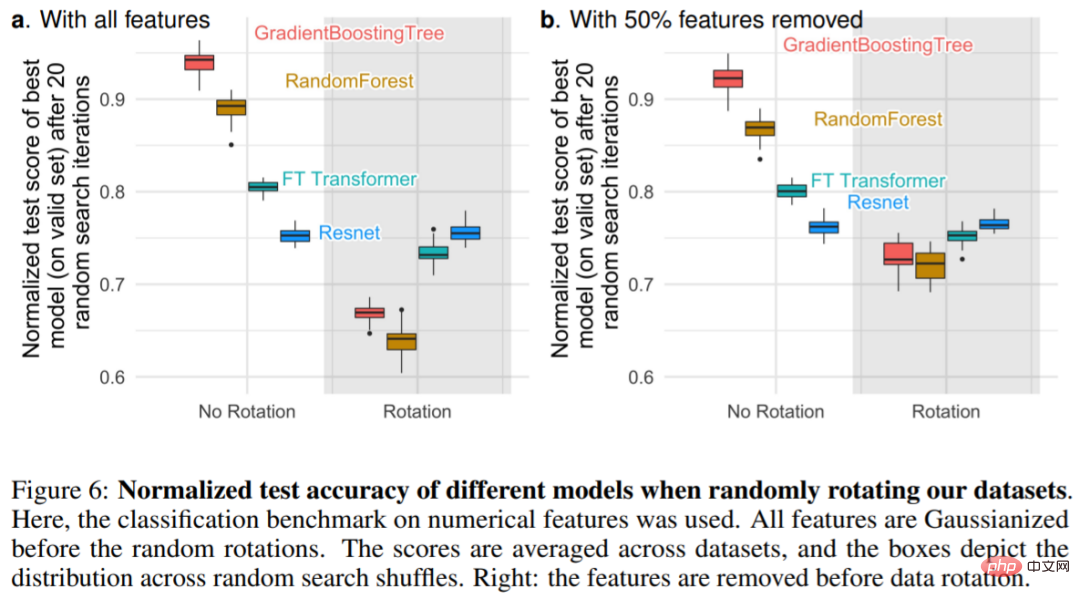

Finding 3: Tabular data is not invariant to rotation

Why are MLPs more susceptible to uninformative features than other models? One answer is that MLPs are rotation invariant: learning an MLP on a training set and evaluating it on a test set gives the same result when the same rotation is applied to the features of both sets. In fact, any rotation-invariant learning procedure has a worst-case sample complexity that grows at least linearly in the number of irrelevant features. Intuitively, to discard useless features, a rotation-invariant algorithm must first recover the original orientation of the features and then select the least informative ones.

Figure 6a shows the change in test accuracy when the datasets are randomly rotated, confirming that only Resnets are rotation invariant. Notably, random rotation reverses the performance ranking: NNs come out above tree-based models, and Resnets above FT Transformers, which indicates that rotation invariance is undesirable. Indeed, the columns of tabular data usually carry individual meaning, such as age or weight. As Figure 6b shows, removing the least important half of the features in each dataset (before rotation) reduces the performance of all models except Resnets, but the decline is smaller than when all features are kept.
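The effect of a rotation on tabular features can be seen in a small NumPy sketch. It draws a random orthogonal matrix through a QR decomposition (which may include a reflection as well as a rotation) and applies it to data in which only one column is informative. The function name `random_rotation` and the toy data are assumptions made here, not taken from the paper.

```python
import numpy as np

def random_rotation(n_features, rng):
    """Draw a random orthogonal matrix via QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((n_features, n_features)))
    # Fix column signs so the draw does not depend on the QR sign convention.
    return q * np.sign(np.diag(r))

rng = np.random.default_rng(0)
n_samples, n_features = 200, 5
X = rng.standard_normal((n_samples, n_features))
y = (X[:, 0] > 0).astype(int)  # only column 0 is informative

R = random_rotation(n_features, rng)
X_rot = X @ R

# Geometry is preserved: pairwise distances are unchanged by the rotation.
d_before = np.linalg.norm(X[:5, None] - X[None, :5], axis=-1)
d_after = np.linalg.norm(X_rot[:5, None] - X_rot[None, :5], axis=-1)
print(np.allclose(d_before, d_after))

# The signal, once held in column 0 alone, is now mixed into the rotated columns.
corr_before = [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(n_features)]
corr_after = [abs(np.corrcoef(X_rot[:, j], y)[0, 1]) for j in range(n_features)]
print(np.round(corr_before, 2), np.round(corr_after, 2))
```

A learner that only sees the geometry of the data cannot tell `X` from `X_rot`, while a tree that splits on one column at a time loses the single clean split it had on column 0.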
