Technology peripherals

Technology peripherals

AI

AI

Ten Questions about Artificial Intelligence: As AI becomes more and more complex, where is the future?

Ten Questions about Artificial Intelligence: As AI becomes more and more complex, where is the future?

Ten Questions about Artificial Intelligence: As AI becomes more and more complex, where is the future?

In recent years, artificial intelligence has experienced explosions, development, and recently a gradual decline in popularity. It seems that artificial intelligence has become a game that can only be played by big manufacturers.

The reason is that the "threshold" for artificial intelligence is getting higher and higher.

Not long ago, Jeff Dean, the representative figure of Google AI, published a new work, but it caused quite a stir in the industry. The reason is not that the work itself is great. This study only improved by 0.03% compared to the latest results, but it cost more than $57,000 worth of TPU computing power, which is a big deal.

Many people say that current AI research has become a representation of computing power and resources, and ordinary scholars can no longer study.

There are still many people who have such doubts: What changes has artificial intelligence brought to us? What else can it do besides playing Go, and what will its future hold?

With these questions, we had an in-depth communication with Dr. Feng Ji. He is the executive director of the Nanjing AI Research Institute of Sinovation Ventures and the founder of Bei Yang Quantitative. He has many years of research experience in the field of AI. Through this conversation, we have a new understanding of the future development and implementation of AI.

1 artificial intelligence innovation, encounter a ceiling?

Google has indeed received a lot of attention on this issue recently. I think there are three issues worth thinking about:

First, big manufacturers have begun to gradually Moving towards "violent aesthetics", means using "ultra-large-scale data" and "ultra-large-scale computing power" to violently explore the ceiling of deep neural networks. However, where are the boundaries and limits of this approach?

Second, from an academic and scientific research perspective, is this method the only way out for AI? In fact, there is already a lot of research exploring other technical routes, such as how to transform from perceptual intelligence to cognitive intelligence, how to use a relatively small amount of data to solve problems encountered by artificial intelligence, etc. .

#Third, is such a large computing power really needed for practical applications in the industry? There are a large number of tasks in the industry that are related to non-speech, image, and text, which is also forcing the academic community to develop some more efficient algorithms.

2 Artificial intelligence algorithm, only deep neural network?

Before the 1990s, the representative technology of "artificial intelligence" was still based on "symbolism", that is, based on logical reasoning, technologies such as Planning and Searching.

After 2010, an important change in artificial intelligence ushered in, which was to use neural network technology to better represent these perceptual tasks. However, there are still a large number of "holy grail" problems of artificial intelligence that have not been solved, such as how to do logical reasoning, how to do common sense, how to better model memory, etc.

In order to solve these problems, is it enough to use deep neural networks? This may be the next important direction that academia and industry are more concerned about.

3 The future of artificial intelligence: perception vs cognition?

The so-called "perceptual artificial intelligence" is actually a representative example of the successful implementation of artificial intelligence in recent years, such as image recognition, speech-to-text, and some text generation tasks.

But more importantly, how to shift from perceptual tasks to cognitive tasks, especially how to use artificial intelligence to achieve logical reasoning and common sense , thereby truly realizing general artificial intelligence?

In response to this problem, as far as I know, there are three main technical routes in academia.

First, still follow the path of neural networks and try to solve the problem by constantly piling up data and computing power.

Second, try to introduce the technology of symbolism, that is, the combination of connectionism and symbolism.

Third, continue to improve traditional logical reasoning techniques, and this route is also the most difficult.

4 Data: How to extract oil in the digital age?

Data has become increasingly important to artificial intelligence engineering. The industry has proposed a new concept called the "data-centered" development model. In contrast, it was previously called "model-centered".

Traditionally, engineers spend more time on how to build a model and how to adjust parameters to make the system perform better. But nowadays, 80% of everyone’s attention is on how to make the data set better, how to make the training set better, how to make the training set more balanced, and then let the model be trained on a good data set. and get better results.

As our demand for data privacy gradually grows, some of the negative effects and non-technical requirements brought by data are also increasing. For example, when several institutions do joint modeling, data cannot be shared among the institutions to protect data privacy. So technologies like federated learning are designed to achieve joint modeling while protecting data privacy.

Now everyone has gradually realized that the difference between each organization in specific industrial development is their data. Now that there is a very convenient software open source framework and a very efficient hardware implementation, engineers have turned to focus on data - This is a Paradigm Shift, that is, a paradigm-level shift.

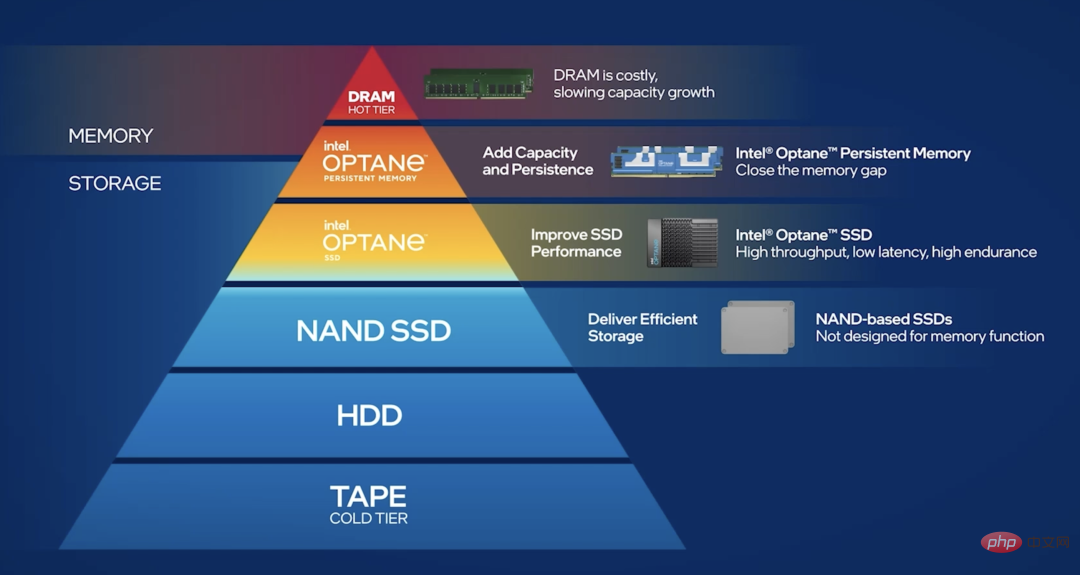

Beyang Quantitative, which I incubated myself, is a hedge fund with AI technology as its core. Within the company, the amount of data that needs to be stored every day is about 25-30TB. So we encounter the "memory wall" problem.

In order to cope with the pressure brought by massive data on memory, we divide the data into cold data, warm data and hot data. "Cold data" means that the frequency of data access is not very high and it can be dropped into the database. "Hot data" means that we have to do a lot of reading and writing tasks, and the data is generally scattered, and the amount of reading and writing each time is very large. So how to store hot data in a distributed manner?

Compared with pure SSD solutions, there are now better solutions, such as Optane persistent memory: it is between memory and SSD In this way, hot data can be stored in a distributed manner, which can alleviate the "memory wall" problem to a certain extent.

5Will "AI-native" IT infrastructure emerge?

There is now a very popular concept called "cloud native", which promotes the reconstruction of cloud computing infrastructure. And "AI-native" for artificial intelligence has actually happened. Especially in the past 10 years, computer hardware innovation has actually developed around artificial intelligence applications.

For example, our current demand for trusted computing in the cloud is increasing. For example, the calculation process of an AI model is a company's core intellectual property. If it is placed on the cloud or a public platform, there will naturally be concerns about the risk of the calculation process being stolen.

In this case, is there any hardware based solution? The answer is yes. For example, we are using the SGX privacy sandbox on Intel chips, which can protect our calculations in hardware. This is actually a very important basis for cross-organizational cooperation.

This is a very typical example, that is, starting from demand, pushing chip or hardware manufacturers to provide corresponding solutions.

6 Artificial intelligence hardware is equal to GPU?

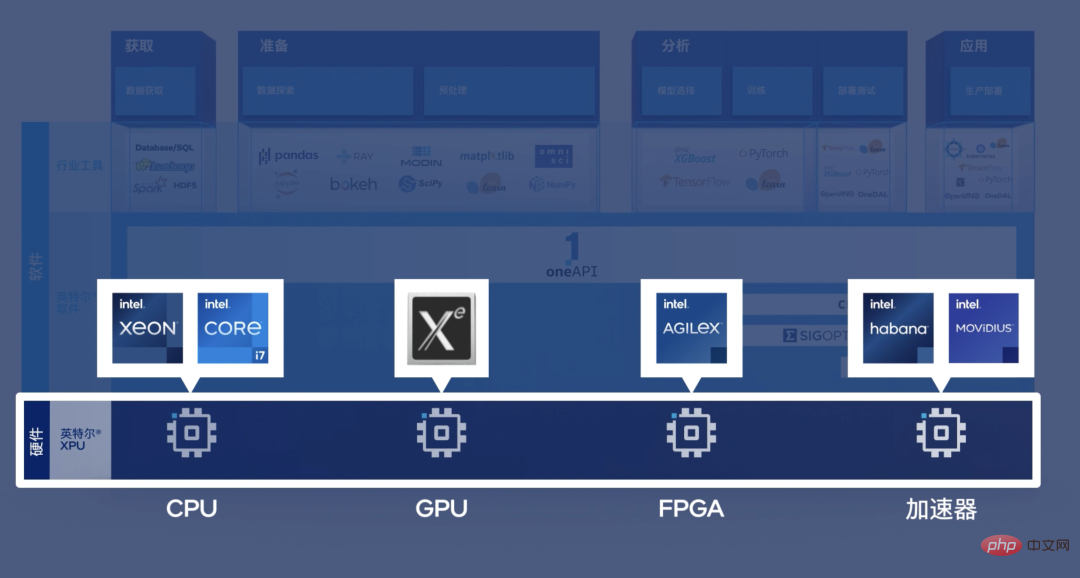

This view is indeed one-sided. Take the daily work of BeiYang Quantitative as an example. When we are doing quantitative trading, if we copy the data from the CPU to the GPU and then copy it back, it will be too late for many quantitative trading tasks. In other words, we need to have a very high-performance, CPU version of the implementation of the artificial intelligence model.

For another example, we have many tasks that require direct analysis and processing of data on the network card, and the network card usually has an FPGA chip. If the data it processes is to be transferred to the GPU It's even too late to get up. For this kind of low-latency scenario that requires the help of artificial intelligence technology, we need a heterogeneous architecture.

In other words, whether it is FPGA, ASIC, CPU, or GPU, they have different uses in different scenarios. .

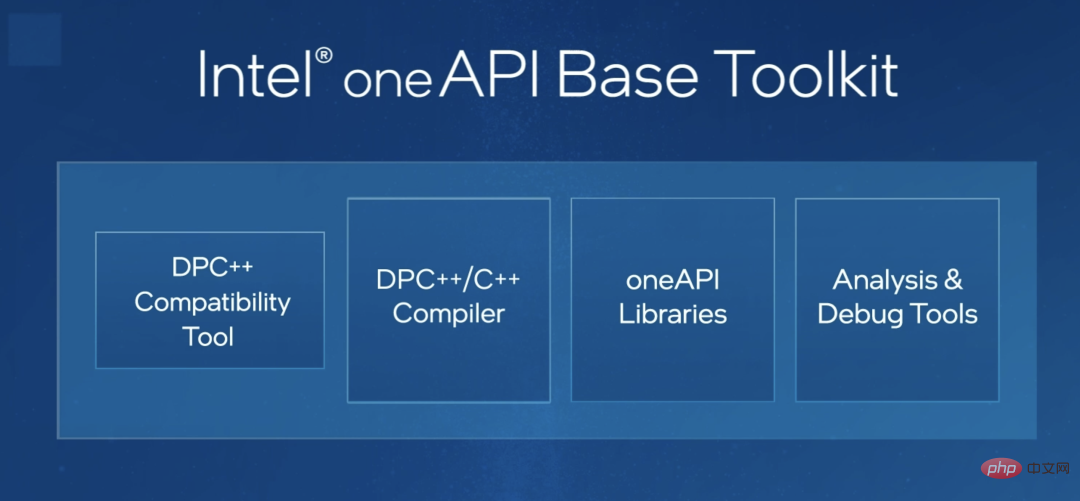

Regarding programming for heterogeneous platforms, I see that the industry has already made some attempts. For example, Intel's oneAPI, I think it is a very important tool. In other words, oneAPI allows the same set of codes to automatically adapt to CPU, FPGA or other types of chips. This will greatly reduce the programming difficulty for engineers and allow them to focus on algorithm innovation.

I think this is very important to promote heterogeneous applications.

7 What are the other directions for the development of artificial intelligence in the future?

I think a better end-to-end solution may be needed. Now we have actually upgraded from "Software 1.0" to the "Software 2.0" era. In other words, has changed from the traditional rule-driven construction of complex software engineering to a data-driven software engineering construction method.

Before, we had to rely on our high ingenuity to write a series of exquisite systems to make the entire program run. This is similar to a mechanical watch. The best programmers focus on building the operation of the "gears" and how to make this "watch" run.

Now, if I don’t know how to determine this set of operating rules, then just give it to a large amount of data or a machine learning algorithm. This algorithm will generate a new Algorithm, and this new algorithm is what we want to get. This approach is a bit like building a robot that builds robots.

In the Software 2.0 era, the development paradigm of the entire software engineering will undergo a big change. We very much hope to get a set of end-to-end solutions. The core is how to implement it more conveniently. "Data-centric" software engineering development.

8 How will artificial intelligence be implemented in the future?

I think there are probably two aspects. First, from an industrial perspective, we still have to start from first principles, that is, based on our own needs and taking into account many non-technical factors. For example, I saw a company that wanted to develop a face recognition system for community security, but each entrance and exit required four very expensive GPUs. This is a typical example of not starting from the needs and costs.

Second, academic research does not necessarily follow the trend. As we said at the beginning, there is no need to compete with each other regarding the size of the models: if you have one with hundreds of billions, I will build one with trillions, and if you have one with trillions, I will build one. Ten trillion.

In fact, there are a large number of tasks that require small-scale parameters, or only a small number of samples can be provided due to cost and other constraints. Under such conditions, how to innovate and make breakthroughs? This is a responsibility that the academic community should take the initiative to shoulder.

9 Is artificial intelligence entrepreneurship still a hot topic?

Let’s think about it. In the late 1990s, it cost 20,000 to 30,000 yuan to build a website, because there were very few people with network programming skills at that time. But today, probably any high school student can build a website with just one click of the mouse.

#In other words, network knowledge is already in every ordinary programmer's tool kit.

#In fact, the same is true for artificial intelligence technology. Around 2015, there were probably no more than 1,000 people in the world who could build a deep learning framework and run it on a GPU. But now that it has experienced exponential growth, many people know how to do it. We have reason to believe that in about five years, any programmer will have richer artificial intelligence solutions in his toolkit, and the threshold for its implementation will definitely be continuously lowered. Only in this way can artificial intelligence technology be more commonly used in every company.

# Therefore, AI Labs in large factories will inevitably disappear. Just like around 2000, many companies have an Internet Lab, which is a laboratory dedicated to all network-related matters of the company, and this laboratory provides technical output to other business departments. This is because there are very few people who know this technology, so they have to do this.

The same is true for AI Lab. When the threshold for the implementation of AI technology gradually lowers, and a large number of people in business departments also possess similar technologies, then this kind of AI Lab will inevitably disappear. I think this is a temporary product in the process of technological development, which is a good thing. When big manufacturers don’t have AI Labs, that’s probably when artificial intelligence really blossoms.

10 How can artificial intelligence benefit the public?

First, we also need the blessing of Moore’s Law. There are still a large number of tasks that require a lot of computing power. We must continue to iterate the hardware and update the algorithm. Only when things that need to be run on a cluster can be run on a mobile phone can AI be implemented in large numbers.

Second, the focus of artificial intelligence innovation must shift from Internet business to some traditional industries. Before, everyone focused on how to use artificial intelligence to create better visual solutions, or better recommendation systems, or better P-picture software. But in the real economy, there are actually a large number of departments and businesses that generate data. When these real economic data can be better informed, the value they bring may far exceed that of the current virtual economy.

The above is the detailed content of Ten Questions about Artificial Intelligence: As AI becomes more and more complex, where is the future?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

The CentOS shutdown command is shutdown, and the syntax is shutdown [Options] Time [Information]. Options include: -h Stop the system immediately; -P Turn off the power after shutdown; -r restart; -t Waiting time. Times can be specified as immediate (now), minutes ( minutes), or a specific time (hh:mm). Added information can be displayed in system messages.

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

Complete Guide to Checking HDFS Configuration in CentOS Systems This article will guide you how to effectively check the configuration and running status of HDFS on CentOS systems. The following steps will help you fully understand the setup and operation of HDFS. Verify Hadoop environment variable: First, make sure the Hadoop environment variable is set correctly. In the terminal, execute the following command to verify that Hadoop is installed and configured correctly: hadoopversion Check HDFS configuration file: The core configuration file of HDFS is located in the /etc/hadoop/conf/ directory, where core-site.xml and hdfs-site.xml are crucial. use

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

Backup and Recovery Policy of GitLab under CentOS System In order to ensure data security and recoverability, GitLab on CentOS provides a variety of backup methods. This article will introduce several common backup methods, configuration parameters and recovery processes in detail to help you establish a complete GitLab backup and recovery strategy. 1. Manual backup Use the gitlab-rakegitlab:backup:create command to execute manual backup. This command backs up key information such as GitLab repository, database, users, user groups, keys, and permissions. The default backup file is stored in the /var/opt/gitlab/backups directory. You can modify /etc/gitlab

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

Enable PyTorch GPU acceleration on CentOS system requires the installation of CUDA, cuDNN and GPU versions of PyTorch. The following steps will guide you through the process: CUDA and cuDNN installation determine CUDA version compatibility: Use the nvidia-smi command to view the CUDA version supported by your NVIDIA graphics card. For example, your MX450 graphics card may support CUDA11.1 or higher. Download and install CUDAToolkit: Visit the official website of NVIDIACUDAToolkit and download and install the corresponding version according to the highest CUDA version supported by your graphics card. Install cuDNN library:

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Installing MySQL on CentOS involves the following steps: Adding the appropriate MySQL yum source. Execute the yum install mysql-server command to install the MySQL server. Use the mysql_secure_installation command to make security settings, such as setting the root user password. Customize the MySQL configuration file as needed. Tune MySQL parameters and optimize databases for performance.

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Docker uses Linux kernel features to provide an efficient and isolated application running environment. Its working principle is as follows: 1. The mirror is used as a read-only template, which contains everything you need to run the application; 2. The Union File System (UnionFS) stacks multiple file systems, only storing the differences, saving space and speeding up; 3. The daemon manages the mirrors and containers, and the client uses them for interaction; 4. Namespaces and cgroups implement container isolation and resource limitations; 5. Multiple network modes support container interconnection. Only by understanding these core concepts can you better utilize Docker.

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

A complete guide to viewing GitLab logs under CentOS system This article will guide you how to view various GitLab logs in CentOS system, including main logs, exception logs, and other related logs. Please note that the log file path may vary depending on the GitLab version and installation method. If the following path does not exist, please check the GitLab installation directory and configuration files. 1. View the main GitLab log Use the following command to view the main log file of the GitLabRails application: Command: sudocat/var/log/gitlab/gitlab-rails/production.log This command will display product

How to choose a GitLab database in CentOS

Apr 14, 2025 pm 05:39 PM

How to choose a GitLab database in CentOS

Apr 14, 2025 pm 05:39 PM

When installing and configuring GitLab on a CentOS system, the choice of database is crucial. GitLab is compatible with multiple databases, but PostgreSQL and MySQL (or MariaDB) are most commonly used. This article analyzes database selection factors and provides detailed installation and configuration steps. Database Selection Guide When choosing a database, you need to consider the following factors: PostgreSQL: GitLab's default database is powerful, has high scalability, supports complex queries and transaction processing, and is suitable for large application scenarios. MySQL/MariaDB: a popular relational database widely used in Web applications, with stable and reliable performance. MongoDB:NoSQL database, specializes in