Using AI to find loved ones separated after the Holocaust! Google engineers develop facial recognition program that can identify more than 700,000 old World War II photos

Has AI facial recognition found a new line of work?

This time, the task is identifying faces in old photos from the World War II era.

Daniel Patt, a software engineer at Google, recently developed an AI face recognition tool called N2N (From Numbers to Names) that can identify faces in photos from pre-war Europe and the Holocaust and link them to people living today.

Using AI to find long-lost relatives

In 2016, Patt came up with the idea while visiting the POLIN Museum of the History of Polish Jews in Warsaw.

Could the unfamiliar faces in the photos be related to him by blood?

Three of his grandparents were Holocaust survivors from Poland, and he wanted to help his grandmother find photos of family members killed by the Nazis.

During World War II, large numbers of Polish Jews were imprisoned in different concentration camps, and many of them went missing.

From a single yellowed photo, it is hard to identify the face in it, let alone trace a lost relative.

So when he returned home, he set about turning the idea into reality.

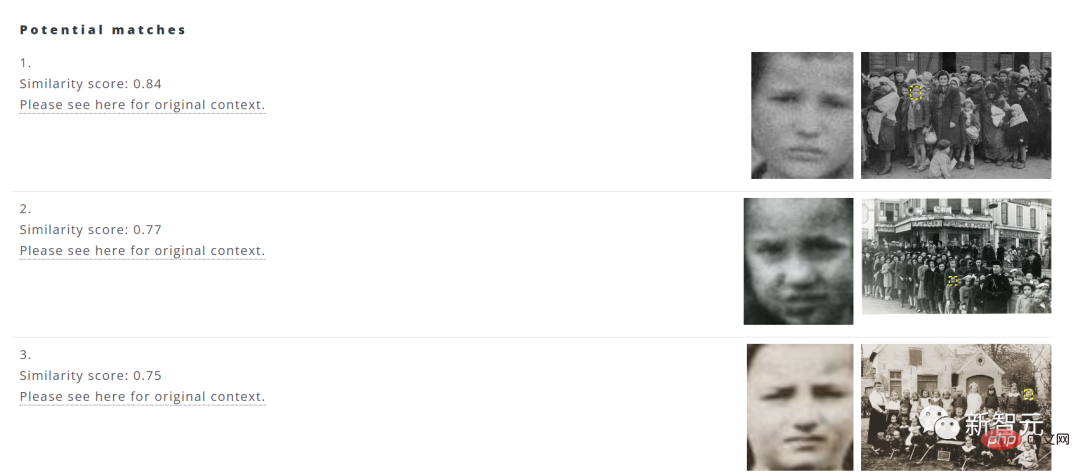

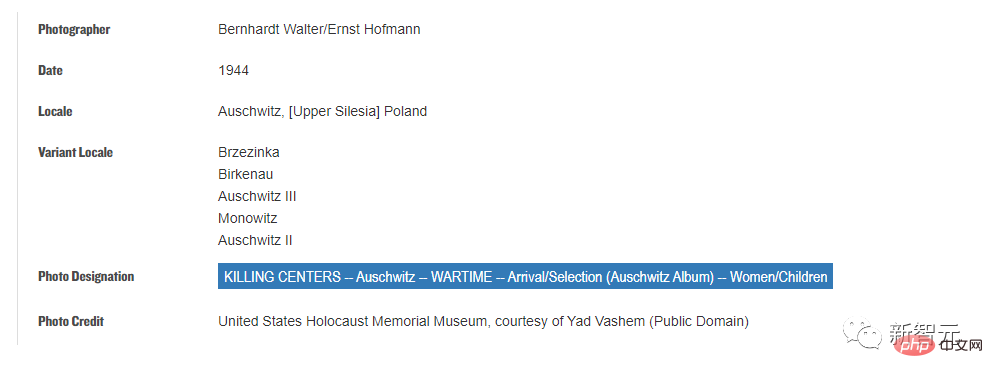

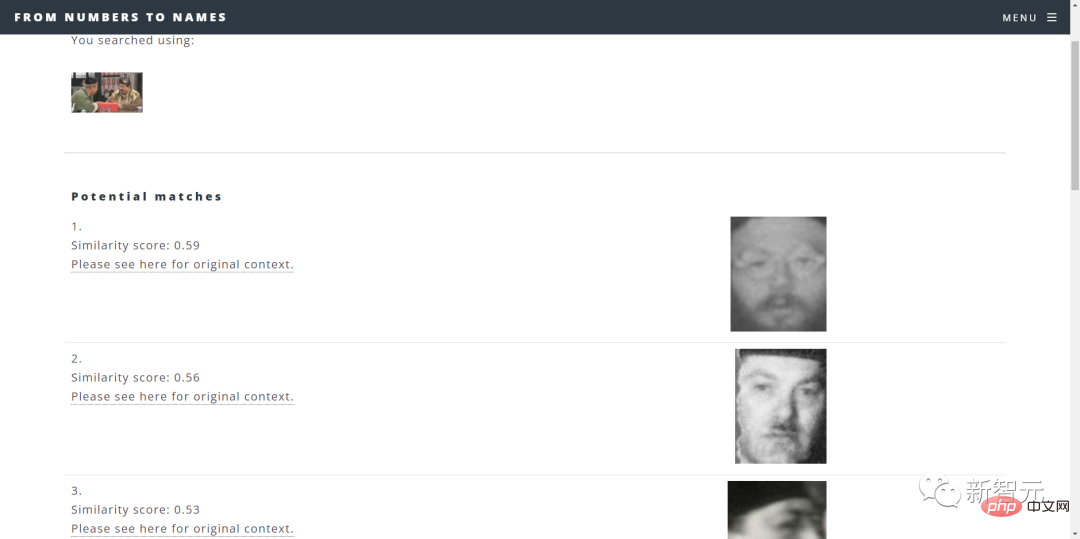

The idea behind the software is to collect face images in a database and use artificial intelligence algorithms to return the ten candidates most similar to a given face.

Most of the image data comes from the United States Holocaust Memorial Museum, with more than a million images drawn from databases across the country.

Users select an image file on their computer and click upload, and the system automatically returns the ten closest matches.

Users can also click a result's source link to view the year, location, collection, and other information about the picture.

One drawback is that if you upload a photo of a present-day person, the search results can be wildly off.

In short, the system still needs improvement.

Patt is also working with other software engineers and data scientists at Google to improve the scope and accuracy of searches.

Because facial recognition systems carry a risk of privacy leaks, Patt said, "We do not make any evaluation of identity. We are only responsible for presenting the results using similarity scores and letting users make their own judgments."
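The search flow described above (score an uploaded face against the archive, return the ten closest candidates with their similarity scores) can be sketched roughly as follows. This is a minimal illustration, not N2N's actual code: the article does not say which similarity measure or data layout the tool uses, so cosine similarity over embedding vectors, the `top_matches` function, and the toy `archive` dictionary are all assumptions made for the example.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_matches(query, archive, k=10):
    """Return up to k (score, photo_id) pairs, most similar first.

    `archive` maps a photo ID to its face embedding (hypothetical layout).
    The function only ranks candidates by score; it makes no decision
    about whether any candidate is the same person.
    """
    scored = ((cosine_similarity(query, embedding), photo_id)
              for photo_id, embedding in archive.items())
    return heapq.nlargest(k, scored)


# Toy 3-dimensional "embeddings". Real face embeddings would come from a
# trained model and have many more dimensions.
archive = {
    "photo_a": [1.0, 0.0, 0.0],
    "photo_b": [0.9, 0.1, 0.0],
    "photo_c": [0.0, 1.0, 0.0],
}
query = [1.0, 0.05, 0.0]

results = top_matches(query, archive, k=2)
for score, photo_id in results:
    print(f"{photo_id}: {score:.3f}")
```

Returning the scores alongside the photo IDs, rather than a yes/no answer, mirrors the approach in Patt's quote: the user sees how close each candidate is and judges for themselves.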

Development of AI facial recognition technology

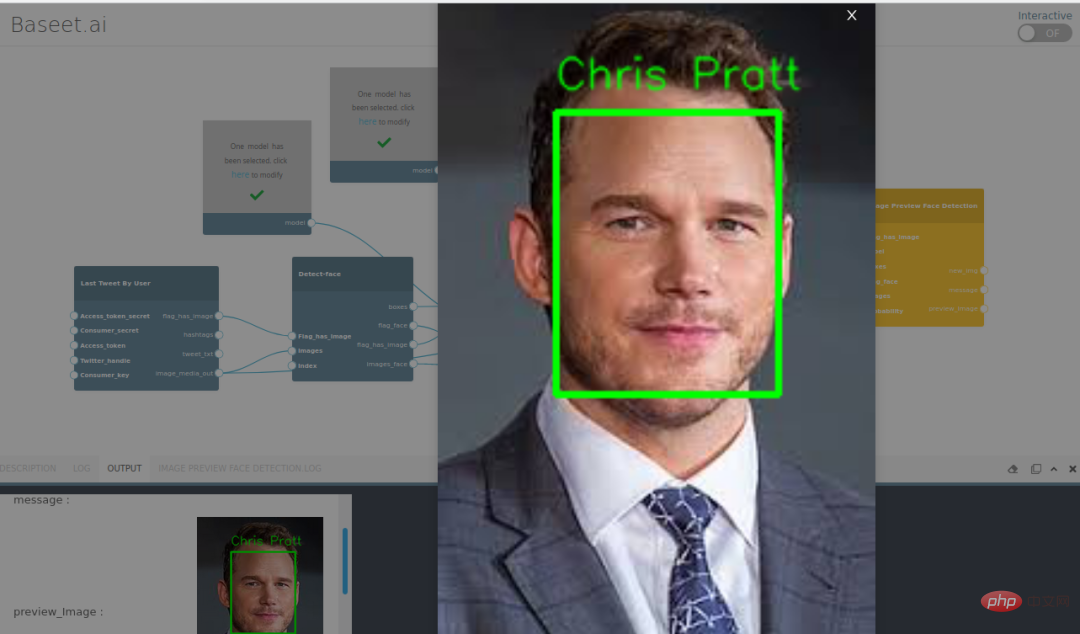

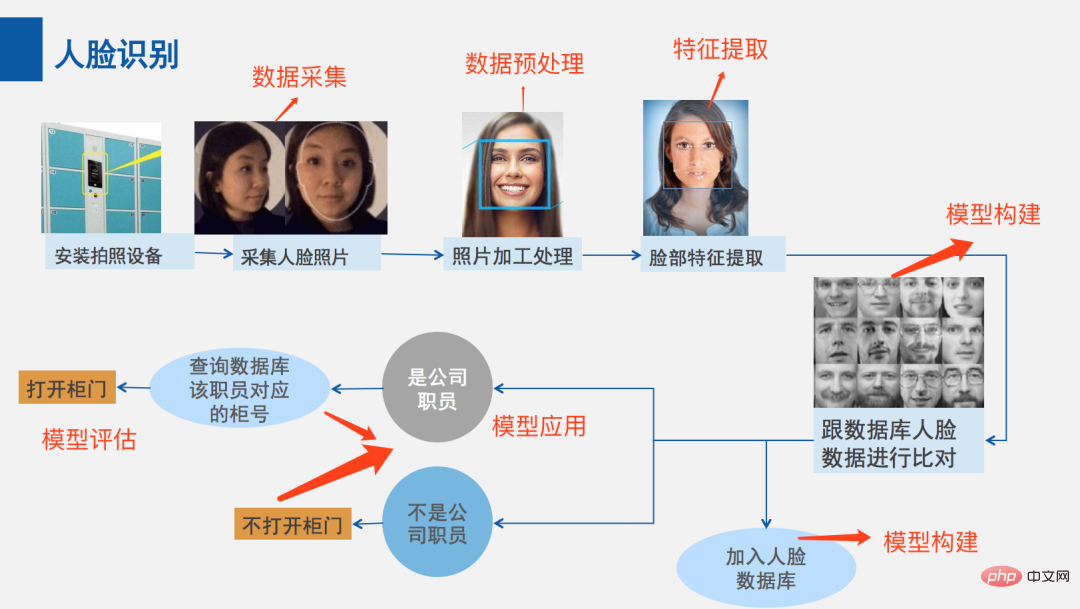

So how does this technology recognize faces?

Face recognition first has to answer a more basic question: how to determine whether the detected image is a face at all.

In 2001, computer vision researchers Paul Viola and Michael Jones proposed a framework to detect faces in real time with high accuracy.

The framework trains a model to learn "what is a face and what is not a face".

After training, the model extracts specific features and stores them in a file, so that features in new images can be compared with the stored features at various stages.

To help ensure accuracy, the algorithm needs to be trained on "a large data set containing hundreds of thousands of positive and negative images," which improves the algorithm's ability to determine whether a face is in an image and where it is.

If the image under study passes each stage of feature comparison, a face has been detected and the operation can proceed.
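The stage-by-stage comparison described above can be sketched as a small cascade. The published Viola-Jones method computes rectangular "Haar-like" features from an integral image (a summed-area table), which the article does not go into; the sketch below uses both, but simplifies heavily. Each stage here is a single feature with a hand-picked threshold, whereas real stages combine many features whose thresholds are learned from training data. The function names, the toy windows, and the threshold values are assumptions for illustration only.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column.

    ii[y][x] holds the sum of all pixels above and to the left of (x, y).
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Haar-like feature: bottom-half sum minus top-half sum (h even)."""
    half = h // 2
    return rect_sum(ii, x, y + half, w, half) - rect_sum(ii, x, y, w, half)


def passes_cascade(ii, stages):
    """Check stages in order and reject at the first one that fails."""
    for x, y, w, h, threshold in stages:
        if two_rect_feature(ii, x, y, w, h) < threshold:
            return False  # early rejection: later stages are never run
    return True


# Toy 4x4 windows: a dark band above a bright band (loosely like eyes
# above cheeks) versus a flat gray patch.
face_like = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
flat = [[100] * 4 for _ in range(4)]

# Hypothetical stages: (x, y, width, height, threshold).
stages = [(0, 0, 4, 4, 500), (1, 0, 2, 4, 300)]

print(passes_cascade(integral_image(face_like), stages))
print(passes_cascade(integral_image(flat), stages))
```

Rejecting a window at the first failed stage is what keeps this kind of detector fast: most windows in an image are not faces and are discarded after very little work.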

Although the Viola-Jones framework is highly accurate for face detection in real-time applications, it has certain limitations.

For example, the framework may not work if a face is covered by a mask or is not oriented correctly.

To address these shortcomings and improve face detection, researchers developed additional algorithms, such as the region-based convolutional neural network (R-CNN) and the single shot detector (SSD).

A convolutional neural network (CNN) is an artificial neural network used for image recognition and processing, specifically designed to process pixel data.
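To make "designed to process pixel data" concrete, here is a minimal sketch of the operation a convolutional layer is built on: sliding a small kernel across a grid of pixels and taking a weighted sum at each position. The `convolve2d` function, the toy image, and the hand-written edge kernel are assumptions for illustration; in a trained CNN the kernel values are learned rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding.

    This is the "valid" cross-correlation commonly used in CNN layers:
    each output value is the weighted sum of the pixels under the kernel.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Toy grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is largest where the kernel's pattern appears in the image, which is how stacked convolutional layers build up from edges to more complex shapes.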

R-CNN generates region proposals and uses a CNN to localize and classify the objects in them.

Region-proposal methods such as R-CNN need two passes, one to generate region proposals and another to detect the object in each proposal, whereas SSD needs only one pass to detect multiple objects in an image. As a result, SSD is significantly faster than R-CNN.

In recent years, face recognition driven by deep learning models has significantly outperformed traditional computer vision methods.

Early face recognition mostly used traditional machine learning algorithms, and research focused on how to extract more discriminative features and how to align faces more effectively.

As research deepened, the performance gains of traditional machine learning algorithms on two-dimensional face images gradually hit a bottleneck.

Researchers began to study face recognition in video, or to combine it with three-dimensional models to improve performance further, while a few scholars began to study three-dimensional face recognition itself.

On LFW (Labeled Faces in the Wild), the best-known public dataset, deep learning algorithms broke through that bottleneck and for the first time raised the recognition rate on two-dimensional images above 97%.

The approach uses the "high-dimensional model established by the CNN network" to extract effective identification features directly from the input face image, and then computes the cosine distance between features to recognize faces.
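For reference, the cosine distance mentioned above has a standard definition. Writing the feature vectors extracted from two face images as $\mathbf{a}$ and $\mathbf{b}$ (symbols introduced here for illustration, not taken from the article):

$$
d_{\cos}(\mathbf{a}, \mathbf{b}) = 1 - \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}
$$

A distance near 0 means the two vectors point in almost the same direction, which is read as the two faces being similar. How small the distance must be to count as a match is a threshold that each system chooses for itself.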

Face detection has evolved from basic computer vision techniques through advances in machine learning (ML) to increasingly complex artificial neural networks (ANN) and related techniques, and performance has kept improving as a result.

It now plays an important role as the first step in many critical applications, including facial tracking, facial analysis and facial recognition.

China also suffered the trauma of World War II, and many of the people in photos from that period can no longer be identified.

Many of those who lived through the war have relatives and friends whose whereabouts remain unknown.

The development of this technology may help people bring those dust-covered years back to light and offer some comfort to those who lived through them.

Reference: https://www.timesofisrael.com/google-engineer-identifies-anonymous-faces-in-wwii-photos-with-ai-facial-recognition/
