Yesterday, the hottest topic in the machine learning community was a Reddit post in which a researcher questioned a paper co-authored by Google AI lead Jeff Dean. The paper, "An Evolutionary Approach to Dynamic Introduction of Tasks in Large-scale Multitask Learning Systems," was posted to the preprint server arXiv on Thursday.

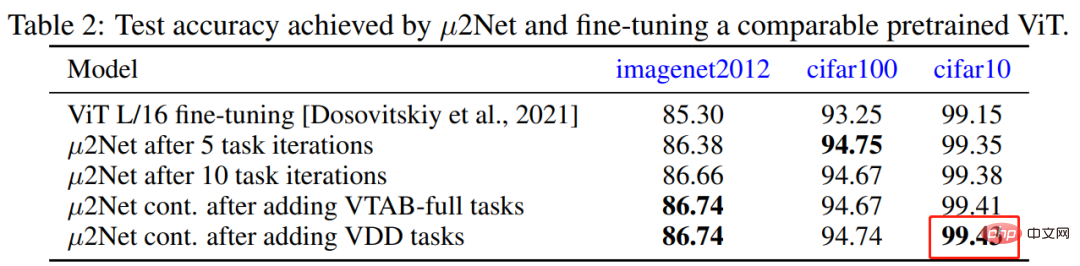

In the paper, Jeff Dean and his co-author propose an evolutionary algorithm that can generate large-scale multi-task models and also supports adding new tasks dynamically and continuously. The resulting multi-task model is sparsely activated and uses task-based routing. The method achieves competitive results on 69 image classification tasks, including a new state-of-the-art accuracy of 99.43% on CIFAR-10 for a model trained only on public data.

It is this new CIFAR-10 SOTA that was questioned; the previous SOTA was 99.40%. "Producing this result required a total of 17,810 TPU core hours," she said. "If you don't work at Google, you would have to pay the on-demand price of $3.22/hour, so training the model costs $57,348."
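The poster's estimate multiplies the total core hours by a single hourly rate. Below is a minimal Python sketch of that arithmetic, using only the numbers quoted above. The function name `on_demand_cost` is hypothetical.

```python
def on_demand_cost(core_hours: float, price_per_hour: float) -> float:
    """Cost of a run if every core hour is billed at one flat rate."""
    return core_hours * price_per_hour

# Figures quoted in the Reddit post: 17,810 TPU core hours at $3.22/hour.
cost = on_demand_cost(17_810, 3.22)
print(f"${cost:,.0f}")  # prints $57,348

# The CIFAR-10 gain being questioned, in percentage points of accuracy.
gain = round(99.43 - 99.40, 2)
print(gain)  # prints 0.03
```

The flat-rate assumption is what Jeff Dean later disputes: it applies one price to every core hour, whatever the hardware generation.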

She then asked pointedly: "Jeff Dean spent enough money to support a family of four for five years, achieved a 0.03 percentage-point improvement on CIFAR-10, and set a new SOTA. Was it all worth it?"

Many people in the field echoed this question. Some researchers were even pessimistic: "I have almost lost interest in deep learning. As a practitioner in a small lab, it is basically impossible to compete with the tech giants on compute budget. Even if you have a good theoretical idea, biases in the mainstream environment may keep it from ever seeing the light of day. This creates an unfair playing field."

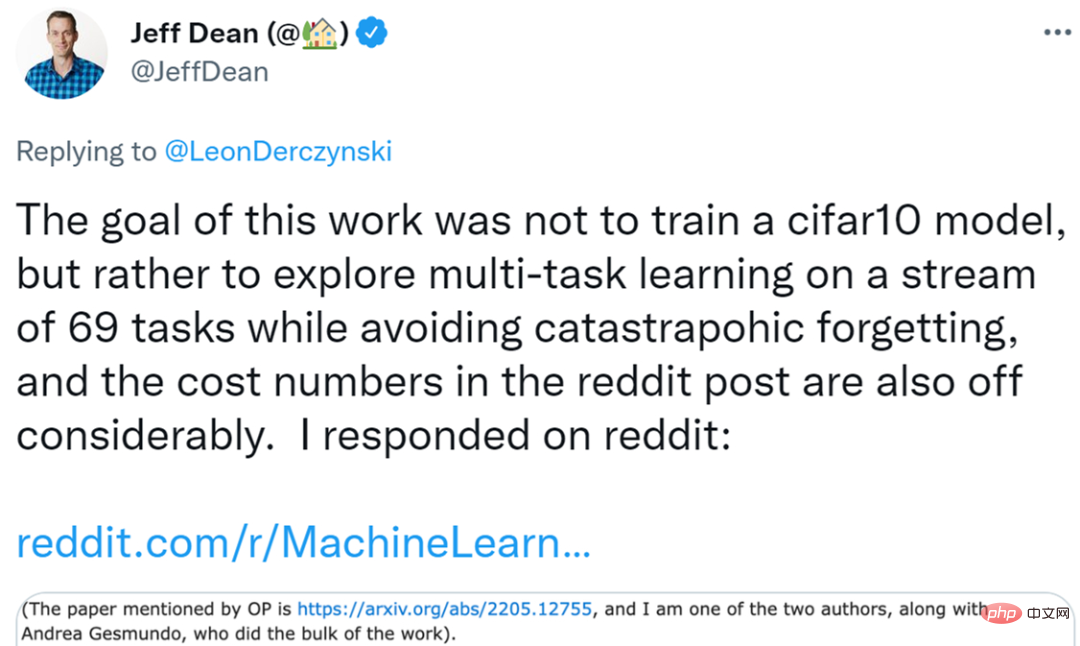

As the discussion heated up, Jeff Dean responded personally on Reddit. He said, "The goal of our research was not to obtain a higher-quality CIFAR-10 model, and there are also problems with how the original poster calculated the cost."

This paper was written by Andrea Gesmundo and me, and Andrea did most of the work on it.

Paper: https://arxiv.org/pdf/2205.12755.pdf

I want to stress that the goal of this research is not to get a high-quality CIFAR-10 model. Rather, it explores a setting in which new tasks are introduced dynamically into a running system. For each new task, the system obtains a high-quality model by reusing representations from the existing model and sparsely introducing new parameters. It does this while avoiding multi-task problems such as catastrophic forgetting and negative transfer.

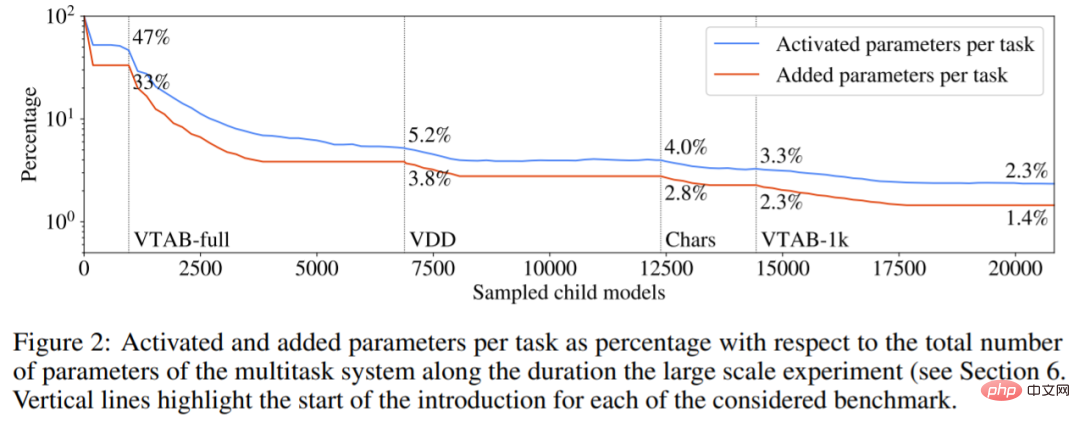

Our experiments show that we can dynamically introduce a stream of 69 distinct tasks drawn from several independent visual task benchmarks. The end result is a multi-task system that jointly produces high-quality solutions for all of these tasks. The resulting model is sparsely activated for any given task, and the system introduces fewer and fewer new parameters as new tasks arrive (see Figure 2 below). By the end of the task stream, the system introduced only 1.4% new parameters for the incremental tasks, and each task activates on average 2.3% of the model's total parameters. There is considerable representation sharing between tasks. The evolutionary process helps decide when sharing makes sense and when new trainable parameters should be introduced for a new task.
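The toy Python model below makes "sparsely activated" and "fraction of new parameters" concrete. Each task runs only the modules on its own route. Adding a task reuses some existing modules and inserts a few new ones.

This is not the muNet implementation; the module names, sizes, and helper functions are all hypothetical. In the paper, the evolutionary search decides what to reuse or add; here that choice is simply passed in by hand.

```python
from typing import Dict, List

# Hypothetical shared pool: module name -> parameter count.
modules: Dict[str, int] = {"stem": 1_000, "block1": 4_000, "block2": 4_000,
                           "head_t1": 500, "head_t2": 500}
# Hypothetical routing table: each task runs only the modules on its path.
routes: Dict[str, List[str]] = {
    "t1": ["stem", "block1", "head_t1"],
    "t2": ["stem", "block2", "head_t2"],
}

def activated_fraction(task: str) -> float:
    """Share of all parameters that a forward pass for `task` touches."""
    total = sum(modules.values())
    return sum(modules[m] for m in routes[task]) / total

def add_task(task: str, reused: List[str], new: Dict[str, int]) -> float:
    """Route a new task through existing modules plus freshly added ones.

    Reused modules are shared as-is. Returns the new parameters as a
    fraction of the model's total size after the addition.
    """
    if task in routes:
        raise ValueError(f"task {task!r} already exists")
    missing = [m for m in reused if m not in modules]
    if missing:
        raise KeyError(f"unknown modules: {missing}")
    clash = [m for m in new if m in modules]
    if clash:
        raise ValueError(f"module names already in use: {clash}")
    modules.update(new)
    routes[task] = list(reused) + list(new)
    return sum(new.values()) / sum(modules.values())

print(activated_fraction("t1"))  # 5,500 of 10,000 params -> 0.55
print(add_task("t3", reused=["stem", "block1"], new={"head_t3": 500}))  # 500 / 10,500
print(activated_fraction("t3"))  # 5,500 of 10,500 params
```

The pool here is tiny, so the percentages are far larger than the paper's 1.4% and 2.3%. The sketch only shows how the two quantities are measured.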

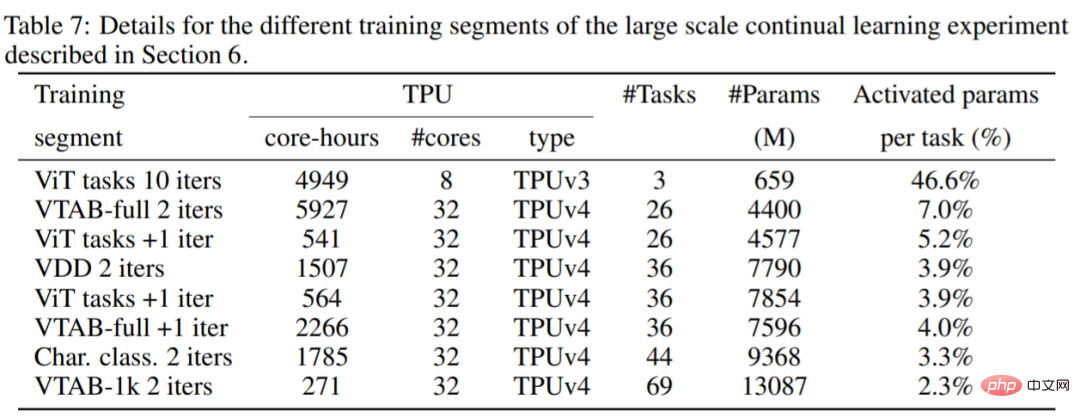

I also think the original poster calculated the cost incorrectly. The experiment trained a multi-task model to jointly solve 69 tasks, not a CIFAR-10 model. As you can see from Table 7 below, the compute used is a mix of TPUv3 and TPUv4 cores. These are priced differently, so you cannot simply multiply total core hours by a single price.

Unless you are in a particular hurry and need to train CIFAR-10 plus the 68 other tasks quickly, this kind of research can easily use preemptible pricing: $0.97/hour for TPUv4 and $0.60/hour for TPUv3. That is not the $3.22/hour on-demand pricing they say you would have to use. Under these assumptions, the public-cloud compute cost of the experiments in Table 7 is roughly $13,960 (12,861 TPUv4 chip-hours and 2,474.5 TPUv3 chip-hours at preemptible prices), or about $202 per task.
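Jeff Dean's estimate prices each TPU generation separately and then divides by the number of tasks. The Python sketch below reproduces that arithmetic from the preemptible rates and chip-hour totals he quotes. The dictionary layout and names are illustrative only and are not taken from the paper or its code.

```python
# Chip-hours and preemptible $/hour as quoted in Jeff Dean's reply.
usage = {
    "TPUv4": {"chip_hours": 12_861, "price": 0.97},
    "TPUv3": {"chip_hours": 2_474.5, "price": 0.60},
}
NUM_TASKS = 69  # CIFAR-10 plus 68 other tasks

def mixed_cost(usage: dict) -> float:
    """Sum cost per hardware type, since TPUv3 and TPUv4 are priced differently."""
    return sum(u["chip_hours"] * u["price"] for u in usage.values())

total = mixed_cost(usage)
per_task = total / NUM_TASKS
print(f"total ~ ${total:,.0f}, per task ~ ${per_task:,.0f}")
# prints: total ~ $13,960, per task ~ $202
```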

I think it is important to have sparsely activated models and to be able to add new tasks dynamically to an existing system. Such a system should share representations where appropriate and avoid catastrophic forgetting, and this line of research is at least worth exploring. The system also has the advantage that new tasks are incorporated automatically, without the system having to be specifically designed for them (this is what the evolutionary search process does). That seems a useful property for a continual learning system.

The paper's code is open source, so you can inspect it yourself.

Code: https://github.com/google-research/google-research/tree/master/muNet

The author of the original post replied to Jeff Dean

After seeing Jeff Dean's reply, the original poster said: To be clear, I think Jeff Dean's paper (an evolutionary model that generates a model expansion for each task) is really interesting. It reminds me of another paper whose title I can't remember. That paper added a new module to the overall architecture for each new task and used the hidden states of the other modules as part of the input to each layer. It did not update the weights of the existing components.
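The design the poster recalls can be sketched in plain Python. It has one new module per task, each layer also receives the previous-layer hidden states of the older modules, and existing weights stay untouched.

This is an illustrative reconstruction of that description only, not code from either paper. The class and function names, the ReLU activation, and the random initialization are assumptions, and no training loop is shown.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]

class Column:
    """One per-task module: a small stack of fully connected layers."""

    def __init__(self, in_dim: int, hidden: int, n_layers: int,
                 n_prev: int, rng: random.Random):
        self.frozen = False  # a training loop (not shown) would skip frozen columns
        self.weights: List[Matrix] = []
        for layer in range(n_layers):
            # Layer 0 sees the raw input; deeper layers see this column's previous
            # hidden state concatenated with those of all n_prev earlier columns.
            fan_in = in_dim if layer == 0 else hidden * (1 + n_prev)
            self.weights.append([[rng.gauss(0.0, 0.1) for _ in range(fan_in)]
                                 for _ in range(hidden)])

def add_task_column(columns: List[Column], in_dim: int, hidden: int,
                    n_layers: int, rng: random.Random) -> Column:
    """Freeze every existing column, then append a fresh one for the new task.

    Assumes all columns share the same hidden size and depth.
    """
    for col in columns:
        col.frozen = True  # existing components keep their weights
    new_col = Column(in_dim, hidden, n_layers, len(columns), rng)
    columns.append(new_col)
    return new_col

def forward(columns: List[Column], x: Vector) -> List[List[Vector]]:
    """Return the hidden states of every layer of every column."""
    states: List[List[Vector]] = []
    for col in columns:
        hs: List[Vector] = []
        for layer, w in enumerate(col.weights):
            if layer == 0:
                inp = list(x)
            else:
                inp = list(hs[layer - 1])
                for prev in states:  # lateral inputs from earlier columns
                    inp += prev[layer - 1]
            hs.append(relu(matvec(w, inp)))
        states.append(hs)
    return states

rng = random.Random(0)
columns: List[Column] = []
add_task_column(columns, in_dim=3, hidden=4, n_layers=2, rng=rng)  # task 1
add_task_column(columns, in_dim=3, hidden=4, n_layers=2, rng=rng)  # task 2
states = forward(columns, [1.0, 0.5, -0.2])
print([c.frozen for c in columns])  # [True, False]
```

Only the newest column would be trained. Older columns still contribute features through the lateral inputs, which is how reuse happens without forgetting.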

I also have an idea about building modules into the model for each task. Do you know how fawns can walk within minutes of being born? At that point a newborn fawn has essentially no "training data" from which to learn to perceive motion or model the world. Instead, it must rely on specialized, inherited brain structures that give it basic skills. Such structures would be very useful, in the sense that they would generalize quickly to new but related control tasks.

So this paper got me thinking about how to develop inheritable structures of this kind that can be reused to learn new tasks more efficiently.

Researchers in another lab may have the same idea but get much worse results because they cannot afford to move from their existing setup to a large cloud platform. And because the community is now overly focused on SOTA results, their work cannot get published. Even if the cost is "only" $202 per task, it takes many iterations to get things right.

So for those of us without access to a large compute budget, there are essentially two options. The first is to pray that Google publicly releases the existing model so we can fine-tune it for our needs, but that model may have learned biases or adversarial weaknesses that we cannot eliminate. The second is to do nothing and give up.

So my problem is not just with this study. If OpenAI wants to spend hundreds of billions of dollars (figuratively speaking) on GPT-4, more power to them. My problem is with a scientific and publishing culture that over-rewards flashiness, big numbers, and extravagance rather than helping people do better real work.

My favorite paper is "Representation Learning with Contrastive Predictive Coding" by van den Oord in 2019. It uses an unsupervised pre-training task, then supervised training on a small subset of the labels, to match the accuracy obtained when all the data is labeled. It also discusses this improvement from a data-efficiency perspective. I reproduced these results and used them in my own work, which saved me time and money. On the strength of this paper alone, I would happily become his PhD student.

By contrast, OpenAI's paper "Language Models are Few-Shot Learners," which proposed the much larger transformer model GPT-3, received nearly 4,000 citations and a NeurIPS 2020 Best Paper Award, and captured the attention of the entire media.
