What is AI? Perhaps you picture a neural network built from layer upon layer of neurons. And what is the art of painting? Is it Da Vinci's "Mona Lisa", Van Gogh's "The Starry Night" and "Sunflowers", or Johannes Vermeer's "Girl with a Pearl Earring"? When AI meets the art of painting, what sparks will fly?

In early 2021, OpenAI released DALL-E, a model that generates images from text descriptions. Its powerful cross-modal generation capabilities drew intense interest from enthusiasts in both the natural language processing and computer vision communities. In just over a year, multi-modal image generation techniques sprang up like mushrooms after rain, and many applications that use them for AI art creation appeared, such as the recently popular Disco Diffusion. These applications are now reaching artists and the general public, and many people have taken to calling them a real-life "Ma Liang's magic brush", after the Chinese folk tale of a boy whose paintings come to life.

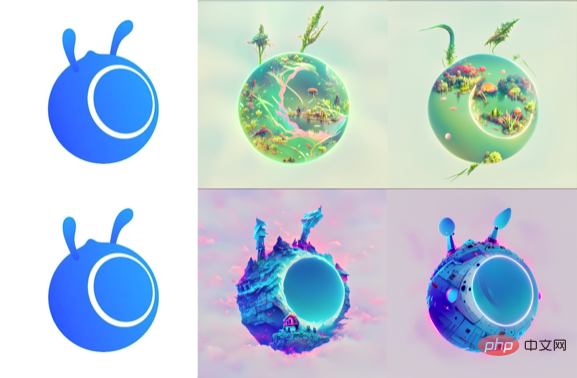

Starting from technical interests, this article introduces multi-modal image generation technology and classic work, and finally explores how to use multi-modal image generation to create magical AI painting art.  The AI painting artwork created by the author using Disco Diffusion

The AI painting artwork created by the author using Disco Diffusion

Multi-modal image generation aims to generate realistic images with natural textures, using information from other modalities, such as text or audio, as the guiding condition. Unlike traditional single-modal generation, which generates images from noise alone, multi-modal image generation has long been a very challenging task. The main problems to solve are:

(1) How to bridge the "semantic gap" and break down the inherent barriers between modalities?

(2) How to generate images that are logically coherent, diverse, and high-resolution?

Over the past two years, Transformer has been applied successfully in natural language processing (e.g., GPT), computer vision (e.g., ViT), and multi-modal pre-training (e.g., CLIP), while image generation techniques represented by VAE and GAN are gradually being overtaken by a rising star, the diffusion model. As a result, multi-modal image generation has developed explosively.

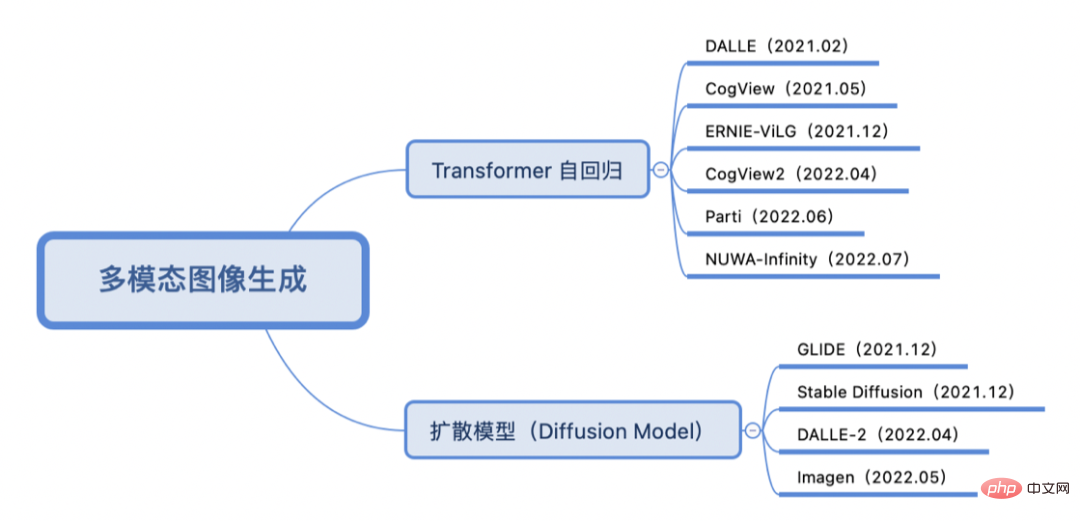

Depending on whether they are built on Transformer autoregression or on diffusion models, the key works in multi-modal image generation from the past two years can be classified as follows:

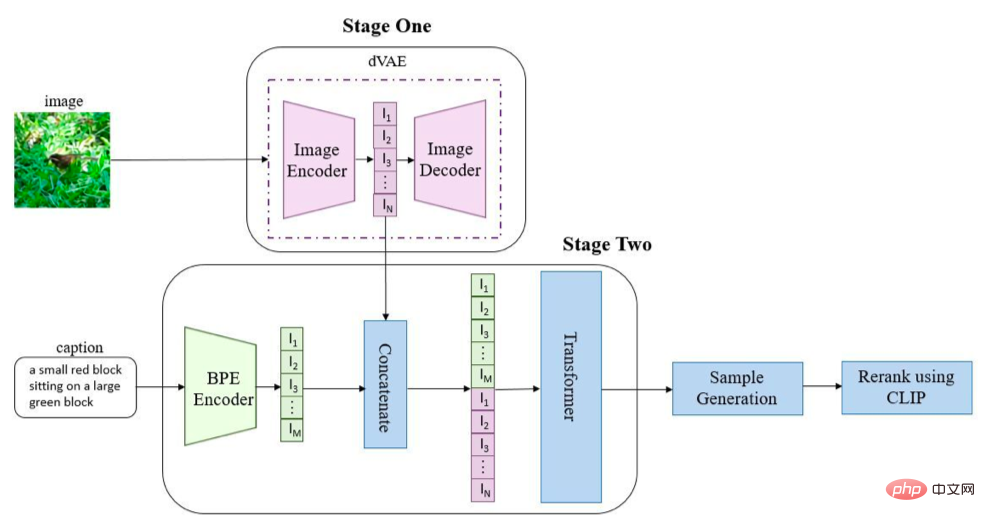

The Transformer autoregressive approach typically converts text and images into separate token sequences, uses a generative Transformer to predict the image sequence from the text sequence (and, optionally, an image sequence), and finally decodes the image sequence into the generated image with an image generation technique such as a VAE or GAN. Take DALL-E (OpenAI) [1] as an example:

Images and text are each converted into token sequences by their own encoders, concatenated, and fed into a GPT-3-like Transformer for autoregressive sequence generation. At inference time, a pre-trained CLIP model scores the similarity between the text and each generated image, and the candidates are ranked to select the final output. Like DALL-E, Tsinghua's CogView series [2, 3] and Baidu's ERNIE-ViLG [4] adopt a VQ-VAE + Transformer design; Google's Parti [5] replaces the image codec with ViT-VQGAN; and Microsoft's NUWA-Infinity [6] uses an autoregressive approach to achieve infinite visual generation.
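To make the generate-then-rerank idea concrete, here is a toy Python sketch, not DALL-E's actual code. The names `next_token_logits` and `image_embed` are hypothetical stand-ins for the trained Transformer and for CLIP's image encoder, and decoding the sampled image tokens back to pixels (the VQ-VAE/dVAE decoder) is left out.

```python
import math
import random
from typing import Callable, List, Sequence, TypeVar

# Hypothetical stand-in for the Transformer (an assumption, not a real API):
# next_token_logits(text_tokens, image_tokens_so_far) -> logits over the image codebook
LogitsFn = Callable[[Sequence[int], Sequence[int]], List[float]]
T = TypeVar("T")


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_image_tokens(text_tokens: Sequence[int], next_token_logits: LogitsFn,
                        n_image_tokens: int, rng: random.Random) -> List[int]:
    """Autoregressively sample image tokens, each conditioned on the text prefix
    and on the image tokens generated so far."""
    image_tokens: List[int] = []
    for _ in range(n_image_tokens):
        probs = softmax(next_token_logits(text_tokens, image_tokens))
        image_tokens.append(rng.choices(range(len(probs)), weights=probs, k=1)[0])
    return image_tokens


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def rerank(text_emb: Sequence[float], candidates: Sequence[T],
           image_embed: Callable[[T], Sequence[float]], top_k: int) -> List[T]:
    """Sort candidates by text-image cosine similarity (CLIP-style) and keep the best."""
    ranked = sorted(candidates, key=lambda c: cosine(text_emb, image_embed(c)), reverse=True)
    return ranked[:top_k]


# Toy usage: a fake "model" that always prefers token (text[0] + position) % 4.
toy_logits = lambda text, img: [0.0 if t == (text[0] + len(img)) % 4 else -1e9 for t in range(4)]
tokens = sample_image_tokens([2], toy_logits, 5, random.Random(0))
```

Because each run samples tokens at random, generating several candidates and keeping the top few by similarity is what lets a reranker such as CLIP pick the images that best match the prompt.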

The diffusion model is an image generation technique that has developed rapidly over the past year and has been hailed as the "GAN killer". As shown in the figure, a diffusion model works in two stages: (1) noising, which gradually adds random noise to an image along a Markov chain (the forward diffusion process); and (2) denoising, which learns the reverse diffusion process to recover the image from noise. Common variants include the denoising diffusion probabilistic model (DDPM).


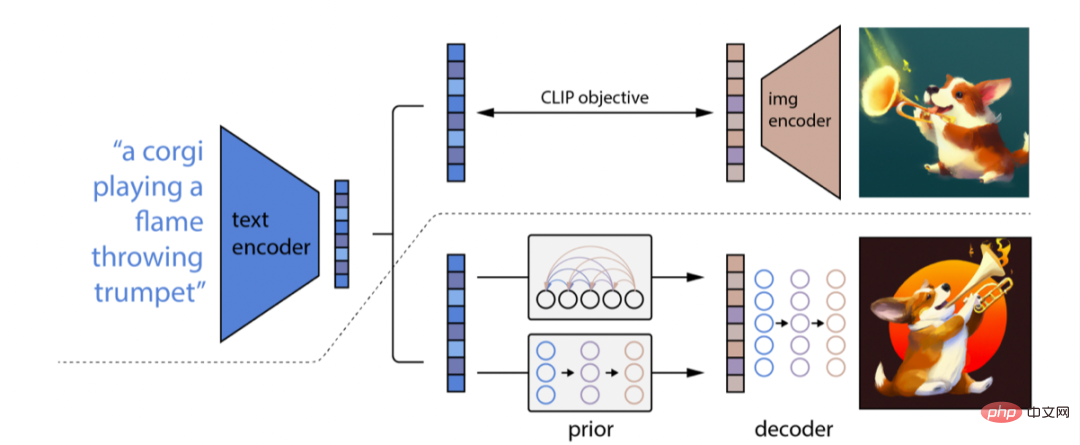
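The two stages can be written down compactly. The sketch below, in plain Python on short lists of floats, shows the closed-form noising step and a single DDPM-style denoising step. It assumes the linear beta schedule from the DDPM paper (1e-4 to 0.02 over 1,000 steps, indexed from 0 here) and takes the network's noise prediction `eps_pred` as an input rather than implementing the U-Net.

```python
import math
import random
from typing import List, Sequence


def linear_beta_schedule(T: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Noise variances beta_0..beta_{T-1}, spaced linearly (assumed DDPM-style schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas: Sequence[float]) -> List[float]:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s): how much of x_0 survives at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0: Sequence[float], t: int, alpha_bar: Sequence[float],
             noise: Sequence[float]) -> List[float]:
    """Noising (forward process) in closed form:
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]


def p_sample(x_t: Sequence[float], t: int, betas: Sequence[float], alpha_bar: Sequence[float],
             eps_pred: Sequence[float], z: Sequence[float]) -> List[float]:
    """One denoising (reverse) step given the network's noise estimate eps_pred:
    x_{t-1} = (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps_pred) / sqrt(1 - beta_t) + sigma_t * z,
    with sigma_t^2 = beta_t and no noise added at the final step (t == 0)."""
    beta = betas[t]
    coef = beta / math.sqrt(1.0 - alpha_bar[t])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(x_t, eps_pred)]
    if t == 0:
        return mean
    return [m + math.sqrt(beta) * zi for m, zi in zip(mean, z)]


rng = random.Random(0)
betas = linear_beta_schedule(1000)
abar = cumulative_alphas(betas)
x0 = [0.5, -0.25, 1.0]                      # toy "image"
eps = [rng.gauss(0.0, 1.0) for _ in x0]
x_last = q_sample(x0, 999, abar, eps)       # almost pure noise at the last step
```

A quick sanity check: if `eps_pred` is the exact noise that was added, one reverse step from t = 0 recovers x_0 exactly. In practice a trained network only approximates that noise, which is why sampling repeats the step from t = T - 1 down to 0.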

Diffusion-based multi-modal image generation mainly uses a conditionally guided diffusion model to learn a mapping from text features to image features, and then decodes the image features into the final image. Take DALL-E-2 (OpenAI) [7] as an example. Although it is the sequel to DALL-E, it takes a completely different technical route; in principle it is closer to GLIDE [8] (which some people call "DALL-E 1.5"). The overall architecture of DALL-E-2 is shown in the figure:

DALL-E-2 encodes the text with CLIP and uses a diffusion model to learn a prior that maps text features to image features; it then learns an "inverse CLIP" process that decodes the image features into the final image. In contrast to DALL-E-2, Google's Imagen [9] replaces CLIP with a pre-trained T5-XXL text encoder and then uses super-resolution diffusion models (U-Net architecture) to enlarge the image, producing 1024×1024 high-definition images.
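The data flow of these two systems can be sketched as follows. Everything here is a placeholder (an `Image` that only records its size, toy encoders and decoders); the sketch only illustrates the stage ordering, including the 64×64 base image and the two 4× super-resolution stages that both DALL-E-2 and Imagen use to reach 1024×1024. The function name `text_to_image` is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

Embedding = List[float]


@dataclass
class Image:
    """Placeholder for pixel data; only the side length is tracked here."""
    size: int


def text_to_image(prompt: str,
                  text_encoder: Callable[[str], Embedding],
                  prior: Optional[Callable[[Embedding], Embedding]],
                  decoder: Callable[[Embedding], Image],
                  upsamplers: Sequence[Callable[[Image], Image]]) -> Image:
    """Stage ordering of a text-to-image model with a super-resolution cascade."""
    cond = text_encoder(prompt)      # CLIP text encoder (DALL-E-2) or T5-XXL (Imagen)
    if prior is not None:
        cond = prior(cond)           # DALL-E-2 only: diffusion prior, text emb -> CLIP image emb
    image = decoder(cond)            # conditional diffusion decoder -> 64x64 base image
    for upsample in upsamplers:      # super-resolution diffusion models
        image = upsample(image)
    return image


def upsampler(factor: int) -> Callable[[Image], Image]:
    """Toy stand-in for a super-resolution diffusion model."""
    return lambda img: Image(size=img.size * factor)


result = text_to_image(
    "a painting of a sea of flowers",
    text_encoder=lambda p: [float(len(p))],      # toy stand-in for CLIP
    prior=lambda e: [2.0 * x for x in e],        # toy stand-in for the diffusion prior
    decoder=lambda e: Image(size=64),            # toy stand-in for the 64x64 decoder
    upsamplers=[upsampler(4), upsampler(4)],     # 64 -> 256 -> 1024
)
```

Passing `prior=None` and a T5-style encoder gives the Imagen-style ordering, where the text embedding conditions the base diffusion model directly.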

The autoregressive Transformer and CLIP's contrastive learning have built a bridge between text and images, while conditionally guided diffusion models have laid the foundation for generating diverse, high-resolution images. However, judging image generation quality is often subjective, so it is hard to say whether the Transformer autoregressive route or the diffusion route is better. Moreover, models such as the DALL-E series, Imagen, and Parti are trained on large-scale datasets, and their use may raise ethical issues and social bias, so these models have not yet been open-sourced. Still, many enthusiasts have tried to put these techniques to use, and many playable applications have emerged during this period.
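CLIP's "bridge" comes from a contrastive objective: within a batch of matching text-image pairs, each text embedding should be most similar to its own image and vice versa. A minimal pure-Python sketch of that symmetric loss follows. The embeddings are assumed to come from the two encoders, and the temperature is fixed at 0.07 (CLIP's initial value; in CLIP itself it is learned).

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_diagonal_cross_entropy(logits: List[List[float]]) -> float:
    """Average cross-entropy where row i's correct class is column i (its matching pair)."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_contrastive_loss(text_embs: Sequence[Sequence[float]],
                          image_embs: Sequence[Sequence[float]],
                          temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of N matching (text, image) pairs."""
    texts = [l2_normalize(v) for v in text_embs]
    images = [l2_normalize(v) for v in image_embs]
    # logits[i][j]: scaled cosine similarity between text i and image j
    logits = [[sum(a * b for a, b in zip(t, im)) / temperature for im in images] for t in texts]
    logits_t = [list(col) for col in zip(*logits)]   # image -> text direction
    return 0.5 * (mean_diagonal_cross_entropy(logits) + mean_diagonal_cross_entropy(logits_t))
```

Training lowers this loss by pulling matching pairs together and pushing mismatched pairs apart, which is what later lets CLIP score how well a generated image fits a prompt.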

The development of multi-modal image generation has opened up more possibilities for AI art creation. Widely used AI creation applications and tools currently include CLIPDraw, VQGAN-CLIP, Disco Diffusion, DALL-E Mini, Midjourney (invitation required), DALL-E-2 (beta access required), Dream by Wombo (app), Meta's "Make-A-Scene", TikTok's "AI Green Screen" feature, Stable Diffusion [10], and Baidu's "Yige". This article mainly uses Disco Diffusion, which is popular among artists, for AI art creation.

Disco Diffusion [11] is an AI art creation application jointly maintained by many technology enthusiasts on GitHub, and it has already gone through multiple versions. As its name suggests, its core technology is a CLIP-guided diffusion model. Disco Diffusion can generate artistic images or videos from a given text description (and an optional base image). For example, if you enter "Sea of Flowers", the model starts from a randomly generated noise image and denoises it step by step through the diffusion process; after a set number of steps, a beautiful image is rendered. Because diffusion sampling is stochastic, you get a different image every time you run the program, and this "blind box" experience, where every run is a surprise, is genuinely fascinating.
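The "CLIP-guided" part can be illustrated with a deliberately simplified sketch. Here a fixed matrix `W` is a hypothetical linear stand-in for CLIP's image encoder, and the guidance step is plain gradient ascent on cosine similarity with a hand-derived gradient. Disco Diffusion itself computes the gradient with a real CLIP model over many random cutouts of the image and uses it to steer each denoising step; `guidance_scale` here is only loosely analogous to DD's `clip_guidance_scale` setting.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matvec(W: Matrix, x: Sequence[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def cosine_and_grad(W: Matrix, x: Sequence[float],
                    text_emb: Sequence[float]) -> Tuple[float, List[float]]:
    """Cosine similarity between a toy linear 'image encoder' output u = W @ x and a text
    embedding t, plus its gradient with respect to the image x (derived by hand)."""
    u = matvec(W, x)
    nu = math.sqrt(sum(a * a for a in u))
    nt = math.sqrt(sum(b * b for b in text_emb))
    s = sum(a * b for a, b in zip(u, text_emb)) / (nu * nt)
    # ds/du = t / (|u| |t|) - s * u / |u|^2
    ds_du = [tb / (nu * nt) - s * ua / (nu * nu) for ua, tb in zip(u, text_emb)]
    # ds/dx = W^T ds/du
    ds_dx = [sum(W[i][j] * ds_du[i] for i in range(len(W))) for j in range(len(x))]
    return s, ds_dx


def clip_guided_nudge(x_pred: Sequence[float], W: Matrix, text_emb: Sequence[float],
                      guidance_scale: float) -> List[float]:
    """Move the current denoised estimate a small step uphill in text-image similarity."""
    _, grad = cosine_and_grad(W, x_pred, text_emb)
    return [xi + guidance_scale * g for xi, g in zip(x_pred, grad)]
```

A larger `guidance_scale` pushes harder toward the prompt at each step, at the risk of artifacts; this trade-off is part of why DD's guidance settings need tuning.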

AI creation with the multi-modal image generation model Disco Diffusion (DD) currently faces several problems:

(1) Uneven image quality: depending on the difficulty of the generation task, we roughly estimate the yield (the fraction of usable images) at 20% to 30% for harder descriptions and 60% to 70% for easier ones; most tasks fall between 30% and 40%.

(2) Slow generation and high memory consumption: for example, generating a 1280×768 image with 250 iteration steps takes about 6 minutes on a V100 GPU with 16 GB of video memory.

(3) Heavy reliance on expert experience: choosing a suitable set of descriptors takes extensive trial and error over text content and weights, an understanding of painters' styles and the art community, and careful selection of text modifiers. Tuning parameters requires a deep understanding of the concepts in DD, such as CLIP guidance scale, saturation, contrast, noise, number of cutouts, inner and outer cutouts, gradient magnitude, symmetry, and more, as well as some artistic skill. The sheer number of parameters means that considerable expertise is needed to obtain a decent image.
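The yield and speed figures above combine into a rough cost estimate. Assuming each run is independent, succeeds with a fixed probability (the yield), and takes about 6 minutes (the 1280×768, 250-step example), the expected number of runs per usable image is 1/yield, the mean of a geometric distribution. The function names below are illustrative.

```python
def expected_runs_per_keeper(yield_rate: float) -> float:
    """Mean of a geometric distribution: expected runs needed for one usable image."""
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    return 1.0 / yield_rate


def gpu_minutes(keepers: int, yield_rate: float, minutes_per_run: float = 6.0) -> float:
    """Expected GPU time to collect `keepers` usable images."""
    return keepers * expected_runs_per_keeper(yield_rate) * minutes_per_run


# Midpoints of the yield ranges quoted above.
for label, rate in [("hard prompt", 0.25), ("typical prompt", 0.35), ("easy prompt", 0.65)]:
    print(f"{label}: ~{gpu_minutes(1, rate):.0f} GPU-minutes per usable image")
```

With these midpoints (25%, 35%, 65%), the estimate works out to roughly 24, 17, and 9 GPU-minutes per usable image, which helps explain why prompt and parameter expertise matters so much in practice.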

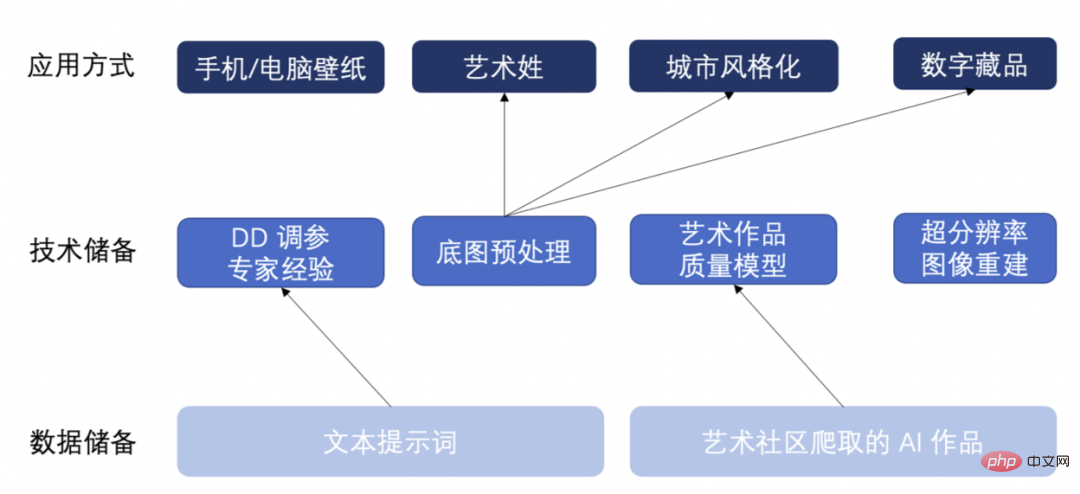

In response to the above problems, we have made some data and technical reserves, and YY some possible future applications. As shown in the picture below:

Using these data and technical reserves, we have developed multi-modal image generation applications such as mobile phone/computer wallpapers, artistic renderings of surnames and first names, stylization of city landmarks, and digital collectibles. Below we show some of the resulting AI-generated artworks.

Stylization of City Landmark Buildings

Generate paintings in different styles (anime / cyberpunk / pixel art) by inputting text descriptions together with a base image of a city landmark:

(1) A building with anime style, by makoto shinkai and beeple, Trending on artstation.

(2) A building with cyberpunk style , by Gregory Grewdson, Trending on artstation.

(3) A building with pixel style, by Stefan Bogdanovi, Trending on artstation.
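When a base image is supplied, CLIP-guided diffusion setups such as DD typically do not start from pure noise: the base image is noised to an intermediate timestep and denoising begins there, so skipping more steps keeps more of the base image's structure. DD exposes this through its `init_image` and `skip_steps` settings. The sketch below assumes a plain 1,000-step linear beta schedule with no timestep respacing, so its exact numbers will differ from DD's; `start_from_init_image` is a hypothetical name.

```python
import math
import random
from typing import List, Sequence, Tuple


def alpha_bar_at(t: int, T: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> float:
    """alpha_bar_t under an assumed linear beta schedule (real models may differ)."""
    prod = 1.0
    for s in range(t + 1):
        beta = beta_start + (beta_end - beta_start) * s / (T - 1)
        prod *= 1.0 - beta
    return prod


def start_from_init_image(init: Sequence[float], noise: Sequence[float],
                          skip_steps: int, T: int) -> Tuple[int, List[float], float]:
    """Noise the base image to the timestep where sampling begins.
    Returns (start timestep, noised image, scale of the base-image signal kept)."""
    if not 0 <= skip_steps < T:
        raise ValueError("skip_steps must be in [0, T)")
    t_start = T - 1 - skip_steps
    a = alpha_bar_at(t_start, T)
    x_t = [math.sqrt(a) * xi + math.sqrt(1.0 - a) * ni for xi, ni in zip(init, noise)]
    return t_start, x_t, math.sqrt(a)


rng = random.Random(42)
base = [0.8, 0.1, -0.4]                      # toy "base image" pixels
noise = [rng.gauss(0.0, 1.0) for _ in base]
for skip in (0, 250, 500, 750):
    t0, _, kept = start_from_init_image(base, noise, skip, T=1000)
    print(f"skip_steps={skip}: start at t={t0}, base-image signal scale {kept:.3f}")
```

With no skipped steps the base image is almost entirely drowned in noise; skipping more steps trades creative freedom for fidelity to the landmark's layout.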

Digital Collectibles

These works are created on top of a base image by inputting a text description together with the base image.

(1) A landscape with vegetation and lake, by RAHDS and beeple, Trending on artstation.

(2) Enchanted cottage on the edge of a cliff foreboding ominous fantasy landscape, by RAHDS and beeple, Trending on artstation.

(3) A spacecraft by RAHDS and beeple, Trending on artstation.

(1) Transformers with machine armor, by Alex Milne, Trending on artstation.

(2) Spongebob by RAHDS and beeple, Trending on artstation.

Mobile phone/computer wallpapers

(1) The esoteric dreamscape by Dan Luvisi, trending on Artstation, matte painting vast landscape.

(2) Scattered terraces, winter, snow, by Makoto Shinka, trending on Artstation, 4k wallpaper.

(3) A beautiful cloudpunk painting of Atlantis arising from the abyss heralded by steampunk whales by Pixar rococo style, Artstation, volumetric lighting.

(4) A beautiful render of a magical building in a dreamy landscape by daniel merriam, soft lighting, 4k hd wallpaper, Trending on artstation and behance.

AI artistic surnames

(1) Large-scale military factories, mech testing machines, Semi-finished mechs, engineering vehicles, automation management, indicators, future, sci-fi, light effect, high-definition picture.

(2) A beautiful painting of mashroom, tree, artstation, Artstation, 4k hd wallpaper.

(3) A beautiful painting of sunflowers, fog, unreal engine, shining its light across a tumultuous sea of blood by greg rutkowski and thomas kinkade, Artstation, Andreas Rocha, Greg Rutkowski.

(4) A beautiful painting of the pavilion on the water presents a reflection, by John Howe, Albert Bierstadt, Alena Aenami, and dan mumford concept art wallpaper 4k, trending on artstation, concept art, cinematic, unreal engine, trending on behance.

(5) A beautiful landscape of a lush jungle with exotic plants and trees, by John Howe, Albert Bierstadt, Alena Aenami, and dan mumford concept art wallpaper 4k, trending on artstation, concept art, cinematic, unreal engine, trending on behance.

(6) Contra Force, Red fortress, spacecraft, by Ernst Haeckel and Pixar, wallpaper hd 4k, trending on artstation.

Other AI art creation applications

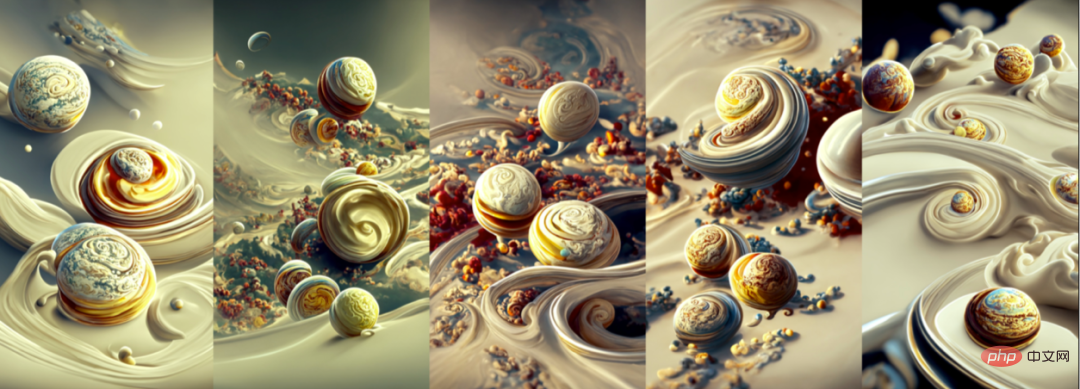

Stable Diffusion [10, 12] 展现了比 Disco Diffusion [11] 更加高效且稳定的创作能力,尤其是在“物”的刻画上更加突出。下图是笔者利用 Stable Diffusion,根据文本创作的 AI 绘画作品:
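One reason Stable Diffusion is faster than Disco Diffusion is that it is a latent diffusion model [10]: the diffusion runs in the compressed latent space of an autoencoder rather than on pixels, as Disco Diffusion's model does. The sketch below assumes the Stable Diffusion v1 configuration (8× spatial downsampling, 4 latent channels) and simply counts the values each approach has to denoise; the function names are illustrative.

```python
from typing import Tuple


def latent_shape(height: int, width: int, downsample: int = 8,
                 latent_channels: int = 4) -> Tuple[int, int, int]:
    """Shape (channels, h, w) of the autoencoder latent that the diffusion model denoises."""
    if height % downsample or width % downsample:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return latent_channels, height // downsample, width // downsample


def values_to_denoise(height: int, width: int, pixel_space: bool, channels: int = 3) -> int:
    """Number of values updated at every sampling step."""
    if pixel_space:
        return channels * height * width
    c, h, w = latent_shape(height, width)
    return c * h * w


ratio = values_to_denoise(512, 512, pixel_space=True) / values_to_denoise(512, 512, pixel_space=False)
```

For a 512×512 RGB image this is 786,432 values in pixel space versus 16,384 in the 4×64×64 latent, a 48× reduction; model size and the number of sampling steps also affect speed.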

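This article has introduced multi-modal image generation and related work from the past two years, and has tried using multi-modal image generation for various kinds of AI art creation. Next, we will explore the possibility of running multi-modal image generation on consumer-grade CPUs, use it to empower AI-assisted creation in our business, and try more applications such as movie and anime-themed covers, games, and metaverse content creation.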

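Using multi-modal image generation for art creation is just one application of AI-generated content (AIGC). Thanks to today's massive data and large pre-trained models, AIGC can be deployed faster and provide more high-quality content for people. Perhaps artificial general intelligence has taken another small step forward? If you are interested in the technologies or applications covered in this article, you are welcome to get in touch and create together.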

[1] Ramesh A, Pavlov M, Goh G, et al. Zero-shot text-to-image generation[C]//International Conference on Machine Learning. PMLR, 2021: 8821-8831.

[2] Ding M, Yang Z, Hong W, et al. CogView: Mastering text-to-image generation via transformers[J]. Advances in Neural Information Processing Systems, 2021, 34: 19822-19835.

[3] Ding M, Zheng W, Hong W, et al. CogView2: Faster and Better Text-to-Image Generation via Hierarchical Transformers[J]. arXiv preprint arXiv:2204.14217, 2022.

[4] Zhang H, Yin W, Fang Y, et al. ERNIE-ViLG: Unified generative pre-training for bidirectional vision-language generation[J]. arXiv preprint arXiv:2112.15283, 2021.

[5] Yu J, Xu Y, Koh J Y, et al. Scaling Autoregressive Models for Content-Rich Text-to-Image Generation[J]. arXiv preprint arXiv:2206.10789, 2022.

[6] Wu C, Liang J, Hu X, et al. NUWA-Infinity: Autoregressive over Autoregressive Generation for Infinite Visual Synthesis[J]. arXiv preprint arXiv:2207.09814, 2022.

[7] Ramesh A, Dhariwal P, Nichol A, et al. Hierarchical text-conditional image generation with CLIP latents[J]. arXiv preprint arXiv:2204.06125, 2022.

[8] Nichol A, Dhariwal P, Ramesh A, et al. GLIDE: Towards photorealistic image generation and editing with text-guided diffusion models[J]. arXiv preprint arXiv:2112.10741, 2021.

[9] Saharia C, Chan W, Saxena S, et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding[J]. arXiv preprint arXiv:2205.11487, 2022.

[10] Rombach R, Blattmann A, Lorenz D, et al. High-resolution image synthesis with latent diffusion models[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 10684-10695.

[11] GitHub: https://github.com/alembics/disco-diffusion

[12] GitHub: https://github.com/CompVis/stable-diffusion
