Summary of important knowledge points related to machine learning regression models


1. What are the assumptions of linear regression?

Linear regression has four assumptions:

- Linearity: There should be a linear relationship between the independent variable (x) and the dependent variable (y), which means that a change in x is associated with a proportional change in y (the slope can be positive or negative).

- Independence: Observations, and therefore their residuals, should be independent of one another. Features should also not be strongly correlated with each other, which means minimal multicollinearity.

- Normality: Residuals should be normally distributed.

- Homoskedasticity: The variance of the residuals around the regression line should be the same for all values of x.

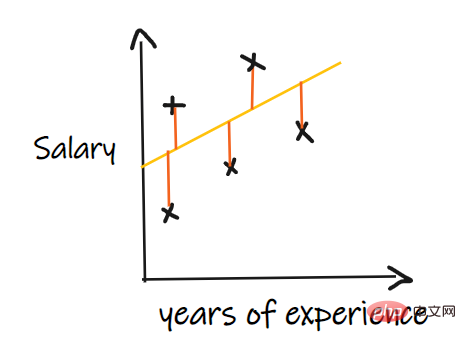

2. What is residual and how is it used to evaluate regression models?

The residual refers to the error between the predicted value and the observed value. It measures the distance of the data points from the regression line. It is calculated by subtracting predicted values from observed values.

Residual plots are a good way to evaluate regression models. A residual plot is a graph that shows the residuals on the vertical axis and a feature (or the predicted values) on the horizontal axis. If the points are randomly scattered around the zero line with no visible pattern, then a linear regression model fits the data well; otherwise we should consider a nonlinear model.
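To make the idea concrete, here is a minimal sketch that fits a straight line to two synthetic data sets (one truly linear, one quadratic) and inspects the residuals numerically instead of plotting them. The data, the helper names `fit_line_residuals` and `curvature_score`, and the use of a correlation with a squared term as a stand-in for "visible pattern" are all assumptions made for illustration.

```python
import numpy as np

def fit_line_residuals(x, y):
    """Fit y ~ slope * x + intercept by least squares and return the residuals."""
    slope, intercept = np.polyfit(x, y, deg=1)
    predicted = slope * x + intercept
    return y - predicted  # residual = observed - predicted

def curvature_score(x, residuals):
    """Absolute correlation between the residuals and a centered x**2 term.

    A value near 1 means the residuals follow a curved pattern,
    a value near 0 means no such pattern was found.
    """
    centered_sq = (x - x.mean()) ** 2
    return abs(np.corrcoef(centered_sq, residuals)[0, 1])

rng = np.random.default_rng(0)
x = np.linspace(0, 10, 50)

y_linear = 2.0 * x + 1.0 + rng.normal(0, 0.5, size=x.size)  # linear data
y_curved = 0.5 * x**2 + rng.normal(0, 0.5, size=x.size)     # quadratic data

res_linear = fit_line_residuals(x, y_linear)
res_curved = fit_line_residuals(x, y_curved)

print("pattern in residuals (linear data):", curvature_score(x, res_linear))
print("pattern in residuals (curved data):", curvature_score(x, res_curved))
```

For the quadratic data the residuals of the straight-line fit are strongly related to the squared term, which is the numerical counterpart of the U-shaped pattern one would see in a residual plot.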

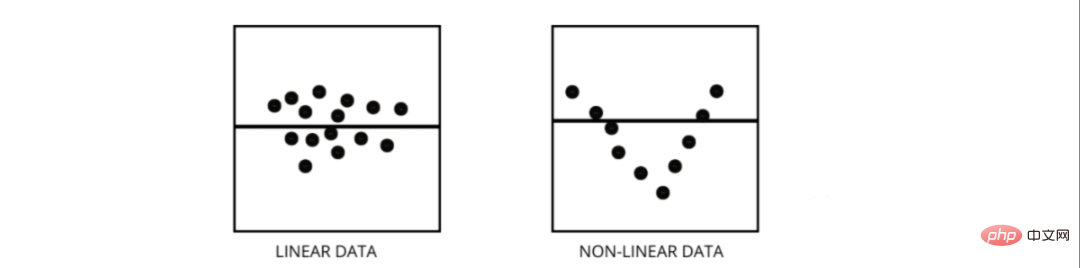

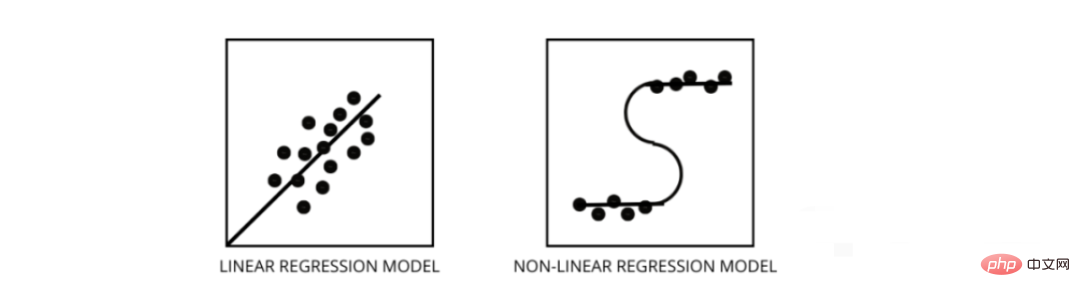

3. How to distinguish between linear regression model and nonlinear regression model?

Both are types of regression models. The difference between the two is the kind of relationship they assume between the features and the target.

The linear regression model assumes a linear relationship between features and labels, which means that if we plot all the data points, a straight line should fit them.

Nonlinear regression models assume that the relationship between the variables is not linear, so a nonlinear (curvilinear) line is needed to fit the data correctly.

Three good ways to find out whether your data is linear or nonlinear:

- Residual plot

- Scatter plot

- Assume the data is linear, train a linear model, and evaluate how well it fits (for example, with R2 or an error measure; accuracy is a classification metric and does not apply here).

4. What is multicollinearity and how does it affect model performance?

Multicollinearity occurs when certain features are highly correlated with each other. Correlation is a measure of how strongly two variables change together.

If an increase in feature a goes along with an increase in feature b, then the two features are positively correlated. If an increase in a goes along with a decrease in b, then the two features are negatively correlated. Having two highly correlated features in the training data leads to multicollinearity: the model cannot separate their individual effects, so the coefficient estimates become unstable and hard to interpret, which can hurt model performance. Therefore, before training the model, we should first try to reduce multicollinearity.
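A quick way to look for highly correlated features is to compute the pairwise correlation matrix. The sketch below uses synthetic features `a`, `b`, and `c`, and a cut-off of 0.9; both the data and the threshold are assumptions chosen for illustration, not values from the article.

```python
import numpy as np

def highly_correlated_pairs(corr, threshold=0.9):
    """Return index pairs (i, j), i < j, whose |correlation| exceeds threshold."""
    pairs = []
    for i in range(corr.shape[0]):
        for j in range(i + 1, corr.shape[0]):
            if abs(corr[i, j]) > threshold:
                pairs.append((i, j))
    return pairs

rng = np.random.default_rng(1)
n = 200
a = rng.normal(size=n)
b = 2.0 * a + rng.normal(scale=0.1, size=n)  # almost a rescaled copy of a
c = rng.normal(size=n)                       # unrelated feature

features = np.column_stack([a, b, c])
corr = np.corrcoef(features, rowvar=False)   # 3 x 3 Pearson correlation matrix

pairs = highly_correlated_pairs(corr)
print(np.round(corr, 3))
print("highly correlated feature pairs:", pairs)
```

Only the pair (a, b) is flagged here, so one of those two columns would be a candidate for removal or combination.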

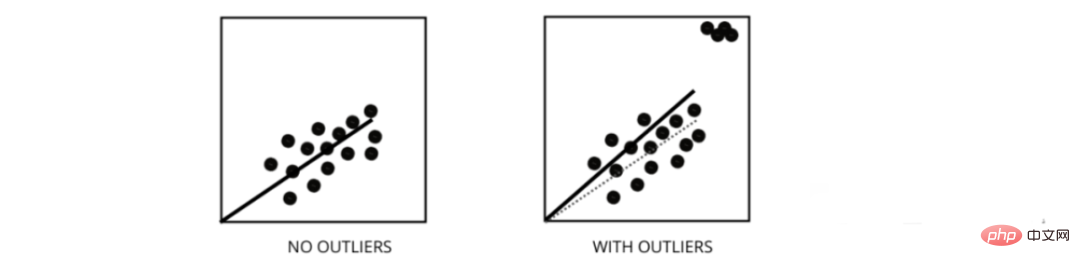

5. How do outliers affect the performance of linear regression models?

Outliers are data points whose values differ markedly from the typical range of the data. In other words, these points are unlike the rest of the data or fall outside the 3σ (three-sigma) criterion.

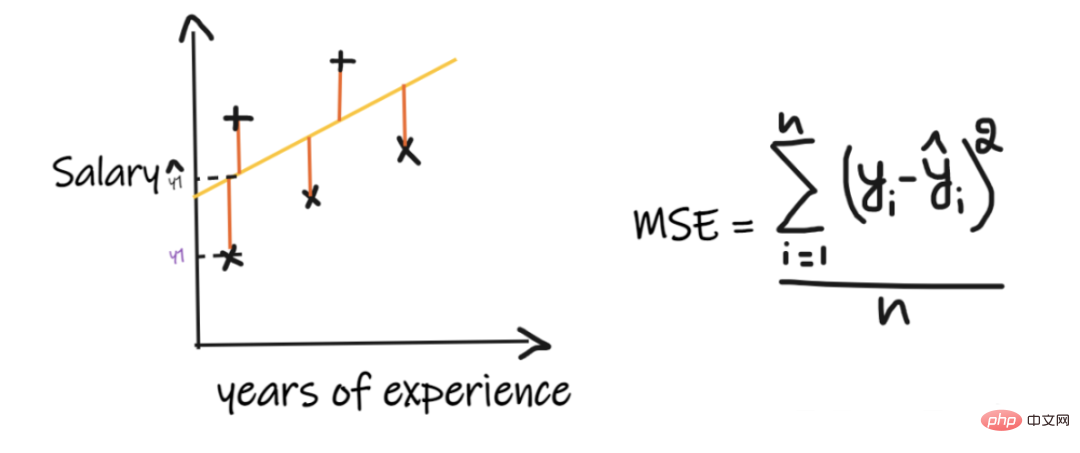

Linear regression models attempt to find a best-fit line that minimizes the residuals. If the data contains outliers, the line of best fit will shift toward the outliers, increasing the error and resulting in a model with a very high MSE.
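The effect can be shown with a small experiment: fit a line to perfectly linear data, then move a single point far away and fit again. The numbers below are made up for illustration, and `fit_and_mse` is a hypothetical helper.

```python
import numpy as np

def fit_and_mse(x, y):
    """Least-squares line fit; returns slope, intercept and training MSE."""
    slope, intercept = np.polyfit(x, y, deg=1)
    mse = np.mean((y - (slope * x + intercept)) ** 2)
    return slope, intercept, mse

x = np.arange(10, dtype=float)
y = 3.0 * x + 2.0                 # perfectly linear data, true slope is 3

slope_clean, _, mse_clean = fit_and_mse(x, y)

y_out = y.copy()
y_out[-1] += 50.0                 # turn the last point into an outlier
slope_out, _, mse_out = fit_and_mse(x, y_out)

print("without outlier: slope =", slope_clean, "MSE =", mse_clean)
print("with outlier:    slope =", slope_out, "MSE =", mse_out)
```

A single outlier is enough to pull the slope well away from 3 and to raise the MSE from essentially zero to a large value.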

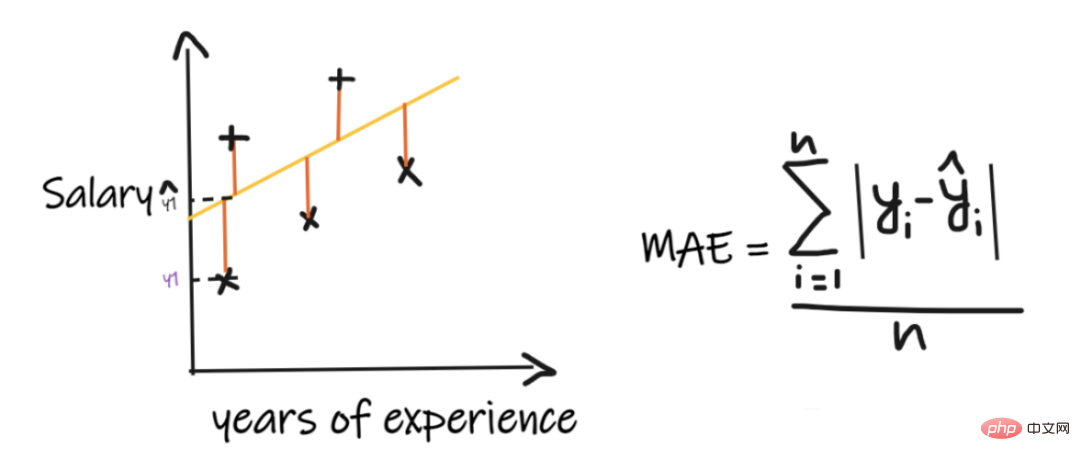

6. What is the difference between MSE and MAE?

MSE stands for mean squared error, which is the average of the squared differences between the actual values and the predicted values. MAE stands for mean absolute error, which is the average of the absolute differences between the actual values and the predicted values.

MSE penalizes large errors much more heavily than MAE does, because each error is squared. The lower the values of MSE and MAE, the better the line fits the data.
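The difference in how the two measures treat large errors can be seen with a tiny hand-made example. The two prediction vectors below are assumptions chosen so that both have the same MAE: one spreads the error evenly, the other concentrates it in a single large mistake.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return np.mean(np.abs(y_true - y_pred))

def mse(y_true, y_pred):
    """Mean squared error."""
    return np.mean((y_true - y_pred) ** 2)

y_true = np.array([10.0, 10.0, 10.0, 10.0])
pred_small = np.array([12.0, 8.0, 12.0, 8.0])    # four errors of size 2
pred_large = np.array([10.0, 10.0, 10.0, 18.0])  # one error of size 8

print("MAE:", mae(y_true, pred_small), mae(y_true, pred_large))  # 2.0 and 2.0
print("MSE:", mse(y_true, pred_small), mse(y_true, pred_large))  # 4.0 and 16.0
```

Both sets of predictions have an MAE of 2, but the MSE of the second set is four times larger because the single error of 8 is squared.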

7. What are L1 and L2 regularization and when should they be used?

In machine learning, our main goal is to create a general model that performs well on both training and test data. When there is very little data, however, basic linear regression models tend to overfit, so we use L1 and L2 regularization.

L1 regularization, or lasso regression, works by adding the absolute value of the slope (coefficient) as a penalty term to the cost function. It can shrink some coefficients to exactly zero, which effectively removes the corresponding features from the model and so acts as feature selection.

L2 regularization, or ridge regression, adds a penalty term equal to the square of the coefficient magnitude. It penalizes large slope values, shrinking coefficients toward zero, usually without setting them exactly to zero.

L1 and L2 are useful when the training data is small, the variance is high, the number of features is larger than the number of observations, or multicollinearity exists in the data.
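The following sketch contrasts the two penalties on synthetic data in which only the first of three features matters. It is a bare-bones illustration, not a production implementation: ridge uses the closed-form solution, lasso uses a simple coordinate-descent loop, there is no intercept, and the penalty strengths (`lam=50.0`, `lam=20.0`) are arbitrary assumptions.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: minimize ||y - Xw||^2 + lam * ||w||^2 (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; return 0 if it is within threshold."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_fit(X, y, lam, n_iter=200):
    """Coordinate descent for: minimize 0.5 * ||y - Xw||^2 + lam * ||w||_1."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        for j in range(X.shape[1]):
            # residual with feature j's current contribution added back
            partial_residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ partial_residual
            w[j] = soft_threshold(rho, lam) / (X[:, j] @ X[:, j])
    return w

rng = np.random.default_rng(2)
X = rng.normal(size=(100, 3))
y = 4.0 * X[:, 0] + rng.normal(scale=0.1, size=100)  # only feature 0 matters

w_ols = ridge_fit(X, y, lam=0.0)      # lam = 0 gives ordinary least squares
w_ridge = ridge_fit(X, y, lam=50.0)
w_lasso = lasso_fit(X, y, lam=20.0)

print("OLS:  ", np.round(w_ols, 3))
print("ridge:", np.round(w_ridge, 3))
print("lasso:", np.round(w_lasso, 3))
```

In this setup ridge shrinks all coefficients but keeps them nonzero, while lasso sets the coefficients of the two irrelevant features to exactly zero.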

8. What does heteroskedasticity mean?

It refers to the situation where the variance of the data points around the best-fit line is not constant across the range of the data. It results in an uneven dispersion of the residuals. If it is present in the data, the model's error estimates, and any conclusions drawn from them, become unreliable. One of the best ways to check for heteroskedasticity is to plot the residuals.

One of the biggest causes of heteroskedasticity is a large difference in the range of a feature. For example, if we have a column ranging from 1 to 100000, then increasing the values by 10% will barely change the lower values but will make a very large difference at the higher values, thus producing a large difference in variance between data points.
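As a rough numerical companion to the residual plot, the sketch below simulates data whose noise grows with x (10% of x, echoing the example above) and compares the residual variance in the lower and upper halves of the range. This split comparison is only a crude check chosen for illustration, not a formal statistical test, and the data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(3)
x = np.linspace(1, 100, 400)
# the noise standard deviation is 10% of x, so the spread grows with x
y = 2.0 * x + rng.normal(scale=0.1 * x)

slope, intercept = np.polyfit(x, y, deg=1)
residuals = y - (slope * x + intercept)

half = x.size // 2
var_low = residuals[:half].var()    # residual variance for small x
var_high = residuals[half:].var()   # residual variance for large x
ratio = var_high / var_low

print("residual variance, lower half:", var_low)
print("residual variance, upper half:", var_high)
print("ratio:", ratio)
```

A ratio far from 1 suggests that the spread of the residuals is not constant, which is what a fan-shaped residual plot would show.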

9. What is the role of the variance inflation factor?

The variance inflation factor (VIF) is used to find out how well an independent variable can be predicted using the other independent variables.

Let's take example data with features v1, v2, v3, v4, v5 and v6. To calculate the VIF of v1, treat it as the target variable and try to predict it using all the other predictor variables. The VIF of v1 is then 1 / (1 - R2), where R2 is the R2 score of that regression.

If the VIF of a variable is large (a common rule of thumb is above 5 or 10), it is better to consider removing that variable from the data, because larger values indicate that it is highly correlated with the other variables. A VIF close to 1 indicates little correlation.
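The procedure described above can be sketched directly with a least-squares fit. For brevity the example uses three synthetic features instead of six; `v2` is built as a noisy copy of `v1`, and the helper name `vif` is hypothetical.

```python
import numpy as np

def vif(X, j):
    """VIF of column j: regress it on the other columns (plus an intercept)."""
    target = X[:, j]
    others = np.delete(X, j, axis=1)
    design = np.column_stack([np.ones(len(target)), others])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    residuals = target - design @ coef
    r2 = 1.0 - residuals.var() / target.var()  # R2 of the auxiliary regression
    return 1.0 / (1.0 - r2)

rng = np.random.default_rng(4)
v1 = rng.normal(size=300)
v2 = v1 + rng.normal(scale=0.1, size=300)  # nearly duplicates v1
v3 = rng.normal(size=300)                  # independent of the others
X = np.column_stack([v1, v2, v3])

vifs = [vif(X, j) for j in range(X.shape[1])]
print("VIF per feature:", np.round(vifs, 2))
```

The two nearly identical features receive a large VIF, while the independent feature stays close to 1.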

10. How does stepwise regression work?

Stepwise regression is a method of creating a regression model by removing or adding predictor variables with the help of hypothesis testing. It predicts the dependent variable by iteratively testing the significance of each independent variable and removing or adding some features after each iteration. It runs n times and tries to find the best combination of parameters that predicts the dependent variable with the smallest error between the observed and predicted values.

It can manage large amounts of data very efficiently and solve high-dimensional problems.
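A forward variant of this idea can be sketched as a greedy loop. Note the substitution: instead of a hypothesis test, this simplified version adds the feature that reduces the residual sum of squares the most and stops when the relative improvement drops below a threshold. The threshold `min_improvement=0.1`, the helper names, and the synthetic data are all assumptions for illustration.

```python
import numpy as np

def rss(X, y, columns):
    """Residual sum of squares of a least-squares fit on the given columns."""
    design = np.column_stack([np.ones(len(y))] + [X[:, c] for c in columns])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return float(np.sum((y - design @ coef) ** 2))

def forward_stepwise(X, y, min_improvement=0.1):
    """Greedily add the feature that lowers RSS most; stop on small gains."""
    selected = []
    remaining = list(range(X.shape[1]))
    current = rss(X, y, selected)          # intercept-only model
    while remaining:
        scores = {c: rss(X, y, selected + [c]) for c in remaining}
        best = min(scores, key=scores.get)
        # the relative RSS reduction stands in for a significance test here
        if (current - scores[best]) / current < min_improvement:
            break
        selected.append(best)
        remaining.remove(best)
        current = scores[best]
    return selected

rng = np.random.default_rng(5)
X = rng.normal(size=(200, 5))
# only features 0 and 3 influence the target
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(scale=0.5, size=200)

chosen = forward_stepwise(X, y)
print("selected features, in order:", chosen)
```

A version closer to the description above would replace the threshold check with a significance test on the candidate feature and could also drop previously added features at each step.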

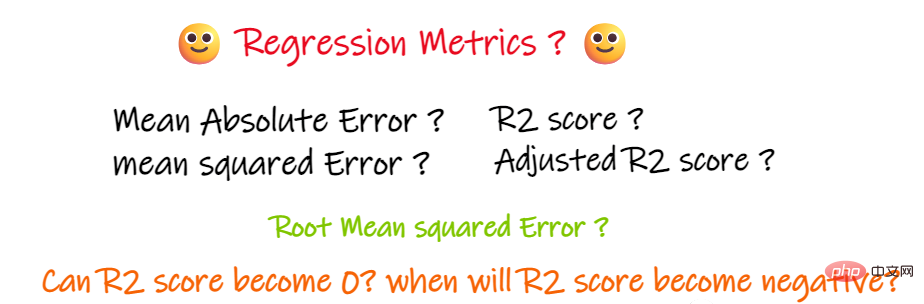

11. In addition to MSE and MAE, are there any other important regression indicators?

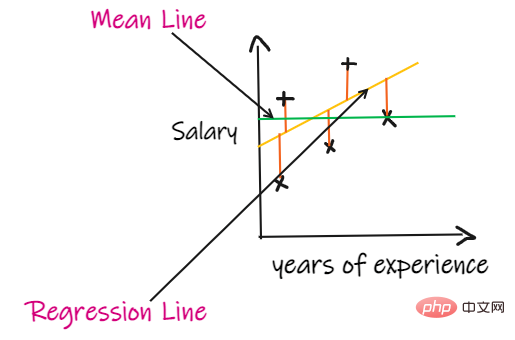

We use a regression problem to introduce these indicators, where our input is work experience and the output is salary. The graph below shows a linear regression line drawn to predict salary.

1. Mean absolute error (MAE):

Mean absolute error (MAE) is the simplest regression measure. It adds up the absolute differences between each actual and predicted value and divides the sum by the number of observations. For a regression model to be considered good, the MAE should be as small as possible.

The advantages of MAE are:

- Simple and easy to understand.

- The result has the same units as the output. For example, if the unit of the output column is LPA and the MAE is 1.2, then we can interpret the result as an average error of 1.2 LPA in either direction (+1.2 LPA or -1.2 LPA).

- MAE is relatively robust to outliers (compared to some other regression indicators, MAE is less affected by outliers).

The disadvantage of MAE is:

MAE uses the modulus (absolute value) function, which is not differentiable at every point (it has no derivative at zero), so it is less convenient as a loss function in many cases.

2. Mean square error (MSE):

MSE takes the difference between each actual value and the predicted value, then squares the difference and adds them together, finally dividing by the number of observations. In order for a regression model to be considered a good model, the MSE should be as small as possible.

Advantages of MSE: The square function is differentiable at all points, so it can be used as a loss function.

Disadvantages of MSE: Since MSE uses the square function, the unit of the result is the square of the unit of the output, which makes the result difficult to interpret. In addition, if there are outliers in the data, their large differences are also squared, so MSE is not robust to outliers.

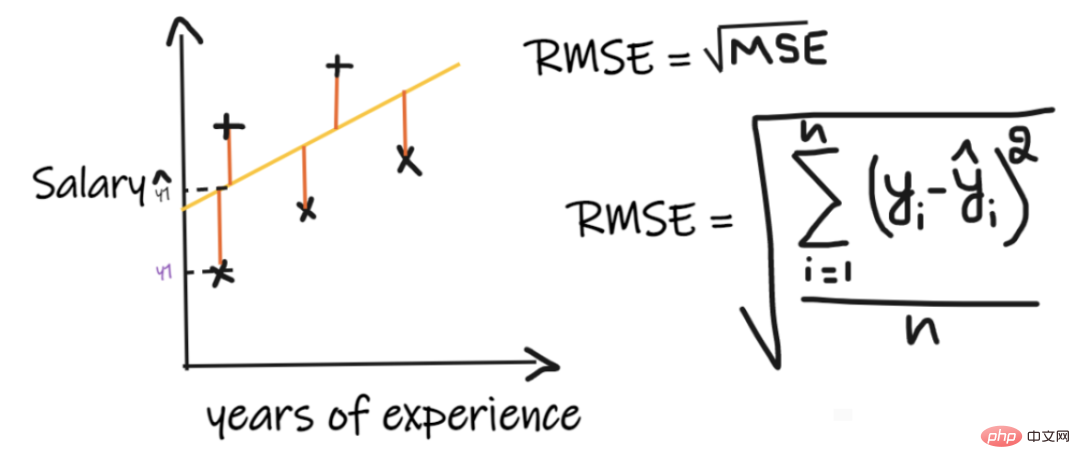

3. Root mean square error (RMSE):

The root mean square error (RMSE) takes the difference between each actual value and the predicted value, squares the differences, adds them up, and divides by the number of observations. It then takes the square root of the result. Therefore, RMSE is the square root of MSE. For a regression model to be considered good, the RMSE should be as small as possible.

RMSE solves the units problem of MSE: because it takes the square root, its units are the same as those of the output. However, it is still not robust to outliers.

The above indicators depend on the context of the problem we are solving. We cannot judge the quality of the model by just looking at the values of MAE, MSE and RMSE without understanding the actual problem.

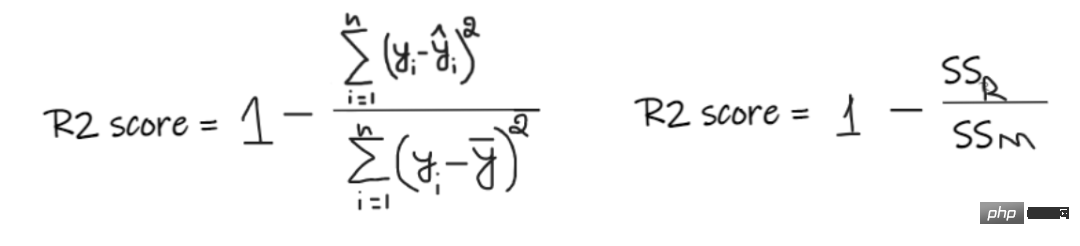

4. R2 score:

If we don't have any input data, but want to know how much salary someone can get in this company, then the best thing we can do is give the average of all employee salaries.

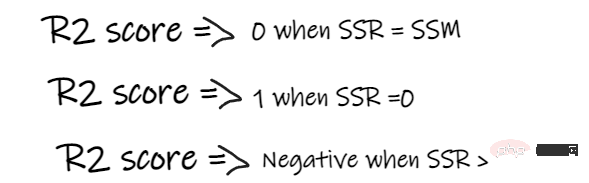

The R2 score usually gives a value between 0 and 1 (it can be negative for a very poor model) and can be interpreted in any context. It can be understood as the quality of the fit.

SSR is the sum of squared errors of the regression line, and SSM is the sum of squared errors of the mean line. R2 is computed as 1 - SSR/SSM, so it compares the regression line to the mean line.

- If the R2 score is 0, it means that our model gives the same results as the mean, so our model needs to be improved.

- If the R2 score is 1, the SSR/SSM term of the equation becomes 0, which can only happen if our model fits every data point without error.

- If the R2 score is negative, it means that the SSR/SSM term is greater than 1, which happens when SSR > SSM. This means that our model is worse than simply predicting the mean.

If the R2 score of our model is 0.8, we can say that the model explains 80% of the variance of the output. That is, 80% of the salary variation can be explained by the input (years of experience), but the remaining 20% is unexplained.

If our model has 2 features, working years and interview scores, then our model can explain 80% of the salary changes using these two input features.
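The three cases (R2 of 1, 0, and below 0) can be reproduced with a few lines of code that compute R2 as 1 - SSR/SSM. The salary values and the three sets of predictions are made-up numbers for illustration.

```python
import numpy as np

def r2_score(y_true, y_pred):
    """R2 = 1 - SSR / SSM."""
    ssr = np.sum((y_true - y_pred) ** 2)         # squared errors of the model
    ssm = np.sum((y_true - y_true.mean()) ** 2)  # squared errors of the mean line
    return 1.0 - ssr / ssm

salary = np.array([3.0, 4.0, 5.0, 6.0, 7.0])     # hypothetical salaries

perfect = salary.copy()                          # every point predicted exactly
mean_only = np.full_like(salary, salary.mean())  # always predict the mean
worse = salary[::-1].copy()                      # predictions in reversed order

print("perfect model:   ", r2_score(salary, perfect))    # 1.0
print("mean prediction: ", r2_score(salary, mean_only))  # 0.0
print("worse than mean: ", r2_score(salary, worse))      # -3.0
```

In the last case SSR is 40 and SSM is 10, so the ratio is 4 and the score is 1 - 4 = -3.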

Disadvantages of R2:

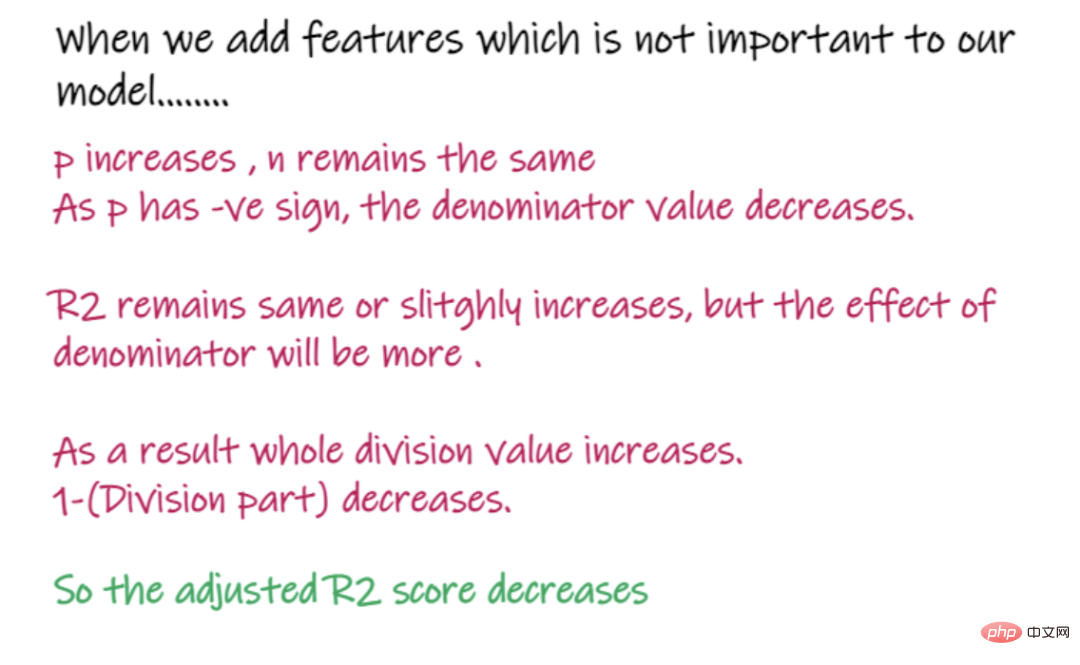

As the number of input features increases, R2 will tend to increase or remain unchanged, but will never decrease, even if the added features are not important for our model (e.g., if we add the temperature on the day of the interview to our example, R2 will not decrease even though the temperature is not important to the output).

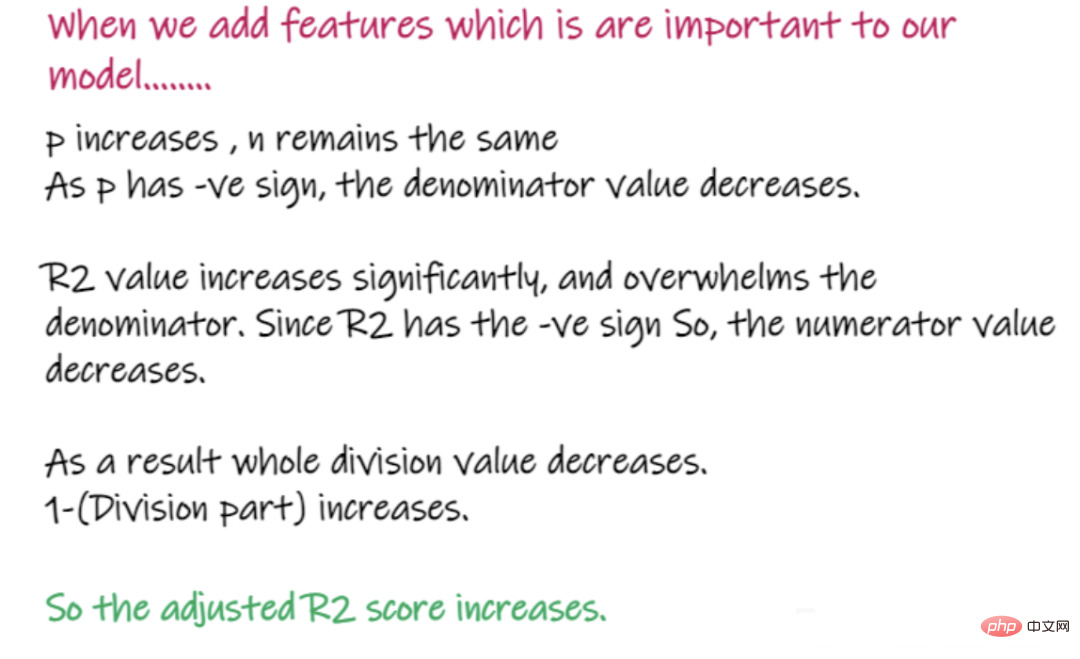

5. Adjusted R2 score:

In the above formula, R2 is the R2 score, n is the number of observations (rows), and p is the number of independent features. Adjusted R2 solves the problem of R2 described above.

When we add features that are less important to our model, like adding temperature to predict wages.....

When adding features that are important to the model, such as adding interview scores to predict salary...
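The sketch below computes both scores for a model with one useful feature and for the same model with an unrelated feature added. It assumes the standard definition, adjusted R2 = 1 - (1 - R2)(n - 1)/(n - p - 1), and uses synthetic experience, temperature, and salary data; all names and numbers are illustrative.

```python
import numpy as np

def r2_of_fit(X, y):
    """R2 of an ordinary least-squares fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    ssr = np.sum((y - design @ coef) ** 2)
    ssm = np.sum((y - y.mean()) ** 2)
    return 1.0 - ssr / ssm

def adjusted_r2(r2, n, p):
    """Adjusted R2 for n observations and p features (standard definition)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

rng = np.random.default_rng(6)
n = 50
experience = rng.uniform(0, 10, size=n)
temperature = rng.uniform(10, 35, size=n)   # unrelated to salary
salary = 2.0 * experience + 3.0 + rng.normal(scale=1.0, size=n)

X1 = experience.reshape(-1, 1)
X2 = np.column_stack([experience, temperature])

r2_1, r2_2 = r2_of_fit(X1, salary), r2_of_fit(X2, salary)
adj_1, adj_2 = adjusted_r2(r2_1, n, 1), adjusted_r2(r2_2, n, 2)

print("R2:          ", r2_1, "->", r2_2)    # never decreases
print("adjusted R2: ", adj_1, "->", adj_2)  # pays a penalty for the extra feature
```

Plain R2 cannot go down when the extra column is added, whereas adjusted R2 only rises if the new feature improves the fit by more than the penalty for the additional parameter.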

The above covers the important knowledge points of regression problems, the main indicators used to evaluate regression models, and their advantages and disadvantages. I hope it will be helpful to you.
