Based on cross-modal meta-transfer, the referring video object segmentation method from Meitu & Dalian University of Technology requires only a single stage


Introduction

Referring VOS (RVOS) is a newly emerging task. It aims to segment, from a video sequence, the objects that a reference text describes. Compared with semi-supervised video object segmentation, RVOS relies only on abstract language descriptions instead of pixel-level reference masks. This makes it a more convenient option for human-computer interaction, and it has therefore received widespread attention.

Paper link: https://www.aaai.org/AAAI22Papers/AAAI-1100.LiD.pdf

The main purpose of this research is to solve two major challenges faced in existing RVOS tasks:

- How to fuse text information with image information across modalities, so that scale consistency is maintained between the two modalities and the useful feature references provided by the text are fully integrated into the image features;

- How to abandon the two-stage strategy of existing methods (that is, first obtaining rough results frame by frame at the image level, then using those results as a reference and obtaining the final prediction through a refinement structure that enhances temporal information), and unify the entire RVOS task into a single-stage framework.

In this regard, this study proposes YOFO, an end-to-end RVOS framework based on cross-modal meta-transfer. Its main contributions and innovations are:

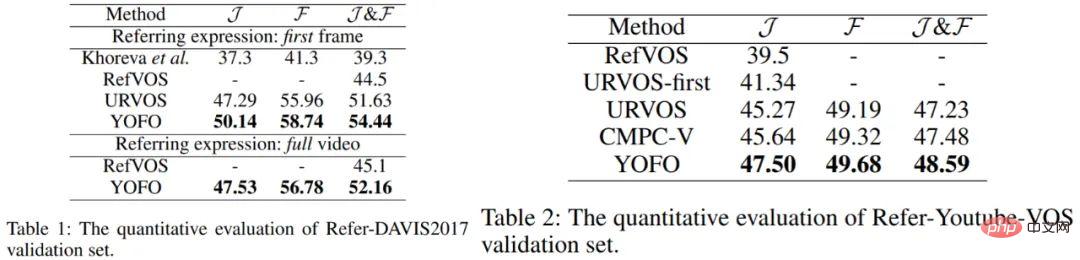

- Only single-stage inference is needed to obtain the segmentation of the video target directly from the reference text. The results on two mainstream datasets, Ref-DAVIS2017 and Ref-Youtube-VOS, surpass all current two-stage methods;

- A meta-transfer (Meta-Transfer) module is proposed to enhance temporal information, thereby achieving more target-focused feature learning;

- A multi-scale cross-modal feature mining (Multi-Scale Cross-Modal Feature Mining) module is proposed, which can fully integrate the useful features in language and images.

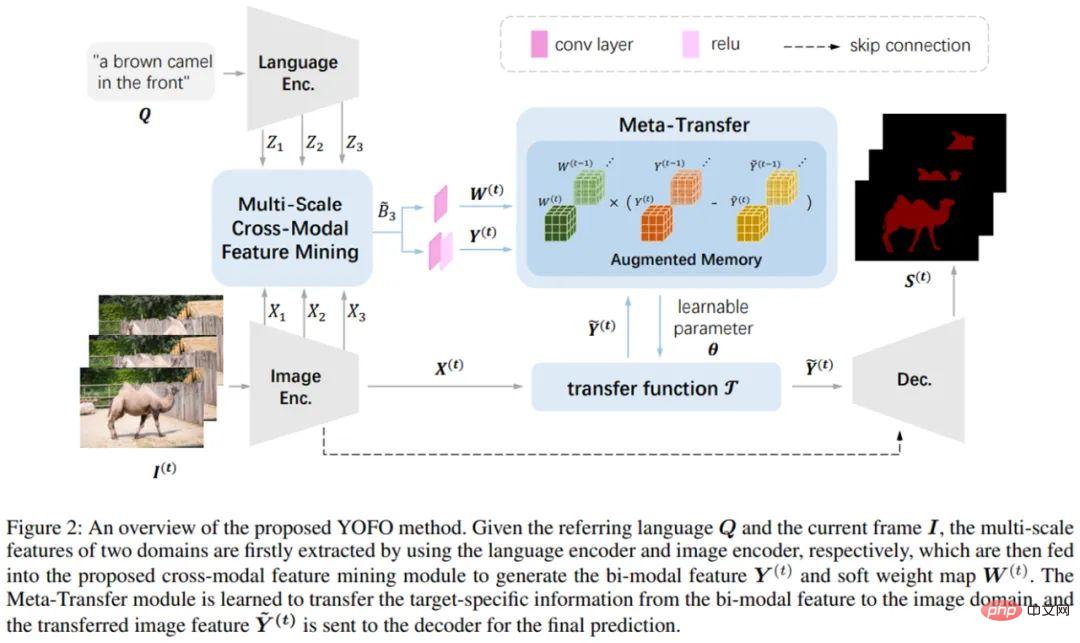

The main process of the YOFO framework is as follows: the input images and text first pass through the image encoder and the language encoder to extract features, which are then fused in the multi-scale cross-modal feature mining module. The fused bimodal features are simplified in the meta-transfer module, which contains a memory bank, to eliminate redundant information in the language features. At the same time, temporal information is preserved to enhance temporal correlation. Finally, the segmentation results are obtained through a decoder.

Figure 1: Main process of YOFO framework.

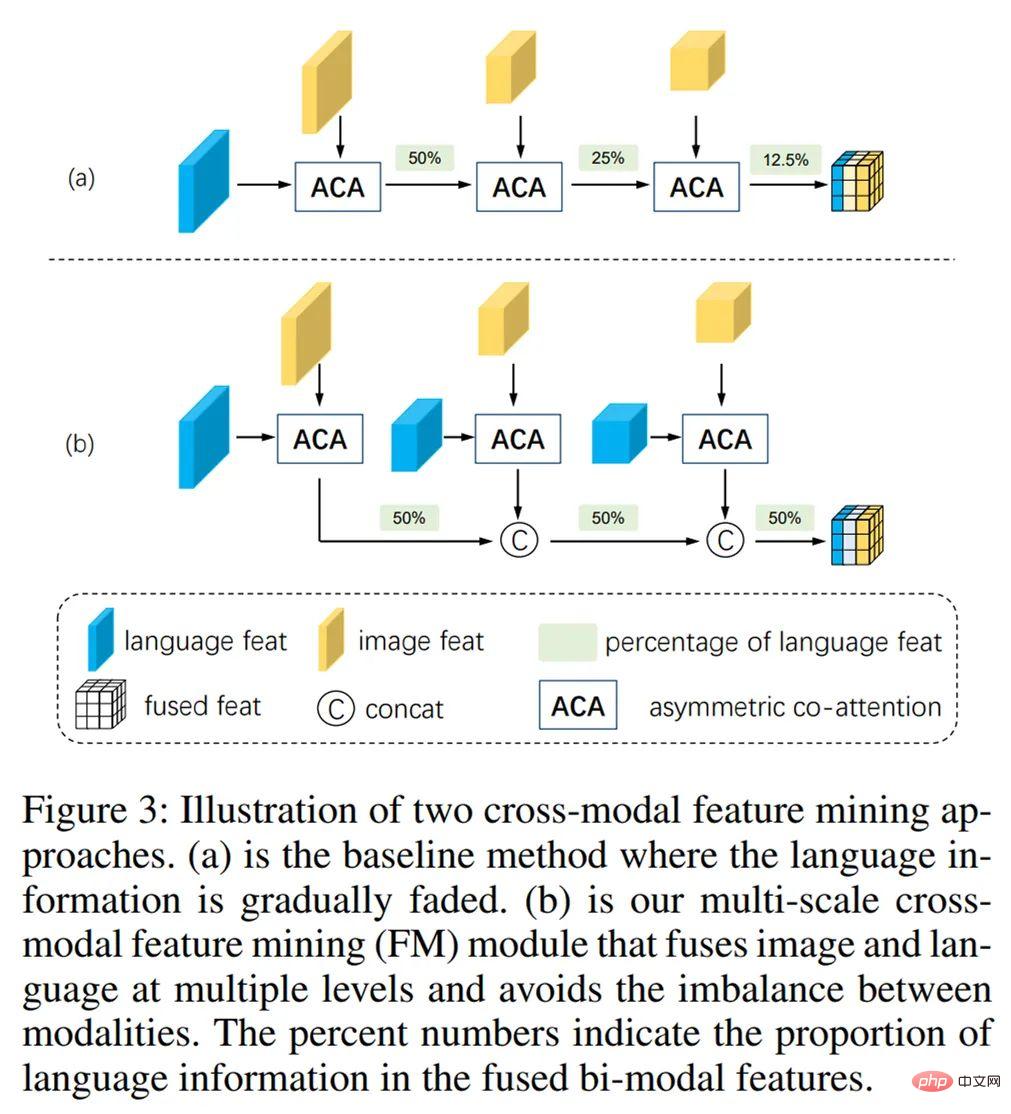

Multi-scale cross-modal feature mining module: By fusing the features of the two modalities at different scales, this module maintains consistency between the scale information conveyed by the image features and the language features. More importantly, it ensures that the language information is not diluted or overwhelmed by the multi-scale image information during fusion.

Figure 2: Multi-scale cross-modal feature mining module.
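The article does not spell out the fusion operator, so the following is only a minimal sketch of per-scale fusion under stated assumptions: a single sentence-level language vector is projected to each scale's channel width, normalized so that it carries the same magnitude at every scale, and multiplied element-wise into the image features. The function name `fuse_multiscale`, the `projections` matrices, and the multiplicative fusion are all hypothetical and are not taken from the paper.

```python
import numpy as np

def fuse_multiscale(image_feats, lang_vec, projections):
    """Fuse one language vector into image features at every scale.

    image_feats: list of arrays shaped (C_s, H_s, W_s), one per scale.
    lang_vec:    array shaped (D,), a sentence-level language feature.
    projections: list of arrays shaped (C_s, D); hypothetical per-scale
                 linear maps bringing the language vector to C_s channels.
    """
    fused = []
    for feat, proj in zip(image_feats, projections):
        lang_s = proj @ lang_vec                            # (C_s,)
        # Normalize so the language signal has unit norm at every scale
        # (an assumption made here to keep it from being diluted).
        lang_s = lang_s / (np.linalg.norm(lang_s) + 1e-8)
        # Broadcast the language vector over all spatial positions.
        fused.append(feat * lang_s[:, None, None])
    return fused

rng = np.random.default_rng(0)
image_feats = [rng.standard_normal((c, s, s)) for c, s in [(8, 16), (16, 8), (32, 4)]]
lang_vec = rng.standard_normal(12)
projections = [rng.standard_normal((f.shape[0], 12)) for f in image_feats]
fused = fuse_multiscale(image_feats, lang_vec, projections)
```

Each fused map keeps the shape of its image feature map, so a decoder that expects a feature pyramid could consume the result unchanged.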

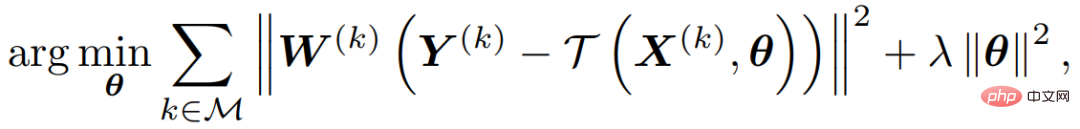

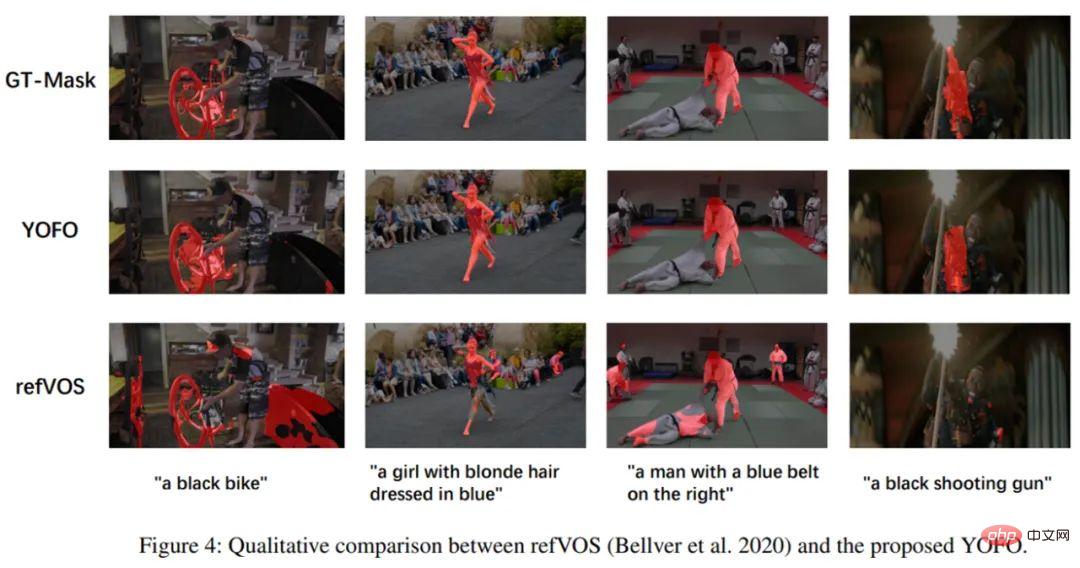

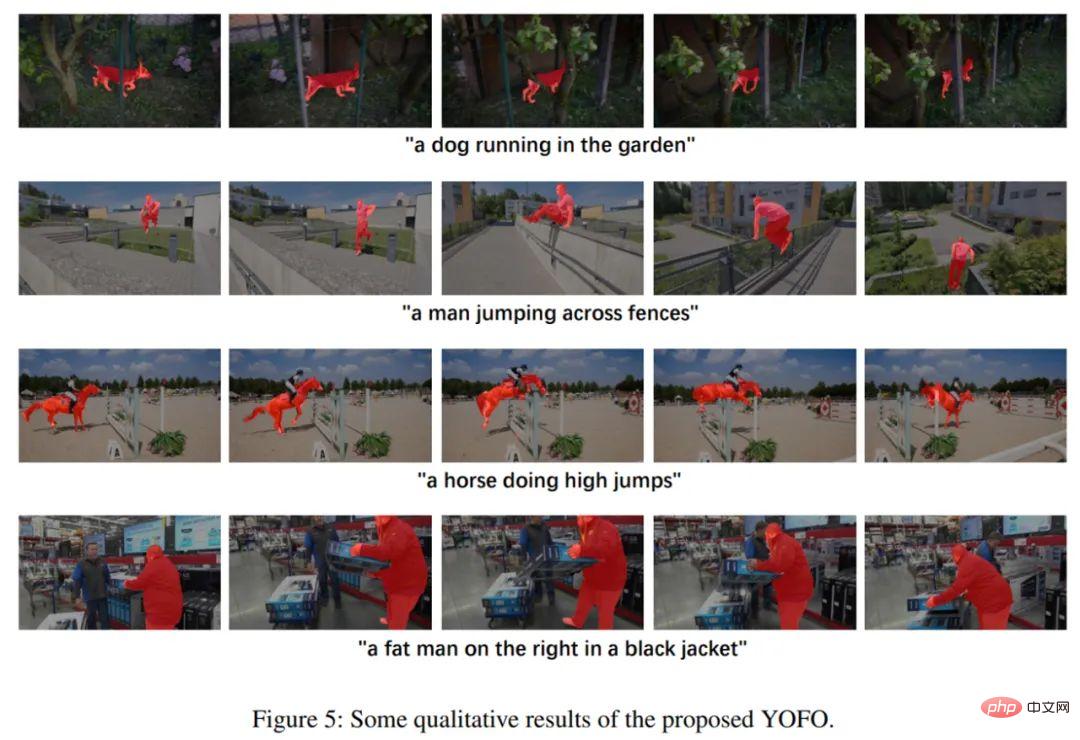

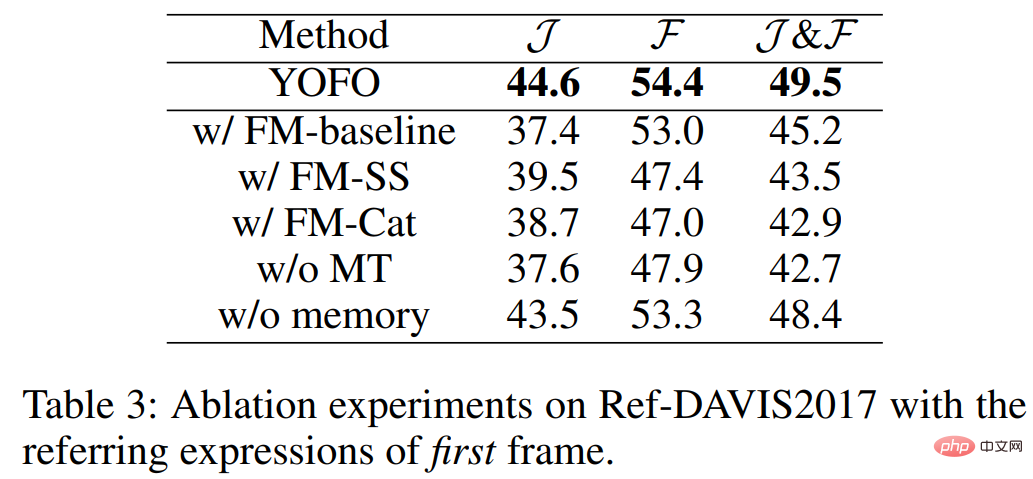

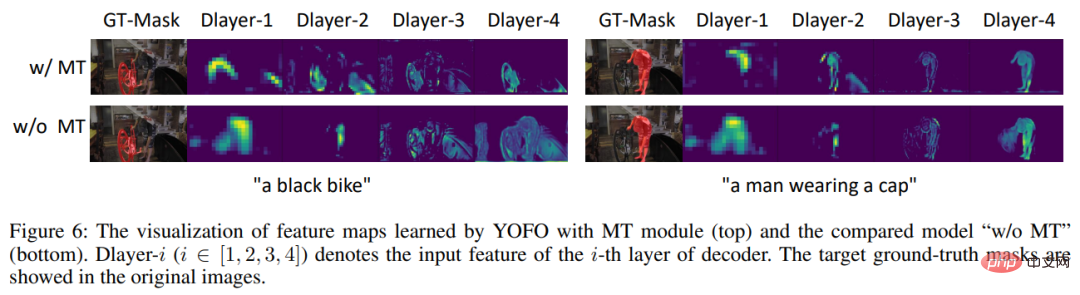

Meta-transfer module: A learning-to-learn strategy is adopted, and the process can be described simply as a mapping function. The transfer function is a convolution whose kernel is the parameter to be learned, and its optimization can be expressed as an objective function in which M represents a memory bank that stores historical information, W represents position-wise weights that give different attention to different positions in the feature, and Y represents the bimodal features of each video frame stored in the memory bank. This optimization maximizes the ability of the meta-transfer function to reconstruct the bimodal features, and it also allows the entire framework to be trained end-to-end.

Training and testing: The loss function used in training is the Lovász loss. The training sets are the two video datasets Ref-DAVIS2017 and Ref-Youtube-VOS; in addition, the static dataset Ref-COCO is used for auxiliary training, with random affine transformations applied to simulate video data. The meta-transfer process is performed during both training and prediction, and the entire network runs at 10 FPS on a 1080 Ti.

The method achieves excellent results on the two mainstream RVOS datasets (Ref-DAVIS2017 and Ref-Youtube-VOS). The quantitative indicators and some visualizations are as follows:

Figure 3: Quantitative indicators on two mainstream datasets.

Figure 4: Visualization on the VOS dataset.

Figure 5: Other visualization effects of YOFO.
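To illustrate how random affine transformations can turn a static image into pseudo-video for auxiliary training, here is a minimal NumPy sketch. The parameter ranges, the nearest-neighbour sampling, and the helper names (`random_affine`, `warp_nearest`, `simulate_clip`) are assumptions made for illustration; the article does not describe the authors' actual augmentation settings.

```python
import numpy as np

def random_affine(rng, max_rot_deg=10.0, max_scale=0.1, max_shift=0.1):
    """Sample a 2x3 affine matrix in normalized [-1, 1] coordinates."""
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    cos, sin = np.cos(theta) * scale, np.sin(theta) * scale
    return np.array([[cos, -sin, tx], [sin, cos, ty]])

def warp_nearest(img, affine):
    """Warp an (H, W) or (H, W, C) array with nearest-neighbour sampling.

    The affine maps output coordinates to source coordinates (inverse warp);
    pixels that fall outside the source are filled with zeros.
    """
    h, w = img.shape[:2]
    ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # (3, H*W)
    src = affine @ coords                                        # (2, H*W)
    sx = np.rint((src[0] + 1) * (w - 1) / 2).astype(int)
    sy = np.rint((src[1] + 1) * (h - 1) / 2).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    flat = img.reshape(h * w, -1)
    out = np.zeros_like(flat)
    out[valid] = flat[sy[valid] * w + sx[valid]]
    return out.reshape(img.shape)

def simulate_clip(image, mask, num_frames=3, seed=0):
    """Build a pseudo-video: the original frame plus warped copies.

    The same affine is applied to the image and its mask so that the
    annotation stays aligned with the pixels.
    """
    rng = np.random.default_rng(seed)
    frames, masks = [image], [mask]
    for _ in range(num_frames - 1):
        affine = random_affine(rng)
        frames.append(warp_nearest(image, affine))
        masks.append(warp_nearest(mask, affine))
    return frames, masks

rng = np.random.default_rng(1)
image = rng.random((32, 32, 3))
mask = np.zeros((32, 32), dtype=np.uint8)
mask[8:24, 8:24] = 1
frames, masks = simulate_clip(image, mask, num_frames=3)
```

Nearest-neighbour sampling is used here so that the warped mask stays binary; a real pipeline would more likely use bilinear interpolation for the image and nearest-neighbour only for the mask.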

Figure 6: Effectiveness of feature mining module (FM) and meta-transfer module (MT).

Figure 7: Comparison of decoder output features before and after using the MT module.
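The idea of learning a convolution kernel by minimizing a position-weighted reconstruction error over a memory bank can be sketched as follows. This is a deliberately simplified stand-in, not the authors' formulation: it assumes a 1x1 convolution (so the kernel is a plain matrix `T`), assumes paired input features `X` and target bimodal features `Y` drawn from the memory bank, and swaps in a closed-form weighted ridge regression in place of whatever optimizer the paper uses. The names `solve_transfer_kernel`, `apply_kernel`, and the regularizer `lam` are hypothetical.

```python
import numpy as np

def solve_transfer_kernel(X, Y, w, lam=1e-3):
    """Closed-form weighted ridge solution for a 1x1 convolution kernel.

    X: (N, C_in)  input features at N memory positions (assumed pairing).
    Y: (N, C_out) bimodal features to reconstruct at the same positions.
    w: (N,)       non-negative per-position weights.
    Minimizes sum_i w_i * ||T x_i - y_i||^2 + lam * ||T||_F^2 over T.
    """
    Xw = X * w[:, None]
    gram = X.T @ Xw + lam * np.eye(X.shape[1])   # sum_i w_i x_i x_i^T + lam*I
    cross = Y.T @ Xw                             # sum_i w_i y_i x_i^T
    # T = cross @ inv(gram); gram is symmetric, so solve the transposed system.
    return np.linalg.solve(gram, cross.T).T

def apply_kernel(T, feat):
    """Apply the 1x1 kernel T (C_out, C_in) to a (C_in, H, W) feature map."""
    return np.einsum("oc,chw->ohw", T, feat)

# Toy memory bank: 3 stored frames with 4-channel 8x8 features.
rng = np.random.default_rng(0)
memory_feats = rng.standard_normal((3, 4, 8, 8))
X = memory_feats.transpose(0, 2, 3, 1).reshape(-1, 4)   # (192, 4)
T_true = rng.standard_normal((4, 4))
Y = X @ T_true.T                                        # synthetic targets
w = rng.uniform(0.5, 1.5, size=X.shape[0])              # position weights

T = solve_transfer_kernel(X, Y, w, lam=1e-8)
out = apply_kernel(T, memory_feats[0])                  # (4, 8, 8)
```

On this synthetic data the recovered `T` matches `T_true` closely because the targets were generated by an exact linear map; with real features the fit would only be approximate, and the weights `w` would decide which positions the kernel reconstructs best.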
