Super realistic rendering! Unreal Engine technology expert explains the global illumination system Lumen

Real-time global illumination (Real-time GI) has always been the Holy Grail of computer graphics.

Over the years, the industry has also proposed various methods to solve this problem.

Common approaches constrain the problem by relying on certain assumptions, such as static geometry or a coarse scene representation, or by tracing coarse probes and interpolating lighting between them.

In Unreal Engine, Lumen, the global illumination and reflections system, was created by Krzysztof Narkowicz and Daniel Wright.

The goal was to build something different from its predecessors: a solution with unified lighting and lighting quality close to baked lighting.

At SIGGRAPH 2022, Krzysztof Narkowicz and the team talked about their journey building Lumen.

Software Ray Tracing - Height Field

At the time, hardware ray tracing lacked broad GPU support. It was unclear how fast hardware ray tracing would be, or even whether the new generation of consoles would support it.

The team therefore turned to software ray tracing, which proved to be a really useful tool for scaling down and for supporting scenes with many overlapping instances.

Software ray tracing allows a variety of tracing structures, such as triangles, distance fields, surfels, or height fields.

Narkowicz ruled out triangles early and briefly investigated surfels, but for geometry that needs a high surfel density to represent, updating or tracing surfels is quite expensive.

After initial exploration, height fields looked like the best fit: they map well to the hardware, provide a surface representation, and offer simple continuous LOD.

Tracing them is also very fast, because all the parallax occlusion mapping (POM) algorithms, such as min-max quadtrees, can be reused.
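Since height-field tracing can reuse POM-style searches, here is a minimal sketch of the simplest one: a fixed-step linear march followed by binary refinement. It assumes a height field stored as nested lists of heights in [0, 1], nearest-texel sampling, and a ray already transformed into card space; all names are hypothetical, and a min-max quadtree would replace the fixed steps with hierarchical empty-space skipping.

```python
# Sketch only: names and data layout are assumptions, not Lumen's code.

def sample_height(hf, u, v):
    """Nearest-texel lookup in a height field stored as rows of floats in [0, 1]."""
    rows, cols = len(hf), len(hf[0])
    i = min(max(int(v * rows), 0), rows - 1)
    j = min(max(int(u * cols), 0), cols - 1)
    return hf[i][j]


def point_at(origin, direction, t):
    return tuple(origin[a] + t * direction[a] for a in range(3))


def march_height_field(hf, origin, direction, steps=64, refine=8):
    """Return the (u, v, h) hit point of a ray against the height field, or None.

    origin/direction are in card space: u and v span [0, 1] across the card and
    h is height in [0, 1]. The ray must point downward (direction[2] < 0).
    """
    if direction[2] >= 0:
        return None
    t_end = origin[2] / -direction[2]  # where the ray reaches the card's base (h = 0)
    dt = t_end / steps
    prev_t = 0.0
    for k in range(1, steps + 1):
        t = k * dt
        u, v, h = point_at(origin, direction, t)
        if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
            return None  # the ray left the card footprint
        if h <= sample_height(hf, u, v):
            # The surface was crossed between prev_t and t: refine by bisection.
            lo, hi = prev_t, t
            for _ in range(refine):
                mid = 0.5 * (lo + hi)
                mu, mv, mh = point_at(origin, direction, mid)
                if mh <= sample_height(hf, mu, mv):
                    hi = mid
                else:
                    lo = mid
            return point_at(origin, direction, hi)
        prev_t = t
    return None
```

A straight-down ray over a flat height field at 0.5 returns the point at height 0.5; a ray that leaves the card before crossing the surface returns None.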

Multiple height fields can also represent complex geometry, somewhat like a rasterized bounding volume hierarchy.

It is also interesting to think of a height field as an acceleration structure for surfels: each texel is a surfel constrained to a regular grid.
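The surfel view can be sketched by turning each texel into a surfel whose X/Y position is fixed by the grid and whose height and normal come from the data. This assumes a card facing +Z with hypothetical `width`, `length`, and `depth` parameters and finite-difference normals; it illustrates the idea rather than Lumen's code.

```python
import math

# Sketch only: the card orientation (+Z) and parameter names are assumptions.


def texels_to_surfels(hf, width, length, depth):
    """Interpret each height-field texel as a surfel pinned to a regular grid.

    hf holds heights in [0, 1]; the card covers width x length in X/Y and its
    heights are scaled by depth along +Z. Returns (position, normal, area) tuples.
    """
    rows, cols = len(hf), len(hf[0])
    dx, dy = width / cols, length / rows
    surfels = []
    for i in range(rows):
        for j in range(cols):
            # The grid fixes X/Y at the texel center; only the height varies.
            pos = ((j + 0.5) * dx, (i + 0.5) * dy, hf[i][j] * depth)
            # Slope from finite differences (one-sided at the borders).
            j0, j1 = max(j - 1, 0), min(j + 1, cols - 1)
            i0, i1 = max(i - 1, 0), min(i + 1, rows - 1)
            sx = (hf[i][j1] - hf[i][j0]) * depth / ((j1 - j0) * dx) if j1 > j0 else 0.0
            sy = (hf[i1][j] - hf[i0][j]) * depth / ((i1 - i0) * dy) if i1 > i0 else 0.0
            n = (-sx, -sy, 1.0)
            inv_len = 1.0 / math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
            normal = tuple(c * inv_len for c in n)
            surfels.append((pos, normal, dx * dy))  # area = grid cell footprint
    return surfels
```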

Besides height, Lumen stores other surface properties, such as albedo and lighting, so that lighting can be evaluated at every hit.

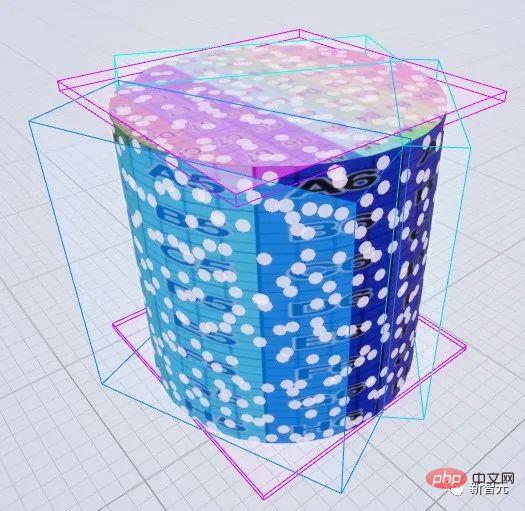

The developers called this complete decal projection, together with its surface data, a card; where a card is placed is its capture position.
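A minimal sketch of a card as a data structure, assuming a single axis-aligned orthographic projection box, nearest-texel sampling, and hypothetical field names (Lumen's real card layout is not described here). Projecting a hit point into the card's box gives the UV used to fetch surface data such as albedo.

```python
from dataclasses import dataclass, field

# Sketch only: a hypothetical card layout, not Lumen's actual data structure.


@dataclass
class Card:
    """Hypothetical card: an axis-aligned projection box plus captured surface data."""
    box_min: tuple
    box_max: tuple
    axis: int                 # projection axis: 0 = X, 1 = Y, 2 = Z
    resolution: int           # texels per side
    height: list = field(default_factory=list)  # resolution x resolution floats
    albedo: list = field(default_factory=list)  # resolution x resolution RGB tuples

    def project(self, p):
        """Map a world-space point to (u, v, depth) in the card's [0, 1] box space."""
        ua, va = [k for k in range(3) if k != self.axis]

        def norm(k):
            return (p[k] - self.box_min[k]) / (self.box_max[k] - self.box_min[k])

        return norm(ua), norm(va), norm(self.axis)

    def texel(self, u, v):
        """Nearest texel (row, column) for card UV coordinates, clamped to the card."""
        r = self.resolution
        return min(max(int(v * r), 0), r - 1), min(max(int(u * r), 0), r - 1)

    def sample_albedo(self, p):
        """Orthographically project p onto the card and fetch its albedo."""
        u, v, _ = self.project(p)
        row, col = self.texel(u, v)
        return self.albedo[row][col]
```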

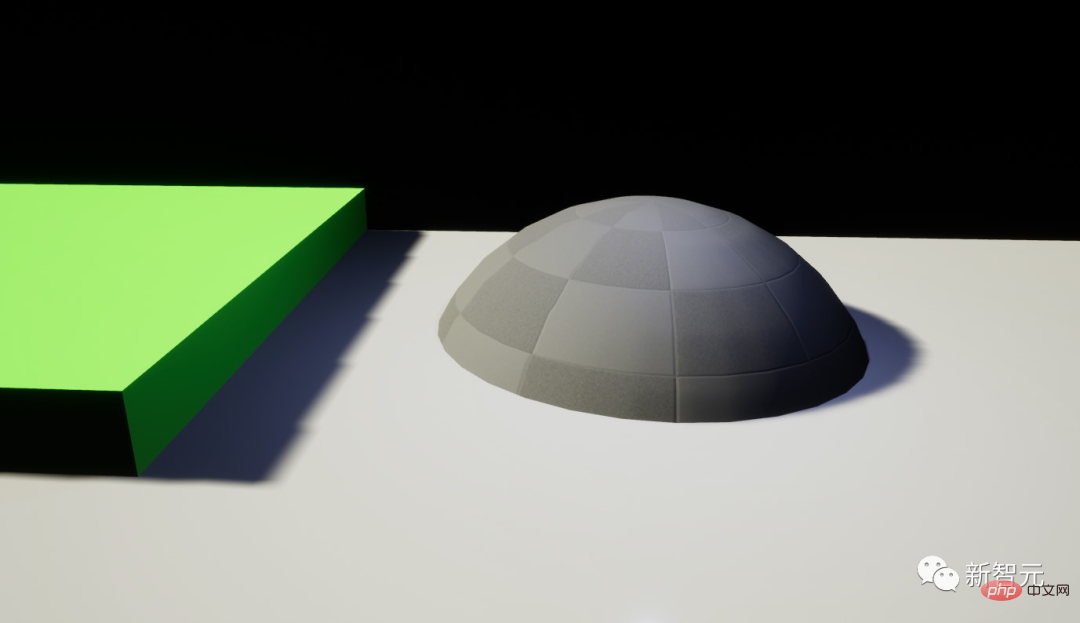

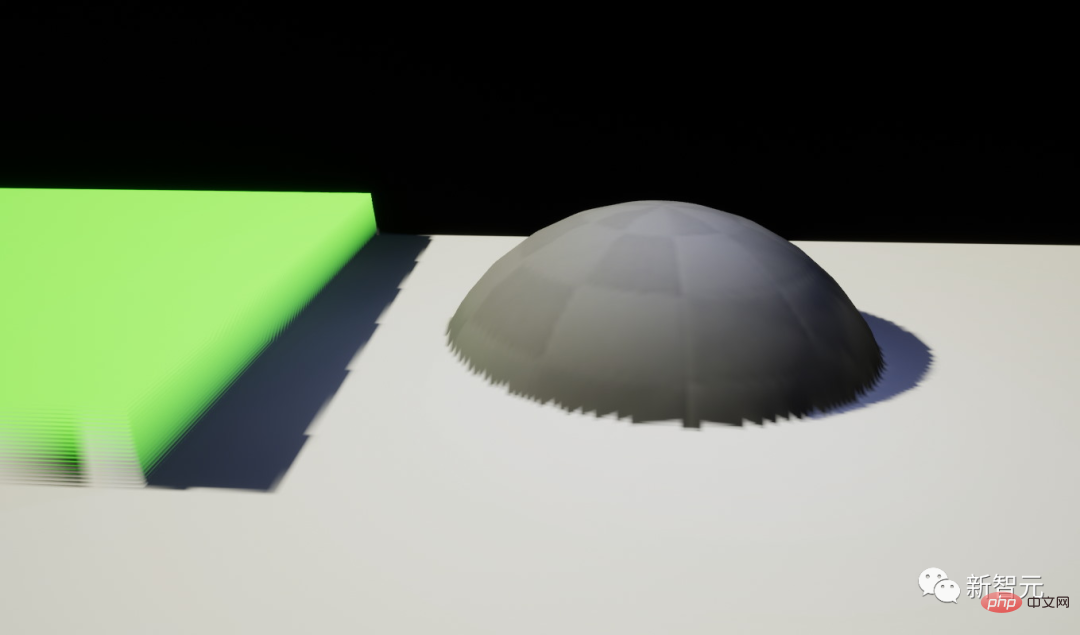

Rasterized triangles

Raymarched cards (height field)

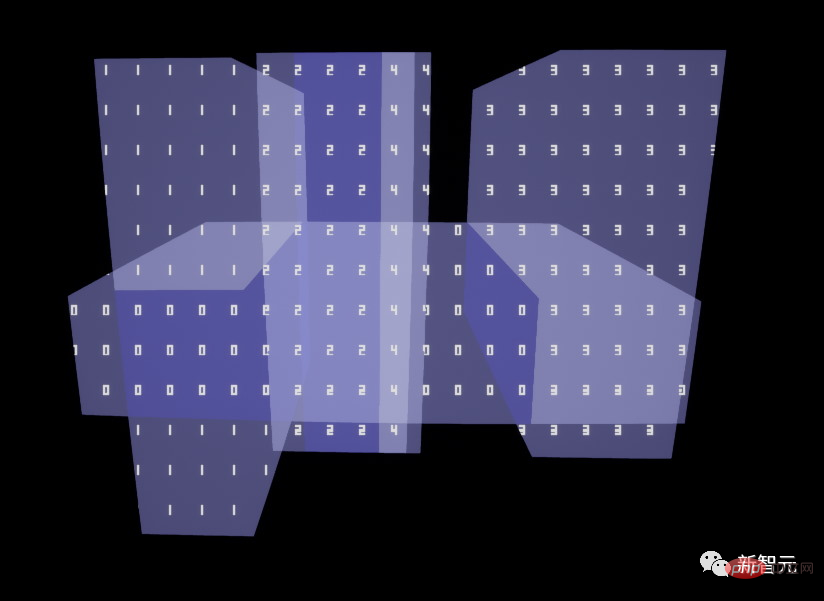

Raymarching every card in the scene for each ray is too slow, so an acceleration structure over the cards is needed. The developers chose a 4-wide BVH (four children per node), rebuilt for the whole scene every frame on the CPU and uploaded to the GPU.
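A sketch of the per-frame CPU build, assuming cards are represented by their axis-aligned bounding boxes and using a simple sorted-centroid split into four groups (not SAH, and not necessarily what Lumen does). The output is a flat list of nodes, a layout that is easy to upload to a GPU buffer; `build_bvh4` and the node format are hypothetical.

```python
# Sketch only: split heuristic and node format are assumptions.


def union_bounds(boxes):
    """Smallest AABB (lo, hi) enclosing a list of AABBs."""
    lo = tuple(min(b[0][k] for b in boxes) for k in range(3))
    hi = tuple(max(b[1][k] for b in boxes) for k in range(3))
    return lo, hi


def build_bvh4(card_boxes):
    """Build a flattened 4-wide BVH over card AABBs; node 0 is the root.

    Each node is a list of up to four children (bounds, kind, index), where kind is
    "card" (index into card_boxes) or "node" (index into the returned list).
    """
    nodes = []

    def build(ids):
        node_index = len(nodes)
        nodes.append(None)  # reserve this node's slot before recursing
        lo, hi = union_bounds([card_boxes[i] for i in ids])
        axis = max(range(3), key=lambda k: hi[k] - lo[k])  # longest extent
        # Sort by centroid along that axis (min + max is twice the centroid).
        ids = sorted(ids, key=lambda i: card_boxes[i][0][axis] + card_boxes[i][1][axis])
        children = []
        for g in range(4):
            group = ids[g * len(ids) // 4:(g + 1) * len(ids) // 4]
            if len(group) == 1:
                children.append((card_boxes[group[0]], "card", group[0]))
            elif group:
                bounds = union_bounds([card_boxes[i] for i in group])
                children.append((bounds, "node", build(group)))
        nodes[node_index] = children
        return node_index

    if card_boxes:
        build(list(range(len(card_boxes))))
    return nodes
```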

In the trace shader, the BVH is then traversed with a stack, and child nodes are dynamically ordered so that the closest ones are traversed first.
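The traversal can be sketched as follows, assuming a hypothetical flat node list where each child is `(bounds, kind, index)`, and a caller-supplied `hit_card` callback standing in for the height-field march. Children are pushed farthest-first so the nearest one is popped next, and any entry that starts beyond the best hit so far is skipped.

```python
import math

# Sketch only: node format and hit_card callback are assumptions, not Lumen's shader.


def ray_box(origin, direction, lo, hi):
    """Slab test: entry distance t >= 0 of the ray into box [lo, hi], or None on a miss."""
    t0, t1 = 0.0, math.inf
    for k in range(3):
        if direction[k] == 0.0:
            # Ray parallel to this slab: it must already lie between the planes.
            if not (lo[k] <= origin[k] <= hi[k]):
                return None
            continue
        a = (lo[k] - origin[k]) / direction[k]
        b = (hi[k] - origin[k]) / direction[k]
        t0 = max(t0, min(a, b))
        t1 = min(t1, max(a, b))
    return t0 if t0 <= t1 else None


def trace_bvh4(nodes, origin, direction, hit_card):
    """Stack-based traversal of a flattened 4-wide BVH, nearest children first.

    nodes[i] is a list of up to four children (bounds, kind, index), where bounds
    is (lo, hi) and kind is "node" or "card"; node 0 is the root. hit_card(index)
    returns the hit distance against that card (e.g. a height-field march) or None.
    """
    best_t, best_card = math.inf, None
    stack = [(0.0, "node", 0)]
    while stack:
        t_entry, kind, index = stack.pop()
        if t_entry >= best_t:
            continue  # this entry starts beyond the closest hit found so far
        if kind == "card":
            t_hit = hit_card(index)
            if t_hit is not None and t_hit < best_t:
                best_t, best_card = t_hit, index
            continue
        hits = []
        for bounds, child_kind, child_index in nodes[index]:
            t = ray_box(origin, direction, *bounds)
            if t is not None and t < best_t:
                hits.append((t, child_kind, child_index))
        # Push the farthest child first so the nearest one is popped next.
        stack.extend(sorted(hits, reverse=True))
    return (best_t, best_card) if best_card is not None else None
```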

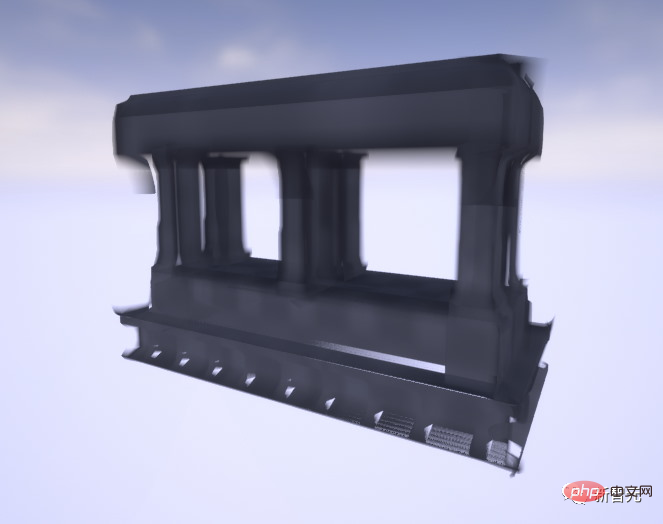

BVH Debug View

Capture Position

The trickiest part is placing the height fields so that the entire mesh is captured. Krzysztof Narkowicz said: "One idea was to place cards on the GPU using the global distance field. Every frame, we traced a small set of primary rays to find the ones not covered by any card."

Next, for each uncovered ray, we walked the global distance field, using surface gradients to determine an optimal card orientation and extent, and spawned a new card.
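As a rough sketch of the gradient step, assuming the global distance field is available as a Python function and that a card simply faces the dominant axis of the gradient (a simplification; how Lumen chose orientation and extent is not detailed here), the distance-field gradient can be estimated with central differences:

```python
import math

# Sketch only: the orientation rule and helper names are assumptions.


def sdf_gradient(sdf, p, eps=1e-3):
    """Central-difference gradient of a signed distance function at point p."""
    grad = []
    for k in range(3):
        ahead, behind = list(p), list(p)
        ahead[k] += eps
        behind[k] -= eps
        grad.append((sdf(ahead) - sdf(behind)) / (2.0 * eps))
    return grad


def card_orientation(sdf, p):
    """Return (axis, sign) of an axis-aligned card facing along the gradient at p."""
    g = sdf_gradient(sdf, p)
    axis = max(range(3), key=lambda k: abs(g[k]))
    return axis, (1 if g[axis] > 0 else -1)


def unit_sphere(q):
    """Example distance field: a unit sphere at the origin."""
    return math.sqrt(q[0] ** 2 + q[1] ** 2 + q[2] ** 2) - 1.0
```

On the unit sphere, a hit at (0, -1, 0) yields a card facing -Y, i.e. `(1, -1)`.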

Card placement from the global distance field

On the one hand, this approach could generate cards for the entire merged scene without generating cards per mesh. On the other hand, it proved quite finicky in practice, producing different results every time the camera moved.

Another idea was to generate cards per mesh as a mesh import step, by building a geometry BVH and converting each node into N cards.

As follows:

Rasterized triangles

Raymarched cards (height field)

Card placement view

This approach struggled to find good placements, because BVH nodes turned out to be poor proxies for placing cards.

The team then tried another idea: following UV unwrapping techniques, they attempted to cluster surface elements.

Because they were dealing with the millions of polygons that Nanite provides, they worked with surfels instead of triangles.

They also switched to less constrained, freely oriented cards to try to match the surface better.

Freely oriented card placement

This approach worked very well for simple shapes, but ran into convergence problems on more complex shapes.

In the end, Narkowicz switched back to axis-aligned cards, but this time generated per mesh from binned clusters of surface elements.
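A simplified sketch of per-mesh, axis-aligned card generation by binning, assuming surfels are given as `(position, normal)` pairs: each surfel goes into one of six bins by its dominant normal direction, and each bin's bounds become one card. Lumen's actual clustering heuristics are not described here, so treat this as an illustration only.

```python
# Sketch only: six-bin clustering is an assumed simplification, not Lumen's heuristic.


def bin_surfels_to_cards(surfels):
    """Bin (position, normal) surfels into six axis directions; fit one card per bin.

    Returns {(axis, sign): (box_min, box_max)}: each card faces +/- that axis and
    covers the bounds of the surfels in its bin.
    """
    bins = {}
    for pos, normal in surfels:
        axis = max(range(3), key=lambda k: abs(normal[k]))
        key = (axis, 1 if normal[axis] > 0 else -1)
        bins.setdefault(key, []).append(pos)
    cards = {}
    for key, points in bins.items():
        box_min = tuple(min(p[k] for p in points) for k in range(3))
        box_max = tuple(max(p[k] for p in points) for k in range(3))
        cards[key] = (box_min, box_max)
    return cards
```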

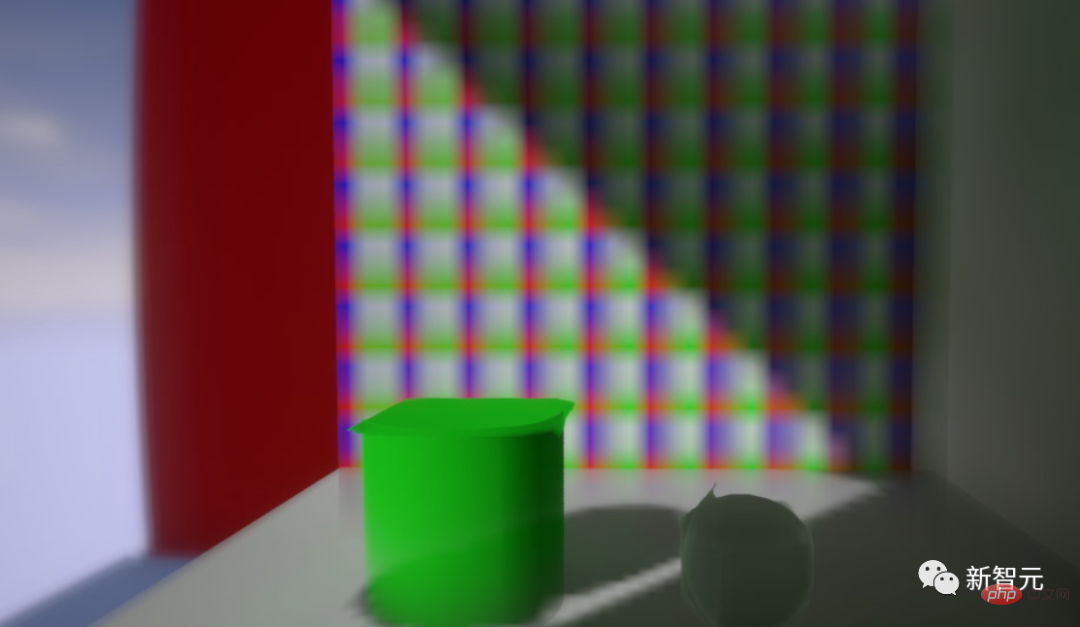

Cone Tracing

A unique property of height-field tracing is that it also supports cone tracing.

Cone tracing is very effective at reducing noise, because a single prefiltered cone trace stands in for thousands of individual rays.

Ray Tracing

Cone Tracing

For each card, the developers also store a full prefiltered mip chain of surface height, lighting, and material properties.

During tracing, the appropriate mip level is selected based on the cone footprint, and the ray is marched against that mip.
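The mip selection can be sketched with the usual cone-footprint formula: a cone with half-angle theta has diameter 2 * t * tan(theta) at distance t, and the mip whose texel size matches that diameter is log2(diameter / texel_size). The function below assumes the mip-0 texel size is given in world units and that each mip halves resolution; the name is hypothetical.

```python
import math

# Sketch only: the function name and parameter conventions are assumptions.


def cone_mip_level(t, half_angle, texel_size, num_mips):
    """Choose a (fractional) mip level whose texel size matches the cone footprint."""
    footprint = 2.0 * t * math.tan(half_angle)  # cone diameter at distance t
    if footprint <= texel_size:
        return 0.0  # footprint smaller than a mip-0 texel: use full resolution
    return min(math.log2(footprint / texel_size), num_mips - 1.0)
```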

Tracing edges without and with jams

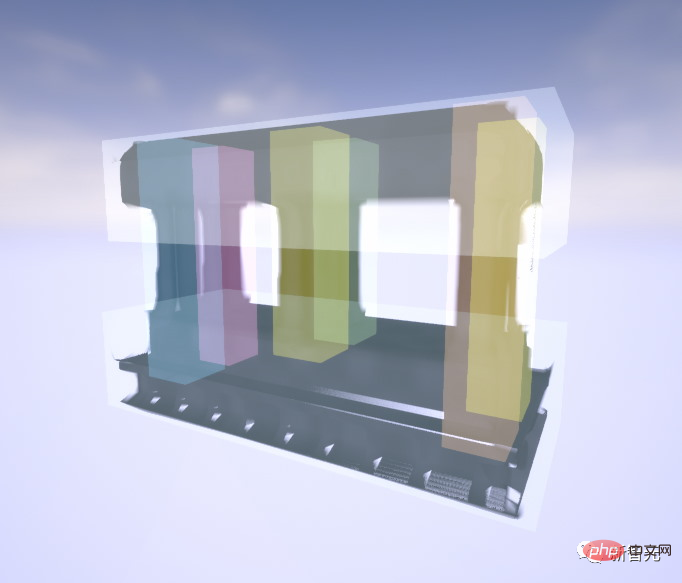

Merged scene representation

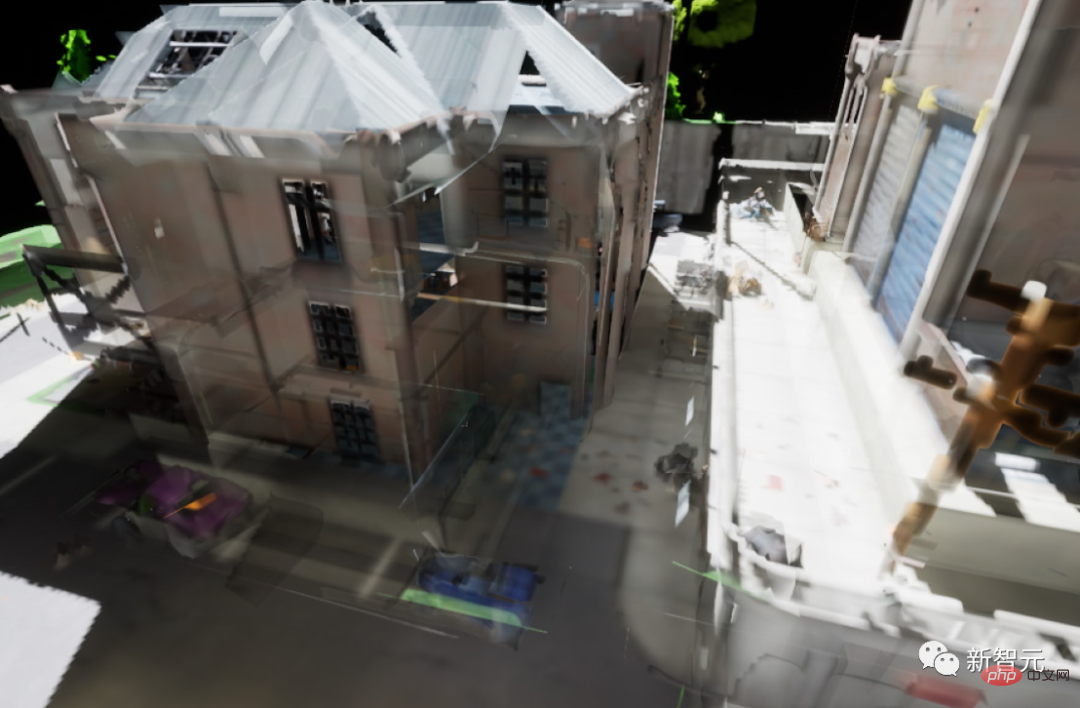

Tracing large numbers of incoherent rays in software is very slow. Ideally, a single global structure would be used instead of multiple height fields.

As the cone footprint grows, an exact scene representation is no longer needed and can be replaced by a coarser, faster approximation.

A more complex scene, with dozens of cards to trace per ray

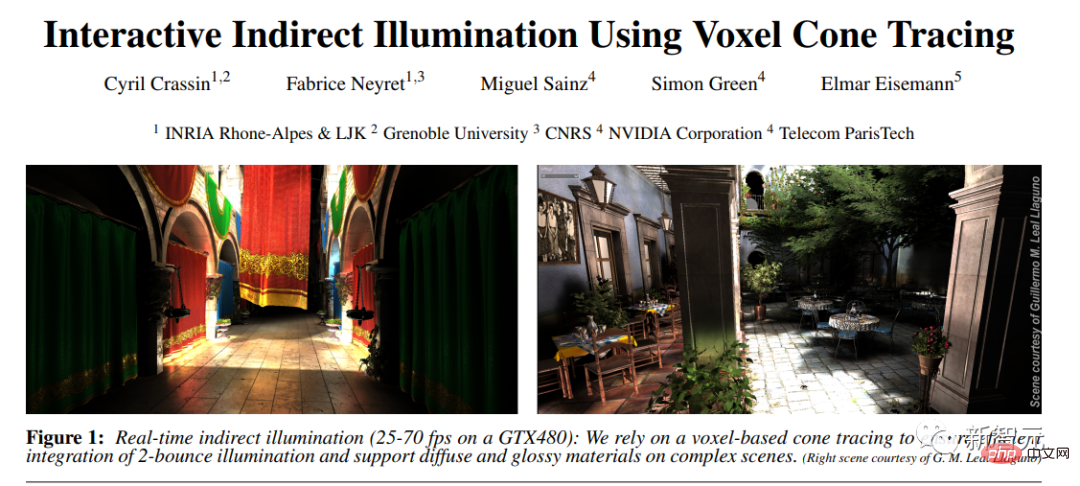

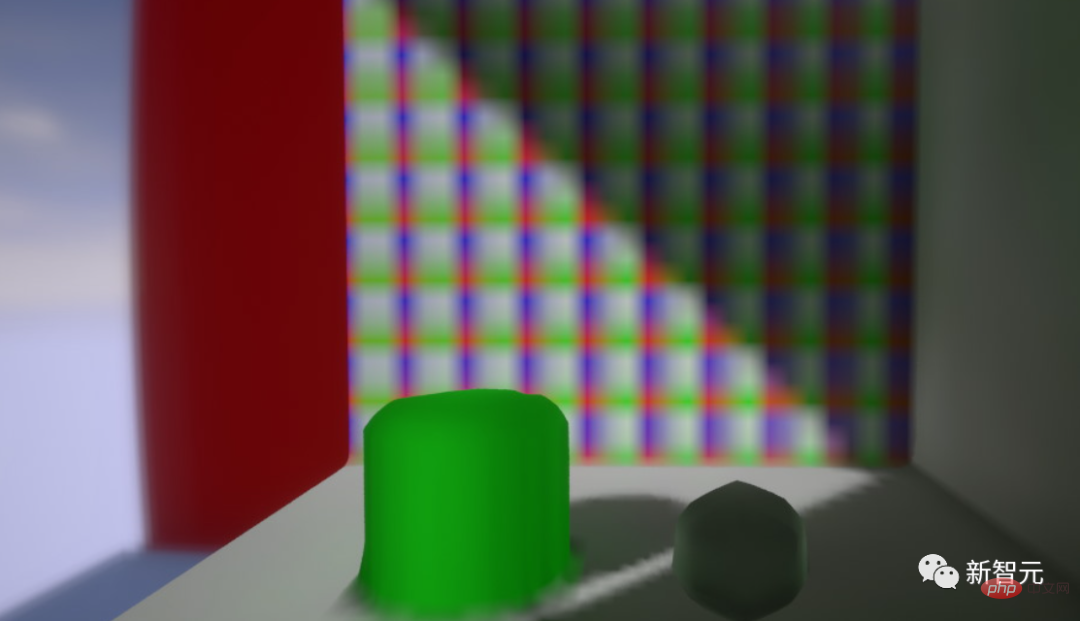

The first successful approach was pure voxel cone tracing, where the entire scene is voxelized at runtime, as in the classic paper "Interactive Indirect Illumination Using Voxel Cone Tracing".

Rasterized triangles

Raymarched cards (height field)

Voxel cone tracing

Raymarched cards continued with voxel cone tracing

The main drawback of this method is leaking caused by overly aggressive merging of scene geometry, which is especially noticeable when tracing the coarse, low-resolution mips.

The first technique for reducing leaking is to trace the global distance field and sample voxels only near surfaces. Opacity accumulates as samples are taken along the trace, and by the time tracing stops it reaches 1. Because sampling always happens close to geometry, this reduces leaking.
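The distance-field-gated accumulation can be sketched as standard front-to-back compositing. This assumes the cone samples have already been generated near-to-far as `(distance_to_surface, footprint_radius, radiance, opacity)` tuples with scalar radiance; the gating rule (skip the voxel fetch when the distance field says no surface lies within the footprint) and the early-out threshold are illustrative choices, not Lumen's exact code.

```python
# Sketch only: sample format, gating rule, and threshold are assumptions.


def cone_trace(samples, max_opacity=0.99):
    """Front-to-back compositing of voxel samples along a cone.

    samples: iterable of (distance_to_surface, footprint_radius, radiance, opacity),
    ordered from near to far. Returns (accumulated radiance, accumulated opacity).
    """
    radiance, alpha = 0.0, 0.0
    for dist, radius, voxel_radiance, voxel_alpha in samples:
        if dist > radius:
            continue  # distance field reports empty space here: skip the voxel fetch
        radiance += (1.0 - alpha) * voxel_alpha * voxel_radiance
        alpha += (1.0 - alpha) * voxel_alpha
        if alpha >= max_opacity:
            break  # effectively opaque: stop tracing
    return radiance, alpha
```

The first sample below lies far from any surface, so it is skipped even though it is bright; the trace stops once opacity reaches 1.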

The second technique is to voxelize mesh interiors. This greatly reduces leaking through thick walls, though it can also cause some over-occlusion.

Other experiments included tracing voxel bit bricks and voxels with per-face opacity. Both aimed to solve the problem of interpolating voxels along the ray direction: an axis-aligned solid wall becomes partially transparent to rays that are not perpendicular to it.

A voxel bit brick stores one bit per voxel in an 8x8x8 brick, indicating whether that voxel is empty, and rays are stepped through the bricks with a two-level DDA. Voxels with per-face opacity work similarly, using the same DDA while accumulating opacity along the ray direction. In the end, neither method represented geometry as well as distance fields, and both were quite slow.
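A sketch of one 8x8x8 bit brick and the inner stage of the DDA (an Amanatides-Woo voxel walk), assuming the brick is stored as a 512-bit Python integer and the ray is given in voxel units starting inside the brick. The outer stage, which steps between bricks, would use the same walk at brick granularity; the helper names are hypothetical.

```python
import math

# Sketch only: bit layout and helper names are assumptions.
BRICK = 8  # 8x8x8 voxels per brick, one bit each (512 bits)


def bit_index(x, y, z):
    return x + BRICK * (y + BRICK * z)


def set_voxel(bits, x, y, z):
    return bits | (1 << bit_index(x, y, z))


def is_solid(bits, x, y, z):
    return (bits >> bit_index(x, y, z)) & 1 == 1


def dda_in_brick(bits, origin, direction):
    """Walk the voxels of one brick along a ray; return the first solid voxel or None."""
    if all(d == 0 for d in direction):
        raise ValueError("direction must be non-zero")
    cell = [int(math.floor(origin[k])) for k in range(3)]
    step, t_max, t_delta = [0] * 3, [math.inf] * 3, [math.inf] * 3
    for k in range(3):
        if direction[k] > 0:
            step[k] = 1
            t_max[k] = (cell[k] + 1 - origin[k]) / direction[k]  # next boundary
            t_delta[k] = 1 / direction[k]
        elif direction[k] < 0:
            step[k] = -1
            t_max[k] = (cell[k] - origin[k]) / direction[k]
            t_delta[k] = -1 / direction[k]
    while all(0 <= cell[k] < BRICK for k in range(3)):
        if is_solid(bits, *cell):
            return tuple(cell)
        axis = min(range(3), key=lambda k: t_max[k])  # cross the nearest boundary
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
    return None  # left the brick; the outer DDA would continue with the next brick
```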

Voxels with transparency

The earliest approach to tracing the merged representation was to cone trace the global distance field and shade hits with global, per-scene cards: traverse a BVH to find which cards in the scene affect the sample point, then sample each card at the appropriate mip level based on the cone footprint.

The team abandoned this method because, at the time, they did not consider using it only for far-field traces; instead, it was evaluated as a direct replacement for height-field raymarching. Somewhat ironically, this abandoned approach came closest to the solution we ultimately arrived at two years later.

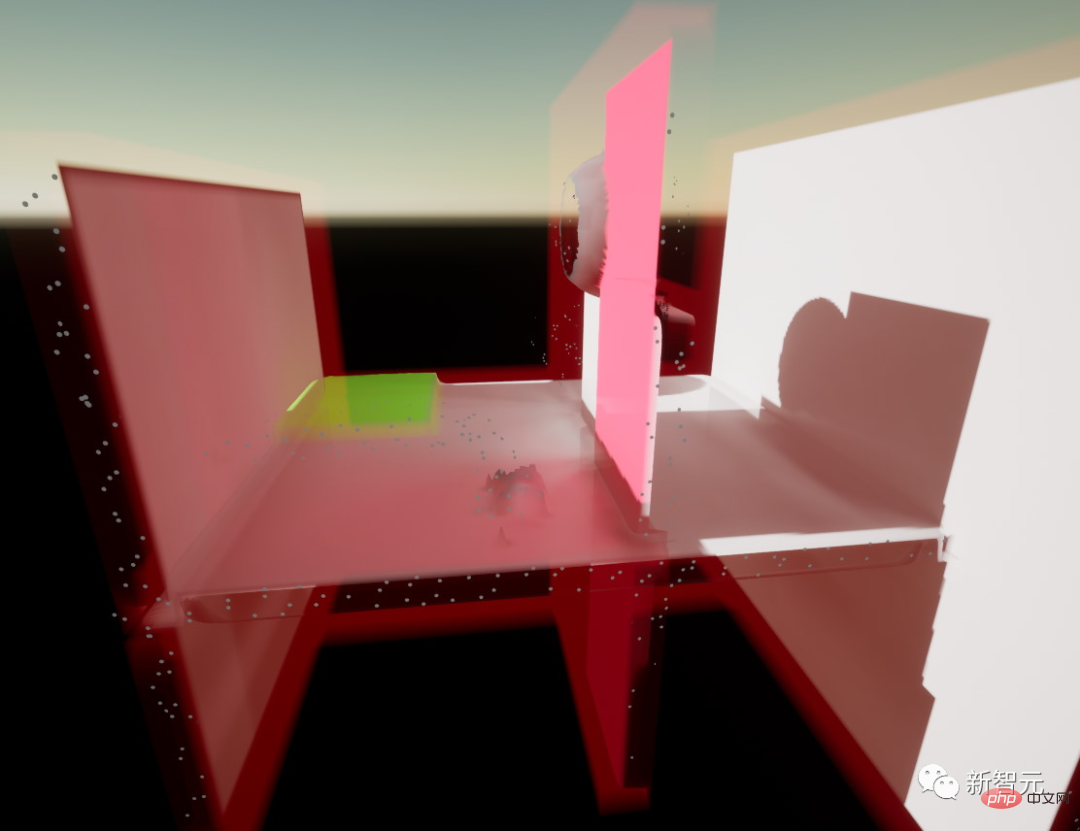

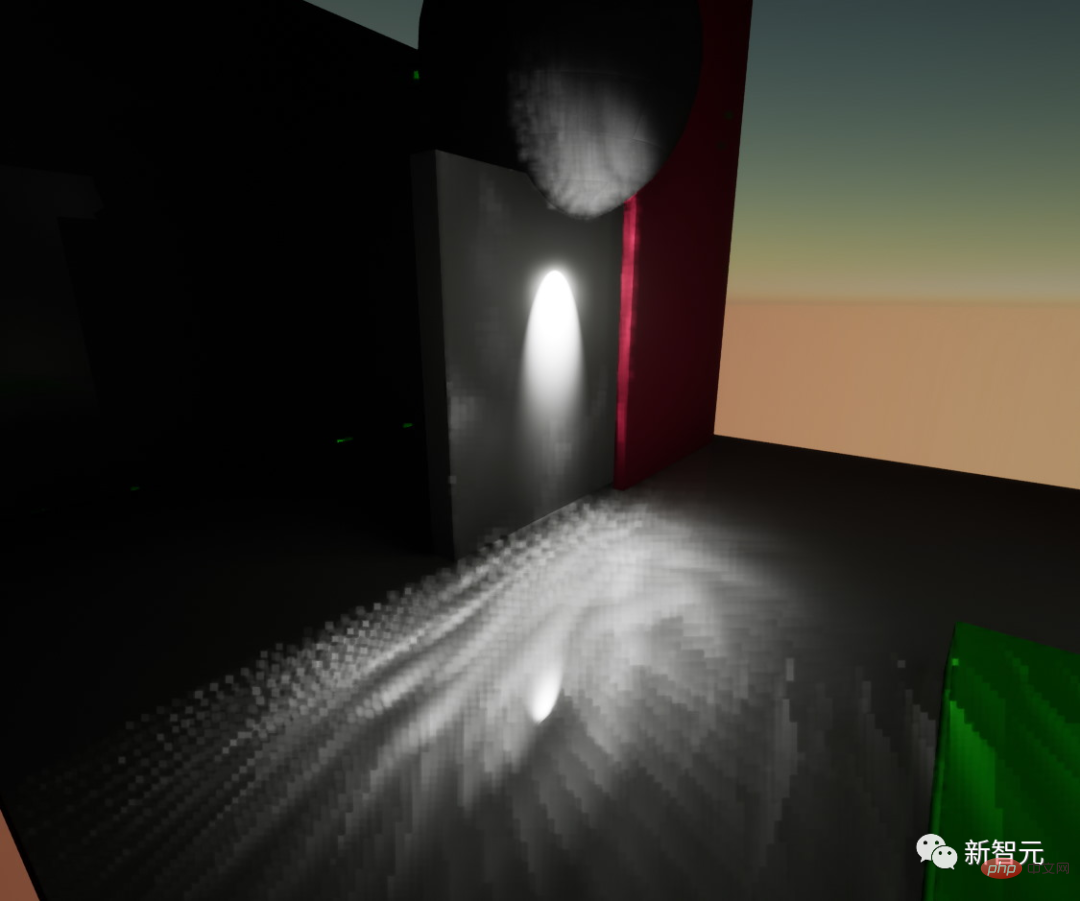

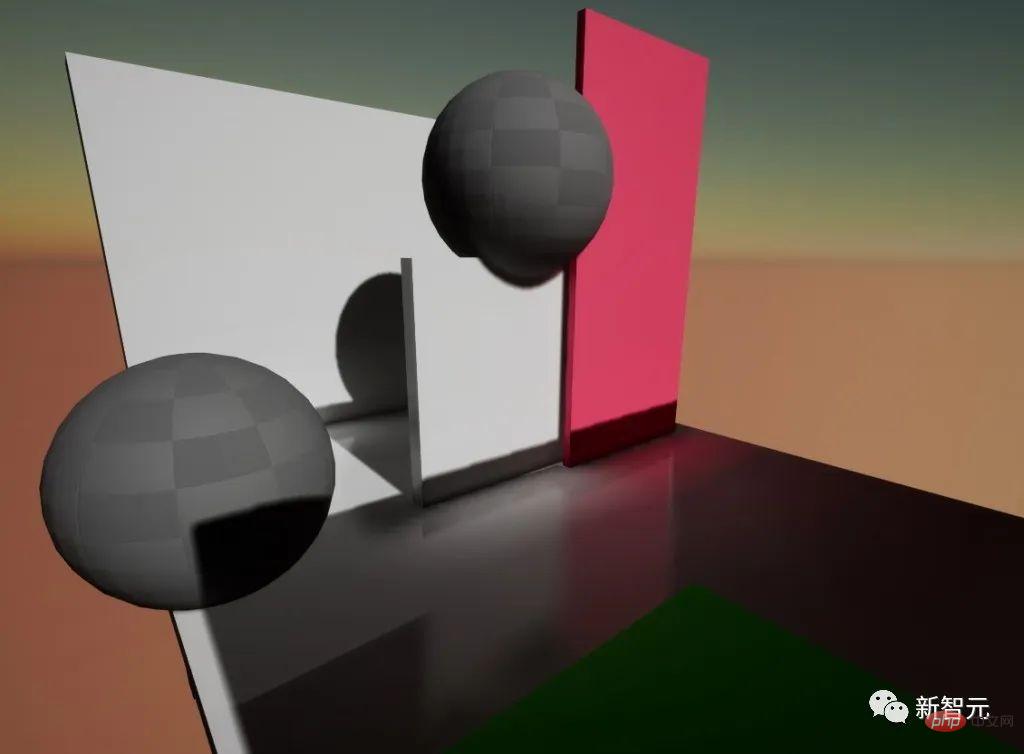

First demo

At this point, we can already produce some pretty good results:

Even so, we ran into a lot of leaking issues, and performance in this simple scene was far from ideal, even on a high-end PC GPU.

The first demo required solving the leaking, handling many more instances, and running in under 8 milliseconds on PS5. This demo was a real catalyst.

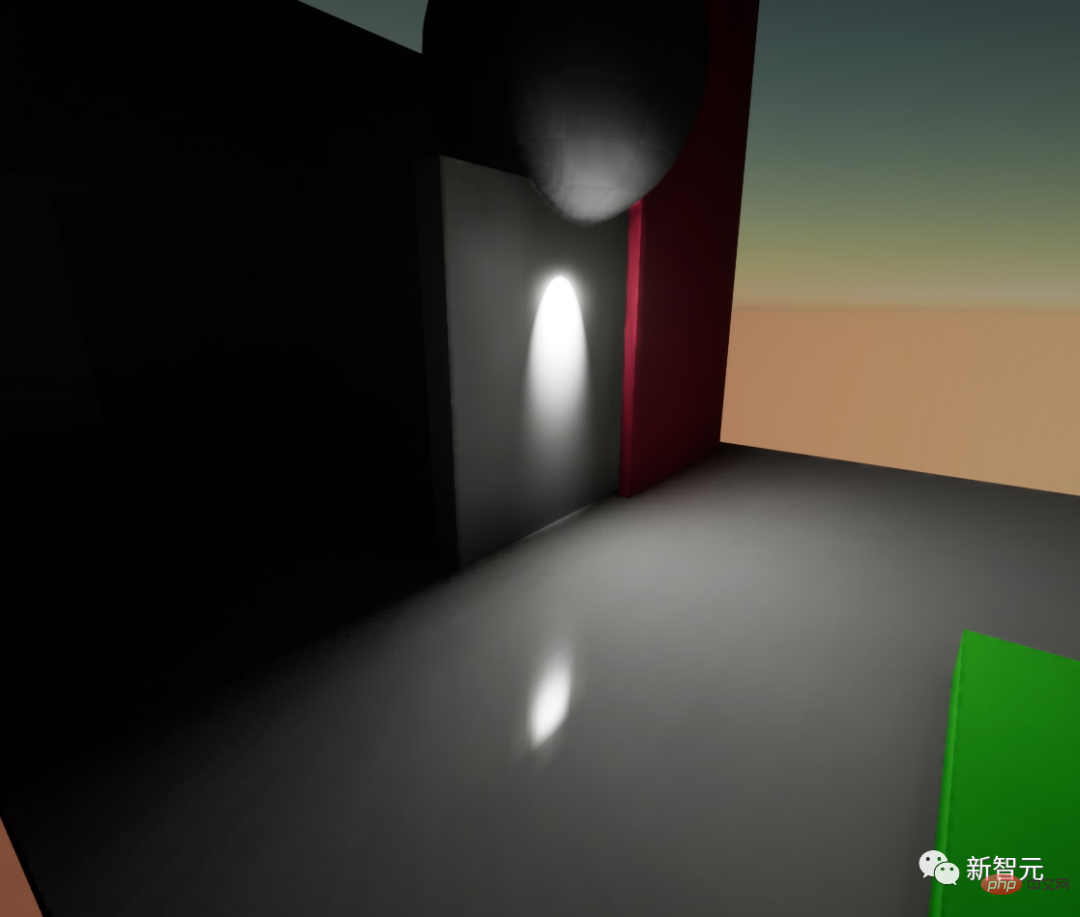

Compared with the previous approaches, the first and biggest change was replacing height-field tracing with distance-field tracing.

Because distance fields have no vertex attributes, hit points are shaded by interpolating their lighting from the cards. This way, areas not covered by cards only cause energy loss, not leaking.

For the same reason, voxel cone tracing was replaced with global distance field ray tracing.
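Tracing a distance field is sphere tracing: each step advances by the distance to the nearest surface, which, for a true or conservative distance field, can never overshoot it. A minimal sketch, assuming the distance field is a Python function, a normalized direction, and illustrative tolerances (`unit_sphere` is just an example field):

```python
import math

# Sketch only: tolerances and the example field are assumptions.


def sphere_trace(sdf, origin, direction, max_t=100.0, eps=1e-4, max_steps=128):
    """March a ray through a signed distance field; return the hit distance t or None."""
    t = 0.0
    for _ in range(max_steps):
        p = [origin[k] + t * direction[k] for k in range(3)]
        d = sdf(p)
        if d < eps:
            return t  # close enough to the surface: report a hit
        t += d  # safe step: no surface is closer than d
        if t > max_t:
            break
    return None


def unit_sphere(p):
    """Example distance field: a unit sphere at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0
```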

We also made many other optimizations and amortized different parts of Lumen over multiple frames through caching. Notably, without cone tracing we had to denoise and cache traces much more aggressively, but that is a long and complicated story in itself.

This is the final result when we shipped the first demo: consistently under 8 ms on PS5, including updates to all shared data structures such as the global distance field. Performance has improved further since then; the latest demo runs in close to 4 ms, with significantly better quality.

Conclusion

In short, Lumen was completely rewritten along the way, and many ideas never made it in. Others were repurposed: cards started out as a tracing representation, but are now used to cache various computations on mesh surfaces. Likewise, software tracing began as our main tracing method, built mainly around cone tracing, but ended up as a way to scale down and to support complex scenes with many overlapping instances.

The above is the detailed content of Super realistic rendering! Unreal Engine technology expert explains the global illumination system Lumen. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

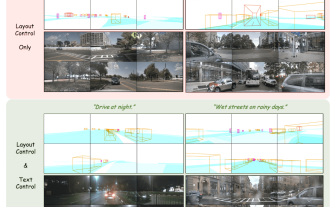

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

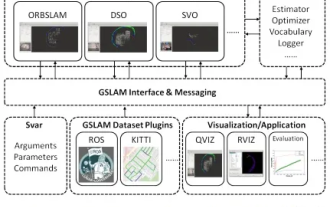

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

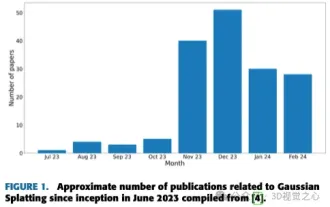

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up