Let's talk about low-speed autonomous driving and high-speed autonomous driving in one article

In a previously shared article, "How to make self-driving cars 'recognize the road'", I mainly discussed the importance of high-precision maps for self-driving cars. A reader left a comment: "If the author knew how autonomous mobile vehicles work in STO Express sorting, I'm afraid he would not hold the views in this article." This exchange touches on two related concepts: low-speed autonomous driving and high-speed autonomous driving.

Self-driving cars, also known as driverless cars, are automated vehicles that require either limited driver assistance or no human control at all. As automated vehicles, they can sense their surroundings and complete navigation and travel tasks without human operation. The ultimate goal of autonomous driving is to carry passengers in autonomous vehicles. However, the development of autonomous vehicle technology, especially high-speed autonomous driving, has not been as simple and smooth as we imagined. At present, the self-driving transport and express delivery vehicles we see in fixed places such as campuses, parks, and airports all fall into the category of low-speed autonomous driving. So what exactly are high-speed and low-speed autonomous driving, and how do they differ?

Low-speed autonomous driving

First, let's talk about low-speed autonomous driving. As the name suggests, it refers to autonomous vehicles that drive at low speeds, generally below 50 km/h. Their main function is to carry goods, and their application scenarios are relatively simple and fixed. Low-speed autonomous driving technology is relatively mature and has already entered many parts of daily life: we see express delivery vehicles on campuses and in parks, and shuttle buses at scenic spots and airports. According to conservative estimates covering low-speed passenger-carrying unmanned vehicles, low-speed cargo-carrying unmanned vehicles, and unmanned work vehicles, China's sales of low-speed autonomous vehicles would reach 25,000 units in 2021 and 104,000 units in 2022. As the technology continues to develop, low-speed autonomous vehicles will become a part of our daily lives.
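The two estimates above imply roughly a fourfold jump in a single year. A quick calculation makes the growth explicit; the inputs are the article's conservative estimates, not audited sales figures:

```python
# Conservative sales estimates for China's low-speed autonomous vehicles,
# taken from the paragraph above (units per year).
sales_2021 = 25_000
sales_2022 = 104_000

# Year-over-year growth factor and percentage growth.
growth_factor = sales_2022 / sales_2021
growth_pct = (sales_2022 - sales_2021) * 100 / sales_2021

print(f"growth factor: {growth_factor:.2f}x")  # 4.16x
print(f"growth: {growth_pct:.0f}%")            # 316%
```

In other words, the 2022 estimate is about 4.16 times the 2021 figure, an increase of roughly 316%.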

The development of low-speed autonomous driving has also led to industry standards. On October 29, 2021, the group standard "Low-speed Autonomous Vehicle Urban Commercial Operation Safety Management Specifications", led by the Shenzhen Intelligent Transportation Industry Association and jointly compiled by more than 57 organizations and 112 experts, was officially released. This group standard provides important guidance for deploying and operating low-speed autonomous vehicles, and serves as an effective reference for government regulators and for the places where such vehicles are used.

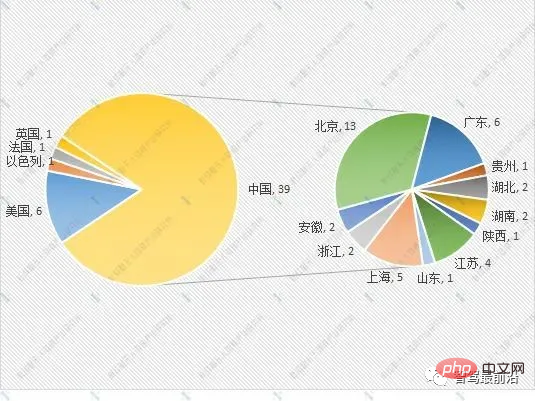

The development of low-speed autonomous driving has also attracted substantial capital. In 2021, the autonomous driving industry in China and abroad disclosed more than 200 major financing events, nearly 70 of which went to providers of low-speed autonomous driving products and solutions, with total funding of more than 30 billion yuan. These nearly 70 financing events involved 47 companies, including 9 foreign companies and 39 Chinese companies.

Regional distribution of financing companies

Low-speed autonomous driving has very broad prospects, mainly because it solves many problems for consumers. For example, it offers a good solution to the last-mile problem in express delivery. Using manual labor for the last mile is costly, and express lockers still leave consumers to fetch their own parcels; neither approach fully solves the problem, but low-speed autonomous driving handles the job well. Consumers can set a delivery time through a mobile app, and a low-speed self-driving delivery vehicle brings the parcel downstairs or to the door on time. This saves the time and cost of manual delivery and spares consumers a trip to the parcel locker.

However, low-speed autonomous driving still faces many problems, the most important being its limited usage scenarios. Before low-speed autonomous vehicles are deployed in an area, the site must be scanned thoroughly (road information, intersection information, building information, and so on). Within the scanned site, the vehicle knows its surroundings well and can fully perform autonomous driving. In a different scene, however, it cannot adapt to the environment. It is like a child who needs to hold on to something to walk: without support, the child may not be able to walk at all. In short, low-speed autonomous vehicles are not yet truly intelligent and can exert their full autonomous driving capabilities only in fixed scenarios.
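The "fixed scenario" limitation can be pictured as a geofence: the vehicle drives autonomously only where the site has been scanned. The sketch below is a hypothetical illustration, assuming the scanned site can be reduced to a single boundary polygon in a local metric frame; `ScannedSite` and `can_drive_autonomously` are made-up names, not any vendor's API.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical sketch: a pre-scanned site reduced to one boundary polygon,
# with vertices (x, y) in meters in a local site frame. Real site maps also
# hold roads, intersections, and buildings; those are omitted here.
Point = Tuple[float, float]


@dataclass
class ScannedSite:
    boundary: List[Point]          # polygon vertices, in order
    speed_limit_kmh: float = 50.0  # upper bound for low-speed vehicles cited in the text

    def contains(self, p: Point) -> bool:
        """Ray-casting point-in-polygon test."""
        x, y = p
        inside = False
        n = len(self.boundary)
        for i in range(n):
            x1, y1 = self.boundary[i]
            x2, y2 = self.boundary[(i + 1) % n]
            # Count crossings of a horizontal ray cast from p toward +x.
            if (y1 > y) != (y2 > y):
                x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                if x < x_cross:
                    inside = not inside
        return inside


def can_drive_autonomously(site: ScannedSite, position: Point, speed_kmh: float) -> bool:
    """Allow autonomy only inside the scanned site and at or below its speed cap."""
    return site.contains(position) and speed_kmh <= site.speed_limit_kmh


campus = ScannedSite(boundary=[(0, 0), (200, 0), (200, 100), (0, 100)])
print(can_drive_autonomously(campus, (50, 50), 15))   # True: inside the scanned area
print(can_drive_autonomously(campus, (250, 50), 15))  # False: outside the scanned area
```

The point of the sketch is the hard boundary: outside the scanned polygon, the vehicle has no map to rely on, which mirrors the "child holding on to something to walk" analogy above.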

Low-speed autonomous driving also offers a great deal of technical reference for high-speed autonomous driving. Autonomous vehicles integrate hardware, software, algorithms, and communications. Hardware such as lidar, millimeter-wave radar, satellite positioning, and inertial navigation is also used in low-speed autonomous vehicles, as are technologies such as perception, positioning, planning, decision-making, and data storage. Even drive-by-wire chassis technology from the automotive industry chain has been popularized through low-speed autonomous vehicles.

High-speed autonomous driving

The main differences between high-speed and low-speed autonomous driving are speed and usage scenarios. The goal of high-speed autonomous driving is to match human-driven cars: to drive in all scenarios, including rural roads, urban roads, and highways, at a level that reaches or even exceeds that of human drivers.

As mentioned above, high-speed autonomous driving also depends on hardware such as lidar, millimeter-wave radar, satellite positioning, and inertial navigation, as well as technologies such as perception, positioning, planning, decision-making, and data storage. To drive more safely, high-speed autonomous vehicles also need the support of high-precision maps, GPS positioning, and similar technologies. And to let them drive across multiple scenarios and over wider ranges, intelligent connected-vehicle (networking) technology has become increasingly important.
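Satellite positioning and inertial navigation are usually combined rather than used alone: the inertial estimate is smooth but drifts over time, while satellite fixes do not drift but are noisy and can drop out. The sketch below shows the idea with a simple one-axis complementary filter; it is a hypothetical illustration (the function name and the `alpha` weight are assumptions), and production stacks typically use Kalman-filter variants instead.

```python
from typing import Optional

# Hypothetical sketch: fusing satellite positioning (GNSS) with inertial
# navigation along a single axis using a complementary filter.
# Production stacks typically use Kalman-filter variants instead.


def fuse_position(prev_estimate: float, velocity: float, dt: float,
                  gnss_fix: Optional[float], alpha: float = 0.9) -> float:
    """Return a new 1-D position estimate in meters.

    prev_estimate: previous fused position (m)
    velocity: speed reported by inertial navigation (m/s)
    dt: time step (s)
    gnss_fix: satellite position (m), or None when no fix is available
    alpha: weight on the inertial prediction; 0.9 is an assumed tuning value
    """
    predicted = prev_estimate + velocity * dt  # inertial dead reckoning
    if gnss_fix is None:
        return predicted  # e.g. in a tunnel: coast on the inertial estimate
    # Inertial prediction is smooth but drifts; GNSS is noisy but does not drift.
    return alpha * predicted + (1 - alpha) * gnss_fix


estimate = 0.0
for gnss in [1.2, 2.1, None, 3.9]:
    estimate = fuse_position(estimate, velocity=1.0, dt=1.0, gnss_fix=gnss)
    print(round(estimate, 3))
```

A higher `alpha` trusts the inertial prediction more and smooths out satellite noise, at the cost of correcting drift more slowly.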

At this stage, high-speed autonomous driving is still in the testing phase. As the technology matures, venues such as intelligent connected vehicle pilot demonstration zones, smart car and smart transportation demonstration zones, and national- and provincial-level Internet of Vehicles pilot zones are gradually opening up, giving high-speed autonomous vehicles more usage scenarios. In July 2021, the Beijing High-Level Autonomous Driving Demonstration Zone Promotion Working Group announced that the Beijing Intelligent Connected Vehicle Policy Pioneer Zone had officially opened a highway test scenario for autonomous driving. The first batch of companies to receive highway test notices were allowed to conduct pilot tests on a two-way, 10 km stretch of the Beijing section of the Beijing-Taiwan Expressway (from the Fifth Ring Road to the Sixth Ring Road) for preliminary road testing and verification. This was the country's first highway test section for autonomous driving, opening up more possibilities for the future development of high-speed autonomous driving.

High-speed autonomous driving has not developed as quickly as low-speed autonomous driving, mainly because its deployment involves many more considerations. Low-speed autonomous driving operates in fixed scenarios that are relatively simple. High-speed autonomous driving, by contrast, takes part directly in general traffic and must handle complex traffic scenarios, responding flexibly to emergencies such as "ghost probes" (pedestrians or vehicles suddenly emerging from behind an obstruction) and pedestrians running red lights. Whether its technical level can meet these requirements is still an open question, and an accident could endanger passengers and pedestrians and disrupt traffic. In addition, consumer acceptance of high-speed autonomous driving varies, and traffic laws and regulations do not yet set specific requirements for it. Together, these problems keep high-speed autonomous driving in its infancy.
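Speed matters most in exactly the emergencies just described. A standard kinematic estimate, stopping distance = reaction distance + braking distance, shows how quickly the safety margin shrinks as speed rises. The sketch assumes a 0.5 s system reaction time and 7 m/s² braking deceleration; both are illustrative round numbers, not figures from this article or from any particular vehicle.

```python
# Hypothetical illustration of why emergencies are harder at high speed:
#   stopping distance d = v * t_react + v^2 / (2 * a)
# The reaction time and deceleration defaults are assumed round numbers.


def stopping_distance_m(speed_kmh: float, t_react_s: float = 0.5,
                        decel_mps2: float = 7.0) -> float:
    v = speed_kmh / 3.6  # convert km/h to m/s
    reaction = v * t_react_s           # distance covered before braking starts
    braking = v ** 2 / (2 * decel_mps2)  # distance covered while braking
    return reaction + braking


for speed in (20, 50, 120):
    print(f"{speed:>3} km/h -> {stopping_distance_m(speed):5.1f} m")
```

Under these assumptions the distances come out to roughly 5 m, 21 m, and 96 m. Because the braking term grows with the square of speed, a vehicle at highway speed has far less room to react to a sudden obstacle than a low-speed delivery vehicle does.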

The above is the detailed content of Let's talk about low-speed autonomous driving and high-speed autonomous driving in one article. For more information, please follow other related articles on the PHP Chinese website!
