An article about the functional safety design of advanced autonomous driving domain controllers

Designing a central domain controller for advanced autonomous driving requires a thorough understanding of safety design principles. In the early design phase, whether for architecture, software, hardware, or communication, the design rules must be fully understood so that the design can deliver its advantages while avoiding known design problems.

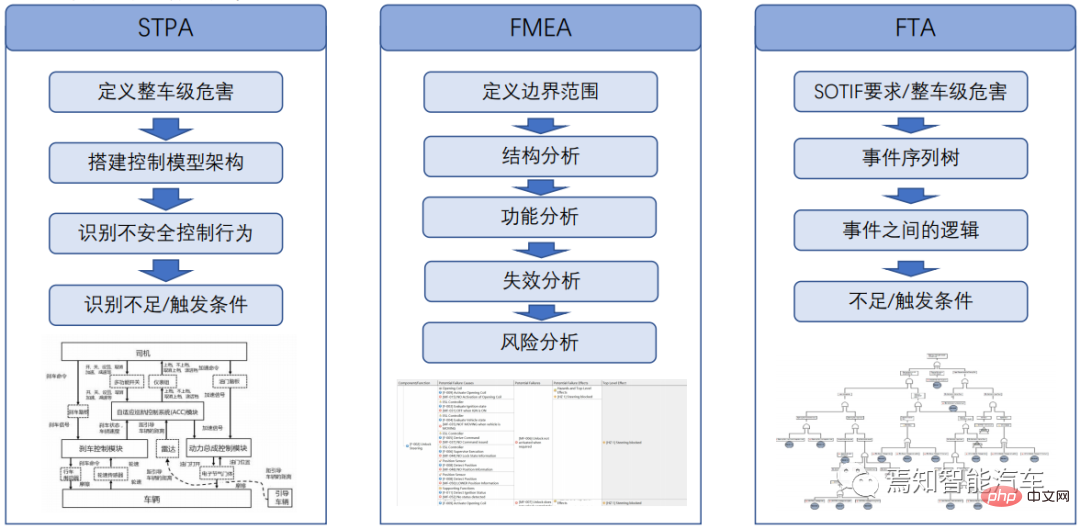

The functional safety design of a high-end domain controller discussed here covers two things: the scenario analysis required for safety of the intended functionality (SOTIF) in front-end development, and all the sub-items of functional safety in back-end development. The basic hardware level serves as the anchor point, and communication across the system architecture and the transmission of data streams are realized through the data communication layer. The software is flashed onto the hardware, which acts as its carrier, and the communication unit is responsible for calls between modules. From the perspective of vehicle safety capability analysis, the safety design of the domain controller draws on three main analysis methods: Systems Theoretic Process Analysis (STPA), Failure Mode and Effects Analysis (FMEA), and Fault Tree Analysis (FTA).

The domain controller sits at the core of the architecture and must meet a high functional safety level. Its safety design can generally be divided into three levels: data communication, basic hardware, and basic software. The analysis must cover each of these levels comprehensively.

Data communication safety

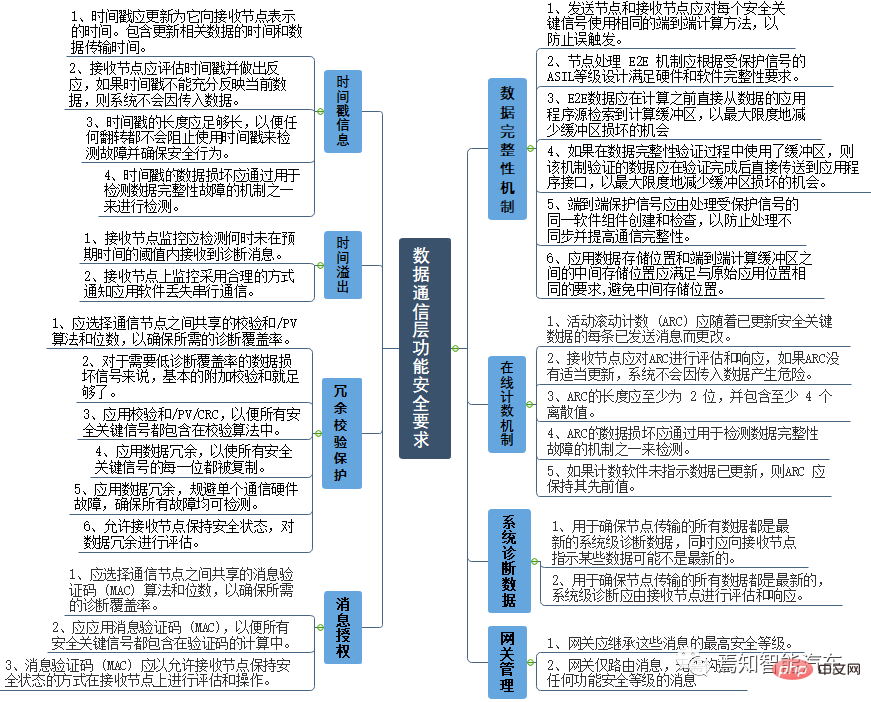

The communication layer is where data enters and leaves the controller, so it plays a decisive role in communication across the whole system architecture. At the data communication level, the functional safety requirements mainly cover general data integrity mechanisms, rolling counters, refreshing of system diagnostic data, timestamps, timeout monitoring, checksums, management authorization codes, data redundancy, and the gateway. Of these, rolling counters, diagnostics, and timeout and checksum verification work the same way as for traditional point-to-point CAN bus signals, while data redundancy, optimized central gateway management, and authorized data access are the areas that need particular attention for the next generation of autonomous driving.
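As a rough illustration of how a rolling counter, a timestamp, and a checksum work together on the receiving side, here is a minimal Python sketch. The frame layout, the 4-bit counter width, the 100 ms freshness limit, and the use of CRC-32 are all assumptions made for this example; they are not taken from any specific bus standard or from the design described in this article.

```python
import zlib
from dataclasses import dataclass
from typing import Optional

COUNTER_MODULO = 16   # assumption: 4-bit rolling counter
MAX_AGE_MS = 100      # assumption: frames older than this count as timed out


def build_frame(payload: bytes, counter: int, timestamp_ms: int) -> bytes:
    """Pack counter, timestamp and payload, then append a CRC-32 checksum."""
    body = bytes([counter % COUNTER_MODULO]) + timestamp_ms.to_bytes(4, "big") + payload
    return body + zlib.crc32(body).to_bytes(4, "big")


@dataclass
class FrameChecker:
    """Receiver-side checks: checksum first, then freshness, then counter."""
    last_counter: Optional[int] = None

    def check(self, frame: bytes, now_ms: int) -> str:
        body, received_crc = frame[:-4], int.from_bytes(frame[-4:], "big")
        if zlib.crc32(body) != received_crc:
            return "checksum_error"        # data was corrupted in transit
        counter = body[0]
        timestamp_ms = int.from_bytes(body[1:5], "big")
        if now_ms - timestamp_ms > MAX_AGE_MS:
            return "timeout"               # frame is stale
        if self.last_counter is not None:
            expected = (self.last_counter + 1) % COUNTER_MODULO
            if counter != expected:
                self.last_counter = counter  # resynchronise on the new value
                return "counter_error"       # a frame was lost or repeated
        self.last_counter = counter
        return "ok"
```

A receiver would call `check()` on every incoming frame and treat anything other than `"ok"` as a communication fault to be reported to the safety logic.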

The overall functional safety requirements for these data communication mechanisms are as follows:

Hardware basic level

Functional safety requirements at the basic hardware level mainly concern several major modules: the microcontroller, storage, power supply, and serial data communication.

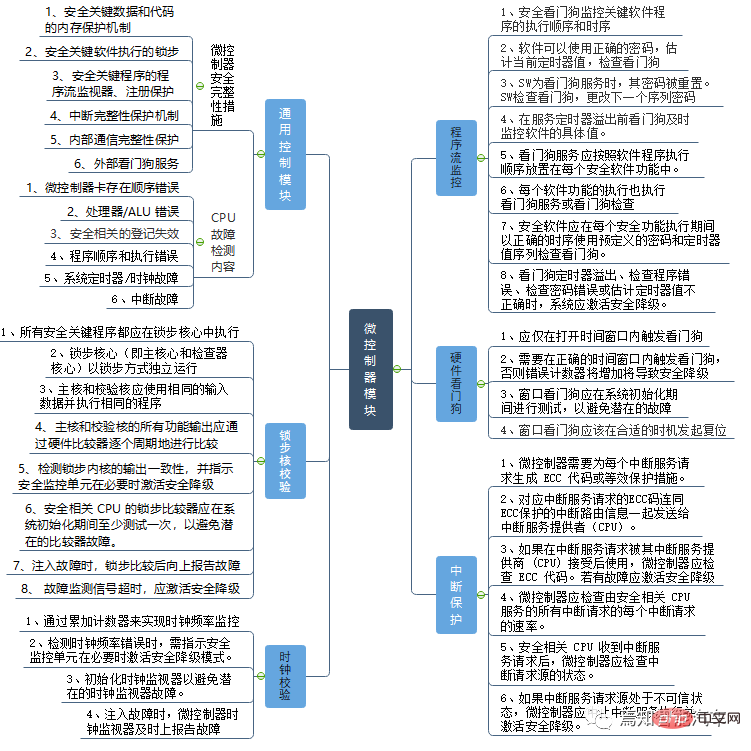

Microcontroller module safety

The microcontrollers referred to here are the AI chip (SoC), the floating-point computing chip (GPU), and the logic computing chip (MCU), which are the main computing units of the vehicle-side domain controller. From a functional safety design perspective, the safety mechanisms for these microcontroller modules include general design measures, lockstep core verification (lockstep core comparison and lockstep core self-test), clock verification (clock comparison and clock self-test), program flow monitoring, heartbeat monitoring, a hardware watchdog, interrupt protection, monitoring and self-test of memory, flash, and registers, power supply monitoring and self-test, and communication protection.

Note that the microcontroller should provide a periodically toggling "active heartbeat" signal to the monitoring unit over a hard-wired connection. The toggling signal should be managed by a safety watchdog that also provides program flow monitoring. The "active heartbeat" may only be toggled while the safety watchdog is being serviced: the microcontroller's safety software toggles it each time the internal safety watchdog is serviced, which indicates to the monitoring unit that the microcontroller is running and that the safety watchdog timer is running. The system should monitor the "active heartbeat" signal in the background by checking that the toggle timing and the durations of the high and low states are within the valid range. Once an "active heartbeat" failure is detected, the SMU activates safety degradation.
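The heartbeat check described above can be sketched in a few lines of Python. This is only an illustrative model: the class name `HeartbeatMonitor`, the 8 to 12 ms valid range for each high or low phase, and the `degraded` flag standing in for safety degradation are assumptions for the example, not values from the article.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatMonitor:
    """Checks that each high/low phase of the heartbeat line has a valid length."""
    min_phase_ms: float = 8.0    # assumption: shortest valid high or low phase
    max_phase_ms: float = 12.0   # assumption: longest valid high or low phase
    last_level: int = 0
    last_edge_ms: float = 0.0
    degraded: bool = False       # stands in for the safety degradation request

    def sample(self, level: int, now_ms: float) -> None:
        """Feed one sample of the hard-wired heartbeat line."""
        elapsed = now_ms - self.last_edge_ms
        if level != self.last_level:
            # An edge arrived: the phase that just ended must not be too short.
            if elapsed < self.min_phase_ms:
                self.degraded = True
            self.last_level = level
            self.last_edge_ms = now_ms
        elif elapsed > self.max_phase_ms:
            # No edge for too long: the line is stuck high or stuck low.
            self.degraded = True
```

Sampling a healthy 10 ms square wave once per millisecond leaves `degraded` at `False`, while a line that stops toggling, or toggles too quickly, sets it to `True`.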

The watchdog should be tested during system initialization to avoid latent faults. The following fault types should be tested:

- Incorrect watchdog trigger time (trigger in the closed window);

- No watchdog trigger.
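The two fault types listed above can be exercised against a simple software model of a window watchdog. The sketch below is a toy model in Python, assuming a 5 ms closed window and a 10 ms period; the class `WindowWatchdog` and the function `startup_self_test` are hypothetical names for this illustration, and a real startup test would drive the hardware watchdog instead.

```python
class WindowWatchdog:
    """Toy window watchdog: a service is valid only inside the open window."""

    def __init__(self, closed_ms: int = 5, period_ms: int = 10):
        self.closed_ms = closed_ms   # servicing earlier than this is a fault
        self.period_ms = period_ms   # servicing later than this is a fault
        self.elapsed_ms = 0
        self.fault = None

    def tick(self, ms: int = 1) -> None:
        """Advance time; flag a fault if the period expires without service."""
        self.elapsed_ms += ms
        if self.elapsed_ms > self.period_ms and self.fault is None:
            self.fault = "missing_trigger"

    def service(self) -> None:
        """Service the watchdog; flag a fault if this happens too early."""
        if self.elapsed_ms < self.closed_ms and self.fault is None:
            self.fault = "closed_window_trigger"
        self.elapsed_ms = 0


def startup_self_test() -> bool:
    """Inject both fault types and confirm the watchdog reports each one."""
    early = WindowWatchdog()
    early.tick(2)
    early.service()        # too early: inside the closed window

    silent = WindowWatchdog()
    silent.tick(11)        # never serviced within the period

    healthy = WindowWatchdog()
    healthy.tick(7)
    healthy.service()      # inside the open window, no fault expected

    return (early.fault == "closed_window_trigger"
            and silent.fault == "missing_trigger"
            and healthy.fault is None)
```

The test passes only if both injected faults are detected and the correctly timed service raises no fault, which mirrors the idea of proving at initialization that the watchdog itself still works.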

Storage module safety

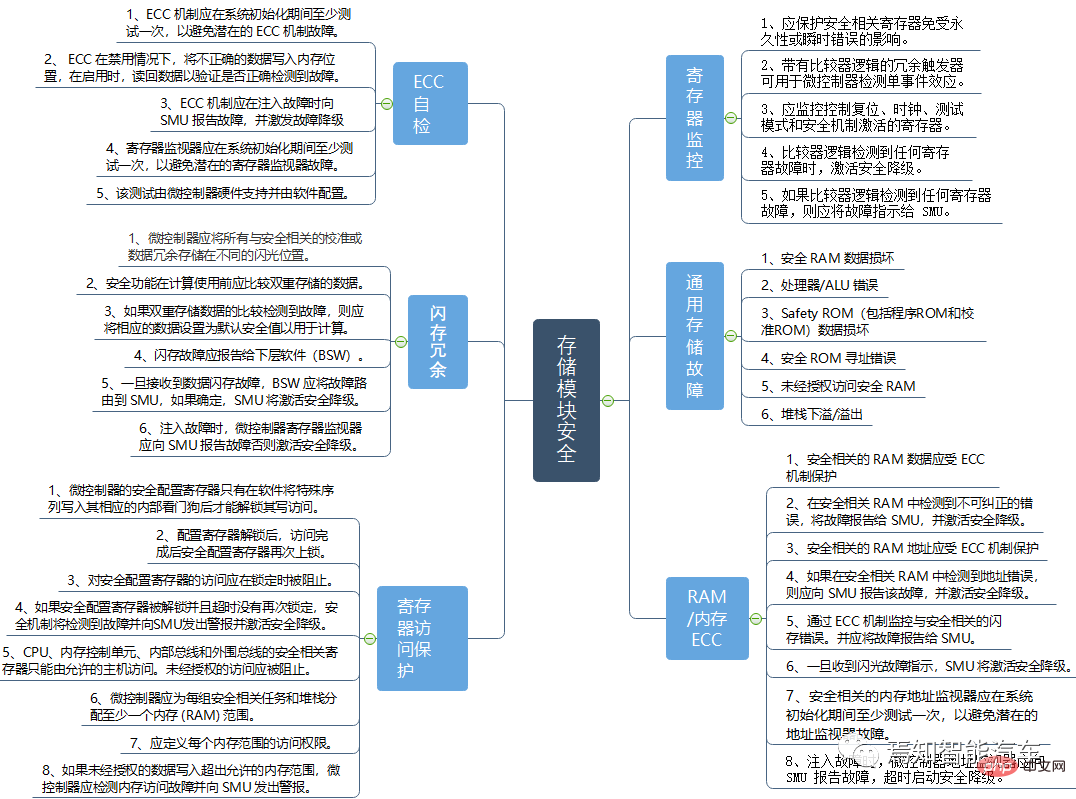

The storage module is an integral part of the domain controller. While the chips are running, it is mainly used to store temporary and frequently used files and to exchange data during operation. For example, the operating system's boot program is stored in a storage unit external to the SoC/MCU; the high-precision driving and parking maps required by next-generation autonomous driving products are usually stored in external storage attached to the chip; and some diagnostic and log files of the underlying software are stored there as well. So what conditions must the storage unit meet to ensure adequate functional safety? See the figure below for a detailed explanation.

The safety of the storage unit mainly covers register monitoring, general storage unit measures, RAM/memory ECC, ECC self-test, flash redundancy, register write protection, range protection, and register self-test.
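To make the idea of memory ECC and an ECC self-test more concrete, here is a toy Python sketch using a Hamming(7,4) code, which corrects any single flipped bit in a 7-bit codeword. This code is chosen only because it is small enough to read; it is an assumption for illustration, since real memory ECC is implemented in hardware and typically uses wider codes. The function names are hypothetical.

```python
from typing import List, Tuple


def encode(nibble: int) -> List[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    code = [0] * 8                      # index 0 is unused
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]   # parity over positions 1,3,5,7
    code[2] = code[3] ^ code[6] ^ code[7]   # parity over positions 2,3,6,7
    code[4] = code[5] ^ code[6] ^ code[7]   # parity over positions 4,5,6,7
    return code[1:]


def decode(bits: List[int]) -> Tuple[int, int]:
    """Return (corrected nibble, syndrome); syndrome 0 means no error seen."""
    code = [0] + list(bits)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos             # XOR of the positions of all set bits
    if syndrome:
        code[syndrome] ^= 1             # the syndrome names the flipped position
    data = [code[3], code[5], code[6], code[7]]
    return sum(bit << i for i, bit in enumerate(data)), syndrome


def ecc_self_test() -> bool:
    """Inject every single-bit error and confirm it is located and corrected."""
    for nibble in range(16):
        for flip in range(7):
            word = encode(nibble)
            word[flip] ^= 1             # fault injection
            value, syndrome = decode(word)
            if value != nibble or syndrome != flip + 1:
                return False
    return True
```

The self-test follows the same pattern as the other tests in this article: deliberately inject a fault, then check that the protection mechanism both detects it and reacts correctly.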

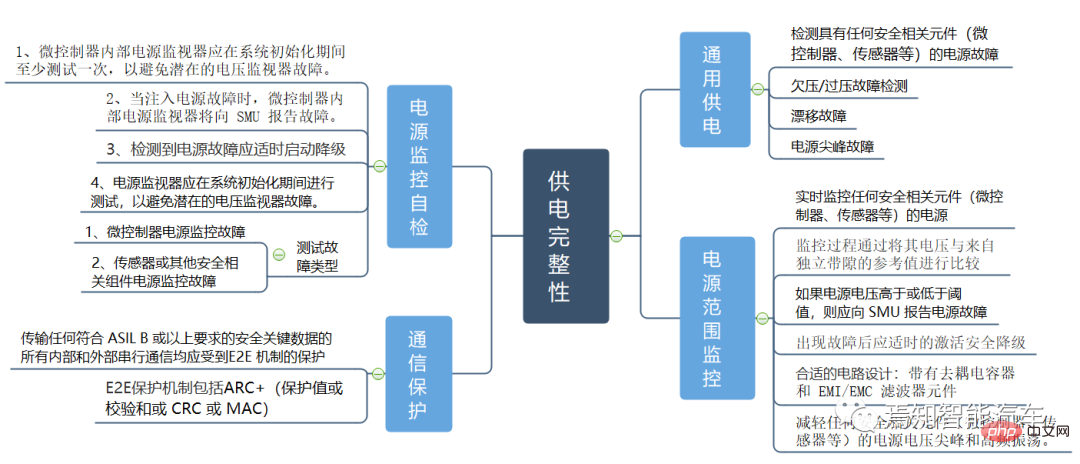

Power supply integrity

Power supply integrity is mainly tested through fault injection and real-time monitoring of the operating status of the entire power supply.

One example of a test approach is to configure a higher or lower monitoring threshold to force the monitor to detect an undervoltage or overvoltage fault, and then verify that the fault is correctly detected. When a fault is injected, the power monitor should activate the auxiliary shutdown path. The microcontroller should monitor the auxiliary shutdown path and consider the test a "pass" only if the path behaves as expected in the test procedure; otherwise the test is considered a "fail". Once a failure is detected, the microcontroller activates safety degradation. This test is supported by a dedicated BIST function and must be configured by the microcontroller software according to a detailed procedure.
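The threshold-shifting test described above can be modeled as follows. This Python sketch is a simplified illustration: the `PowerMonitor` class, the 4.5 V and 5.5 V nominal thresholds, and the 0.5 V injection offset are assumptions for the example, and a real BIST would be configured through the registers of the actual power management device.

```python
from dataclasses import dataclass


@dataclass
class PowerMonitor:
    undervoltage_v: float = 4.5   # assumption: nominal thresholds for a 5 V rail
    overvoltage_v: float = 5.5
    shutdown_path_active: bool = False

    def evaluate(self, rail_v: float) -> None:
        """Activate the auxiliary shutdown path when the rail is out of range."""
        in_range = self.undervoltage_v <= rail_v <= self.overvoltage_v
        self.shutdown_path_active = not in_range


def run_bist(monitor: PowerMonitor, rail_v: float) -> bool:
    """Return True ("pass") only if both injected faults trip the shutdown path."""
    nominal = (monitor.undervoltage_v, monitor.overvoltage_v)
    results = []
    try:
        # Inject undervoltage: raise the lower threshold above the healthy rail.
        monitor.undervoltage_v = rail_v + 0.5
        monitor.evaluate(rail_v)
        results.append(monitor.shutdown_path_active)

        # Inject overvoltage: lower the upper threshold below the healthy rail.
        monitor.undervoltage_v = nominal[0]
        monitor.overvoltage_v = rail_v - 0.5
        monitor.evaluate(rail_v)
        results.append(monitor.shutdown_path_active)
    finally:
        # Always restore the nominal thresholds after the test.
        monitor.undervoltage_v, monitor.overvoltage_v = nominal
        monitor.evaluate(rail_v)
    return all(results)
```

If `run_bist()` returns `False`, the monitor or its shutdown path did not react to an injected fault, which is the situation in which the microcontroller would activate safety degradation.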

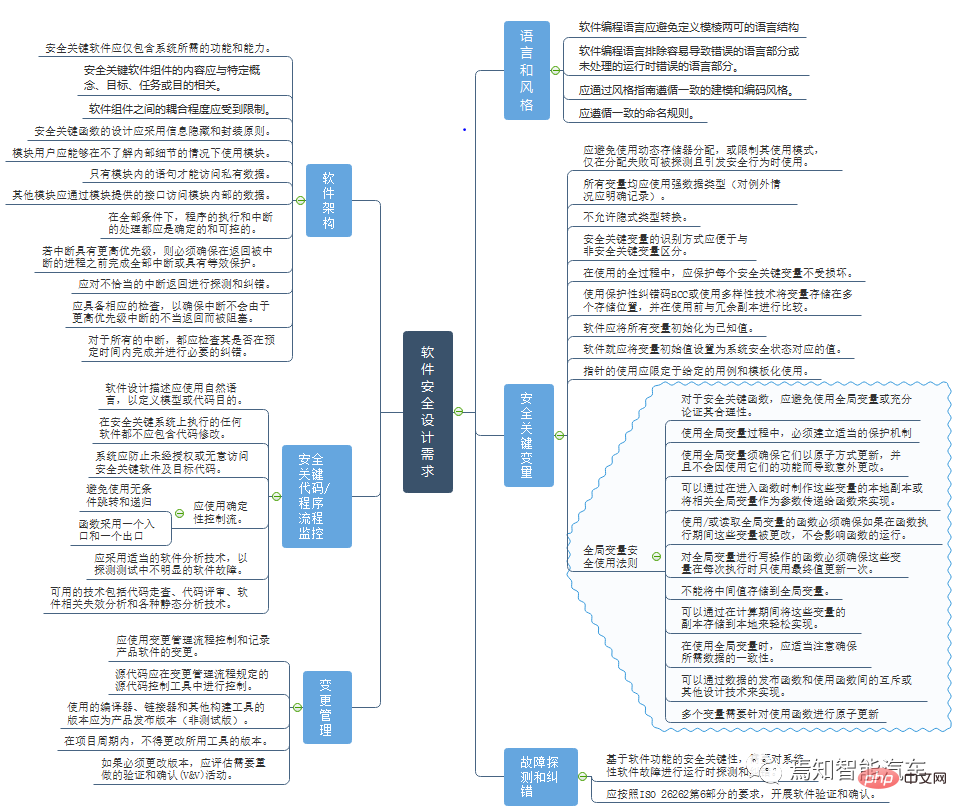

Basic software safety

Design considerations at the basic software level mainly address the software failures that may occur during the development of in-vehicle intelligent driving software. They cover software documentation, software language and style, safety-critical variables, fault detection and correction, software architecture, safety-critical code, program flow monitoring, and change management. Software design descriptions at all levels should use natural language to define the purpose of the model or code. For example, when the independence of multiple variables is critical to the safety of the system, these variables should not be combined into a single data element that shares a common address, because this can lead to common-mode systematic failures involving all elements in the structure. If variables have been grouped, appropriate justification should be provided for safety-critical functions.

This article has examined, from the perspective of functional safety, the elements and processes involved in designing an autonomous driving domain controller, covering the hardware foundation, software methods, and data communication. The functional safety design addresses the architecture as a whole while also paying close attention to the connections between its internal components, in order to ensure the compliance and integrity of the design process and to avoid unpredictable consequences in later design stages. As a set of detailed safety design rules, it can serve as a useful reference for development engineers.

The above is the detailed content of An article about the functional safety design of advanced autonomous driving domain controllers. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

Yesterday during the interview, I was asked whether I had done any long-tail related questions, so I thought I would give a brief summary. The long-tail problem of autonomous driving refers to edge cases in autonomous vehicles, that is, possible scenarios with a low probability of occurrence. The perceived long-tail problem is one of the main reasons currently limiting the operational design domain of single-vehicle intelligent autonomous vehicles. The underlying architecture and most technical issues of autonomous driving have been solved, and the remaining 5% of long-tail problems have gradually become the key to restricting the development of autonomous driving. These problems include a variety of fragmented scenarios, extreme situations, and unpredictable human behavior. The "long tail" of edge scenarios in autonomous driving refers to edge cases in autonomous vehicles (AVs). Edge cases are possible scenarios with a low probability of occurrence. these rare events

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

Written in front & starting point The end-to-end paradigm uses a unified framework to achieve multi-tasking in autonomous driving systems. Despite the simplicity and clarity of this paradigm, the performance of end-to-end autonomous driving methods on subtasks still lags far behind single-task methods. At the same time, the dense bird's-eye view (BEV) features widely used in previous end-to-end methods make it difficult to scale to more modalities or tasks. A sparse search-centric end-to-end autonomous driving paradigm (SparseAD) is proposed here, in which sparse search fully represents the entire driving scenario, including space, time, and tasks, without any dense BEV representation. Specifically, a unified sparse architecture is designed for task awareness including detection, tracking, and online mapping. In addition, heavy

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

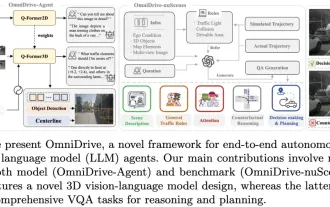

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass

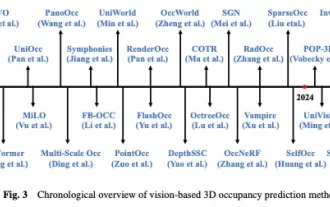

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Written above & The author’s personal understanding In recent years, autonomous driving has received increasing attention due to its potential in reducing driver burden and improving driving safety. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. at last

Beyond BEVFusion! DifFUSER: Diffusion model enters autonomous driving multi-task (BEV segmentation + detection dual SOTA)

Apr 22, 2024 pm 05:49 PM

Beyond BEVFusion! DifFUSER: Diffusion model enters autonomous driving multi-task (BEV segmentation + detection dual SOTA)

Apr 22, 2024 pm 05:49 PM

Written above & the author’s personal understanding At present, as autonomous driving technology becomes more mature and the demand for autonomous driving perception tasks increases, the industry and academia very much hope for an ideal perception algorithm model that can simultaneously complete three-dimensional target detection and based on Semantic segmentation task in BEV space. For a vehicle capable of autonomous driving, it is usually equipped with surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect data in different modalities. This makes full use of the complementary advantages between different modal data, making the data complementary advantages between different modalities. For example, 3D point cloud data can provide information for 3D target detection tasks, while color image data can provide more information for semantic segmentation tasks. accurate information. Needle

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

The deep reinforcement learning team of the Institute of Automation, Chinese Academy of Sciences, together with Li Auto and others, proposed a new closed-loop planning framework for autonomous driving based on the multi-modal large language model MLLM - PlanAgent. This method takes a bird's-eye view of the scene and graph-based text prompts as input, and uses the multi-modal understanding and common sense reasoning capabilities of the multi-modal large language model to perform hierarchical reasoning from scene understanding to the generation of horizontal and vertical movement instructions, and Further generate the instructions required by the planner. The method is tested on the large-scale and challenging nuPlan benchmark, and experiments show that PlanAgent achieves state-of-the-art (SOTA) performance on both regular and long-tail scenarios. Compared with conventional large language model (LLM) methods, PlanAgent