StarCraft II cooperative confrontation benchmark surpasses SOTA, new Transformer architecture solves multi-agent reinforcement learning problem

StarCraft II cooperative confrontation benchmark surpasses SOTA, new Transformer architecture solves multi-agent reinforcement learning problem

StarCraft II cooperative confrontation benchmark surpasses SOTA, new Transformer architecture solves multi-agent reinforcement learning problem

Multi-agent reinforcement learning (MARL) is a challenging problem: it requires not only identifying the policy improvement direction of each agent, but also combining the policy updates of individual agents so that overall performance improves. Researchers have partially addressed this problem by introducing the centralized training with decentralized execution (CTDE) paradigm, which allows agents to access global information during training. However, these methods cannot capture the full complexity of multi-agent interactions.

In fact, some of these methods have been shown to fail. To address this, the multi-agent advantage decomposition theorem was proposed, and the HATRPO and HAPPO algorithms were derived from it. However, these approaches also have limitations: they still rely on carefully designed maximization objectives.

In recent years, sequence models (SM) have made substantial progress in natural language processing (NLP). For example, the GPT series and BERT perform well on a wide range of downstream tasks and achieve strong few-shot generalization.

Sequence models naturally fit the sequential structure of language, which makes them well suited to language tasks. However, sequence methods are not limited to NLP; they are a widely applicable, general-purpose foundation model. In computer vision (CV), for example, an image can be split into patches that are arranged in a sequence as if they were tokens in an NLP task. Well-known recent models such as Flamingo, DALL-E, and GATO all build on this sequence-modeling approach.

With the emergence of architectures such as the Transformer, sequence modeling has also attracted great attention from the RL community, spurring a series of offline RL methods based on the Transformer architecture. These methods show great potential for solving some of the most fundamental problems in RL training.

Despite their notable success, none of these methods was designed to model the most difficult aspect of multi-agent systems, and the one unique to MARL: the interaction between agents. In fact, simply giving every agent a Transformer policy and training the agents individually still does not guarantee an improvement in MARL joint performance. So although many powerful sequence models are available, MARL has not really taken advantage of them.

How can sequence models be used to solve MARL problems? Researchers from Shanghai Jiao Tong University, Digital Brain Lab, the University of Oxford and other institutions proposed a new architecture, the Multi-Agent Transformer (MAT), which effectively turns cooperative MARL problems into sequence modeling problems: its task is to map the agents' observation sequence to the agents' optimal action sequence.

The goal of this paper is to build a bridge between MARL and SM in order to unlock the modeling capabilities of modern sequence models for MARL. The core of MAT is an encoder-decoder architecture that uses the multi-agent advantage decomposition theorem to transform the joint policy search problem into a sequential decision-making process. As a result, the multi-agent problem exhibits linear time complexity and, most importantly, MAT is guaranteed monotonic performance improvement. Unlike earlier techniques such as Decision Transformer, which require pre-collected offline data, MAT is trained on-policy, through online trial and error in the environment.

- Paper address: https://arxiv.org/pdf/2205.14953.pdf

- Project homepage: https://sites.google.com/view/multi-agent-transformer

To validate MAT, the researchers conducted extensive experiments on the StarCraft II, Multi-Agent MuJoCo, Dexterous Hands Manipulation and Google Research Football benchmarks. The results show that MAT achieves better performance and data efficiency than strong baselines such as MAPPO and HAPPO. The study also shows that MAT performs well on unseen tasks regardless of changes in the number of agents, making it an excellent few-shot learner.

Background knowledge

In this section, the researchers first introduce the cooperative MARL problem formulation and the multi-agent advantage decomposition theorem, which are the cornerstones of this article. They then review existing MARL methods related to MAT, finally leading to the Transformer.

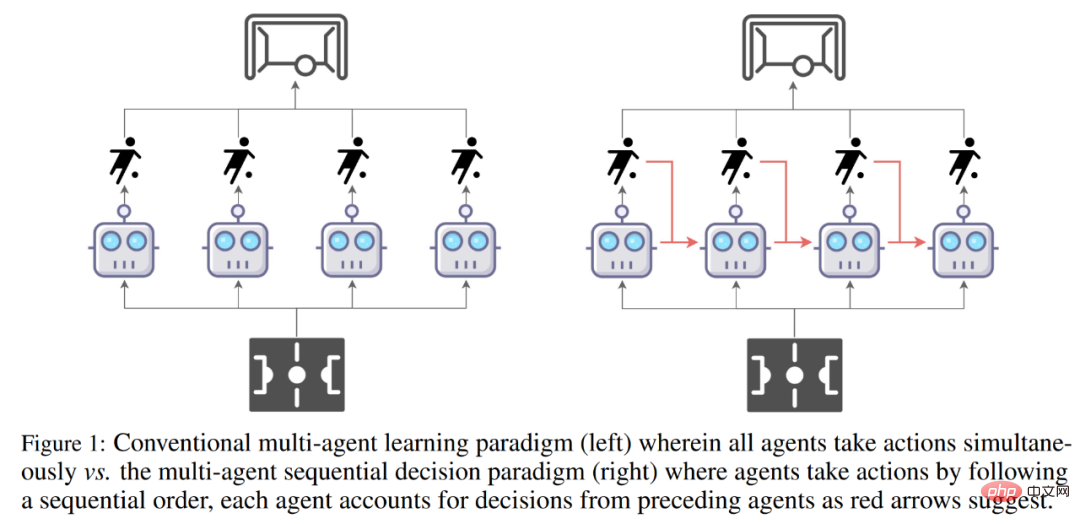

Comparison of the traditional multi-agent learning paradigm (left) and the multi-agent sequence decision-making paradigm (right).

Problem formulation

Cooperative MARL problems are usually modeled as decentralized partially observable Markov decision processes (Dec-POMDPs).
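To make the Dec-POMDP setting concrete, here is a minimal Python sketch under stated assumptions: the `DecPOMDP` class, its field names, the explicit hidden state, the deterministic transition and the toy game are all hypothetical illustrations, not the paper's code. It shows the two defining features of the cooperative setting: each agent acts only on its own local observation, and the whole team shares one reward.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

State = int
Action = int
JointAction = Tuple[Action, ...]


@dataclass
class DecPOMDP:
    """Hypothetical container for a cooperative Dec-POMDP.

    The hidden state is kept explicit for clarity; each agent only
    ever sees its own local observation of it.
    """
    n_agents: int                                      # number of agents
    observe: Callable[[State, int], int]               # local observation o^i of agent i
    reward: Callable[[State, JointAction], float]      # one shared team reward
    transition: Callable[[State, JointAction], State]  # deterministic here, for simplicity
    gamma: float                                       # discount factor

    def discounted_return(self, s0: State, policy: Callable[[int], Action], horizon: int) -> float:
        """Team return when every agent acts on its local observation only
        (decentralized execution with one shared per-agent policy)."""
        s, ret = s0, 0.0
        for t in range(horizon):
            joint = tuple(policy(self.observe(s, i)) for i in range(self.n_agents))
            ret += (self.gamma ** t) * self.reward(s, joint)
            s = self.transition(s, joint)
        return ret


# Toy two-agent game: the team is rewarded when both agents copy the state bit,
# and the state flips every step.
game = DecPOMDP(
    n_agents=2,
    observe=lambda s, i: s,
    reward=lambda s, a: 1.0 if all(ai == s for ai in a) else 0.0,
    transition=lambda s, a: 1 - s,
    gamma=0.5,
)
print(game.discounted_return(0, policy=lambda o: o, horizon=3))  # 1 + 0.5 + 0.25 = 1.75
```

A policy that ignores its observation (for example, always choosing action 0) earns reward only on the steps where the state happens to be 0, which is why coordination through local observations matters.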

Multi-agent advantage decomposition theorem

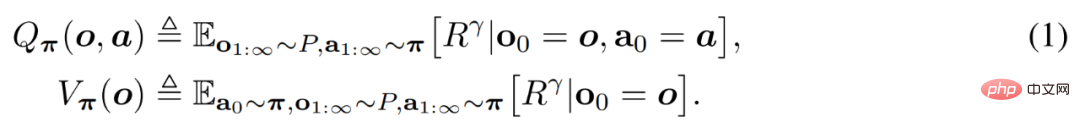

The agents evaluate the value of observations and joint actions through Q_π(o, a) and V_π(o), which are defined as follows.

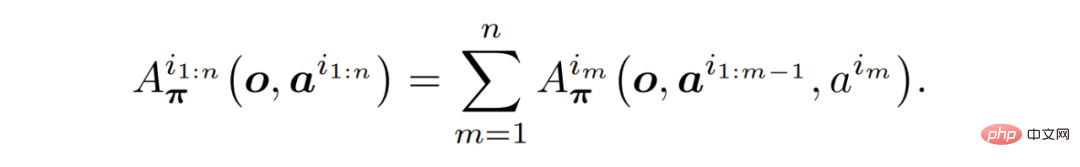
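The definitions appear only in the figure. Below is a hedged reconstruction, assuming the standard notation of the HATRPO/HAPPO line of work that Theorem 1 comes from (the paper's exact notation may differ): bold symbols are joint quantities, i_{1:m} is an ordered subset of agents and −i_{1:m} its complement.

```latex
\begin{aligned}
V_\pi(\mathbf{o}) &\triangleq \mathbb{E}\Big[\textstyle\sum_{t=0}^{\infty}\gamma^{t} R(\mathbf{o}_t,\mathbf{a}_t) \,\Big|\, \mathbf{o}_0=\mathbf{o}\Big], \\
Q_\pi(\mathbf{o},\mathbf{a}) &\triangleq \mathbb{E}\Big[\textstyle\sum_{t=0}^{\infty}\gamma^{t} R(\mathbf{o}_t,\mathbf{a}_t) \,\Big|\, \mathbf{o}_0=\mathbf{o},\ \mathbf{a}_0=\mathbf{a}\Big], \\
Q_\pi(\mathbf{o},\mathbf{a}^{i_{1:m}}) &\triangleq \mathbb{E}_{\mathbf{a}^{-i_{1:m}}\sim\pi^{-i_{1:m}}}\Big[Q_\pi\big(\mathbf{o},\mathbf{a}^{i_{1:m}},\mathbf{a}^{-i_{1:m}}\big)\Big], \\
A_\pi^{i_{1:m}}(\mathbf{o},\mathbf{a}^{j_{1:k}},\mathbf{a}^{i_{1:m}}) &\triangleq Q_\pi(\mathbf{o},\mathbf{a}^{j_{1:k}},\mathbf{a}^{i_{1:m}}) - Q_\pi(\mathbf{o},\mathbf{a}^{j_{1:k}}).
\end{aligned}
```

When the conditioning set j_{1:k} is empty, Q_π(o, a^{j_{1:k}}) reduces to V_π(o), so A_π^{i_{1:m}} then measures how much the actions of agents i_{1:m} improve on the team's average behaviour.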

Theorem 1 (Multi-agent Advantage Decomposition): Let i_{1:n} be a permutation of the agents. Then the following equation always holds, without further assumptions.
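The equation itself is missing from the text. A hedged reconstruction, following the statement of the theorem in the HAPPO literature, where A_π^{i_m}(o, a^{i_{1:m−1}}, a^{i_m}) = Q_π(o, a^{i_{1:m}}) − Q_π(o, a^{i_{1:m−1}}) is agent i_m's advantage given the actions already chosen by its predecessors, and Q_π(o, a^{i_{1:m}}) averages out the remaining agents' actions under π:

```latex
A_\pi^{i_{1:n}}\big(\mathbf{o},\mathbf{a}^{i_{1:n}}\big)
  = \sum_{m=1}^{n} A_\pi^{i_m}\big(\mathbf{o},\mathbf{a}^{i_{1:m-1}},a^{i_m}\big)
  = \sum_{m=1}^{n}\Big[Q_\pi\big(\mathbf{o},\mathbf{a}^{i_{1:m}}\big) - Q_\pi\big(\mathbf{o},\mathbf{a}^{i_{1:m-1}}\big)\Big]
  = Q_\pi(\mathbf{o},\mathbf{a}) - V_\pi(\mathbf{o})
```

The last step is a telescoping sum: every intermediate Q_π term cancels, leaving the full joint action minus the empty prefix, which is V_π(o).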

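Importantly, Theorem 1 provides an intuition for how to choose actions that improve performance incrementally: if agent i_1 chooses an action with positive advantage, and each subsequent agent i_m chooses an action with positive advantage given the actions of its predecessors i_{1:m−1}, then the joint advantage, being the sum of these terms, is also positive.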
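The decomposition and the sequential-improvement argument can be checked numerically. The sketch below is an illustration under stated assumptions: a hypothetical two-agent game with a single observation, a hand-picked joint Q-table (not data from the paper), and function names of our own choosing. Agent 1 picks the action with maximal advantage, then agent 2 picks its best response given agent 1's choice; each step's advantage is non-negative because its expectation under the current policy is zero.

```python
import numpy as np


def advantage_terms(Q, pi1, pi2):
    """Advantages for a two-agent game with one observation.

    Q:   (k1, k2) joint action values Q_pi(o, a^1, a^2)
    pi1: (k1,) agent 1's current policy; pi2: (k2,) agent 2's current policy
    """
    V = pi1 @ Q @ pi2          # V_pi(o): both agents' actions averaged out
    Q1 = Q @ pi2               # Q_pi(o, a^1): agent 2's action averaged out
    A1 = Q1 - V                # A^{1}(o, a^1)
    A2 = Q - Q1[:, None]       # A^{2}(o, a^1, a^2): agent 2 given agent 1's action
    A_joint = Q - V            # A^{1,2}(o, a^1, a^2)
    return V, A1, A2, A_joint


def sequential_improvement(Q, pi1, pi2):
    """Agent 1 maximizes A^{1}; agent 2 then maximizes A^{2}(a1*, .).
    By Theorem 1 the resulting joint advantage is the sum of two
    non-negative terms."""
    _, A1, A2, A_joint = advantage_terms(Q, pi1, pi2)
    a1 = int(np.argmax(A1))
    a2 = int(np.argmax(A2[a1]))
    return (a1, a2), float(A_joint[a1, a2])


Q = np.array([[1.0, 0.0],
              [0.0, 2.0]])                      # toy, hand-picked values
pi = np.array([0.5, 0.5])                       # both agents start uniform
V, A1, A2, A_joint = advantage_terms(Q, pi, pi)
print(np.allclose(A_joint, A1[:, None] + A2))   # True: Theorem 1 holds entry-wise
print(sequential_improvement(Q, pi, pi))        # ((1, 1), 1.25)
```

Note that greedy sequential choice guarantees a non-negative joint advantage, not the globally best joint action in every game; that is exactly the "incremental improvement" the theorem supports.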

Existing MARL methods

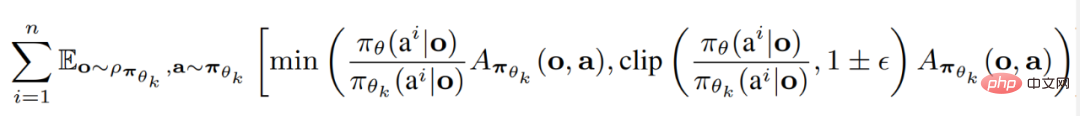

The researchers summarize two current SOTA MARL algorithms, both built on Proximal Policy Optimization (PPO), an RL method known for its simplicity and stable performance.

Multi-Agent Proximal Policy Optimization (MAPPO) is the first and most straightforward method to apply PPO to MARL.

Heterogeneous-Agent Proximal Policy Optimization (HAPPO) is one of the current SOTA algorithms. It makes full use of Theorem (1) to achieve multi-agent trust-region learning with a monotonic improvement guarantee.
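As described in the HAPPO paper, agents are updated one at a time in a random order, and agent i_m maximizes a clipped surrogate in which the joint advantage is reweighted by the probability ratios of the agents already updated. The NumPy sketch below shows only that per-agent objective and the running ratio product; the function and argument names are ours, and the gradient-based optimization of each agent's policy is deliberately left out.

```python
import numpy as np


def happo_surrogate(ratio_m, prev_ratio_product, joint_adv, eps=0.2):
    """Clipped surrogate (to maximize) for agent i_m in a HAPPO-style update.

    ratio_m:            pi_new^{i_m}(a|o) / pi_old^{i_m}(a|o), one entry per sample
    prev_ratio_product: product of the same ratios for agents i_1..i_{m-1},
                        which were updated earlier in this round
    joint_adv:          joint advantage estimate A(o, a), one entry per sample
    """
    M = prev_ratio_product * joint_adv                    # reweighted advantage
    unclipped = ratio_m * M
    clipped = np.clip(ratio_m, 1.0 - eps, 1.0 + eps) * M
    return float(np.mean(np.minimum(unclipped, clipped)))


def prefix_ratio_products(ratios, order):
    """For each agent in the update `order`, the product of the ratios of the
    agents updated before it (all ones for the first agent).

    ratios: (n_agents, n_samples) post-update ratio of each agent
    """
    products = {}
    running = np.ones(ratios.shape[1])
    for i in order:
        products[int(i)] = running.copy()
        running = running * ratios[i]                     # later agents see this update
    return products


rng = np.random.default_rng(0)
order = rng.permutation(3)                                # random update order each round
ratios = np.array([[1.1, 0.9], [1.0, 1.0], [0.95, 1.05]])
M_factors = prefix_ratio_products(ratios, order)
```

Multiplying by the predecessors' ratios is what lets each agent's update account for the changes its teammates have already made, which is where Theorem 1 enters.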

Transformer model

The sequential property described in Theorem (1) and the principles behind HAPPO make it natural to consider implementing multi-agent trust-region learning with a Transformer model. By treating a team of agents as a sequence, the Transformer architecture can model teams with varying numbers and types of agents while avoiding the shortcomings of MAPPO/HAPPO.

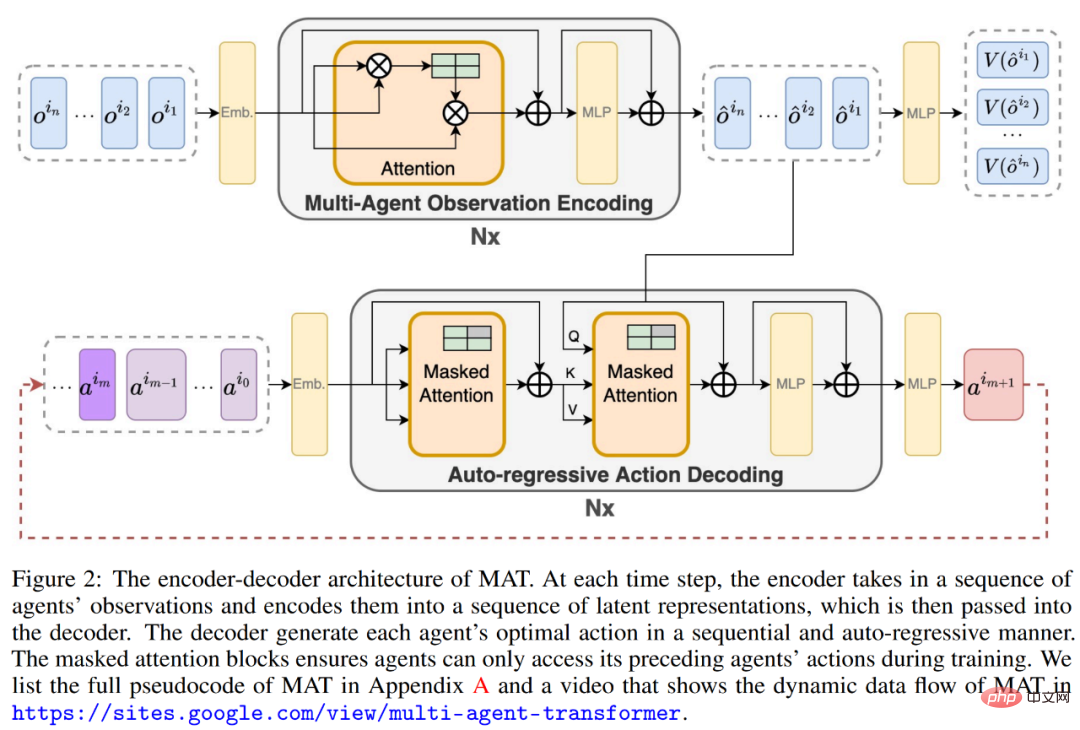

Multi-agent Transformer

To realize the sequence modeling paradigm for MARL, the researchers propose the Multi-Agent Transformer (MAT). The idea of applying the Transformer architecture stems from the observation that mapping the agents' observation sequence (o^{i_1}, ..., o^{i_n}) to their action sequence (a^{i_1}, ..., a^{i_n}) is a sequence modeling task similar to machine translation. As Theorem (1) implies, action a^{i_m} depends on the decisions a^{i_{1:m−1}} of all preceding agents.

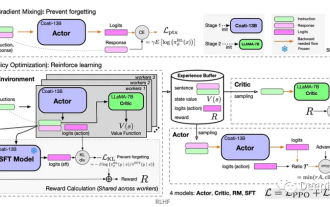

Therefore, as shown in Figure (2), MAT contains an encoder that learns a representation of the joint observation and a decoder that outputs each agent's action autoregressively.
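The autoregressive dependency can be illustrated with a deliberately simple stand-in for the decoder. In this sketch the mean-of-embeddings context, the linear logits and every weight name are hypothetical, not MAT's architecture; the only point is the control flow: agent i_m's action is computed after, and from, the start symbol a^{i_0} and the actions a^{i_{1:m−1}} already chosen.

```python
import numpy as np


def decode_actions(obs_reps, W_obs, W_act, action_embed, start_token, rng=None):
    """Toy autoregressive decoder: choose a^{i_1}, ..., a^{i_n} one at a time.

    obs_reps:     (n, d) encoder output, one row per agent in order i_1..i_n
    W_obs:        (d, k) maps an observation representation to k action logits
    W_act:        (e, k) maps a summary of earlier actions to k action logits
    action_embed: (k, e) embedding of each discrete action
    start_token:  (e,)   embedding of a^{i_0}, the start-of-decoding symbol
    """
    n_actions = W_obs.shape[1]
    history = [start_token]                        # a^{i_0} plus actions decoded so far
    actions = []
    for m in range(obs_reps.shape[0]):
        context = np.mean(history, axis=0)         # depends only on earlier decisions
        logits = obs_reps[m] @ W_obs + context @ W_act
        if rng is None:
            a = int(np.argmax(logits))             # greedy decoding
        else:
            p = np.exp(logits - logits.max())
            a = int(rng.choice(n_actions, p=p / p.sum()))  # sampled decoding
        actions.append(a)
        history.append(action_embed[a])            # feed the choice to later agents
    return actions


# With zero observation features, each action is driven purely by earlier actions.
acts = decode_actions(
    obs_reps=np.zeros((3, 2)),
    W_obs=np.zeros((2, 2)),
    W_act=np.eye(2),
    action_embed=np.array([[0.0, 1.0], [1.0, 0.0]]),
    start_token=np.array([1.0, 0.0]),
)
print(acts)  # [0, 0, 1]
```

Because each choice is appended to `history` before the next agent is decoded, later agents can react to earlier ones, which independent per-agent policies cannot do.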

The encoder, with parameters φ, takes the observation sequence (o^{i_1}, ..., o^{i_n}) in any order and passes it through several computational blocks. Each block consists of a self-attention mechanism, a multilayer perceptron (MLP), and residual connections, which prevent vanishing gradients and network degradation as depth increases.
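One such block can be sketched in NumPy. Assumptions, labelled plainly: a single attention head, a two-layer ReLU MLP, and post-residual layer normalization as in a standard Transformer block; the head count, widths and normalization placement in MAT itself may differ. The usage check shows why the observations can be fed "in any order": without positional encodings, permuting the agents only permutes the outputs.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)          # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def encoder_block(x, p):
    """One encoder block over agent tokens x of shape (n_agents, d)."""
    q, k, v = x @ p["Wq"], x @ p["Wk"], x @ p["Wv"]
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1])) @ v   # every agent attends to every agent
    h = layer_norm(x + attn)                             # residual connection 1
    mlp = np.maximum(0.0, h @ p["W1"]) @ p["W2"]         # two-layer ReLU MLP
    return layer_norm(h + mlp)                           # residual connection 2


rng = np.random.default_rng(0)
d, hidden, n_agents = 8, 16, 4
shapes = [("Wq", (d, d)), ("Wk", (d, d)), ("Wv", (d, d)), ("W1", (d, hidden)), ("W2", (hidden, d))]
params = {name: rng.normal(scale=0.3, size=shape) for name, shape in shapes}
obs_tokens = rng.normal(size=(n_agents, d))      # stand-in for embedded o^{i_1..i_n}
out = encoder_block(obs_tokens, params)
perm = rng.permutation(n_agents)
# Reordering the agents just reorders the outputs (permutation equivariance).
print(np.allclose(encoder_block(obs_tokens[perm], params), out[perm]))  # True
```

Stacking several such blocks gives the encoder; the same weights work for any number of agents, since nothing in the block depends on n_agents.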

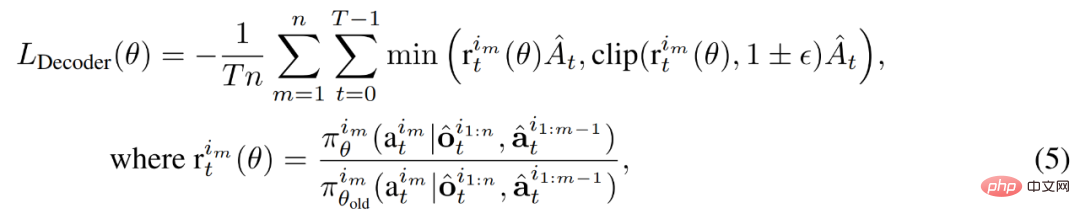

The decoder, with parameters θ, passes the embedded joint action a^{i_{0:m−1}}, m = {1, ..., n} (where a^{i_0} is an arbitrary symbol marking the start of decoding) through a sequence of decoding blocks. Crucially, each decoding block has a masked self-attention mechanism. To train the decoder, the researchers minimize the clipped PPO objective as follows.
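The objective itself is not reproduced in the text. The sketch below assumes the usual PPO-clip form, L(θ) = −(1/(Tn)) Σ_m Σ_t min(r_t^{i_m}(θ) Â_t, clip(r_t^{i_m}(θ), 1 − ε, 1 + ε) Â_t), where r_t^{i_m} is the new-to-old probability ratio of agent i_m's action and Â_t is a joint advantage estimate shared by all agents (both details are our assumptions). It also builds the causal mask that gives the decoder's self-attention its autoregressive structure; the function names are hypothetical.

```python
import numpy as np


def causal_mask(n):
    """mask[m, j] is True if decoder position m may attend to position j (j <= m).

    Position 0 holds the start symbol a^{i_0} and position m holds a^{i_m};
    the output at position m is used to choose a^{i_{m+1}}, so it may only
    depend on a^{i_0:m}.
    """
    return np.tril(np.ones((n, n), dtype=bool))


def masked_attention_weights(scores, mask):
    z = np.where(mask, scores, -np.inf)            # hide later agents' actions
    z = z - z.max(axis=-1, keepdims=True)          # diagonal is always visible, so max is finite
    w = np.exp(z)
    return w / w.sum(axis=-1, keepdims=True)


def clipped_ppo_loss(logp_new, logp_old, adv, eps=0.2):
    """Negative clipped surrogate averaged over T timesteps and n agents.

    logp_new, logp_old: (T, n) log-probabilities of each agent's sampled action
    adv:                (T,) joint advantage estimate, broadcast over agents
    """
    ratio = np.exp(logp_new - logp_old)
    a = adv[:, None]
    surrogate = np.minimum(ratio * a, np.clip(ratio, 1.0 - eps, 1.0 + eps) * a)
    return float(-surrogate.mean())


weights = masked_attention_weights(np.zeros((3, 3)), causal_mask(3))
loss = clipped_ppo_loss(np.zeros((2, 3)), np.zeros((2, 3)), np.ones(2))
```

The clipping removes any incentive to move a ratio beyond 1 ± ε in the direction the advantage favours, which plays the role of the trust region discussed above.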

The detailed data flow in MAT is shown in the following animation.

Experimental results

To evaluate whether MAT meets expectations, the researchers tested it on the StarCraft II Multi-Agent Challenge (SMAC) benchmark, on which MAPPO performs strongly, and on the multi-agent MuJoCo benchmark, on which HAPPO has SOTA performance.

In addition, the researchers ran extended tests of MAT on the Bimanual Dexterous Hand Manipulation (Bi-DexHands) and Google Research Football benchmarks. The former offers a range of challenging two-handed manipulation tasks, and the latter offers a range of cooperative scenarios within a football game.

Finally, since Transformer models usually generalize strongly on few-shot tasks, the researchers expected MAT to generalize similarly well to unseen MARL tasks. They therefore designed zero-shot and few-shot experiments on SMAC and multi-agent MuJoCo tasks.

Performance on cooperative MARL benchmarks

As shown in Table 1 and Figure 4, MAT significantly outperforms MAPPO and HAPPO on almost all tasks in the SMAC, multi-agent MuJoCo and Bi-DexHands benchmarks, indicating its strong modeling capability on both homogeneous and heterogeneous agent tasks. Furthermore, MAT also outperforms MAT-Dec, indicating the importance of the decoder architecture in MAT's design.

Similarly, the researchers obtained comparable results on the Google Research Football benchmark, as shown in Figure 5 below.

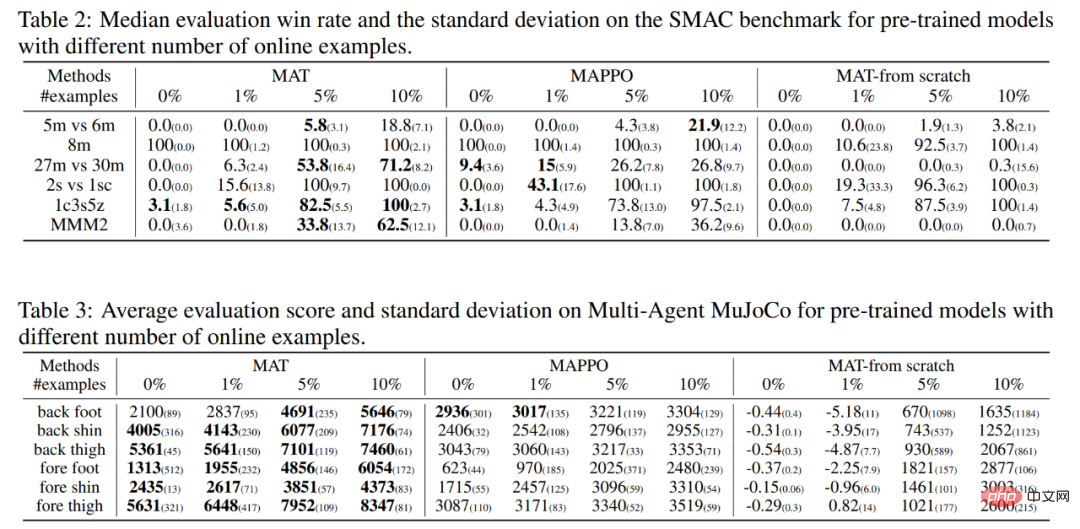

The zero-shot and few-shot results of each algorithm are summarized in Table 2 and Table 3, where bold numbers indicate the best performance.

The researchers also report, as a control group, the performance of MAT trained from scratch on the same data. As shown in the table below, MAT achieves most of the best results, demonstrating its strong few-shot generalization.
