Colossal-AI seamlessly supports the Hugging Face community and easily accelerates large models at low cost

Large models have become a major trend in AI: they not only top the major performance leaderboards but have also spawned many interesting applications. For example, Copilot, the automatic code-completion tool developed by Microsoft and OpenAI, has become a valuable assistant for programmers and improves their productivity.

OpenAI recently released DALL-E 2, a text-to-image model whose outputs can pass for real images, and Google followed shortly with Imagen. When it comes to large models, the big companies are competing just as fiercely as in the race for computer-vision leaderboard rankings.

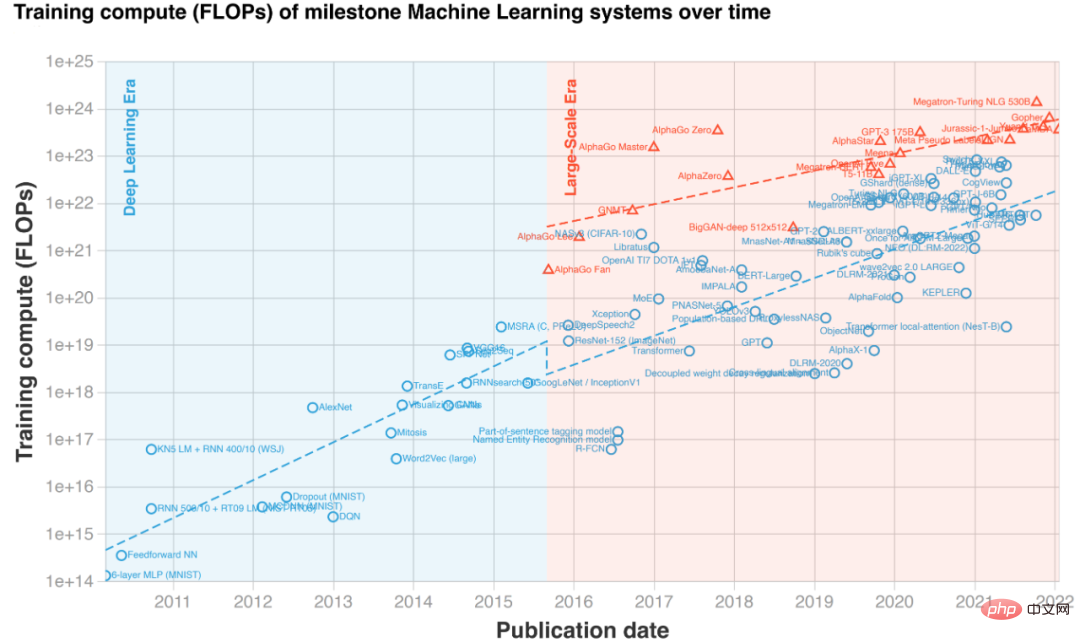

Text-to-image generation examples for the prompt "A statue of a Greek man tripping over a cat" (left two columns: Imagen; right two columns: DALL·E 2)

The remarkable performance gains from scaling up models have driven explosive growth in the size of pre-trained models in recent years. However, training or even fine-tuning a large model demands very expensive hardware, often dozens or hundreds of GPUs. In addition, existing deep learning frameworks such as PyTorch and TensorFlow struggle to handle very large models effectively, so professional AI systems engineers are usually needed to adapt and optimize each specific model. More importantly, not every lab or R&D team can call on a large GPU cluster whenever it needs to use a large model, let alone individual developers with a single graphics card. As a result, although large models have attracted a lot of attention, their high barrier to entry has kept them out of reach for the general public.
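To put the hardware cost in numbers, here is a rough estimate of the memory needed just for the model states during training. This sketch assumes mixed-precision training with the Adam optimizer, where each parameter costs about 16 bytes (fp16 weights and gradients at 2 bytes each, plus fp32 master weights, momentum, and variance at 4 bytes each), following the accounting popularized by the ZeRO paper. Activations and temporary buffers are ignored, so real usage is higher; the constant and function names are hypothetical.

```python
# Rough model-state memory estimate for mixed-precision Adam training.
# Assumption: 16 bytes per parameter (ZeRO-style accounting); activations are ignored.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # fp16 weight, fp16 grad, fp32 master, momentum, variance


def model_state_gb(num_params: float) -> float:
    """Return the approximate model-state footprint in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


if __name__ == "__main__":
    for name, params, gpu_gb in [("GPT 1.5B", 1.5e9, 6), ("GPT 18B", 18e9, 24)]:
        need = model_state_gb(params)
        print(f"{name}: ~{need:.0f} GB of model states vs {gpu_gb} GB on the GPU")
```

Under these assumptions, a 1.5-billion-parameter GPT already needs about 24 GB of model states, four times the memory of a 6 GB gaming GPU, and an 18-billion-parameter model needs about 288 GB, far beyond a 24 GB RTX 3090. Bridging that gap is exactly what offloading to CPU memory is for.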

The core reason large models are so costly to use is the limited GPU memory. Although GPUs compute quickly, their memory capacity is limited and cannot hold large models. To address this pain point, Colossal-AI uses a heterogeneous memory system that makes efficient use of both GPU memory and inexpensive CPU memory. On a personal PC with only one GPU, it can train a GPT model with up to 18 billion parameters, increasing model capacity by more than tenfold. This greatly lowers the barrier to downstream tasks and deployment of large AI models, such as fine-tuning and inference, and it scales easily to large distributed setups. Hugging Face has provided the deep learning community with implementations of more than 50,000 AI models, including large models such as GPT and OPT, and has become one of the most popular AI libraries.

Colossal-AI seamlessly supports Hugging Face community models, putting large models within reach of every developer. Next, using OPT, the large model released by Meta, as an example, we show how to train and fine-tune large models at low cost with Colossal-AI by adding just a few lines of code.

Open-source address: https://github.com/hpcaitech/ColossalAI

Low-cost acceleration of the large model OPT

The OPT model

OPT, short for Open Pretrained Transformer, is a large-scale Transformer model released by Meta (Facebook) AI as a counterpart to GPT-3. Unlike GPT-3, whose model weights OpenAI has not released, Meta AI has open-sourced all of OPT's code and model weights. This has greatly advanced the democratization of large AI models: every developer can build customized downstream tasks on top of it. Next, we will use the pre-trained OPT weights provided by Hugging Face to fine-tune the model for Causal Language Modeling.

Adding a configuration file

To use Colossal-AI's powerful features, users do not need to change their training logic. They only need to add a simple configuration file to give the model the desired capabilities, such as mixed precision, gradient accumulation, multi-dimensional parallel training, and zero-redundancy memory optimization. For example, for heterogeneous training on a single GPU, we only need to add the relevant configuration items to the configuration file. Among them, tensor_placement_policy determines the heterogeneous training strategy. This parameter can be cuda, cpu, or auto, and each strategy has different advantages:

- cuda: places all model parameters on the GPU, suitable for traditional scenarios where training can still be performed without offloading;

- cpu: places all model parameters in CPU memory and keeps only the weights currently involved in computation in GPU memory; suitable for training very large models;

- auto: automatically decides how many parameters to keep in GPU memory based on real-time memory information, making maximal use of GPU memory while reducing data transfers between CPU and GPU.

Ordinary users only need to select the auto strategy; Colossal-AI then dynamically chooses the best heterogeneous strategy in real time to maximize computing efficiency.
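The difference between the three policies can be illustrated with a toy model. This is not Colossal-AI's implementation: the function name, the abstract parameter sizes, and the greedy rule used for auto (keep parameters on the GPU in order until a memory budget runs out) are assumptions made purely for illustration.

```python
from typing import Dict, List


def place_params(policy: str, param_sizes: List[int], gpu_budget: int) -> Dict[str, List[int]]:
    """Toy illustration of where parameters live under each placement policy.

    Returns the indices of parameters resident on "gpu" and on "cpu".
    Hypothetical helper; gpu_budget is only consulted by the assumed greedy "auto" rule.
    """
    indices = list(range(len(param_sizes)))
    if policy == "cuda":
        # Everything stays on the GPU; no offloading at all
        return {"gpu": indices, "cpu": []}
    if policy == "cpu":
        # Everything lives in CPU memory; weights visit the GPU only while being computed on
        return {"gpu": [], "cpu": indices}
    if policy == "auto":
        # Keep as many parameters on the GPU as the current budget allows
        gpu, cpu, used = [], [], 0
        for i, size in enumerate(param_sizes):
            if used + size <= gpu_budget:
                gpu.append(i)
                used += size
            else:
                cpu.append(i)
        return {"gpu": gpu, "cpu": cpu}
    raise ValueError(f"unknown policy: {policy}")


if __name__ == "__main__":
    sizes = [4, 4, 4, 4]  # arbitrary units
    for policy in ("cuda", "cpu", "auto"):
        print(policy, place_params(policy, sizes, gpu_budget=10))
```

With a budget of 10 units, auto keeps the first two parameters on the GPU and offloads the other two, sitting between the two static extremes; the real auto policy re-evaluates this continuously from live memory statistics rather than a fixed budget.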

```python
# ./configs/colossalai_zero.py
from colossalai.zero.shard_utils import TensorShardStrategy

zero = dict(
    model_config=dict(
        shard_strategy=TensorShardStrategy(),
        tensor_placement_policy="auto",  # heterogeneous strategy: "cuda", "cpu" or "auto"
    ),
    optimizer_config=dict(gpu_margin_mem_ratio=0.8),
)
```

Launching training

After the configuration file is ready, we only need to insert a few lines of code to enable the newly declared features. First, a single line of code launches Colossal-AI with the configuration file. Colossal-AI automatically initializes the distributed environment, reads the relevant configuration, and then injects the configured features into the model, optimizer, and other components.

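```python
import colossalai

# Initialize the distributed environment and load the configuration file
colossalai.launch_from_torch(config='./configs/colossalai_zero.py')
```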
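When a script is started with PyTorch's launcher (for example torchrun), each process receives its rank and rendezvous information through environment variables, and launch_from_torch reads these to set up the distributed environment. The sketch below illustrates only that first step: read_torch_launch_env and LaunchEnv are hypothetical names, not part of Colossal-AI, and the real function additionally creates process groups and loads the configuration file.

```python
import os
from typing import Mapping, NamedTuple


class LaunchEnv(NamedTuple):
    rank: int
    local_rank: int
    world_size: int
    master_addr: str
    master_port: int


def read_torch_launch_env(env: Mapping[str, str] = os.environ) -> LaunchEnv:
    """Collect the variables a PyTorch launcher such as torchrun sets for each process."""
    try:
        return LaunchEnv(
            rank=int(env["RANK"]),
            local_rank=int(env["LOCAL_RANK"]),
            world_size=int(env["WORLD_SIZE"]),
            master_addr=env["MASTER_ADDR"],
            master_port=int(env["MASTER_PORT"]),
        )
    except KeyError as missing:
        raise RuntimeError(f"missing launcher variable {missing}; start the script with torchrun") from None


if __name__ == "__main__":
    # Values a launcher might set for the 4th of 8 processes on one machine
    fake = {"RANK": "3", "LOCAL_RANK": "3", "WORLD_SIZE": "8",
            "MASTER_ADDR": "127.0.0.1", "MASTER_PORT": "29500"}
    print(read_torch_launch_env(fake))
```

Failing loudly when a variable is missing mirrors the practical rule that a script relying on launch_from_torch must be started through a PyTorch launcher rather than plain `python`.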

Next, users can define datasets, models, optimizers, loss functions, and so on as usual, for example directly with native PyTorch code. The only change when defining the model is to initialize it inside ZeroInitContext. In this example, we use the OPTForCausalLM model and pre-trained weights provided by Hugging Face and fine-tune on the Wikitext dataset.

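```python
import torch
from transformers import AutoConfig, OPTForCausalLM
from colossalai.zero.init_ctx import ZeroInitContext
from colossalai.zero.shard_utils import TensorShardStrategy

config = AutoConfig.from_pretrained('facebook/opt-1.3b')
shard_strategy = TensorShardStrategy()
# Parameters created inside this context are sharded as they are initialized
with ZeroInitContext(target_device=torch.cuda.current_device(),
                     shard_strategy=shard_strategy,
                     shard_param=True):
    model = OPTForCausalLM.from_pretrained('facebook/opt-1.3b', config=config)
```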
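With shard_param=True, each parameter is split across the participating processes so that every rank stores only a slice of it. The pure-Python sketch below shows the basic idea of evenly sharding a flattened tensor with end padding and reassembling it; shard_flat and gather are hypothetical helpers, and this is a simplification rather than TensorShardStrategy's actual code.

```python
from typing import List


def shard_flat(values: List[float], world_size: int, rank: int) -> List[float]:
    """Return this rank's equal-sized slice of a flattened tensor, zero-padded at the end."""
    shard_len = -(-len(values) // world_size)  # ceiling division
    padded = values + [0.0] * (shard_len * world_size - len(values))
    return padded[rank * shard_len:(rank + 1) * shard_len]


def gather(shards: List[List[float]], numel: int) -> List[float]:
    """Concatenate the shards from all ranks and drop the padding (the 'all-gather' step)."""
    return [v for shard in shards for v in shard][:numel]


if __name__ == "__main__":
    weights = [0.1, 0.2, 0.3, 0.4, 0.5]  # a flattened 5-element parameter
    shards = [shard_flat(weights, world_size=2, rank=r) for r in range(2)]
    print(shards)  # [[0.1, 0.2, 0.3], [0.4, 0.5, 0.0]]
    assert gather(shards, len(weights)) == weights
```

Padding every shard to the same length keeps the gather step a simple concatenation, at the cost of a few wasted elements on the last rank.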

Then, simply call colossalai.initialize to inject the heterogeneous memory features defined in the configuration file into the training engine and enable them.

```python
import colossalai

# optimizer, criterion, the dataloaders and lr_scheduler are defined beforehand as usual
engine, train_dataloader, eval_dataloader, lr_scheduler = colossalai.initialize(
    model=model,
    optimizer=optimizer,
    criterion=criterion,
    train_dataloader=train_dataloader,
    test_dataloader=eval_dataloader,
    lr_scheduler=lr_scheduler,
)
```

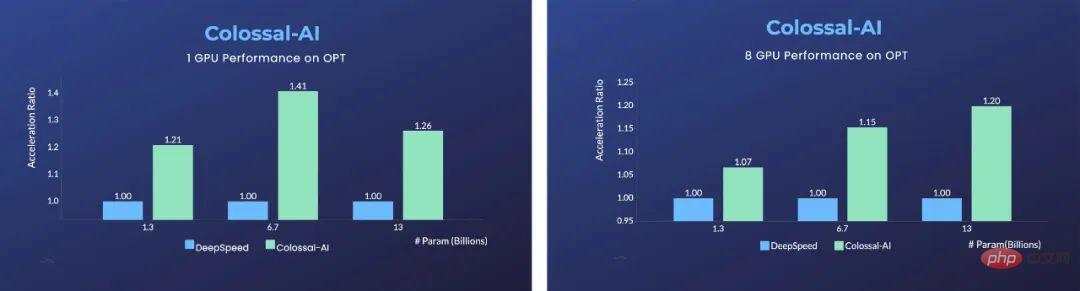

On a single GPU the advantage is significant. Compared against Microsoft DeepSpeed's ZeRO-Offload strategy at different model sizes, Colossal-AI's automated auto strategy shows a clear advantage in every case, with speedups of up to 40%. Traditional deep learning frameworks such as PyTorch can no longer run models this large on a single GPU at all.

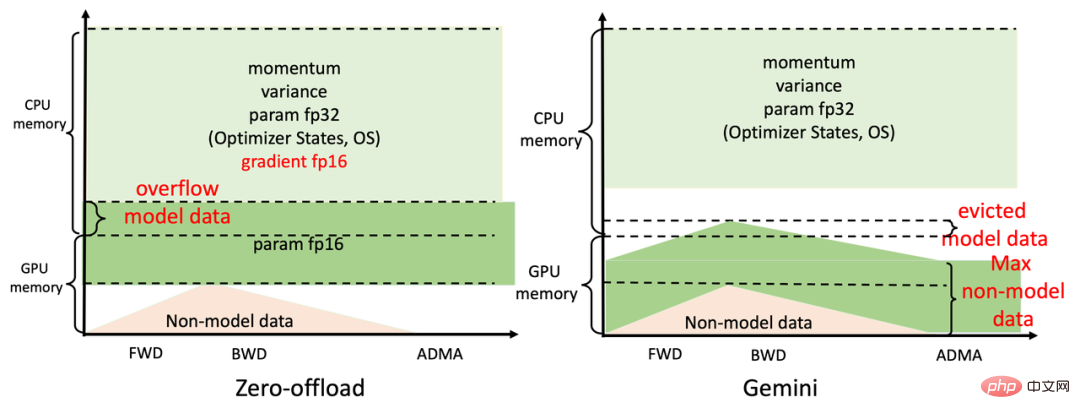

For parallel training on 8 GPUs, Colossal-AI only needs -nprocs 8 added to the launch command!

The secret behind such significant improvements is Colossal-AI's efficient heterogeneous memory management subsystem, Gemini. Put simply, during model training Gemini warms up over the first few steps, collecting memory-consumption information from the PyTorch dynamic computation graph. After warm-up, before computing each operator, Gemini uses the collected memory-usage records to reserve the peak memory that operator needs on the computing device, and at the same time moves some model tensors from GPU memory to CPU memory.

Gemini's built-in memory manager tags each tensor with state information such as HOLD, COMPUTE, and FREE. Based on the dynamically queried memory usage, it continually transitions tensor states and adjusts where tensors are placed. Compared with the static partitioning of DeepSpeed's ZeRO-Offload, Colossal-AI Gemini uses GPU and CPU memory more efficiently, maximizing model capacity while balancing training speed when hardware is extremely limited.
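The warm-up-then-reserve idea and the tensor states can be illustrated with a small simulation. Everything here is hypothetical: the class and method names, the integer memory units, and the eviction rule (offload HOLD tensors to the CPU, never tensors in COMPUTE, and treat FREE tensors as occupying no memory) are assumptions for illustration, not Gemini's actual code.

```python
from enum import Enum
from typing import Dict, List


class State(Enum):
    HOLD = "hold"        # resident, but not used by the operator about to run
    COMPUTE = "compute"  # an operand of the operator about to run
    FREE = "free"        # payload released; occupies no memory


class ToyMemoryManager:
    """Hypothetical simulation of warm-up statistics plus per-operator peak reservation."""

    def __init__(self, gpu_capacity: int, tensor_sizes: Dict[str, int]) -> None:
        self.gpu_capacity = gpu_capacity
        self.sizes = dict(tensor_sizes)
        self.state = {name: State.HOLD for name in tensor_sizes}
        self.device = {name: "cuda" for name in tensor_sizes}
        self.peak_by_op: Dict[str, int] = {}

    def record_warmup(self, op: str, extra_peak: int) -> None:
        # Warm-up phase: remember the largest non-model memory each operator needed
        self.peak_by_op[op] = max(self.peak_by_op.get(op, 0), extra_peak)

    def gpu_used(self) -> int:
        # Model-tensor memory currently resident on the GPU
        return sum(self.sizes[n] for n, dev in self.device.items()
                   if dev == "cuda" and self.state[n] is not State.FREE)

    def before_op(self, op: str, inputs: List[str]) -> List[str]:
        """Mark operands COMPUTE, then evict HOLD tensors until the recorded peak fits
        (or no HOLD tensors remain on the GPU). Returns the names of evicted tensors."""
        for name in inputs:
            self.state[name] = State.COMPUTE
            self.device[name] = "cuda"  # operands must be on the computing device
        reserve = self.peak_by_op.get(op, 0)
        evicted = []
        for name in self.sizes:
            if self.gpu_used() + reserve <= self.gpu_capacity:
                break
            if self.state[name] is State.HOLD and self.device[name] == "cuda":
                self.device[name] = "cpu"  # offload to CPU memory
                evicted.append(name)
        return evicted

    def after_op(self, inputs: List[str]) -> None:
        # Operands return to HOLD and become candidates for later eviction
        for name in inputs:
            self.state[name] = State.HOLD


if __name__ == "__main__":
    mgr = ToyMemoryManager(gpu_capacity=10, tensor_sizes={"a": 3, "b": 3, "c": 3})
    mgr.record_warmup("layer2", extra_peak=4)     # observed during warm-up
    print(mgr.before_op("layer2", inputs=["b"]))  # ['a']: evicted to make room
    print(mgr.device)
    mgr.after_op(["b"])
```

In the demo, 9 units of model tensors plus a 4-unit recorded peak exceed the 10-unit GPU, so one HOLD tensor is offloaded while the COMPUTE operand stays put; a static partition would instead fix which tensors live where before training starts.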

Taking GPT, the representative large model, as an example: with Colossal-AI, an ordinary gaming laptop with an RTX 2060 6GB is enough to train a model with up to 1.5 billion parameters, and a personal computer with an RTX 3090 24GB can directly train a model with 18 billion parameters. Colossal-AI also delivers significant improvements on professional computing cards such as the Tesla V100.

Going one step further: convenient and efficient parallel scaling

Parallel and distributed techniques are an important means of further accelerating model training. Training the world's largest, most cutting-edge AI models in the shortest time still requires efficient distributed parallel scaling. To address the pain points of existing solutions, such as limited parallel dimensions, low efficiency, poor generality, difficult deployment, and lack of maintenance, Colossal-AI uses techniques such as efficient multi-dimensional parallelism and heterogeneous parallelism so that users can deploy large AI model training quickly and efficiently with only minimal changes. For example, complex parallel strategies combining data parallelism, pipeline parallelism, 2.5-dimensional tensor parallelism, and more can be applied automatically through simple declarations. Unlike other systems and frameworks, Colossal-AI does not require intrusive code changes or manual handling of complex low-level logic.

```python
parallel = dict(
    pipeline=2,                                 # number of pipeline stages
    tensor=dict(mode='2.5d', depth=1, size=4),  # 2.5D tensor parallelism across 4 GPUs
)
```
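The declaration above leaves the data-parallel size implicit; it follows from the total number of processes. The sketch below shows that arithmetic under two assumptions worth checking against the Colossal-AI documentation for your version: the world size is the product of the data-, pipeline-, and tensor-parallel sizes, and 2.5D tensor parallelism requires size = depth × q² for some integer q. The helper name derive_parallel_layout is hypothetical.

```python
import math


def derive_parallel_layout(world_size: int, pipeline: int, tensor_size: int, depth: int) -> dict:
    """Derive the data-parallel size and the 2.5D grid side q (hypothetical helper)."""
    if tensor_size % depth:
        raise ValueError("2.5D tensor size must be a multiple of depth")
    q = math.isqrt(tensor_size // depth)
    if depth * q * q != tensor_size:
        raise ValueError("2.5D tensor size must equal depth * q * q")
    model_parallel = pipeline * tensor_size  # GPUs that together hold one model replica
    if world_size % model_parallel:
        raise ValueError("world size must be divisible by pipeline * tensor size")
    return {"data": world_size // model_parallel, "q": q, "gpus_per_replica": model_parallel}


if __name__ == "__main__":
    # pipeline=2, tensor=dict(mode='2.5d', depth=1, size=4), as in the declaration above
    for world in (8, 16):
        print(world, derive_parallel_layout(world, pipeline=2, tensor_size=4, depth=1))
```

With depth=1 and size=4 the tensor-parallel group forms a 2 × 2 grid, so one replica spans 2 × 4 = 8 GPUs: on 8 GPUs there is no data parallelism, and on 16 GPUs two replicas train in data-parallel fashion.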

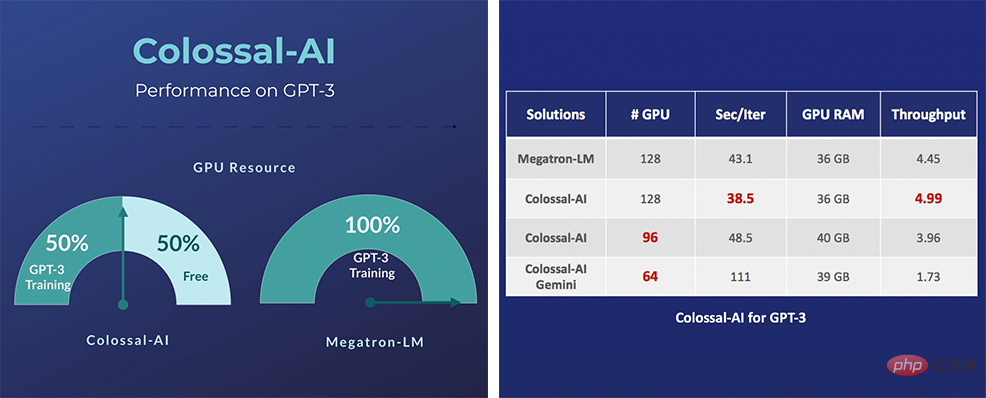

Specifically, for very large AI models such as GPT-3, Colossal-AI needs only half the computing resources of NVIDIA's solution to start training; with the same computing resources, it trains 11% faster, which can cut GPT-3 training costs by more than one million US dollars. Colossal-AI-based solutions have been adopted by well-known companies in autonomous driving, cloud computing, retail, medicine, chips, and other industries, and have been widely praised.

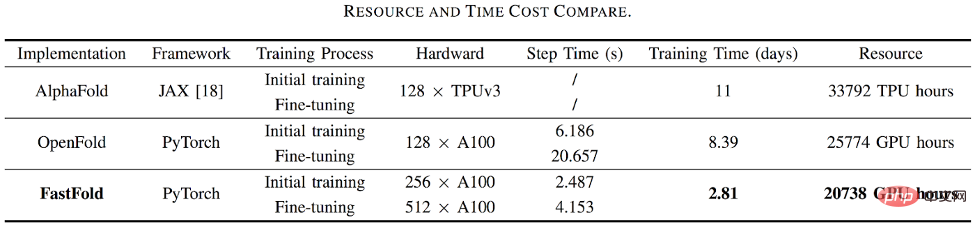

For example, for the protein structure prediction application AlphaFold2, FastFold, an acceleration solution built on Colossal-AI, outperformed the solutions from Google and Columbia University, cutting AlphaFold2 training time from 11 days to 67 hours at a lower total cost. It also achieves a 9.3× to 11.6× speedup in long-sequence inference.
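For scale, the training-time reduction quoted above works out to roughly a fourfold speedup. This is simple arithmetic on the two reported figures, not a number stated by the FastFold authors:

$$
\frac{11\ \text{days} \times 24\ \text{h/day}}{67\ \text{h}} = \frac{264\ \text{h}}{67\ \text{h}} \approx 3.9
$$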

Colossal-AI emphasizes open-source community building: it provides Chinese tutorials, runs user communities and forums, responds quickly to user feedback with iterative updates, and keeps adding cutting-edge applications such as PaLM and AlphaFold. Since being open-sourced, Colossal-AI has repeatedly ranked first worldwide on the GitHub and Papers With Code trending lists, drawing attention in China and abroad alongside many star open-source projects with tens of thousands of stars!

Project address: https://github.com/hpcaitech/ColossalAI
