Meta AI under LeCun bets on self-supervision

Is self-supervised learning really a key step towards AGI?

Meta’s chief AI scientist, Yann LeCun, keeps the long-term goal in view even when talking about “specific measures to be taken at this moment.” He said in an interview: "We want to build intelligent machines that learn like animals and humans."

In recent years, Meta has published a series of papers on self-supervised learning (SSL) for AI systems. LeCun firmly believes that SSL is a necessary prerequisite for such systems: it can help them build world models and so acquire human-like capabilities such as reasoning, common sense, and the ability to transfer skills and knowledge from one environment to another.

Their new paper shows how a self-supervised system called a masked autoencoder (MAE) can learn to reconstruct images, video, and even audio from very fragmentary, incomplete data. While MAEs are not a new idea, Meta has extended the work into new areas. LeCun said that by learning to predict missing data, whether in a still image or in a video or audio sequence, MAE systems are building a model of the world. He said: "If it can predict what is about to happen in a video, it must understand that the world is three-dimensional, that some objects are inanimate and do not move on their own, and that other objects are alive and harder to predict, all the way up to predicting the complex behavior of living beings." Once an AI system has an accurate model of the world, it can use this model to plan actions.

LeCun said, “The essence of intelligence is learning to predict.” Although he did not claim that Meta’s MAE system is close to artificial general intelligence (AGI), he believes that it is an important step towards it.

But not everyone agrees that Meta's researchers are on the right path toward AGI. Yoshua Bengio sometimes engages in friendly debates with LeCun about big ideas in AI. In an email to IEEE Spectrum, Bengio laid out some of the differences and similarities in their goals.

Bengio wrote: "I really don't think our current methods (whether self-supervised or not) are enough to bridge the gap between artificial and human intelligence levels." He said that the field needs to make "qualitative progress" before it can truly push the technology closer to human-level artificial intelligence.

Bengio agreed with LeCun’s view that “the ability to reason about the world is the core element of intelligence.” However, his team focuses not on models that can predict, but on models that can represent knowledge in the form of natural language. He noted that such models would allow us to combine these pieces of knowledge to solve new problems, run counterfactual simulations, or study possible futures. Bengio's team has developed a new neural network framework that is more modular than the end-to-end learning approach favored by LeCun.

The Hot Transformer

Meta’s MAE is based on a neural network architecture called the Transformer. This architecture first became popular in natural language processing and later spread to many other fields, including computer vision.

Of course, Meta is not the first team to use Transformers successfully for vision tasks. Ross Girshick, a researcher at Meta AI, said that Google’s research on the Vision Transformer (ViT) inspired the Meta team. “The adoption of the ViT architecture helped (us) eliminate some obstacles encountered during the experiment.”

Girshick is one of the authors of Meta's first MAE paper; another is He Kaiming. The paper discusses a very simple method: mask random patches of the input image and reconstruct the missing pixels.

The model is trained in much the same way as BERT and some other Transformer-based language models. Researchers show those models huge text databases in which some of the words are missing, or "masked". The model has to predict the missing words on its own; the masked words are then revealed so that the model can check its work and update its parameters. This process repeats over and over. To do something similar with images, Girshick explained, the team broke each image into patches, masked some of the patches, and asked the MAE system to predict the missing parts of the image.
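To make the text-side masking step concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions, not BERT's actual procedure: the `mask_tokens` helper and the `[MASK]` placeholder are hypothetical names, the 15% ratio is the figure this article quotes for language models, and real systems add refinements that are omitted here.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder token for a hidden word


def mask_tokens(tokens, mask_ratio=0.15, seed=0):
    """Hide a random subset of tokens; return the masked list and the hidden targets."""
    rng = random.Random(seed)
    # Mask at least one token, but never more than the sequence holds.
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = rng.sample(range(len(tokens)), n_mask)
    masked = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = masked[pos]  # the word the model must predict
        masked[pos] = MASK          # what the model actually sees
    return masked, targets


sentence = "the model needs to predict the missing words on its own".split()
masked, targets = mask_tokens(sentence)
print(masked)
print(targets)
```

During training, the model's predictions at the masked positions would be compared against `targets`, and the error would drive the parameter update described above.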

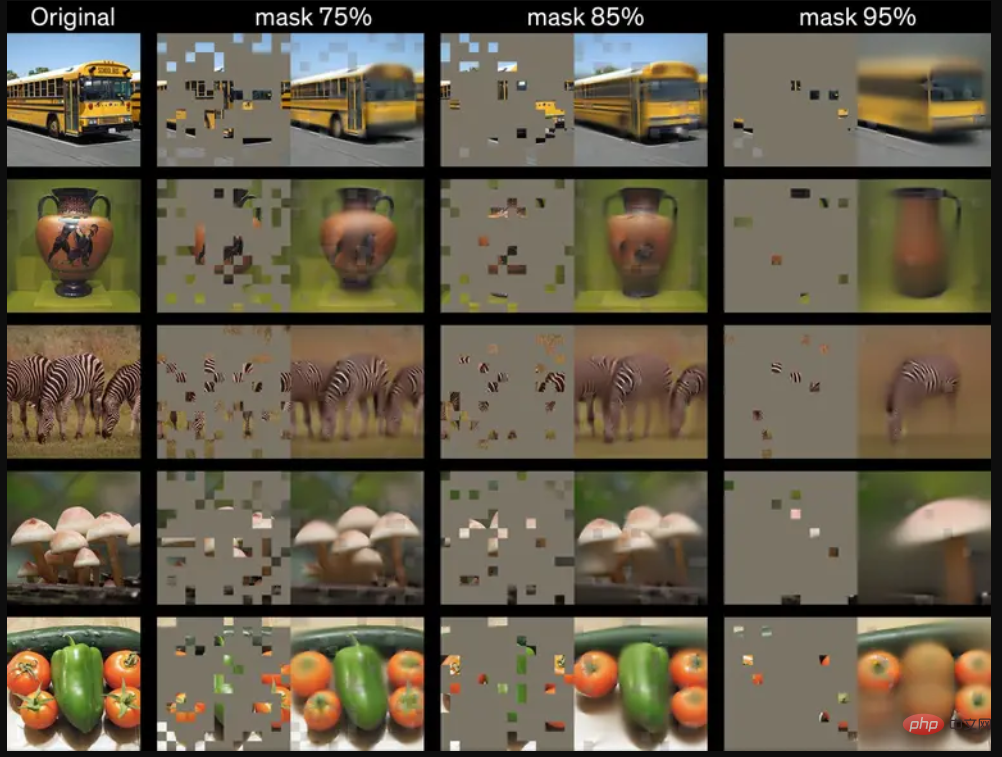

One of the team’s breakthroughs was the realization that masking most of the image gives the best results, a key difference from language Transformers, which might mask only 15% of the words. “Language is an extremely dense and efficient system of communication, and every symbol carries a lot of meaning,” Girshick said. “But images, these signals from the natural world, are not built to eliminate redundancy. That is why you can compress the content so well when creating JPG images."

Researchers at Meta AI experimented with how much of the image needed to be masked to get the best results.

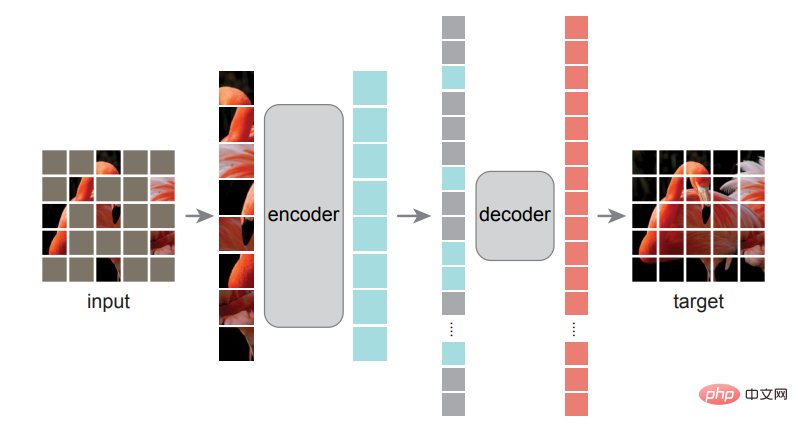

Girshick explained that by masking more than 75% of the patches in an image, they removed the redundancy that would otherwise make the task too trivial to train on. Their two-part MAE system first uses an encoder to learn the relationships between pixels from a training dataset, and then a decoder does its best to reconstruct the original image from the masked one. Once this training scheme is complete, the encoder can also be fine-tuned for vision tasks such as classification and object detection.
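The patch-masking step can be sketched with NumPy as follows. This is a rough illustration rather than Meta's implementation: the 224×224 image, the 16×16 patch size, and the helper names `patchify` and `random_mask` are assumptions, and the sketch assumes (as in the published MAE design) that the encoder receives only the visible patches while the decoder is scored on the hidden ones.

```python
import numpy as np


def patchify(image, patch):
    """Split an (H, W, C) image into a (num_patches, patch * patch * C) array."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    gh, gw = h // patch, w // patch
    x = image.reshape(gh, patch, gw, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # -> (gh, gw, patch, patch, c)
    return x.reshape(gh * gw, patch * patch * c)


def random_mask(num_patches, mask_ratio, rng):
    """Return sorted indices of the visible patches and of the masked patches."""
    n_keep = int(round(num_patches * (1 - mask_ratio)))
    order = rng.permutation(num_patches)
    return np.sort(order[:n_keep]), np.sort(order[n_keep:])


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))    # random stand-in for a real image
patches = patchify(image, patch=16)  # 14 * 14 = 196 patches
visible_idx, masked_idx = random_mask(len(patches), mask_ratio=0.75, rng=rng)

encoder_input = patches[visible_idx]         # what the encoder would see
reconstruction_target = patches[masked_idx]  # pixels the decoder must predict
print(encoder_input.shape, reconstruction_target.shape)  # (49, 768) (147, 768)
```

With a 75% mask ratio, only 49 of the 196 patches remain visible, which is what makes the reconstruction task hard enough to be useful.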

Girshick said, "What's ultimately exciting for us is that we see the results of this model in downstream tasks." When the encoder is used for tasks such as object recognition, "the gains we see are very substantial." He pointed out that continuing to scale up the model can lead to better performance, which is a promising direction for future models, because SSL "has the potential to use large amounts of data without manual annotation."

Going all out to learn from massive, unfiltered data sets may be Meta's strategy for improving SSL results, but it's also an increasingly controversial approach. AI ethics researchers like Timnit Gebru have called attention to the biases inherent in the uncurated data sets that large language models learn from, which can sometimes lead to disastrous results.

Self-supervised learning for video and audio

In the video MAE system, the masker obscures up to 95% of each video frame, because the similarity between frames means that video signals have even more redundancy than static images. Meta researcher Christoph Feichtenhofer said that video is often computationally intensive to process, so a big advantage of the MAE approach is that masking out up to 95% of each frame reduces the computational cost by up to 95%. The video clips used in these experiments were only a few seconds long, but Feichtenhofer said that training AI systems on longer videos is a very active research topic. Imagine a virtual assistant that has a video feed of your home and can tell you where you left your keys an hour ago.
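A back-of-the-envelope calculation shows why heavy masking saves so much compute. The sketch below rests on several assumptions that are not from the article: a 16-frame clip at 224×224, 16×16 patches taken frame by frame, and an encoder that processes only the visible patches. The `token_budget` helper is hypothetical, and the cost ratios are rough proxies that ignore the decoder.

```python
def token_budget(frames, height, width, patch, mask_ratio):
    """Count patch tokens in a clip and how many remain visible after masking."""
    total = frames * (height // patch) * (width // patch)
    visible = round(total * (1 - mask_ratio))
    return total, visible


total, visible = token_budget(frames=16, height=224, width=224, patch=16, mask_ratio=0.95)
kept = visible / total

print(f"{visible} of {total} tokens reach the encoder ({kept:.1%})")
# Layers that work on each token independently scale linearly with token count.
print(f"per-token layers: ~{kept:.1%} of the unmasked cost")
# Self-attention compares every token with every other, so it scales quadratically.
print(f"self-attention:   ~{kept ** 2:.2%} of the unmasked cost")
```

Under these assumptions the encoder handles roughly one token in twenty, which is consistent with the scale of savings Feichtenhofer describes.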

More directly, image and video systems could both be useful for the classification tasks required for content moderation on Facebook and Instagram. Feichtenhofer said that "integrity" is one possible application: "We are communicating with the product team, but this is very new and we don’t have any specific projects yet.”

For the audio MAE work, Meta AI’s team said they will publish the research results on arXiv soon. They found a clever way to apply the masking technique: they converted the sound files into spectrograms, which are visual representations of the spectrum of frequencies in a signal, and then masked parts of those images for training. The reconstructed audio is impressive, although the model can currently handle only clips of a few seconds. Bernie Huang, a researcher on the audio system, said potential applications of this research include classification tasks, assisting voice over IP (VoIP) transmission by filling in the audio lost when packets are dropped, and finding a more efficient way to compress audio files.
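The spectrogram trick can be sketched in a few lines of NumPy. This is an illustrative approximation only: the synthetic 440 Hz tone, the frame and hop lengths, the 4×4 patch size, the 80% mask ratio, and the helper names `spectrogram` and `mask_patches` are all assumptions, since the article does not give the settings Meta used.

```python
import numpy as np


def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude spectrogram from a short-time Fourier transform (frames x bins)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1))


def mask_patches(spec, patch, mask_ratio, rng):
    """Zero out a random subset of patch-sized blocks, as if the spectrogram were an image."""
    rows, cols = spec.shape[0] // patch, spec.shape[1] // patch
    cropped = spec[: rows * patch, : cols * patch]  # drop edges that do not tile
    hidden = np.zeros(rows * cols, dtype=bool)
    n_mask = round(rows * cols * mask_ratio)
    hidden[rng.choice(rows * cols, size=n_mask, replace=False)] = True
    hidden = hidden.reshape(rows, cols)
    # Expand the per-patch decision to a per-cell boolean mask.
    cell_mask = np.repeat(np.repeat(hidden, patch, axis=0), patch, axis=1)
    return np.where(cell_mask, 0.0, cropped), cell_mask


sample_rate = 8000
t = np.arange(sample_rate) / sample_rate  # one second of audio
signal = np.sin(2 * np.pi * 440 * t)      # synthetic 440 Hz test tone
spec = spectrogram(signal)                # shape (61, 129)

rng = np.random.default_rng(0)
masked_spec, cell_mask = mask_patches(spec, patch=4, mask_ratio=0.8, rng=rng)
print(spec.shape, masked_spec.shape, cell_mask.mean())
```

A model trained on `masked_spec` would be asked to fill in the cells where `cell_mask` is true; turning a reconstructed spectrogram back into audio is a separate step not shown here.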

Meta has been open-sourcing its AI research, including these MAE models, and has also released a pre-trained large language model to the AI community. But critics point out that despite this openness in research, Meta has not made its core business algorithms available for study: those that control news feeds, recommendations, and ad placement.
