Exploring the road to enterprise MLOps implementation: the "MLOps Best Practices" session of the AISummit Global Artificial Intelligence Technology Conference was successfully held

Enterprises currently face many difficulties in applying artificial intelligence at scale: long development and launch cycles, results that fall short of expectations, and difficulty matching data with models. MLOps emerged in this context and is becoming a key technology for scaling machine learning in the enterprise.

Recently, the AISummit Global Artificial Intelligence Technology Conference organized by 51CTO was successfully held. In the conference's "MLOps Best Practices" session, four speakers gave keynote speeches: Tan Zhongyi, Vice Chairman of the TOC of the Open Atomic Foundation; Lu Mian, System Architect at 4Paradigm; Wu Guanlin, artificial intelligence researcher at NetEase Cloud Music; and Huang Bing, deputy director of the Big Data and Artificial Intelligence Laboratory of the Software Development Center of the Industrial and Commercial Bank of China (ICBC). They discussed MLOps practice, results, and cutting-edge trends around hot topics such as the R&D and operations cycle, continuous training and continuous monitoring, model versioning and lineage, online and offline data consistency, and efficient data supply.

Definition and Evaluation of MLOps

Andrew Ng has said on many occasions that AI has shifted from model-centric to data-centric, and that data is the biggest challenge in putting AI into practice. Ensuring a high-quality supply of data is therefore a key issue. Solving it requires MLOps practices that help AI be implemented quickly, easily, and cost-effectively.

So what problems does MLOps solve, and how can the maturity of an MLOps project be assessed? Tan Zhongyi, Vice Chairman of the Open Atomic Foundation TOC and a member of the LF AI & Data TAC, answered these questions in detail in his keynote speech "From model centric to data centric: MLOps helps AI be implemented quickly, easily and cost-effectively".

Tan Zhongyi first shared the views of several industry scientists and analysts. Andrew Ng believes that improving data quality does more for the effectiveness of AI deployments than improving model algorithms. In his view, the most important task of MLOps is to maintain a high-quality data supply at every stage of the machine learning life cycle.

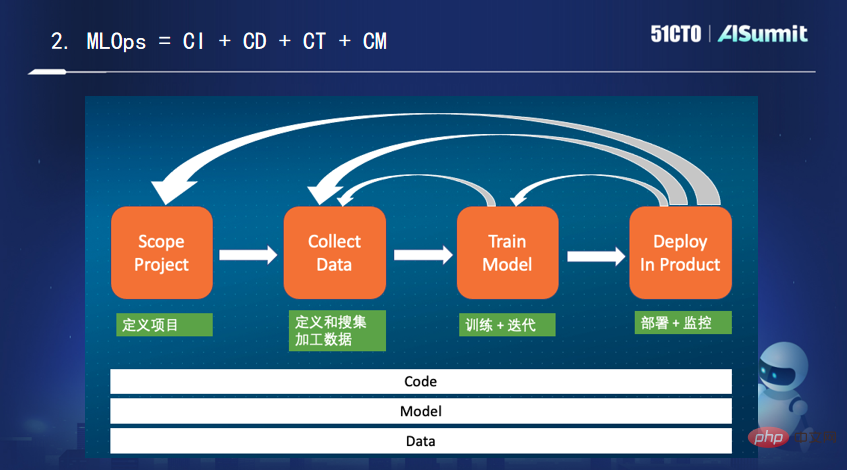

Large-scale implementation of AI requires MLOps. There is no industry consensus on exactly what MLOps is, so Tan Zhongyi offered his own definition: "continuous integration, continuous deployment, continuous training and continuous monitoring of code, models, and data."
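To make the "continuous monitoring of data" part of this definition concrete, here is a minimal Python sketch of one simple monitoring check. It is an illustration under stated assumptions, not a method described in the talk: it compares the mean of a live feature with its training-time baseline, measures the shift in baseline standard deviations, and flags retraining when the shift exceeds a threshold. The function name `needs_retraining`, the 0.5 threshold, and the sample values are all hypothetical; real systems usually rely on richer drift statistics.

```python
from statistics import mean, stdev
from typing import Sequence


def needs_retraining(
    baseline: Sequence[float], live: Sequence[float], max_shift: float = 0.5
) -> bool:
    """Return True when the live mean drifts too far from the training baseline.

    The shift is expressed in units of the baseline's sample standard deviation.
    The default threshold of 0.5 is an arbitrary, hypothetical value.
    """
    base_mean = mean(baseline)
    base_std = stdev(baseline)  # requires at least two baseline values
    live_mean = mean(live)
    if base_std == 0:
        # Constant baseline: any change in the live mean counts as drift.
        return live_mean != base_mean
    shift = abs(live_mean - base_mean) / base_std
    return shift > max_shift


# Hypothetical values of one numeric feature at training time and in production.
training_values = [10.0, 12.0, 11.0, 13.0, 9.0, 10.0, 12.0, 11.0]
stable_window = [11.0, 10.0, 12.0, 11.0]
drifted_window = [20.0, 21.0, 19.0, 22.0]

print(needs_retraining(training_values, stable_window))   # False
print(needs_retraining(training_values, drifted_window))  # True
```

In a pipeline, a check like this would run on a schedule against fresh production data, and a `True` result would trigger the "continuous training" step rather than a manual investigation.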

Next, Tan Zhongyi focused on the characteristics of the Feature Store (feature platform), a platform unique to machine learning, and on the mainstream feature platform products currently on the market.

Finally, Tan Zhongyi briefly described the MLOps maturity model. He noted that Microsoft Azure divides MLOps maturity into levels 0 through 4 according to how automated the entire machine learning process is: level 0 means no automation, levels 1 to 3 mean partial automation, and level 4 means a high degree of automation.

A production-level feature platform with online-offline consistency

Many machine learning scenarios require features to be computed in real time. Turning feature scripts that data scientists developed offline into online, real-time feature computation makes AI implementation very costly.

In response to this pain point, Lu Mian, 4Paradigm system architect and leader of its database and high-performance computing teams, gave a keynote speech titled "Open Source Machine Learning Database OpenMLDB: An Online-Offline Consistent, Production-Level Feature Platform". He focused on how OpenMLDB lets machine learning features go online as soon as they are developed, and how it ensures the correctness and efficiency of feature computation.

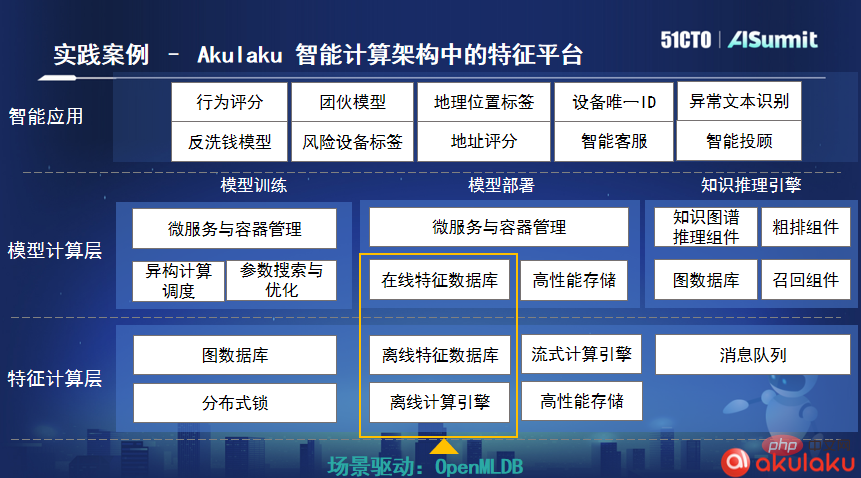

Lu Mian pointed out that as AI engineering moves into production, verifying online-offline consistency during feature engineering has become a major implementation cost. OpenMLDB offers a low-cost open-source solution: it solves the core problem of online-offline consistency in machine learning, which guarantees correctness, and it also delivers millisecond-level real-time feature computation. This is its core value.
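The idea behind online-offline consistency is that training and serving compute a feature from one shared definition. The Python sketch below illustrates only that idea; it is not OpenMLDB's API (OpenMLDB itself expresses features in SQL, and none of its interfaces are reproduced here). The feature, a user's total amount over the trailing hour, and the names `in_window`, `offline_feature`, and `OnlineWindowSum` are hypothetical. A batch function recomputes the feature over a historical log for training, a streaming class maintains it incrementally for serving, and both use the same `in_window` rule.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

# (user_id, timestamp in seconds, amount); the event schema is hypothetical.
Event = Tuple[str, int, float]

WINDOW_SECONDS = 3600  # hypothetical feature: a user's total amount over the trailing hour


def in_window(event_ts: int, now_ts: int) -> bool:
    """Single shared window definition used by both the offline and online paths."""
    return now_ts - WINDOW_SECONDS < event_ts <= now_ts


def offline_feature(history: List[Event]) -> List[float]:
    """Batch path (training): recompute the feature for each event from the log.

    Only events up to and including the current one are visible, so no future
    data leaks into a training example.
    """
    values = []
    for i, (user, ts, _) in enumerate(history):
        visible = history[: i + 1]
        values.append(sum(a for u, t, a in visible if u == user and in_window(t, ts)))
    return values


class OnlineWindowSum:
    """Streaming path (serving): per-user incremental window; events arrive in time order."""

    def __init__(self) -> None:
        self._windows: Dict[str, Deque[Tuple[int, float]]] = defaultdict(deque)

    def update_and_get(self, user: str, ts: int, amount: float) -> float:
        window = self._windows[user]
        window.append((ts, amount))
        # Evict events that have fallen out of the shared window definition.
        while window and not in_window(window[0][0], ts):
            window.popleft()
        return sum(a for _, a in window)


log: List[Event] = [
    ("u1", 0, 10.0),
    ("u2", 100, 5.0),
    ("u1", 1800, 20.0),
    ("u1", 4000, 7.0),
]

online = OnlineWindowSum()
online_values = [online.update_and_get(u, t, a) for u, t, a in log]
offline_values = offline_feature(log)
print(offline_values)                   # [10.0, 5.0, 30.0, 27.0]
print(online_values == offline_values)  # True
```

Any divergence between the two code paths, such as a different window boundary in only one of them, can surface as unequal outputs in the final comparison; catching that kind of silent mismatch is what online-offline consistency verification is for.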

According to Lu Mian, the Indonesian online payment company Akulaku was OpenMLDB's first community enterprise user after the project was open-sourced, and it has integrated OpenMLDB into its intelligent computing architecture. In production, Akulaku processes nearly 1 billion orders per day on average. With OpenMLDB, its data processing latency is only 4 milliseconds, which fully meets its business needs.

Building an end-to-end machine learning platform

Relying on its massive data, precise algorithms, and real-time systems, NetEase Cloud Music serves multiple content distribution and commercialization scenarios, while its modeling work pursues a series of algorithm engineering goals: high efficiency, a low barrier to use, and significant model gains. To this end, the NetEase Cloud Music algorithm engineering team has begun building an end-to-end machine learning platform in step with the music business.

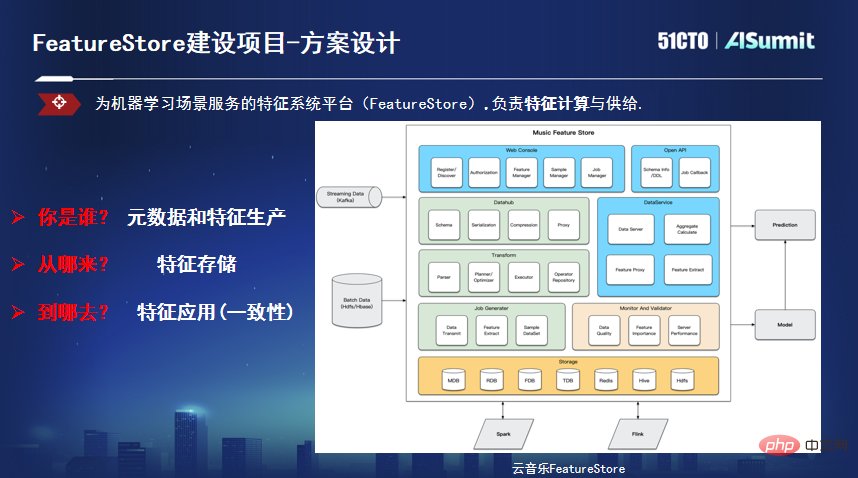

Wu Guanlin, NetEase Cloud Music artificial intelligence researcher and technical director, gave a keynote speech titled "NetEase Cloud Music Feature Platform Technology Practice". Starting from the background of the Cloud Music business, he explained the team's plan for making models real-time and shared further thoughts on how it combines with the Feature Store.

Wu Guanlin noted that building model and algorithm projects at Cloud Music involved three major pain points: a low degree of real-time processing, low modeling efficiency, and limited model capability caused by online-offline inconsistency. To address them, the team started with real-time models and built a corresponding Feature Store platform as real-time models were rolled out across the business.

Wu Guanlin explained that the team first explored real-time models in live-streaming scenarios and achieved some results. On the engineering side, they also worked out a complete pipeline and built some basic engineering infrastructure. However, real-time models focus on fine-tuning real-time scenarios, while more than 80% of scenarios use offline models. In the full-pipeline modeling process, each scenario's developers start from the raw data, which leads to long modeling cycles, unpredictable results, and a high barrier for newcomers. Across a model's launch cycle, 80% of the time is data-related, with features accounting for as much as 50%. This led the team to distill a dedicated feature platform, the Feature Store.

The Feature Store mainly solves three problems. First, it defines metadata, unifies feature lineage, computation, and push processes, and implements an efficient feature production pipeline that integrates batch and stream processing. Second, it addresses feature storage according to the characteristics of the features, providing several types of storage engines to match the different latency and throughput requirements of actual usage scenarios. Third, it solves feature consistency: data is read in a specified format through a unified API and fed to the machine learning model for inference, training, and so on.
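The three responsibilities above (registered metadata with lineage, storage tiers matched to latency and throughput, and a unified read API) can be illustrated with a deliberately tiny in-memory Python sketch. It is not NetEase Cloud Music's implementation: the class names, the two in-memory tiers standing in for real storage engines, and the feature names are all hypothetical, and the batch-stream production pipeline is omitted.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class FeatureDefinition:
    """Registered metadata: the single source of truth for a feature."""
    name: str
    entity: str   # key the feature is attached to, e.g. "user_id"
    dtype: type   # expected Python type of each value
    source: str   # lineage: upstream table or stream the feature comes from


class FeatureStore:
    """Toy feature store with two storage tiers behind one read API."""

    def __init__(self) -> None:
        self._registry: Dict[str, FeatureDefinition] = {}
        # Online tier: most recently pushed value per (feature, entity id), for inference.
        self._online: Dict[Tuple[str, str], Any] = {}
        # Offline tier: append-only history, for training reads.
        self._offline: List[Tuple[str, str, int, Any]] = []

    def register(self, definition: FeatureDefinition) -> None:
        self._registry[definition.name] = definition

    def lineage(self, name: str) -> str:
        return self._registry[name].source

    def push(self, name: str, entity_id: str, ts: int, value: Any) -> None:
        definition = self._registry[name]  # unregistered features raise KeyError
        if not isinstance(value, definition.dtype):
            raise TypeError(f"{name} expects {definition.dtype.__name__}")
        self._online[(name, entity_id)] = value
        self._offline.append((name, entity_id, ts, value))

    def get_vector(
        self, names: List[str], entity_id: str, as_of: Optional[int] = None
    ) -> List[Any]:
        """Unified read API: values in the requested order, None where missing.

        as_of=None reads the online tier (inference); an integer timestamp reads
        the newest offline value at or before that time (training).
        """
        for n in names:
            if n not in self._registry:
                raise KeyError(n)
        if as_of is None:
            return [self._online.get((n, entity_id)) for n in names]
        vector = []
        for n in names:
            best: Optional[Tuple[int, Any]] = None
            for fname, eid, ts, value in self._offline:
                if fname == n and eid == entity_id and ts <= as_of:
                    if best is None or ts >= best[0]:
                        best = (ts, value)
            vector.append(None if best is None else best[1])
        return vector


# Hypothetical features for a music-streaming user.
store = FeatureStore()
store.register(FeatureDefinition("play_count_7d", "user_id", int, "hypothetical.play_events"))
store.register(FeatureDefinition("avg_listen_minutes", "user_id", float, "hypothetical.listen_log"))
store.push("play_count_7d", "u1", 100, 3)
store.push("avg_listen_minutes", "u1", 100, 12.5)
store.push("play_count_7d", "u1", 200, 5)

features = ["play_count_7d", "avg_listen_minutes"]
print(store.get_vector(features, "u1"))             # [5, 12.5] (inference)
print(store.get_vector(features, "u1", as_of=150))  # [3, 12.5] (training snapshot)
```

Because inference and training both go through `get_vector` with the same feature list, the model always receives values in the same order and type, which is the consistency property the third point describes.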

New infrastructure for the development of financial intelligence

Huang Bing, deputy director of the Big Data and Artificial Intelligence Laboratory of the Software Development Center of the Industrial and Commercial Bank of China, delivered the keynote speech "Building a Solid New Artificial Intelligence Infrastructure for Financial Intelligence Innovation and Development". He focused on ICBC's MLOps practice, covering the construction process and technical practices of a full life cycle management system spanning model development, model delivery, model management, and iterative model operation.

MLOps is needed because behind the rapid development of artificial intelligence lie many existing or potential "AI technical debts" that cannot be ignored. Huang Bing believes the MLOps concept can resolve these debts: "If DevOps is the tool for solving the technical debt of software systems, and DataOps is the key to unlocking the technical debt of data assets, then MLOps, born out of the DevOps concept, is the cure for the technical debt of machine learning."

ICBC's practical experience in building MLOps can be summarized in four points. The first is to consolidate the "foundation" of shared capabilities by building an enterprise-level data middle platform that accumulates and shares data. The second is the "tools" that lower the barrier to entry: modeling and service-assembly pipelines that create a process-based, building-block R&D model. The third is the "method" of accumulating and sharing AI assets, which minimizes the cost of AI construction and is the key to forming a shared, co-built ecosystem. The fourth is the "technique" of iterative model operation: a model operation system grounded in data and business value, which is the basis for continuous iteration and quantitative evaluation of model quality.

At the end of his speech, Huang Bing offered two outlooks. First, MLOps needs to become safer and more compliant. Enterprises will need many models to achieve data-driven intelligent decision-making, which will bring more enterprise-level requirements around model development, operations and maintenance, permission control, data privacy, security, and auditing. Second, MLOps needs to be combined with the other Ops. Solving technical debt is a complex process: DevOps, DataOps, and MLOps solutions must be coordinated and interconnected so that they empower one another, bringing out the full strengths of all three and achieving a "1+1+1>3" effect.

Final thoughts

IDC predicts that by 2024, 60% of enterprises will use MLOps to implement their machine learning workflows. IDC analyst Sriram Subramanian commented: "MLOps cuts model development time to weeks, sometimes even days, just as DevOps sped up the average time to build an application. That's why you need MLOps."

We are now at an inflection point in the rapid expansion of artificial intelligence. By adopting MLOps, enterprises can build more models, innovate faster, and put AI into practice more quickly and cost-effectively. Across countless industries, MLOps is proving to be a catalyst for scaling enterprise AI.
