Systematic review of deep reinforcement learning pre-training: for online and offline research, this one article is enough

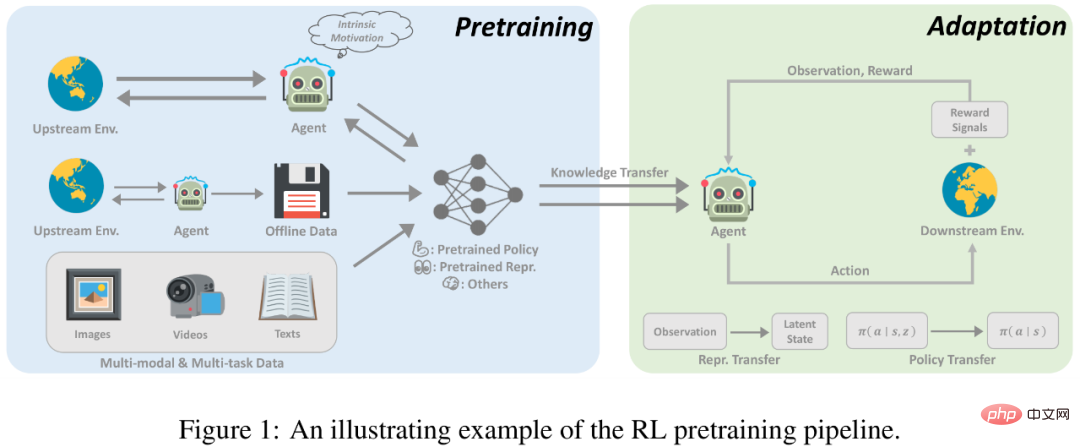

In recent years, reinforcement learning (RL) has developed rapidly, driven by deep learning. Breakthroughs in fields from games to robotics have stimulated interest in designing complex, large-scale RL algorithms and systems. However, existing RL research generally has agents learn from scratch when they face a new task, which makes it difficult to use previously acquired prior knowledge to assist decision-making and results in high computational overhead.

In supervised learning, the pre-training paradigm has been validated as an effective way to obtain transferable prior knowledge: by pre-training on large-scale datasets, a network model can quickly adapt to different downstream tasks. Similar ideas have also been tried in RL, especially in recent research on "generalist" agents [1, 2], which makes people wonder whether a universal pre-trained model like GPT-3 [3] could also emerge in the RL field.

However, applying pre-training in RL faces many challenges, such as the significant differences between upstream and downstream tasks, how to obtain and use pre-training data efficiently, and how to transfer prior knowledge effectively. These issues hinder the successful application of the pre-training paradigm in RL. At the same time, the experimental settings and methods considered in previous studies differ greatly, which makes it difficult for researchers to design appropriate pre-training models for real-world scenarios.

To sort out the development of pre-training in RL and its possible future directions, researchers from Shanghai Jiao Tong University and Tencent wrote a review that categorizes existing RL pre-training methods by setting and discusses the problems that remain to be solved.

Paper address: https://arxiv.org/pdf/2211.03959.pdf

RL Pre-training Introduction

Reinforcement learning (RL) provides a general mathematical formalism for sequential decision-making. With RL algorithms and deep neural networks, agents that learn in a data-driven manner to optimize specified reward functions have achieved superhuman performance in a variety of applications across different fields. However, although RL has proven effective at solving specified tasks, sample efficiency and generalization ability remain two major obstacles to applying RL in the real world. In RL research, the standard paradigm is for an agent to learn from experience collected by itself or by others, optimizing a neural network from random initialization for a single task. For humans, by contrast, prior knowledge of the world greatly aids decision-making. If a task is related to previously seen tasks, humans tend to reuse what they have already learned to adapt quickly, without learning from scratch. Compared with humans, therefore, RL agents suffer from low data efficiency and are prone to overfitting.

Recent advances in other areas of machine learning, however, actively advocate leveraging prior knowledge built through large-scale pre-training. By training at scale on a wide range of data, large foundation models can be adapted quickly to a variety of downstream tasks. This pretraining-finetuning paradigm has proven effective in fields such as computer vision and natural language processing, but pre-training has not yet had a significant impact on the RL field. Although the approach is promising, designing principles for large-scale RL pre-training faces many challenges: 1) the diversity of domains and tasks; 2) limited data sources; 3) the difficulty of adapting rapidly to downstream tasks. These factors arise from the inherent characteristics of RL and require special consideration by researchers.

Pre-training has great potential for RL, and this study can serve as a starting point for those interested in this direction. In this article, researchers attempt to conduct a systematic review of existing pre-training work on deep reinforcement learning.

In recent years, deep reinforcement learning pre-training has seen several breakthroughs. First, pre-training based on expert demonstrations, which uses supervised learning to predict the actions taken by experts, was used in AlphaGo. In pursuit of large-scale pre-training with less supervision, the field of unsupervised RL has grown rapidly; it allows agents to learn from interactions with the environment without reward signals. In addition, the rapid development of offline reinforcement learning (offline RL) has prompted researchers to consider how to use unlabeled and sub-optimal offline data for pre-training. Finally, offline training methods based on multi-task and multi-modal data further pave the way for a general pre-training paradigm.

Online pre-training

In the past, the success of RL relied on dense, well-designed reward functions. Traditional RL paradigms, which have made great progress in many fields, face two key challenges when scaled up to large-scale pre-training. First, RL agents overfit easily, and agents pre-trained with complex task rewards struggle to perform well on tasks they have never seen. Second, designing reward functions is usually very expensive and requires a great deal of expert knowledge, which is a major challenge in practice.

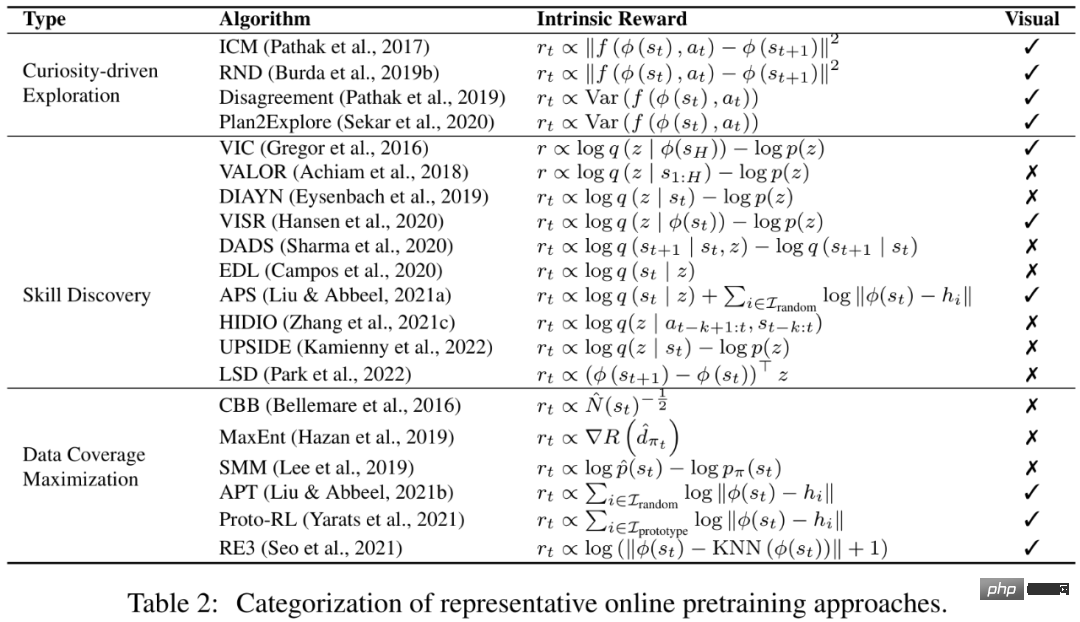

Online pre-training without reward signals may offer a viable way to learn universal prior knowledge without human-provided supervision signals. Online pre-training aims to acquire prior knowledge through interaction with the environment, without human supervision. In the pre-training phase, the agent is allowed to interact with the environment for a long time but cannot receive extrinsic rewards. This setting, also known as unsupervised RL, has been actively studied in recent years.

To motivate agents to acquire prior knowledge from the environment without any supervision signals, a well-established approach is to design intrinsic rewards that encourage the agent to collect diverse experiences or master transferable skills. Previous research has shown that agents can adapt quickly to downstream tasks after online pre-training with intrinsic rewards and standard RL algorithms.
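To make the idea of an intrinsic reward concrete, here is a minimal sketch of one common family, a count-based novelty bonus. It is an illustration only, not the specific method of the review: the class name `CountBasedBonus`, the `scale` parameter, and the state labels are all hypothetical, and states are assumed to be discrete and hashable.

```python
from collections import Counter
import math


class CountBasedBonus:
    """Illustrative novelty bonus: reward = scale / sqrt(visit count)."""

    def __init__(self, scale: float = 1.0) -> None:
        self.scale = scale
        self.counts: Counter = Counter()

    def reward(self, state) -> float:
        # Record the visit first, so the first visit to a state yields `scale`.
        self.counts[state] += 1
        return self.scale / math.sqrt(self.counts[state])


bonus = CountBasedBonus(scale=1.0)
trajectory = ["s0", "s1", "s0", "s0", "s2"]  # hypothetical state labels
intrinsic_rewards = [bonus.reward(s) for s in trajectory]
```

Because the bonus shrinks each time a state is revisited, an agent maximizing it is pushed toward states it has seen less often, which is one simple way to encourage diverse experience when no extrinsic reward is available.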

Offline pre-training

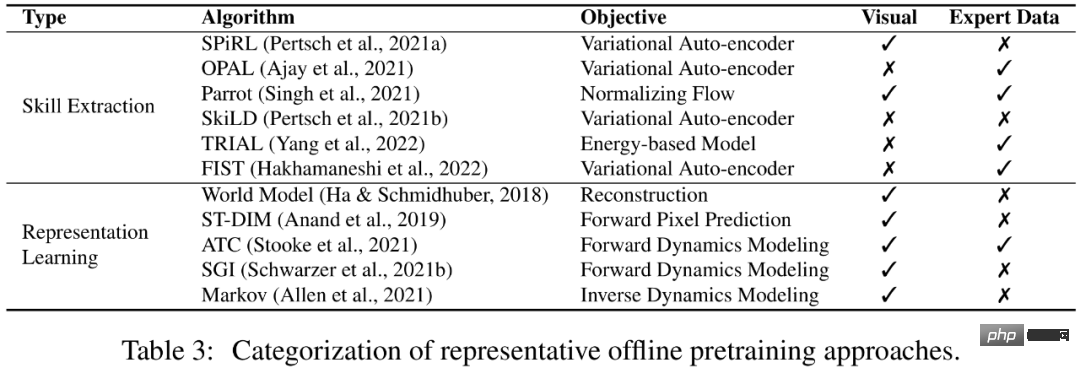

Although online pre-training can achieve good results without human supervision, it remains limited for large-scale applications. After all, online interaction is somewhat at odds with the need to train on large and diverse datasets. To solve this problem, people often hope to decouple data collection from pre-training and directly use historical data collected by other agents or humans for pre-training.

A feasible solution is offline reinforcement learning. The purpose of offline reinforcement learning is to obtain a reward-maximizing RL policy from offline data. A fundamental challenge is distribution shift, that is, the difference in distribution between the training data and the data seen during testing. Existing offline reinforcement learning methods focus on how to address this challenge when using function approximation. For example, policy constraint methods explicitly require the learned policy to avoid taking actions not seen in the dataset, and value regularization methods alleviate overestimation of the value function by fitting it to some form of lower bound. However, whether policies trained offline can generalize to new environments not seen in the offline dataset remains underexplored.
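The policy constraint idea can be sketched in a tabular toy setting: when backing up values, take the maximum only over actions that actually appear in the dataset for that state. This is a simplified illustration under stated assumptions, not an implementation of any particular published algorithm; the dataset, the action names, and the inflated starting value for the unseen action are all made up for the example.

```python
from collections import defaultdict

# Hypothetical offline dataset of (state, action, reward, next_state, done).
dataset = [
    ("s0", "right", 0.0, "s1", False),
    ("s1", "right", 1.0, "s2", True),
    ("s0", "left", 0.0, "s0", False),
]
GAMMA = 0.9

# Actions observed in the dataset for each state (the data "support").
seen = defaultdict(set)
for s, a, _, _, _ in dataset:
    seen[s].add(a)

q = defaultdict(float)
# Deliberately over-optimistic value for an action that never appears in the
# data, standing in for the extrapolation error of a function approximator.
q[("s1", "jump")] = 100.0


def constrained_max(state):
    """Max Q over actions seen in the dataset at `state`; 0.0 if none."""
    return max((q[(state, a)] for a in seen[state]), default=0.0)


for _ in range(50):
    for s, a, r, s2, done in dataset:
        # Deterministic toy problem, so the target is assigned directly.
        q[(s, a)] = r if done else r + GAMMA * constrained_max(s2)


def policy(state):
    """Greedy policy restricted to actions supported by the dataset."""
    return max(seen[state], key=lambda a: q[(state, a)])
```

With the constraint in place, the inflated value of the unseen `"jump"` action never enters a backup, so `q[("s0", "right")]` settles at 0.9 instead of being driven by the spurious estimate of 100.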

Perhaps we can avoid learning RL policies altogether and instead use offline data to learn prior knowledge that improves convergence speed or final performance on downstream tasks. More interestingly, if a model can leverage offline data without human supervision, it has the potential to benefit from massive amounts of data. In this paper, the researchers refer to this setting as offline pre-training, in which the agent can extract important information (such as good representations and behavioral priors) from offline data.
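As a rough illustration of what a behavioral prior extracted from reward-free offline data might look like, the sketch below estimates an empirical action distribution per state, which is what maximum-likelihood behavior cloning reduces to in a tabular setting. The data and the function name `behavior_prior` are hypothetical; practical methods would fit a neural network over high-dimensional observations instead.

```python
from collections import Counter, defaultdict

# Hypothetical reward-free offline data: (state, action) pairs only.
demos = [("s0", "right"), ("s0", "right"), ("s0", "left"), ("s1", "right")]

counts = defaultdict(Counter)
for state, action in demos:
    counts[state][action] += 1


def behavior_prior(state):
    """Empirical action distribution at `state`; empty dict if unseen."""
    total = sum(counts[state].values())
    return {a: n / total for a, n in counts[state].items()} if total else {}


prior_s0 = behavior_prior("s0")
```

A downstream learner could use such a prior to bias exploration toward actions the data suggests are sensible, rather than starting from uniformly random behavior.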

Towards a general agent

Pre-training methods for a single environment and a single modality mainly focus on the online and offline pre-training settings described above. Recently, researchers in the field have become increasingly interested in building a single general decision-making model (e.g., Gato [1] and Multi-game DT [2]), so that the same model can handle tasks of different modalities in different environments. To enable agents to learn from and adapt to a variety of open-ended tasks, this line of research hopes to leverage large amounts of prior knowledge in different forms, such as visual perception and language understanding. More importantly, if researchers can successfully build a bridge between RL and machine learning in other fields, and combine previous successful experience, they may be able to build a general agent model that can complete a wide variety of tasks.
