Training BERT and ResNet on a smartphone for the first time, reducing energy consumption by 35%

The researchers treated edge training as an optimization problem and found the optimal schedule that achieves minimum energy consumption under a given memory budget.

Deep learning models are now widely deployed for inference on edge devices such as smartphones and embedded platforms. Training, however, is still mostly done on large cloud servers with high-throughput accelerators such as GPUs. Centralized cloud training requires transferring sensitive data such as photos and keystrokes from edge devices to the cloud, sacrificing user privacy and incurring additional data movement costs.

Caption: Twitter @Shishir Patil

Therefore, to let users personalize their models without sacrificing privacy, on-device training methods such as federated learning perform training updates locally, without aggregating data in the cloud. These methods have been deployed in Google's Gboard keyboard to personalize keyboard suggestions and are also used by iPhones to improve automatic speech recognition. However, current on-device training methods do not support training modern architectures and large models. Training larger models on edge devices is infeasible mainly because the limited device memory cannot store the activations needed for backpropagation. A single training iteration of ResNet-50 requires over 200 times more memory than inference.
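A rough way to see why training needs so much more memory: inference can discard each activation as soon as the next layer has consumed it, whereas naive backpropagation keeps every layer's activation until the backward pass reaches it. The minimal sketch below assumes hypothetical, uniform layer sizes and ignores weights, gradients, and optimizer state, so it shows the trend rather than reproducing the ResNet-50 figure.

```python
# Minimal sketch: activation memory for inference vs. naive training.
# The layer sizes are hypothetical, not measured ResNet-50 values.


def inference_peak_bytes(act_bytes: list[int]) -> int:
    """Inference only needs a layer's input and output alive at the same time."""
    peak = act_bytes[0]
    for prev, cur in zip(act_bytes, act_bytes[1:]):
        peak = max(peak, prev + cur)
    return peak


def training_peak_bytes(act_bytes: list[int]) -> int:
    """Naive backpropagation keeps every activation until the backward pass."""
    return sum(act_bytes)


layers = [4_000_000] * 50  # 50 layers, 4 MB of activations each (assumed)
inf = inference_peak_bytes(layers)
train = training_peak_bytes(layers)
print(f"inference peak: {inf / 1e6:.0f} MB")
print(f"training peak:  {train / 1e6:.0f} MB ({train / inf:.0f}x)")
```

The ratio grows with depth, because the training total sums over all layers while the inference peak depends only on the largest adjacent pair.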

To reduce the memory footprint of cloud training, previous work proposed strategies such as paging to auxiliary memory and rematerialization (recomputation). However, these methods significantly increase overall energy consumption. The data transfers involved in paging generally require more energy than recomputing the data, and as the memory budget shrinks, the energy consumed by rematerialization grows at a rate of O(n^2).
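The quadratic growth is easiest to see at the extreme. The minimal sketch below counts forward operations for a chain of n layers, assuming each layer costs one operation and that the backward step for layer i needs that layer's input activation. Storing everything costs n operations; storing only the network input forces each missing activation to be recomputed from scratch, costing n + n(n-1)/2. Both policies are simplified illustrations, not the schemes benchmarked in the paper.

```python
# Minimal sketch: forward-op counts for an n-layer chain under two extreme
# memory policies. Assumes one op per layer and that backward step i needs
# the input activation a_{i-1}; the input a_0 is always kept.


def ops_store_all(n: int) -> int:
    """Keep every activation: one forward pass, no recomputation."""
    return n


def ops_store_none(n: int) -> int:
    """Keep only a_0: recompute a_1..a_{i-1} from scratch before backward step i."""
    forward = n
    recompute = sum(i - 1 for i in range(1, n + 1))  # = n(n-1)/2
    return forward + recompute


for n in (8, 16, 32, 64):
    print(n, ops_store_all(n), ops_store_none(n))
```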

In a recent UC Berkeley paper, researchers showed that paging and rematerialization are highly complementary. By rematerializing cheap operations while paging the results of expensive operations out to secondary storage such as flash or an SD card, they expand the effective memory capacity with minimal energy overhead. By combining the two methods, the researchers also demonstrated that models such as BERT can be trained on mobile-grade edge devices. By treating edge training as an optimization problem, they found the optimal schedule that achieves minimum energy consumption under a given memory budget.

- Paper address: https://arxiv.org/pdf/2207.07697.pdf

- Project homepage: https://poet.cs.berkeley.edu/

- GitHub address: https://github.com/shishirpatil/poet

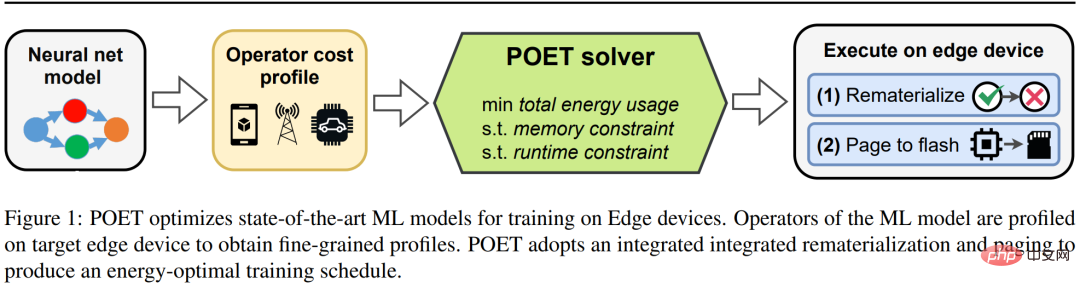

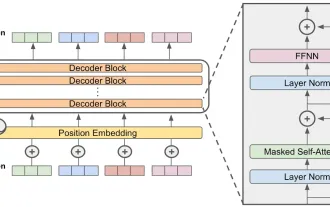

The researchers proposed POET (Private Optimal Energy Training), an algorithm for energy-optimal training of modern neural networks on memory-constrained edge devices. Its architecture is shown in Figure 1 below. Because caching all activation tensors for backpropagation is extremely expensive, POET optimizes the paging and rematerialization of activations, reducing memory consumption by up to a factor of two. The researchers reformulated the edge training problem as an integer linear program (ILP) and found that a solver can solve it to optimality within 10 minutes.

Caption: POET optimizes the training of SOTA machine learning models on edge devices.

For models deployed on real-world edge devices, training happens when the device is idle and has spare compute cycles; Google Gboard, for example, schedules model updates while the phone is charging. POET therefore also incorporates strict training constraints: given a memory budget and a number of training epochs, it generates schedules that also meet a given training deadline. The researchers also built a comprehensive cost model for POET and showed that POET is mathematically value-preserving (i.e., it makes no approximations) and works with existing off-the-shelf architectures.

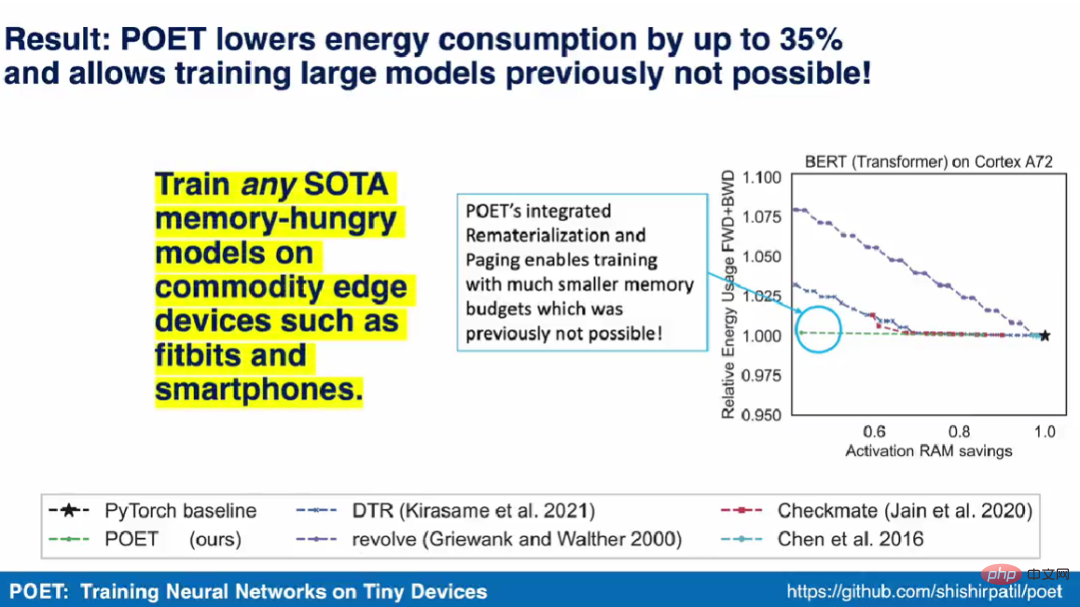

Shishir Patil, the paper's first author, said in a demonstration video that the POET algorithm can train any memory-hungry SOTA model on commodity edge devices such as smartphones. The team is also the first to demonstrate training SOTA machine learning models such as BERT and ResNet on smartphones and ARM Cortex-M devices.

Integrated paging and rematerialization

Rematerialization and paging are two techniques for reducing the memory consumption of large SOTA ML models. In rematerialization, activation tensors are deleted as soon as they are no longer needed, most commonly during the forward pass. This frees valuable memory for storing the activations of subsequent layers. When a deleted tensor is needed again, it is recomputed from other related activations as specified by its lineage. Paging, also known as offloading, is a complementary memory-reduction technique. In paging, activation tensors that are not immediately needed are paged out from primary memory to secondary storage, such as flash memory or an SD card. When a tensor is needed again, it is paged back in.
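The two mechanisms can be sketched as a tiny activation store. This is a minimal illustration, assuming a hypothetical `ActivationStore` class, a temporary directory standing in for flash or SD storage, and Python lists standing in for tensors; it is not POET's implementation.

```python
import os
import pickle
import tempfile
from typing import Any, Callable


class ActivationStore:
    """Hypothetical store illustrating rematerialization and paging."""

    def __init__(self) -> None:
        self.ram: dict[str, Any] = {}                    # primary memory
        self.lineage: dict[str, Callable[[], Any]] = {}  # how to recompute each tensor
        self.disk_dir = tempfile.mkdtemp()               # stand-in for flash / SD card

    def put(self, name: str, value: Any, recompute: Callable[[], Any]) -> None:
        self.ram[name] = value
        self.lineage[name] = recompute

    def drop(self, name: str) -> None:
        """Rematerialization: free the tensor now, recompute it later."""
        del self.ram[name]

    def page_out(self, name: str) -> None:
        """Paging: write the tensor to secondary storage and free RAM."""
        with open(os.path.join(self.disk_dir, name), "wb") as f:
            pickle.dump(self.ram.pop(name), f)

    def get(self, name: str) -> Any:
        """Return a tensor, paging it in or recomputing it if it is not in RAM."""
        if name in self.ram:
            return self.ram[name]
        path = os.path.join(self.disk_dir, name)
        if os.path.exists(path):  # page back in
            with open(path, "rb") as f:
                value = pickle.load(f)
            os.remove(path)
        else:  # rematerialize from lineage
            value = self.lineage[name]()
        self.ram[name] = value
        return value


x = [1.0, -2.0, 3.0]


def relu() -> list[float]:   # cheap op: rematerialize
    return [max(v, 0.0) for v in x]


def dense() -> list[float]:  # stand-in for an expensive op: page
    return [sum(x)]


store = ActivationStore()
store.put("relu", relu(), relu)
store.put("dense", dense(), dense)
store.drop("relu")
store.page_out("dense")
print(sorted(store.ram))  # both activations freed from RAM
print(store.get("relu"), store.get("dense"))
```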

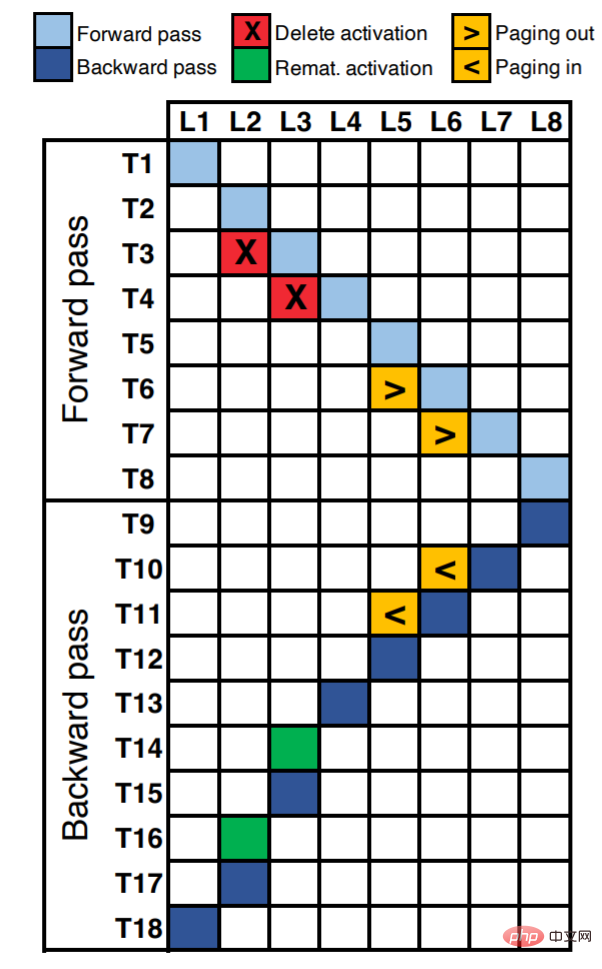

Figure 2 shows the execution schedule of an eight-layer neural network. Along the X-axis, each unit corresponds to one layer of the network (L1 through L8). The Y-axis represents the logical time steps within an epoch. The occupied (colored) cells indicate the operation performed at the corresponding time step: forward or backward computation, rematerialization, or paging.

For example, the activation of L1 is computed at the first time step (T1), and the activations of L2 and L3 are computed at T2 and T3, respectively. If L2 and L3 happen to be memory-intensive but computationally cheap operations, such as nonlinearities (tanh, ReLU, etc.), rematerialization is the best choice: their activations can be deleted ({T3, L2}, {T4, L3}) to free memory and rematerialized when backpropagation needs them ({T14, L3}, {T16, L2}).

Now assume that L5 and L6 are computationally intensive operations, such as convolutions or dense matrix multiplications. For such operations, rematerialization increases runtime and energy and is suboptimal. For these layers, it is better to page the activation tensors out to auxiliary storage ({T6, L5}, {T7, L6}) and page them back in when needed ({T10, L6}, {T11, L5}).
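The per-layer reasoning in this example can be written as a simple rule: rematerialize a layer if recomputing it costs less energy than paging its activation out and back in. The sketch below is a hypothetical heuristic with made-up energy numbers, not POET's optimizer. It ignores runtime, deadlines, and whether the memory budget is actually met, which are limitations a joint optimization over the whole schedule avoids.

```python
# Per-layer heuristic sketch (NOT POET's MILP); all costs are assumed values.
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    compute_mj: float     # energy to recompute the layer (millijoules)
    activation_mb: float  # size of the layer's output activation (MB)


def choose_action(layer: Layer, page_mj_per_mb: float) -> str:
    """Pick the cheaper of recomputing vs. paging out and back in."""
    page_cost = 2 * layer.activation_mb * page_mj_per_mb  # page out + page in
    return "rematerialize" if layer.compute_mj < page_cost else "page"


layers = [
    Layer("L2 (ReLU)", compute_mj=0.5, activation_mb=4.0),
    Layer("L3 (tanh)", compute_mj=0.6, activation_mb=4.0),
    Layer("L5 (conv)", compute_mj=40.0, activation_mb=2.0),
    Layer("L6 (matmul)", compute_mj=55.0, activation_mb=2.0),
]
for layer in layers:
    print(layer.name, "->", choose_action(layer, page_mj_per_mb=1.5))
```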

One of the main advantages of paging is that it can be pipelined to hide latency, subject to memory bus occupancy. This is because modern systems have DMA (Direct Memory Access), which moves activation tensors between main memory and secondary storage while the compute engines run in parallel. For example, at time step T7, L6 can be paged out while L7 is computed. Rematerialization, by contrast, occupies the compute engine and cannot be overlapped this way, which increases runtime. For example, time step T14 has to be spent recomputing L3, delaying the rest of the backward pass.
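A simple timing model makes this asymmetry concrete. The sketch below assumes that a DMA transfer overlaps fully with the compute of the same step, so the step takes the longer of the two, while a recomputation occupies the compute engine and adds its full time. The millisecond values are hypothetical.

```python
# Assumed overlap model, not POET's cost model.


def step_time_ms(compute_ms: float, transfer_ms: float = 0.0,
                 recompute_ms: float = 0.0) -> float:
    """Duration of one time step."""
    # DMA paging runs alongside compute; only the longer of the two matters.
    overlapped = max(compute_ms, transfer_ms)
    # Recomputation needs the compute engine, so it is serialized.
    return overlapped + recompute_ms


def total_time_ms(steps: list[dict[str, float]]) -> float:
    return sum(step_time_ms(**s) for s in steps)


# Page out L6 while computing L7: the transfer is hidden.
print(step_time_ms(compute_ms=10.0, transfer_ms=6.0))
# Transfer longer than compute: the memory bus becomes the bottleneck.
print(step_time_ms(compute_ms=10.0, transfer_ms=14.0))
# Recompute L3 before continuing the backward pass: its time is added.
print(step_time_ms(compute_ms=10.0, recompute_ms=3.0))
```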

POET

This research proposes POET, a graph-level compiler for deep neural networks that rewrites the training DAG of large models to fit within the memory limits of edge devices while maintaining high energy efficiency.

POET is hardware-aware: it first traces the execution of the forward and backward passes and records the associated memory allocation requests, as well as the runtime, memory consumption, and energy consumption of each operation. This fine-grained profiling happens only once per workload for a given hardware platform; it is automated and cheap, and it provides POET with its most accurate cost model.
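A minimal sketch of such a profiling pass, assuming the operations are plain Python callables run in sequence. POET's profiler measures real memory and energy on the target device; here energy is only estimated as runtime multiplied by an assumed average power draw, and the output size is Python's shallow object size, so the numbers are illustrative.

```python
import sys
import time
from typing import Any, Callable


def profile_ops(ops: dict[str, Callable[[Any], Any]], x: Any,
                avg_power_w: float) -> dict[str, dict[str, float]]:
    """Run ops in sequence once, recording runtime, output size, and estimated energy."""
    costs: dict[str, dict[str, float]] = {}
    for name, op in ops.items():
        start = time.perf_counter()
        x = op(x)
        runtime_s = time.perf_counter() - start
        costs[name] = {
            "runtime_ms": runtime_s * 1e3,
            "output_bytes": float(sys.getsizeof(x)),     # shallow size only
            "energy_mj": runtime_s * avg_power_w * 1e3,  # joules -> millijoules
        }
    return costs


# Hypothetical ops standing in for layers; 3 W is an assumed power draw.
ops = {
    "scale": lambda v: [2.0 * a for a in v],
    "relu": lambda v: [max(a, 0.0) for a in v],
    "sum": lambda v: [sum(v)],
}
for name, c in profile_ops(ops, list(range(-500, 500)), avg_power_w=3.0).items():
    print(name, {k: round(val, 4) for k, val in c.items()})
```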

POET then generates a mixed integer linear program (MILP) that can be solved efficiently. The POET optimizer searches for rematerialization and paging schedules that minimize end-to-end energy consumption subject to the memory budget. The resulting schedule is then used to generate a new DAG for execution on the edge device.
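To make the optimization concrete, the sketch below replaces the MILP with exhaustive search over a tiny chain: each layer's activation is either kept in RAM, rematerialized, or paged, and the search minimizes the extra energy subject to a memory budget and a runtime deadline. The cost model (kept activations stay resident until the backward pass; paging adds only its non-overlapped time) and all numbers are simplifying assumptions. POET's MILP schedules every time step and scales far beyond what brute force can handle.

```python
import itertools
from dataclasses import dataclass
from typing import Optional

ACTIONS = ("keep", "rematerialize", "page")


@dataclass(frozen=True)
class Layer:
    act_mb: float      # activation size (MB)
    compute_mj: float  # energy to recompute the layer (mJ)
    compute_ms: float  # time to compute the layer (ms)
    page_mj: float     # energy to page the activation out and back in (mJ)
    page_ms: float     # paging time NOT hidden behind compute (ms)


def extra_energy(layer: Layer, action: str) -> float:
    return {"keep": 0.0, "rematerialize": layer.compute_mj, "page": layer.page_mj}[action]


def extra_time(layer: Layer, action: str) -> float:
    return {"keep": 0.0, "rematerialize": layer.compute_ms, "page": layer.page_ms}[action]


def optimize(layers: list[Layer], mem_budget_mb: float,
             deadline_ms: float) -> Optional[tuple[float, tuple[str, ...]]]:
    """Return (extra energy, per-layer actions) of the cheapest feasible plan, or None."""
    base_ms = sum(layer.compute_ms for layer in layers)
    best: Optional[tuple[float, tuple[str, ...]]] = None
    for plan in itertools.product(ACTIONS, repeat=len(layers)):
        pairs = list(zip(layers, plan))
        # Simplification: only kept activations occupy RAM until backprop.
        mem = sum(layer.act_mb for layer, action in pairs if action == "keep")
        runtime = base_ms + sum(extra_time(layer, action) for layer, action in pairs)
        if mem > mem_budget_mb or runtime > deadline_ms:
            continue
        energy = sum(extra_energy(layer, action) for layer, action in pairs)
        if best is None or energy < best[0]:
            best = (energy, plan)
    return best


chain = [
    Layer(act_mb=4, compute_mj=0.5, compute_ms=1, page_mj=12, page_ms=2),   # ReLU-like
    Layer(act_mb=2, compute_mj=40, compute_ms=20, page_mj=6, page_ms=1),    # conv-like
    Layer(act_mb=4, compute_mj=0.75, compute_ms=1, page_mj=12, page_ms=2),  # tanh-like
    Layer(act_mb=2, compute_mj=55, compute_ms=25, page_mj=6, page_ms=1),    # matmul-like
]
for budget in (4, 8, 12):
    print(budget, optimize(chain, mem_budget_mb=budget, deadline_ms=60))
```

With these assumed numbers, the cheap layers are rematerialized and the expensive ones kept or paged, and a looser memory budget lets more activations stay in RAM at lower energy; a deadline that is too tight makes the problem infeasible.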

The MILP is solved on commodity hardware, and the schedule sent to the edge device is only a few hundred bytes, making it very memory efficient.
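One way a schedule can be this small is to spend a single bit per (time step, node) decision. The sketch below assumes a hypothetical encoding, not POET's actual format: three T x N boolean matrices (rematerialize, page in, page out) packed into bytes, filled with the Figure 2 cells and assuming 16 time steps.

```python
# Hypothetical bit-packed schedule encoding.


def pack_bits(matrix: list[list[bool]]) -> bytes:
    """Pack a T x N boolean matrix row-major, one bit per entry."""
    flat = [bit for row in matrix for bit in row]
    out = bytearray((len(flat) + 7) // 8)
    for i, bit in enumerate(flat):
        if bit:
            out[i // 8] |= 1 << (i % 8)
    return bytes(out)


def unpack_bits(data: bytes, rows: int, cols: int) -> list[list[bool]]:
    flat = [bool((data[i // 8] >> (i % 8)) & 1) for i in range(rows * cols)]
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


T, N = 16, 8  # assumed number of time steps, 8 layers
recompute = [[False] * N for _ in range(T)]
recompute[13][2] = recompute[15][1] = True  # {T14, L3}, {T16, L2}
page_out = [[False] * N for _ in range(T)]
page_out[5][4] = page_out[6][5] = True      # {T6, L5}, {T7, L6}
page_in = [[False] * N for _ in range(T)]
page_in[9][5] = page_in[10][4] = True       # {T10, L6}, {T11, L5}

blob = b"".join(pack_bits(m) for m in (recompute, page_in, page_out))
print(len(blob), "bytes")
```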

Rematerialization is most efficient for operations that are computationally cheap but memory-intensive, whereas paging is best suited for computationally intensive operations, where rematerialization would incur significant energy overhead. POET considers rematerialization and paging jointly in an integrated search space.

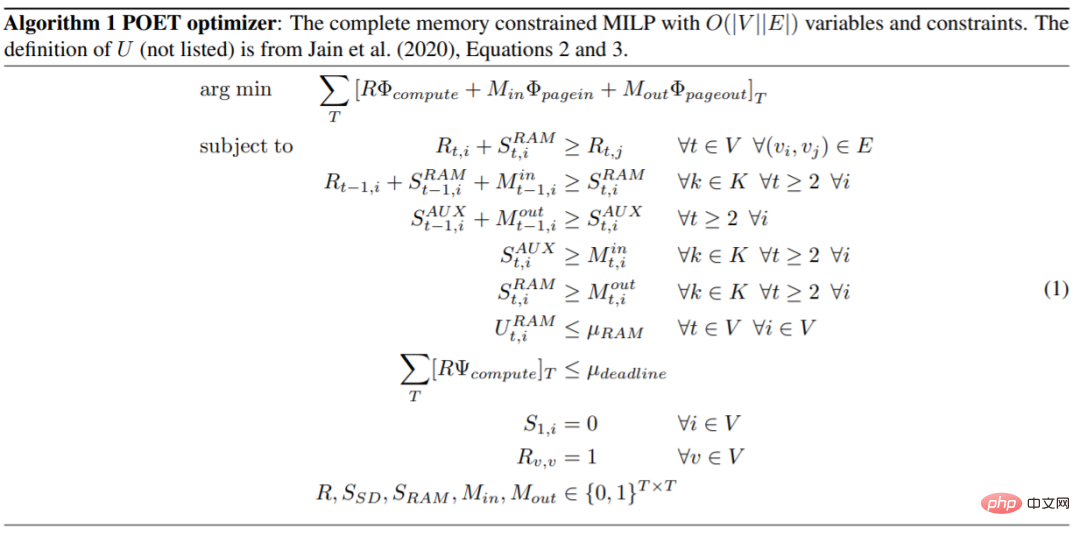

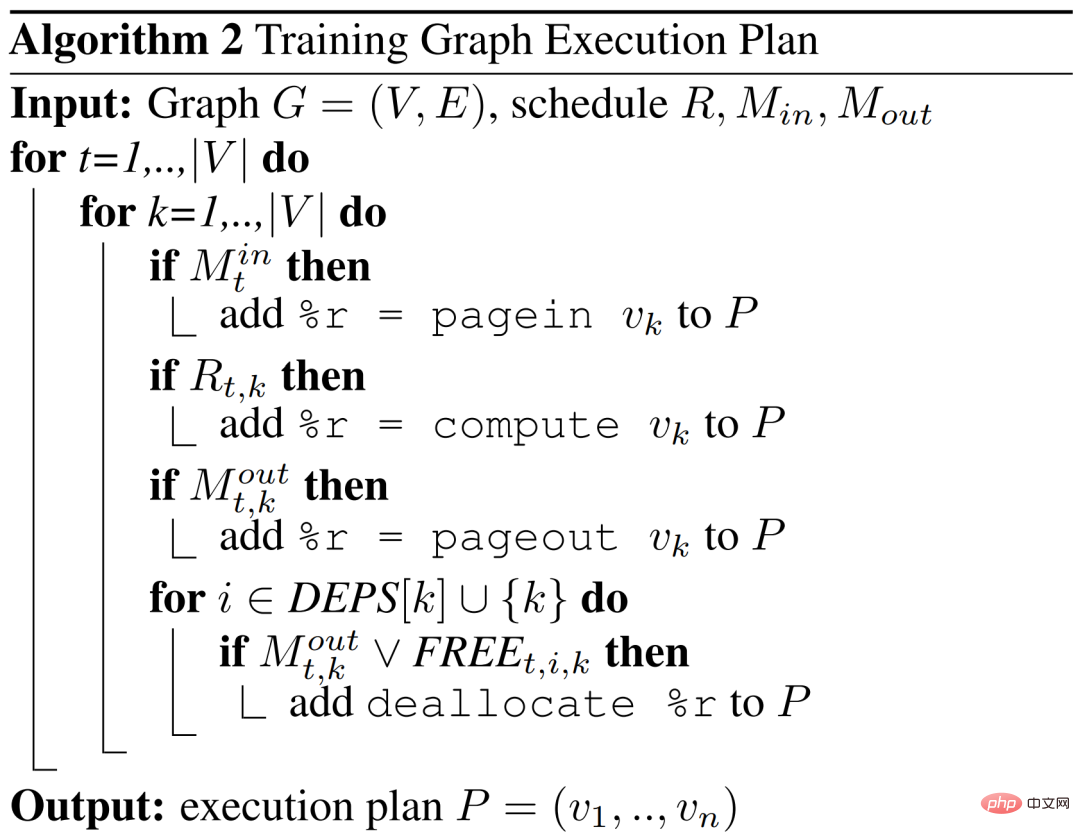

The method in this article can be extended to complex and realistic architectures. The POET optimizer algorithm is as follows.

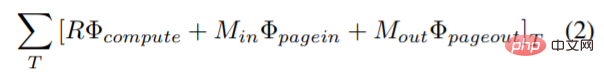

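This study introduces a new objective function that minimizes the combined energy consumption of computation, page-in, and page-out, so that paging and rematerialization are optimized together. The objective is:

$$\min \sum_{t=1}^{T} \sum_{k=1}^{N} \left( \Phi_{\text{compute}}(k)\, R_{t,k} + \Phi_{\text{pagein}}(k)\, M^{\text{in}}_{t,k} + \Phi_{\text{pageout}}(k)\, M^{\text{out}}_{t,k} \right)$$

where Φ_compute, Φ_pagein, and Φ_pageout are the energy consumed by each node for computation, page-in, and page-out, respectively, and the binary variables R_{t,k}, M^in_{t,k}, and M^out_{t,k} indicate whether node k is computed, paged in, or paged out at time step t.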
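The energy objective can be evaluated for any candidate schedule by summing, over every time step t and node k, the compute, page-in, and page-out energy that the schedule incurs. In the sketch below, the 0/1 matrices `R` (node computed or recomputed), `M_in` (paged in), and `M_out` (paged out), and the per-node energy values, are illustrative assumptions; a solver would search over these matrices subject to memory and deadline constraints rather than evaluate a fixed one.

```python
Matrix = list[list[int]]


def schedule_energy(R: Matrix, M_in: Matrix, M_out: Matrix,
                    phi_compute: list[float], phi_pagein: list[float],
                    phi_pageout: list[float]) -> float:
    """Sum compute, page-in, and page-out energy over all (t, k) decisions."""
    total = 0.0
    for t in range(len(R)):                # time steps
        for k in range(len(phi_compute)):  # graph nodes
            total += (phi_compute[k] * R[t][k]
                      + phi_pagein[k] * M_in[t][k]
                      + phi_pageout[k] * M_out[t][k])
    return total


# Three nodes, four time steps (hypothetical): compute nodes 0-2 in order,
# page node 1 out at t=1, then recompute node 0 and page node 1 in at t=3.
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
M_in = [[0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 1, 0]]
M_out = [[0, 0, 0], [0, 1, 0], [0, 0, 0], [0, 0, 0]]
phi_compute = [1.0, 8.0, 0.5]
phi_pagein = [2.0, 2.0, 2.0]
phi_pageout = [2.5, 2.5, 2.5]
print(schedule_energy(R, M_in, M_out, phi_compute, phi_pagein, phi_pageout))  # 15.0
```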

POET outputs a DAG schedule that specifies, at each time step (t), which nodes (k) of the graph are rematerialized and which are paged in or paged out.

Experimental results

In evaluating POET, the researchers set out to answer three key questions. First, how much energy can POET save across different models and platforms? Second, how does POET benefit from a hybrid paging and rematerialization strategy? Finally, how does POET adapt to different runtime budgets?

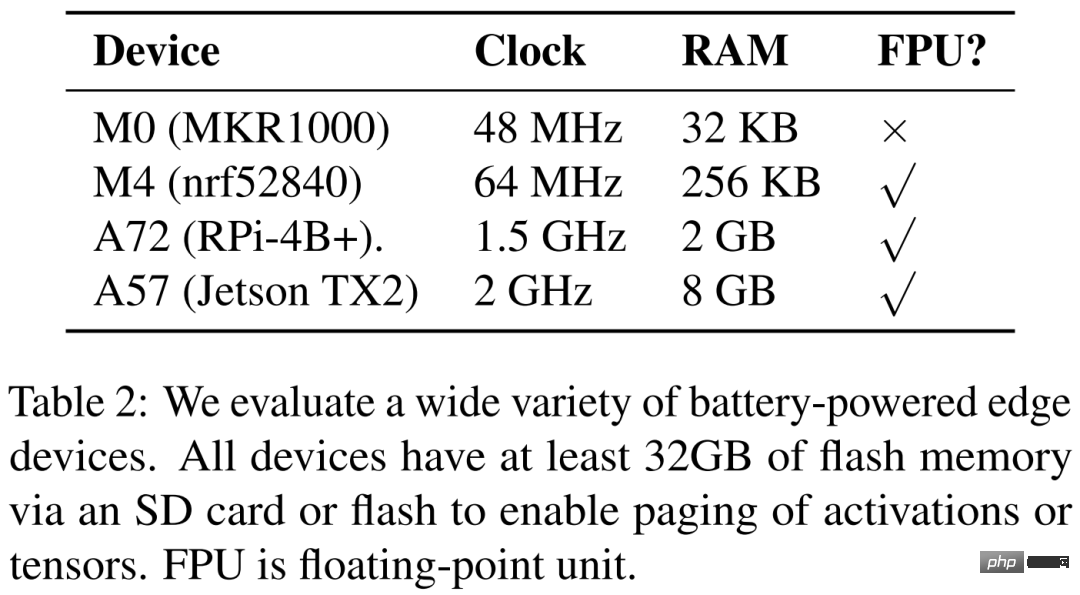

Table 2 below lists the four hardware devices used: the ARM Cortex-M0 MKR1000, the ARM Cortex-M4F nRF52840, the A72-based Raspberry Pi 4B, and the Nvidia Jetson TX2. POET is fully hardware-aware and relies on fine-grained profiling.

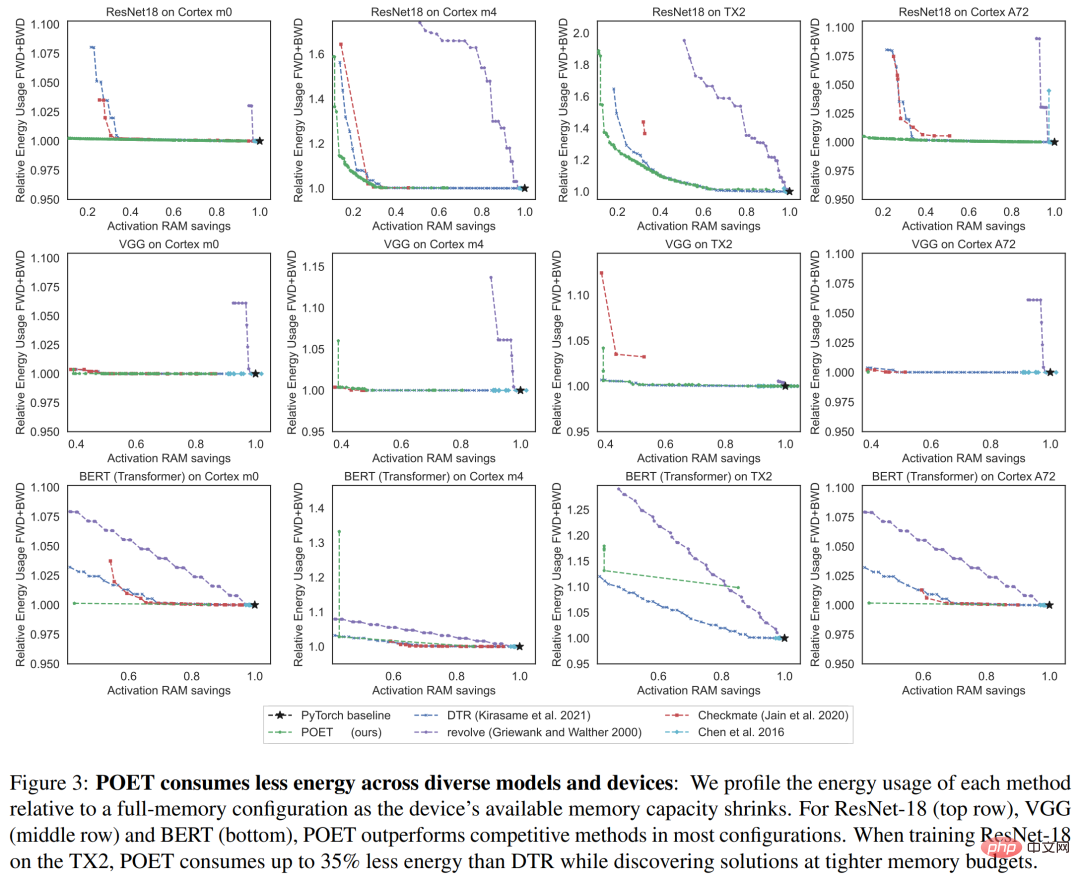

Figure 3 below shows the energy consumption of a single training epoch. Each column corresponds to a different hardware platform. The researchers found that POET generates energy-efficient schedules (Y-axis) across all platforms while reducing peak memory consumption (X-axis) and meeting time budgets.

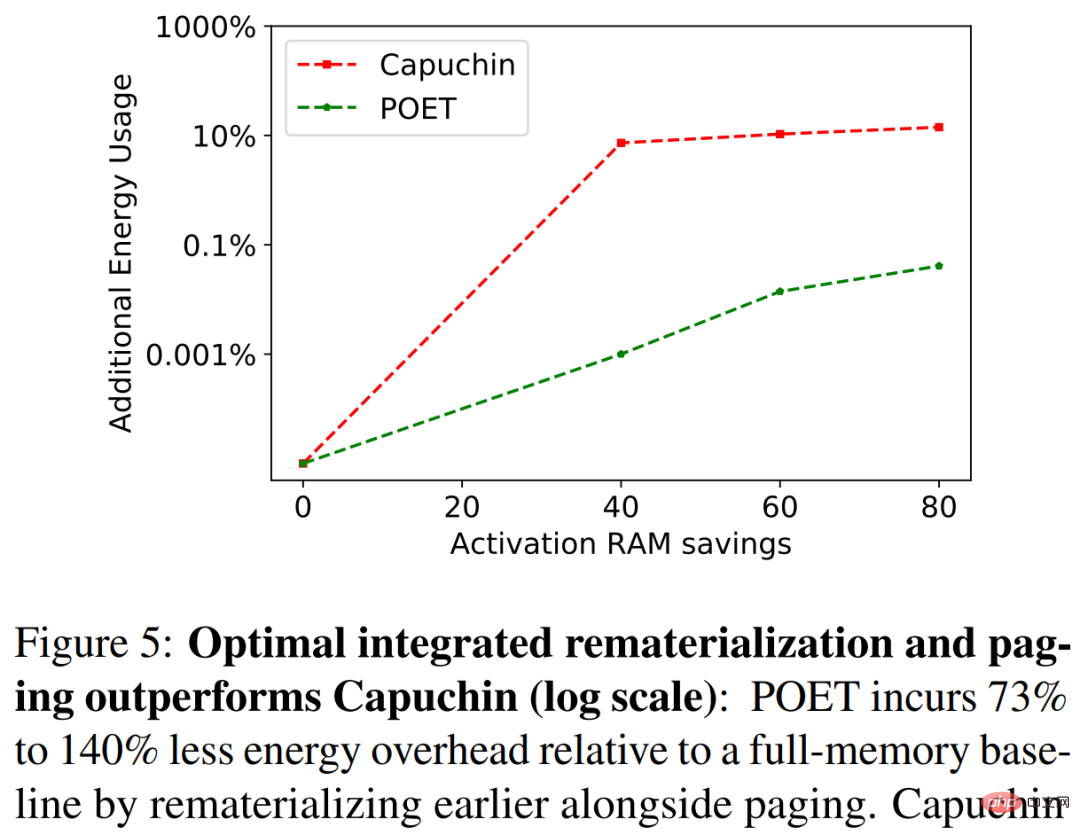

In Figure 5 below, the researchers benchmarked POET against Capuchin when training ResNet-18 on the A72. As the RAM budget shrinks, Capuchin consumes 73% to 141% more energy than the full-memory baseline, whereas POET consumes less than 1% more. This trend holds across all architectures and platforms tested.

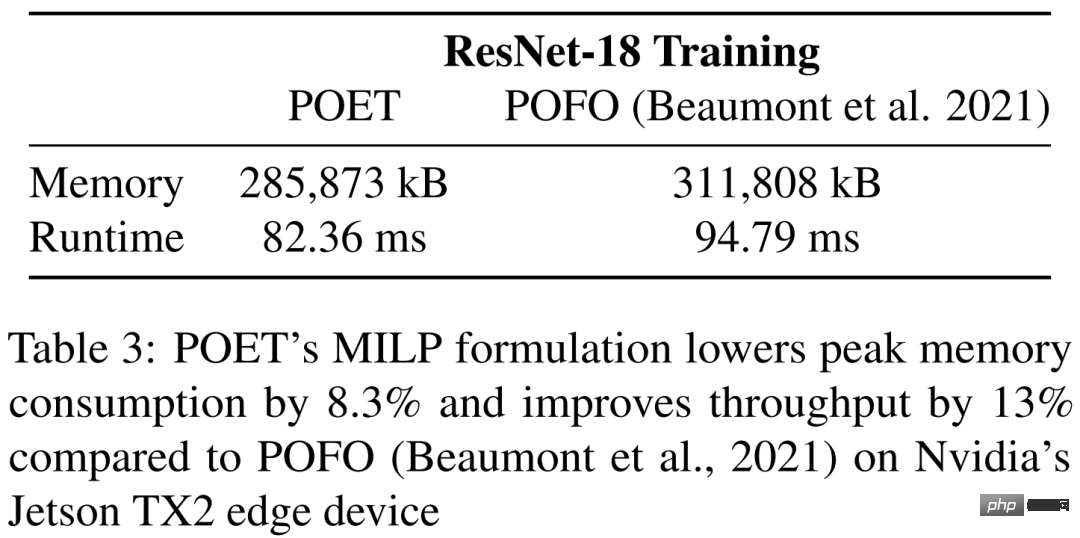

In Table 3, the researchers benchmarked POET against POFO when training ResNet-18 on Nvidia's Jetson TX2. POET found an integrated rematerialization and paging schedule that reduced peak memory consumption by 8.3% and improved throughput by 13%. This demonstrates the advantage of POET's MILP solver, which can optimize over a larger search space. Moreover, POFO supports only linear (chain-structured) models, whereas POET generalizes to nonlinear models, as shown in Figure 3.

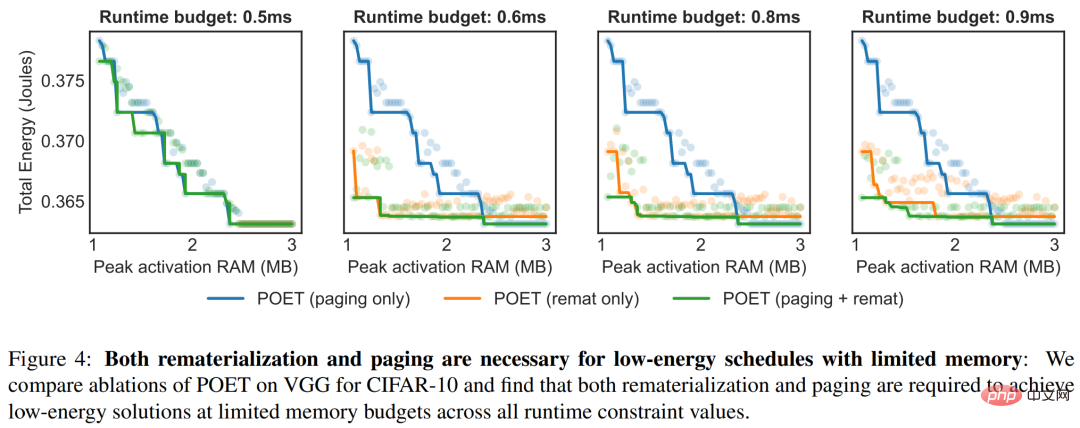

Figure 4 highlights the benefits of POET's integrated strategy under different time constraints, plotting the total energy consumption for each runtime budget.