Unlocking the correct combination of CNN and Transformer, ByteDance proposes an effective next-generation visual Transformer


Due to complex attention mechanisms and model designs, most existing visual Transformers (ViTs) cannot run as efficiently as convolutional neural networks (CNNs) in real-world industrial deployment scenarios. This raises the question: can a visual neural network infer as fast as a CNN and be as powerful as a ViT?

Some recent works have tried to design CNN-Transformer hybrid architectures to solve this problem, but the overall performance of these works is far from satisfactory. Based on this, researchers from ByteDance proposed a next-generation visual Transformer—Next-ViT—that can be effectively deployed in real-life industrial scenarios. From a latency/accuracy trade-off perspective, Next-ViT's performance is comparable to excellent CNNs and ViTs.

Paper address: https://arxiv.org/pdf/2207.05501.pdf

The Next-ViT research team developed a novel convolution block (NCB) and a novel Transformer block (NTB), both of which use deployment-friendly mechanisms to capture local and global information. The study then proposes a novel hybrid strategy (NHS) that stacks NCBs and NTBs in an efficient hybrid paradigm to improve performance on various downstream tasks.

Extensive experiments show that Next-ViT significantly outperforms existing CNN, ViT, and CNN-Transformer hybrid architectures in terms of the latency/accuracy trade-off across various vision tasks. On TensorRT, Next-ViT outperforms ResNet by 5.4 mAP on COCO detection (40.4 vs. 45.8) and by 8.2% mIoU on ADE20K segmentation (38.8% vs. 47.0%). Meanwhile, Next-ViT achieves performance comparable to CSWin with 3.6x faster inference. On CoreML, Next-ViT outperforms EfficientFormer by 4.6 mAP on COCO detection (42.6 vs. 47.2) and by 3.5% mIoU on ADE20K segmentation (45.2% vs. 48.7%).

Method

The overall architecture of Next-ViT is shown in Figure 2 below. Next-ViT follows a hierarchical pyramid architecture, with a patch embedding layer and a series of convolution or Transformer blocks at each stage. The spatial resolution is gradually reduced to 1/32 of the original, while the channel dimension is expanded stage by stage.
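To make the pyramid concrete, the following minimal sketch computes the feature-map size at each stage. Only the final 1/32 reduction comes from the text above; the four-stage split with cumulative strides of 4, 8, 16 and 32 is an assumption borrowed from common hierarchical backbones, and the channel widths are hypothetical placeholders rather than the paper's values.

```python
from typing import List, NamedTuple, Sequence


class StageShape(NamedTuple):
    stage: int
    height: int
    width: int
    channels: int


def pyramid_shapes(
    height: int,
    width: int,
    strides: Sequence[int] = (4, 8, 16, 32),        # assumed cumulative downsampling per stage
    channels: Sequence[int] = (64, 128, 256, 512),  # hypothetical widths, not from the paper
) -> List[StageShape]:
    """Return the output shape of every stage of a hierarchical pyramid."""
    if len(strides) != len(channels):
        raise ValueError("strides and channels must have the same length")
    shapes = []
    for index, (stride, width_c) in enumerate(zip(strides, channels), start=1):
        if height % stride or width % stride:
            raise ValueError("input size must be divisible by every stride")
        shapes.append(StageShape(index, height // stride, width // stride, width_c))
    return shapes


# Spatial size shrinks while the channel count grows from stage to stage.
for shape in pyramid_shapes(224, 224):
    print(shape)
```

With a 224 × 224 input, the last stage in this sketch ends at 7 × 7, which is 1/32 of the input resolution.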

The researchers first designed the core information-interaction modules in depth, developing a powerful NCB and NTB to model short-term and long-term dependencies in visual data, respectively. Local and global information are also fused in NTB to further improve modeling capability. Finally, to overcome the inherent shortcomings of existing methods, the study systematically investigates how to integrate convolution and Transformer blocks and proposes the NHS strategy, which stacks NCBs and NTBs to build a novel CNN-Transformer hybrid architecture.

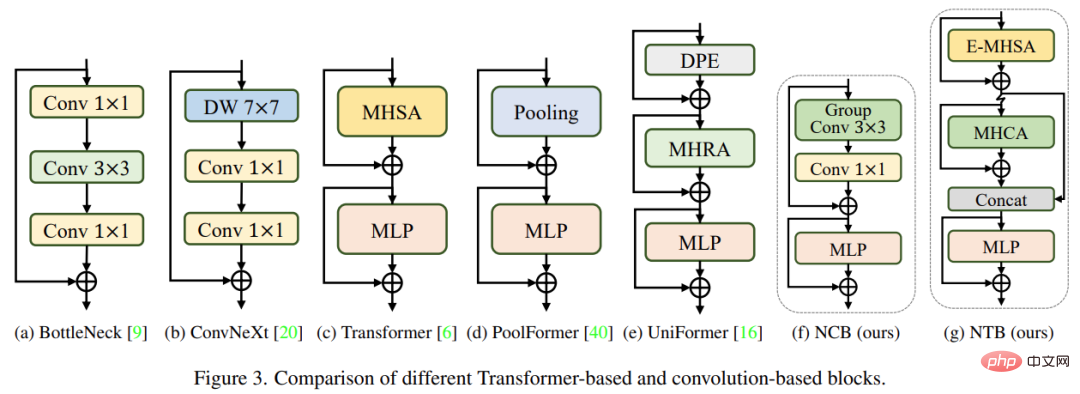

NCB

The researchers analyzed several classic structural designs, as shown in Figure 3 below. The BottleNeck block proposed by ResNet [9] has long dominated visual neural networks thanks to its inherent inductive bias and easy deployment on most hardware platforms. Unfortunately, BottleNeck blocks are less effective than Transformer blocks. The ConvNeXt block [20] modernizes the BottleNeck block by mimicking the design of the Transformer block. While ConvNeXt blocks improve network performance, their inference speed on TensorRT/CoreML is severely limited by inefficient components. The Transformer block has achieved excellent results in various vision tasks. However, because its attention mechanism is more complex, the Transformer block is much slower than the BottleNeck block on TensorRT and CoreML, which is unacceptable in most real-world industrial scenarios.

To overcome the problems of the above blocks, this study proposes the Next Convolution Block (NCB), which keeps the deployment advantages of the BottleNeck block while obtaining the outstanding performance of the Transformer block. As shown in Figure 3(f), NCB follows the general architecture of MetaFormer, which has been shown to be critical to the Transformer block.

In addition, an efficient attention-based token mixer is equally important. This study designed multi-head convolutional attention (MHCA) as an efficient token mixer that uses deployment-friendly convolution operations, and built NCB from MHCA and MLP layers in the MetaFormer paradigm [40].

NTB

NCB effectively learns local representations; the next step is to capture global information. The Transformer architecture has a strong ability to capture low-frequency signals, which carry global information such as global shape and structure.

However, related research has found that the Transformer block may deteriorate high-frequency information, such as local texture information, to a certain extent. Signals from different frequency bands are essential in the human visual system, and they are fused in a specific way to extract more essential and unique features.

Influenced by these known results, this research developed Next Transformer Block (NTB) to capture multi-frequency signals in a lightweight mechanism. Additionally, NTB can be used as an efficient multi-frequency signal mixer, further enhancing overall modeling capabilities.

NHS

Some recent work has tried to combine CNNs and Transformers for efficient deployment. As shown in Figure 4(b)(c) below, almost all of these designs use convolution blocks in the shallow stages and stack Transformer blocks only in the last one or two stages. This combination is effective for classification. However, the study found that such hybrid strategies easily reach performance saturation on downstream tasks such as segmentation and detection. The reason is that classification uses only the output of the last stage for prediction, while downstream tasks usually rely on the features of every stage to obtain better results. Because traditional hybrid strategies stack Transformer blocks only in the last few stages, the shallow layers cannot capture global information.

This study proposes a new hybrid strategy (NHS) that creatively combines convolution blocks (NCB) and Transformer blocks (NTB) in an (N + 1) × L hybrid paradigm. NHS significantly improves the model's performance on downstream tasks and achieves efficient deployment while controlling the proportion of Transformer blocks.

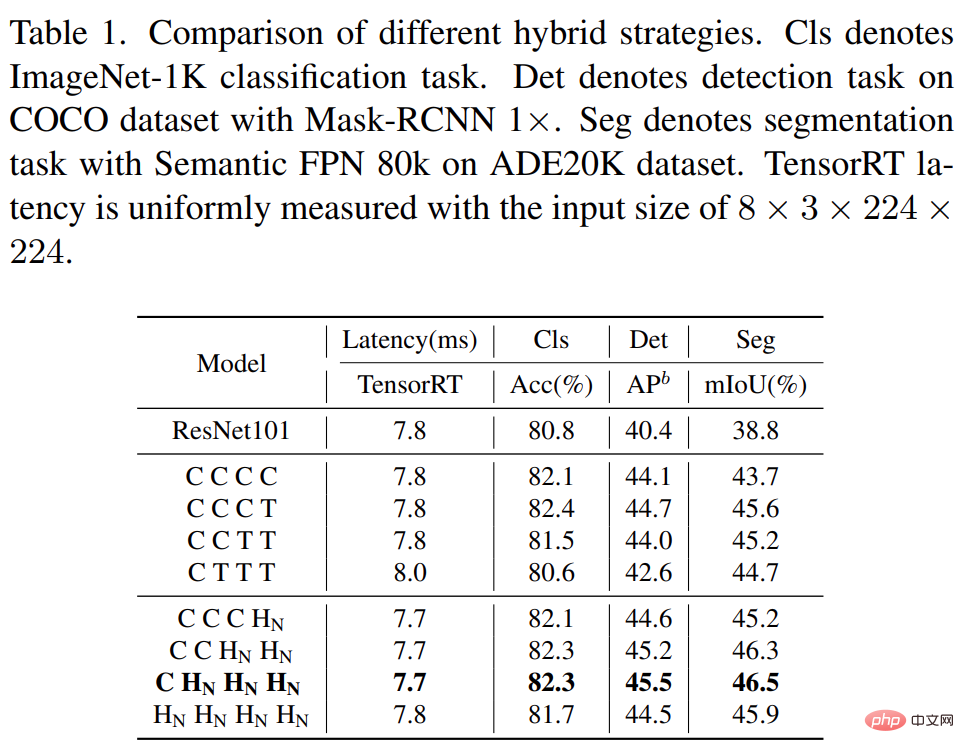

First, to give shallow layers the ability to capture global information, this study proposes an (NCB × N + NTB × 1) hybrid pattern, which stacks N NCBs followed by one NTB in each stage, as shown in Figure 4(d). Specifically, the Transformer block (NTB) is placed at the end of each stage, enabling the model to learn global representations in shallow layers. The study conducted a series of experiments to verify the superiority of the proposed hybrid strategy; the performance of different hybrid strategies is shown in Table 1 below.
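The per-stage pattern described above can be sketched as a simple layout builder. This is only an illustration of the block ordering: the block names are plain strings, and the per-stage values of N in the example are hypothetical, not the paper's configuration.

```python
from typing import List, Sequence


def stage_layout(n_ncb: int) -> List[str]:
    """One stage in the (NCB x N + NTB x 1) pattern: N NCBs, then a single NTB."""
    if n_ncb < 0:
        raise ValueError("n_ncb must be non-negative")
    return ["NCB"] * n_ncb + ["NTB"]


def network_layout(ncb_per_stage: Sequence[int]) -> List[List[str]]:
    """Apply the same pattern to every stage, so each stage ends with an NTB."""
    return [stage_layout(n) for n in ncb_per_stage]


# Hypothetical per-stage N values, chosen only for illustration.
for index, stage in enumerate(network_layout((2, 3, 4, 2)), start=1):
    print(f"stage {index}: {' -> '.join(stage)}")
```

Because every stage ends with an NTB, even the first stage in this sketch contains a block that can mix global information, which is the property the strategy is meant to provide to downstream tasks.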

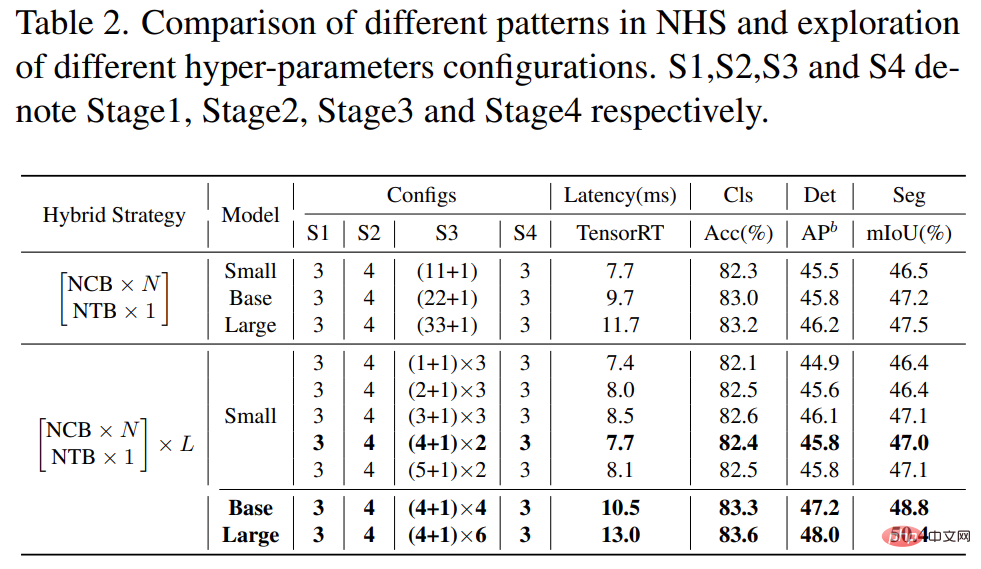

In addition, as shown in Table 2 below, the performance of larger models gradually saturates. This shows that enlarging the model by increasing N in the (NCB × N + NTB × 1) pattern, i.e. simply adding more convolution blocks, is not the optimal choice, and that the value of N in this pattern can severely affect model performance.

The researchers therefore explored the impact of N on model performance through extensive experiments. As shown in Table 2 (middle), the study constructed models with different values of N in the third stage. To build models with similar latency for a fair comparison, the study stacks L groups of the (NCB × N + NTB × 1) pattern when N is small.

As shown in Table 2, the model with N = 4 in the third stage achieves the best trade-off between performance and latency. The study then builds larger models by increasing L in the (NCB × 4 + NTB × 1) × L pattern in the third stage. As shown in Table 2 (bottom), the Base (L = 4) and Large (L = 6) models improve significantly over the small model, verifying the general effectiveness of the proposed (NCB × N + NTB × 1) × L pattern.
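As a rough illustration of how the third stage scales, the sketch below repeats the (NCB × 4 + NTB × 1) group L times and reports the resulting depth and the share of Transformer blocks. The values L = 4 (Base) and L = 6 (Large) come from the text; the helper names are hypothetical.

```python
from typing import Dict, List


def repeated_pattern(n_ncb: int, repeats: int) -> List[str]:
    """Build the (NCB x N + NTB x 1) x L block sequence for a single stage."""
    if n_ncb < 0 or repeats < 1:
        raise ValueError("n_ncb must be >= 0 and repeats must be >= 1")
    return (["NCB"] * n_ncb + ["NTB"]) * repeats


def ntb_fraction(blocks: List[str]) -> float:
    """Share of Transformer blocks in a stage."""
    return blocks.count("NTB") / len(blocks)


# L values for the third stage as stated in the article (N = 4 in both cases).
THIRD_STAGE_L: Dict[str, int] = {"Base": 4, "Large": 6}

for name, repeats in THIRD_STAGE_L.items():
    blocks = repeated_pattern(4, repeats)
    print(f"{name}: {len(blocks)} blocks, {blocks.count('NTB')} NTBs, "
          f"NTB share = {ntb_fraction(blocks):.2f}")
```

Growing L instead of N keeps the ratio of NTBs to NCBs fixed as the stage gets deeper, so the proportion of Transformer blocks stays under control while the model scales.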

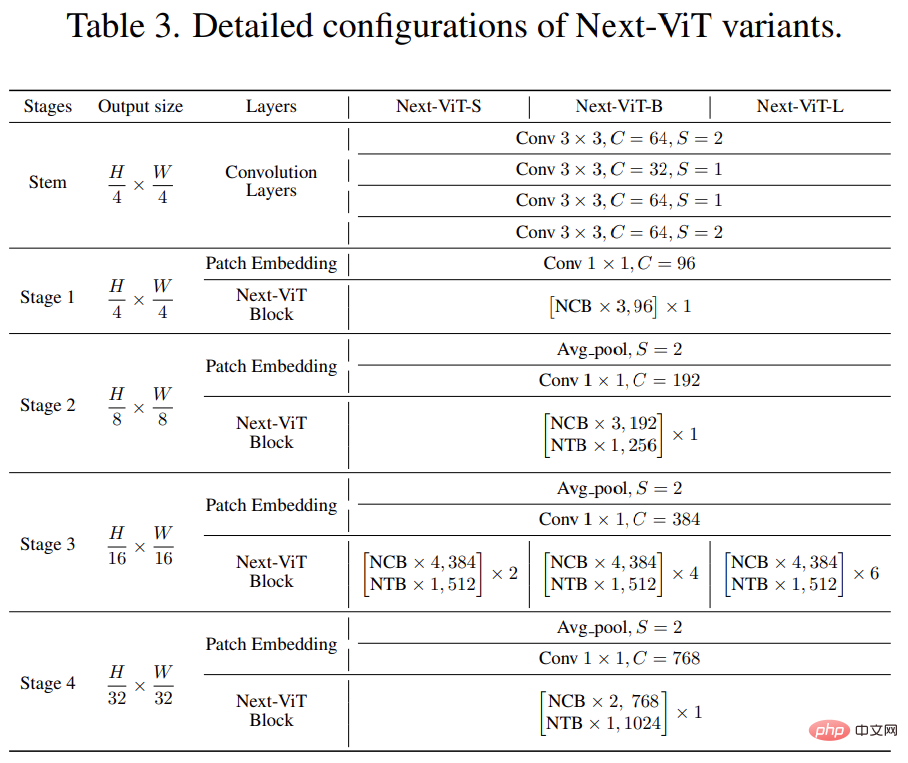

Finally, to provide a fair comparison with existing SOTA networks, the researchers proposed three typical variants: Next-ViT-S/B/L.

Experimental results

Classification task on ImageNet-1K

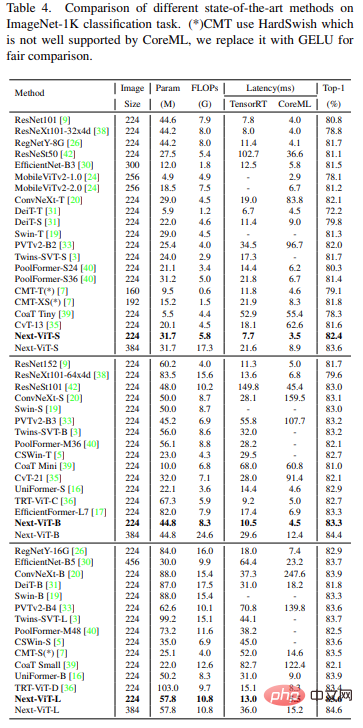

Compared with the latest SOTA CNNs, ViTs and hybrid networks, Next-ViT achieves the best trade-off between accuracy and latency. The results are shown in Table 4 below.

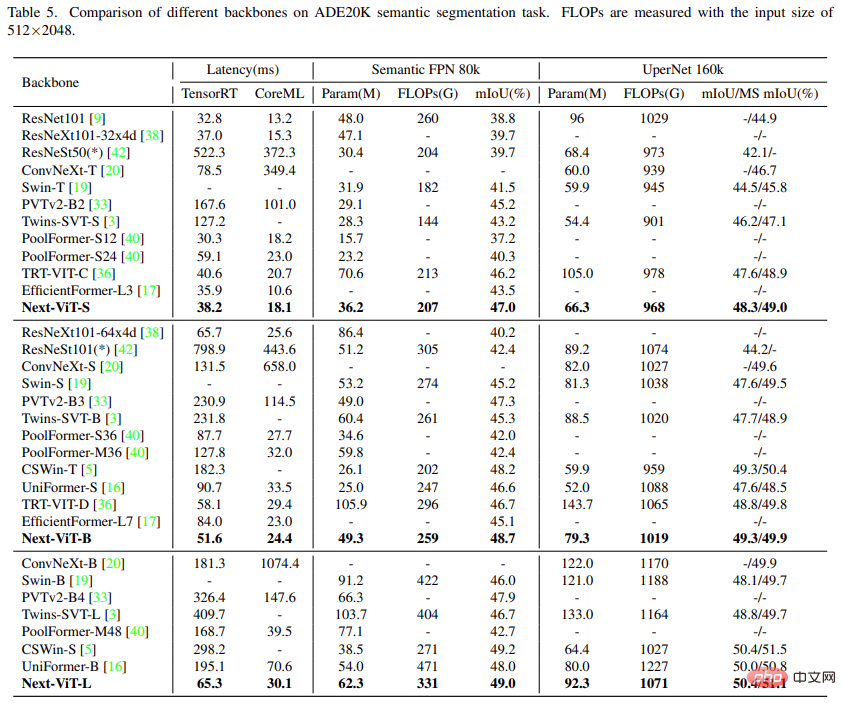

Semantic segmentation task on ADE20K

This study compares Next-ViT with CNNs, ViTs and some recent hybrid architectures on the semantic segmentation task. As shown in Table 5 below, extensive experiments show that Next-ViT has excellent potential for segmentation.

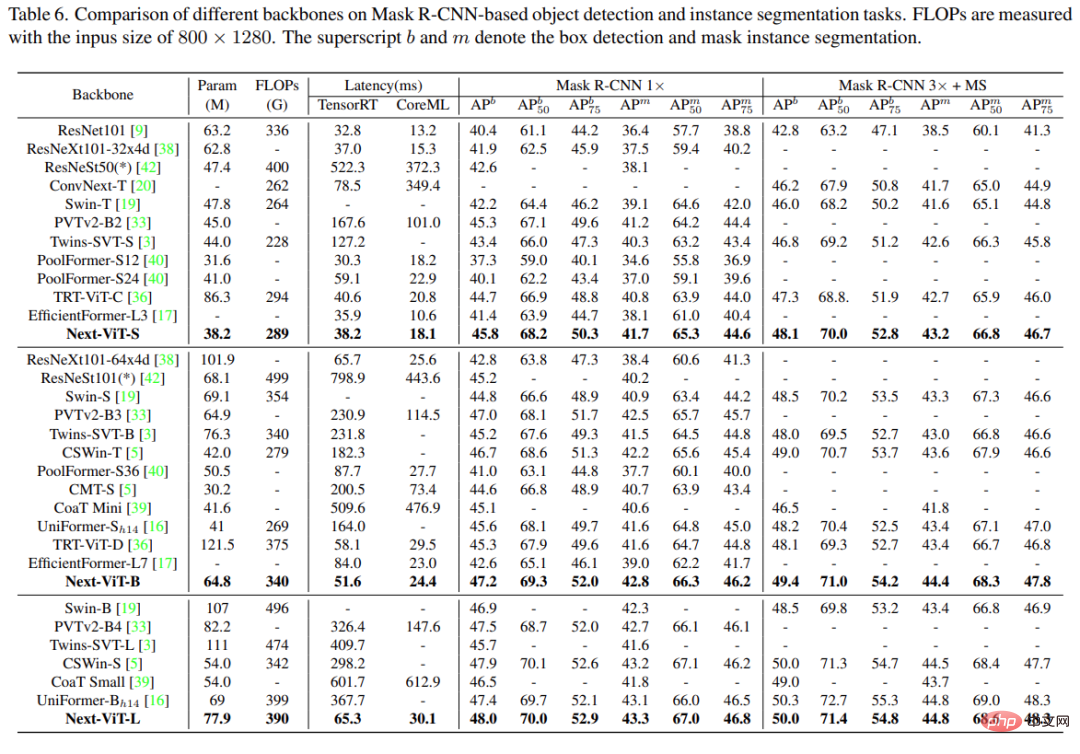

Object detection and instance segmentation

For object detection and instance segmentation, this study compared Next-ViT with SOTA models; the results are shown in Table 6 below.

Ablation experiments and visualization

To better understand Next-ViT, the researchers evaluated its performance on ImageNet-1K classification and on downstream tasks to analyze the role of each key design, and visualized the Fourier spectrum and heat maps of the output features to show the inherent advantages of Next-ViT.

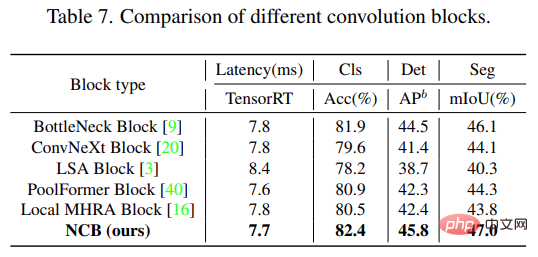

As shown in Table 7 below, NCB achieves the best latency/accuracy trade-off on all three tasks.

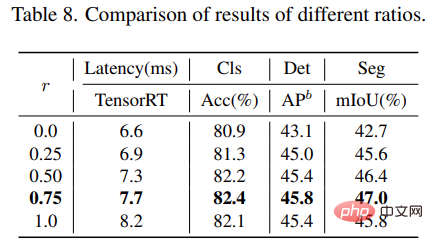

For NTB, this study explored the impact of the shrink ratio r on the overall performance of Next-ViT. The results are shown in Table 8 below. Decreasing the shrink ratio r reduces model latency.
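One way to read the shrink ratio is as the fraction of channels routed through the attention path, with the remainder handled by convolution, so that r = 1 would be a pure Transformer block. The sketch below illustrates that reading only; the function name, the rounding rule and the channel count of 256 are assumptions, not details confirmed by the article.

```python
from typing import Tuple


def split_channels(channels: int, r: float) -> Tuple[int, int]:
    """Split a channel budget into (attention, convolution) parts by ratio r.

    Assumed interpretation: r is the share of channels given to the
    Transformer (attention) path, so r = 1.0 leaves nothing for convolution.
    """
    if channels <= 0:
        raise ValueError("channels must be positive")
    if not 0.0 <= r <= 1.0:
        raise ValueError("r must lie in [0, 1]")
    attention = int(round(channels * r))
    convolution = channels - attention
    return attention, convolution


# Hypothetical channel count of 256; smaller r means fewer attention channels.
for ratio in (1.0, 0.75, 0.5):
    attention, convolution = split_channels(256, ratio)
    print(f"r = {ratio}: attention = {attention}, convolution = {convolution}")
```

Under this reading, lowering r shrinks the comparatively expensive attention path, which is consistent with the latency reduction reported for smaller r.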

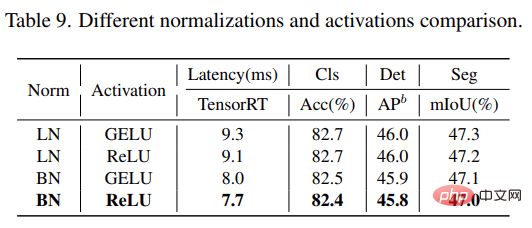

In addition, the models with r = 0.75 and r = 0.5 perform better than the pure Transformer model (r = 1). This indicates that fusing multi-frequency signals in an appropriate manner enhances the model's representation learning capability. In particular, the model with r = 0.75 achieves the best latency/accuracy trade-off. These results illustrate the effectiveness of NTB.

The study further analyzes the impact of different normalization layers and activation functions in Next-ViT. As shown in Table 9 below, although LN and GELU bring some performance improvement, their inference latency on TensorRT is significantly higher. BN and ReLU, on the other hand, achieve the best overall latency/accuracy trade-off. Therefore, Next-ViT uses BN and ReLU throughout for efficient deployment in real-world industrial scenarios.

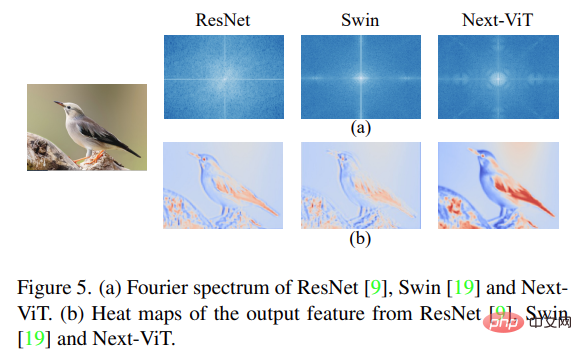

Finally, the study visualized the Fourier spectrum and heat maps of the output features of ResNet, Swin Transformer and Next-ViT, as shown in Figure 5(a) below. The spectrum of ResNet shows that convolution blocks tend to capture high-frequency signals and have difficulty attending to low-frequency signals. ViT is good at capturing low-frequency signals but ignores high-frequency signals. Next-ViT captures high-quality multi-frequency signals at the same time, which demonstrates the effectiveness of NTB.

In addition, as shown in Figure 5(b), Next-ViT captures richer texture information and more accurate global information than ResNet and Swin, which shows that Next-ViT has stronger modeling capability.

The above is the detailed content of Unlocking the correct combination of CNN and Transformer, ByteDance proposes an effective next-generation visual Transformer. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large Language Models (LLMs) are trained on huge text databases, where they acquire large amounts of real-world knowledge. This knowledge is embedded into their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training. At the end of pre-training, the model actually stops learning. Align or fine-tune the model to learn how to leverage this knowledge and respond more naturally to user questions. But sometimes model knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input from human annotators or other LLM creations, where the model encounters additional real-world knowledge and integrates it

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

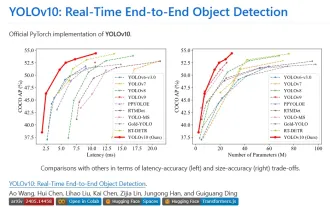

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

According to news from this site on August 1, SK Hynix released a blog post today (August 1), announcing that it will attend the Global Semiconductor Memory Summit FMS2024 to be held in Santa Clara, California, USA from August 6 to 8, showcasing many new technologies. generation product. Introduction to the Future Memory and Storage Summit (FutureMemoryandStorage), formerly the Flash Memory Summit (FlashMemorySummit) mainly for NAND suppliers, in the context of increasing attention to artificial intelligence technology, this year was renamed the Future Memory and Storage Summit (FutureMemoryandStorage) to invite DRAM and storage vendors and many more players. New product SK hynix launched last year

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

Editor | KX In the field of drug research and development, accurately and effectively predicting the binding affinity of proteins and ligands is crucial for drug screening and optimization. However, current studies do not take into account the important role of molecular surface information in protein-ligand interactions. Based on this, researchers from Xiamen University proposed a novel multi-modal feature extraction (MFE) framework, which for the first time combines information on protein surface, 3D structure and sequence, and uses a cross-attention mechanism to compare different modalities. feature alignment. Experimental results demonstrate that this method achieves state-of-the-art performance in predicting protein-ligand binding affinities. Furthermore, ablation studies demonstrate the effectiveness and necessity of protein surface information and multimodal feature alignment within this framework. Related research begins with "S