Meta: No need for brain implants! AI can tell what you are thinking by looking at your electroencephalogram

Every year, more than 69 million people worldwide suffer traumatic brain injuries, and many of them are unable to communicate through speech, typing or gestures. A technology that could decode language directly from brain activity, without invasive procedures, would greatly improve these people's lives. Meta has now conducted a new study aimed at this problem.

The official Meta AI blog has just published an article introducing a new technique that uses AI to decode speech directly from brain activity.

From 3 seconds of brain activity, this AI can decode the corresponding speech segment with up to 73% accuracy, using a vocabulary of 793 words, which covers a large share of the words people use in daily life.

Decoding speech from brain activity has long been a goal of neuroscientists and clinicians, but most progress so far has relied on invasive recording techniques such as stereotactic electroencephalography and electrocorticography.

These devices provide clearer signals than non-invasive methods, but they require neurosurgery.

While the results of such work suggest that decoding speech from brain recordings is feasible, decoding speech with non-invasive methods would provide a safer, more scalable solution that could ultimately benefit many more people.

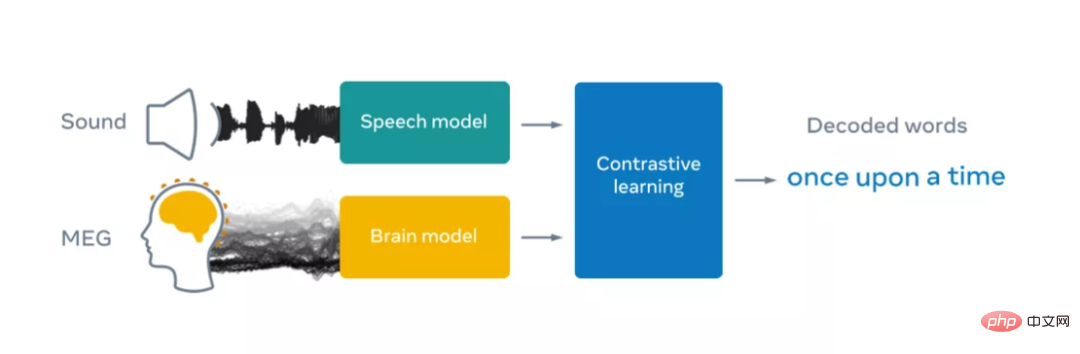

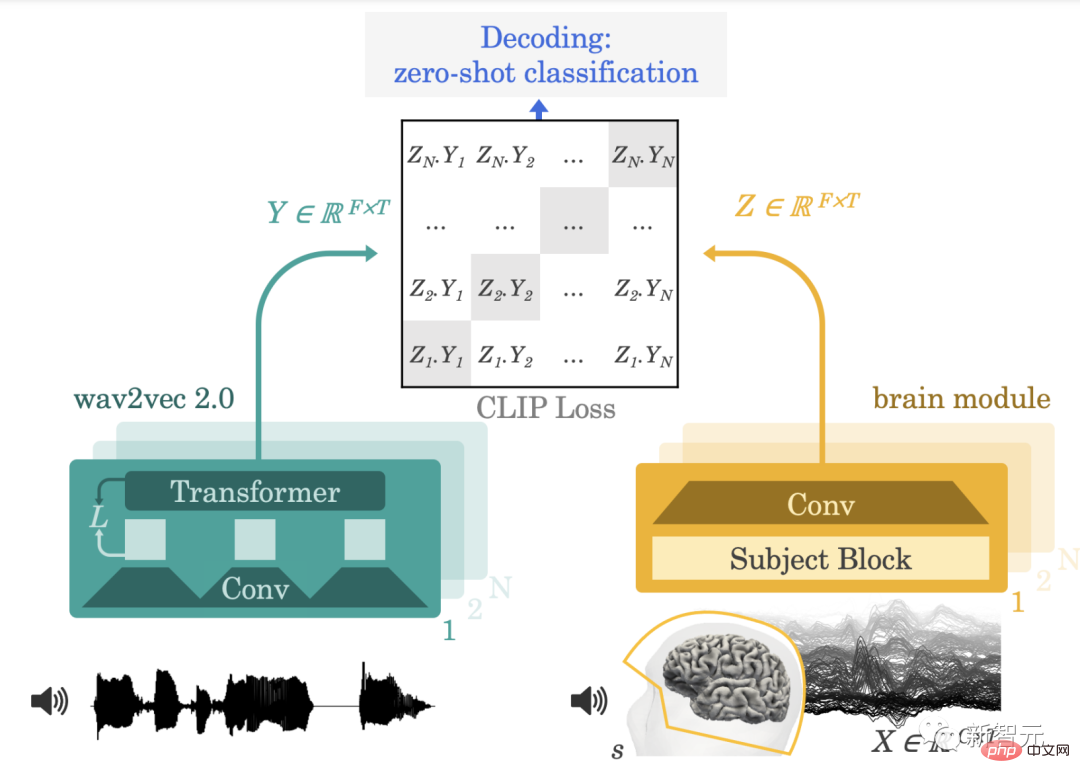

However, this is very challenging: non-invasive recordings are notoriously noisy, and they can vary greatly across recording sessions and individuals for a variety of reasons, including differences in each person's brain and in where the sensors are placed. Meta addresses these challenges by building a deep learning model trained with contrastive learning, which it then uses to maximally align non-invasive brain recordings with speech.

To this end, Meta uses wav2vec 2.0, an open-source self-supervised learning model developed by the FAIR team in 2020, to identify the complex representations of speech in the brains of volunteers listening to audiobooks. Meta focuses on two non-invasive technologies, electroencephalography and magnetoencephalography (EEG and MEG for short), which measure the fluctuations of electric and magnetic fields, respectively, caused by neuronal activity.

In practice, both systems can take about 1,000 snapshots of macroscopic brain activity per second using hundreds of sensors. Meta used four open-source EEG and MEG datasets from academic institutions, comprising more than 150 hours of recordings from 169 healthy volunteers listening to audiobooks and isolated sentences in English and Dutch.
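To make these numbers concrete: at about 1,000 samples per second, a 3-second window from one recording is a matrix of sensors by 3,000 time steps. The sketch below is a hypothetical helper, not code from Meta's study, that cuts a continuous multichannel recording into such fixed-length windows. The sampling rate and window length come from the text; the function name, the non-overlapping stride and the toy data are assumptions.

```python
from typing import List

Recording = List[List[float]]  # one list of samples per sensor: [sensors][time]


def segment_windows(recording: Recording, sample_rate_hz: int, window_s: float) -> List[Recording]:
    """Cut a continuous multichannel recording into non-overlapping windows.

    Hypothetical helper for illustration only. Trailing samples that do not
    fill a whole window are dropped.
    """
    window_len = int(round(sample_rate_hz * window_s))
    n_samples = len(recording[0])
    if any(len(ch) != n_samples for ch in recording):
        raise ValueError("all channels must have the same number of samples")
    windows = []
    for start in range(0, n_samples - window_len + 1, window_len):
        # Slice the same time span out of every sensor.
        windows.append([ch[start:start + window_len] for ch in recording])
    return windows


if __name__ == "__main__":
    # Toy recording: 4 sensors, 10 seconds at 1,000 Hz (real systems use hundreds of sensors).
    rate = 1000
    toy = [[float(i % 7) for i in range(10 * rate)] for _ in range(4)]
    wins = segment_windows(toy, rate, 3.0)
    print(len(wins), len(wins[0]), len(wins[0][0]))  # prints: 3 4 3000
```

With hundreds of real sensors, each window would simply have hundreds of rows instead of four.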

Meta then feeds these EEG and MEG recordings into a "brain" model consisting of a standard deep convolutional network with residual connections.
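The real brain module is a trained network, but its basic building block can be illustrated in a few lines. The plain-Python sketch below shows a 1-D convolution over time followed by a nonlinearity and a residual (skip) connection, so the block's output is its input plus a learned correction. The kernel values, the ReLU nonlinearity and the function names are assumptions for illustration, not Meta's configuration.

```python
from typing import List

Signal = List[List[float]]        # [channels][time]
Kernel = List[List[List[float]]]  # [out_channels][in_channels][kernel_size]


def conv1d_same(x: Signal, w: Kernel) -> Signal:
    """1-D convolution (cross-correlation, as in deep-learning libraries) with zero 'same' padding."""
    k = len(w[0][0])
    pad = k // 2
    t_len = len(x[0])
    out = []
    for w_out in w:  # one output channel per kernel stack
        row = []
        for t in range(t_len):
            acc = 0.0
            for c, w_c in enumerate(w_out):
                for j in range(k):
                    idx = t + j - pad
                    if 0 <= idx < t_len:  # positions outside the signal count as zero
                        acc += w_c[j] * x[c][idx]
            row.append(acc)
        out.append(row)
    return out


def residual_block(x: Signal, w: Kernel) -> Signal:
    """y = x + ReLU(conv(x)); the skip connection needs matching channel counts."""
    h = conv1d_same(x, w)
    if len(h) != len(x):
        raise ValueError("residual connection requires out_channels == in_channels")
    return [[xi + max(0.0, hi) for xi, hi in zip(x_c, h_c)] for x_c, h_c in zip(x, h)]


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0]]            # one channel, three time steps
    identity = [[[0.0, 1.0, 0.0]]]   # kernel that copies its input
    print(residual_block(x, identity))  # [[2.0, 4.0, 6.0]]
```

Stacking many such blocks lets the network build features over longer stretches of time while the skip connections keep the input signal easy to pass through.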

EEG and MEG recordings are known to vary widely between individuals because of differences in brain anatomy, in the location and timing of neural activity across brain regions, and in the position of the sensors during recording.

In practice, this means that analyzing brain data often requires a complex engineering pipeline to realign brain signals onto a template brain. In previous studies, brain decoders were trained on a small number of recordings to predict a limited set of speech features, such as part-of-speech categories or words from a small vocabulary.

To facilitate this research, Meta designed a new subject embedding layer, trained end-to-end, that aligns all brain recordings in a common space.
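One way to picture a subject layer trained end-to-end is as a per-subject linear mixing of sensor channels (a 1x1 convolution) chosen by the subject's identity, so that recordings from different people land in one shared channel space. The sketch below assumes that form, in line with the subject-specific 1x1 convolution listed in the paper analysis; the matrices are hand-set toy values rather than learned weights, and the class and variable names are hypothetical.

```python
from typing import Dict, List

Signal = List[List[float]]   # [channels][time]
Matrix = List[List[float]]   # [out_channels][in_channels]


class SubjectLayer:
    """Per-subject 1x1 convolution: each subject gets its own channel-mixing matrix.

    Hypothetical sketch; in a real model the matrices would be learned.
    """

    def __init__(self, weights: Dict[int, Matrix]):
        self.weights = weights

    def __call__(self, x: Signal, subject: int) -> Signal:
        # Selecting by subject index is equivalent to multiplying a stack of
        # matrices by a one-hot subject vector.
        m = self.weights[subject]
        t_len = len(x[0])
        # Each output channel is a weighted sum of input channels at the same time step.
        return [
            [sum(m_row[c] * x[c][t] for c in range(len(x))) for t in range(t_len)]
            for m_row in m
        ]


if __name__ == "__main__":
    layer = SubjectLayer({
        0: [[1.0, 0.0], [0.0, 1.0]],   # subject 0: identity mapping
        1: [[0.0, 1.0], [1.0, 0.0]],   # subject 1: swaps the two channels
    })
    x = [[1.0, 2.0], [3.0, 4.0]]       # 2 sensors x 2 time steps
    print(layer(x, 0))  # [[1.0, 2.0], [3.0, 4.0]]
    print(layer(x, 1))  # [[3.0, 4.0], [1.0, 2.0]]
```

Because the mixing acts only across channels and never across time, it can absorb subject-specific differences in sensor layout and signal gain without changing the temporal structure the later layers rely on.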

To decode speech from non-invasive brain signals, Meta trained a model with contrastive learning to align speech with its corresponding brain activity. Meta's architecture thus learns to match the output of the brain model to a deep representation of the speech presented to the participant.

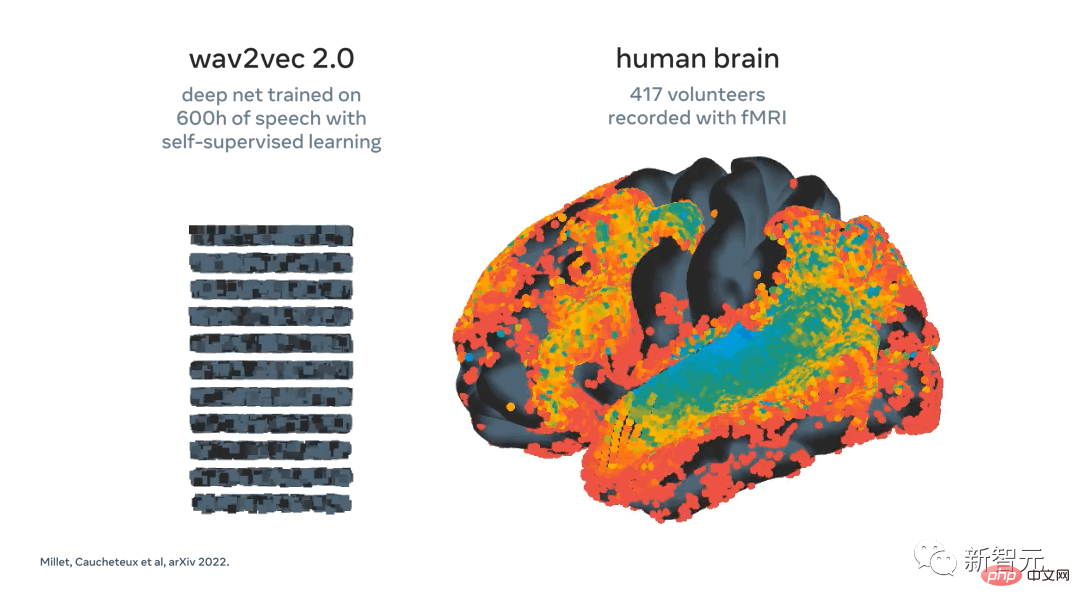

In previous work, Meta used wav2vec 2.0 to show that this speech algorithm automatically learns to generate speech representations that are consistent with those of the brain.

The emergence of "brain-like" representations of speech in wav2vec 2.0 made it a natural choice for Meta's researchers when building their decoder, because it helps indicate which representations should be extracted from brain signals.

Meta recently showed the activations of wav2vec 2.0 (left) mapped onto the brain (right) in response to the same speech sounds. The representations in the first layer of the algorithm (cool colors) map onto early auditory cortex, while the deepest layers map onto higher-level brain areas (such as prefrontal and parietal cortex).

After training, Meta's system performs what is called zero-shot classification: given a clip of brain activity, it can determine, from a large pool of new audio clips, which clip the person actually heard.

From there, the algorithm infers the words the person most likely heard. This is an exciting step because it shows that AI can successfully learn to decode noisy and variable non-invasive recordings of brain activity during speech perception.
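Zero-shot classification of this kind can be framed as retrieval: embed the brain clip, embed every candidate audio clip, and rank the candidates by similarity. The following is a minimal sketch, assuming both embeddings already exist as equal-length vectors and assuming cosine similarity as the score; the function names and toy vectors are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def rank_candidates(brain_emb: Sequence[float],
                    candidate_embs: Sequence[Sequence[float]]) -> List[int]:
    """Indices of candidate audio clips, most similar to the brain clip first."""
    scores = [cosine(brain_emb, c) for c in candidate_embs]
    return sorted(range(len(candidate_embs)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    brain = [1.0, 0.1]                               # toy brain-clip embedding
    candidates = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]  # toy audio-clip embeddings
    print(rank_candidates(brain, candidates))  # [1, 2, 0]
```

The first index is the model's guess for the clip the person heard. Because the candidates only need to be embedded, not trained on, the same procedure works for audio clips never seen during training.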

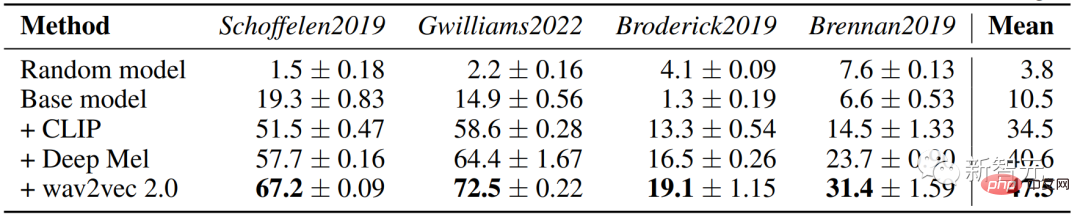

The next step is to see whether the researchers can extend this model to decode speech directly from brain activity without needing a pool of audio clips, that is, to move toward a safe and versatile speech decoder. The researchers' analysis further shows that several components of the algorithm, including the use of wav2vec 2.0 and the subject layer, improve decoding performance.

In addition, Meta's algorithm improves as the number of EEG and MEG recordings grows. In practical terms, this means the researchers' approach benefits from pooling large amounts of heterogeneous data, which could in principle help improve decoding on small datasets.

This matters because in many cases it is difficult to collect large amounts of data from any single participant. For example, it is unrealistic to ask patients to spend dozens of hours in a scanner just to check whether the system works for them. Instead, algorithms can be pretrained on large datasets covering many individuals and conditions, and then support decoding of a new patient's brain activity from only a small amount of data.

Meta's research is encouraging: the results show that AI trained with self-supervision can successfully decode perceived speech from non-invasive recordings of brain activity, despite the noise and variability inherent in these data. Of course, these results are only a first step. In this work, Meta focused on decoding speech perception, but reaching the ultimate goal of patient communication will require extending the work to speech production.

This area of research could even go beyond helping patients, potentially including enabling new ways of interacting with computers.

Looking at the larger picture, Meta's work is part of the scientific community's efforts to use artificial intelligence to better understand the human brain. Meta hopes to share this research publicly to accelerate progress on future challenges.

Paper analysis

Paper link: https://arxiv.org/pdf/2208.12266.pdf

This paper proposes a single end-to-end architecture, trained with contrastive learning across a large cohort of individuals, to predict self-supervised representations of natural speech.

We evaluate the model on four public datasets comprising 169 volunteers recorded with magnetoencephalography or electroencephalography (M/EEG) while they listened to natural speech.

This opens a new avenue for real-time decoding of language processing from non-invasive recordings of brain activity.

Methods and Architecture

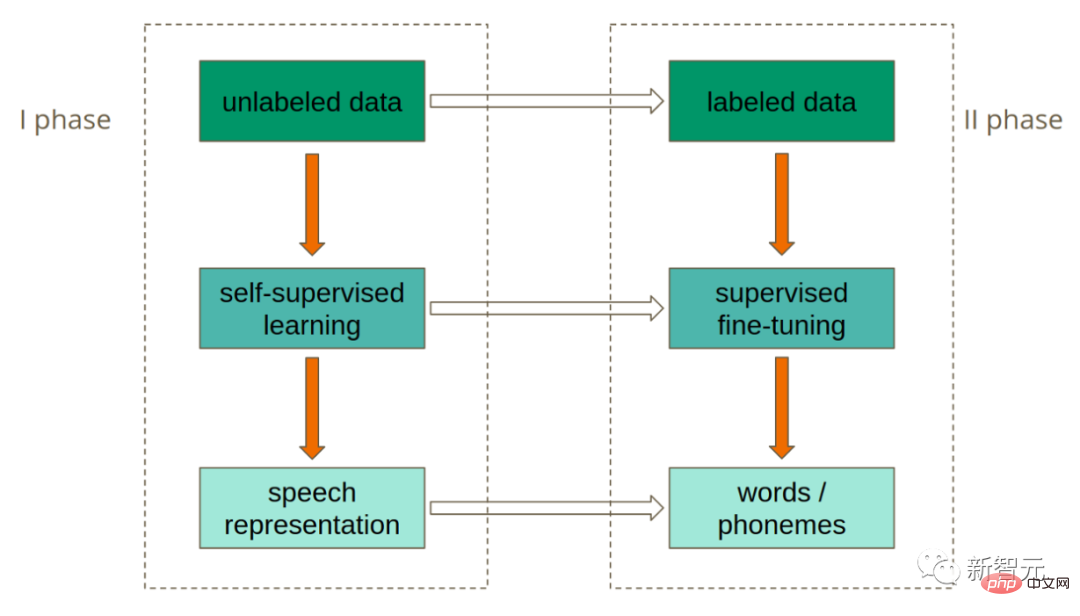

We first formalize the general task of neural decoding and motivate training with a contrastive loss. Before introducing the deep learning architecture for brain decoding, we present the rich speech representations provided by the pre-trained self-supervised module wav2vec 2.0.

We aim to decode speech from time series of high-dimensional brain signals recorded with non-invasive magnetoencephalography (MEG) or electroencephalography (EEG) while healthy volunteers passively listened to spoken sentences in their native language.

How spoken language is represented in the brain is largely unknown, so decoders are typically trained in a supervised manner to predict a latent representation of speech that is known to be relevant to the brain.
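For concreteness, the supervised setup described above can be written as follows. This is a sketch under stated assumptions: the symbols X, Y and Z follow the notation the summary uses later, while the dimensions C (sensors), T (time steps) and F (speech features), the name f_reg, and the mean-squared-error objective are our own labels for a generic regression baseline, not formulas quoted from the paper.

```latex
X \in \mathbb{R}^{C \times T}, \qquad
Y \in \mathbb{R}^{F \times T}, \qquad
Z = f_{\mathrm{reg}}(X) \in \mathbb{R}^{F \times T},
\\[4pt]
\mathcal{L}_{\mathrm{reg}}(X, Y) = \frac{1}{F\,T} \sum_{f=1}^{F} \sum_{t=1}^{T} \bigl( Y_{f,t} - Z_{f,t} \bigr)^{2}
```

Under this objective, every time step and feature is penalized independently, which is one way to see why a prediction can score reasonably well while still failing to tell different speech segments apart.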

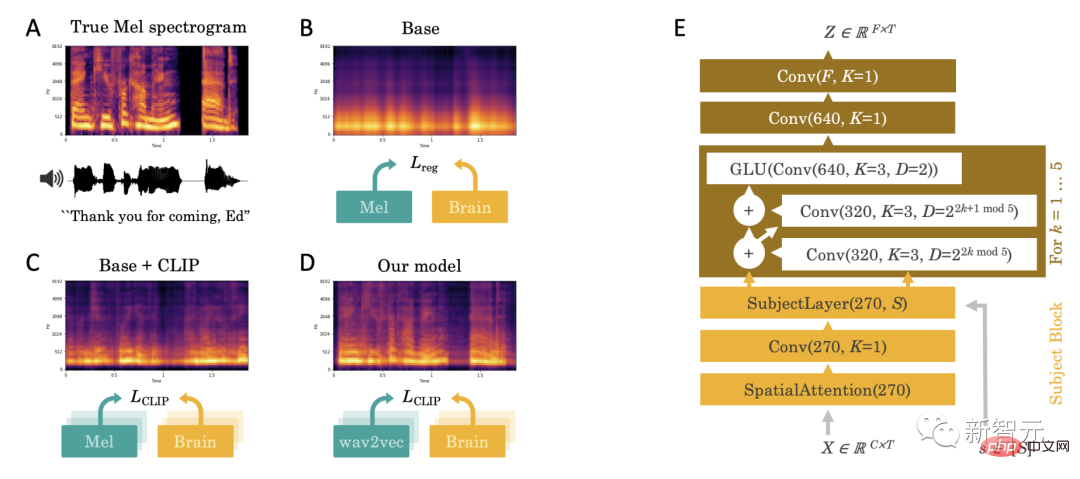

Empirically, we observe that this direct regression approach faces several challenges: when speech is present, the decoder's predictions appear to be dominated by a non-distinguishable broadband component (Fig. 2A-B).

This challenge led us to make three main contributions: a contrastive loss, pre-trained deep speech representations, and a dedicated brain decoder.

1. Contrastive loss

First, we reasoned that regression may be an ineffective loss, because it departs from our objective: decoding speech from brain activity. We therefore replace it with a contrastive loss, the "CLIP" loss, which was originally designed to match latent representations across two modalities: text and images.
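A contrastive loss scores each brain segment against every speech segment in a batch and rewards the matching pair. Below is a minimal plain-Python sketch of the symmetric cross-entropy used by the original CLIP (cosine similarities divided by a temperature, averaged over both matching directions). The temperature of 0.1, the batch layout and all names are assumptions; the paper's exact variant of the CLIP loss may differ in these details.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def _cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed stably with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_loss(brain: Sequence[Sequence[float]],
              speech: Sequence[Sequence[float]],
              temperature: float = 0.1) -> float:
    """Symmetric CLIP-style contrastive loss over a batch of paired embeddings.

    brain[i] and speech[i] come from the same segment (the positive pair);
    all other pairings in the batch serve as negatives.
    """
    b = [_normalize(v) for v in brain]
    s = [_normalize(v) for v in speech]
    n = len(b)
    # logits[i][j]: scaled cosine similarity between brain i and speech j.
    logits = [[sum(x * y for x, y in zip(b[i], s[j])) / temperature
               for j in range(n)] for i in range(n)]
    brain_to_speech = sum(_cross_entropy(logits[i], i) for i in range(n)) / n
    speech_to_brain = sum(
        _cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return 0.5 * (brain_to_speech + speech_to_brain)


if __name__ == "__main__":
    brain = [[1.0, 0.0], [0.0, 1.0]]
    aligned = clip_loss(brain, [[1.0, 0.0], [0.0, 1.0]])
    shuffled = clip_loss(brain, [[0.0, 1.0], [1.0, 0.0]])
    print(aligned < shuffled)  # True: matching pairs give a lower loss
```

Unlike a regression loss, this objective only cares whether each brain segment is closer to its own speech segment than to the others, which is exactly the property the retrieval-style evaluation measures.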

2. Pre-trained deep speech representation

Second, the Mel spectrogram is a low-level representation of speech and is therefore unlikely to match rich cortical representations. We therefore replace the Mel spectrogram Y with latent representations of speech that are either learned end-to-end ("Deep Mel" model) or learned by an independent self-supervised speech model. In practice, we use wav2vec2-large-xlsr-53, which was pre-trained on 56k hours of speech in 53 languages.

3. Specialized "Brain Decoder"

Finally, for the brain module, we use a deep neural network f_clip that takes as input a raw M/EEG time series X and the corresponding one-hot encoding of subject s, and outputs a latent brain representation Z sampled at the same rate as X.

This architecture consists of (1) a spatial attention layer over the M/EEG sensors, followed by (2) a subject-specific 1x1 convolution designed to leverage inter-subject variability, whose output feeds (3) a stack of convolutional blocks.
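The spatial attention layer lets the model combine sensors into a fixed set of output channels. In the simplified sketch below, each output channel is a softmax-weighted average over the input sensors. The attention scores are simply passed in as a matrix, whereas in the real model they are learned parameters whose exact parameterization is not covered here; all names and values are illustrative assumptions.

```python
import math
from typing import List

Signal = List[List[float]]   # [sensors][time]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(x: Signal, scores: List[List[float]]) -> Signal:
    """Remap sensors to output channels via softmax-weighted sums over sensors.

    scores[j][i] is the (assumed, given) attention score of output channel j
    for input sensor i.
    """
    out = []
    for row in scores:
        w = softmax(row)  # weights over input sensors, summing to 1
        out.append([sum(w[i] * x[i][t] for i in range(len(x))) for t in range(len(x[0]))])
    return out


if __name__ == "__main__":
    x = [[1.0, 1.0], [3.0, 3.0]]          # 2 sensors x 2 time steps
    print(spatial_attention(x, [[0.0, 0.0]]))  # [[2.0, 2.0]]: equal scores average the sensors
```

Because each output channel's weights sum to 1, every output is a convex combination of sensor signals, and the number of output channels is fixed regardless of how many sensors a given device has.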

The results show that, using wav2vec 2.0 representations, the model can identify the corresponding speech segment from 3 seconds of MEG signals with up to 72.5% top-10 accuracy out of 1,594 distinct segments, and with up to 19.1% accuracy out of 2,604 segments for EEG recordings, which makes it possible to decode phrases absent from the training set.
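These figures are retrieval accuracies: for each 3-second brain clip, the model ranks all candidate segments, and the clip counts as correct if the true segment is among the top k (top-10 for the 72.5% MEG figure). A minimal sketch of this metric follows, assuming a precomputed similarity matrix in which candidate i is the true segment for clip i; the layout and function name are hypothetical, and ties are resolved in the clip's favor here.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], k: int) -> float:
    """Fraction of brain clips whose true segment ranks within the top k.

    scores[i][j] is the similarity between brain clip i and candidate segment j;
    the true segment for clip i is assumed to be candidate i.
    """
    hits = 0
    for i, row in enumerate(scores):
        # Count candidates scoring strictly higher than the true one (ties favor the clip).
        better = sum(1 for s in row if s > row[i])
        if better < k:
            hits += 1
    return hits / len(scores)


if __name__ == "__main__":
    scores = [
        [0.9, 0.1, 0.5],   # clip 0: true segment ranked 1st
        [0.8, 0.2, 0.3],   # clip 1: true segment ranked 3rd
        [0.1, 0.2, 0.7],   # clip 2: true segment ranked 1st
    ]
    print(top_k_accuracy(scores, 1), top_k_accuracy(scores, 3))  # about 0.667 and 1.0
```

Top-k accuracy grows with k and shrinks as the candidate pool grows, so the reported numbers should always be read together with the pool size (1,594 or 2,604 segments).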
