Stanford/Google Brain: Double distillation, guided diffusion model sampling speed up 256 times!

Recently, classifier-free guided diffusion models have proven very effective for high-resolution image generation and are widely used in large-scale diffusion frameworks, including DALL·E 2, GLIDE and Imagen.

However, a drawback of classifier-free guided diffusion models is that they are computationally expensive at inference time, because they require evaluating two diffusion models (a class-conditional model and an unconditional model) hundreds of times.

To solve this problem, researchers from Stanford University and Google Brain proposed a two-step distillation method that improves the sampling efficiency of classifier-free guided diffusion models.

Paper address: https://arxiv.org/abs/2210.03142

How can a classifier-free guided diffusion model be distilled into a fast sampling model?

First, given a pre-trained classifier-free guided model, the researchers learned a single model that matches the combined output of the conditional model and the unconditional model.

They then progressively distilled this model into a diffusion model that needs fewer sampling steps.

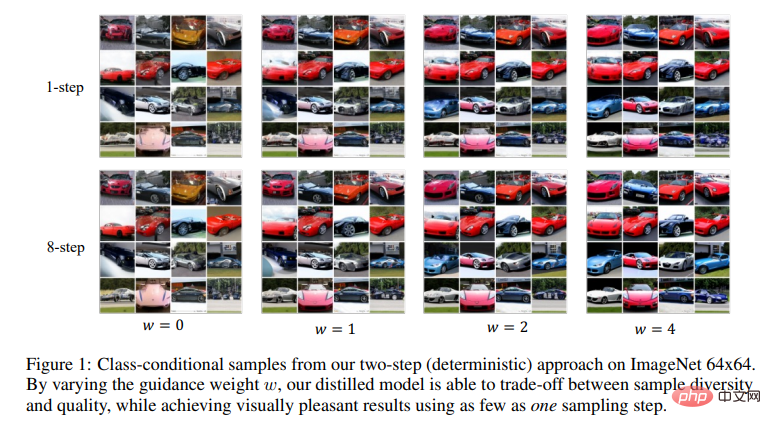

On ImageNet 64x64 and CIFAR-10, this method generates images that are visually comparable to those of the original model.

With only 4 sampling steps, it obtains FID/IS scores comparable to those of the original model, while sampling up to 256 times faster.

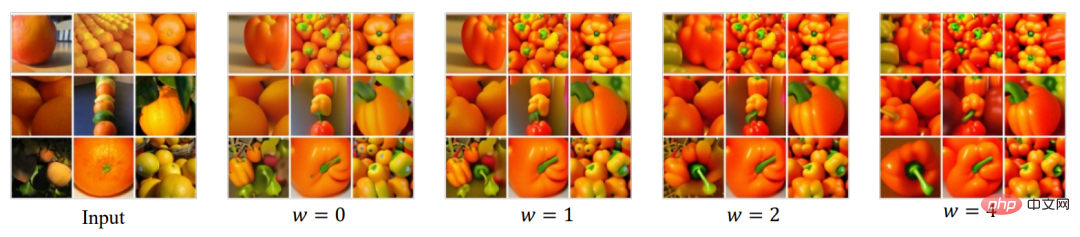

By changing the guidance weight w, the distilled model can trade off sample diversity against sample quality. Even with a single sampling step, it achieves visually pleasing results.

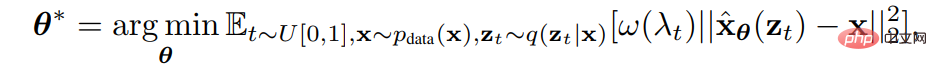

Background of the Diffusion ModelWith samples x from the data distribution, the noise scheduling function The researchers trained a model with parameters θ by minimizing the weighted mean square error Diffusion model

The researchers trained a model with parameters θ by minimizing the weighted mean square error Diffusion model .

.

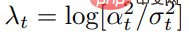

where  is the signal-to-noise ratio,

is the signal-to-noise ratio,  and

and  is a pre-specified weighting function.

is a pre-specified weighting function.
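As a minimal sketch of the objective described above, the snippet below noises a scalar sample and evaluates the weighted squared error for a single draw. The cosine schedule (`alpha_sigma`), the weighting function (`truncated_snr_weight`) and the two toy models are assumptions chosen for illustration; none of them comes from the article.

```python
import math
import random


def alpha_sigma(t):
    """Assumed cosine schedule: alpha_t = cos(pi*t/2), sigma_t = sin(pi*t/2)."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)


def log_snr(t):
    """lambda_t = log(alpha_t^2 / sigma_t^2); only defined for 0 < t < 1."""
    alpha, sigma = alpha_sigma(t)
    return math.log(alpha * alpha / (sigma * sigma))


def diffuse(x, t, eps):
    """Draw z_t from q(z_t | x) = N(alpha_t * x, sigma_t^2) using noise eps."""
    alpha, sigma = alpha_sigma(t)
    return alpha * x + sigma * eps


def truncated_snr_weight(lam):
    """Hypothetical weighting function: max(SNR, 1)."""
    return max(math.exp(lam), 1.0)


def weighted_mse(x_hat, x, t, eps, weight_fn=truncated_snr_weight):
    """One-sample estimate of the weighted squared error at time t."""
    z_t = diffuse(x, t, eps)
    return weight_fn(log_snr(t)) * (x_hat(z_t, t) - x) ** 2


rng = random.Random(0)
x, t, eps = 2.0, 0.5, rng.gauss(0.0, 1.0)
oracle_loss = weighted_mse(lambda z, t: 2.0, x, t, eps)  # model that already knows x
blind_loss = weighted_mse(lambda z, t: 0.0, x, t, eps)   # model that always outputs 0
```

In a real training loop the loss would be averaged over many draws of x, t and the noise, and `x_hat` would be a neural network rather than a constant function.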

Once you have trained a diffusion model , you can use the discrete-time DDIM sampler to sample from the model.

, you can use the discrete-time DDIM sampler to sample from the model.

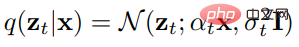

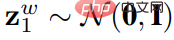

Specifically, the DDIM sampler starts from z1 ∼ N (0,I) and is updated as follows

Where, N is the total number of sampling steps. Using  , the final sample is generated.

, the final sample is generated.
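The DDIM sampling loop can be sketched in a few lines. This is an illustrative scalar version, assuming a cosine noise schedule and a stand-in `toy_model` that always predicts the same clean value; a real sampler would call a trained network on image tensors.

```python
import math
import random


def alpha_sigma(t):
    """Assumed cosine schedule: alpha_t = cos(pi*t/2), sigma_t = sin(pi*t/2)."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)


def ddim_sample(x_hat, num_steps, z1):
    """Run N deterministic DDIM updates from t = 1 down to t = 0."""
    z = z1
    for i in range(num_steps, 0, -1):
        t, s = i / num_steps, (i - 1) / num_steps
        a_t, s_t = alpha_sigma(t)
        a_s, s_s = alpha_sigma(s)
        x = x_hat(z, t)              # model's estimate of the clean sample
        eps = (z - a_t * x) / s_t    # noise implied by that estimate
        z = a_s * x + s_s * eps      # move to the less noisy time s
    # With sigma_0 = 0 under this schedule, z_0 is already the clean prediction.
    return z


# Hypothetical stand-in for a trained model: always predicts the clean value 2.0.
toy_model = lambda z, t: 2.0

rng = random.Random(0)
sample = ddim_sample(toy_model, num_steps=8, z1=rng.gauss(0.0, 1.0))
```

Each loop iteration costs one model evaluation, so the number of steps N directly sets the sampling cost that distillation aims to reduce.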

Classifier-free guidance is an effective method that significantly improves the sample quality of conditional diffusion models, and it has been widely used in models including GLIDE, DALL·E 2 and Imagen.

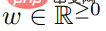

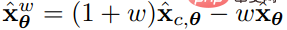

It introduces a guidance weight parameter w ≥ 0 to trade off the quality and diversity of the samples. To generate samples, classifier-free guidance uses x̂^w_θ = (1 + w) x̂_{c,θ} − w x̂_θ as the prediction model at each update step, which requires evaluating both the conditional diffusion model x̂_{c,θ} and the jointly trained unconditional model x̂_θ.

Sampling using classifier-free guidance is generally expensive since two diffusion models need to be evaluated for each sampling update.
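To make the cost concrete, the guided prediction is a linear combination of two separate model outputs. The sketch below uses the standard classifier-free guidance formula, (1 + w) times the conditional prediction minus w times the unconditional one; the input lists are made-up stand-ins for the outputs of the two networks.

```python
def guided_prediction(x_cond, x_uncond, w):
    """Classifier-free guidance: (1 + w) * conditional - w * unconditional."""
    return [(1.0 + w) * c - w * u for c, u in zip(x_cond, x_uncond)]


# Hypothetical per-pixel predictions from the two networks at one update step.
x_c = [0.5, 1.0, -0.25]
x_u = [0.25, 0.5, 0.0]

no_guidance = guided_prediction(x_c, x_u, w=0.0)  # reduces to the conditional model
strong = guided_prediction(x_c, x_u, w=4.0)       # pushed away from the unconditional output
```

Producing `x_c` and `x_u` takes two forward passes per update, which is the overhead the first distillation step removes by training one model to output the combination directly.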

In order to solve this problem, the researchers used progressive distillation, which is a method to increase the sampling speed of the diffusion model through repeated distillation.

Previously, progressive distillation could not be applied directly to guided models, nor could it be used with samplers other than the deterministic DDIM sampler. In this paper, the researchers address both limitations.

Distilling the classifier-free guided diffusion model

Their approach distills a classifier-free guided diffusion model.

For a trained guided teacher model [x̂_{c,θ}, x̂_θ], they took two steps.

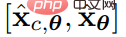

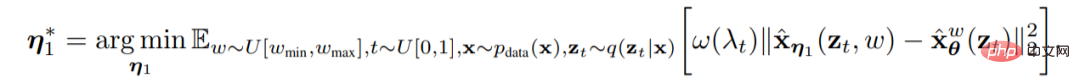

In the first step, the researchers introduced a continuous-time student model x̂_{η1}(z_t, w), with learnable parameters η1, to match the output of the teacher model at any time step t ∈ [0, 1]. After specifying a range of guidance strengths [w_min, w_max] that they were interested in, they optimized the student model with the following objective.

Here the guided output of the teacher is x̂^w_θ(z_t) = (1 + w) x̂_{c,θ}(z_t) − w x̂_θ(z_t).

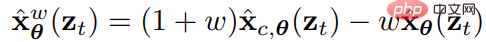

To incorporate the guidance weight w, the researchers introduced a w-conditioned model, in which w is fed to the student model as an input. To better capture the features, they applied a Fourier embedding to w and then incorporated it into the backbone of the diffusion model in the same way that Kingma et al. incorporate the time step.

Since initialization plays a key role in performance, the researchers initialized the student model with the same parameters as the teacher's conditional model (except for the newly introduced parameters related to w-conditioning).
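The first distillation step can be sketched as a regression onto the guided teacher output, with w drawn from the chosen range and passed to the student as an embedding. The sinusoidal form of `fourier_embed`, the constant loss weight and all toy models below are assumptions for illustration, not details confirmed by the article.

```python
import math
import random


def fourier_embed(w, num_freqs=4):
    """Assumed sinusoidal embedding of the scalar guidance weight w."""
    feats = []
    for k in range(num_freqs):
        feats.append(math.sin((2.0 ** k) * w))
        feats.append(math.cos((2.0 ** k) * w))
    return feats


def stage_one_loss(student, teacher_cond, teacher_uncond, z_t, t, w, weight=1.0):
    """Weighted squared error between the student and the guided teacher output."""
    target = (1.0 + w) * teacher_cond(z_t, t) - w * teacher_uncond(z_t, t)
    pred = student(z_t, t, fourier_embed(w))
    return weight * (pred - target) ** 2


# Hypothetical teacher pair and a student initialized as a copy of the conditional teacher.
teacher_cond = lambda z, t: 0.5 * z
teacher_uncond = lambda z, t: 0.25 * z
student = lambda z, t, w_emb: 0.5 * z  # ignores w until it has been trained

rng = random.Random(0)
w_sampled = rng.uniform(0.0, 4.0)  # one draw from the range [w_min, w_max] = [0, 4]
loss_sampled = stage_one_loss(student, teacher_cond, teacher_uncond, 1.0, 0.5, w_sampled)
loss_w0 = stage_one_loss(student, teacher_cond, teacher_uncond, 1.0, 0.5, 0.0)
loss_w4 = stage_one_loss(student, teacher_cond, teacher_uncond, 1.0, 0.5, 4.0)
```

The untrained copy is already exact at w = 0 and only has to learn the dependence on w, which gives some intuition for why initializing from the conditional teacher helps.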

The second step, the researcher imagined a discrete time step scenario, and gradually changed the learning model from The first step  is distilled into a student model

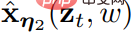

is distilled into a student model  with learnable parameters η2 and fewer steps.

with learnable parameters η2 and fewer steps.

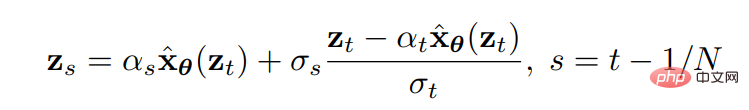

Here, N denotes the number of sampling steps. For w ∼ U[w_min, w_max] and t ∈ {1, ..., N}, the researchers train the student model to match, in a single step, the output of two DDIM sampling steps of the teacher model (i.e., from t/N to t − 0.5/N, and from t − 0.5/N to t − 1/N).

After distilling the 2N steps of the teacher model into N steps of the student model, the new N-step student model becomes the new teacher, and the same process is repeated to distill it into an N/2-step student model. At each stage, the researchers initialize the student model with the parameters of the teacher model.
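One way to build the regression target for a single distillation stage is sketched below. It follows the target construction commonly used in progressive distillation: run two teacher DDIM steps of half size, then solve for the clean-sample prediction that would make one full-size DDIM step land on the same point. The cosine schedule and the `tanh` teacher are assumptions, and `t` here is the continuous time in (0, 1] rather than an integer index.

```python
import math


def alpha_sigma(t):
    """Assumed cosine schedule: alpha_t = cos(pi*t/2), sigma_t = sin(pi*t/2)."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)


def ddim_step(x_hat, z, t, s):
    """One deterministic DDIM update from time t to an earlier time s."""
    a_t, s_t = alpha_sigma(t)
    a_s, s_s = alpha_sigma(s)
    x = x_hat(z, t)
    return a_s * x + s_s * (z - a_t * x) / s_t


def two_step_target(teacher, z_t, t, num_steps):
    """Target that lets one student step reproduce two teacher half-steps."""
    t_mid = t - 0.5 / num_steps
    t_end = t - 1.0 / num_steps
    z_mid = ddim_step(teacher, z_t, t, t_mid)
    z_end = ddim_step(teacher, z_mid, t_mid, t_end)
    a_t, s_t = alpha_sigma(t)
    a_e, s_e = alpha_sigma(t_end)
    ratio = s_e / s_t
    # Invert the one-step DDIM update for the clean-sample prediction.
    x_target = (z_end - ratio * z_t) / (a_e - ratio * a_t)
    return x_target, z_end


# Hypothetical nonlinear teacher, used only to exercise the arithmetic.
teacher = lambda z, t: math.tanh(z)

x_target, z_end = two_step_target(teacher, z_t=0.7, t=0.5, num_steps=4)
# A student that outputs x_target reaches z_end in a single step of size 1/N.
one_step = ddim_step(lambda z, t: x_target, 0.7, 0.5, 0.25)
```

Repeating this with the trained student as the next teacher halves the step count each time, for example 8, 4, 2 and finally 1 step.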

N-step deterministic and stochastic sampling

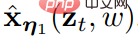

Once the model x̂_{η2} is trained, for a given w ∈ [w_min, w_max], the researchers can sample with the DDIM update rule. They note that for the distilled model x̂_{η2}, this sampling process is deterministic given the initialization z^w_1.

In addition, the researchers can also perform N-step stochastic sampling. The idea is to take a deterministic sampling step of twice the original step size (i.e., the same step as the N/2-step deterministic sampler), and then take a stochastic step back (i.e., perturb the result with noise) using the original step size.

With z^w_1 ∼ N(0, I), when t > 1/N each update therefore combines a deterministic DDIM step from t to t − 2/N with a noising step back to t − 1/N.

When t = 1/N, the researchers use the deterministic update formula to derive z^w_0 from z^w_{1/N}.

It is worth noting that stochastic sampling requires evaluating the model at slightly different time steps than the deterministic sampler, and requires small modifications to the training algorithm to handle edge cases.
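The stochastic sampler described above can be sketched as follows. This is an illustrative scalar version under stated assumptions: a cosine schedule, a constant `toy_model`, and a noising step that uses the forward-process transition from the earlier time k back to the later time s, with variance sigma_s^2 − (alpha_s / alpha_k)^2 sigma_k^2. The paper's exact update rules may differ in detail.

```python
import math
import random


def alpha_sigma(t):
    """Assumed cosine schedule: alpha_t = cos(pi*t/2), sigma_t = sin(pi*t/2)."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)


def ddim_step(x_hat, z, t, s):
    """One deterministic DDIM update from time t to an earlier time s."""
    a_t, s_t = alpha_sigma(t)
    a_s, s_s = alpha_sigma(s)
    x = x_hat(z, t)
    return a_s * x + s_s * (z - a_t * x) / s_t


def stochastic_sample(x_hat, num_steps, z1, rng):
    """N-step sampler: double-size deterministic step, then one noisy step back."""
    z = z1
    for i in range(num_steps, 1, -1):          # all times t = i/N with t > 1/N
        t = i / num_steps
        s = (i - 1) / num_steps
        k = (i - 2) / num_steps
        z_k = ddim_step(x_hat, z, t, k)        # deterministic step of size 2/N
        a_s, s_s = alpha_sigma(s)
        a_k, s_k = alpha_sigma(k)
        var = s_s ** 2 - (a_s / a_k) ** 2 * s_k ** 2
        noise = math.sqrt(max(var, 0.0)) * rng.gauss(0.0, 1.0)
        z = (a_s / a_k) * z_k + noise          # noisy step back up to time s
    # Final update at t = 1/N is deterministic.
    return ddim_step(x_hat, z, 1.0 / num_steps, 0.0)


# Hypothetical stand-in for a distilled model: always predicts the clean value 2.0.
toy_model = lambda z, t: 2.0

rng = random.Random(0)
sample = stochastic_sample(toy_model, num_steps=4, z1=rng.gauss(0.0, 1.0), rng=rng)
```

Note that the model is called on steps of size 2/N from every grid point, which is one reason the student has to be trained on slightly different time-step pairs than for the deterministic sampler.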

Other distillation methods

Another option is to apply progressive distillation directly to the guided model: following the structure of the teacher model, the student is distilled directly into a jointly trained conditional and unconditional model. The researchers tried this and found that it was not effective.

Experiments and Conclusions

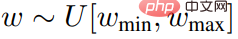

Experiments were conducted on two standard datasets: ImageNet 64x64 and CIFAR-10.

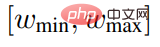

Different ranges of the guidance weight w were explored, and all ranges were observed to perform comparably, so [w_min, w_max] = [0, 4] was used for the experiments. The first- and second-step models are trained using the signal-to-noise ratio (SNR) loss.

Baseline standards include DDPM ancestral sampling and DDIM sampling.

To better understand the effect of conditioning on the guidance weight w, models trained with a fixed value of w are used as a reference.

For a fair comparison, the experiments use the same pre-trained teacher model for all methods. The baseline uses the U-Net (Ronneberger et al., 2015) architecture, and the student models of both steps use the same U-Net backbone with an added embedding of w.

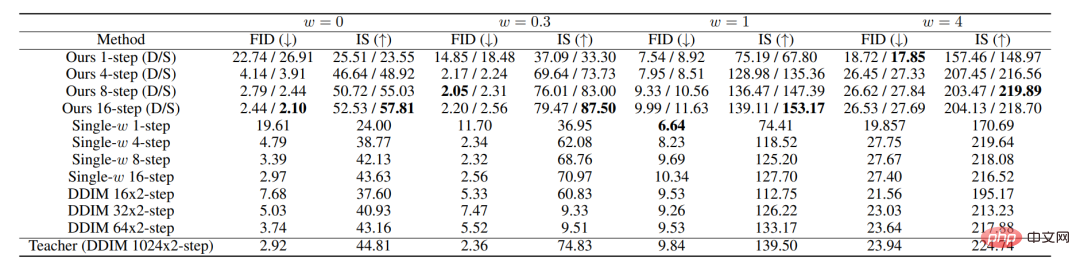

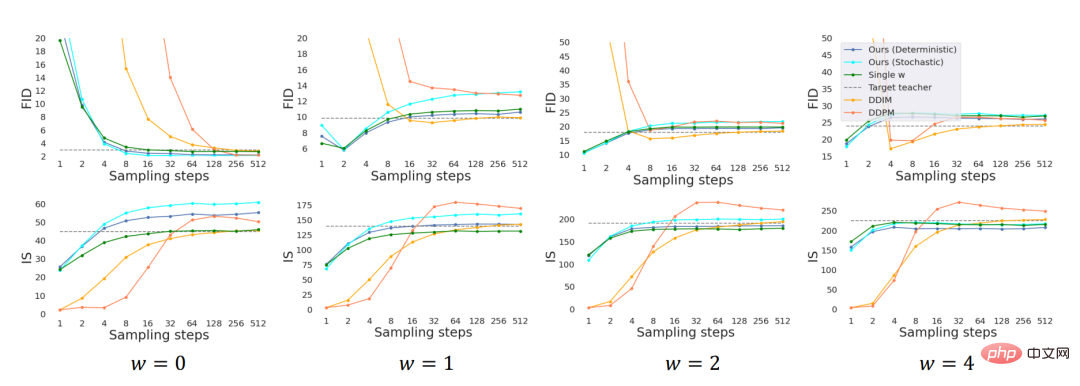

The figure above shows the performance of all methods on ImageNet 64x64. Here, D and S denote the deterministic and stochastic samplers respectively.

In the experiments, the model trained conditioned on the guidance range w ∈ [0, 4] performed comparably to models trained with a fixed w. With few steps, the method significantly outperforms the DDIM baseline, and it essentially reaches the performance of the teacher model at 8 to 16 steps.

ImageNet 64x64 sampling quality evaluated by FID and IS scores

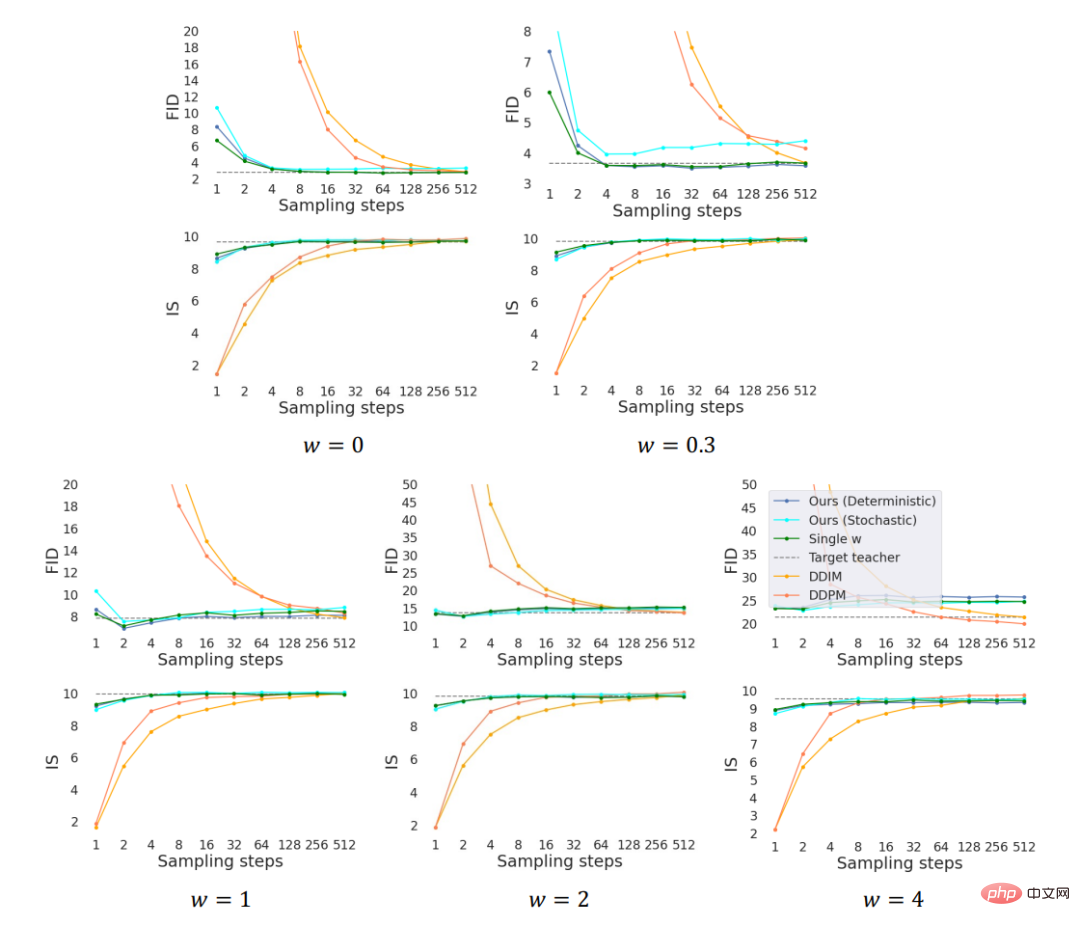

CIFAR-10 sampling quality evaluated by FID and IS scores

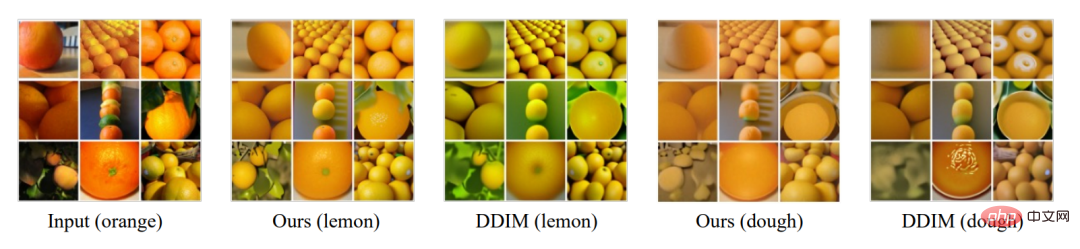

The researchers also distilled the encoding process of the teacher model and ran experiments on style transfer. Specifically, to perform style transfer between two domains A and B, images from domain A are encoded using a diffusion model trained on domain A, and then decoded using a diffusion model trained on domain B.

Since the encoding process can be understood as running the DDIM sampling process in reverse, the researchers distilled both the encoder and the decoder with classifier-free guidance and compared them with the DDIM encoder and decoder, as shown in the figure above. They also explored how changing the guidance strength w affects performance.

In summary, the researchers propose a distillation method for guided diffusion models, together with a stochastic sampler for sampling from the distilled model. Empirically, the method produces visually high-quality samples in only one step, and obtains FID/IS scores comparable to those of the teacher in only 8 to 16 steps.
