Text-generated images are so popular, you need to understand the evolution of these technologies

OpenAI recently released DALL·E 2, a system that creates images from text descriptions and that caused an "earthquake" in the AI world. It is the second version of DALL·E; the first version was released about a year earlier. Internally, however, OpenAI calls the model behind DALL·E 2 unCLIP, and it is closer to OpenAI's GLIDE system than to the original DALL·E.

To the author, the impact of DALL·E 2 is comparable to that of AlphaGo. The model appears to capture many complex concepts and combine them in meaningful ways. Just a few years ago, it would have been hard to predict that computers could generate images from such text descriptions. In his blog post, Sam Altman noted that our predictions about AI appear to have been wrong and need updating, because AI has started to affect creative work rather than only mechanical, repetitive work.

This article walks readers through the evolution of OpenAI's text-guided image generation models, including the first and second versions of DALL·E and related models.

DALL·E Evolution History

DALL·E 1

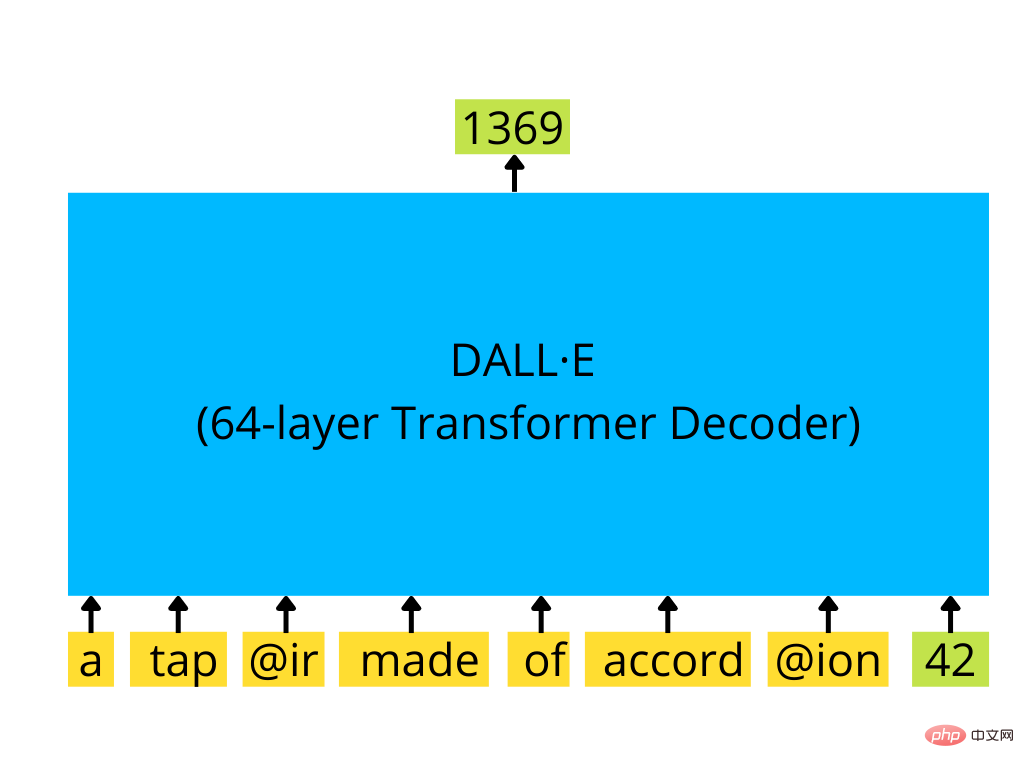

The first version of DALL·E is a GPT-3-style transformer decoder that autoregressively generates a 256×256 image from a text input and, optionally, the beginning of an image.

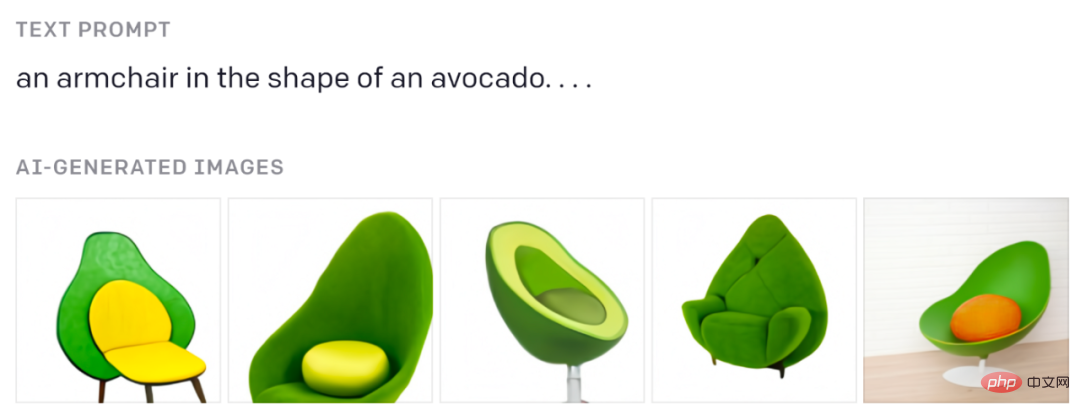

You must have seen these avocado chairs:

From the original blog post.

If you want to understand how GPT-like transformers work, see Jay Alammar’s excellent visual explanation: https://jalammar.github.io/how-gpt3-works-visualizations-animations/

Text is encoded as BPE tokens (up to 256), and images are encoded as special image tokens (1024 of them) produced by a discrete variational autoencoder (dVAE). The dVAE encodes a 256×256 image into a 32×32 grid of tokens drawn from a vocabulary of 8192 possible values. Because the dVAE loses some details and high-frequency features, images generated by DALL·E show some blurring and smoothing.
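The numbers in the paragraph above fit together in a simple way. The rough sketch below is plain Python. Its constants come from the text, and the variable names are our own. It derives the total sequence length the transformer sees and the pixel patch each image token stands for. It also shows how much shorter the image part of the context is than a sequence of raw RGB values would be.

```python
# Token budget of DALL·E 1, using the figures quoted in the text.
TEXT_TOKENS = 256      # maximum number of BPE text tokens
IMAGE_SIDE = 256       # input images are 256x256 RGB
GRID_SIDE = 32         # the dVAE encodes an image into a 32x32 grid
CODEBOOK_SIZE = 8192   # each image token takes one of 8192 values

image_tokens = GRID_SIDE * GRID_SIDE                # tokens per image
sequence_length = TEXT_TOKENS + image_tokens        # full transformer sequence
patch_side = IMAGE_SIDE // GRID_SIDE                # pixels per token along each side
raw_values = IMAGE_SIDE * IMAGE_SIDE * 3            # RGB values in the raw image
context_reduction = raw_values // image_tokens      # raw values per image token
bits_per_token = (CODEBOOK_SIZE - 1).bit_length()   # bits needed to index the codebook

print(f"image tokens:      {image_tokens}")
print(f"sequence length:   {sequence_length}")
print(f"patch per token:   {patch_side}x{patch_side} pixels")
print(f"context reduction: {context_reduction}x")
print(f"bits per token:    {bits_per_token}")
```

This gives a 1280-token sequence, an 8×8 pixel patch per image token, an image context 192 times shorter than raw RGB values, and 13 bits per token.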

Comparison of original image (top) and dVAE reconstruction (bottom). Image from original paper.

The transformer is a large model with 12B parameters, consisting of 64 sparse transformer blocks. Internally it uses a complex set of attention patterns: 1) classic causal (masked) text-to-text attention, 2) image-to-text attention, and 3) image-to-image sparse attention. All three are combined into a single attention operation. The model was trained on a dataset of 250M image-text pairs.
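The sketch below makes the combined attention pattern concrete. It builds a boolean mask over a sequence of text tokens followed by a row-major grid of image tokens. It is illustrative only, and the function name is hypothetical. Its image-to-image rule is also a simplification: each token attends only to earlier tokens in the same grid row. This stands in for the sparse row, column, and convolutional patterns the actual model uses.

```python
def dalle_style_mask(n_text, grid_side):
    """Return mask[q][k] == True when query position q may attend to key k.

    Positions 0..n_text-1 are text tokens; the rest are image tokens laid out
    row by row on a grid_side x grid_side grid. The image-to-image rule
    (attend only within the same grid row) is an illustrative stand-in for
    DALL·E's sparse patterns, not the exact pattern it uses.
    """
    n = n_text + grid_side * grid_side
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(q + 1):  # causal: never attend to later positions
            if q < n_text:
                mask[q][k] = True  # text-to-text causal attention
            elif k < n_text:
                mask[q][k] = True  # image-to-text: image tokens see all text
            else:
                q_row = (q - n_text) // grid_side
                k_row = (k - n_text) // grid_side
                mask[q][k] = q_row == k_row  # sparse image-to-image attention
    return mask


if __name__ == "__main__":
    for row in dalle_style_mask(n_text=2, grid_side=2):
        print("".join("x" if allowed else "." for allowed in row))
```

With two text tokens and a 2×2 grid, the last two printed rows are `xx..x.` and `xx..xx`. The second image row sees all of the text but none of the first image row.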

The GPT-3-like transformer decoder consumes a sequence of text tokens and (optional) image tokens (here, a single image token with id 42) and generates a continuation of the image (here, the next image token, with id 1369).

The trained model generates several samples (up to 512) for the provided text, then ranks all of them with a separate model called CLIP and selects the top-ranked one as the result.
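The reranking step amounts to scoring each candidate against the prompt and sorting. In the sketch below, the embeddings are toy vectors standing in for the outputs of CLIP's text and image encoders. `rerank` is a hypothetical helper, not part of any released API.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def rerank(text_embedding, sample_embeddings, top_k=1):
    """Return indices of the top_k samples by cosine similarity to the text, best first."""
    t = normalize(text_embedding)
    scores = [sum(a * b for a, b in zip(t, normalize(e))) for e in sample_embeddings]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:top_k]


# Toy stand-ins: one caption embedding and three generated-image embeddings.
caption = [1.0, 0.0, 0.0]
samples = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.7]]
print(rerank(caption, samples, top_k=3))
```

Here sample 1 is ranked first, then sample 2, then sample 0.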

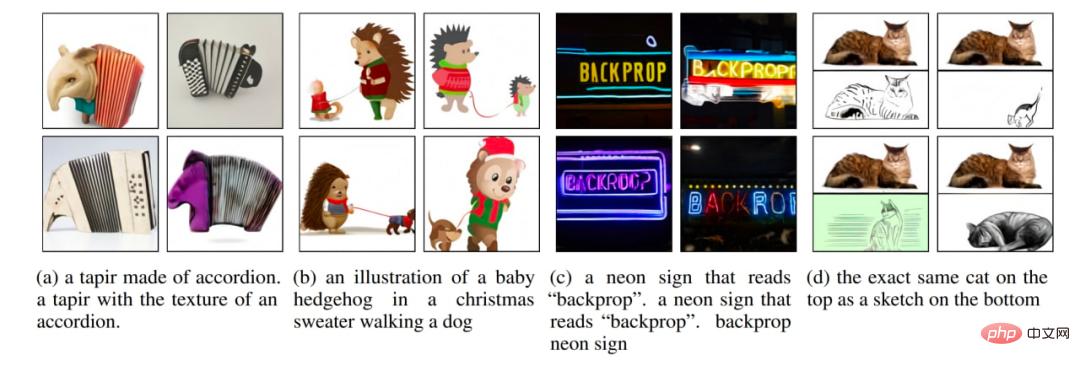

A few image generation examples from the original paper.

CLIP

CLIP was originally a separate auxiliary model used to rank DALL·E's results. Its name is an abbreviation of Contrastive Language-Image Pre-Training.

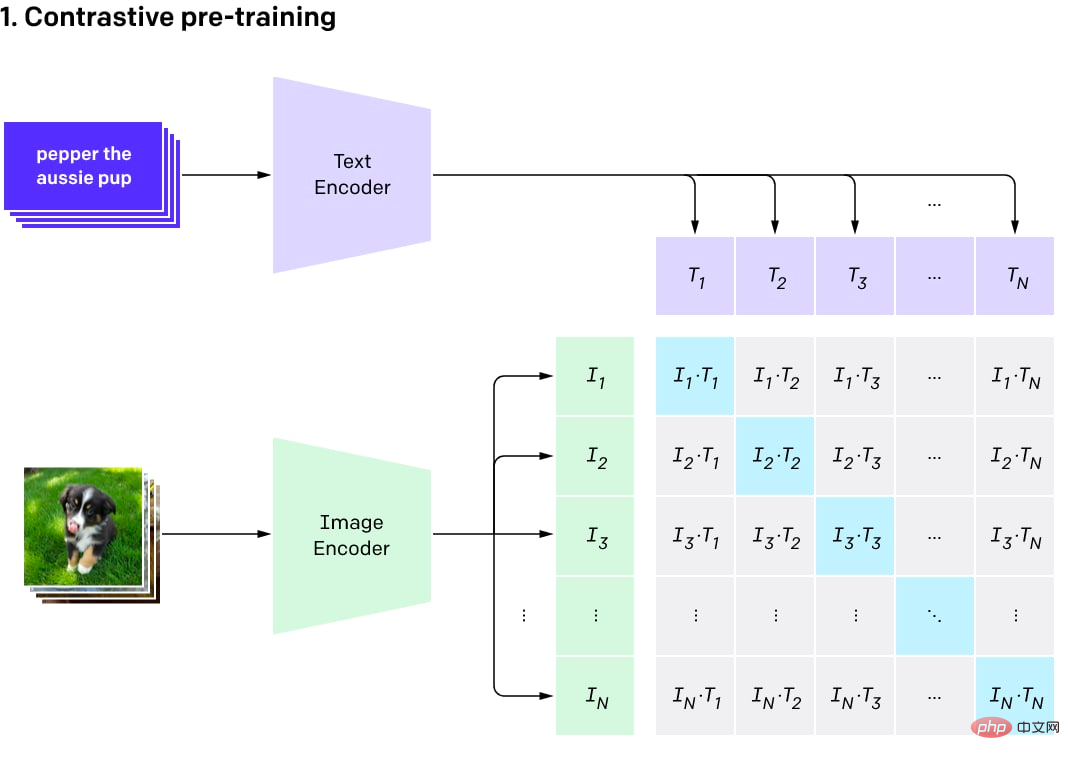

The idea behind CLIP is simple. The authors collected a dataset of 400M image-text pairs from the Internet and trained a contrastive model on it. A contrastive model produces high similarity scores for an image and a text from the same pair (they belong together) and low scores for mismatched texts and images. In other words, we want the chance that a given image gets a high similarity score with any other text in the current training batch to be very small.
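This training objective is usually written as a symmetric cross-entropy over the batch similarity matrix. The following plain-Python sketch computes it for a small batch. The fixed temperature of 0.07 is an assumption (CLIP learns its temperature during training), and the function names are our own.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits_row, target):
    """Softmax cross-entropy for one row of logits and the index of the correct entry."""
    m = max(logits_row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits_row))
    return log_sum - logits_row[target]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of matching (image, text) pairs.

    Pair i is (image_embs[i], text_embs[i]); every other combination in the
    batch acts as a negative example.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: scaled cosine similarity between image i and text j
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    loss_image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (loss_image_to_text + loss_text_to_image) / 2


aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
print(clip_contrastive_loss(aligned, aligned), clip_contrastive_loss(aligned, swapped))
```

Correctly paired batches give a loss near zero, while swapping the texts gives a large loss.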

The model consists of two encoders, one for text and one for images. Each encoder produces an embedding (a multidimensional vector representation of an object, e.g. a 512-dimensional vector). The dot product of the two embeddings gives a similarity score. Because the embeddings are normalized, this score is the cosine similarity. It is close to 1 for vectors pointing in the same direction (with a small angle between them), 0 for orthogonal vectors, and -1 for opposite vectors.
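A quick numerical check of these three cases, using a small `cosine_similarity` helper written for illustration:

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Dot product of the L2-normalized vectors, i.e. the cosine of the angle between them."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


same = cosine_similarity([1.0, 2.0], [2.0, 4.0])        # parallel vectors
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 3.0])  # perpendicular vectors
opposite = cosine_similarity([1.0, 2.0], [-1.0, -2.0])  # opposite directions
print(round(same, 6), round(orthogonal, 6), round(opposite, 6))
```

Vector length does not matter: `[1, 2]` and `[2, 4]` score 1 because only the direction is compared.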

Visualization of the contrastive pre-training process (image from the original post).

CLIP is a set of models. There are 9 image encoders: 5 convolutional and 4 transformer-based. The convolutional encoders are ResNet-50, ResNet-101, and EfficientNet-style scaled ResNets called RN50x4, RN50x16, and RN50x64 (the higher the number, the better the model). The transformer encoders are Vision Transformers (ViTs): ViT-B/32, ViT-B/16, ViT-L/14, and ViT-L/14@336. The last one was fine-tuned on 336×336-pixel images; the others were trained at 224×224 pixels.

OpenAI released the models in stages. ViT-B/32 and ResNet-50 came first, followed by ResNet-101 and RN50x4. RN50x16 and ViT-B/16 followed in July 2021, then RN50x64 and ViT-L/14 in January 2022. ViT-L/14@336 arrived last, in April 2022.

The text encoder is a standard transformer with masked (causal) self-attention. It has 12 layers with 8 attention heads each and 63M parameters in total. Interestingly, its attention span is only 76 tokens (compared with 2048 tokens for GPT-3 and 512 for standard BERT). The text side of the model is therefore suited only to fairly short texts, and you cannot feed it large blocks of text. Since DALL·E 2 uses roughly the same kind of text encoder, it likely has the same limitation.

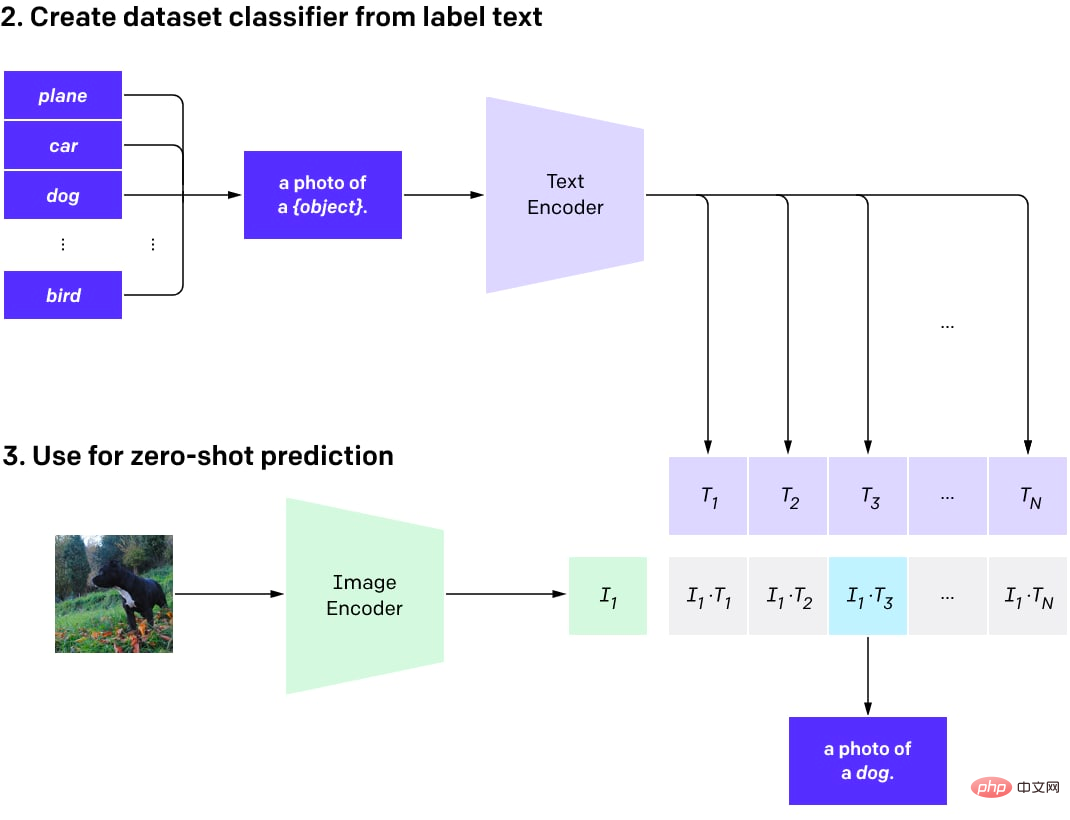

Once pre-trained, CLIP can be used for different tasks (which is the advantage of a good foundation model).

First and foremost, as in DALL·E, it can be used to score multiple results and choose the best one. Alternatively, you can use CLIP features to train a custom classifier on top of them, although there are not many successful examples of this yet.

Next, CLIP can perform zero-shot classification on any number of classes, including classes the model was never specifically trained on. The classes can be changed without retraining the model.

Simply put, you write a text description of the object in the picture for each class you need, generate text embeddings for these descriptions, and store them as vectors. To classify an image, you use the image encoder to generate an image embedding and compute its dot product with every precomputed text embedding. The class whose text embedding has the highest score is the result.

Procedure for zero-shot classification using CLIP.

Zero-shot classification means the model is not trained on a specific set of categories. Instead of training a classifier from scratch or fine-tuning a pretrained image model, you now have another option. You can use pretrained CLIP with prompt engineering, just as with GPT models.
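The zero-shot procedure can be sketched in a few lines. The class embeddings below are made-up vectors standing in for CLIP text embeddings of prompts such as "a photo of a dog". In practice they would come from the CLIP text encoder. The helper names are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def build_class_index(class_text_embeddings):
    """Precompute normalized text embeddings once per class label."""
    return {label: normalize(emb) for label, emb in class_text_embeddings.items()}


def zero_shot_classify(image_embedding, class_index):
    """Return (best_label, scores) using dot products with the precomputed text embeddings."""
    img = normalize(image_embedding)
    scores = {label: sum(a * b for a, b in zip(img, emb))
              for label, emb in class_index.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy stand-ins for CLIP text embeddings of one prompt per class.
index = build_class_index({
    "dog": [0.9, 0.1, 0.0],
    "cat": [0.1, 0.9, 0.0],
    "car": [0.0, 0.1, 0.9],
})
label, scores = zero_shot_classify([0.8, 0.3, 0.1], index)
print(label)
```

Adding a new class only requires adding one more entry to the index. Nothing is retrained.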

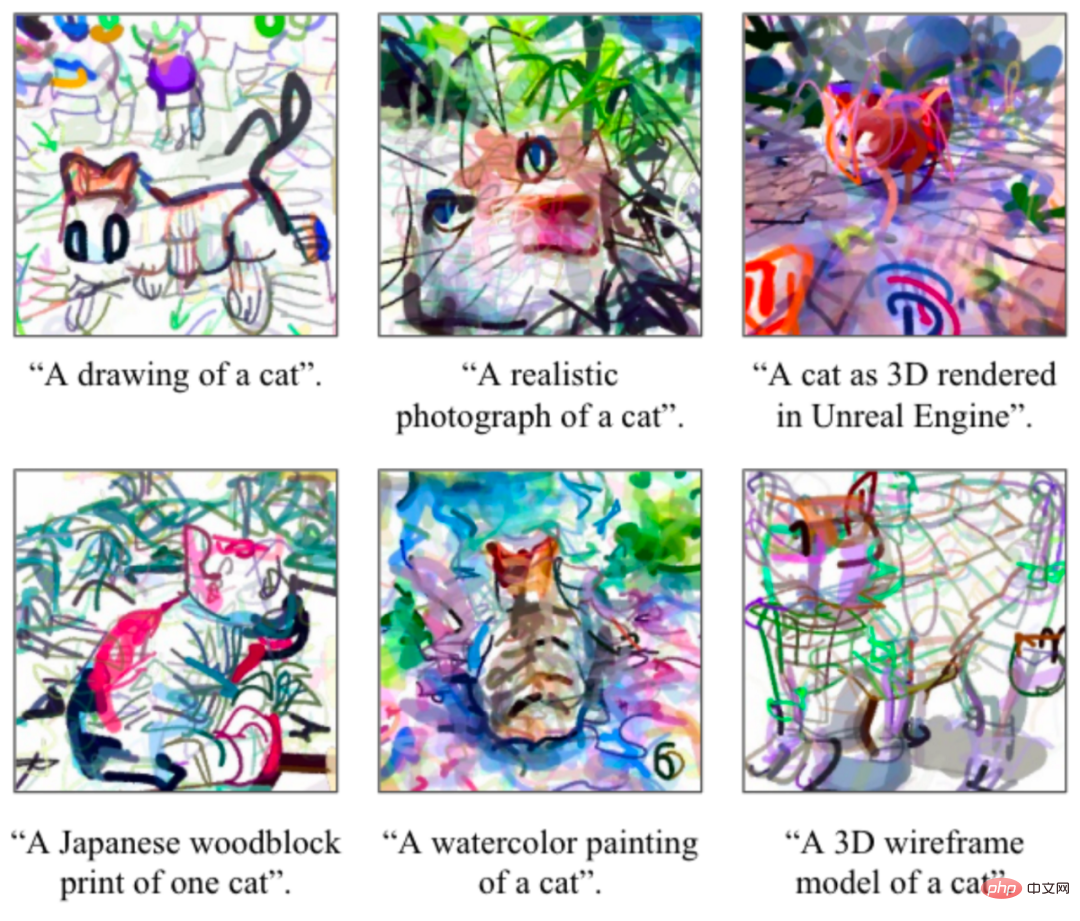

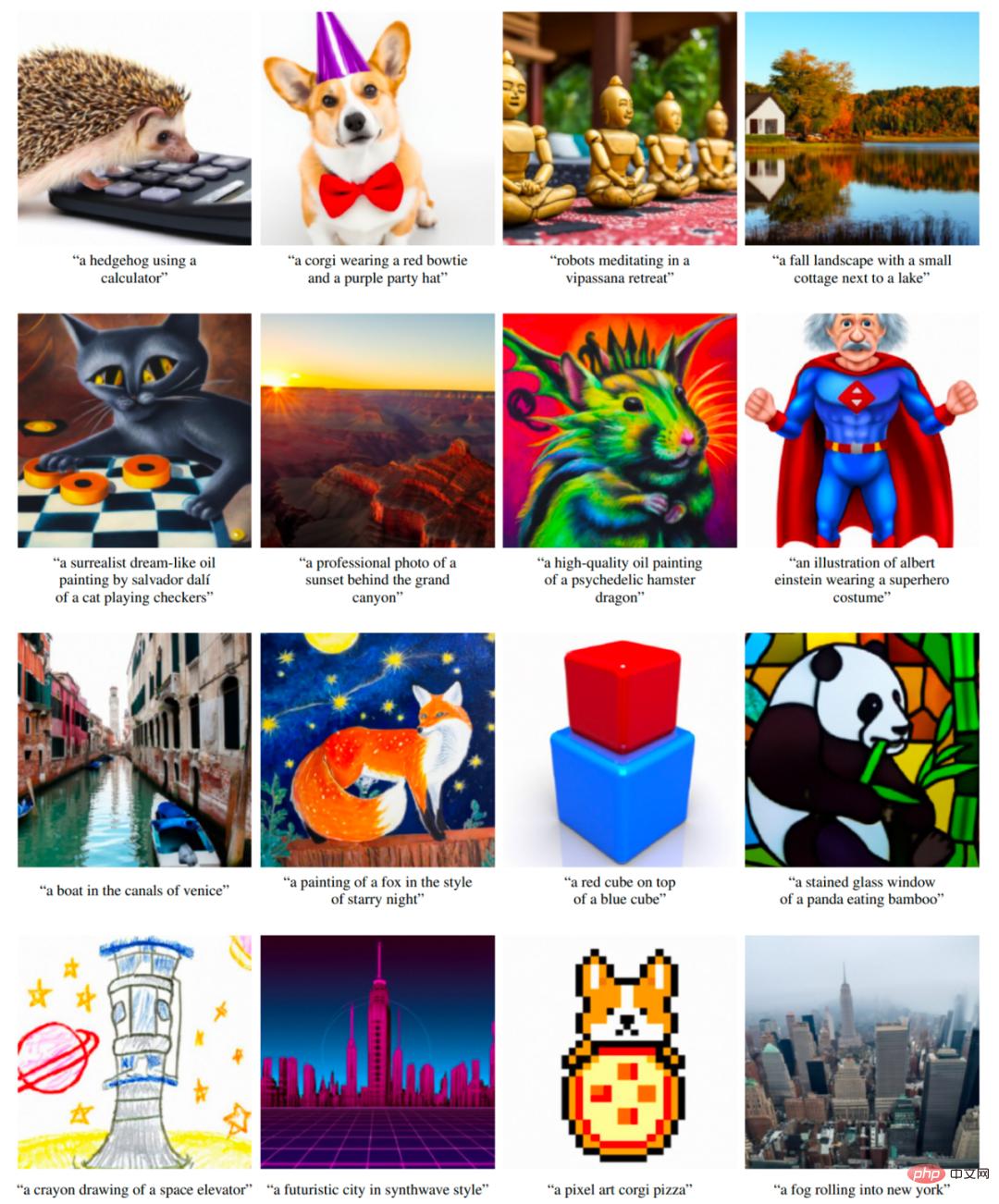

Many people don’t realize that CLIP can also be used to generate images, even though it was not designed to do so. Successful examples include CLIPDraw and VQGAN-CLIP.

CLIPDraw examples. Images from the original paper.

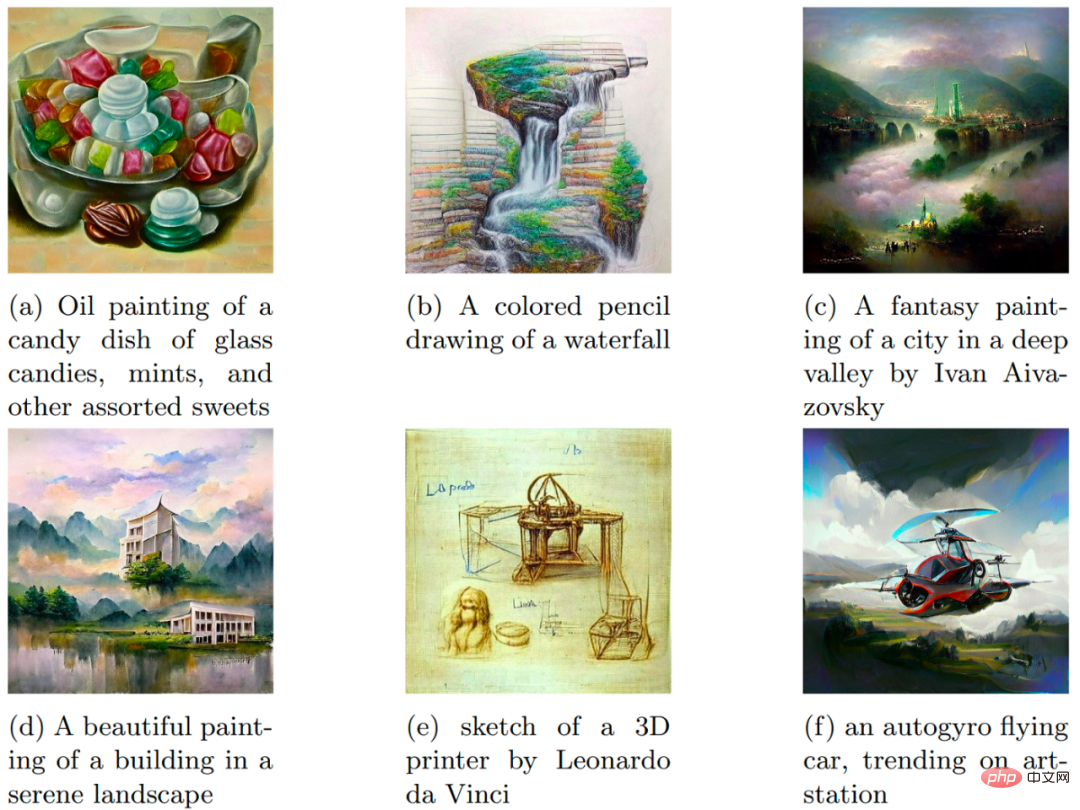

VQGAN-CLIP generation examples with their text prompts. Images from the original paper.

The process is simple and elegant, very similar to DeepDream. Start with a text description of the desired image and an initial image. The initial image can be a random embedding, a scene description in splines or pixels, or any image created in a differentiable way. Then run a loop: generate the image, add some augmentations for stability, obtain a CLIP embedding of the resulting image, and compare it with the CLIP embedding of the text describing the image. A loss is computed from this difference, and gradient descent updates the image to reduce the loss. After some iterations, you get an image that matches the text description well. The way the initial scene is represented (splines, pixels, rendering primitives, latent codes from VQGAN, etc.) can significantly affect the characteristics of the image.
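The loop can be illustrated with a deliberately tiny stand-in. In this sketch the "image" is a three-number parameter vector, and the "CLIP image encoder" is a fixed linear map (`toy_image_encoder`, hypothetical). The loss is one minus the cosine similarity to a text embedding. Gradients are estimated by finite differences instead of autodiff, and augmentations are omitted. It shows only the structure of the optimization, not a working image generator.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def toy_image_encoder(params):
    """Hypothetical stand-in for CLIP's image encoder: a fixed linear map."""
    weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4], [-0.6, 0.2, 0.9]]
    return [sum(w * p for w, p in zip(row, params)) for row in weights]


def loss_fn(params, text_embedding):
    """1 - cosine similarity between the 'image' embedding and the text embedding."""
    img = normalize(toy_image_encoder(params))
    txt = normalize(text_embedding)
    return 1.0 - sum(a * b for a, b in zip(img, txt))


def numerical_grad(f, params, eps=1e-5):
    """Central finite-difference gradient (a real system would use autodiff)."""
    grad = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def optimize_image(text_embedding, init_params, steps=300, lr=0.1):
    """Gradient descent on the 'image' parameters to reduce the CLIP-style loss."""
    params = list(init_params)

    def objective(p):
        return loss_fn(p, text_embedding)

    for _ in range(steps):
        grad = numerical_grad(objective, params)
        params = [p - lr * g for p, g in zip(params, grad)]
    return params


text_emb = [0.2, 0.7, 0.1]
start = [1.0, 0.0, 0.0]
final = optimize_image(text_emb, start)
print(f"loss before: {loss_fn(start, text_emb):.4f}, after: {loss_fn(final, text_emb):.4f}")
```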

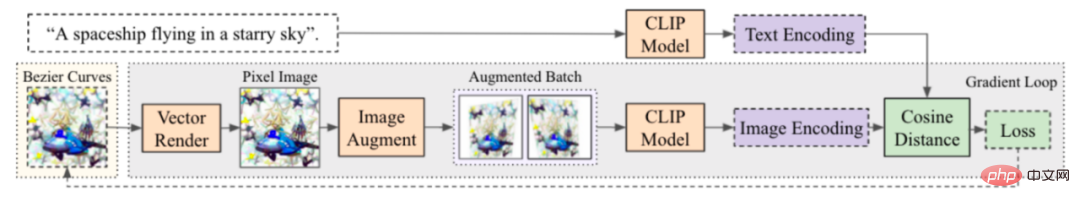

CLIPDraw generation process: Starting from a set of random Bezier curves, the position and color of the curves are optimized so that the generated graphics best match the given description prompt. Pictures from the original paper.

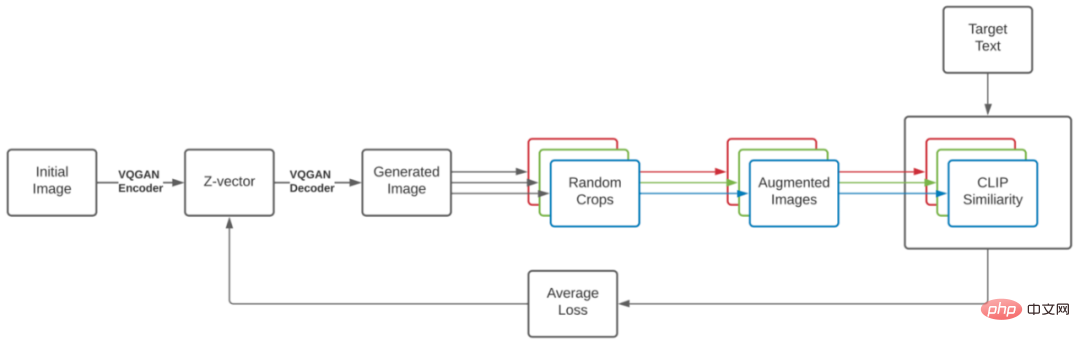

VQGAN-CLIP generation process. Pictures from the original paper.

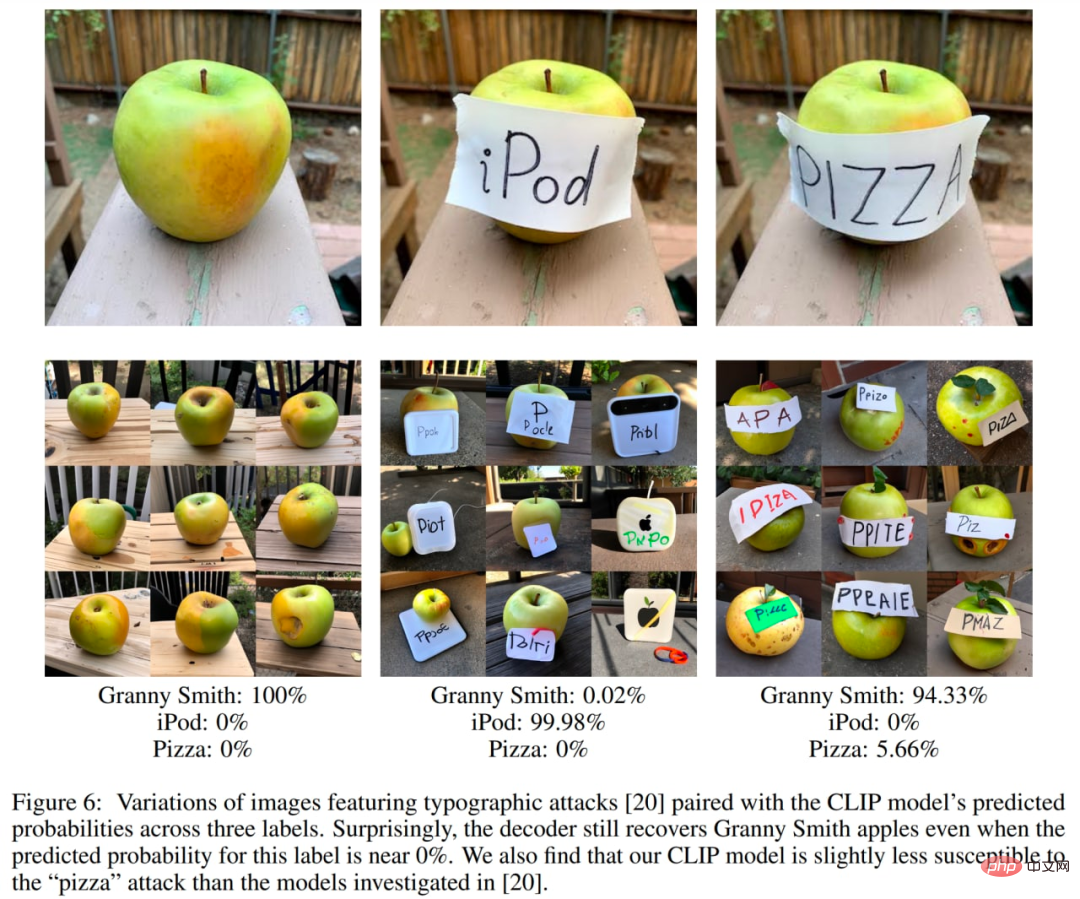

CLIP embeddings do not capture everything, and some interesting demonstrations expose their weaknesses. One of the best-known examples is the typographic attack, in which text written on an image causes the image to be misclassified.

There are currently some alternative models with a similar structure to CLIP, such as Google's ALIGN or Huawei's FILIP.

GLIDE

GLIDE (Guided Language to Image Diffusion for Generation and Editing) is a text-guided image generation model from OpenAI. It outperforms DALL·E but has received relatively little attention; it does not even have a dedicated post on the OpenAI website. GLIDE generates images with a resolution of 256×256 pixels.

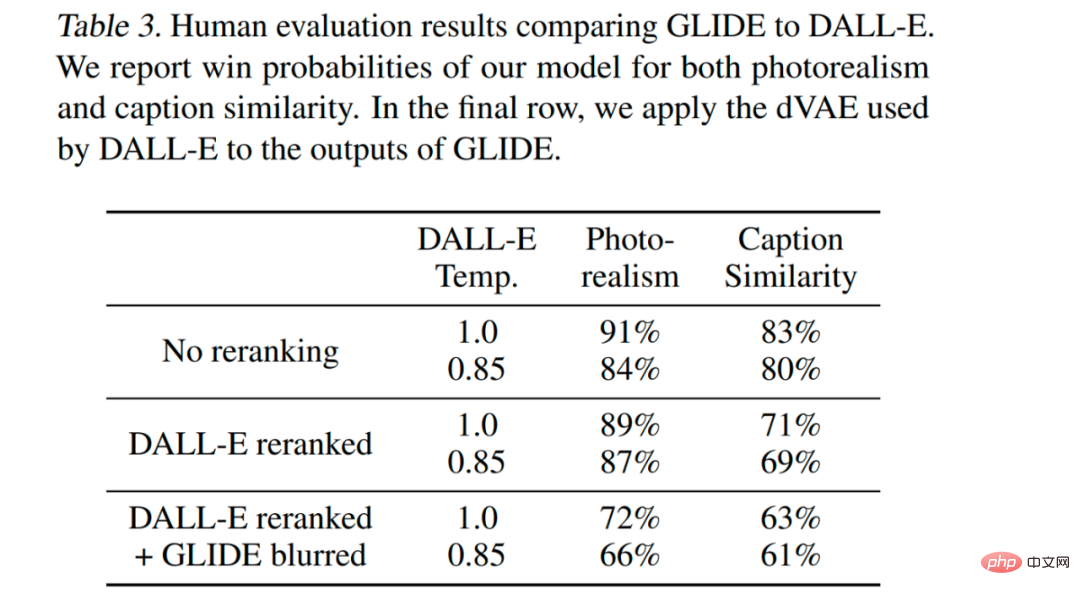

Human evaluators preferred samples from the 3.5B-parameter GLIDE model over those from the 12B-parameter DALL·E. (The more accurate figure may be 5B, since there is a separate 1.5B-parameter upsampling model.) GLIDE also beat DALL·E on FID.

Sample from GLIDE. Image from original paper.

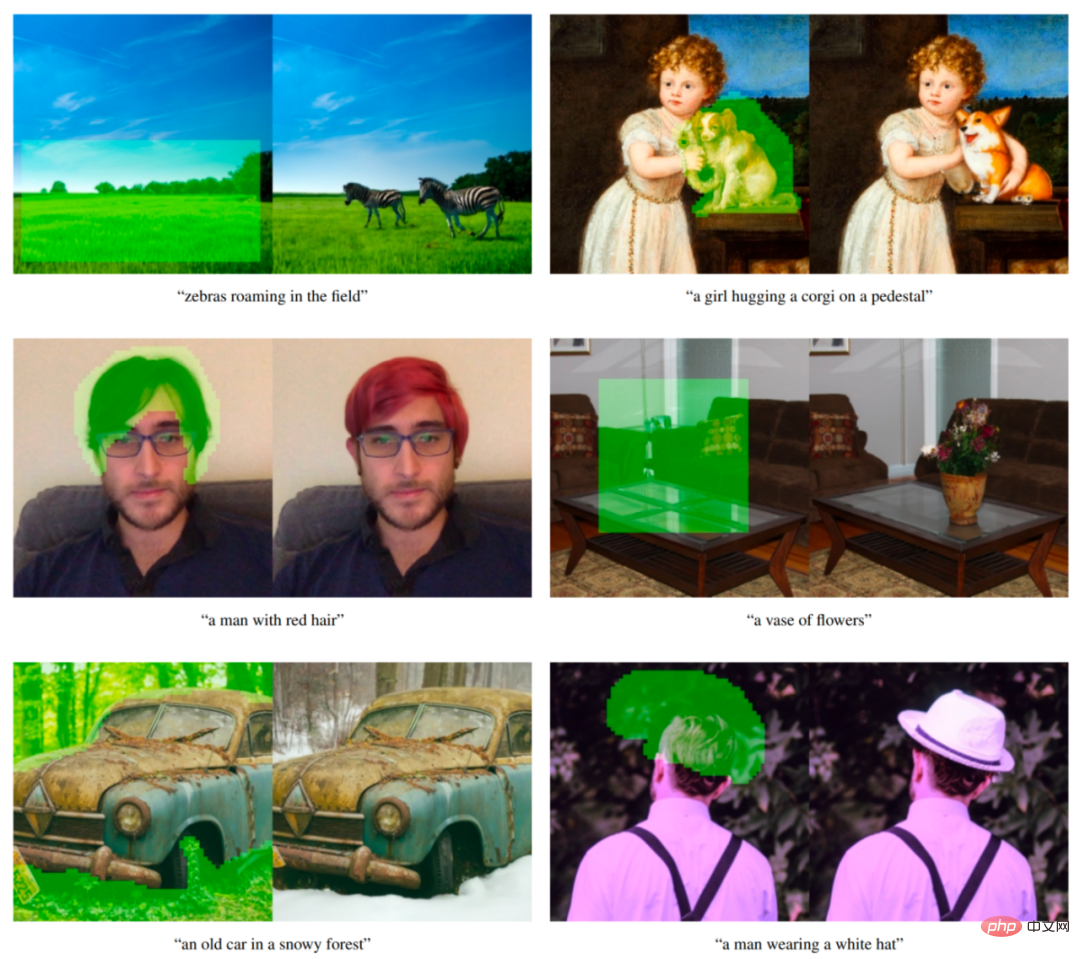

The GLIDE model can also be fine-tuned to perform image inpainting, enabling powerful text-driven image editing, which is used in DALL·E 2.

Text-conditional image inpainting examples from GLIDE. The green region is erased and the model fills it in according to the given prompt. The model matches the style and lighting of the surroundings, producing realistic results. Examples from the original paper.

At the time of its release, GLIDE could have been called "DALL·E 2". Now a separate DALL·E 2 system has been released; the paper calls it unCLIP, and it is heavily based on GLIDE itself. So we can refer to GLIDE as DALL·E 1.5 :)

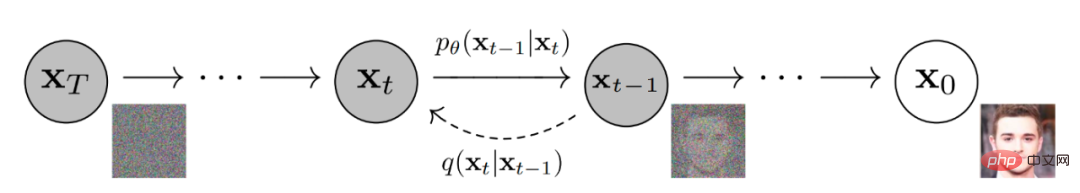

GLIDE is based on a type of model called a diffusion model. Simply put, diffusion models add random noise to the input data through a chain of diffusion steps, then learn to reverse the diffusion process to construct an image from noise.

Image generation with a denoising diffusion model.
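The forward (noising) half of a diffusion model has a closed form, which the sketch below implements on a toy three-pixel "image". The schedule is an assumption: a linear beta schedule from 1e-4 to 0.02 over 1000 steps, taken from the original DDPM setup and not necessarily what GLIDE uses. The reverse (denoising) process is what the network learns and is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_t increasing linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, abar, rng):
    """Sample x_t ~ N(sqrt(abar_t) * x_0, (1 - abar_t) * I) in one shot."""
    signal = math.sqrt(abar[t])
    noise_scale = math.sqrt(1.0 - abar[t])
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
abar = alpha_bars(linear_beta_schedule(1000))
x0 = [0.5, -0.3, 0.8]  # toy "image" with three pixels
for t in (0, 250, 500, 999):
    print(t, round(abar[t], 4), [round(v, 3) for v in q_sample(x0, t, abar, rng)])
```

As t grows, the signal coefficient shrinks toward zero and x_t becomes almost pure Gaussian noise, which is where generation starts.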

The following figure is a visual illustration of Google’s use of the diffusion model to generate images.

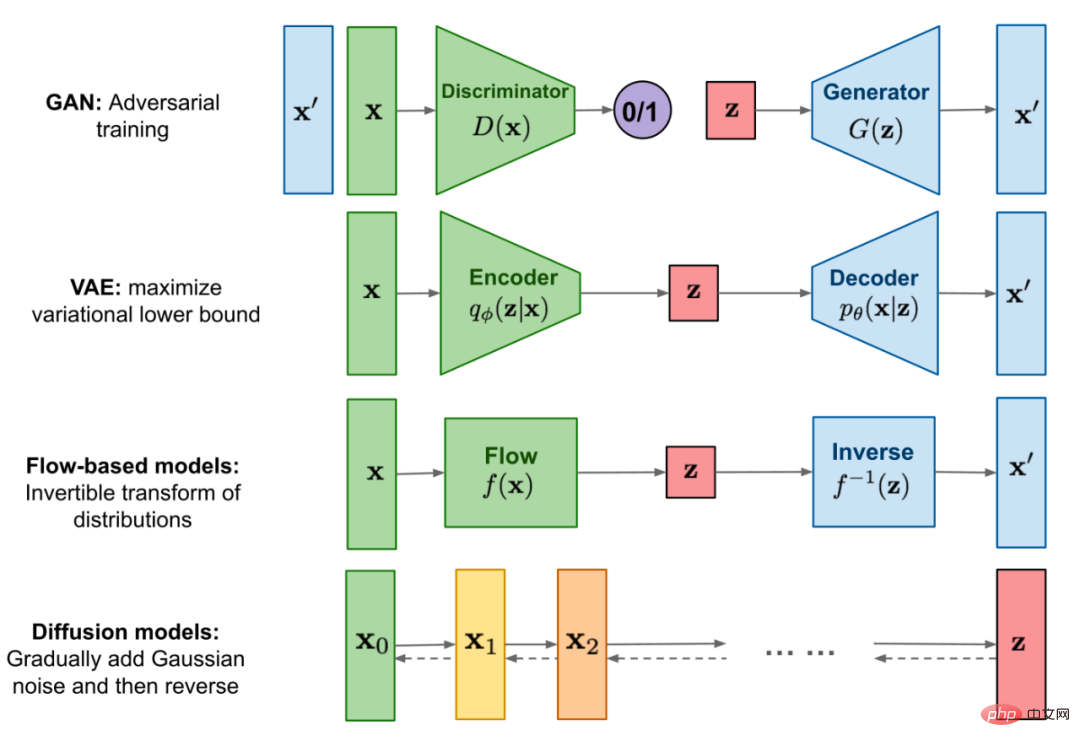

Comparison of diffusion models with other categories of generative models.

First, the authors trained a 3.5B-parameter diffusion model that uses a text encoder to condition on natural-language descriptions. Next, they compared two techniques for guiding diffusion models toward text prompts: CLIP guidance and classifier-free guidance (the latter gave better results).

Classifier guidance allows a diffusion model to be conditioned on a classifier's labels: gradients from the classifier are used to steer samples toward the label.

Classifier-free guidance does not require training a separate classifier model. It is a form of guidance that interpolates between the predictions of a diffusion model with and without labels.

As the authors note, classifier-free guidance has two attractive properties. First, it allows a single model to leverage its own knowledge during guidance, rather than relying on the knowledge of a separate (and sometimes smaller) classification model. Second, it simplifies guidance when conditioning on information that is difficult to predict with a classifier, such as text.
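In practice, classifier-free guidance combines two noise predictions from the same network, one with the caption and one with an empty caption. It moves from the unconditional prediction toward (and, for scale > 1, past) the conditional one. The sketch below shows only that combination step; `toy_denoiser` is a hypothetical stand-in for the diffusion network.

```python
def classifier_free_guidance(eps_uncond, eps_cond, scale):
    """Combine unconditional and conditional noise predictions.

    eps_hat = eps_uncond + scale * (eps_cond - eps_uncond)
    scale = 1 recovers the conditional prediction; scale > 1 pushes further
    in the direction the condition (e.g. a caption) suggests.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def toy_denoiser(x_t, caption):
    """Hypothetical stand-in for one network that also accepts an empty caption."""
    shift = 0.0 if caption == "" else 0.1 * len(caption.split())
    return [0.5 * x + shift for x in x_t]


x_t = [1.0, -2.0]
eps_u = toy_denoiser(x_t, "")
eps_c = toy_denoiser(x_t, "a corgi")
guided = classifier_free_guidance(eps_u, eps_c, scale=3.0)
print(guided)
```

Larger scales usually trade sample diversity for closer agreement with the caption.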

With CLIP guidance, the classifier is replaced by a CLIP model. Guidance then uses the gradient, with respect to the image, of the dot product between the image encoding and the caption encoding.
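Both guidance variants can be written as a shift of the mean predicted for each reverse step. The notation follows the form commonly used for GLIDE; treat the equations below as a summary rather than a quotation of the paper:

- μ and Σ are the mean and covariance predicted by the diffusion model.
- s is the guidance scale.
- p_φ is a noise-aware classifier.
- f and g are the noised CLIP image and caption encoders.

```latex
% Classifier guidance: shift the reverse-step mean along the classifier gradient
\hat{\mu}_\theta(x_t \mid y) = \mu_\theta(x_t \mid y)
  + s \, \Sigma_\theta(x_t \mid y) \, \nabla_{x_t} \log p_\phi(y \mid x_t)

% CLIP guidance: replace log p(y | x_t) with the image-caption dot product
\hat{\mu}_\theta(x_t \mid c) = \mu_\theta(x_t \mid c)
  + s \, \Sigma_\theta(x_t \mid c) \, \nabla_{x_t} \big( f(x_t, t) \cdot g(c) \big)
```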

For both classifier and CLIP guidance, the guiding model must be trained on noisy images to obtain correct gradients during the reverse diffusion process. The authors used CLIP models explicitly trained to be noise-aware, called noised CLIP models. Public CLIP models not trained on noisy images can still guide diffusion models, but noised CLIP guidance performs better for this approach.

The text conditional diffusion model is an enhanced ADM model architecture that predicts the image for the next diffusion step based on the noisy image xₜ and the corresponding text caption c.

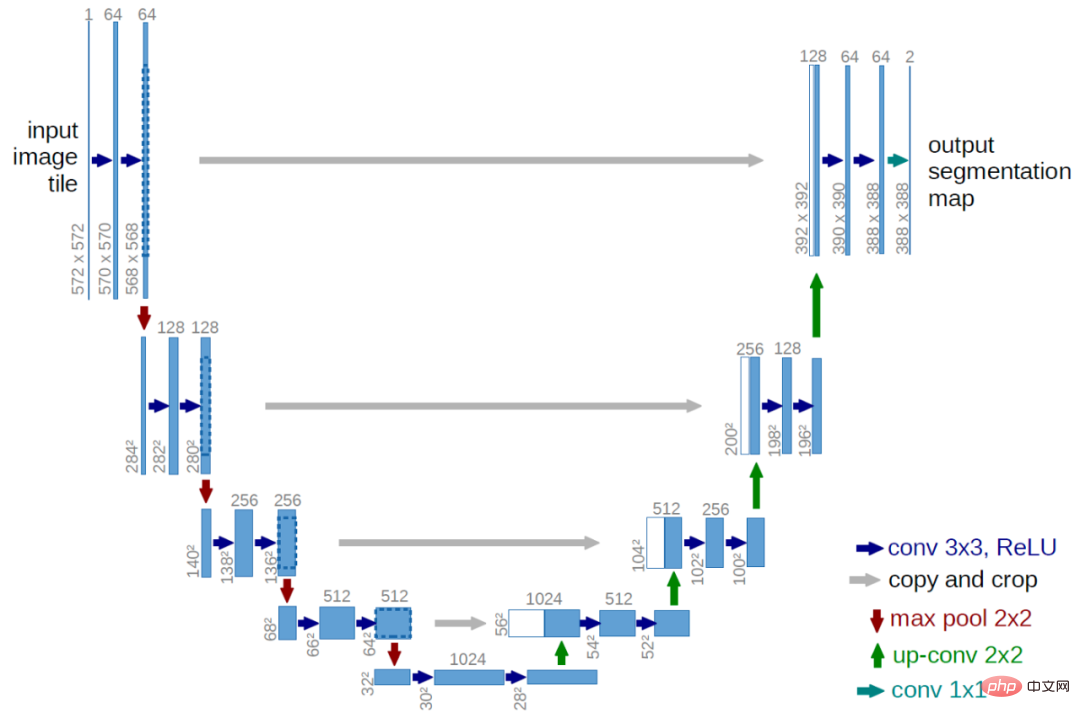

The visual part is a modified U-Net architecture. A U-Net uses a stack of residual layers and downsampling convolutions, followed by a stack of residual layers with upsampling convolutions. Skip connections link layers of the same spatial size.

Original U-Net architecture. Pictures from the original paper.

GLIDE modifies the width, depth, and other aspects of the original U-Net and adds global attention layers with multiple attention heads at the 8×8, 16×16, and 32×32 resolutions. It also adds a projection of the timestep embedding to each residual block.

For the classifier guidance model, the classifier architecture is the downsampling trunk of the U-Net, with an attention pool at the 8×8 layer producing the final output.

Text is encoded by a transformer into a sequence of K tokens (the maximum attention span is not known).

The output of the transformer is used in two ways. First, the final token embedding replaces the class embedding in the ADM model. Second, the last layer of token embeddings (a sequence of K feature vectors) is projected separately to the dimensionality of each attention layer in the ADM model and then concatenated to that layer's attention context.
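The second use of the text tokens can be pictured as extending an attention layer's key/value sequence. The single-head sketch below projects K text tokens to the layer's width with a hypothetical matrix `text_proj`. It then concatenates them to the image positions before attention. Learned query/key/value projections, multiple heads, and the repetition across layers are omitted for brevity.

```python
import math


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_with_text_context(image_feats, text_tokens, text_proj):
    """Single-head attention whose keys/values are image features plus projected text tokens.

    image_feats: list of d-dim vectors (flattened spatial positions of one layer)
    text_tokens: list of K vectors of width d_text from the text transformer
    text_proj:   d x d_text matrix mapping text tokens to this layer's width
    """
    projected = [matvec(text_proj, tok) for tok in text_tokens]
    context = image_feats + projected  # concatenate along the sequence axis
    d = len(image_feats[0])
    outputs = []
    for q in image_feats:  # only image positions produce outputs
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, context)) for i in range(d)])
    return outputs


image_feats = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 positions, width 2
text_tokens = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]    # K = 2 tokens, width 3
text_proj = [[0.1, 0.0, 0.2], [0.0, 0.3, 0.1]]      # hypothetical 2x3 projection
out = attend_with_text_context(image_feats, text_tokens, text_proj)
print(len(out), len(out[0]))
```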

The text transformer has 24 residual blocks of width 2048, resulting in approximately 1.2B parameters. The visual part of the model, trained at 64×64 resolution, has 2.3B parameters. In addition to the 3.5B-parameter text-conditional diffusion model, the authors also trained a 1.5B-parameter text-conditional upsampling diffusion model. It increases the resolution to 256×256, an idea that DALL·E 2 also uses.
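The parameter counts above can be sanity-checked with a common estimate of about 12·width² weights per transformer block: 4·width² for attention and 8·width² for an MLP with 4× expansion. This estimate and the helper below are our assumptions, not figures from the paper.

```python
def transformer_params(num_blocks, width):
    """Rough weight count: ~12 * width^2 per block (4w^2 attention + 8w^2 MLP).

    Ignores embeddings, biases and layer norms, so it slightly undercounts.
    """
    return num_blocks * 12 * width ** 2


text_encoder = transformer_params(24, 2048)
base_model = 1.2e9 + 2.3e9  # text transformer + 64x64 visual part, as quoted
with_upsampler = base_model + 1.5e9
print(f"text transformer ~ {text_encoder / 1e9:.2f}B")
print(f"base model ~ {base_model / 1e9:.1f}B")
print(f"with upsampler ~ {with_upsampler / 1e9:.1f}B")
```

This gives about 1.21B for the text transformer, in line with the quoted 1.2B. The base model comes to 3.5B, and 5.0B once the upsampler is included.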

The upsampling model conditions on text in the same way as the base model, but uses a smaller text encoder with a width of 1024 instead of 2048. For CLIP guidance, they also trained a noisy 64×64 ViT-L CLIP model.

GLIDE is trained on the same data set as DALL·E, and the total training computational effort is roughly equal to that used to train DALL·E.

Human evaluators preferred GLIDE in all settings. This held even in configurations that let DALL·E use much more test-time compute to improve its results while degrading GLIDE's sample quality (via VAE blurring).

The model is fine-tuned to support unconditional image generation. This training process is exactly the same as pre-training, except that 20% of the text token sequences are replaced with empty sequences. This way, the model retains the ability to generate text conditional output, but can also generate images unconditionally.
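The 20% caption dropout can be sketched as a per-example coin flip during batch preparation. The token ids and the helper name below are hypothetical.

```python
import random


def maybe_drop_caption(tokens, rng, drop_prob=0.2):
    """With probability drop_prob, replace the caption with an empty token sequence."""
    if rng.random() < drop_prob:
        return []
    return tokens


rng = random.Random(42)
captions = [[101, 7, 9]] * 10_000  # hypothetical token ids, repeated for the demo
unconditional = sum(1 for toks in captions if not maybe_drop_caption(toks, rng))
print(f"fraction trained unconditionally: {unconditional / len(captions):.3f}")
```

Because the same network sees both cases, it can later supply both the conditional and the unconditional predictions that classifier-free guidance needs.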

The model is also explicitly fine-tuned for inpainting. During fine-tuning, random regions of the training examples are erased. The remaining portions are then fed into the model, together with a mask channel, as additional conditioning information.
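Here is a rough sketch of how an inpainting training input could be assembled. It erases a random rectangle and appends a mask channel that tells the model which pixels are known. The rectangle-size range and the helper name are illustrative assumptions, and the paper's exact masking scheme may differ.

```python
import random


def make_inpainting_input(image, rng, min_frac=0.25, max_frac=0.5):
    """Erase a random rectangle and append a mask channel.

    image: H x W x C nested lists. Returns H x W x (C + 1): erased pixels are
    zeroed, and the last channel is 1.0 for kept pixels and 0.0 for erased ones.
    """
    h, w = len(image), len(image[0])
    rh = rng.randint(max(1, int(h * min_frac)), max(1, int(h * max_frac)))
    rw = rng.randint(max(1, int(w * min_frac)), max(1, int(w * max_frac)))
    top = rng.randint(0, h - rh)
    left = rng.randint(0, w - rw)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            erased = top <= y < top + rh and left <= x < left + rw
            keep = 0.0 if erased else 1.0
            row.append([c * keep for c in image[y][x]] + [keep])
        out.append(row)
    return out


img = [[[1.0, 1.0, 1.0] for _ in range(8)] for _ in range(8)]  # 8x8 RGB toy image
masked = make_inpainting_input(img, random.Random(1))
print(sum(1 for row in masked for px in row if px[-1] == 0.0), "pixels erased")
```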

GLIDE can iteratively build up complex scenes, starting with zero-shot generation and following up with a series of inpainting edits.

First, an image is generated for the prompt "a comfortable living room". Inpainting masks and new text prompts then add a painting to the wall, a coffee table, and a vase. Finally, the wall is moved up to the sofa. Examples from the original paper.

DALL·E 2/unCLIP

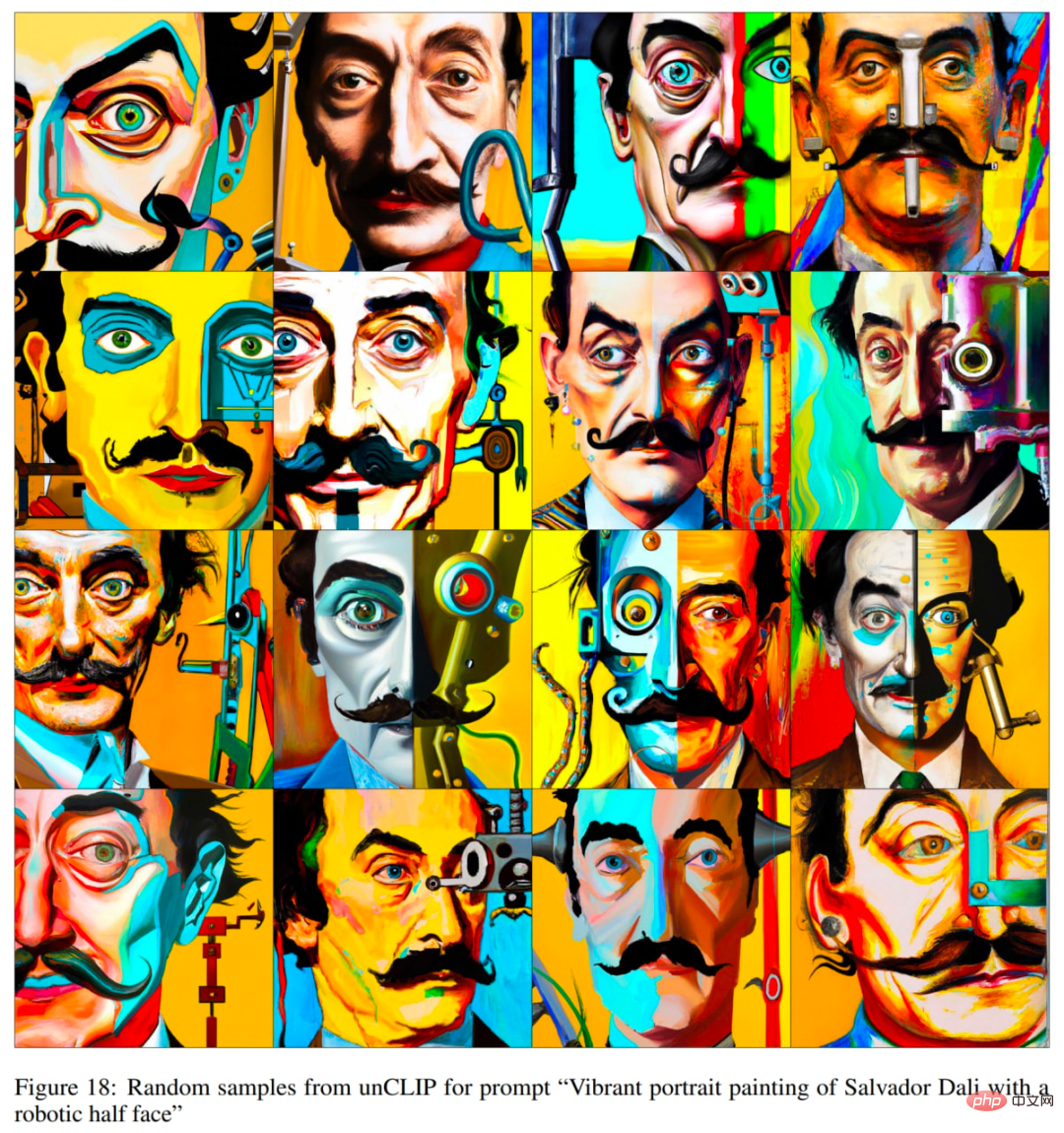

OpenAI released the DALL·E 2 system on April 6, 2022. The DALL·E 2 system significantly improves results over the original DALL·E. It produces images with 4x higher resolution (compared to the original DALL·E and GLIDE), now up to 1024×1024 pixels. The model behind the DALL·E 2 system is called unCLIP.

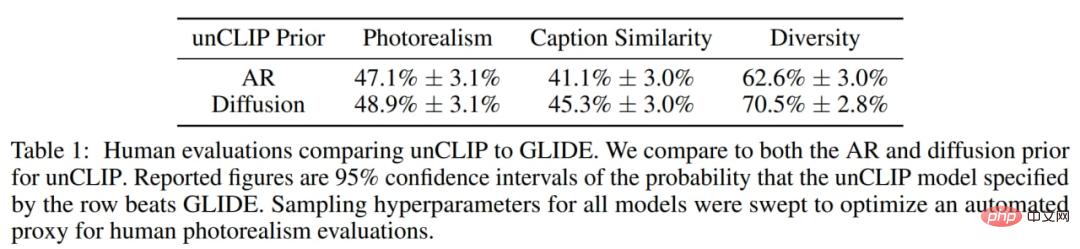

The authors found that humans slightly prefer GLIDE to unCLIP in terms of photorealism, but the difference is very small. At similar realism, unCLIP is preferred over GLIDE in terms of diversity, highlighting one of its benefits. Keep in mind that GLIDE itself is strongly preferred over DALL·E 1, so DALL·E 2 is a significant improvement over its predecessor.

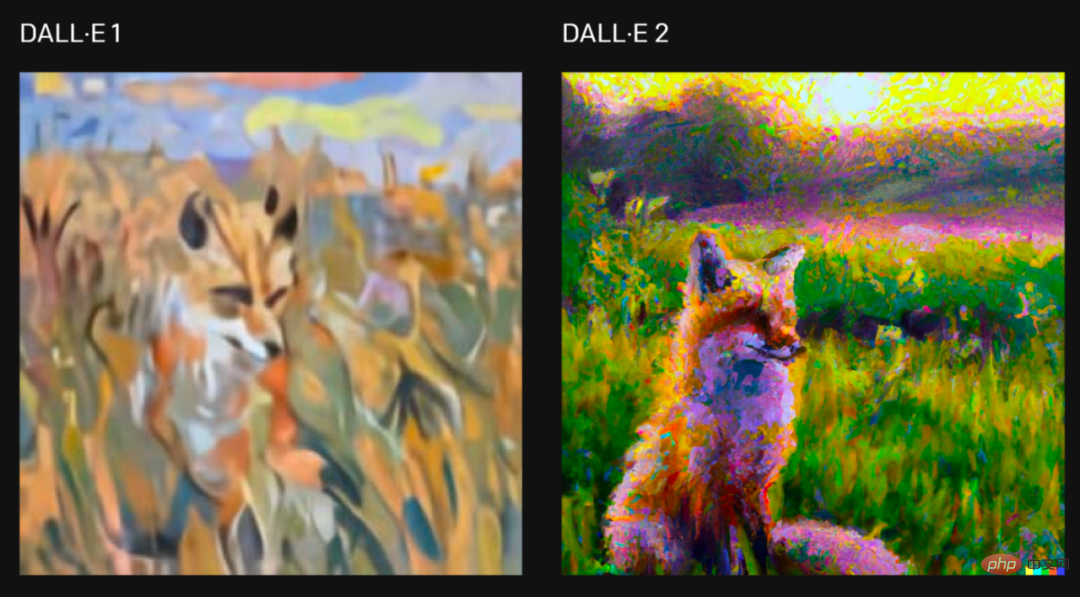

Images generated by the two versions of the system for the prompt "a painting of a fox sitting in a field at sunrise in the style of Claude Monet". Images from the original article.

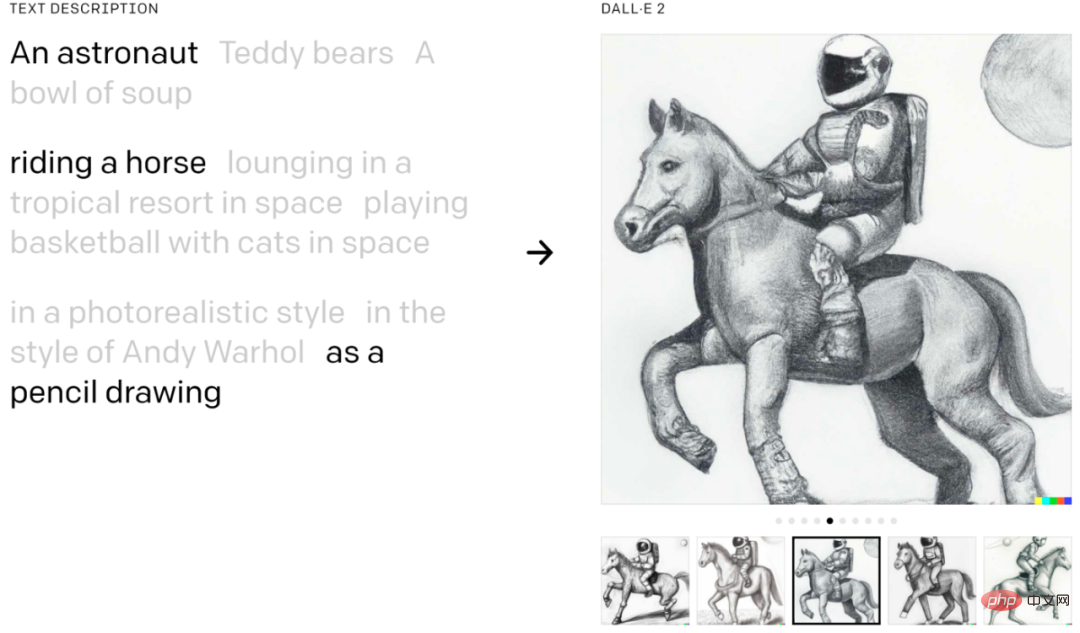

DALL·E 2 can combine concepts, attributes, and styles:

Examples from the original article.

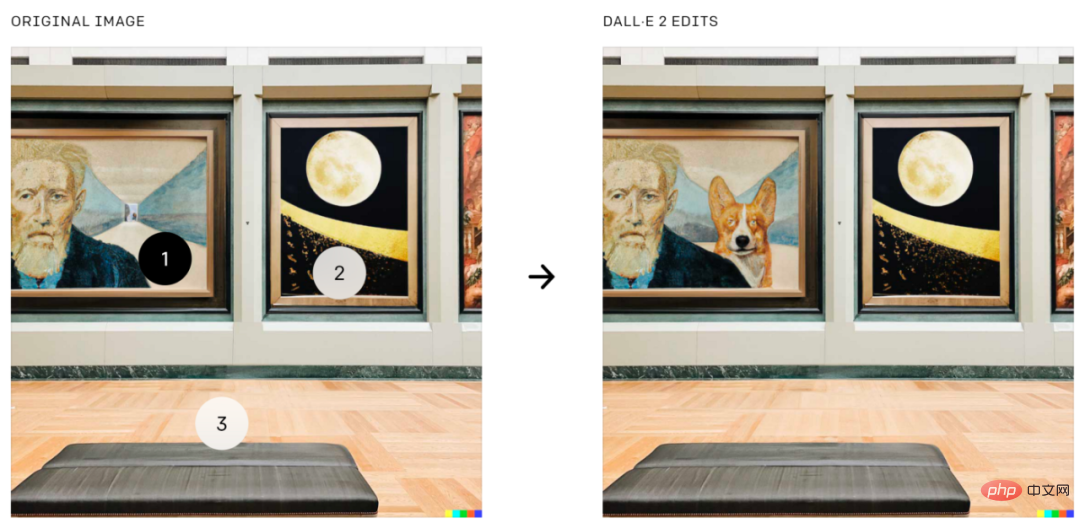

DALL·E 2 can also edit images based on text guidance, a capability inherited from GLIDE. It can add and remove elements while taking shadows, reflections, and textures into account:

Adding a corgi at a specific location in an image. From the original paper.

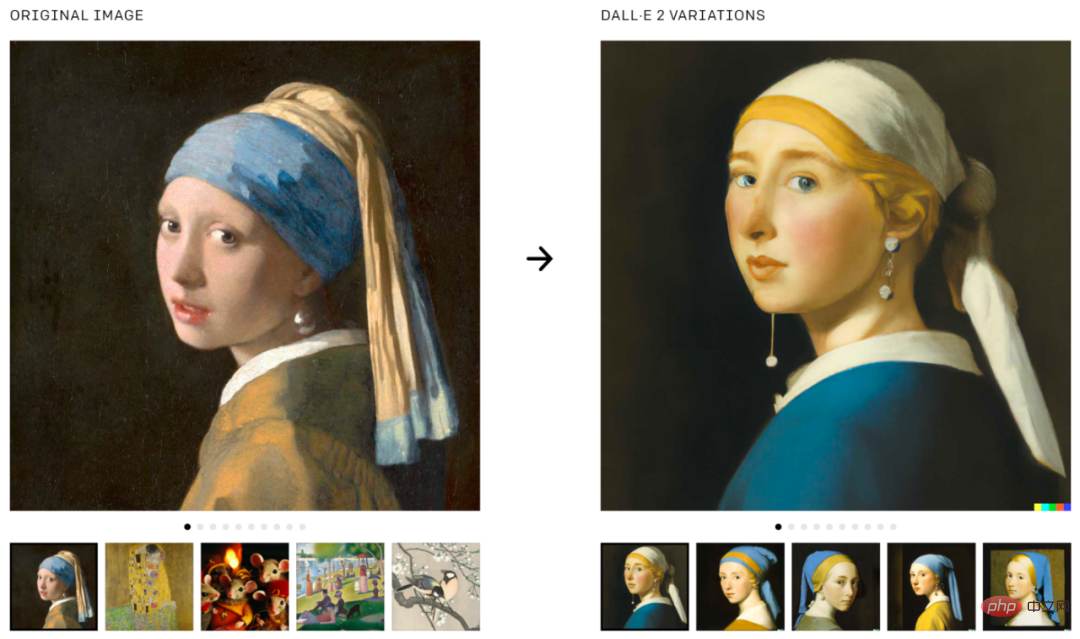

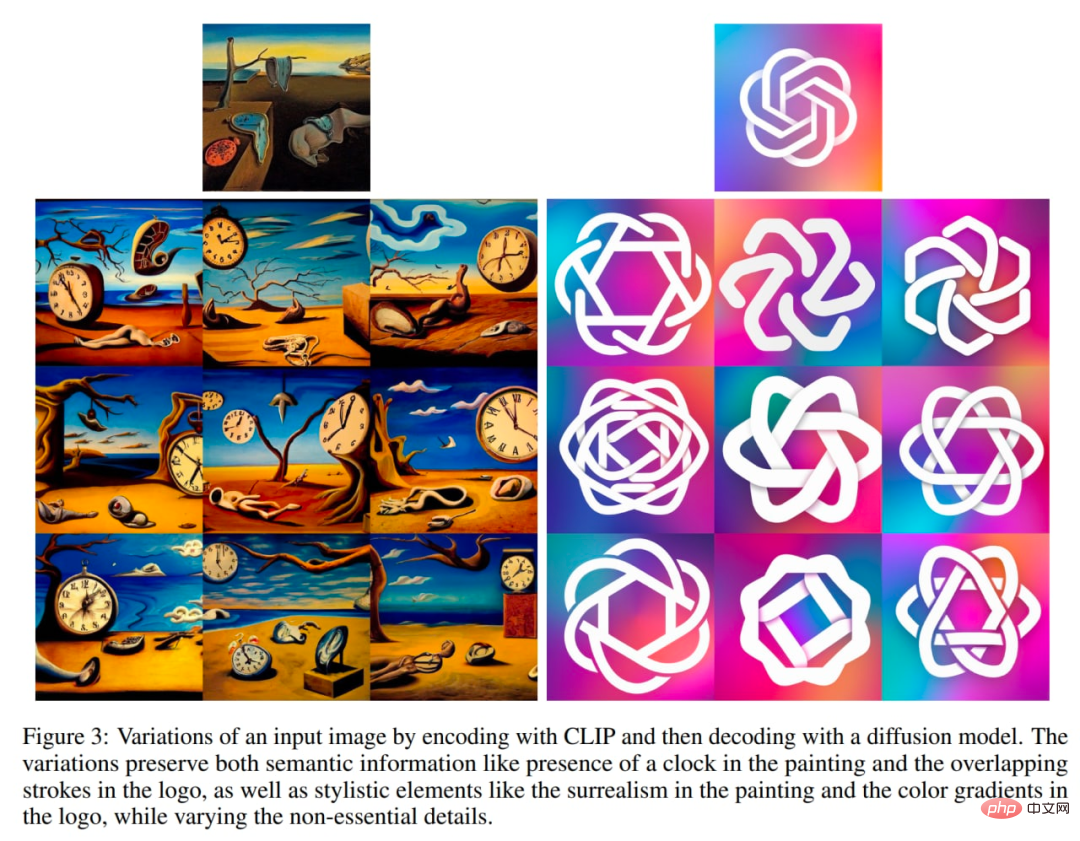

DALL·E 2 can also be used to generate variations of the original image:

Generating variations of an image. Images from the original article.

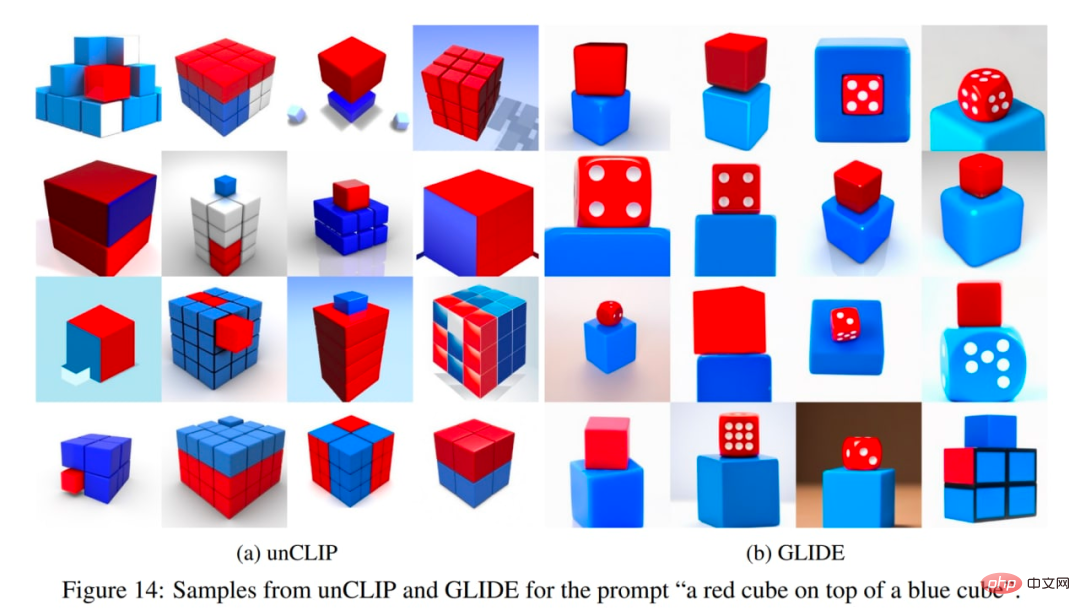

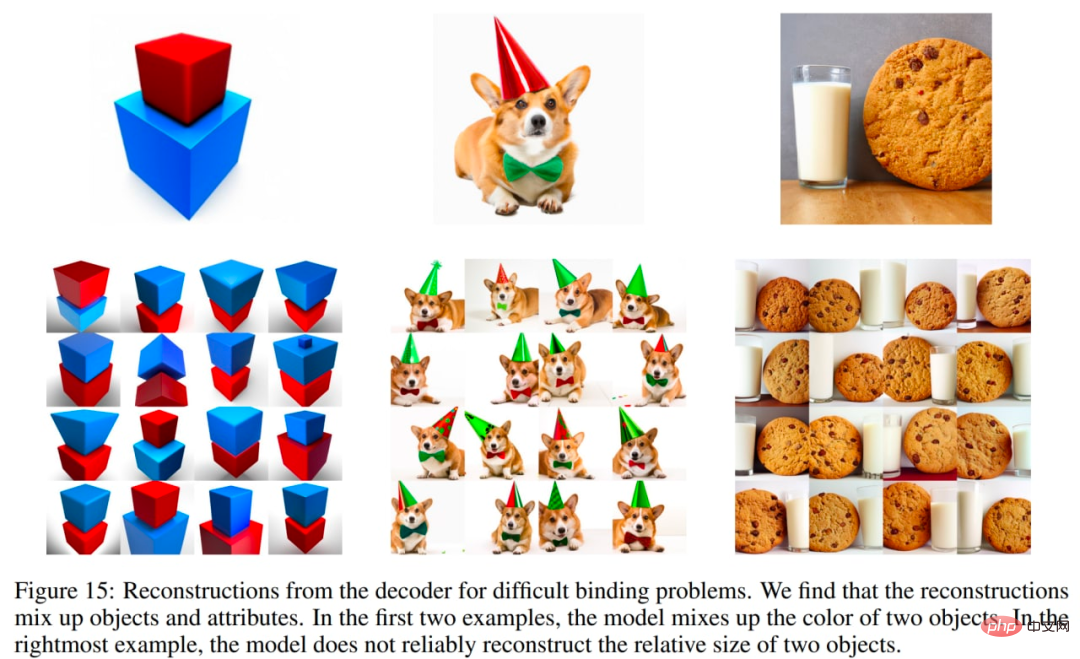

DALL·E 2 also has some problems. In particular, unCLIP is worse than GLIDE at binding attributes to objects. For example, unCLIP struggles more than GLIDE with prompts that require binding two separate objects (cubes) to two separate attributes (colors):

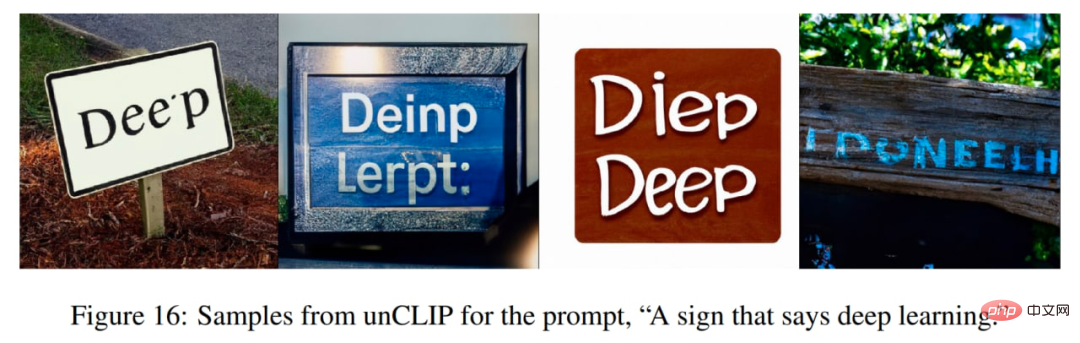

unCLIP also has some difficulties in generating coherent text:

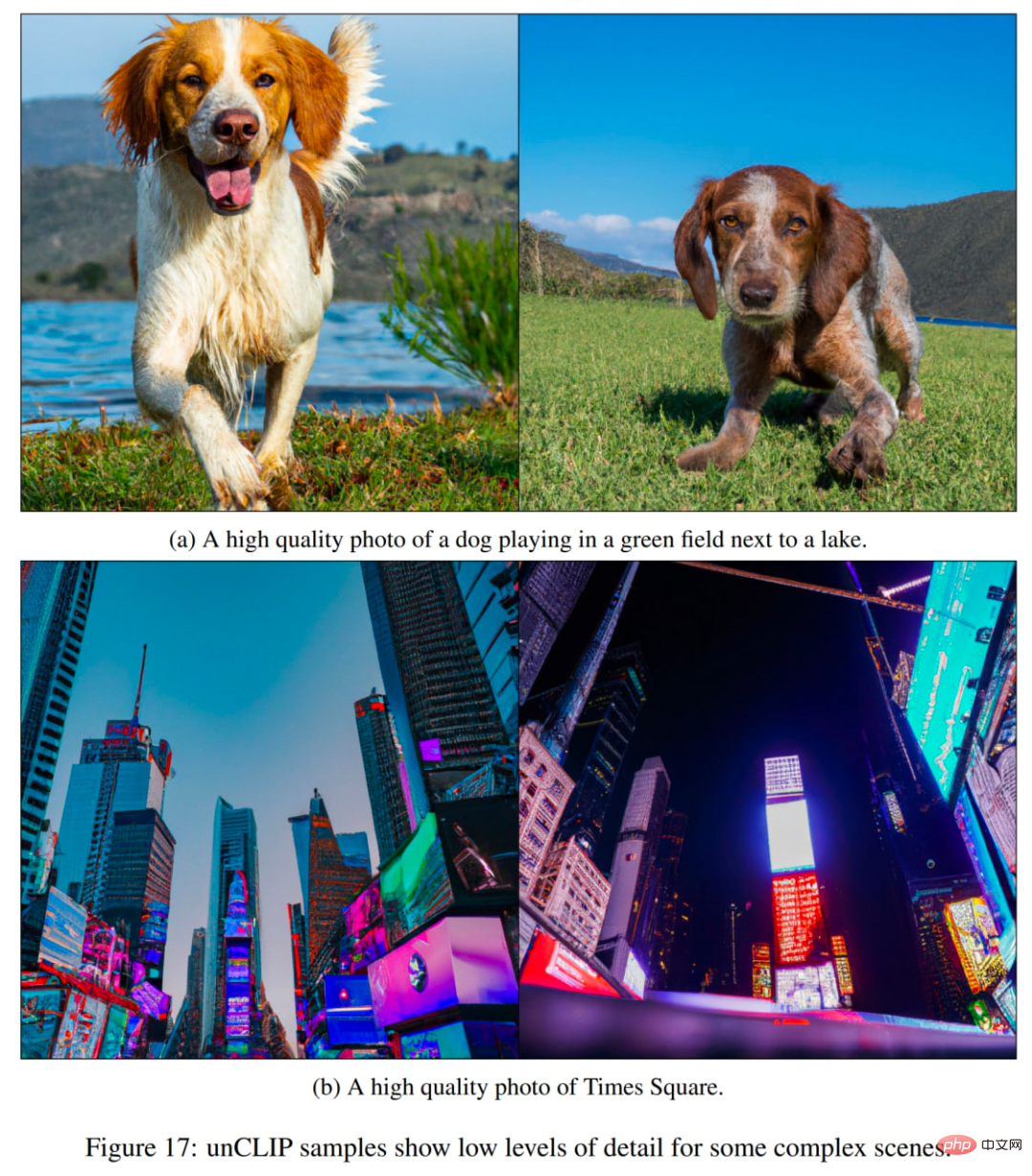

Another problem is that unCLIP struggles to generate details in complex scenes:

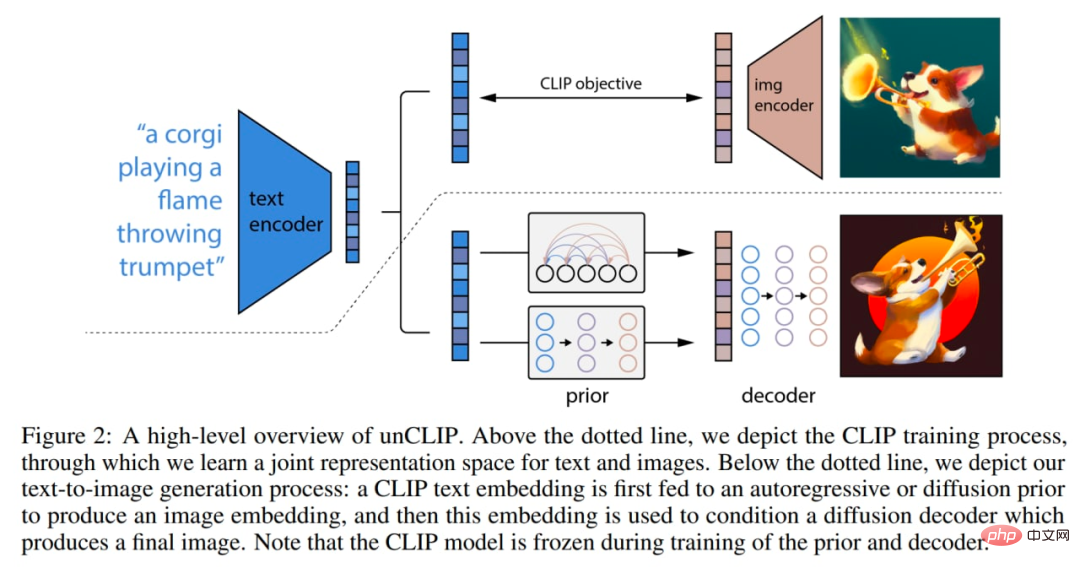

Some changes have taken place inside the model. The system combines CLIP and GLIDE. The paper calls the model itself (the full text-conditional image generation stack) unCLIP, because it generates images by inverting the CLIP image encoder.

Here’s how the model works. The CLIP model is trained separately. The CLIP text encoder generates an embedding for the input text (caption). A special prior model then generates an image embedding from the text embedding. Finally, a diffusion decoder generates an image from the image embedding; the decoder essentially inverts the image embedding back into an image.

High-level overview of the system. Some details (such as the decoder's text conditioning) are not shown. Image from the original paper.

The CLIP model uses a ViT-H/16 image encoder that takes 256×256 images and has a width of 1280 and 32 Transformer blocks. This is deeper than the largest ViT-L from the original CLIP work. The text encoder is a Transformer with a causal attention mask, width 1024, and 24 Transformer blocks (the original CLIP model has 12). It is unclear whether the attention span of the text transformer is the same as in the original CLIP model (76 tokens).

The diffusion decoder is a modified GLIDE with 3.5B parameters. CLIP image embeddings are projected and added to the existing timestep embedding. The CLIP embedding is also projected into four extra context tokens, which are concatenated to the output sequence of the GLIDE text encoder. The original GLIDE text-conditioning path is retained, because it could let the diffusion model learn aspects of natural language that CLIP fails to capture. In practice, however, it does not help much. During training, the CLIP embeddings are randomly set to zero 10% of the time, and the text caption is randomly dropped 50% of the time.

The decoder generates a 64×64-pixel image. Two upsampling diffusion models then produce 256×256 and 1024×1024 images; the former has 700M parameters and the latter 300M. To make upsampling more robust, the conditioning images are slightly corrupted during training. The first upsampling stage uses Gaussian blur. The second uses a more diverse BSR degradation, including JPEG compression artifacts, camera sensor noise, bilinear and bicubic interpolation, and Gaussian noise. The models are trained on random crops of images that are one quarter of the target size. Text conditioning is not used for the upsampling models.

The prior generates image embeddings from text descriptions. The authors explore two classes of prior model: autoregressive (AR) priors and diffusion priors. Both have 1B parameters.

In the AR prior, the CLIP image embedding is converted into a sequence of discrete codes that are predicted autoregressively, conditioned on the caption. In the diffusion prior, the continuous embedding vector is modeled directly using a Gaussian diffusion model conditioned on the caption.

In addition to the caption, the prior can also be conditioned on the CLIP text embedding, since that embedding is a deterministic function of the caption. To improve sample quality, the authors also enabled classifier-free guidance for both AR and diffusion priors. To do so, they randomly dropped this text conditioning information 10% of the time during training.

For the AR prior, principal component analysis (PCA) reduces the dimensionality of the CLIP image embeddings: 319 of the 1024 principal components retain more than 99% of the information. Each dimension is quantized into 1024 buckets. The authors condition the AR prior by encoding the text caption and CLIP text embedding as a prefix to the sequence. They also add a token representing the (quantized) dot product between the text embedding and the image embedding. This allows the model to be conditioned on a higher dot product, since higher text-image dot products correspond to captions that better describe the image. At sampling time, the dot product is sampled from the upper half of the distribution. The resulting sequence is predicted by a Transformer model with a causal attention mask.
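The per-dimension quantization can be sketched as uniform bucketing. The value range [-1, 1] and the helper names are assumptions for illustration, since the text does not specify them. The PCA projection step is not shown.

```python
def quantize(values, num_buckets=1024, low=-1.0, high=1.0):
    """Map each real value to a bucket index in [0, num_buckets).

    The [low, high] range is an assumption; values outside it are clipped.
    """
    width = (high - low) / num_buckets
    codes = []
    for v in values:
        v = min(max(v, low), high)
        codes.append(min(int((v - low) / width), num_buckets - 1))
    return codes


def dequantize(codes, num_buckets=1024, low=-1.0, high=1.0):
    """Return the center of each bucket."""
    width = (high - low) / num_buckets
    return [low + (c + 0.5) * width for c in codes]


# In the AR prior, each retained PCA coefficient becomes one discrete token;
# here we quantize a toy 4-dimensional vector instead.
coeffs = [-1.2, -0.5, 0.0, 0.9999]
codes = quantize(coeffs)
print(codes, dequantize(codes))
```

Within the range, each reconstructed value is off by at most half a bucket width.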

For the diffusion prior, a decoder-only Transformer with a causal attention mask is trained on a sequence consisting of:

- Encoded text

- CLIP text embedding

- Diffusion timestep embedding

- Noised CLIP image embedding

- A final embedding whose Transformer output is used to predict the unnoised CLIP image embedding
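The ordering of that sequence can be written down directly. In this sketch, all inputs are plain vectors of the Transformer's width supplied by the caller. In the real model they come from the text encoder, CLIP, a timestep embedding, and the noising process, and the final query is presumably a learned embedding. The function names are hypothetical.

```python
def build_prior_sequence(text_token_embs, clip_text_emb, timestep_emb,
                         noised_image_emb, final_query):
    """Concatenate the diffusion prior's inputs into one token sequence, in list order."""
    seq = [*text_token_embs, clip_text_emb, timestep_emb, noised_image_emb, final_query]
    width = len(final_query)
    if any(len(v) != width for v in seq):
        raise ValueError("every element must have the Transformer's width")
    return seq


def readout_index(seq):
    """Position whose Transformer output predicts the unnoised CLIP image embedding."""
    return len(seq) - 1


d = 4
text_tokens = [[0.1] * d for _ in range(3)]  # K = 3 encoded text tokens
seq = build_prior_sequence(text_tokens, [0.2] * d, [0.3] * d, [0.4] * d, [0.0] * d)
print(len(seq), readout_index(seq))
```

Because of the causal mask, the last position is the only one that can see every other input, which is why its output is read out.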

The dot product is not used to condition the diffusion prior. Instead, to improve sample quality at sampling time, two image embeddings are sampled and the one with the higher dot product with the text embedding is selected.
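That sampling-time trick is a tiny best-of-n selection. The sketch below assumes a `sample_fn` that returns one candidate image embedding per call. `toy_prior_sample` is a hypothetical stand-in for the diffusion prior.

```python
import random


def dot(a, b):
    """Plain dot product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def sample_with_reranking(sample_fn, text_emb, num_candidates=2):
    """Draw num_candidates image embeddings and keep the one most aligned with text_emb."""
    candidates = [sample_fn() for _ in range(num_candidates)]
    return max(candidates, key=lambda z: dot(z, text_emb))


rng = random.Random(7)
text_emb = [0.6, 0.8]


def toy_prior_sample():
    """Hypothetical stand-in for one diffusion-prior sample."""
    return [rng.gauss(0.6, 0.3), rng.gauss(0.8, 0.3)]


best = sample_with_reranking(toy_prior_sample, text_emb)
print(best)
```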

The diffusion prior outperforms the AR prior at comparable model size and with less training compute. The diffusion prior also performed better than the AR prior in pairwise comparisons against GLIDE.

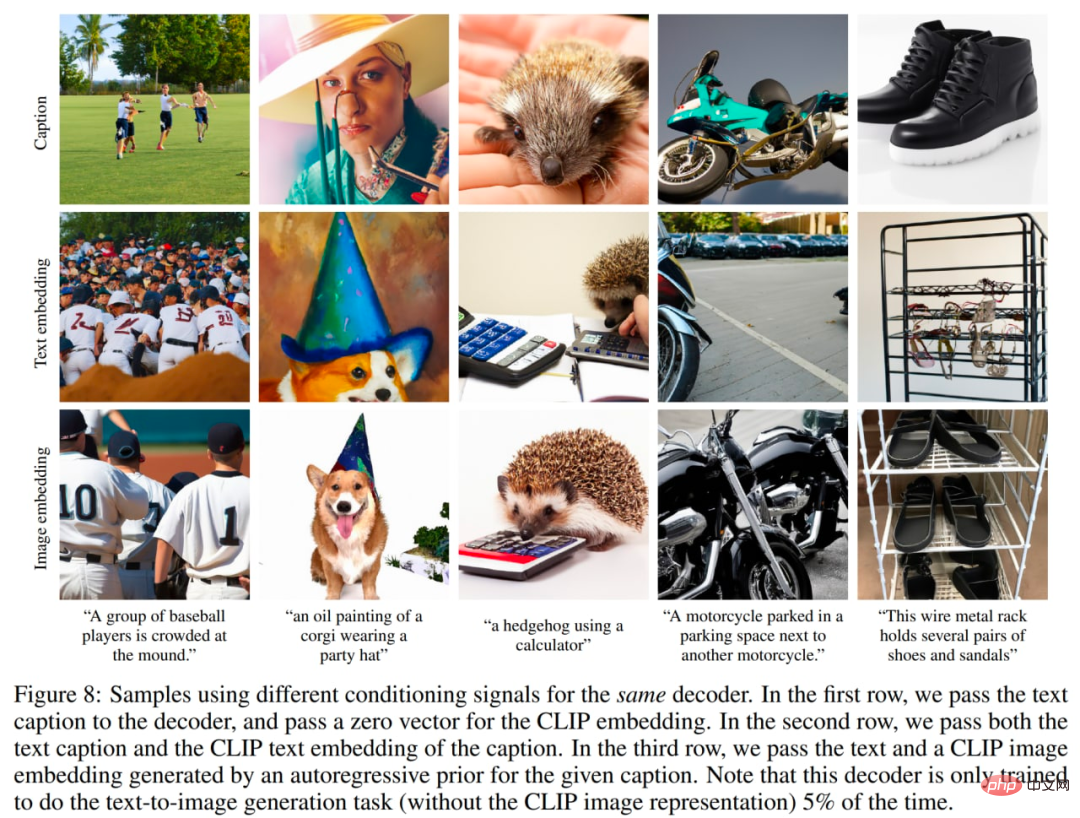

The authors also investigate the importance of the prior. They condition the same decoder on three different signals:

1. the text caption and a zero CLIP embedding;
2. the text caption and the CLIP text embedding (as if it were an image embedding);
3. the text caption and a CLIP image embedding generated by the prior.

Conditioning the decoder on the caption alone is clearly the worst, but conditioning on text embeddings zero-shot does produce reasonable results.

Results with different conditioning signals. Image from the original article.

When training the encoders, the authors sampled from the CLIP and DALL-E datasets (about 650M images in total) with equal probability. When training the decoder, upsamplers, and prior, they used only the DALL-E dataset (about 250M images). In initial evaluations, incorporating the noisier CLIP dataset when training the generative stack hurt sample quality.

The total size of the model appears to be 632M? parameters (CLIP ViT-H/16 image encoder) + 340M? (CLIP text encoder) + 1B (diffusion prior) + 3.5B (diffusion decoder) + 1B (two diffusion upsamplers) ≈ 6.5B parameters (if I remember correctly). This method generates images from text descriptions, but it also enables some other interesting applications.
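The sum itself is easy to check, using the component sizes listed above (the first two remain uncertain estimates):

```python
# Component sizes as estimated in the text; the CLIP encoder sizes are uncertain.
components = {
    "CLIP ViT-H/16 image encoder": 632e6,
    "CLIP text encoder": 340e6,
    "diffusion prior": 1.0e9,
    "diffusion decoder": 3.5e9,
    "upsamplers (700M + 300M)": 700e6 + 300e6,
}
total = sum(components.values())
print(f"total ~ {total / 1e9:.2f}B parameters")
```

The result, about 6.47B, rounds to the 6.5B quoted above.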

Example from the original paper.

Each image x can be encoded into a bipartite latent representation (z_i, x_T), which is sufficient for the decoder to produce an accurate reconstruction. The latent z_i is a CLIP image embedding that describes the aspects of the image recognized by CLIP. The latent x_T is obtained by applying DDIM (Denoising Diffusion Implicit Model) inversion to x using the decoder, conditioned on z_i. In other words, it is the starting noise of the diffusion process that generates image x (equivalently x_0; see the denoising diffusion model scheme in the GLIDE section).

This bipartite representation enables three interesting operations.

First, you can create variations of an image from its bipartite latent representation (z_i, x_T) by sampling with DDIM with η > 0 in the decoder. With η = 0, the decoder becomes deterministic and reconstructs the given image x. The larger η is, the larger the variations. This shows which information is captured in the CLIP image embedding and is therefore present in all samples.
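The role of η comes from DDIM's update rule, where η scales the standard deviation of the fresh noise added at each step. The sketch below follows the DDIM update for a single step, given some model's noise prediction. The helper names are ours, and the cumulative products ᾱ are assumed to come from the decoder's noise schedule.

```python
import math
import random


def ddim_sigma(alpha_bar_t, alpha_bar_prev, eta):
    """Standard deviation of the fresh noise added in one DDIM step.

    eta = 0 gives a deterministic sampler; eta = 1 matches DDPM-style noise.
    """
    return (eta
            * math.sqrt((1 - alpha_bar_prev) / (1 - alpha_bar_t))
            * math.sqrt(1 - alpha_bar_t / alpha_bar_prev))


def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev, eta, rng):
    """One DDIM update x_t -> x_{t-1} given the model's noise prediction eps_pred."""
    sigma = ddim_sigma(alpha_bar_t, alpha_bar_prev, eta)
    out = []
    for x, e in zip(x_t, eps_pred):
        # Estimate of the clean image implied by the current noise prediction.
        x0_pred = (x - math.sqrt(1 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
        # Deterministic term pointing along the predicted noise direction.
        direction = math.sqrt(1 - alpha_bar_prev - sigma ** 2) * e
        out.append(math.sqrt(alpha_bar_prev) * x0_pred + direction
                   + sigma * rng.gauss(0.0, 1.0))
    return out


x_t, eps = [0.3, -0.2], [0.1, 0.5]
for eta in (0.0, 1.0):
    a = ddim_step(x_t, eps, 0.5, 0.6, eta, random.Random(0))
    b = ddim_step(x_t, eps, 0.5, 0.6, eta, random.Random(1))
    print(f"eta={eta}: same result with different noise seeds? {a == b}")
```

With η = 0 the step ignores the random draw entirely, which is why decoding a fixed (z_i, x_T) reproduces the same image.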

Exploring variations of an image.

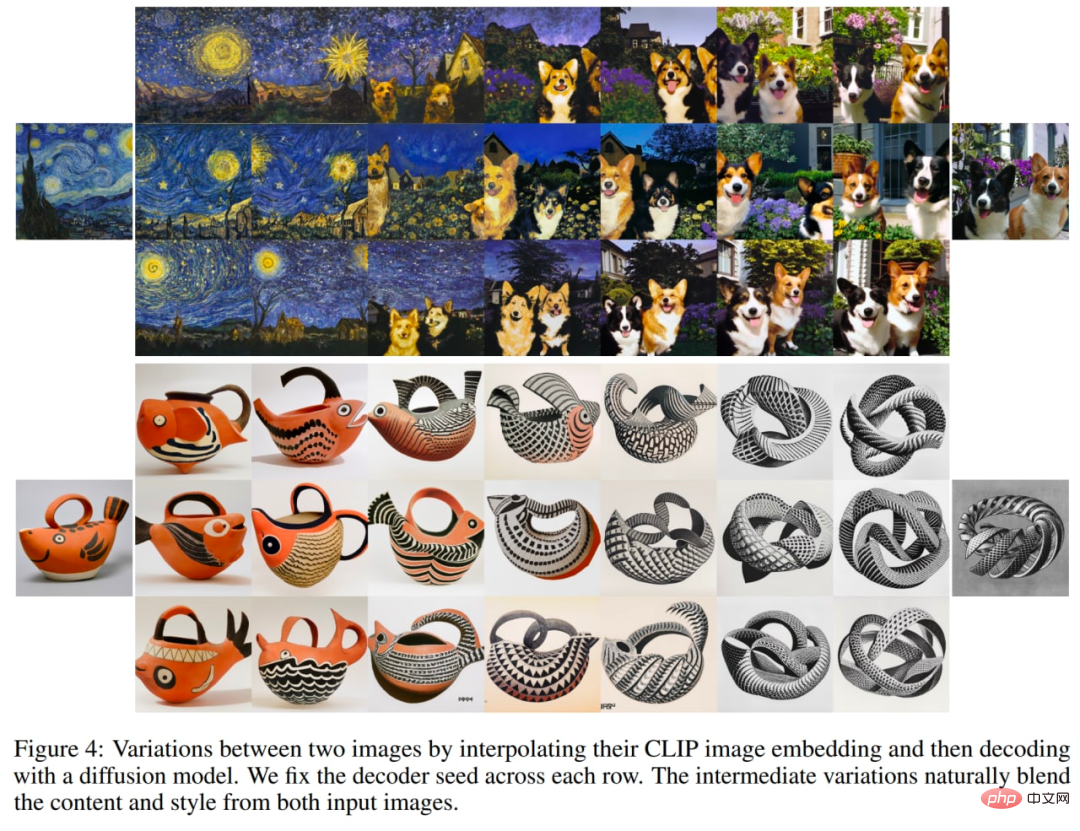

Second, you can interpolate between images x1 and x2. To do this, take their CLIP image embeddings z_i1 and z_i2 and apply slerp (spherical linear interpolation) to obtain intermediate CLIP image representations. There are two options for the corresponding intermediate DDIM latent x_Ti:

1) interpolate between x_T1 and x_T2 with slerp;
2) fix the DDIM latent to a randomly sampled value for all interpolations in the trajectory, which allows an unlimited number of trajectories to be generated.

The following images were generated using the second option.
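Slerp itself is a short formula: it moves along the great circle between two vectors instead of along the straight chord. Here is a minimal implementation, with toy vectors standing in for the CLIP image embeddings.

```python
import math


def slerp(v0, v1, t):
    """Spherical linear interpolation between v0 and v1 for t in [0, 1].

    Nearly parallel vectors fall back to linear interpolation; exactly
    opposite vectors (omega = pi) are not handled.
    """
    n0 = math.sqrt(sum(x * x for x in v0))
    n1 = math.sqrt(sum(x * x for x in v1))
    cos_omega = sum(a * b for a, b in zip(v0, v1)) / (n0 * n1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # angle between the vectors
    if omega < 1e-7:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


# Toy unit-length stand-ins for the CLIP image embeddings z_i1 and z_i2.
z_i1 = [1.0, 0.0, 0.0]
z_i2 = [0.0, 0.6, 0.8]
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    z = slerp(z_i1, z_i2, t)
    print(t, [round(x, 3) for x in z], round(math.sqrt(sum(x * x for x in z)), 6))
```

Unlike linear interpolation, the intermediate points keep unit length, which suits normalized CLIP embeddings.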

Exploring interpolations between two images.

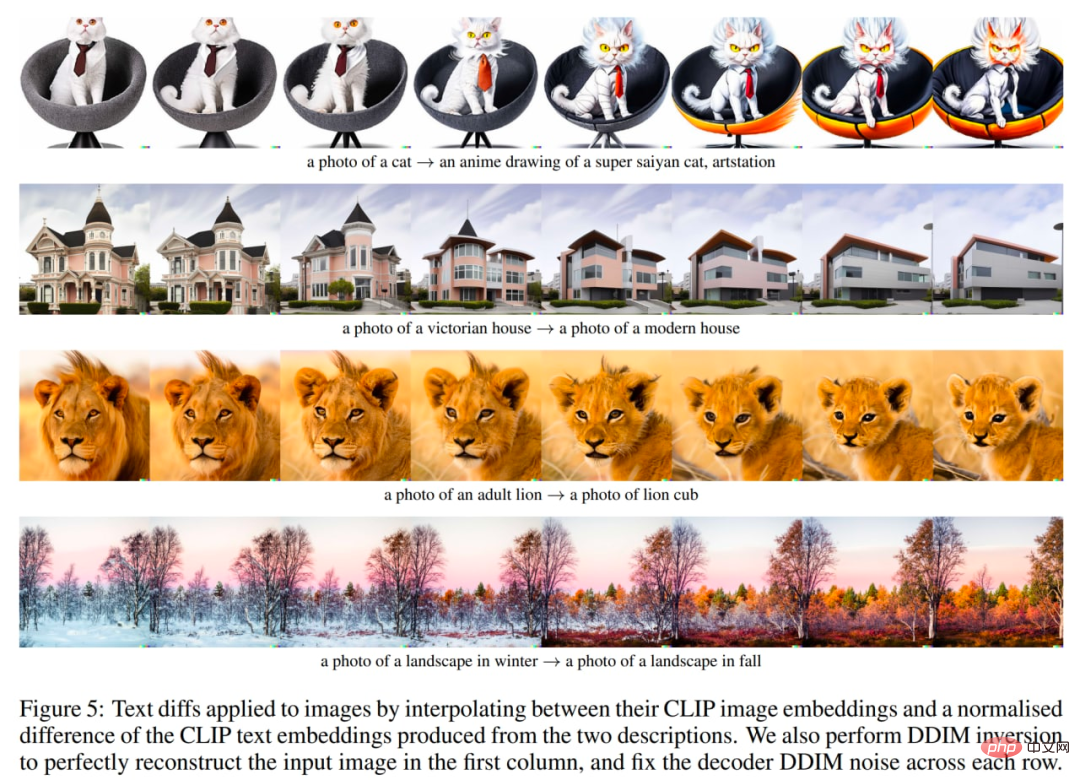

Finally, the third operation is language-guided image manipulation, or text diffs. Suppose you want to modify an image to reflect a new text description y. First, obtain the CLIP text embedding z_t of y. Also obtain the CLIP text embedding z_t0 of a caption describing the current image; this can be a dummy caption like "a photo" or an empty caption. Then compute the text diff vector z_d = norm(z_t - z_t0). Slerp is then used to rotate between the image CLIP embedding z_i and the text diff vector z_d. Images are generated with a fixed base DDIM noise x_T throughout the trajectory.
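The text-diff step combines a normalized difference vector with the same slerp operation. In this sketch the embeddings are toy vectors, and `text_diff_trajectory` is a hypothetical helper. Decoding each point of the trajectory with a fixed x_T is not shown.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def slerp(v0, v1, t):
    """Spherical linear interpolation between v0 and v1 for t in [0, 1]."""
    cos_omega = sum(a * b for a, b in zip(normalize(v0), normalize(v1)))
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if omega < 1e-7:  # nearly parallel: fall back to linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


def text_diff_trajectory(z_i, z_t, z_t0, thetas):
    """Rotate the image embedding z_i toward z_d = norm(z_t - z_t0) for each theta."""
    z_d = normalize([a - b for a, b in zip(z_t, z_t0)])
    return [slerp(z_i, z_d, theta) for theta in thetas]


z_i = [1.0, 0.0, 0.0]   # toy CLIP image embedding (unit length)
z_t = [0.0, 1.0, 0.0]   # toy embedding of the new caption
z_t0 = [0.5, 0.0, 0.0]  # toy embedding of a caption for the current image
thetas = (0.0, 0.25, 0.5)
for theta, z in zip(thetas, text_diff_trajectory(z_i, z_t, z_t0, thetas)):
    print(theta, [round(x, 3) for x in z])
```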

Exploring text diffs. From the original article.

The authors also ran a series of experiments to explore the CLIP latent space. Previous research has shown that CLIP is vulnerable to typographic attacks. In these attacks, a piece of text is overlaid on an object. This causes CLIP to predict the object the text describes rather than the object shown in the image (remember the apple with the "iPod" label?). The authors generated variations of such images. The image itself is very unlikely to be classified correctly, yet the generated variations are correct with high probability. The model never generates a picture of an iPod, even though the relative predicted probability of that caption is very high.

Typographic attack examples.

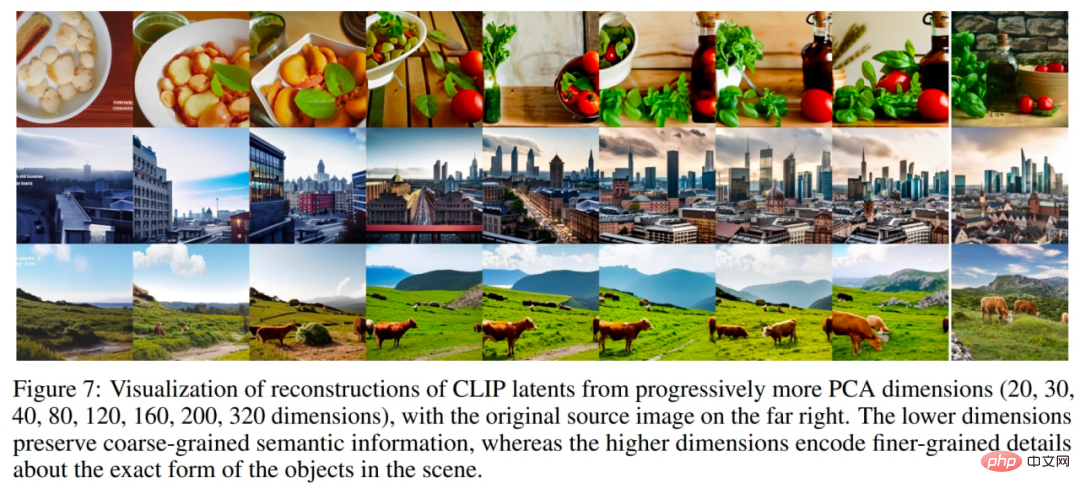

Another interesting experiment reconstructs images from an increasing number of principal components. The authors took the CLIP image embeddings of a few source images and reconstructed them with progressively more PCA dimensions. They then visualized the reconstructed embeddings with the decoder using DDIM. This shows what semantic information is encoded in different dimensions.

Reconstructions with increasing numbers of principal components. From the original paper.

Recall the difficulties unCLIP has with attribute binding, text generation, and details in complex scenes.

The first two issues may be due to properties of the CLIP embedding.

Attribute binding issues may occur because the CLIP embedding itself does not explicitly bind attributes to objects, so the decoder mixes up attributes and objects when generating images.

Another set of reconstructions for difficult binding problems. From the original article.

Text generation issues may be caused by the CLIP embedding not accurately encoding the spelling information of the rendered text.

The lack of detail may arise because the decoder hierarchy generates images at a base resolution of 64×64 and then upsamples them. A higher base resolution might make the problem go away, at the cost of extra training and inference compute.

We’ve seen the evolution of OpenAI’s text-to-image generation models. Other companies are working in this area as well.

DALL·E 2 (or unCLIP) is a vast improvement over the first version of the system, DALL·E 1, which was released just one year earlier. However, there is still a lot of room for improvement.

Unfortunately, these powerful and interesting models have not been open-sourced. The author would like to see more such models published, or at least made available via an API. Otherwise, all these results will remain accessible only to a very limited audience.

It is undeniable that such models can make errors, sometimes produce inappropriate content, or be used by malicious actors. The author calls for a discussion of how to deal with these issues. There are countless potentially good uses for these models, but failing to address these issues has held back their exploration.

The author hopes that DALL·E 2 (or other similar models) will soon be made available to everyone through an open API.


Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

Earlier this month, researchers from MIT and other institutions proposed a very promising alternative to MLP - KAN. KAN outperforms MLP in terms of accuracy and interpretability. And it can outperform MLP running with a larger number of parameters with a very small number of parameters. For example, the authors stated that they used KAN to reproduce DeepMind's results with a smaller network and a higher degree of automation. Specifically, DeepMind's MLP has about 300,000 parameters, while KAN only has about 200 parameters. KAN has a strong mathematical foundation like MLP. MLP is based on the universal approximation theorem, while KAN is based on the Kolmogorov-Arnold representation theorem. As shown in the figure below, KAN has

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

The latest video of Tesla's robot Optimus is released, and it can already work in the factory. At normal speed, it sorts batteries (Tesla's 4680 batteries) like this: The official also released what it looks like at 20x speed - on a small "workstation", picking and picking and picking: This time it is released One of the highlights of the video is that Optimus completes this work in the factory, completely autonomously, without human intervention throughout the process. And from the perspective of Optimus, it can also pick up and place the crooked battery, focusing on automatic error correction: Regarding Optimus's hand, NVIDIA scientist Jim Fan gave a high evaluation: Optimus's hand is the world's five-fingered robot. One of the most dexterous. Its hands are not only tactile

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative