Michael Bronstein draws from algebraic topology and proposes a new graph neural network computing structure!

This article was co-authored with Cristian Bodnar and Fabrizio Frasca. It is based on two papers by C. Bodnar, F. Frasca et al.: "Weisfeiler and Lehman Go Topological: Message Passing Simplicial Networks" (ICML 2021) and "Weisfeiler and Lehman Go Cellular: CW Networks" (NeurIPS 2021).

This article is part of a series discussing graph neural networks from the perspective of differential geometry and algebraic topology.

Graphs can be used to model anything from computer networks to particle interactions in the Large Hadron Collider. Graphs are ubiquitous because of their discrete and combinatorial nature, which allows them to express abstract relationships while being easy to compute. One of the reasons for their popularity is that graphs abstract away geometry, i.e. where nodes are in space or how edges are curved, leaving only a representation of how nodes are connected.

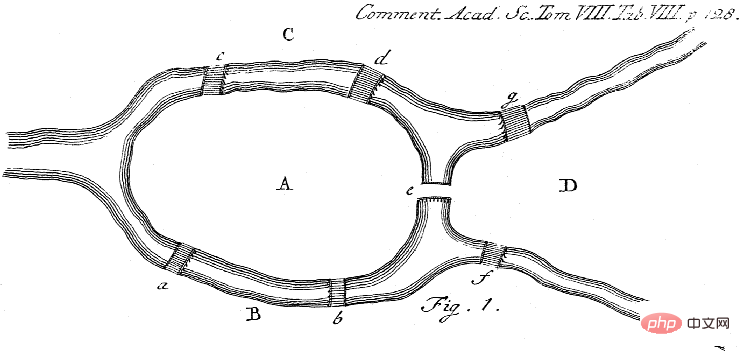

Graph theory originated with Leonhard Euler's observations in his 1741 work on "Geometria situs", where he showed that the famous Seven Bridges of Königsberg problem has no solution.

Note: The Seven Bridges problem asks for a cyclic walk through the city of Königsberg that crosses each bridge exactly once. As Euler observed, the exact shape of the city is not important; what matters is how the different land masses (the nodes of the graph) are connected to each other (the edges). Euler showed that such a cycle exists if and only if all nodes have even degree. Incidentally, only five of the original bridges survive into modern times. Source: Wikipedia
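Euler's even-degree criterion is easy to check by hand, but a short sketch makes it concrete. The code below is an illustration only: the labels `A` to `D` for the four land masses and the helper names are assumptions introduced here, and the criterion as written presumes the graph is connected.

```python
from collections import Counter

# The seven bridges as edges of a multigraph on four land masses.
# The labels are illustrative; "A" stands for the central island.
BRIDGES = [
    ("A", "B"), ("A", "B"),
    ("A", "C"), ("A", "C"),
    ("A", "D"), ("B", "D"), ("C", "D"),
]

def degrees(edges):
    """Count how many bridge endpoints touch each land mass."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return dict(deg)

def has_euler_cycle(edges):
    """Euler's even-degree criterion (assumes the graph is connected)."""
    return all(d % 2 == 0 for d in degrees(edges).values())

print(degrees(BRIDGES))          # {'A': 5, 'B': 3, 'C': 3, 'D': 3}
print(has_euler_cycle(BRIDGES))  # False
```

Every land mass has odd degree, so no such cyclic walk exists, and the check never looks at coordinates or distances, only at connectivity.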

Interestingly, Euler's discovery not only marked the beginning of graph theory but is also often regarded as the birth of topology. As with graphs, topologists are interested in those properties of a space that are independent of its specific shape or geometry.

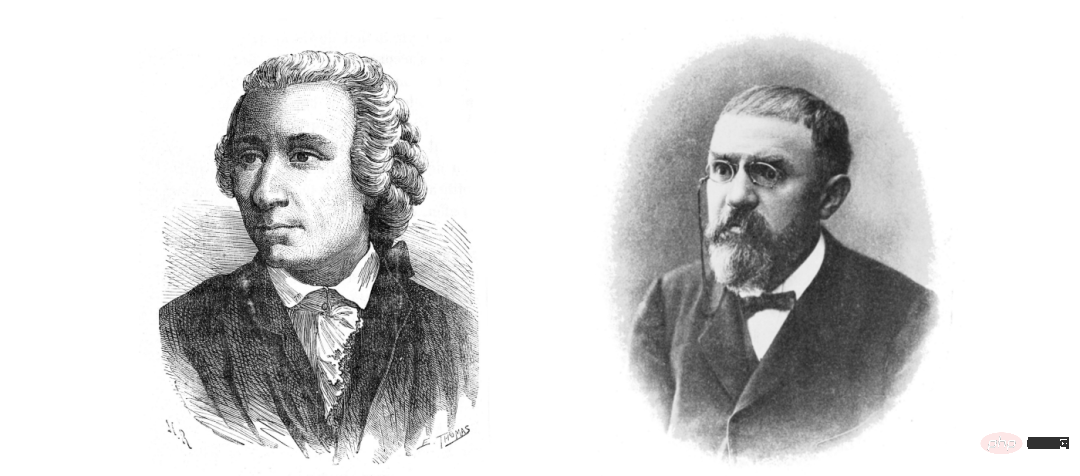

The modern expression of these ideas appeared in 1895 with "Analysis situs", a seminal paper by Henri Poincaré, whose work ignited interest in combinatorial descriptions of manifolds, from which topological invariants can be more easily found and computed.

Caption: Leonhard Euler (1707-1783) and Henri Poincaré (1854-1912)

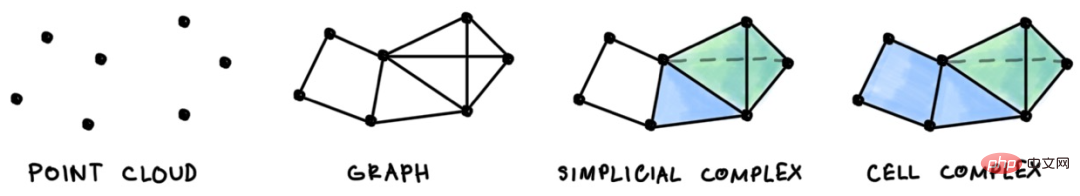

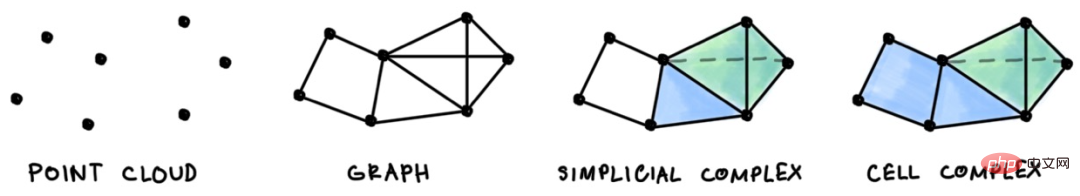

These combinatorial descriptions are known today as cell complexes and can be thought of as higher-dimensional generalizations of graphs.

Unlike graphs, which are formed by nodes and edges, cell complexes can also contain higher-dimensional structures or "cells": vertices are 0-cells, edges are 1-cells, 2D surfaces are 2-cells, and so on. To build a cell complex, we proceed hierarchically, gluing the boundary of each cell to other lower-dimensional cells.

In the special case where the cells are simplices (such as edges, triangles, tetrahedra, etc.), these spaces are called simplicial complexes.

Note: A graph can be viewed as a set of vertices to which we attach edges (1-cells). Similarly, simplicial complexes and cell complexes can be viewed as graphs to which we attach 2-cells (shown in blue), 3-cells (shown in green), etc.

1 Topology in Machine Learning and Data Science

We like to think that one did not have to wait 400 years for topology to become a practical tool.

Under the umbrella of topological data analysis (TDA), topological structures such as simplicial complexes have been used in machine learning and data science. This class of methods, which emerged in the 1990s, attempts to analyze the "shape of the data" in a way that is insensitive to the metric and robust to noise.

The roots of TDA can be traced back to the late 1920s and the work of Leopold Vietoris, one of the most prolific topologists. However, these techniques had to wait until the advent of modern computing before they could be applied at scale.

Legend: Given a point cloud, the intersections between closed balls of fixed radius around each point yield a simplicial complex. By gradually increasing the radius of the balls, we obtain a nested sequence of simplicial complexes. Image source: Bastian Rieck.

The workhorse of TDA is persistent homology (PH), a method for extracting topological features from point clouds. Given a dataset of points, PH creates a nested sequence of simplicial complexes, where each complex corresponds to a certain scale at which the underlying point cloud is analyzed. It then tracks the various topological features (e.g., connected components, loops, or voids) that appear and disappear as the scale gradually increases and one moves from one complex in the sequence to the next.
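To give a feel for "features that appear and disappear as the scale increases", here is a deliberately reduced sketch that tracks only the simplest feature, connected components. It is not a persistent homology implementation: it joins two points whenever their distance is at most `scale` (a pairwise-distance rule assumed here for simplicity), ignores loops and voids, and the point cloud and function name are made up for illustration.

```python
from itertools import combinations
from math import dist

def component_count(points, scale):
    """Number of connected components when points within `scale` are joined."""
    parent = list(range(len(points)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, j in combinations(range(len(points)), 2):
        if dist(points[i], points[j]) <= scale:
            parent[find(i)] = find(j)
    return len({find(i) for i in range(len(points))})

# Two well-separated clusters (hypothetical data).
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
for scale in (0.5, 1.0, 8.0):
    print(scale, component_count(points, scale))
# 0.5 5
# 1.0 2
# 8.0 1
```

The two clusters survive as separate components over a wide range of scales, which is the kind of "persistent" feature PH is designed to pick up; short-lived features are usually attributed to noise.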

In the era of deep learning, persistent homology has found a "second life": it has been shown that one can backpropagate through it, which allows the well-established TDA machinery to be integrated into deep learning frameworks.

A recent body of work proposes different uses of simplicial and cell complexes in geometric deep learning, as a richer underlying topological space to support the data and the computations performed on it.

The first few works to exploit this idea proposed convolutional models and random walk methods operating on simplicial complexes. As we argue in our papers, these convolutional models can be understood as simple, concrete instances of message passing on simplicial and cell complexes.

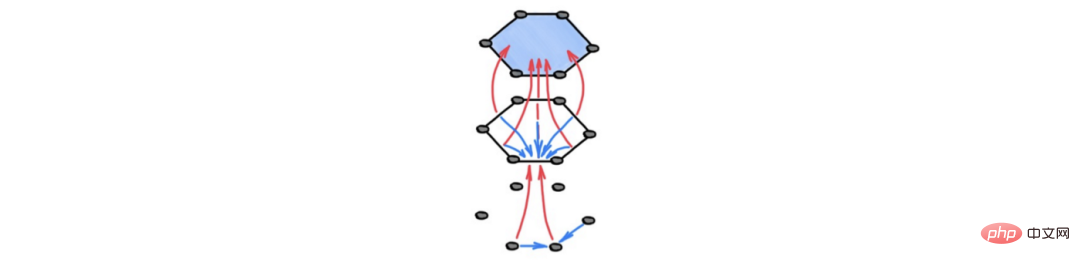

Since the computation is driven by the topological structure of these spaces (i.e., their neighborhood structure), we call this approach topological message passing. In this framework, adjacent cells, possibly of different dimensions, exchange messages, as shown in the figure below.

Note: Schematic diagram of topological message passing. The blue arrows depict "horizontal" information propagation between upper-adjacent cells, i.e. cells on the boundary of the same higher-dimensional cell. The red arrows depict "vertical" information propagation, where a cell receives messages from the lower-dimensional cells on its boundary. Because it summarizes information from boundary cells into a coarser representation, this computation can be interpreted as a form of (differentiable) pooling.

Beyond Graphs in GNNs

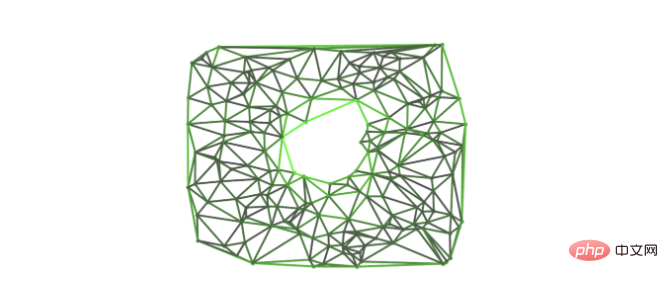

Despite the rich structure provided by cell complexes, we cannot ignore that graphs are by far the most common topological objects in machine learning, and few datasets go beyond them. Nonetheless, one can still exploit these interesting topological spaces by transforming the input graph.

We call the conversion of a graph into a higher-dimensional topological space "lifting", by analogy with the concept of the same name in category theory. It is a transformation that attaches higher-dimensional cells to the input graph by following certain rules. For example, a graph can be lifted to a cell complex by attaching a higher-dimensional cell to each clique or cycle of the graph. By doing this, the graph is replaced with a different space that has more structure and can provide the GNN with a better computational structure than the original graph. Below, we discuss the specific advantages of this approach.

Legend: By gluing the boundary of a two-dimensional closed disk to an induced cycle of the graph, one can construct a higher-dimensional cell complex from the graph.
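As an illustration of lifting, the sketch below attaches a 2-cell to every triangle (3-clique) of a small graph. This is only one possible lifting rule, chosen because it fits in a few lines; attaching 2-cells to longer induced cycles follows the same pattern but needs a cycle search. The function name `lift_cliques` and the dictionary layout (dimension mapped to a list of cells) are assumptions made for this example, not the papers' code.

```python
from itertools import combinations

def lift_cliques(nodes, edges):
    """Attach a 2-cell to every triangle (3-clique) of the graph."""
    edge_set = {frozenset(e) for e in edges}
    two_cells = [
        tri
        for tri in combinations(sorted(nodes), 3)
        if all(frozenset(pair) in edge_set for pair in combinations(tri, 2))
    ]
    return {
        0: sorted(nodes),                               # 0-cells: vertices
        1: sorted(tuple(sorted(e)) for e in edge_set),  # 1-cells: edges
        2: two_cells,                                   # 2-cells: filled triangles
    }

# A triangle 0-1-2 with a pendant edge 2-3.
nodes = [0, 1, 2, 3]
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
lifted = lift_cliques(nodes, edges)
print(lifted[2])  # [(0, 1, 2)]
```

The lifted complex keeps every node and edge of the input graph and only adds structure on top, so nothing from the original data is lost.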

High-order features and structures

GNNs usually adopt a node-centric view, in which the data residing on the edges is treated only as auxiliary information that enriches the communication between vertices. In topological message passing, all cells are first-class citizens. Regardless of their dimension, they are assigned a specific representation, which evolves by exchanging messages with neighboring cells. This provides a recipe for explicitly modeling certain higher-order structures and the interactions between them. In particular, it provides a principled way to evolve the edge (i.e. 1-cell) features of the input graph, a problem that a large class of GNN models does not consider.

Higher Order Interactions

Graphs are by definition binary ("pairwise") and cannot represent relationships and interactions involving more than two objects. This can be a problem when modeling complex systems characterized by higher-order interactions: for example, three reactants in a chemical reaction might interact simultaneously. In a cell complex, this situation can be encoded by connecting the reactants with a 2-cell (i.e., a "filled" triangle). As a result, the computational flow of the model adapts to the presence of higher-order interactions.

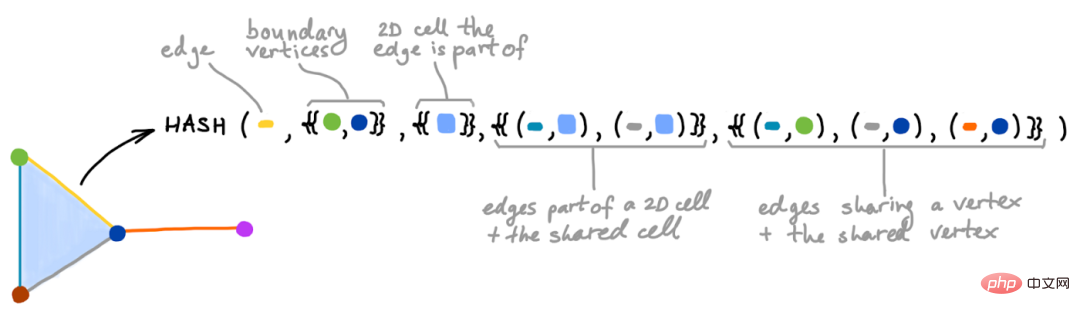

Legend: The cellular Weisfeiler-Lehman (CWL) test extends the classic WL test to cell complexes. Each step of the algorithm perfectly hashes the colors of the adjacent cells (which may have different dimensions).

Expressiveness

The expressive power of message passing GNNs is limited by the Weisfeiler-Lehman (WL) graph isomorphism test. It is well known that WL cannot detect certain graph substructures, such as triangles or cycles, and fails to distinguish even very simple non-isomorphic graphs.
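A classic example of this limitation is a hexagon versus two disjoint triangles: both graphs have six nodes of degree two, and WL color refinement cannot tell them apart. The sketch below is a minimal version of the refinement, written for this illustration; it uses nested tuples as colors instead of a hash function so that colors remain comparable across graphs.

```python
from collections import Counter

def wl_histogram(adj, rounds=3):
    """WL color refinement; returns the multiset of final node colors."""
    colors = {v: () for v in adj}
    for _ in range(rounds):
        # New color = (own color, sorted multiset of neighbor colors).
        colors = {
            v: (colors[v], tuple(sorted(colors[u] for u in adj[v])))
            for v in adj
        }
    return Counter(colors.values())

hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {
    0: [1, 2], 1: [0, 2], 2: [0, 1],
    3: [4, 5], 4: [3, 5], 5: [3, 4],
}
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

print(wl_histogram(hexagon) == wl_histogram(two_triangles))  # True
print(wl_histogram(hexagon) == wl_histogram(path))           # False
```

The test separates the hexagon from a path (their degree sequences differ) but not from the two triangles, even though one graph contains triangles and the other does not.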

According to our papers (https://arxiv.org/abs/2103.03212; https://arxiv.org/abs/2106.12575), a cellular version of the WL test (CWL) can be used to test the isomorphism of cell complexes. When this new test is paired with the graph lifting procedure described above, it can distinguish larger classes of graphs than the WL test. Therefore, under certain conditions, topological message passing inherits the advantages of this test and improves on the expressive power of standard GNNs.

Underreaching, over-smoothing and bottlenecks

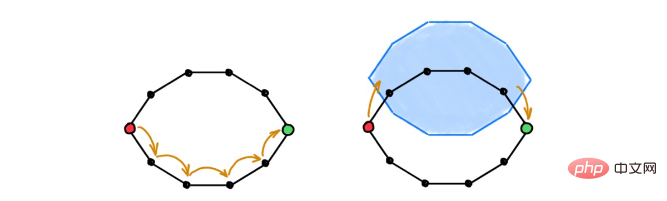

Message passing GNNs require n layers for nodes that are n hops apart to communicate. When only a few layers are used, so that nodes that are far apart cannot exchange messages, the phenomenon is called underreaching.

In contrast, using too many layers can lead to over-smoothing, and information can be lost in the structural bottlenecks of the graph. Cell complexes can alleviate these problems, because the richer neighborhood structure induced by higher-dimensional cells creates shortcuts between nodes that may be far apart. As a result, information needs only a few computational steps to propagate effectively.

Caption: GNNs require many layers for nodes that are far apart to communicate (left). Higher-dimensional cells change the underlying topology of the space by creating shortcuts (right). This allows distant nodes to exchange information in a few message passing steps.

Hierarchical modeling

The computation performed by topological message passing is hierarchical: information flows from lower-dimensional cells to higher-dimensional cells and back. This can be thought of as a form of "vertical" (and differentiable) pooling, as opposed to the "horizontal" pooling in standard graph neural networks. It preserves the inductive bias of "compressing" graph regions without discarding the fine-grained information of the input graph, a loss that often hurts the performance of pooling-based GNNs.

Note: Topological message passing allows information to flow hierarchically between cells of different dimensions.

Domain alignment

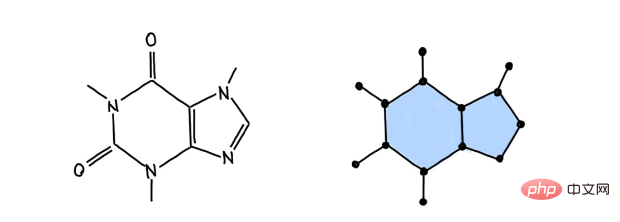

Certain applications are naturally aligned with the structure of cell complexes. For example, the atoms, bonds, and chemical rings of a molecule can be represented as 0-cells, 1-cells, and 2-cells. This direct correspondence between the physical structure of the molecule and its cell complex representation allows topological message passing to take advantage of the properties described above. Using this representation, topological message passing achieves state-of-the-art results in molecular property prediction tasks.

Other applications that exhibit good alignment might include discrete manifolds (meshes) in computer graphics, social networks (where cliques are particularly important), or spatial graphs such as Google Maps (where the blocks between streets can be naturally represented as "cubical" cells).

Caption: The caffeine molecule modeled as a two-dimensional cell complex

2 Combination of topology and differential geometry

Hole algebra and orientation equivariance

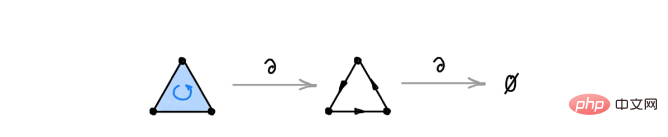

In algebraic topology, it is common to use oriented simplicial complexes, in which an arbitrary "orientation" is chosen for each simplex. For example, we choose a source node and a target node for each edge, and for each triangle we choose an order in which to traverse its nodes. Once an orientation is chosen, one can apply interesting algebraic operators to the complex, such as computing the boundary of certain simplices via the "boundary operator". These algebraic operations can also be used to find "holes" in a simplicial complex - regions that have no boundary but are not themselves the boundary of something else. Behind the scenes, persistent homology relies on these computations to detect topological features.

Legend: The boundary operator applied to a 2-simplex produces a triangle. Applying the operator to the triangle again gives zero: since the triangle is a cycle, it has no boundary.
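The "boundary of a boundary is zero" property can be checked numerically on a single filled triangle. The sketch below assumes one particular orientation (every edge points from the lower to the higher vertex index, and the triangle is traversed as 0, 1, 2); the matrix names `B1` and `B2` are a common convention adopted here for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

# Filled triangle on vertices 0, 1, 2 with edges oriented low -> high.
# B1 maps edges to vertices. Rows: vertices 0, 1, 2.
# Columns: edges (0,1), (0,2), (1,2); -1 marks the source, +1 the target.
B1 = [
    [-1, -1,  0],
    [ 1,  0, -1],
    [ 0,  1,  1],
]

# B2 maps the triangle to edges. Rows: edges (0,1), (0,2), (1,2).
# Boundary of (0,1,2) = (1,2) - (0,2) + (0,1).
B2 = [[1], [-1], [1]]

print(matmul(B1, B2))  # [[0], [0], [0]]
```

Flipping the orientation of an edge negates the corresponding column of `B1` and row of `B2`, and the product is still zero, which is why the choice of orientation can be arbitrary.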

Topological message passing can be seen as a (nonlinear) generalization of algebraic operators such as the boundary operator. It is therefore desirable that it behaves similarly: we want the outputs of each layer to respond "consistently" to changes in the orientation of the input complex. In other words, we want our layers to be orientation equivariant. In our work, we study how topological message passing satisfies this property through an appropriate choice of nonlinearities and message passing functions. This has also been studied in a purely convolutional setting.

Distinguishing topological spaces

One of the earliest known topological invariants, the Euler characteristic, was originally used for the classification of Platonic solids. It can be defined as the alternating sum of the number of cells in each dimension. Surprisingly, if two cell complexes are homeomorphic, these sums coincide even when the complexes are different discretizations of the same space.

Interestingly, the readout operation of topological message passing models makes it easy to compute this topological invariant, because it applies a permutation-invariant reduction to the cells of each dimension. Therefore, this type of model can, by construction, distinguish certain non-homeomorphic spaces (namely, those with different Euler characteristics). From a computational perspective, this can be seen as a generalization of the WL test, where we are interested not only in determining whether two cell complexes are identical, but also in whether they are homeomorphic to each other.
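The alternating sum is simple enough to compute directly from per-dimension cell counts, which is exactly the information a per-dimension readout has access to. In the sketch below the cell counts are standard facts about the shapes named; the function name and the list layout (index equals dimension) are assumptions of this example.

```python
def euler_characteristic(cell_counts):
    """Alternating sum of the number of cells in each dimension."""
    return sum((-1) ** dim * n for dim, n in enumerate(cell_counts))

tetrahedron_surface = [4, 6, 4]  # vertices, edges, triangular faces
cube_surface = [8, 12, 6]        # vertices, edges, square faces
hollow_triangle = [3, 3]         # three vertices, three edges, no 2-cell

print(euler_characteristic(tetrahedron_surface))  # 2
print(euler_characteristic(cube_surface))         # 2
print(euler_characteristic(hollow_triangle))      # 0
```

The tetrahedron and the cube are two different discretizations of the sphere and give the same value, while the hollow triangle, which discretizes a circle, gives a different one.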

Discrete Hodge Theory

Discrete Hodge theory provides a more geometric interpretation of the topological properties of cell complexes. When the sign of the features associated with a k-cell depends on the orientation of that k-cell, these features can be viewed mathematically as discrete versions of the differential k-forms of differential geometry (i.e., k-dimensional volume elements that can be integrated). An operator called the Hodge Laplacian generalizes the graph Laplacian and acts on these differential forms. It can be shown that a diffusion PDE based on this Laplacian converges in the limit to a signal related to the holes of the complex.
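The link between the Hodge Laplacian and holes can be seen on the smallest possible example. The sketch below uses the standard definition of the Hodge Laplacian on edges, L1 = B1ᵀB1 + B2B2ᵀ, with the boundary matrices of a triangle whose edges are oriented from lower to higher vertex index (an orientation assumed for this example). It compares a hollow triangle, which has a hole, with a filled one, which does not.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Rows: vertices 0, 1, 2. Columns: edges (0,1), (0,2), (1,2).
B1 = [
    [-1, -1,  0],
    [ 1,  0, -1],
    [ 0,  1,  1],
]
# Rows: edges (0,1), (0,2), (1,2). Column: the 2-cell (0,1,2).
B2 = [[1], [-1], [1]]

# Hollow triangle: no 2-cells, so only the "down" term is present.
L1_hollow = matmul(transpose(B1), B1)
# Filled triangle: the 2-cell contributes the "up" term B2 B2^T.
L1_filled = add(L1_hollow, matmul(B2, transpose(B2)))

# Edge signal circulating around the triangle 0 -> 1 -> 2 -> 0.
circulation = [[1], [-1], [1]]

print(matmul(L1_hollow, circulation))  # [[0], [0], [0]]
print(matmul(L1_filled, circulation))  # [[3], [-3], [3]]
```

On the hollow triangle the circulating signal lies in the kernel of the Laplacian, so diffusion leaves it untouched; once the triangle is filled, the hole disappears and the same signal is no longer in the kernel.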

Note: A diffusion partial differential equation based on the Hodge Laplacian converges in the limit to the projection of the initial differential form onto the kernel of the Laplacian. This image shows how the zero eigenvectors of the Hodge Laplacian take high values around the holes of the complex.

The first simplicial neural network models were in fact convolutional models based on the Hodge Laplacian, which in turn were inspired by topological signal processing. Just recently, a version of the convolutional model based on this operator was used to solve NP-hard problems in computational algebraic topology.

3 Final Thoughts

Are these just graphs in disguise?

Recent papers argue that, among other things, topological message passing methods are nothing more than GNNs performing message passing on a modified graph that encodes the structure of the cell complex. This is true for convolutional models, where the message passing computation involves pairs of cells.

However, in its most general form, the message function allows a higher-dimensional cell to modulate the messages passed between the lower-dimensional cells on its boundary. In general, this is not possible with regular message passing on graphs, because an edge connects exactly two nodes, whereas a 2-cell can connect arbitrarily many edges. In both cases, the computation is driven by the topology of the underlying space to which the data is attached. We believe that the benefits of adopting this topological perspective on message passing extend beyond purely computational considerations. In addition to valuable mathematical connections, it opens up the research discourse to other mathematical and computational disciplines, facilitating positive cross-fertilization among our often too homogeneous communities.

What is the next step for topological message passing?

We foresee two main future directions for topological message passing methods:

First, many architectures developed for GNNs over the years (such as attention mechanisms) may be adopted in these new topological spaces while exploiting their specific characteristics.

Second, more mathematical objects and tools from the field of algebraic topology (including structures such as cellular sheaves, which may sound foreign even to the most mathematically savvy ML researchers) will be adopted by the graph and geometric deep learning communities.

These methods can provide answers to old problems and help solve new ones. As Robert Ghrist said: "novel challenges necessitate novel math".

The above is the detailed content of Michael Bronstein draws from algebraic topology and proposes a new graph neural network computing structure!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1390

1390

52

52

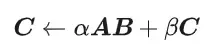

CUDA's universal matrix multiplication: from entry to proficiency!

Mar 25, 2024 pm 12:30 PM

CUDA's universal matrix multiplication: from entry to proficiency!

Mar 25, 2024 pm 12:30 PM

General Matrix Multiplication (GEMM) is a vital part of many applications and algorithms, and is also one of the important indicators for evaluating computer hardware performance. In-depth research and optimization of the implementation of GEMM can help us better understand high-performance computing and the relationship between software and hardware systems. In computer science, effective optimization of GEMM can increase computing speed and save resources, which is crucial to improving the overall performance of a computer system. An in-depth understanding of the working principle and optimization method of GEMM will help us better utilize the potential of modern computing hardware and provide more efficient solutions for various complex computing tasks. By optimizing the performance of GEMM

How to calculate addition, subtraction, multiplication and division in word document

Mar 19, 2024 pm 08:13 PM

How to calculate addition, subtraction, multiplication and division in word document

Mar 19, 2024 pm 08:13 PM

WORD is a powerful word processor. We can use word to edit various texts. In Excel tables, we have mastered the calculation methods of addition, subtraction and multipliers. So if we need to calculate the addition of numerical values in Word tables, How to subtract the multiplier? Can I only use a calculator to calculate it? The answer is of course no, WORD can also do it. Today I will teach you how to use formulas to calculate basic operations such as addition, subtraction, multiplication and division in tables in Word documents. Let's learn together. So, today let me demonstrate in detail how to calculate addition, subtraction, multiplication and division in a WORD document? Step 1: Open a WORD, click [Table] under [Insert] on the toolbar, and insert a table in the drop-down menu.

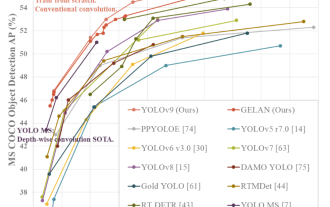

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

Today's deep learning methods focus on designing the most suitable objective function so that the model's prediction results are closest to the actual situation. At the same time, a suitable architecture must be designed to obtain sufficient information for prediction. Existing methods ignore the fact that when the input data undergoes layer-by-layer feature extraction and spatial transformation, a large amount of information will be lost. This article will delve into important issues when transmitting data through deep networks, namely information bottlenecks and reversible functions. Based on this, the concept of programmable gradient information (PGI) is proposed to cope with the various changes required by deep networks to achieve multi-objectives. PGI can provide complete input information for the target task to calculate the objective function, thereby obtaining reliable gradient information to update network weights. In addition, a new lightweight network framework is designed

How to count the number of elements in a list using Python's count() function

Nov 18, 2023 pm 02:53 PM

How to count the number of elements in a list using Python's count() function

Nov 18, 2023 pm 02:53 PM

How to use Python's count() function to calculate the number of an element in a list requires specific code examples. As a powerful and easy-to-learn programming language, Python provides many built-in functions to handle different data structures. One of them is the count() function, which can be used to count the number of elements in a list. In this article, we will explain how to use the count() function in detail and provide specific code examples. The count() function is a built-in function of Python, used to calculate a certain

Java program to calculate the area of a triangle using determinants

Aug 31, 2023 am 10:17 AM

Java program to calculate the area of a triangle using determinants

Aug 31, 2023 am 10:17 AM

Introduction The Java program for calculating the area of a triangle using determinants is a concise and efficient program that can calculate the area of a triangle given the coordinates of three vertices. This program is useful for anyone learning or working with geometry, as it demonstrates how to use basic arithmetic and algebraic calculations in Java, as well as how to use the Scanner class to read user input. The program prompts the user for the coordinates of three points of the triangle, which are then read in and used to calculate the determinant of the coordinate matrix. Use the absolute value of the determinant to ensure the area is always positive, then use a formula to calculate the area of the triangle and display it to the user. The program can be easily modified to accept input in different formats or to perform additional calculations, making it a versatile tool for geometric calculations. ranks of determinants

Count the number of occurrences of a substring recursively in Java

Sep 17, 2023 pm 07:49 PM

Count the number of occurrences of a substring recursively in Java

Sep 17, 2023 pm 07:49 PM

Given two strings str_1 and str_2. The goal is to count the number of occurrences of substring str2 in string str1 using a recursive procedure. A recursive function is a function that calls itself within its definition. If str1 is "Iknowthatyouknowthatiknow" and str2 is "know" the number of occurrences is -3. Let us understand through examples. For example, input str1="TPisTPareTPamTP", str2="TP"; output Countofoccurrencesofasubstringrecursi

How to use the Math.Pow function in C# to calculate the power of a specified number

Nov 18, 2023 am 11:32 AM

How to use the Math.Pow function in C# to calculate the power of a specified number

Nov 18, 2023 am 11:32 AM

In C#, there is a Math class library, which contains many mathematical functions. These include the function Math.Pow, which calculates powers, which can help us calculate the power of a specified number. The usage of the Math.Pow function is very simple, you only need to specify the base and exponent. The syntax is as follows: Math.Pow(base,exponent); where base represents the base and exponent represents the exponent. This function returns a double type result, that is, the power calculation result. Let's

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

Paper address: https://arxiv.org/abs/2307.09283 Code address: https://github.com/THU-MIG/RepViTRepViT performs well in the mobile ViT architecture and shows significant advantages. Next, we explore the contributions of this study. It is mentioned in the article that lightweight ViTs generally perform better than lightweight CNNs on visual tasks, mainly due to their multi-head self-attention module (MSHA) that allows the model to learn global representations. However, the architectural differences between lightweight ViTs and lightweight CNNs have not been fully studied. In this study, the authors integrated lightweight ViTs into the effective