Marcus posted an article criticizing LeCun: Deep learning alone cannot achieve human-like intelligence


In March of this year, Gary Marcus caused a stir in the artificial intelligence academic community after he proposed the idea that "deep learning has hit a wall."

At that time, even the three giants of deep learning could not sit still. First, Geoffrey Hinton rebutted this view in a podcast.

Then, in June, Yann LeCun wrote an article in response, arguing that temporary difficulties should not be mistaken for hitting a wall.

Now, Marcus has published an article titled "Deep Learning Alone Isn't Getting Us To Human-Like AI" in the US magazine NOEMA.

He has not changed his view: deep learning alone cannot achieve human-like intelligence. He also argues that current AI research focuses mainly on deep learning, and that it is time to reconsider that focus.

Over the past 70 years, the most fundamental debate in the field of artificial intelligence has been whether AI systems should be built on symbol manipulation or on brain-like neural networks.

In fact, there is a third possibility: a hybrid model that combines the deep learning of neural networks with the powerful abstraction capabilities of symbol manipulation.

LeCun's recent article "What AI Can Tell Us About Intelligence," also published in NOEMA, discusses this issue as well, but Marcus points out that although the article seems clear, it has an obvious flaw: it contradicts itself.

The authors begin by rejecting hybrid models, yet the article ends by acknowledging that hybrid models exist and mentioning them as a possible way forward.

Hybrid Model of Neural Networks and Symbolic Operations

Marcus points out that LeCun and Browning's main claim is that "if a model learns symbolic manipulation, it is not a hybrid."

But how a system learns is a developmental question (how does the system arise?), whereas how the system operates once it has developed is a computational question (for example, does it use one mechanism or two?). In other words, "any system that utilizes both symbols and neural networks is a hybrid model."

Perhaps what they really mean is that artificial intelligence is likely to be a learned hybrid rather than an innate one. But a learned hybrid is still a hybrid.

Marcus's own view is that "symbol manipulation itself is innate, or there is something else that is innate and that indirectly gives rise to symbol manipulation."

So our research should focus on discovering this medium that indirectly gives rise to symbol manipulation.

The hypothesis is that once we figure out what medium allows a system to reach the point of learning symbolic abstractions, we can build systems that make use of all the world's knowledge.

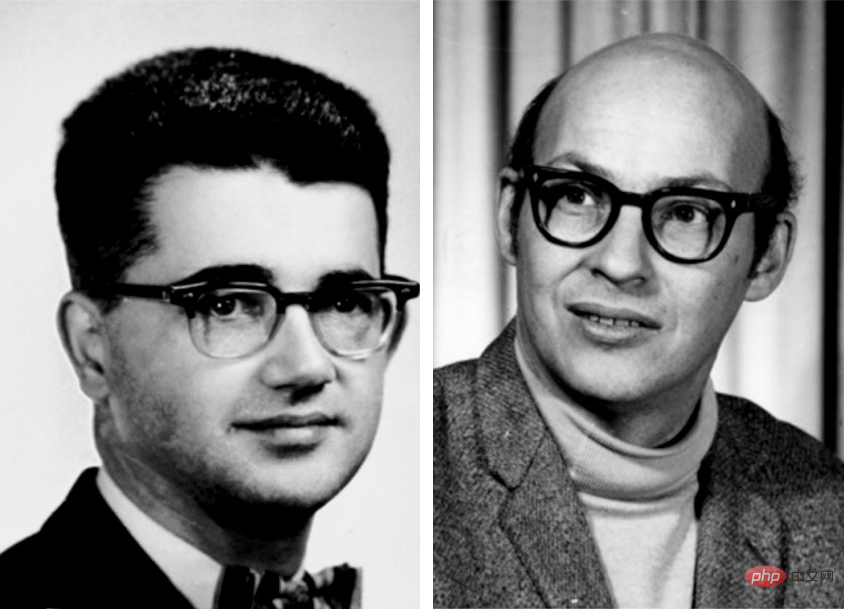

Next, Marcus traces the history of the debate between symbolic manipulation and neural networks in the field of artificial intelligence.

Early artificial intelligence pioneers such as Marvin Minsky and John McCarthy believed that symbolic manipulation was the only reasonable way forward.

Frank Rosenblatt, the pioneer of neural networks, believed that AI might be better built on a structure in which neuron-like nodes sum and process numeric inputs.

In fact, these two possibilities are not mutually exclusive.

The neural networks used in AI are not literal networks of biological neurons. Rather, they are simplified digital models that share some characteristics of the human brain but with minimal complexity.

In principle, these abstract neurons can be connected in many different ways, some of which can directly implement logical and symbolic operations.

Warren S. McCulloch and Walter Pitts explicitly recognized this possibility in "A Logical Calculus of the Ideas Immanent in Nervous Activity," published in 1943.
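To make the point about wiring concrete, here is a minimal sketch of how simple threshold units can be hand-connected to compute logical operations. It is an illustration in the spirit of McCulloch and Pitts rather than a reproduction of their formalism: the helper `mp_unit`, the use of a negative weight for inhibition, and the specific weights and thresholds are all assumptions chosen for this example.

```python
def mp_unit(weights, threshold):
    """Return a simplified threshold unit: it outputs 1 when the weighted
    sum of its binary inputs reaches the threshold, otherwise 0."""
    def fire(*inputs):
        total = sum(w * x for w, x in zip(weights, inputs))
        return 1 if total >= threshold else 0
    return fire

# Hand-wired gates (weights and thresholds are illustrative choices).
AND = mp_unit([1, 1], threshold=2)   # fires only if both inputs are 1
OR = mp_unit([1, 1], threshold=1)    # fires if at least one input is 1
NOT = mp_unit([-1], threshold=0)     # an inhibitory input suppresses firing

def XOR(a, b):
    # XOR is not computable by one such unit; compose two layers instead:
    # (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))

if __name__ == "__main__":
    print("a b AND OR XOR")
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

Nothing here is learned: the logic comes entirely from how the units are connected, which is the sense in which neural and symbolic approaches need not be mutually exclusive.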

Others, including Frank Rosenblatt in the 1950s and David Rumelhart and Jay McClelland in the 1980s, proposed neural networks as an alternative to symbolic manipulation. Geoffrey Hinton also generally supports this position.

Marcus then calls out Turing Award winners LeCun, Hinton and Yoshua Bengio one after another.

The implication: don't just take my word for it, other leading figures have said the same!

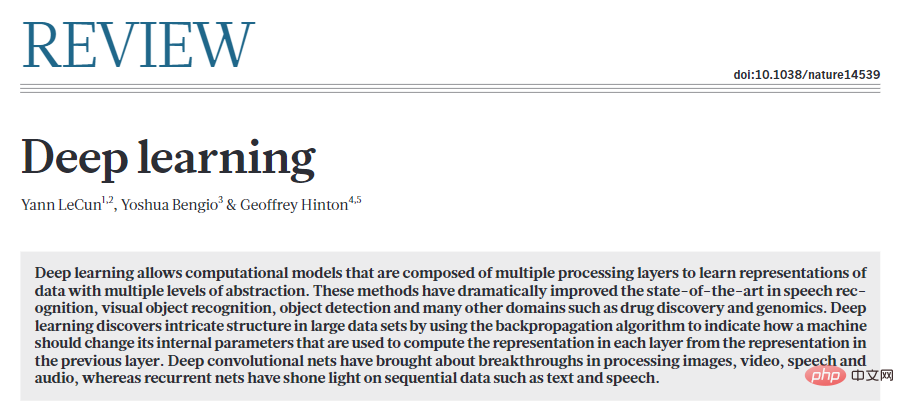

In 2015, LeCun, Bengio and Hinton wrote a manifesto-style paper on deep learning in Nature.

The article ends with an attack on symbols, arguing that "a new paradigm is needed to replace rule-based operations on symbolic expressions by operations on large vectors."

In fact, Hinton was so convinced that symbols were a dead end that the same year he gave a talk at Stanford University called "Aetherial Symbols," likening symbols to one of the biggest mistakes in the history of science.

A similar argument was made in the 1980s by two of his former collaborators, Rumelhart and McClelland, who argued in a famous 1986 book that symbols are not "the essence of human computation," sparking a great debate.

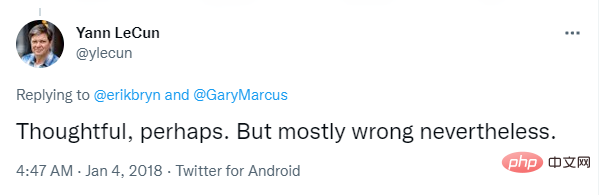

Marcus said that when he wrote an article in 2018 defending symbolic manipulation, LeCun dismissed his argument for hybrid AI without explanation, calling it "mostly wrong" on Twitter.

Then he said that two famous experts in the field of deep learning also expressed support for hybrid AI.

Andrew Ng expressed support for such a system in March. Sepp Hochreiter—the co-creator of LSTMs, one of the leading deep learning architectures for learning sequences—has done the same thing, publicly stating in April that the most promising broad-based AI approach is neurosymbolic AI.

In LeCun and Browning’s new perspective, symbolic manipulation is actually crucial, as Marcus and Steven Pinker have proposed since 1988.

Marcus therefore charged LeCun: "I proposed your point of view decades ago, but your research has gone back decades."

And it's not just me who says this; other leading figures think so too.

The rest of LeCun and Browning's article can be roughly divided into three parts:

1. Mischaracterizations of my position

2. An effort to narrow the scope of the hybrid model

3. An argument that symbolic manipulation may be learned rather than innate

Next, Marcus refuted the views in LeCun’s paper:

LeCun and Browning wrote, "Marcus says that if you don't have symbolic manipulation at the start, you'll never have it."

In fact, I explicitly acknowledged in my 2001 book "The Algebraic Mind" that we are not sure whether symbolic manipulation is innate.

They criticized my statement that "deep learning cannot make further progress," but my actual point is not that deep learning will never make progress on any problem, but that deep learning by itself is the wrong tool for certain tasks such as compositionality and reasoning.

Similarly, they misrepresent me as saying that symbolic reasoning is either present in a system or absent (1 or 0).

That is simply nonsense.

It is true that DALL-E does not use symbols for reasoning, but this does not mean that symbolic reasoning in any system must be all-or-nothing.

At least as far back as MYCIN in the 1970s, there have been purely symbolic systems capable of various kinds of quantitative reasoning.
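As a rough illustration of what quantitative reasoning in a purely symbolic system can look like, the sketch below combines evidence using the certainty-factor rule commonly attributed to MYCIN-style expert systems. The rule base, the fact names and the helper `infer` are hypothetical and invented for this example; they are not MYCIN's actual rules.

```python
def combine_cf(cf1, cf2):
    """Combine two certainty factors in [-1, 1] that bear on the same
    hypothesis. Undefined when one is exactly 1 and the other exactly -1."""
    if cf1 >= 0 and cf2 >= 0:
        return cf1 + cf2 * (1 - cf1)          # two supporting pieces of evidence
    if cf1 < 0 and cf2 < 0:
        return cf1 + cf2 * (1 + cf1)          # two opposing pieces of evidence
    return (cf1 + cf2) / (1 - min(abs(cf1), abs(cf2)))  # conflicting evidence

# Hypothetical rules: (required facts, hypothesis, certainty factor).
RULES = [
    ({"fever", "stiff_neck"}, "meningitis", 0.6),
    ({"positive_culture"}, "meningitis", 0.5),
    ({"recent_vaccination"}, "meningitis", -0.3),
]

def infer(facts, rules=RULES):
    """Fire every rule whose premises are all present in `facts` and
    accumulate a graded belief for each hypothesis."""
    belief = {}
    for premises, hypothesis, cf in rules:
        if premises <= facts:
            belief[hypothesis] = combine_cf(belief.get(hypothesis, 0.0), cf)
    return belief

if __name__ == "__main__":
    print(infer({"fever", "stiff_neck", "positive_culture"}))
    print(infer({"fever", "stiff_neck", "recent_vaccination"}))
```

The rules are explicit symbolic structures, yet the conclusion is a graded number rather than a binary yes or no.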

Symbolic manipulation innateness

Can symbolic manipulation ability be learned rather than established from the beginning?

The answer is yes.

Marcus said that although previous experiments cannot guarantee that the ability to manipulate symbols is innate, they fit that view closely, and they do pose a challenge to any theory of learning that relies on large amounts of experience.

He put forward the following two main arguments:

1. Learnability

In his 2001 book The Algebraic Mind, Marcus showed that some systems can learn symbolic operations.

A system that has some built-in starting points will be more effective at understanding the world than a pure blank slate.

In fact, even LeCun's own most famous work, on convolutional neural networks, is a good example: built-in constraints on how a neural network learns greatly improve efficiency. When symbolic operations are well integrated, even greater gains may be achieved.

2. Human infants show some ability to manipulate symbols

In a series of oft-cited rule-learning experiments, infants generalized abstract patterns beyond the concrete examples they had been trained on. Subsequent research on the implicit logical reasoning ability of human infants further proved this point.

Additionally, research has shown that bees, for example, can generalize the solar azimuth function to lighting conditions they have never seen before.

In LeCun's view, symbol manipulation is something acquired later in life, as the need for more precise, specialized skills arises.

What is puzzling is that, after objecting to the innateness of symbolic manipulation, LeCun did not provide strong evidence that symbolic manipulation is acquired.

If a baby goat can crawl down a hill soon after birth, why can't a nascent neural network incorporate a little symbolic manipulation out of the box?

At the same time, LeCun and Browning did not specify how, without an innate mechanism for symbolic manipulation, well-known specific problems in language understanding and reasoning can be solved.

They offer only a weak induction: since deep learning has overcome problems 1 through N, we should have some confidence that it can overcome problem N+1.

As they put it, one should be skeptical that deep learning has reached its limits. Given the continued incremental improvement on tasks seen recently in DALL-E 2, Gato, and PaLM, it seems wise not to mistake momentary difficulties for "walls." The inevitable failure of deep learning has been predicted before, but betting against it has not paid off.

Optimism is one thing, but reality must be seen clearly.

Deep learning in principle faces some specific challenges, mainly in compositionality, systematicity and language understanding, all of which revolve around generalization and "distribution shift."

Now everyone realizes that distribution shift is the Achilles heel of current neural networks. Of course, deep learning has made progress, but not much progress has been made on these basic problems.
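A toy sketch can show what generalizing outside the training distribution means. The nearest-neighbour memorizer below is only a stand-in for a learner that interpolates between training examples; it is not a neural network, and the doubling task, the function names and the chosen input ranges are all assumptions made for illustration.

```python
def train_lookup(examples):
    """'Train' by memorizing (input, output) pairs. Predictions reuse the
    output of the nearest memorized input, so there is no abstract rule."""
    table = sorted(examples)
    def predict(x):
        nearest = min(table, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict

def symbolic_double(x):
    # An explicit rule over a variable applies to any value of x.
    return 2 * x

def mean_abs_error(predict, xs):
    """Average absolute error against the true function f(x) = 2x."""
    return sum(abs(predict(x) - 2 * x) for x in xs) / len(xs)

# Training data covers only the integers 0..10.
model = train_lookup([(x, 2 * x) for x in range(0, 11)])

in_dist = [0.5 * k for k in range(0, 21)]   # same range as training
out_dist = [100, 200, 300]                  # far outside the training range

if __name__ == "__main__":
    print("memorizer, in range:     ", mean_abs_error(model, in_dist))
    print("memorizer, out of range: ", mean_abs_error(model, out_dist))
    print("symbolic rule, out of range:", mean_abs_error(symbolic_double, out_dist))
```

The memorizer does acceptably inside the range it was trained on and fails badly far outside it, while the explicit rule is unaffected by the shift. Real neural networks are far more capable than this toy, so it illustrates the concept only and is not evidence about any particular model.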

In Marcus's view, the case that symbolic manipulation might be innate is much the same as before:

1. Current systems, 20 years after The Algebraic Mind appeared, are still unable to reliably extract symbolic operations (such as multiplication), even with massive data sets and training.

2. The example of human infants shows that, before any formal education, they are able to generalize complex aspects of natural language and reasoning.

3. A little built-in symbolic structure can greatly improve learning efficiency. Part of the power of AlphaFold 2 comes from carefully constructed innate representations of molecular biology.

In short, the world may be roughly divided into three bins:

The first is systems that have symbol-manipulation machinery fully installed at the factory.

The second is systems with innate learning machinery that lack symbolic operations at the outset, but that can acquire them given the right data and training environment.

The third is systems that cannot acquire a complete symbol-manipulation mechanism even with sufficient training.

Current deep learning systems seem to fall into the third category: they have no symbol-manipulation mechanism at the start, and they do not acquire a reliable one along the way.

Right now, understanding the origins of symbolic operations is our top priority. Even the most ardent proponents of neural networks now recognize the importance of symbolic operations in achieving AI.

And this is what the neurosymbolic community has been focused on all along: how can data-driven learning and symbolic representation be made to work in harmony within a single, more powerful intelligence?
