Fans and Thoughts on Big Companies Spending Money to Pursue Big AI Models

This article is reproduced from Leifeng.com. To reprint it, please apply for authorization through the official Leifeng.com website.

Have you all heard the story of the electric fan and the empty soap box?

Rumor has it that a well-known international fast-moving consumer goods manufacturer once installed a soap packaging production line, only to find a flaw: boxes often came off the line with no soap inside. The company could not sell empty boxes to customers, so it hired a postdoctoral fellow in automation to design a scheme for sorting out the empty soap boxes.

The postdoctoral fellow promptly assembled a technical team of more than a dozen people, combined machinery, automation, microelectronics, X-ray detection and other technologies, spent 900,000, and finally developed a solution: two integrated detectors installed on either side of the production line. Whenever an empty soap box was detected passing by, a robot was triggered to push it away.

It can be said that this was an important breakthrough in applying technology to a real industrial problem.

Coincidentally, around the same time, a township enterprise in southern China bought the same production line. When the boss discovered the problem, he was furious. He summoned a worker and said, "Come up with a way to fix this." Under pressure, the worker quickly hit on a clever plan: he spent 190 yuan on a high-power electric fan and set it beside the soap packaging line. Whenever the line ran, the fan blew hard, and any empty soap boxes were blown right off.

One ordinary worker, with a bit of ingenuity, quickly solved the problem and achieved a goal the industry often cites: reducing costs and increasing efficiency.

In industry, the point of technological innovation and intelligence comes down to those two slogans: save money and increase efficiency. Yet in the development of AI in recent years, a "weird" phenomenon has appeared that seems to defy the laws of capital: academia and industry, large companies and small ones, private enterprises and state-funded research institutes alike are all spending heavily on "refining" large models.

This has produced two voices in the community:

One voice holds that large models have demonstrated strong performance and potential on a variety of task benchmarks and must be the future direction of artificial intelligence. Investing now is a way to avoid missing a great opportunity later, so spending millions (or more) on training is worthwhile. In other words, seizing the high ground of large models is the primary concern, and the high cost is secondary.

The other voice holds that, when it comes to actually deploying AI, the current wholesale touting of large models not only draws research resources away from small models and other AI directions but, because of the high investment required, is also poor value for solving real industrial problems and cannot benefit the many small and medium-sized enterprises undergoing digital transformation.

In other words, "economic availability" and "capability" are the two major concerns for AI algorithms that solve practical problems. The industry has now reached a consensus that AI will become the "power" that drives all walks of life. So, from the perspective of large-scale deployment, which is better, large models or small models? Has the industry really thought it through?

1 The arrival of the "big" model

In recent years, whenever major technology companies at home and abroad publicize their AI R&D capabilities, one high-frequency term keeps appearing: the large model.

The competition began with foreign technology giants. In 2018, Google launched the large-scale pre-trained language model BERT, raising the curtain on large models. OpenAI followed with GPT-2 in 2019 and GPT-3 in 2020; in 2021 Google, not to be outdone, launched Switch Transformer, whose parameter count dwarfed that of its predecessors...

Model size is measured mainly by the number of parameters. A model is "big" when its parameter count is huge.

For example, in 2018 BERT's parameter count reached 300 million for the first time. It surpassed humans on both metrics of the top-level machine reading comprehension test SQuAD1.1 and achieved SOTA performance on 11 different NLP tests, including pushing the GLUE benchmark to 80.4% (an absolute improvement of 7.6%) and MultiNLI accuracy to 86.7% (an absolute improvement of 5.6%), demonstrating how much a larger parameter count can improve an AI algorithm's performance.

OpenAI then launched GPT-2, with 1.5 billion parameters, and GPT-3, whose parameter count exceeded 100 billion for the first time, reaching 175 billion. Switch Transformer, released by Google in January 2021, was the first to reach the trillions, with 1.6 trillion parameters.
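To make the pace of this growth concrete, the short sketch below computes how many times larger each model is than the one before it. It uses only the parameter counts quoted in this article (they are not independently verified here), and the helper name `growth_factors` is purely illustrative.

```python
# Parameter counts as quoted in the article (not independently verified).
PARAMS = {
    "BERT (2018)": 300e6,
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
    "Switch Transformer (2021)": 1.6e12,
}


def growth_factors(params):
    """Return (name, multiple of the previous model's size) for each successive model."""
    names = list(params)  # dicts keep insertion order, so this is chronological
    return [
        (names[i], params[names[i]] / params[names[i - 1]])
        for i in range(1, len(names))
    ]


for name, factor in growth_factors(PARAMS):
    print(f"{name}: about {factor:.1f}x the previous model")

# Overall growth from the first to the last model in the list.
overall = PARAMS["Switch Transformer (2021)"] / PARAMS["BERT (2018)"]
print(f"Overall: about {overall:,.0f}x in three years")
```

On these figures the jump from GPT-2 to GPT-3 is by far the largest single step, which may be why GPT-3 is so often treated as the start of the scale race.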

Faced with this surge, major domestic companies and even government-funded research institutions did not lag behind and successively released their own large models. In April 2021, Alibaba DAMO Academy released the Chinese pre-trained language model "PLUG" with 27 billion parameters; the same month, Huawei and Pengcheng Laboratory jointly released "Pangu α" with 200 billion parameters; in June, the Beijing Zhiyuan Artificial Intelligence Research Institute released "Enlightenment 2.0" with 1.75 trillion parameters; in September, Baidu released the Chinese-English bilingual model PLATO-X with 10 billion parameters.

In October last year, Alibaba DAMO Academy released "M6-10T", which reached 10 trillion parameters and is currently the largest AI model in China. Baidu, though short of Alibaba's scale, was not far behind in the pursuit of parameter counts: together with Pengcheng Laboratory it released "Baidu Wenxin" with 260 billion parameters, more than ten times larger than PLATO-X.

Tencent also says it has developed a large model, "Pai Daxing", though its parameter scale is unknown. Beyond the major AI R&D companies that attract most of the attention, the main domestic players also include the computing power provider Inspur, which released the large model "Source 1.0" in October last year with 245.7 billion parameters. All in all, 2021 can be called China's "first year of large models."

This year, large models remain popular. They were initially concentrated in natural language processing but have gradually expanded to vision and decision-making, and their applications now even cover major scientific problems such as protein prediction and aerospace. Google, Meta, Baidu and other major companies all have corresponding results. For a time, AI models with fewer than 100 million parameters had no voice.

There is no doubt that, whether in surpassing earlier performance or in expanding to new tasks, large AI models have demonstrated their potential and given academia and industry boundless room for imagination.

Experiments have shown that increasing the amount of data and the number of parameters can effectively improve a model's accuracy. Take Big Transfer, the visual transfer model released by Google in 2021: trained on two datasets, one of 1.28 million images in 1,000 categories and one of 300 million images in 18,291 categories, the model's accuracy rises from 77% to 79%.

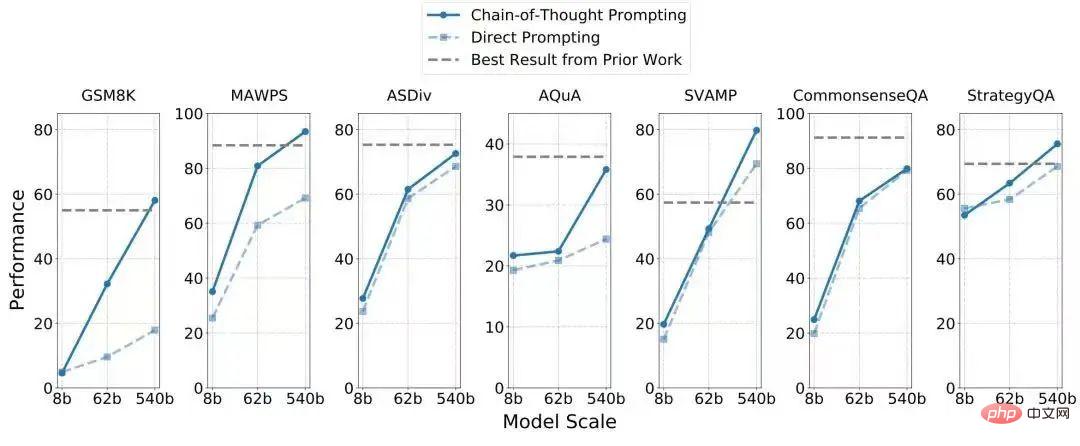

Take also PaLM, the 540-billion-parameter unidirectional language model Google launched this year, built on Pathways, the new-generation AI framework Google also released this year. It not only surpasses the 175-billion-parameter GPT-3 in fine-tuning, but its reasoning ability has also improved greatly: on 7 arithmetic and commonsense reasoning datasets, it surpassed the current SOTA on 4 (as shown in the table below) while using only 8 samples (that is, collected examples).

Vision is perception and language is intelligence, but neither has made a dazzling breakthrough in conquering "causal reasoning", an ability that is very important for the evolution of AI systems. It can be understood this way: a child's simple ability to derive 100+100=200 from 1+1=2 is very complicated for a machine system, because the system lacks the imagination that causal reasoning requires. If machines lack even reasonable reasoning ability or imagination, we remain far from the super-intelligent robots of science fiction movies. The emergence of large models makes the realization of artificial general intelligence (AGI) possible.

This is why, when large companies promote a large model, they often emphasize that it can solve multiple tasks at once and reach SOTA (the current best level) on multiple task benchmarks. For example, PaLM, the 540-billion-parameter language model Google launched this year, can explain jokes and guess movies from emoji. "Enlightenment 2.0", launched by Zhiyuan, gave rise to Hua Zhibing, a virtual student proficient in piano, chess, calligraphy and painting.

In short, large models tend to share one characteristic: they are versatile and can wear many hats. This is critical for tackling complex scenarios.

"A small model has few parameters and is limited to a single task, while the advantage of a large model is like the way what a person learns playing table tennis also helps with badminton. Large models generalize across tasks. Facing a new task, a small model may need thousands or tens of thousands of training examples, while a large model may need only one, or even none at all," Lan Zhenzhong, head of the Deep Learning Laboratory at Westlake University, explained to Leifeng.com-AI Technology Review.

Take research on dialogue systems as an example. Dialogue systems fall into two main categories. One is task-oriented dialogue, where the user assigns a task and the AI system carries it out automatically, such as quickly booking flights or buying movie tickets. The other is open-domain dialogue, like the fictional AI in the movie "Her", which can talk with humans about any topic and even make users feel emotionally accompanied. The latter clearly demands more capability and is harder to develop. The road ahead is foggy, and you do not know what challenges you will face. Here a large model, with its rich "capability package" and its strong performance on new tasks, is clearly more effective than a small model.

Lan Zhenzhong pointed out that AI researchers in academia and industry have not yet fully grasped many characteristics of large models. For example, from the previous generation, GPT-3, to the current InstructGPT, there has been a qualitative leap. Both are large models, but InstructGPT is much better at following instructions, and this is something that can only be discovered by studying large models.

What happens to an AI model's performance as the number of parameters grows? This is a scientific question that requires in-depth exploration, so continued investment in large model research is necessary.

2 The ideal is far away, but the reality is very close

If humanity is to progress, there must always be people who dare to go where no one has gone.

However, in the real world, not everyone can afford the ideal of reaching for the stars and the sea. Most people just want to solve the problem in front of them quickly, simply and cost-effectively. In the end, for AI algorithms to be deployed, the input-output ratio of R&D must be considered. This is where the disadvantages of large models begin to show.

A harsh fact that cannot be ignored is that large models compute slowly and are extremely expensive to train.

Generally speaking, the more parameters a model has, the slower it runs and the higher its computational cost. According to foreign media reports, OpenAI spent nearly US$5 million (approximately RMB 35 million) training GPT-3, which has 175 billion parameters. Google used 6,144 TPUs to train PaLM, which has 540 billion parameters. According to estimates by enthusiastic netizens, it would cost an ordinary person between US$9 million and US$17 million to train a PaLM. And that is the cost of computing power alone.
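As a rough back-of-the-envelope check on these figures, the sketch below normalizes the quoted training costs by parameter count. It is only an illustration: real training cost also depends on the amount of training data, the hardware and its utilization, none of which the article gives, and the function name `usd_per_billion_params` is an invented helper.

```python
def usd_per_billion_params(cost_usd, params):
    """Crude normalization: training cost divided by model size in billions of parameters."""
    return cost_usd / (params / 1e9)


# Figures quoted in the article; the PaLM range is an unofficial estimate.
gpt3 = usd_per_billion_params(5e6, 175e9)
palm_low = usd_per_billion_params(9e6, 540e9)
palm_high = usd_per_billion_params(17e6, 540e9)

print(f"GPT-3: about ${gpt3:,.0f} per billion parameters")
print(f"PaLM:  about ${palm_low:,.0f} to ${palm_high:,.0f} per billion parameters")

# The article's currency conversion implies this exchange rate.
implied_rmb_per_usd = 35e6 / 5e6
print(f"Implied exchange rate: {implied_rmb_per_usd:.1f} RMB per USD")
```

On this crude measure the two models land in the same order of magnitude, tens of thousands of dollars per billion parameters, which is consistent with the article's point that cost scales with size.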

Major domestic companies have not disclosed what they spend on training large models, but given the computing methods and resources shared worldwide, their computing expenditure should not be far off. GPT-3 and PaLM are only at the hundreds-of-billions scale; the cost of models with more than a trillion parameters must be staggering. If a large company is generous with R&D, the investment cost of large models will not be a "stumbling block". But at a time when capital is growing more cautious about AI, some startups and government-funded research institutions are still betting heavily on large models, which seems somewhat surreal.

Large models' heavy demand for computing power has turned the contest of technical strength between companies into a contest of money. In the long run, once algorithms become high-consumption commodities, the most cutting-edge AI is destined to be available only to a few, producing monopoly through enclosure. In other words, even if artificial general intelligence does appear one day, it will not benefit all users.

At the same time, on this track the innovative power of small businesses will be squeezed. To build a large model, a small business must either partner with a large company and stand on the shoulders of giants (which not every small company can do) or invest heavily and stock up its war chest (which is not practical in a capital winter).

Having tallied the input, consider the output. Unfortunately, no company currently refining large models has disclosed how much economic benefit they have created. Public information does show, however, that these models are starting to be deployed on real problems. For example, after Alibaba DAMO Academy released the trillion-parameter model M6, it said the model's image generation capabilities could already assist car designers with vehicle design, and copy produced with M6's copywriting generation capability has been used on mobile Taobao, Alipay and Alibaba Xiaomi.

For large models still in the early stage of exploration, demanding short-term returns is too harsh. Still, we have to answer one question: when business or academia bets on large models, is it to avoid missing a technical direction that may dominate in the future, or because large models can better solve known problems at hand? The former has a strong flavor of academic exploration, while the latter is the real concern of industry pioneers who apply AI to solve problems.

The large-model era was kicked off by Google's release of BERT, which grew out of an open-ended, exploratory idea: before the BERT experiment, Google Brain's technical team was not developing a model around a known real-world problem, and it did not expect that this AI model, with the largest parameter count at the time (300 million), would bring such a significant improvement in results. Likewise, when OpenAI followed Google in developing GPT-2 and GPT-3, it had no specific task in mind. Only after development succeeded did people test GPT-3 on various tasks, find that every metric had improved, and react with amazement. Today GPT-3 is like a platform and has been used by thousands of users.

But over time, large model development inevitably returns to the original aim of solving a particular practical problem, as with ESMFold, the large protein prediction model Meta released this year, and the large aerospace model Baidu released not long ago. If early large models such as GPT-3 were mainly meant to explore how more parameters affect algorithm performance, purely "the unknown guiding the unknown", current large model research has begun to reflect a clearer goal: solving real problems and creating business value.

At this point, what guides large model development shifts from the will of researchers to the needs of users. For some very small requirements (such as license plate recognition), a large model can also solve the problem, but given its expensive training cost this is a bit like using a sledgehammer to crack a nut, and the performance is not necessarily better. In other words, if a few points of accuracy are bought at a cost of tens of millions, the price/performance ratio is extremely low.

An industry insider told Leifeng.com-AI Technology Review that in most cases a technology is studied in order to solve a known practical problem, such as sentiment analysis or news summarization. In such cases one can design a dedicated small task to study, and the resulting "small model" can easily outperform large models such as GPT-3. For some specific tasks, large models are even "unusable".

Therefore, in advancing AI, combining large and small models is inevitable. Because the R&D threshold for large models is extremely high, for the foreseeable future small models that are economically available and precisely targeted will be the main force carrying the large-scale deployment of AI.

Even some scientists who study large models told Leifeng.com-AI Technology Review plainly that, although large models can perform many tasks at once, "it is still too early to talk about artificial general intelligence." Large models may be an important route to the ultimate goal, but the ideal is still far away, and AI must first meet present needs.

3 Do AI models have to get bigger and bigger?

In fact, as AI models have grown ever larger, some researchers in academia and industry have noted both the advantages and the disadvantages for deployment and have been actively developing countermeasures.

If technology has taught us anything about social change, one important lesson is surely this: only by lowering the threshold of a technological product (whether in technical difficulty or in cost), so that more people can enjoy its benefits, can its influence expand.

For large models, the core challenge is how to speed up training, reduce training costs, or propose new architectures. Looking at computing resources alone, the predicament of large models is actually not that severe. The latest MLPerf training benchmark results, released at the end of June this year by the open engineering consortium MLCommons, show that this year's machine learning systems train almost twice as fast as last year's, outpacing Moore's Law (doubling every 18-24 months).
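The comparison with Moore's Law can be checked with a little arithmetic. The sketch below converts a doubling period into an equivalent yearly speed-up factor; it treats the MLPerf result as a full doubling in twelve months, which is an upper bound since the article says "almost twice". The function name `annual_growth` is illustrative.

```python
def annual_growth(doubling_months):
    """Yearly growth factor for something that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


moore_fast = annual_growth(18)  # Moore's Law, optimistic end of the range
moore_slow = annual_growth(24)  # Moore's Law, conservative end of the range
mlperf = annual_growth(12)      # assumption: a full doubling within one year

print(f"Moore's Law (18 months): {moore_fast:.2f}x per year")  # 1.59x
print(f"Moore's Law (24 months): {moore_slow:.2f}x per year")  # 1.41x
print(f"MLPerf, year over year:  {mlperf:.2f}x per year")      # 2.00x
```

A yearly doubling therefore beats even the faster reading of Moore's Law, which works out to roughly a 1.6x improvement per year.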

In fact, as servers are upgraded and new approaches such as cloud computing emerge, computation keeps getting faster and energy consumption keeps falling. For example, GPT-3 was launched only two years ago, and the computation required by Meta's OPT model has already fallen to 1/7 of what was needed in 2020. In addition, a recent paper showed that BERT, the large model that required thousands of GPUs to train in 2018, can now be trained on a single card in 24 hours, easily within reach of an ordinary laboratory.

The bottleneck is no longer whether computing power can be obtained; the only obstacle is what it costs.

Beyond relying on computing power alone, in recent years some researchers have also tried another route: achieving the "economic availability" of large models purely through the characteristics of models and algorithms themselves.

One approach is data-centered "dimensionality reduction."

A recent DeepMind paper ("Training Compute-Optimal Large Language Models") found that, for the same amount of computation, enlarging the model's training data rather than only enlarging its parameter count yields better results.

In this DeepMind study, Chinchilla, a 70-billion-parameter model that makes full use of data, surpassed the 175-billion-parameter GPT-3 and the 280-billion-parameter Gopher across a series of downstream evaluations. Lan Zhenzhong explained that Chinchilla won because it enlarged the training data several times over and made only a single pass over it.
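The trade-off between model size and data under a fixed compute budget can be sketched with a common rule of thumb: training compute is roughly 6 x parameters x training tokens. That approximation is an assumption brought in here, not something stated in the article, and the token count below is a hypothetical placeholder chosen only to fix a budget.

```python
# Assumption: training FLOPs ~= 6 * parameters * tokens (a common rule of thumb).
FLOPS_PER_PARAM_TOKEN = 6


def training_flops(params, tokens):
    """Approximate training compute for a dense model."""
    return FLOPS_PER_PARAM_TOKEN * params * tokens


def tokens_for_budget(budget_flops, params):
    """Number of training tokens affordable for a model of `params` parameters."""
    return budget_flops / (FLOPS_PER_PARAM_TOKEN * params)


HYPOTHETICAL_TOKENS = 300e9  # illustrative placeholder, not a figure from the article

# Fix the budget as what a 280-billion-parameter model would spend on that data.
budget = training_flops(280e9, HYPOTHETICAL_TOKENS)

# Spend the same budget on a 70-billion-parameter model instead.
tokens_70b = tokens_for_budget(budget, 70e9)
ratio = tokens_70b / HYPOTHETICAL_TOKENS

print(f"Budget: {budget:.2e} FLOPs")
print(f"A 70B model can see {ratio:.0f}x as many tokens as a 280B model")
```

Under this approximation, shrinking the model to a quarter of the size frees enough compute to train on four times as much data, which is the kind of exchange the DeepMind study argues for.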

Another way is to rely on innovation in algorithms and architecture to make large models "lightweight".

Zhou Ming, former vice president of Microsoft Research Asia and now founder of Lanzhou Technology, is pursuing this track.

As an entrepreneur, Zhou Ming's thinking is very down-to-earth: save money. He pointed out that many large companies pursue large models partly to be first and partly to show off their computing capabilities, especially their cloud services. As a newly founded small company, Lanzhou Technology dreams of creating value with AI, but it has neither strong cloud capabilities nor money to burn. So from the start Zhou Ming considered how to adjust the model architecture and use knowledge distillation to turn large models into "lightweight models" for customers to use.
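For readers unfamiliar with knowledge distillation, here is a minimal sketch of the textbook soft-target loss, in which a small "student" model is trained to match the softened output distribution of a large "teacher". This is the generic formulation only; the article does not describe Lanzhou Technology's actual method, and the logits and temperature below are made-up values.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.0]
student_far = [0.0, 1.0, 4.0]    # disagrees with the teacher
student_near = [3.5, 1.2, 0.1]   # close to the teacher

print(f"far student:  {distillation_loss(teacher, student_far):.4f}")
print(f"near student: {distillation_loss(teacher, student_near):.4f}")
```

Minimizing this loss pulls the student's predictions toward the teacher's, so a much smaller network can inherit part of the larger model's behavior. In practice it is usually combined with an ordinary loss on labeled data.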

The lightweight model "Mencius", which they launched last July, proved the idea feasible. "Mencius" has only 1 billion parameters, yet on the Chinese language understanding benchmark CLUE it outperformed large models such as BERTSG and Pangu, whose parameter counts run to tens or even hundreds of billions (table below). The consensus in the field is that, under the same architecture, more parameters mean better performance. The ingenuity of "Mencius" lies in architectural innovation.

In academia, Professor Ma Yi of the University of California, Berkeley, together with Shen Xiangyang and Cao Ying, recently published a study ("On the Principles of Parsimony and Self-Consistency for the Emergence of Intelligence") that analyzes theoretically why large models keep getting larger: deep neural networks are essentially "open-loop" systems. That is, the discriminative models used for classification and the generative models used for sampling or replay are in most cases trained separately, which makes parameter training inefficient and leaves piling on parameters and computing power as the only way to improve performance.

The "reform" they propose is therefore more thorough: combine the discriminative model and the generative model into a complete "compressed" closed-loop system, so that the AI model can learn autonomously, more efficiently and more stably, and adapt and respond better to new problems arising in new environments. In other words, if AI researchers develop models along this route, parameter counts could shrink greatly, returning to the "small and beautiful" path while still achieving large models' ability to "solve unknown problems".

On economic availability, another voice even advocates using AutoML or AutoAI to ease model training and lower the threshold for AI algorithm research, so that algorithm engineers or even non-AI practitioners can flexibly create single-function models as they need them, yielding countless small models. A single spark can start a prairie fire.

This voice starts from "demand" and opposes building models behind closed doors.

For example, visual algorithms are used for recognition, detection and localization, and the requirements for recognizing smoke differ from those for recognizing fireworks. So its advocates provide a platform or tool that lets those in need quickly generate a separate visual algorithm for smoke recognition and for fireworks recognition, each with higher accuracy and with no need to pursue "universality" or "generalization" across scenarios. In this view, one large model proficient in piano, chess, calligraphy and painting alike can be replaced by many small models, each proficient in one of them, and the problem is still solved.

4 In closing

Let us return to the story of the electric fan blowing away the empty soap boxes.

When it comes to solving real-world problems with AI, large models and small models are like the postdoc's automation solution and the worker's electric fan. The former seems redundant and cumbersome for a small problem, and it is not as quick or effective as the fan, yet almost no one would deny the value that the postdoc and his team provide, let alone "eliminate" them. On the contrary, we could name hundreds of reasons for the soundness of technological R&D.

But technical researchers often overlook the wisdom with which the worker solved the problem: start from the actual problem rather than confining yourself to the strengths of a technology. From this perspective, large model research has inherent value in leading the frontier, but the "economically usable" goal of cutting costs and raising efficiency must also be considered.

Returning to the research itself, Lan Zhenzhong said it is very regrettable that, although there are many large model results, very few are open source and ordinary researchers have limited access.

Because large models are not open source, ordinary users cannot evaluate their practicality from the standpoint of demand. In fact, we previously ran experiments on the few open-source large models and found that large language models perform extremely unstably at understanding social ethics and emotion.

Because the models are not open to the public, major companies' descriptions of their own large models stop at academic metrics, creating a Schrödinger-like dilemma: you never know what is in the box and cannot judge whether the claims are true. In short, they have the final say on everything. Finally, I hope large AI models can truly benefit more people.
